aorus 3080 lcd screen not working pricelist

AORUS, the premium gaming brand from GIGABYTE, has launched a completely new series of RTX 30 graphics cards, including the RTX 3090 Xtreme, RTX 3090 Master, RTX 3080 Xtreme, and RTX 3080 Master.

Besides excellent cooling and superior performance, LCD Edge View is another highlight of the AORUS RTX 30 series graphics cards. LCD Edge View is a small LCD located on the top of the graphics card. What can users do with this small LCD? Let's find out.

LCD Edge View is an LCD located on the graphics card. You can use it to display GPU info including temperature, usage, clock speed, fan speed, VRAM usage, VRAM clock and total card power. These readings can be cycled through one by one, or only selected ones can be shown on the LCD.

Besides that, there are three different display styles available, so users can choose the one they prefer. LCD Edge View is not limited to GPU info, either: it can also display FPS (frames per second) in a game or other application.

The LCD Edge View can also show customized content including text, pictures or even short GIF animations. Users can enter their preferred text and set the font size, bold or italic. It also supports multiple languages, so users can enter whatever type of text they want.

For pictures, LCD Edge View allows users to upload a JPEG file, and the AORUS RGB Fusion software lets users choose which region of the picture is shown. The support for short GIF animations is the most interesting part.

Users can upload a short animation in GIF format to be shown on the LCD, so they can easily give the graphics card their own style. All of the customizations above can be done via the AORUS RGB Fusion software.

There's something more interesting about LCD Edge View: the little CHIBI. CHIBI is a little falcon that lives digitally in the LCD Edge View and grows up as users spend more time with their graphics card. Users can always check on their little CHIBI through the LCD Edge View and watch it eat, sleep or fly around, which is quite interactive and interesting.

In conclusion, LCD Edge View can display a series of useful GPU information, customized text, pictures, and animations, allowing users to build up the graphics card with their own style. Users can also have more interaction with their card via the little CHIBI, the exclusive little digital falcon living inside the LCD Edge View, which brings more fun while playing with the graphics card.

High-end gaming laptops don't come cheap and the GIGABYTE AORUS 17 YE5 is no exception. Powered by a 12th-generation Intel Core i7 processor and with support for up to 64 GB of DDR5 4800 memory, this extreme machine features a high-end NVIDIA 30 Series video card with a maximum power draw of 130 W. A Full HD IPS display with a 360 Hz refresh rate is also present in the list of specs and features, so the list price of US$3,699 should not come as a surprise.

The 32 percent discount currently applied by Amazon to the AORUS 17 YE5-A4US544SP brings this gaming laptop's price down to US$2,499. The list of specs and features includes the following:

Graphics adapter: NVIDIA GeForce RTX 3080 Ti (16 GB GDDR6, 1395 MHz boost)

The GIGABYTE AORUS 17 YE5 comes with firmware-based TPM and support for Intel Platform Trust Technology. It supports Windows Hello face authentication and features an HD webcam and a built-in dual microphone setup. The operating system preloaded is Windows 11 Pro, a step up from the Home edition mentioned by GIGABYTE on the official product page.


The best graphics cards allow creatives to run high-end software smoothly and to switch easily between applications while working. Gamers also benefit from the best graphics cards: a powerful card minimises lag and can easily run 4K visuals, allowing you full immersion in your games.

Nvidia's RTX family of GPUs has continued to deliver since its release. The top-of-the-range 3090 may offer incredible performance, but it exceeds most budgets. The 3080 and 3080 Ti cards are a substantially more affordable option, yet they still manage to pack a serious punch in the power department.

The Gigabyte AORUS GeForce RTX 3080 Xtreme Graphics Card comes with Nvidia's GeForce RTX 3080, which is one of the best graphics cards you can buy right now. The RTX 3080 brings all the advancements of Nvidia's latest Ampere architecture, including next-generation ray-tracing capabilities and 10GB of fast GDDR6X memory, which means it can make light work of 4K gaming.

OpenCL and CUDA applications in particular absolutely fly on the Turing architecture, so the RTX 4000 will make a massive difference when working with creative software, plug-ins and filters, resulting in excellent performance when rendering images, 3D and video.

Graphics cards serve two major roles in computers. By using their impressive hardware power, GPUs maximise 3D visuals and determine the right resolution and frame rate to give you the best on-screen action.

It's important to note that individual graphics cards have generic reference models that aren't usually for sale. Manufacturers such as MSI, Asus and Gigabyte each sell their own versions of each card, which will all look slightly different.

These specifications vary between GPU generations and across the various tiers, and the cores in Nvidia and AMD cards aren’t the same. Nvidia uses the term CUDA cores while AMD refers to GCN cores. This means that AMD and Nvidia cards cannot be compared in that respect.

Another key difference is how the two classes of graphics cards are manufactured. With gaming cards, Nvidia and AMD produce and sell reference designs, but many other manufacturers, including Asus, MSI, Zotac, EVGA and Sapphire, sell variations on the reference specification with different cooling systems and faster clock speeds. For Quadro cards, though, Nvidia works with a single manufacturer – PNY – to produce all its hardware.

RTX 3080 laptop deals are ideal for those looking at the top tier of the gaming laptop spectrum who still want to save some cash and maximize value wherever possible. Understandable, of course, as these are some of the most premium portable powerhouses going right now, and there's no getting around it: these are expensive machines. However, the bigger they are, the harder they fall, right? Well, sort of, as it's all relative. The overriding good news, however, is that we're seeing more and more RTX 3080 laptops hitting prices well below $2,000 / £2,000 these days.

These machines are synonymous with the best gaming laptops and that's for good reason: they're extraordinarily powerful for running modern games maxed out in not only Full HD, but 1440p and even 4K (should the native resolution allow).

Some of the best brands in the industry run this GPU in their higher-end models to push their machines to their limits and offer true next-gen performance. The RTX 3080 card sits at the top of the hierarchy right now, with unbeaten performance in both laptops and desktops.

RTX 3080 rigs were top of our Black Friday gaming laptop deals shopping list, and one or two of those deals are still live, so they are worth checking out. The same can be said for the wider Black Friday laptop deals in general. You'll be surprised how many better deals you find before and after Black Friday.

Save $240 - This clearance deal for an ASUS ROG Zephyrus machine is a steal right now. It comes with an AMD Ryzen 9, 16GB memory, 1TB SSD, and of course an RTX 3080.

Gigabyte Aorus 15P | $2,399.00 $2,013.94 at Amazon
Save $385 - The RTX 3080 Gigabyte Aorus 15P has taken a $385 discount at Amazon this week, taking us almost down to $2,000 for a high-end gaming laptop with a 300Hz refresh rate 15.6-inch display along with an Intel Core i7-11800H processor, 32GB RAM, and a 1TB SSD inside.

Asus ROG Strix Scar 15 | $2,399.99 $2,099.99 at Newegg
Save $300 - The Asus ROG Strix Scar 15 is one of the best gaming laptops around, so it's always a bargain when it goes on sale, but a 12% saving is much better than most deals we've seen on RTX 3080 laptops, so this is definitely one to jump on while you can.

Razer Blade 14 | $2,799.99 $2,199.99 at Amazon
Save $600 - This is not far away from the lowest price we've ever seen on the RTX 3080 Razer Blade 14, making this deal an excellent offer if you're looking to invest some cash. This is a premium machine with a premium price, but the performance can't be beaten.

Gigabyte Aorus 17 | $3,699 $2,499 at Best Buy
Save $1,200 - The Gigabyte Aorus 17 with Intel Core i9-12900H CPU, RTX 3080 Ti GPU, 32GB RAM, 1TB SSD, and 360Hz full HD display is about as powerful a gaming laptop as you're going to find right now, making this $1,200 saving at Best Buy an unbelievable deal.

Acer Predator Helios 300 | $2,099.99 $1,699.97 at Amazon
Save $400 - This is the lowest price we've seen for this Acer Predator Helios 300, thanks to a 19% reduction. The 15.6-inch gaming laptop comes with an Intel Core i7 processor, 1TB SSD, RTX 3080, a 165Hz QHD display, and Windows 11.

Asus ROG Zephyrus G15 15.6-inch gaming laptop | £2,299.99 £1,750.00 at Amazon
Save £550 - This is a luxury laptop and we don't see RTX 3080 configurations of the Asus ROG Zephyrus G15 at this price every day. You're getting excellent specs here: a Ryzen 9 processor, 16GB RAM, and 1TB SSD. On top of all that, there's an opto-mechanical keyboard as well, all for well under £2,000.

Alienware m15 R6 | £2,399 £1,899 at Currys
Save £500 - It's rare to find an Alienware gaming laptop on sale, much less one packing an RTX 3080 GPU, Intel Core i7-11800H, 16GB RAM, a 1TB SSD, and a 360Hz full HD display, so jump on this one while you can.

MSI Raider GE76 | £2,899.99 £2,369.97 at Laptops Direct
Save £530 - Get a great deal on this 17.3-inch gaming laptop from MSI and play the latest games on the go with an Intel Core i7-12700H processor, Nvidia RTX 3080 GPU, 16GB RAM, and a 1TB SSD, all for £530 off at Laptops Direct.

Asus ROG Zephyrus | £4,006.00 £2,967.97 at Laptops Direct
Save £1,038 - Get this Asus ROG Zephyrus with a Core i9-12900H, Nvidia RTX 3080 Ti GPU, 32GB RAM, and a 2TB SSD for over £1,000 less this week, thanks to this deal from Laptops Direct.

US RTX 3080 laptop retailers: Amazon | Dell | Newegg | Best Buy | B&H Photo

UK RTX 3080 laptop retailers: Amazon | Ebuyer | Overclockers UK | Box | Laptops Direct

Which RTX 3080 laptops are available?

Budget machines like the Asus TUF and Dell G15 range don't tend to venture too far into RTX 3080 territory, so you'll be looking amongst the best Razer laptops and best Alienware laptops for a high-end GPU. When it comes to RTX 3080 laptop deals, though, the Gigabyte Aorus and MSI Leopard both regularly feature amongst the best savings. However, we'd also take a look at the Asus ROG Strix line for more options as well.

How much is an RTX 3080 laptop?

Outside of discounts, an RTX 3080 laptop will generally cost you more than $2,000. However, we're seeing more and more high-end rigs taking larger price cuts at the moment, with the lowest prices we've spotted sitting around the $1,699 - $1,799 mark. Those discounts were found on MSI and Gigabyte rigs at Newegg, so we'd recommend starting your search there.

Is an RTX 3080 laptop worth it?

RTX 3080 laptops don't come cheap, so if you're simply dipping your toes into the world of PC gaming they might not offer the best value for money on the market. However, if you're a keen player it's worth viewing your RTX 3080 laptop as an investment.

However, if you're not fussed about getting the best of the best, you'll find RTX 3070 laptops come in much cheaper, and an RTX 3060 laptop is even cheaper still. You won't get the lightning performance out of these rigs, but if you're just looking for everyday entertainment while still grabbing the latest generation, these models are perfectly positioned in the market. If you're shopping under $1,000, though, we'd recommend checking out RTX 3050 laptops.

As far as I understand, you were given a choice to get a different model from the get-go, not that you would have to wait extra time for it. If that is the case, keep the Aorus? I mean, I assume you spent at least 1.5k euros or dollars, and it wouldn't sit well with me having a defective-looking card with sh*t software on top of that. But that's just me.

As I said, the performance gain in terms of fps will be negligible. The Strix has better build quality and a better cooling system for sure. Plus I'm not hearing good things about Gigabyte as of late in terms of customer service and RMA.

Yesterday was a pretty exciting day for most of us, I guess. The GeForce RTX 3080 was finally available in Malaysia… and they are gone. With limited availability from all brands, they all sold out pretty quickly. While we wait for the brands to restock their wares, well here are the GeForce RTX 3080 cards from NVIDIA’s partners that were launched here in Malaysia.

In case you missed it, we were actually a part of ASUS Malaysia’s launch of the ASUS TUF Gaming GeForce RTX 3080. This is in fact going to be one of the most affordable GeForce RTX 3080 cards available here in Malaysia. Unlike earlier TUF Gaming cards, this time around we are getting a seriously tough card that looks the part, and performs great too. In fact it offers all the trappings of the previous generation of ROG Strix cards, like the Axial-tech fans and a thick 2.7-slot cooler. You can check out our review of the TUF Gaming GeForce RTX 3080 OC Edition.

The GIGABYTE GeForce RTX 3080 is GIGABYTE’s more affordable option, with a simple, no frills design. With that said, it still sports everything you need to extract the maximum performance out of the GeForce RTX 3080 GPU, with two 90mm fans, one 80mm fan, seven heatpipes and a big and thick heatsink to provide all the cooling you need.

Taking things up a notch, the GIGABYTE GeForce RTX 3080 Gaming OC offers not only a more stylish design, but also greater performance with the GeForce RTX 3080 GPU running at 1800MHz. Interestingly, the GIGABYTE GeForce RTX 3080 Gaming OC is actually marginally thinner than the EAGLE OC, although that shouldn’t affect the cooling as the 1mm difference should be due to the shroud design rather than the actual heatsink itself.

ZOTAC made a stark departure from their previous designs with the ZOTAC Gaming GeForce RTX 3080 Trinity, for better or worse. Even the naming scheme has been changed from their usual AMP branding, which just sounds like a big missed opportunity for puns, considering that this is, after all, the NVIDIA Ampere generation… Seven heatpipes, three 11-bladed fans and a huge heatsink ensure that the higher TGP of the GeForce RTX 30 series is well managed to deliver optimal performance. It is also the only card we have seen thus far to have RGB lighting on the backplate, so if you fancy that, this will be the card for you.

The MSI VENTUS series has been MSI’s more value-oriented lineup, and this time the MSI GeForce RTX 3080 VENTUS 3X takes that to a whole new level. Coming with three fans, a thick beefy heatsink and a strong backplate that also helps with the cooling, the MSI GeForce RTX 3080 VENTUS 3X cuts back on all the frivolous RGB for a performance-focused design.

For a more powerful yet flashier card from MSI, you will have to look towards the MSI GeForce RTX 3080 Gaming X Trio. This graphics card sports the latest TORX FAN 4.0, whose fan blades are joined by a linked outer ring to increase airflow into the heatsink. The heatsink itself also has deflectors and curved edges to avoid airflow harmonics, promising lower noise levels while efficiently cooling the components.

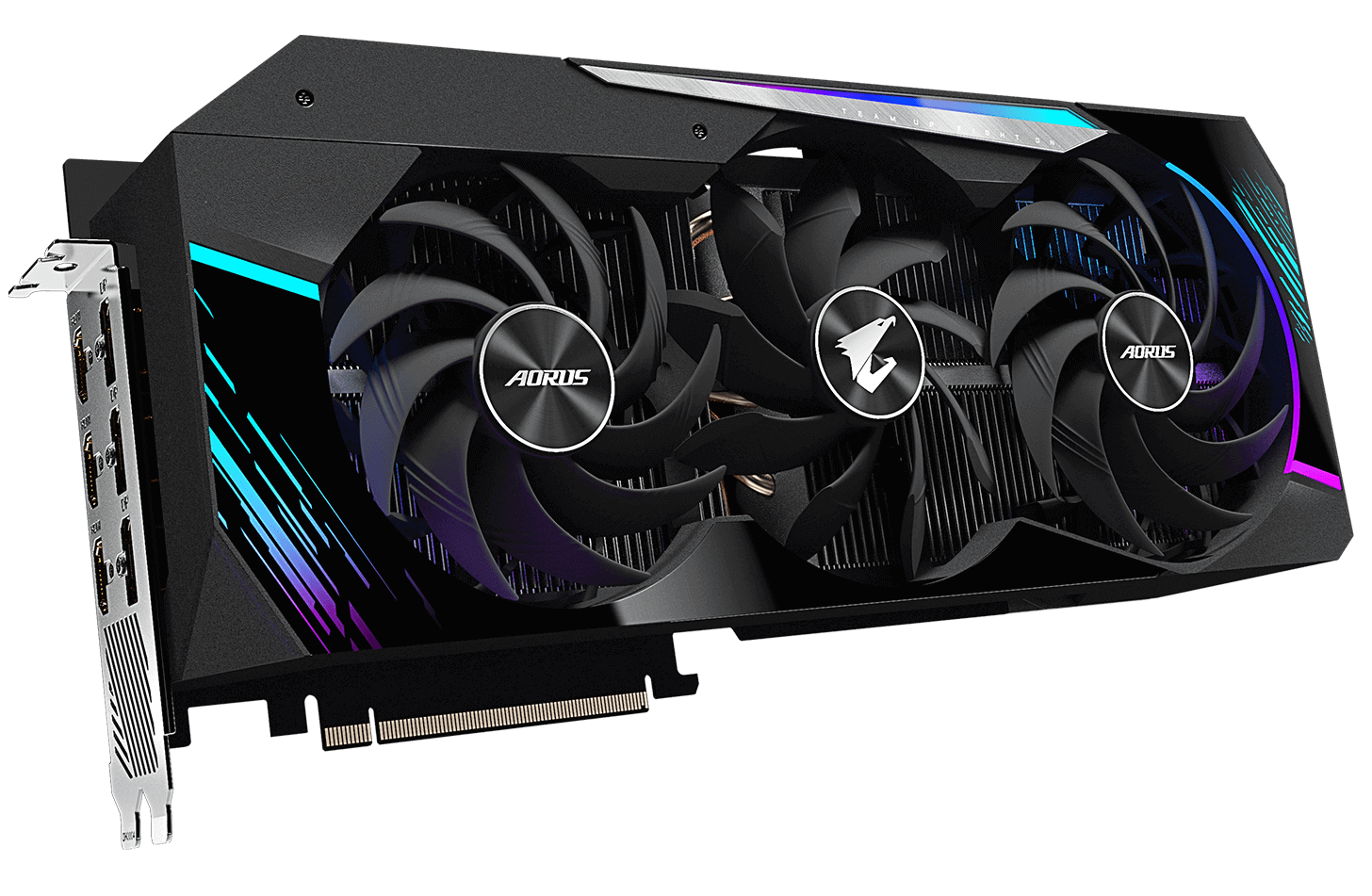

GIGABYTE’s more premium offerings are finally here in Malaysia, starting with the GIGABYTE AORUS GeForce RTX 3080 MASTER. Aside from some relatively minor differences, the AORUS GeForce RTX 3080 MASTER offers most of the benefits of the AORUS GeForce RTX 3080 XTREME, including the Max-Covered Cooling system that comprises two 115mm fans and one 100mm fan. The fans overlap each other to ensure that there aren’t any dead zones in the heatsinks, and also sport a wind claw to channel airflow into the heatsink fins.

To further bump up the cooling, GIGABYTE combined a vapor chamber and seven heatpipes in their AORUS GeForce RTX 30 series cards to improve the heat transfer from the GPU die into the heatsink. Near the rear end of the card is a color LCD display, customizable via the RGB Fusion 2.0 software. Interestingly enough, while the GIGABYTE GeForce RTX 3080 Eagle OC and Gaming OC cards had an extension to place the power connectors right at the end of the graphics card, the GIGABYTE AORUS ones don’t. Last but not least, the GIGABYTE AORUS GeForce RTX 30 series cards offer six display outputs, although you can only use four monitors tops.

Adding on a third 8-pin PCIe power connector, the GIGABYTE AORUS GeForce RTX 3080 XTREME is designed for the hardcore enthusiasts who want to take the GeForce RTX 3080 to its limits. Right out of the box, the AORUS GeForce RTX 3080 XTREME touts a 1905MHz boost clock, a good 195MHz faster than the reference GeForce RTX 3080. Aside from these differences, the XTREME is similar to the MASTER, with both of them sharing the same cooler fundamentally, although with some aesthetic changes to make the XTREME look even beefier, as if its physical dimensions aren’t enough to impress everyone out there.

Featuring the beefiest cooler ASUS has to offer, the ROG Strix GeForce RTX 3080 OC Edition is over-engineered to ensure that you are able to experience the full potential of the GeForce RTX 3080 without having to worry about not having enough power or cooling. Aside from that, the ASUS ROG Strix GeForce RTX 3080 OC Edition also packs one of the highest clocks we have seen yet, with an OC Mode accessible via GPU Tweak II to add an additional 30MHz to the already impressive 1905MHz boost clocks.

Excellent circuit design with top-grade materials not only maximizes the performance of the GPU, but also maintains stable and long-life operation.

This blog post is designed to give you different levels of understanding of GPUs and the new Ampere series GPUs from NVIDIA. You have the choice: (1) If you are not interested in the details of how GPUs work, what makes a GPU fast, and what is unique about the new NVIDIA RTX 30 Ampere series, you can skip right to the performance and performance per dollar charts and the recommendation section. These form the core of the blog post and the most valuable content.

This is a high-level explanation that explains quite well why GPUs are better than CPUs for deep learning. If we look at the details, we can understand what makes one GPU better than another.

There are now enough cheap GPUs that almost everyone can afford a GPU with Tensor Cores. That is why I only recommend GPUs with Tensor Cores. It is useful to understand how they work to appreciate the importance of these computational units specialized for matrix multiplication. Here I will show you a simple example of A*B=C matrix multiplication, where all matrices have a size of 32×32, and what the computational pattern looks like with and without Tensor Cores. This is a simplified example, and not exactly how a high performing matrix multiplication kernel would be written, but it has all the basics. A CUDA programmer would take this as a first “draft” and then optimize it step-by-step with concepts like double buffering, register optimization, occupancy optimization, instruction-level parallelism, and many others, which I will not discuss at this point.

While this example roughly follows the sequence of computational steps for both with and without Tensor Cores, please note that this is a very simplified example. Real cases of matrix multiplication involve much larger shared memory tiles and slightly different computational patterns.
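To make the shared-memory tile idea concrete, here is a minimal sketch in plain Python rather than CUDA. It only illustrates the access pattern (load a small tile of A and B, accumulate partial products, move to the next tile); it says nothing about Tensor Core instructions or real kernel performance. The function names and the tile size of 8 are assumptions made for illustration.

```python
def naive_matmul(a, b):
    """Reference C = A*B for square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def tiled_matmul(a, b, tile=8):
    """Compute C = A*B one tile at a time.

    The local lists a_tile and b_tile stand in for shared memory: each
    tile is "loaded" once and then reused for many multiply-adds.
    """
    n = len(a)
    c = [[0.0] * n for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, n, tile):
                # Load one tile of A and one tile of B.
                a_tile = [row[k0:k0 + tile] for row in a[i0:i0 + tile]]
                b_tile = [row[j0:j0 + tile] for row in b[k0:k0 + tile]]
                # Accumulate this tile pair's contribution to C.
                for i, a_row in enumerate(a_tile):
                    for j in range(len(b_tile[0])):
                        acc = 0.0
                        for k, a_val in enumerate(a_row):
                            acc += a_val * b_tile[k][j]
                        c[i0 + i][j0 + j] += acc
    return c
```

On a GPU, the two inner loops are what the threads of a block (or the Tensor Cores) execute in parallel, while the tile loads correspond to the global-to-shared memory copies.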

The Ampere Tensor Cores have another advantage in that they share more data between threads. This reduces the register usage. Registers are limited to 64k per streaming multiprocessor (SM) or 255 per thread. Comparing the Volta vs Ampere Tensor Core, the Ampere Tensor Core uses 3x fewer registers, allowing for more tensor cores to be active for each shared memory tile. In other words, we can feed 3x as many Tensor Cores with the same amount of registers. However, since bandwidth is still the bottleneck, you will only see tiny increases in actual vs theoretical TFLOPS. The new Tensor Cores improve performance by roughly 1-3%.

This section is for those who want to understand the more technical details of how I derive the performance estimates for Ampere GPUs. If you do not care about these technical aspects, it is safe to skip this section.

Suppose we have an estimate for one GPU of a GPU-architecture like Ampere, Turing, or Volta. It is easy to extrapolate these results to other GPUs from the same architecture/series. Luckily, NVIDIA already benchmarked the A100 vs V100 across a wide range of computer vision and natural language understanding tasks. Unfortunately, NVIDIA made sure that these numbers are not directly comparable by using different batch sizes and the number of GPUs whenever possible to favor results for the A100. So in a sense, the benchmark numbers are partially honest, partially marketing numbers. In general, you could argue that using larger batch sizes is fair, as the A100 has more memory. Still, to compare GPU architectures, we should evaluate unbiased memory performance with the same batch size.

As we parallelize networks across more and more GPUs, we lose performance due to some networking overhead. The A100 8x GPU system has better networking (NVLink 3.0) than the V100 8x GPU system (NVLink 2.0) — this is another confounding factor. Looking directly at the data from NVIDIA, we can find that for CNNs, a system with 8x A100 has a 5% lower overhead than a system of 8x V100. This means if going from 1x A100 to 8x A100 gives you a speedup of, say, 7.00x, then going from 1x V100 to 8x V100 only gives you a speedup of 6.67x. For transformers, the figure is 7%.
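The arithmetic behind the 7.00x versus 6.67x example can be sketched as follows. This assumes the "5% lower overhead" is applied as a simple ratio between the two scaling factors, which reproduces the numbers in the text; the function name is hypothetical.

```python
def v100_multi_gpu_speedup(a100_speedup, overhead_gap):
    """Estimate the 8x V100 speedup from the 8x A100 speedup.

    overhead_gap is the fractional networking advantage of the A100
    system (0.05 for CNNs, 0.07 for transformers in the text). Treating
    it as a plain ratio is an assumption of this sketch.
    """
    return a100_speedup / (1.0 + overhead_gap)


cnn = v100_multi_gpu_speedup(7.00, 0.05)          # about 6.67
transformer = v100_multi_gpu_speedup(7.00, 0.07)  # about 6.54
print(f"CNN: {cnn:.2f}x, transformer: {transformer:.2f}x")
```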

As of now, one of these degradations was found: Tensor Core performance was decreased so that RTX 30 series GPUs are not as good as Quadro cards for deep learning purposes. This was also done for the RTX 20 series, so it is nothing new, but this time it was also done for the Titan equivalent card, the RTX 3090. The RTX Titan did not have performance degradation enabled.

While this feature is still experimental and training sparse networks are not commonplace yet, having this feature on your GPU means you are ready for the future of sparse training.

The Brain Float 16 format (BF16) uses more bits for the exponent such that the range of possible numbers is the same as for FP32: [-3*10^38, 3*10^38]. BF16 has less precision, that is, fewer significant digits, but gradient precision is not that important for learning. So with BF16 you no longer need to do any loss scaling or worry about the gradient blowing up quickly. As such, we should see an increase in training stability by using the BF16 format, at the cost of a slight loss of precision.
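The range-versus-precision trade-off can be seen with a small sketch that emulates BF16 in Python by keeping only the top 16 bits of the FP32 encoding (sign, 8 exponent bits, 7 mantissa bits). Truncation is used here for simplicity; real hardware typically rounds to nearest, so treat this as an illustration and not as a reference implementation. The helper names are hypothetical.

```python
import struct


def to_bf16_bits(x):
    """Return the 16-bit BF16 pattern for x by truncating its FP32 bits."""
    (bits32,) = struct.unpack(">I", struct.pack(">f", x))
    return bits32 >> 16


def from_bf16_bits(bits16):
    """Expand a 16-bit BF16 pattern back to a Python float."""
    (x,) = struct.unpack(">f", struct.pack(">I", bits16 << 16))
    return x


def bf16_round_trip(x):
    """Value of x after being stored in (truncated) BF16."""
    return from_bf16_bits(to_bf16_bits(x))


# Largest finite BF16 value: same exponent range as FP32, roughly 3.4e38.
print(from_bf16_bits(0x7F7F))
# 100000 is beyond the FP16 maximum of 65504 but representable in BF16.
print(bf16_round_trip(100000.0))
# Precision is coarse: 1.001 collapses to 1.0 with only 7 mantissa bits.
print(bf16_round_trip(1.001))
```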

Overall, though, these new data types can be seen as lazy data types in the sense that you could have gotten all the benefits with the old data types with some additional programming efforts (proper loss scaling, initialization, normalization, using Apex). As such, these data types do not provide speedups but rather improve ease of use of low precision for training.

To overcome thermal issues, water cooling will provide a solution in any case. Many vendors offer water cooling blocks for RTX 3080/RTX 3090 cards, which will keep them cool even in a 4x GPU setup. Beware of all-in-one water cooling solutions for GPUs if you want to run a 4x GPU setup, though, because it is difficult to spread out the radiators in most desktop cases.

Another solution to the cooling problem is to buy PCIe extenders and spread the GPUs within the case. This is very effective, and other fellow PhD students at the University of Washington and I use this setup with great success. It does not look pretty, but it keeps your GPUs cool! It can also help if you do not have enough space to spread the GPUs. For example, if you can find the space within a desktop computer case, it might be possible to buy standard 3-slot-width RTX 3090 and spread them with PCIe extenders within the case. With this, you might solve both the space issue and cooling issue for a 4x RTX 3090 setup with a single simple solution.

The RTX 3090 is a 3-slot GPU, so one will not be able to use it in a 4x setup with the default fan design from NVIDIA. This is kind of justified because it runs at 350W TDP, and it will be difficult to cool in a multi-GPU 2-slot setting. The RTX 3080 is only slightly better at 320W TDP, and cooling a 4x RTX 3080 setup will also be very difficult.

It is also difficult to power a 4x 350W = 1400W system in the 4x RTX 3090 case. Power supply units (PSUs) of 1600W are readily available, but having only 200W to power the CPU and motherboard can be too tight. The components’ maximum power is only used if the components are fully utilized, and in deep learning, the CPU is usually only under weak load. With that, a 1600W PSU might work quite well with a 4x RTX 3080 build, but for a 4x RTX 3090 build, it is better to look for high wattage PSUs (+1700W). Some of my followers have had great success with cryptomining PSUs — have a look in the comment section for more info about that. Otherwise, it is important to note that not all outlets support PSUs above 1600W, especially in the US. This is the reason why in the US, there is currently not a standard desktop PSU above 1600W on the market. If you get a server or cryptomining PSUs, beware of the form factor — make sure it fits into your computer case.
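The power budget above is simple enough to check in a few lines. This sketch uses only the TDP figures quoted in the text (350W for the RTX 3090, 320W for the RTX 3080); the function name is hypothetical and the calculation ignores PSU efficiency and transient power spikes.

```python
def psu_headroom(psu_watts, gpu_watts, num_gpus):
    """Watts left for the CPU, motherboard and drives at full GPU load."""
    return psu_watts - gpu_watts * num_gpus


print(psu_headroom(1600, 350, 4))  # 4x RTX 3090: 200 W left
print(psu_headroom(1600, 320, 4))  # 4x RTX 3080: 320 W left
```

Passing a lowered per-GPU power limit as `gpu_watts` shows how much headroom a power cap would buy back.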

As we can see, setting the power limit does not seriously affect performance. Limiting the power by 50W — more than enough to handle 4x RTX 3090 — decreases performance by only 7%.

The following benchmark includes not only the Tesla A100 vs Tesla V100 benchmarks: I also built a model that fits those data and four different benchmarks based on the Titan V, Titan RTX, RTX 2080 Ti, and RTX 2080.[1,2,3,4] In an update, I also factored in the recently discovered performance degradation in RTX 30 series GPUs. And since I wrote this blog post, we now also have the first solid benchmark for computer vision, which confirms my numbers.

Compared to an RTX 2080 Ti, the RTX 3090 yields a speedup of 1.41x for convolutional networks and 1.35x for transformers while having a 15% higher release price. Thus the Ampere RTX 30 yields a substantial improvement over the Turing RTX 20 series in raw performance and is also cost-effective (if you do not have to upgrade your power supply and so forth).
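As a rough check of the cost-effectiveness claim, the speedup can be divided by the price ratio to get performance per dollar relative to the RTX 2080 Ti. This is a sketch based only on the release-price figures in the text; it ignores power supply or cooling upgrades, and the function name is hypothetical.

```python
def relative_perf_per_dollar(speedup, price_ratio):
    """Performance per dollar relative to the baseline card (1.0 = equal)."""
    return speedup / price_ratio


# RTX 3090 vs RTX 2080 Ti, with a 15% higher release price.
cnn = relative_perf_per_dollar(1.41, 1.15)          # about 1.23
transformer = relative_perf_per_dollar(1.35, 1.15)  # about 1.17
print(f"CNN: {cnn:.2f}x, transformer: {transformer:.2f}x per dollar")
```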

Here I have three PCIe 3.0 builds, which I use as base costs for 2/4 GPU systems. I take these base costs and add the GPU costs on top. The GPU costs are the mean of the GPU’s Amazon and eBay costs. For the new Ampere GPUs, I use just the release price. Together with the performance values from above, this yields performance per dollar values for these systems of GPUs. For the 8-GPU system, I use a Supermicro barebone (the industry standard for RTX servers) as baseline cost. Note that these bar charts do not account for memory requirements. You should think about your memory requirements first and then look for the best option in the chart. Here are some rough guidelines for memory:

Using pretrained transformers; training small transformer from scratch: >= 11GB

Some of these details require you to self-reflect about what you want and maybe research a bit about how much memory the GPUs have that other people use for your area of interest. I can give you some guidance, but I cannot cover all areas here.

The RTX 3080 is currently by far the most cost-efficient card and thus ideal for prototyping. For prototyping, you want the largest memory that is still cheap. By prototyping, I mean prototyping in any area: research, competitive Kaggle, hacking ideas/models for a startup, experimenting with research code. For all these applications, the RTX 3080 is the best GPU.

Suppose I led a research lab/startup. I would put 66-80% of my budget in RTX 3080 machines and 20-33% in “rollout” RTX 3090 machines with a robust water cooling setup. The idea is that the RTX 3080 is much more cost-effective and can be shared via a Slurm cluster setup as prototyping machines. Since prototyping should be done in an agile way, it should be done with smaller models and smaller datasets. The RTX 3080 is perfect for this. Once students/colleagues have a great prototype model, they can roll out the prototype on the RTX 3090 machines and scale to larger models.

It is a bit contradictory that I just said if you want to train big models, you need lots of memory, but we have been struggling with big models a lot since the onslaught of BERT, and solutions exist to train 24 GB models in 10 GB memory. If you do not have the money or want to avoid the cooling/power issues of the RTX 3090, you can get an RTX 3080 and just accept that you need to do some extra programming by adding memory-saving techniques. There are enough techniques to make it work, and they are becoming more and more commonplace.

If you are not afraid to tinker a bit and implement some of these techniques — which usually means integrating packages that support them with your code — you will be able to fit that 24GB large network on a smaller GPU. With that hacking spirit, the RTX 3080, or any GPU with less than 11 GB memory, might be a great GPU for you.

I could imagine if you need that extra memory, for example, to go from RTX 2080 Ti to RTX 3090, or if you want a huge boost in performance, say from RTX 2060 to RTX 3080, then it can be pretty worth it. But if you stay “in your league,” that is, going from Titan RTX to RTX 3090, or, RTX 2080 Ti to RTX 3080, it is hardly worth it. You gain a bit of performance, but you will have headaches about the power supply and cooling, and you are a good chunk of money lighter. I do not think it is worth it. I would wait until a better alternative to GDDR6X memory is released. This will make GPUs use less power and might even make them faster. Maybe wait a year and see how the landscape has changed since then.

In general, I would recommend the RTX 3090 for anyone that can afford it. It will equip you not only for now but will be a very effective card for the next 3-7 years. As such, it is a good investment that will stay strong. It is unlikely that HBM memory will become cheap within three years, so the next GPU would only be about 25% better than the RTX 3090. We will probably see cheap HBM memory in 3-5 years, so after that, you definitely want to upgrade.

For PhD students, those who want to become PhD students, or those who get started with a PhD, I recommend RTX 3080 GPUs for prototyping and RTX 3090 GPUs for doing rollouts. If your department has a GPU cluster, I would highly recommend a Slurm GPU cluster with 8 GPU machines. However, since the cooling of RTX 3080 GPUs in an 8x GPU server setup is questionable it is unlikely that you will be able to run these. If the cooling works, I would recommend 66-80% RTX 3080 GPUs and the rest of the GPUs being either RTX 3090 or Tesla A100. If cooling does not work I would recommend 66-80% RTX 2080 and the rest being Tesla A100s. Again, it is crucial, though, that you make sure that heating issues in your GPU servers are taken care of before you commit to specific GPUs for your servers. More on GPU clusters below.

For anyone without strictly competitive requirements (research, competitive Kaggle, competitive startups), I would recommend, in order: used RTX 2080 Ti, used RTX 2070, new RTX 3080, new RTX 3070. If you do not like used cards, buy the RTX 3080. If you cannot afford the RTX 3080, go with the RTX 3070. All of these cards are very cost-effective solutions and will ensure fast training of most networks. If you use the right memory tricks and are fine with some extra programming, there are now enough tricks to make a 24 GB neural network fit into a 10 GB GPU. As such, if you accept a bit of uncertainty and some extra programming, the RTX 3080 might also be a better choice compared to the RTX 3090 since performance is quite similar between these cards.

If your budget is limited and an RTX 3070 is too expensive, a used RTX 2070 is about $260 on eBay. It is not clear yet if there will be an RTX 3060, but if you are on a limited budget, it might also be worth waiting a bit more. If priced similarly to the RTX 2060 and GTX 1060, you can expect a price of $250 to $300 and a pretty strong performance.

GPU cluster design depends highly on use. For a +1,024 GPU system, networking is paramount, but if users only use at most 32 GPUs at a time on such a system, investing in powerful networking infrastructure is a waste. Here, I would go with similar prototyping-rollout reasoning, as mentioned in the RTX 3080 vs RTX 3090 case.

In general, RTX cards are banned from data centers via the CUDA license agreement. However, often universities can get an exemption from this rule. It is worth getting in touch with someone from NVIDIA about this to ask for an exemption. If you are allowed to use RTX cards, I would recommend standard Supermicro 8 GPU systems with RTX 3080 or RTX 3090 GPUs (if sufficient cooling can be assured). A small set of 8x A100 nodes ensures effective “rollout” after prototyping, especially if there is no guarantee that the 8x RTX 3090 servers can be cooled sufficiently. In this case, I would recommend A100 over RTX 6000 / RTX 8000 because the A100 is pretty cost-effective and future proof.

In the case you want to train vast networks on a GPU cluster (+256 GPUs), I would recommend the NVIDIA DGX SuperPOD system with A100 GPUs. At a +256 GPU scale, networking is becoming paramount. If you want to scale to more than 256 GPUs, you need a highly optimized system, and putting together standard solutions is no longer cutting it.

Especially at a scale of +1024 GPUs, the only competitive solutions on the market are the Google TPU Pod and NVIDIA DGX SuperPod. At that scale, I would prefer the Google TPU Pod since their custom made networking infrastructure seems to be superior to the NVIDIA DGX SuperPod system — although both systems come quite close to each other. The GPU system offers a bit more flexibility of deep learning models and applications over the TPU system, while the TPU system supports larger models and provides better scaling. So both systems have their advantages and disadvantages.

I do not recommend buying multiple RTX Founders Editions (any) or RTX Titans unless you have PCIe extenders to solve their cooling problems. They will simply run too hot, and their performance will be way below what I report in the charts above. 4x RTX 2080 Ti Founders Editions GPUs will readily dash beyond 90C, will throttle down their core clock, and will run slower than properly cooled RTX 2070 GPUs.

I do not recommend buying Tesla V100 or A100 unless you are forced to buy them (banned RTX data center policy for companies) or unless you want to train very large networks on a huge GPU cluster — these GPUs are just not very cost-effective.

If you can afford better cards, do not buy GTX 16 series cards. These cards do not have tensor cores and, as such, provide relatively poor deep learning performance. I would choose a used RTX 2070 / RTX 2060 / RTX 2060 Super over a GTX 16 series card. If you are short on money, however, the GTX 16 series cards can be a good option.

If you already have RTX 2080 Tis or better GPUs, an upgrade to RTX 3090 may not make sense. Your GPUs are already pretty good, and the performance gains are negligible compared to worrying about the PSU and cooling problems for the new power-hungry RTX 30 cards — just not worth it.

You can use different types of GPUs in one computer (e.g., GTX 1080 + RTX 2080 + RTX 3090), but you will not be able to parallelize across them efficiently.

Despite heroic software engineering efforts, AMD GPUs + ROCm will probably not be able to compete with NVIDIA for at least 1-2 years, due to the lack of a community and of a Tensor Core equivalent.

Generally, no. PCIe 4.0 is great if you have a GPU cluster. It is okay if you have an 8x GPU machine, but otherwise, it does not yield many benefits. It allows better parallelization and a bit faster data transfer. Data transfers are not a bottleneck in any application. In computer vision, in the data transfer pipeline, the data storage can be a bottleneck, but not the PCIe transfer from CPU to GPU. So there is no real reason to get a PCIe 4.0 setup for most people. The benefits will be maybe 1-7% better parallelization in a 4 GPU setup.
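To get a feeling for why the CPU-to-GPU transfer is rarely the bottleneck, here is a back-of-envelope sketch. The bandwidth values are the approximate theoretical x16 peaks (real throughput is lower), and the batch shape is an illustrative assumption, not a measurement from my benchmarks.

```python
# Back-of-envelope estimate of host-to-GPU transfer time for one batch.
# The bandwidth numbers are approximate theoretical x16 peaks (assumption:
# real throughput is lower), and the batch shape is an illustrative choice.

PCIE3_X16_GB_PER_S = 15.75  # approx. theoretical peak of PCIe 3.0 x16
PCIE4_X16_GB_PER_S = 31.5   # approx. theoretical peak of PCIe 4.0 x16


def batch_bytes(batch_size, channels, height, width, bytes_per_value=4):
    """Size in bytes of a dense image batch (FP32 by default)."""
    return batch_size * channels * height * width * bytes_per_value


def transfer_ms(num_bytes, bandwidth_gb_per_s):
    """Time in milliseconds to move num_bytes at the given bandwidth."""
    return num_bytes / (bandwidth_gb_per_s * 1e9) * 1e3


if __name__ == "__main__":
    size = batch_bytes(32, 3, 224, 224)
    print(f"batch size:   {size / 1e6:.1f} MB")
    print(f"PCIe 3.0 x16: {transfer_ms(size, PCIE3_X16_GB_PER_S):.2f} ms")
    print(f"PCIe 4.0 x16: {transfer_ms(size, PCIE4_X16_GB_PER_S):.2f} ms")
```

Both results are on the order of a millisecond for such a batch, so doubling the link speed only matters if the rest of the training step is similarly short.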

Yes, you can! But you cannot parallelize efficiently across GPUs of different types. I could imagine a 3x RTX 3070 + 1 RTX 3090 could make sense for a prototyping-rollout split. On the other hand, parallelizing across 4x RTX 3070 GPUs would be very fast if you can make the model fit onto those GPUs. The only other reason why you want to do this that I can think of is if you’re going to use your old GPUs. This works just fine, but parallelization across those GPUs will be inefficient since the fastest GPU will wait for the slowest GPU to catch up to a synchronization point (usually gradient update).
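The waiting effect can be sketched with a toy model of synchronous data parallelism, assuming each GPU gets an equally sized shard of the batch. The throughput numbers are hypothetical and only there to show the shape of the problem.

```python
# Toy model of synchronous data parallelism: every GPU processes its share
# of the batch, then all GPUs wait at the gradient-synchronization point.
# The throughput numbers below are hypothetical, not benchmark results.


def step_time(samples_per_gpu, throughputs):
    """Seconds per step: the slowest GPU decides when the sync can happen."""
    return max(samples_per_gpu / t for t in throughputs)


def cluster_throughput(samples_per_gpu, throughputs):
    """Samples per second for the whole machine with equal-sized shards."""
    total_samples = samples_per_gpu * len(throughputs)
    return total_samples / step_time(samples_per_gpu, throughputs)


if __name__ == "__main__":
    mixed = [100.0, 100.0, 100.0, 200.0]    # three slower GPUs, one fast one
    uniform = [100.0, 100.0, 100.0, 100.0]  # four identical slower GPUs
    print(cluster_throughput(64, mixed))    # same value as the uniform case
    print(cluster_throughput(64, uniform))
```

In this model the fast GPU adds nothing over a fourth slow one. Giving the fast GPU a larger shard would help, but that is extra programming that standard data-parallel setups do not do for you.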

Generally, NVLink is not useful. NVLink is a high speed interconnect between GPUs. It is useful if you have a GPU cluster with +128 GPUs. Otherwise, it yields almost no benefits over standard PCIe transfers.

Definitely buy used GPUs. Used RTX 2070 ($400) and RTX 2060 ($300) are great. If you cannot afford that, the next best option is to try to get a used GTX 1070 ($220) or GTX 1070 Ti ($230). If that is too expensive, get a used GTX 980 Ti (6GB $150) or a used GTX 1650 Super ($190). If that is too expensive, it is best to roll with free GPU cloud services. These usually provide a GPU for a limited amount of time/credits, after which you need to pay. Rotate between services and accounts until you can afford your own GPU.

I built a carbon calculator for calculating your carbon footprint for academics (carbon from flights to conferences + GPU time). The calculator can also be used to calculate a pure GPU carbon footprint. You will find that GPUs produce much, much more carbon than international flights. As such, you should make sure you have a green source of energy if you do not want to have an astronomical carbon footprint. If no electricity provider in your area provides green energy, the best way is to buy carbon offsets. Many people are skeptical about carbon offsets. Do they work? Are they scams?

I believe skepticism just hurts in this case, because not doing anything would be more harmful than risking the probability of getting scammed. If you worry about scams, just invest in a portfolio of offsets to minimize risk.
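The GPU-only part of such a footprint estimate boils down to energy used times the carbon intensity of your electricity. The following is a minimal sketch of that arithmetic, not the calculator mentioned above; the function names and all example numbers are hypothetical, so plug in your own power draw and the figure for your local grid.

```python
# Minimal sketch of a GPU-only carbon estimate:
#   energy (kWh) = power (kW) * hours * number of GPUs
#   carbon (kg)  = energy (kWh) * grid carbon intensity (kg CO2 per kWh)
# All example values are hypothetical placeholders.


def gpu_energy_kwh(power_watts, hours, num_gpus=1):
    """Electrical energy drawn by the GPUs in kilowatt-hours."""
    return power_watts / 1000.0 * hours * num_gpus


def gpu_carbon_kg(power_watts, hours, kg_co2_per_kwh, num_gpus=1):
    """Carbon emitted for that energy at the given grid intensity."""
    return gpu_energy_kwh(power_watts, hours, num_gpus) * kg_co2_per_kwh


if __name__ == "__main__":
    # Hypothetical: 4 GPUs at 350 W each, running for 30 days.
    hours = 30 * 24
    print(gpu_energy_kwh(350, hours, num_gpus=4), "kWh")
    print(gpu_carbon_kg(350, hours, kg_co2_per_kwh=0.4, num_gpus=4), "kg CO2")
```

With a green energy source the intensity term drops toward zero, which is why the choice of electricity provider matters so much here.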

It does not seem so. Since the granularity of the sparse matrix needs to have 2 zero-valued elements, every 4 elements, the sparse matrices need to be quite structured. It might be possible to adjust the algorithm slightly, which involves that you pool 4 values into a compressed representation of 2 values, but this also means that precise arbitrary sparse matrix multiplication is not possible with Ampere GPUs.
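To make the structure requirement concrete, here is a small sketch that checks whether a row follows the pattern of 2 zeros in every 4 elements, and that forces a row into this pattern. Keeping the two largest-magnitude values per group is an assumption chosen for simplicity, not a description of what any NVIDIA library does.

```python
# Illustration of the 2:4 structured sparsity pattern: in every group of
# four consecutive values, at least two must be zero. Magnitude-based
# pruning is used here as an assumption, chosen for simplicity.


def is_2_of_4_sparse(row):
    """True if every aligned group of 4 values has at least 2 zeros."""
    if len(row) % 4 != 0:
        return False
    return all(
        sum(1 for v in row[i:i + 4] if v == 0) >= 2
        for i in range(0, len(row), 4)
    )


def prune_2_of_4(row):
    """Zero the two smallest-magnitude values in each group of 4."""
    if len(row) % 4 != 0:
        raise ValueError("row length must be a multiple of 4")
    pruned = list(row)
    for start in range(0, len(row), 4):
        group = range(start, start + 4)
        # Indices of the two largest magnitudes survive; the rest become 0.
        keep = sorted(group, key=lambda i: abs(row[i]), reverse=True)[:2]
        for i in group:
            if i not in keep:
                pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    # 50% of the values are zero, but they are not spread 2-per-4.
    arbitrary = [1.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 0.0]
    print(is_2_of_4_sparse(arbitrary))
    print(is_2_of_4_sparse(prune_2_of_4(arbitrary)))
```

The `arbitrary` row is half zeros overall and still fails the check, because its second group has only one zero. Pruning it into the pattern discards a non-zero value, so the result is an approximation of the original row rather than the same matrix.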

I do not recommend Intel CPUs unless you heavily use CPUs in Kaggle competitions (heavy linear algebra on the CPU). Even for Kaggle competitions AMD CPUs are still great, though. AMD CPUs are cheaper and better than Intel CPUs in general for deep learning. For a 4x GPU build, my go-to CPU would be a Threadripper. We built dozens of systems at our university with Threadrippers, and they all work great, with no complaints yet. For 8x GPU systems, I would usually go with CPUs that your vendor has experience with. CPU and PCIe/system reliability is more important in 8x systems than straight performance or straight cost-effectiveness.

No. GPUs are usually perfectly cooled if there is at least a small gap between GPUs. Case design will give you 1-3 C better temperatures, space between GPUs will provide you with 10-30 C improvements. The bottom line, if you have space between GPUs, cooling does not matter. If you have no space between GPUs, you need the right cooler design (blower fan) or another solution (water cooling, PCIe extenders), but in either case, case design and case fans do not matter.

AMD GPUs are great in terms of pure silicon: great FP16 performance, great memory bandwidth. However, their lack of Tensor Cores or an equivalent makes their deep learning performance poor compared to NVIDIA GPUs. Packed low-precision math does not cut it. Without this hardware feature, AMD GPUs will never be competitive. Rumors suggest that a data center card with a Tensor Core equivalent is planned for 2020, but no new data has emerged since then. Just having data center cards with a Tensor Core equivalent would also mean that few would be able to afford such AMD GPUs, which would give NVIDIA a competitive advantage.

Let’s say AMD introduces a Tensor-Core-like hardware feature in the future. Then many people would say, “But there is no software that works for AMD GPUs! How am I supposed to use them?” This is mostly a misconception. The AMD software via ROCm has come a long way, and support via PyTorch is excellent. While I have not seen many experience reports for AMD GPUs + PyTorch, all the software features are integrated. It seems, if you pick any network, you will be just fine running it on AMD GPUs. So here AMD has come a long way, and this issue is more or less solved.

Thus, it is likely that AMD will not catch up until a Tensor Core equivalent is introduced (1/2 to 1 year?) and a strong community is built around ROCm (2 years?). AMD will always snatch a part of the market share in specific subgroups (e.g., cryptocurrency mining, data centers). Still, in deep learning, NVIDIA will likely keep its monopoly for at least a couple more years.

I am a competitive computer vision, pretraining, or machine translation researcher: 4x RTX 3090. Wait until working builds with good cooling and enough power are confirmed (I will update this blog post).

I am an NLP researcher: If you do not work on machine translation, language modeling, or pretraining of any kind, an RTX 3080 will be sufficient and cost-effective.

I started deep learning, and I am serious about it: Start with an RTX 3070. If you are still serious after 6-9 months, sell your RTX 3070 and buy 4x RTX 3080. Depending on what area you choose next (startup, Kaggle, research, applied deep learning), sell your GPUs, and buy something more appropriate after about three years (next-gen RTX 40s GPUs).

I want to try deep learning, but I am not serious about it: The RTX 2060 Super is excellent but may require a new power supply to be used. If your motherboard has a PCIe x16 slot and you have a power supply with around 300 W, a GTX 1050 Ti is a great option since it will not require any other computer components to work with your desktop computer.

GPU Cluster used for parallel models across fewer than 128 GPUs: If you are allowed to buy RTX GPUs for your cluster: 66% 8x RTX 3080 and 33% 8x RTX 3090 (only if sufficient cooling is guaranteed/confirmed). If cooling of RTX 3090s is not sufficient, buy 33% RTX 6000 GPUs or 8x Tesla A100 instead. If you are not allowed to buy RTX GPUs, I would probably go with 8x A100 Supermicro nodes or 8x RTX 6000 nodes.

2016-06-25: Reworked multi-GPU section; removed simple neural network memory section as no longer relevant; expanded convolutional memory section; truncated AWS section due to not being efficient anymore; added my opinion about the Xeon Phi; added updates for the GTX 1000 series

For past updates of this blog post, I want to thank Mat Kelcey for helping me to debug and test custom code for the GTX 970; I want to thank Sander Dieleman for making me aware of the shortcomings of my GPU memory advice for convolutional nets; I want to thank Hannes Bretschneider for pointing out software dependency problems for the GTX 580; and I want to thank Oliver Griesel for pointing out notebook solutions for AWS instances. I want to thank Brad Nemire for providing me with an RTX Titan for benchmarking purposes.

Three things go into choosing the best gaming laptop: performance, portability, and price. So, whether you need a colossal workstation or a sleek, ultra-thin gaming notebook, your future gaming laptop should find a way to fit perfectly into your daily life. As much as most of us would love the best gaming PC, the reality is that some people don't have the room (or the need) for a full-size PC and monitor set-up.

MSI Stealth 15M Gaming Laptop (15.6-in, i7-1280P, RTX 3060, 16GB RAM, 512GB SSD) | AU$1,949 (was AU$2,529) at MWave. Enjoy a chunk off this slim MSI gaming laptop, which offers good gaming performance in an affordable (and only 1.8kg) package. If you're after a machine that will feel right for both work and gaming, this one is well worth investigation - especially at this price. That said, it was AU$1,799 a while ago, so it may be worth waiting for another price drop.

We've played with the Razer Blade 15 Advanced with a 10th Gen Intel chip and RTX 3080 (95W) GPU inside it. And we fell in love all over again, and we're looking forward to getting the Intel Alder Lake versions with the RTX 3080 Ti humming away inside, too. Soon, my precious, soon.

These latest models, you see, have upped the graphics processing even further than the previous setups, with support for up to the 16GB RTX 3080 Ti, which is incredible in this small chassis. You will get some throttling because of that slimline design, but you're still getting outstanding performance from this beautiful machine.

It's not the lightest gaming laptop you can buy, but five pounds is still way better than plenty of traditional gaming laptops, while also offering similar performance and specs. That heft helps make it feel solid too. It also means the Blade 15 travels well in your backpack. An excellent choice for the gamer on the go... or if you don't have the real estate for a full-blown gaming desktop and monitor.

Keyboard snobs will be happy to see a larger shift and half-height arrow keys. The Blade 15 Advanced offers per-key RGB lighting over the Base Model's zonal lighting. Typing feels great, and I've always liked the feel of the Blade's keycaps. The trackpad can be frustrating at times, but you're going to want to use a mouse with this gorgeous machine anyway, so it's not the end of the world.

One of the best things about the Blade 15 is the number of configurations Razer offers. From the RTX 3060 Base Edition to the RTX 3080 Ti Advanced with a 144Hz 4K panel, there's something for almost everyone. It's one of the most beautiful gaming laptops around and still one of the most powerful.

We checked out the version with AMD's RX 6800S under the hood, though there is an option for an RX 6700S, for a chunk less cash. Arguably, that cheaper option sounds a bit better to us, as the high-end one can get a little pricey and close in on the expensive but excellent Razer Blade 14. It's not helped much by its 32GB of DDR5-4800 RAM in that regard, though we do love having all that speedy memory raring to go for whatever you can throw at it.

The RX 6800S makes quick work of our benchmarking suite, and I have to say I'm heartily impressed with the G14's gaming performance overall. That's even without turning to the more aggressive Turbo preset; I tested everything with the standard Performance mode. It's able to top the framerate of RTX 3080 and RTX 3070 mobile chips pretty much across the board, and while it does slip below the RTX 3080 Ti in the Razer Blade 17, that's a much larger laptop with a much larger price tag.

One of my favorite things about the G14 is in the name: it's a 14-inch laptop. The blend of screen real estate and compact size is a great in-between of bulkier 15- and 17-inch designs, and not quite as compromised as a 13-inch model can feel. But the big thing with the 2022 model is that 14-inch size has been fitted out with a larger 16:10 aspect ratio than previous models' 16:9 panels.

The only real downside with this machine is the battery life, which really isn't the best while gaming: less than an hour while actually playing. You'll get more when playing videos or doing something boring like working, but we do expect a bit more from a modern laptop. It's not a deal-breaker, but definitely something you'll want to bear in mind.

I am mighty tempted to push the Razer Blade 14 further up the list, simply because the 14-inch form factor has absolutely won me over. The Asus ROG Zephyrus G14 in the No. 2 slot reintroduced the criminally under-used laptop design, but Razer has perfected it. Feeling noticeably smaller than the 15-inch Blade and closer to the ultrabook Stealth 13, the Blade 14 mixes a matte black MacBook Pro-style with genuine PC gaming pedigree.

The Razer style is classic, and it feels great to hold, too. And with the outstanding AMD Ryzen 9 5900HX finally finding its way into a Blade notebook, you're getting genuine processing power you can sling into a messenger bag. And soon you will be able to get your hands on the Blade 14 with the brand new Ryzen 9 6900HX chip at its heart which will actually be able to save on battery on the go by using its RDNA 2-based onboard graphics.

Add in some extra Nvidia RTX 30-series graphics power (now all the way up to an RTX 3080 Ti, but wear earplugs) and you've got a great mix of form and function that makes it the most desirable laptop I've maybe ever tested.

My only issue is that the RTX 3080 Ti would be too limited by the diminutive 14-inch chassis and run a little loud. So I would then recommend the lower-spec GPU options, though if you're spending $1,800 on a notebook, that feels too high for 1080p gaming. But you're not buying the Blade 14 specifically for outright performance and anything else; this is about having all the power you need in a form factor that works for practical mobility.

The PC is all about choice, and Razer has finally given us the choice to use an AMD CPU in its machines, although it would be great if we had the option elsewhere in its range of laptops. It's notable that we've heard nothing about a potential Blade 14 using an AMD discrete Radeon GPU alongside that Ryzen CPU. Ah well.

Forgetting the politics a second, the Razer Blade 14 itself is excellent, and is one of the most desirable gaming laptops I've had in my hands this year. Maybe ever. The criminally underused 14-inch form factor also deserves to become one of the biggest sellers in Razer's extensive lineup of laptops. And if this notebook becomes the success it ought to be, then the company may end up having to make some difficult choices about what CPUs it offers, and where.

The choice you have to make, though, is which graphics card to go with. Sure, the RTX 3080 is quicker, but it leaves a lot more gaming performance on the workshop floor. That's why the cheaper RTX 3060, with its full-blooded frame rates, gets my vote every day.

The most significant improvement from its previous model is a slimmer, sleeker design. Along with thinner bezels around a 144 Hz display, the sleeker design gives it a more high-end vibe. It’s a welcome toned-down look, in case you’re hoping for a gaming laptop that doesn’t shout ‘gamer’ as soon as you pull it out of your bag. The display itself seems the only downside, not having as rich a colour range as the other gaming laptops on this list.

The Legion Pro 5 proves that AMD is absolutely a serious competitor in the gaming laptop space. Pairing the mobile Ryzen 7 5800H with the RTX 3070 results in a laptop that not only handles modern games with ease, but that can turn its hand at more serious escapades too.

The QHD 16:10 165Hz screen is a genuine highlight here and one that makes gaming and just using Windows a joy. It's an IPS panel with a peak brightness of 500nits too, so you're not going to be left wanting whether you're gaming or watching movies.

The Legion Pro 5 really is a beast when it comes to gaming too, with that high-powered RTX 3070 (with a peak delivery of 140W it's faster than some 3080s) being a great match for that vibrant screen. You're going to be able to run the vast majority of games at the native 2560 x 1600 resolution at the max settings and not miss a beat. The fact that you can draw on DLSS and enjoy some ray tracing extras for the money all helps to make this an incredibly attractive package.

If anything knocks the Legion 5 Pro, it would have to be its rather underwhelming speakers and microphone combo. Anything with a hint of bass tends to suffer, which is a shame. The microphone was another surprising disappointment. My voice, I was told, sounded distant and quiet during work calls, which paired with a mediocre 720p webcam doesn't make for the best experience. I will commend the Legion for fitting a webcam on a screen with such a small top bezel though: A for effort.

The Lenovo Legion Pro 5 made me realize that Legion laptops deserve a spot at the top, being one of the more impressive AMD-powered laptops we've gotten our hands on recently. From the bright, colorful screen to the great feeling full-sized keyboard, the Legion Pro 5 has everything you want in a gaming laptop for a lot less than the competition manages.

MSI GS66 Stealth | Intel Core i7 | Nvidia RTX 3080. There's no getting away from it; this is a lot of laptop for a lot of money. The RTX 3080 is a quality gaming GPU, and that 240Hz 1440p screen will allow you to get the most out of it, too. The Max-Q 3.0 features mean this thin-and-light gaming laptop feels slick and quick with 32GB RAM and a 1TB SSD backing it all up.

The MSI GS66 is one hell of a machine: It's sleek, slick, and powerful. But it's not Nvidia Ampere's power without compromise. MSI has had to be a little parsimonious about its power demands to pack something as performant as an RTX 3080 into an 18mm thin chassis.

The top GPU is the 95W version, which means it only just outperforms a fully unleashed RTX 3070, the sort you'll find in the Gigabyte Aorus 15G XC. But it is still an astonishingly powerful slice of mobile graphics silicon.

The GS66 also comes with an outstanding 240Hz 1440p panel, which perfectly matches the powerful GPU when it comes to games. Sure, you'll have to make some compromises compared to an RTX 3080 you might find in a hulking workstation, but the MSI GS66 Stealth is a genuinely slimline gaming laptop.

We loved Acer's Predator Helios 300 during the GTX 10-series era, and the current generation Helios still manages to punch above its weight class compared to other $1,500 laptops. It may not be the best gaming laptop, but it's one of the best value machines around.

The newest version of the Helios packs an RTX 3060 GPU and a sleeker form factor without raising the price significantly. It also has a 144Hz screen and smaller bezels, putting it more in line with sleek thin-and-lights than its more bulky brethren of the previous generation.

There's absolutely no question you can buy a much more sensible gaming laptop than this, but there is something about the excesses of the ROG Strix Scar 17 that make it incredibly appealing. It feels like everything about it has been turned up to 11, from the overclocked CPU, which is as beastly as it gets, to the gorgeously speedy 360Hz screen on the top model. Asus has pushed that little bit harder than most to top our gaming laptop benchmarks.

And top the benchmarks of the best gaming laptops it does, thanks in the main to the GeForce RTX 3070 Ti that can be found beating away at its heart. This is the 150W version of Nvidia's new Ampere GPU, which means it's capable of hitting the kind of figures thinner machines can only dream of. You can draw on Nvidia's excellent DLSS, where implemented, to help hit ridiculous frame rates, too. And if that's not enough, you can also grab this machine kitted out with an RTX 3080 Ti too.

Importantly, all this power comes at a cost not only to the temperatures but also to the battery life. Sure, you're not as likely to play games with the thing unplugged, but if you ever have to, an hour is all you get.

I'm not enamored with the touchpad either, while we're nit-picking. I keep trying to click the space beneath it, and my poor, callous fingers keep forgetting where the edges are. This particular model doesn't come with a camera either, which is a glaring omission for the price, and there's a distinct lack of USB Type-A ports for the unnecessary arsenal of peripherals I'm packing. There are a couple of USB Type-C ports around the back to make up for it, though, and I'm happy there's a full-sized keyboard.

While you could get a Lenovo Legion 5 Pro with its RTX 3070 for half the price, spending $2,999 on this Strix Scar config will put you ahead of the competition with very little effort. And sure, it's not as stylish or as apt with ray tracing as the Blade 17, but there's a good $1,000 price difference there. And for something that can outpace the laptops of yesteryear in almost every running, I'd pay that price for sure.

As we said at the top, an RTX 3080 confined in an 18mm chassis will perform markedly slower than one in a far chunkier case with room for higher performance cooling.

A. This will arguably have the most immediate impact on your choice of build. Picking the size of your screen basically dictates the size of your laptop. A 13-inch machine will be a thin-and-light ultrabook, while a 17-inch panel almost guarantees workstation stuff. At 15-inches you're looking at the most common size of gaming laptop screen.

A. We love high refresh rate screens here, and while you cannot guarantee your RTX 3060 is going to deliver 300 fps in the latest games, you'll still see a benefit in general looks and feel running a 300Hz display.

A 1440p screen offers the perfect compromise between high resolution and decent gaming performance, while a 4K notebook will overstress your GPU and tax your eyeballs as you squint at your 15-inch display.
