39 lcd panel price free sample

Given all their advantages and disadvantages, LCDs are essentially a good choice for those who see the TV starting from a 4K smartphone. Nowadays, in addition to the wholesale models, LCDs are essentially a good option for those that don't have the capacity of a device.

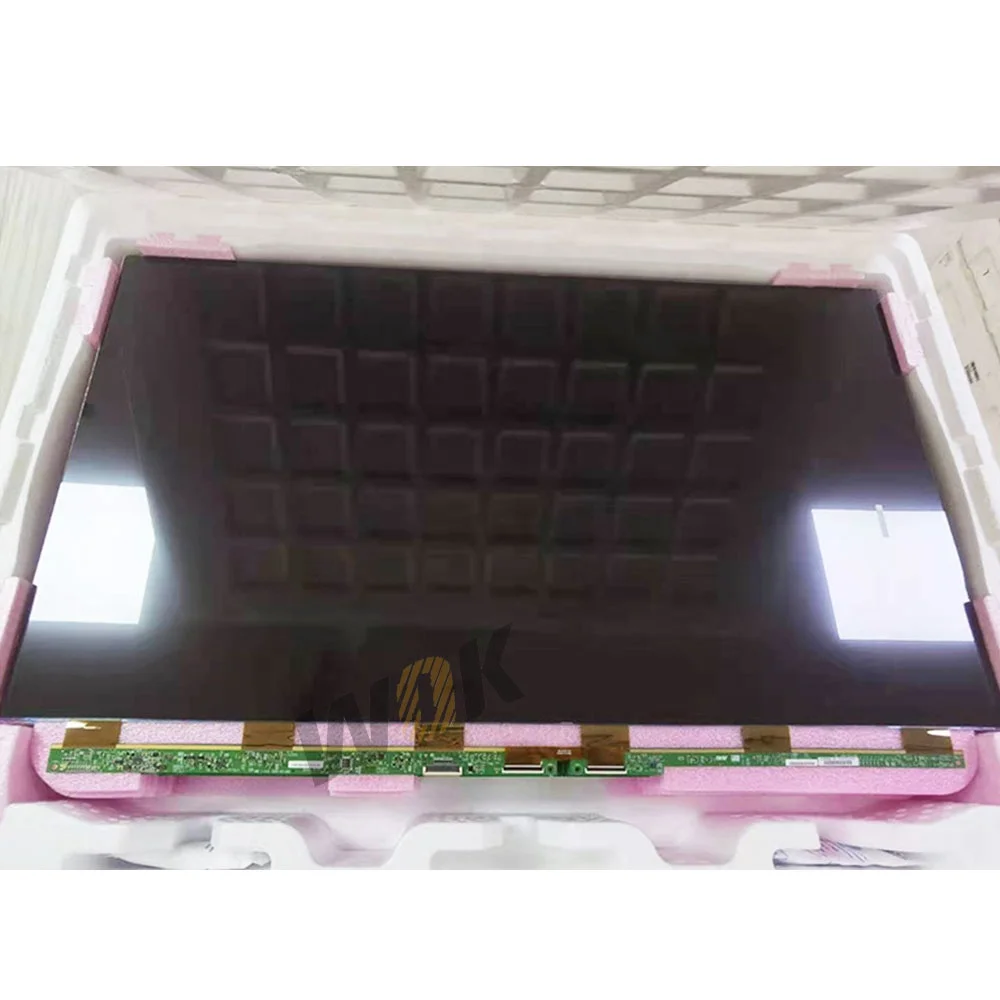

Guangzhou Qiangfeng Electronics Co., Ltd. was formally established in 2010 with a registered capital of one million yuan. The company has strong capabilities and abundant LCD resources, and it is a specially authorized professional distributor of Innolux Corporation (Taiwan). It mainly handles the brands Innolux, AUO, LG, and BOE, among others, and can provide LCD SKD product distribution services in Hong Kong and overseas. The company's business philosophy is "focus on quality, service." We welcome new and old customers from all walks of life to contact us for future business relationships and mutual success.

A: It is SKD (Semi-Knocked Down). We can provide all the accessories you need for a complete TV set, for example the TV panel, T-con, motherboard, power supply, wires, and TV casing.

A: Prices are affected by factors such as delivery location, purchase quantity, specific requirements, and exchange rates. Please contact customer service for the order price.

The HDMI (High-Definition Multimedia Interface) cable transfers digital video and audio signals between two devices. Connect an HDMI cord to your TV and any compatible input device like a DVD player, gaming system, or computer. You can then view the images on your TV by changing the input source on your television display.

What’s the difference between an LCD TV and LCD HDTV?

A TV has to be able to display a vertical resolution of at least 720p for it to be considered an HDTV. A standard definition LCD TV has a resolution of 480p. So a standard Toshiba LCD TV will be able to show an HDTV picture, but it will be scaled to fit the pixel field of that standard television. An LCD HDTV is the TV that will allow you to view your TV with a true HDTV quality image.

Does a Toshiba smart TV connect to the internet?

LCD (Liquid Crystal Display) - The most frequent technology, LCD TVs have lightweight, flat screens that have HD picture quality and low power consumption.

LED (Light Emitting Diode) - Uses LEDs for high brightness. Please note, per industry practice, some TVs are called LED when in fact they are LCD TVs with LED backlighting.

What are the uses for a JVC analog TV?

Screen size - This JVC television product category covers screen sizes from 30-inch to 39-inch, with 32-inch and 37-inch being common sizes within the range. Note that format shapes come in letterbox and widescreen. You'll need widescreen to watch TV programs and movies that are in widescreen format.

In general, all-prefix-sums can be used to convert certain sequential computations into equivalent, but parallel, computations, as shown in Table 39-1.

Table 39-1. A Sequential Computation and Its Parallel Equivalent

| Sequential | Parallel |
| --- | --- |
| `out[0] = 0;`<br>`for j from 1 to n do`<br>`out[j] = out[j-1] + f(in[j-1]);` | `forall j in parallel do`<br>`temp[j] = f(in[j]);`<br>`all_prefix_sums(out, temp);` |
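The equivalence in the table can be checked with a small host-side sketch. This is plain C++ rather than CUDA, the function `f` is a hypothetical stand-in for any pure per-element function, and the arrays are assumed to have `n` elements with an exclusive prefix sum (so `out[0]` is 0). The names `sequential_form` and `parallel_form` are illustrative only.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-element function; any pure function would do.
inline int f(int x) { return x * x; }

// Exclusive all-prefix-sums: out[j] = temp[0] + ... + temp[j-1], out[0] = 0.
// Written as a sequential loop here; this is the step a parallel scan replaces.
std::vector<int> all_prefix_sums(const std::vector<int>& temp) {
    std::vector<int> out(temp.size(), 0);
    for (std::size_t j = 1; j < temp.size(); ++j)
        out[j] = out[j - 1] + temp[j - 1];
    return out;
}

// Left column of the table: each out[j] depends on out[j-1].
std::vector<int> sequential_form(const std::vector<int>& in) {
    std::vector<int> out(in.size(), 0);
    for (std::size_t j = 1; j < in.size(); ++j)
        out[j] = out[j - 1] + f(in[j - 1]);
    return out;
}

// Right column: an independent map over all j, followed by a scan.
std::vector<int> parallel_form(const std::vector<int>& in) {
    std::vector<int> temp(in.size());
    for (std::size_t j = 0; j < in.size(); ++j)  // "forall j in parallel"
        temp[j] = f(in[j]);
    return all_prefix_sums(temp);
}
```

Both forms produce the same output; only the second separates the independent work (the map) from the dependent work (the scan), which is what makes it a candidate for parallel execution.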

The pseudocode in Algorithm 1 shows a first attempt at a parallel scan. This algorithm is based on the scan algorithm presented by Hillis and Steele (1986) and demonstrated for GPUs by Horn (2005). Figure 39-2 illustrates the operation. The problem with Algorithm 1 is apparent if we examine its work complexity. The algorithm performs O(n log₂ n) addition operations. Remember that a sequential scan performs O(n) adds. Therefore, this naive implementation is not work-efficient. The factor of log₂ n can have a large effect on performance.

Algorithm 1 also cannot run in place on a GPU when the array is larger than a warp, because the results of one warp would be overwritten by threads in another warp. To solve this problem, we need to double-buffer the array we are scanning using two temporary arrays. Pseudocode for this is given in Algorithm 2, and CUDA C code for the naive scan is given in Listing 39-1. Note that this code will run on only a single thread block of the GPU, and so the size of the arrays it can process is limited (to 512 elements on NVIDIA 8 Series GPUs). Extension of scan to large arrays is discussed in Section 39.2.4.
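The double-buffered naive scan can be sketched on the host as follows. This is not Listing 39-1. It is a sequential C++ simulation under the assumption that each iteration of the inner loop stands for one GPU thread, and that the two buffers `src` and `dst` play the role of the two temporary arrays. The optional `adds` counter is a hypothetical addition used only to make the extra work visible.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Exclusive scan in the Hillis-Steele style with two buffers.
// If adds is non-null, it receives the number of additions performed.
std::vector<int> naive_scan_exclusive(const std::vector<int>& in,
                                      std::size_t* adds = nullptr) {
    const std::size_t n = in.size();
    std::size_t add_count = 0;
    std::vector<int> src(n, 0), dst(n, 0);
    // Shift the input right by one element so the result is exclusive.
    for (std::size_t k = 1; k < n; ++k) src[k] = in[k - 1];
    for (std::size_t offset = 1; offset < n; offset *= 2) {
        // Every k reads only from src and writes only to dst, so no
        // "thread" can overwrite a value another one still needs.
        for (std::size_t k = 0; k < n; ++k) {
            if (k >= offset) {
                dst[k] = src[k] + src[k - offset];
                ++add_count;
            } else {
                dst[k] = src[k];
            }
        }
        std::swap(src, dst);  // swap the roles of the two buffers
    }
    if (adds != nullptr) *adds = add_count;
    return src;
}
```

For an 8-element input this performs 17 additions across three passes, compared with 7 for a sequential scan, which illustrates the extra logarithmic factor of work.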

Our implementation of scan from Section 39.2.1 would probably perform very badly on large arrays due to its work-inefficiency. We would like to find an algorithm that would approach the efficiency of the sequential algorithm, while still taking advantage of the parallelism in the GPU. Our goal in this section is to develop a work-efficient scan algorithm for CUDA that avoids the extra factor of log₂ n work performed by the naive algorithm. This algorithm is based on the one presented by Blelloch (1990). To do this we will use an algorithmic pattern that arises often in parallel computing: balanced trees. The idea is to build a balanced binary tree on the input data and sweep it to and from the root to compute the prefix sum. A binary tree with n leaves has d = log₂ n levels, and each level d has 2^d nodes. If we perform one add per node, then we will perform O(n) adds on a single traversal of the tree.

The tree we build is not an actual data structure, but a concept we use to determine what each thread does at each step of the traversal. In this work-efficient scan algorithm, we perform the operations in place on an array in shared memory. The algorithm consists of two phases: the reduce phase (also known as the up-sweep phase) and the down-sweep phase. In the reduce phase, we traverse the tree from leaves to root computing partial sums at internal nodes of the tree, as shown in Figure 39-3. This is also known as a parallel reduction, because after this phase, the root node (the last node in the array) holds the sum of all nodes in the array. Pseudocode for the reduce phase is given in Algorithm 3.

In the down-sweep phase, we traverse back down the tree from the root, using the partial sums from the reduce phase to build the scan in place on the array. We start by inserting zero at the root of the tree, and on each step, each node at the current level passes its own value to its left child, and the sum of its value and the former value of its left child to its right child. The down-sweep is shown in Figure 39-4, and pseudocode is given in Algorithm 4. CUDA C code for the complete algorithm is given in Listing 39-2. Like the naive scan code in Section 39.2.1, the code in Listing 39-2 will run on only a single thread block. Because it processes two elements per thread, the maximum array size this code can scan is 1,024 elements on an NVIDIA 8 Series GPU. Scans of larger arrays are discussed in Section 39.2.4.
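The two phases can be sketched as a sequential C++ simulation. This is not Listing 39-2. It assumes the array length is a power of two, and each iteration of an inner loop stands for the work of one thread at that tree level. The function name `blelloch_scan_inplace` is illustrative.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// In-place exclusive scan using an up-sweep (reduce) and a down-sweep.
void blelloch_scan_inplace(std::vector<int>& a) {
    const std::size_t n = a.size();
    assert(n != 0 && (n & (n - 1)) == 0);  // assumption: n is a power of two

    // Up-sweep: build partial sums at the internal nodes of the tree.
    for (std::size_t stride = 1; stride < n; stride *= 2)
        for (std::size_t i = 2 * stride - 1; i < n; i += 2 * stride)
            a[i] += a[i - stride];

    // The root (last element) now holds the total; replace it with zero.
    a[n - 1] = 0;

    // Down-sweep: each node passes its value to its left child and the
    // sum of its value and the left child's former value to its right child.
    for (std::size_t stride = n / 2; stride >= 1; stride /= 2) {
        for (std::size_t i = 2 * stride - 1; i < n; i += 2 * stride) {
            const int left = a[i - stride];
            a[i - stride] = a[i];
            a[i] += left;
        }
    }
}
```

Each sweep touches every tree node once, so the total number of additions is proportional to n rather than n log₂ n.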

Binary tree algorithms such as our work-efficient scan double the stride between memory accesses at each level of the tree, simultaneously doubling the number of threads that access the same bank. For deep trees, as we approach the middle levels of the tree, the degree of the bank conflicts increases, and then it decreases again near the root, where the number of active threads decreases (due to the if statement in Listing 39-2). For example, if we are scanning a 512-element array, the shared memory reads and writes in the inner loops of Listing 39-2 experience up to 16-way bank conflicts. This has a significant effect on performance.

Bank conflicts are avoidable in most CUDA computations if care is taken when accessing __shared__ memory arrays. We can avoid most bank conflicts in scan by adding a variable amount of padding to each shared memory array index we compute. Specifically, we add to the index the value of the index divided by the number of shared memory banks. This is demonstrated in Figure 39-5. We start from the work-efficient scan code in Listing 39-2, modifying only the highlighted blocks A through E. To simplify the code changes, we define a macro CONFLICT_FREE_OFFSET, shown in Listing 39-3.

Listing 39-3. Macro Used for Computing Bank-Conflict-Free Shared Memory Array Indices

    #define NUM_BANKS 16
    #define LOG_NUM_BANKS 4
    /* Parenthesized so that each shift is evaluated before the addition. */
    #define CONFLICT_FREE_OFFSET(n) \
        (((n) >> LOG_NUM_BANKS) + ((n) >> (2 * LOG_NUM_BANKS)))

The blocks A through E in Listing 39-2 need to be modified using this macro to avoid bank conflicts. Two changes must be made to block A. Each thread loads two array elements from the __global__ array g_idata into the __shared__ array temp. In the original code, each thread loads two adjacent elements, resulting in the interleaved indexing of the shared memory array, incurring two-way bank conflicts. By instead loading two elements from separate halves of the array, we avoid these bank conflicts. Also, to avoid bank conflicts during the tree traversal, we need to add padding to the shared memory array every NUM_BANKS (16) elements. We do this using the macro in Listing 39-3 as shown in Listing 39-4. Note that we store the offsets to the shared memory indices so that we can use them again at the end of the scan, when writing the results back to the output array g_odata in block E.
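The effect of the padding can be illustrated with a small host-side calculation. The sketch below makes several assumptions: 16 banks, 16 threads issuing a shared memory access together, a bank number equal to the index modulo 16, and a tree-traversal index pattern of `stride * (2 * tid + 1) - 1`. It uses only the single-term padding rule described in the text (index divided by the number of banks), and `max_conflict_degree` is a hypothetical helper, not part of any listing.

```cpp
#include <algorithm>
#include <array>
#include <cassert>

constexpr unsigned kNumBanks = 16;    // assumed number of shared memory banks
constexpr unsigned kLogNumBanks = 4;  // log2(kNumBanks)

// Padding rule from the text: the index divided by the number of banks.
constexpr unsigned conflict_free_offset(unsigned index) {
    return index >> kLogNumBanks;
}

// Largest number of the 16 simulated threads that hit the same bank
// at one level of the tree traversal.
unsigned max_conflict_degree(unsigned stride, bool padded) {
    assert(stride >= 1);
    std::array<unsigned, kNumBanks> hits{};  // zero-initialized counters
    for (unsigned tid = 0; tid < kNumBanks; ++tid) {
        unsigned index = stride * (2 * tid + 1) - 1;
        if (padded) index += conflict_free_offset(index);
        ++hits[index % kNumBanks];
    }
    return *std::max_element(hits.begin(), hits.end());
}
```

Under these assumptions, a stride of 8 sends all 16 unpadded accesses to the same bank, while the padded indices fall in 16 different banks.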

The basic idea is simple. We divide the large array into blocks that each can be scanned by a single thread block, and then we scan the blocks and write the total sum of each block to another array of block sums. We then scan the block sums, generating an array of block increments that are added to all elements in their respective blocks. In more detail, let N be the number of elements in the input array, and B be the number of elements processed in a block. We allocate N/B thread blocks of B/2 threads each. (Here we assume that N is a multiple of B, and we extend to arbitrary dimensions in the next paragraph.) A typical choice for B on NVIDIA 8 Series GPUs is 128. We use the scan algorithm of the previous sections to scan each block i independently, storing the resulting scans to sequential locations of the output array. We make one minor modification to the scan algorithm. Before zeroing the last element of block i (the block of code labeled B in Listing 39-2), we store the value (the total sum of block i) to an auxiliary array SUMS. We then scan SUMS in the same manner, writing the result to an array INCR. We then add INCR[i] to all elements of block i using a simple uniform add kernel invoked on N/B thread blocks of B/2 threads each. This is demonstrated in Figure 39-6. For details of the implementation, please see the source code available at http://www.gpgpu.org/scan-gpugems3/.
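The three steps above (scan each block, scan the block sums, add the increments) can be sketched on the host as follows. This is a sequential C++ illustration that assumes N is a multiple of B. The vectors `sums` and `incr` correspond to SUMS and INCR in the text, and the per-block scan is a plain loop standing in for the single-block GPU scan.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sequential exclusive scan, used here for the array of block sums.
std::vector<int> exclusive_scan(const std::vector<int>& v) {
    std::vector<int> out(v.size(), 0);
    int running = 0;
    for (std::size_t i = 0; i < v.size(); ++i) {
        out[i] = running;
        running += v[i];
    }
    return out;
}

// Exclusive scan of a large array processed in blocks of B elements.
std::vector<int> blocked_scan(const std::vector<int>& in, std::size_t B) {
    const std::size_t N = in.size();
    assert(B > 0 && N % B == 0);  // assumption: N is a multiple of B
    const std::size_t num_blocks = N / B;
    std::vector<int> out(N), sums(num_blocks);

    // Step 1: scan each block independently and record its total.
    for (std::size_t b = 0; b < num_blocks; ++b) {
        int running = 0;
        for (std::size_t i = 0; i < B; ++i) {
            out[b * B + i] = running;
            running += in[b * B + i];
        }
        sums[b] = running;
    }

    // Step 2: scan the block totals to get one increment per block.
    const std::vector<int> incr = exclusive_scan(sums);

    // Step 3: uniform add of incr[b] to every element of block b.
    for (std::size_t b = 0; b < num_blocks; ++b)
        for (std::size_t i = 0; i < B; ++i)
            out[b * B + i] += incr[b];
    return out;
}
```

On the GPU, steps 1 and 3 would each run as one kernel launch over N/B thread blocks, and step 2 would reuse the same scan on the much smaller sums array.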

After optimizing shared memory accesses, the main bottlenecks left in the scan code are global memory latency and instruction overhead due to looping and address computation instructions. To better cover the global memory access latency and improve overall efficiency, we need to do more computation per thread. We employ a technique suggested by David Lichterman, which processes eight elements per thread instead of two by loading two float4 elements per thread rather than two float elements (Lichterman 2007). Each thread performs a sequential scan of each float4, stores the first three elements of each scan in registers, and inserts the total sum into the shared memory array. With the partial sums from all threads in shared memory, we perform an identical tree-based scan to the one given in Listing 39-2. Each thread then constructs two float4 values by adding the corresponding scanned element from shared memory to each of the partial sums stored in registers. Finally, the float4 values are written to global memory. This approach, which is more than twice as fast as the code given previously, is a consequence of Brent's Theorem and is a common technique for improving the efficiency of parallel algorithms (Quinn 1994).

Our efforts to create an efficient scan implementation in CUDA have paid off. Performance is up to 20 times faster than a sequential version of scan running on a fast CPU, as shown in the graph in Figure 39-7. Also, thanks to the advantages provided by CUDA, we outperform an optimized OpenGL implementation running on the same GPU by up to a factor of seven. The graph also shows the performance we achieve when we use the naive scan implementation from Section 39.2.1 for each block. Because both the naive scan and the work-efficient scan must be divided across blocks of the same number of threads, the performance of the naive scan is slower by a factor of O(log₂ B), where B is the block size, rather than a factor of O(log₂ n). Figure 39-8 compares the performance of our best CUDA implementation with versions lacking bank-conflict avoidance and loop unrolling.

Figure 39-7 Performance of the Work-Efficient, Bank-Conflict-Free Scan Implemented in CUDA Compared to a Sequential Scan Implemented in C++, and a Work-Efficient Implementation in OpenGL

Prior to the introduction of CUDA, several researchers implemented scan using graphics APIs such as OpenGL and Direct3D (see Section 39.3.4 for more). To demonstrate the advantages CUDA has over these APIs for computations like scan, in this section we briefly describe the work-efficient OpenGL inclusive-scan implementation of Sengupta et al. (2006). Their implementation is a hybrid algorithm that performs a configurable number of reduce steps as shown in Algorithm 5. It then runs the double-buffered version of the sum scan algorithm previously shown in Algorithm 2 on the result of the reduce step. Finally it performs the down-sweep as shown in Algorithm 6.

The main advantages CUDA has over OpenGL are its on-chip shared memory, thread synchronization functionality, and scatter writes to memory, which are not exposed to OpenGL pixel shaders. CUDA divides the work of a large scan into many blocks, and each block is processed entirely on-chip by a single multiprocessor before any data is written to off-chip memory. In OpenGL, all memory updates are off-chip memory updates. Thus, the bandwidth used by the OpenGL implementation is much higher and therefore performance is lower, as shown previously in Figure 39-7.

Stream compaction is an important primitive in a variety of general-purpose applications, including collision detection and sparse matrix compression. In fact, stream compaction was the focus of most of the previous GPU work on scan (see Section 39.3.4). Stream compaction is the primary method for transforming a heterogeneous vector, with elements of many types, into homogeneous vectors, in which each element has the same type. This is particularly useful with vectors that have some elements that are interesting and many elements that are not interesting. Stream compaction produces a smaller vector with only interesting elements. With this smaller vector, computation is more efficient, because we compute on only interesting elements, and thus transfer costs, particularly between the GPU and CPU, are potentially greatly reduced.

Informally, stream compaction is a filtering operation: from an input vector, it selects a subset of this vector and packs that subset into a dense output vector. Figure 39-9 shows an example. More formally, stream compaction takes an input vector v_i and a predicate p, and outputs only those elements in v_i for which p(v_i) is true, preserving the ordering of the input elements. Horn (2005) describes this operation in detail.
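A scan-based compaction can be sketched in three steps: evaluate the predicate into a 0/1 flag vector, take an exclusive scan of the flags to get each kept element's output address, and scatter. The C++ below is a sequential host-side illustration. The predicate `is_positive` is a hypothetical example, and the loops stand in for what would be parallel kernels on a GPU.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical predicate: keep strictly positive values.
inline bool is_positive(int x) { return x > 0; }

// Stream compaction that preserves the input order of kept elements.
template <typename Pred>
std::vector<int> compact(const std::vector<int>& v, Pred p) {
    const std::size_t n = v.size();
    std::vector<int> flags(n);
    std::vector<std::size_t> addr(n);

    // Step 1: one flag per element (independent per element).
    for (std::size_t i = 0; i < n; ++i) flags[i] = p(v[i]) ? 1 : 0;

    // Step 2: exclusive scan of the flags gives the output addresses.
    std::size_t running = 0;
    for (std::size_t i = 0; i < n; ++i) {
        addr[i] = running;
        running += static_cast<std::size_t>(flags[i]);
    }

    // Step 3: scatter each kept element to its address.
    std::vector<int> out(running);
    for (std::size_t i = 0; i < n; ++i)
        if (flags[i]) out[addr[i]] = v[i];
    return out;
}
```

For example, `compact({3, -1, 4, -1, 5, -9, 2}, is_positive)` keeps the four positive values in their original order.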

The GPUs on which Horn implemented stream compaction in 2005 did not have scatter capability, so Horn instead substituted a sequence of gather steps to emulate scatter. To compact n elements required log n gather steps, and while these steps could be implemented in one fragment program, this "gather-search" operation was fairly expensive and required more memory operations. The addition of a native scatter in recent GPUs makes stream compaction considerably more efficient. Performance of our stream compaction test is shown in Figure 39-11.

A summed-area table (SAT) is a two-dimensional table generated from an input image in which each entry in the table stores the sum of all pixels between the entry location and the lower-left corner of the input image. Summed-area tables were introduced by Crow (1984), who showed how they can be used to perform arbitrary-width box filters on the input image. The power of the summed-area table comes from the fact that it can be used to perform filters of different widths at every pixel in the image in constant time per pixel. Hensley et al. (2005) demonstrated the use of fast GPU-generated summed-area tables for interactive rendering of glossy environment reflections and refractions. Their implementation on GPUs used a scan operation equivalent to the naive implementation in Section 39.2.1. A work-efficient implementation in CUDA allows us to achieve higher performance. In this section we describe how summed-area tables can be computed using scans in CUDA, and we demonstrate their use in rendering approximate depth of field.

Figure 39-12 shows a simple scene rendered with approximate depth of field, so that objects far from the focal length are blurry, while objects at the focal length are in focus. In the first pass, we render the teapots and generate a summed-area table in CUDA from the rendered image using the technique just described. In the second pass, we render a full-screen quad with a shader that samples the depth buffer from the first pass and uses the depth to compute a blur factor that modulates the width of the filter kernel. This determines the locations of the four samples taken from the summed-area table at each pixel.

Figure 39-12 Approximate Depth of Field Rendered by Using a Summed-Area Table to Apply a Variable-Size Blur to the Image Based on the Depth of Each Pixel

Rather than write a custom scan algorithm to process RGB images, we decided to use our existing code along with a few additional simple kernels. Computing the SAT of an RGB8 input image requires four steps. First we de-interleave the RGB8 image into three separate floating-point arrays (one for each color channel). Next we scan all rows of each array in parallel. Then the arrays must be transposed and all rows scanned again (to scan the columns). This is a total of six scans of width × height elements each. Finally, the three individual summed-area tables are interleaved into the RGB channels of a 32-bit floating-point RGBA image. Note that we don't need to transpose the image again, because we can simply transpose the coordinates we use to look up into it. Table 39-1 shows the time spent on each of these computations for two image sizes.

Table 39-1. Performance of Our Summed-Area Table Implementation on an NVIDIA GeForce 8800 GTX GPU for Two Different Image Sizes

| Resolution | (De)interleave (ms) | Transpose (ms) | 6 Scans (ms) | Total (ms) |
| --- | --- | --- | --- | --- |
| 512x512 | 0.44 | 0.23 | 0.97 | 1.64 |
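The scan, transpose, scan sequence and the four-sample lookup can be sketched for a single channel as follows. This host-side C++ makes a few assumptions that differ from the GPU version: it uses inclusive row sums, integer pixel values, an origin at index `[0][0]`, and it transposes back at the end for readability instead of transposing the lookup coordinates. The names `Grid`, `summed_area_table`, and `box_sum` are illustrative.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Grid = std::vector<std::vector<long>>;  // one color channel, row-major

// Inclusive scan of every row (rows are independent of each other).
void scan_rows_inclusive(Grid& g) {
    for (auto& row : g)
        for (std::size_t x = 1; x < row.size(); ++x) row[x] += row[x - 1];
}

Grid transpose(const Grid& g) {
    assert(!g.empty());  // assumption: non-empty, rectangular grid
    Grid t(g[0].size(), std::vector<long>(g.size()));
    for (std::size_t y = 0; y < g.size(); ++y)
        for (std::size_t x = 0; x < g[y].size(); ++x) t[x][y] = g[y][x];
    return t;
}

// Scan rows, transpose, scan rows again (which scans the columns).
Grid summed_area_table(Grid img) {
    scan_rows_inclusive(img);
    Grid t = transpose(img);
    scan_rows_inclusive(t);
    return transpose(t);
}

// Sum of the pixels in [x0, x1] x [y0, y1] using four table samples.
long box_sum(const Grid& sat, std::size_t x0, std::size_t y0,
             std::size_t x1, std::size_t y1) {
    const long a = sat[y1][x1];
    const long b = (x0 > 0) ? sat[y1][x0 - 1] : 0;
    const long c = (y0 > 0) ? sat[y0 - 1][x1] : 0;
    const long d = (x0 > 0 && y0 > 0) ? sat[y0 - 1][x0 - 1] : 0;
    return a - b - c + d;
}
```

Dividing `box_sum` by the box area gives a box-filtered value, and because the cost is four lookups regardless of box size, the filter width can vary per pixel.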

Radix sort is particularly well suited for small sort keys, such as small integers, that can be expressed with a small number of bits. At a high level, radix sort works as follows. We begin by considering one bit from each key, starting with the least-significant bit. Using this bit, we partition the keys so that all keys with a 0 in that bit are placed before all keys with a 1 in that bit, otherwise keeping the keys in their original order. See Figure 39-13. We then move to the next least-significant bit and repeat the process.

Figure 39-14 The Operation Requires a Single Scan and Runs in Linear Time with the Number of Input Elements

In a temporary buffer in shared memory, we set a 1 for all false sort keys (b = 0) and a 0 for all true sort keys.
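One way to realize the partition step with a single scan is sketched below in host-side C++. The destination formula for true keys (the number of false keys, plus the number of true keys that precede the element) is an assumption consistent with the description above, not a copy of the original kernel. The names `split_by_bit` and `radix_sort` are illustrative.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Stable partition of keys by one bit: keys with a 0 in that bit first.
std::vector<unsigned> split_by_bit(const std::vector<unsigned>& keys,
                                   unsigned bit) {
    assert(bit < 32);
    const std::size_t n = keys.size();
    std::vector<std::size_t> f(n);  // exclusive scan of the "false" flags
    std::size_t total_falses = 0;
    for (std::size_t i = 0; i < n; ++i) {
        f[i] = total_falses;
        if (((keys[i] >> bit) & 1u) == 0) ++total_falses;
    }
    std::vector<unsigned> out(n);
    for (std::size_t i = 0; i < n; ++i) {
        const bool b = ((keys[i] >> bit) & 1u) != 0;
        // False keys go to f[i]; true keys go after all false keys,
        // offset by the number of true keys before them (i - f[i]).
        const std::size_t dest = b ? (total_falses + i - f[i]) : f[i];
        out[dest] = keys[i];
    }
    return out;
}

// Least-significant-bit-first radix sort over the low num_bits bits.
std::vector<unsigned> radix_sort(std::vector<unsigned> keys,
                                 unsigned num_bits) {
    assert(num_bits <= 32);
    for (unsigned bit = 0; bit < num_bits; ++bit)
        keys = split_by_bit(keys, bit);
    return keys;
}
```

Because each split keeps equal-bit keys in their existing order, repeating it from the least-significant bit upward leaves the keys fully sorted.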

In our merge kernel, we run p threads in parallel. The most interesting part of our implementation is the computation and sorting of the p smallest elements from two sorted sequences in the input buffers. Figure 39-15 shows this process. For p elements, the output of the pairwise parallel comparison between the two sorted sequences is bitonic and can thus be efficiently sorted with log₂ p parallel operations.
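A possible reading of this step is sketched below in host-side C++. It assumes that the pairwise comparison pairs element i of the first sequence with element p - 1 - i of the second and keeps the smaller one, that both sequences are sorted in ascending order, and that p is a power of two. The function name `p_smallest_sorted` is hypothetical, and each pass of the inner loop stands for one parallel step across the p threads.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Returns the p smallest elements of two sorted length-p sequences, sorted.
std::vector<int> p_smallest_sorted(const std::vector<int>& a,
                                   const std::vector<int>& b) {
    const std::size_t p = a.size();
    assert(p != 0 && (p & (p - 1)) == 0 && b.size() == p);

    // Pairwise comparison: the result rises and then falls (bitonic).
    std::vector<int> c(p);
    for (std::size_t i = 0; i < p; ++i)
        c[i] = std::min(a[i], b[p - 1 - i]);

    // Bitonic merge: log2(p) passes of independent compare-exchanges.
    for (std::size_t stride = p / 2; stride > 0; stride /= 2)
        for (std::size_t i = 0; i < p; ++i)
            if ((i & stride) == 0 && c[i] > c[i + stride])
                std::swap(c[i], c[i + stride]);
    return c;
}
```

Within one pass every compare-exchange touches a distinct pair of elements, which is why the passes map naturally onto p parallel threads.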

Panel display: The required information must be on a panel which is presented or displayed under customary conditions of purchase. This eliminates placement of required information on a bottom panel of a cosmetic unless it is very small and customarily picked up by hand where inspected for possible purchase.

Net Contents Declaration on PDP: Minimum letter height determined by the area of the PDP. In the case of "boudoir-type" containers, including decorative cosmetic containers of the cartridge, pill box, compact or pencil type, and cosmetics of 1/4 oz. or less capacity, the type size is determined by the total dimensions of the container. If the container is mounted on a display card, the display panel determines the letter height [21 CFR 701.13(e) and (i)].

Location: If the cosmetic is sold at retail in an outer container, the net contents statement must appear (1) within the bottom 30% of the PDP of the outer container, generally parallel in line to the base on which the package rests, and (2) on an information panel of the inner container. The bottom location requirement is waived for PDPs of 5 square inches or less.

The PDP may be a tear-away tag or tape affixed to a decorative container or to a container of less than 1/4 oz., or it may be the panel of a display card to which the container is affixed.

When a cosmetic is required to bear net quantity of contents declarations on the inner and outer container, the declaration on the outer container must appear on the PDP; on the inner container, it may appear on an information panel other than the panel bearing the name of the product, i.e., the front panel.

Economy Size: Representations of this type are permitted if the firm offers at least one other packaged size of the same brand, only one is labeled "economy size," and the unit price of the package so labeled is substantially (at least 5%) reduced compared to that of the other package.

Giant Pint, Full Quart: Supplemental statements describing the net quantity of contents are permitted on panels other than the PDP. However, these statements must not be deceptive or exaggerate the amount present in the package.

The ingredient declaration may appear on any information panel of the package which is the outer container in form of a folding carton, box, wrapper etc. if the immediate container is so packaged, or which is the jar, bottle, box etc. if the immediate container is not packaged in an outer container. It may also appear on a tag, tape or card firmly affixed to a decorative or small size container.

As an alternative to the declaration of ingredients on an information panel, the declaration may appear in letters not less than 1/16 of an inch in height in:

Among the various conditions described in §§ 701.3(j) and (k) that must be met if off-package ingredient labeling is utilized as an alternative to the declaration of ingredients on an information panel, the following deserve particular attention:

We also like 43-inch TVs because they are some of the best budget TVs as well. They offer great value for the price, and can often be found for under $300.

The LG C2 OLED TV is this year’s set to beat. Not only is it the best OLED TV thanks to an impressive display panel, but a premium design, maximum versatility and great smart TV platform hit all the high marks, too.

What’s more, this C series lineup is LG’s largest in terms of size options — the configurations span from 42- to 83-inches, all of which sport full arrays of HDMI 2.1 ports. Most also feature LG’s evo OLED panel, which was first introduced on the LG G1 OLED TV and now looks to upgrade the C2’s performance.

The Toshiba C350 Fire TV is the 2021 addition to the small family of Amazon-powered smart TVs, offering good features and decent performance for its extremely affordable price. Our testing found it to be a decent example of the Fire TV template, combining good-enough 4K picture quality, impressively short lag times, and Amazon's great Fire TV smart features. This includes built-in Alexa voice control, a pretty big app store and (of course) an interface that puts Amazon's Prime Video service front and center. The 43-inch version is the smallest of the range of screen sizes and also the cheapest; expect to pay around $280 (and likely less during sales events), making it one of the smartest affordable TVs you can get.

Amazon has dabbled in the smart TV game for a few years now, letting TV manufacturers use the same software found on some of the best streaming devices, like the Fire TV Stick 4K Max and Fire TV Cube to power value priced smart TVs. But the Amazon Fire TV Omni series represents a pretty dramatic change to that formula, as the first Amazon-powered smart TV to carry the Amazon brand instead of another manufacturer, as well as the first of likely many Fire TV models with built-in far field microphones for hands-free voice control.

Ms.Josey
