f5 ltm lcd panel factory

I currently have an F5 Big-IP 1600 series device. It is running 11.6.0 HF6. I was in the middle of configuring it and setting up users when I experienced an issue. All of the credentials for the admin accounts I have set up including the changed root and admin accounts are now not working. I can connect to the F5 login screen and I can SSH to it using Putty, but no password works for me. I even tried using caps in certain areas in case I inadvertently used caps for the login names. How can I just reset the F5 back to factory settings?

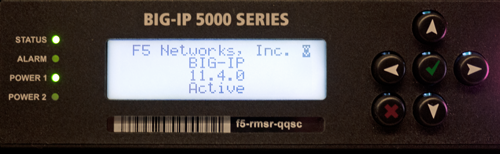

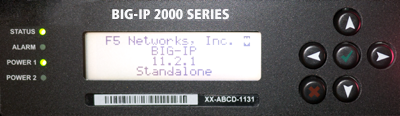

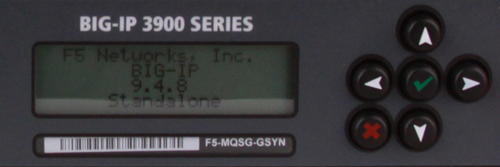

The liquid crystal display, or LCD panel, provides the ability to control the unit without attaching a serial or network cable. The following menus are available on the LCD panel.

Normally, the screens cycle on the LCD panel at a constant rate. However, press the Check button to toggle the LCD panel between Hold and Rotate modes. In Hold mode, a single screen is displayed. The Rotate mode changes the screen displayed on the LCD panel every four seconds.

Pressing the X button puts the LCD panel in Menu mode. The buttons Left Arrow, Right Arrow, Up Arrow, and Down Arrow are functional only when the LCD panel is in Menu mode.

After you put the LCD panel in Menu mode, use the Left Arrow, Right Arrow, Up Arrow, and Down Arrow buttons to select menu options. There are four menu options:

You can use the Information menu to access help pages about using the LCD panel functionality. You can also find more information on what different LED activity means, and on the failover state of the unit in a redundant system. Table 3.1 shows the options available on the Information menu.

Use the Check button to turn on (checked) or off (cleared) the heartbeat displayed on the LCD screen. This heartbeat displays if the SCCP is running on the system. This heartbeat does not affect the failover mechanism of the system.

This setting controls the brightness of the LCD panel when the backlight is off. Use the Left and Right arrow keys to set the brightness of the LCD panel.

Important: After halting the unit from the LCD panel, you must wait at least 60 seconds before shutting down or restarting the unit. Failure to do so may result in unexpected behavior due to improperly shutting down the system.

Note: To determine whether a specific BIG-IP platform has a Switch Card Control Processor (SCCP) subsystem or an Always-On Management (AOM) subsystem, refer to K9476: The F5 hardware/software compatibility matrix.

BIG-IP Local Traffic Manager provides intelligent traffic management for rapid application deployment, optimization, load balancing, and offloading. LTM increases operational efficiency and ensures peak network performance by providing a flexible, high-performance application delivery system. With its application-centric perspective, BIG-IP LTM optimizes your network infrastructure to deliver availability, security, and performance for critical business applications.

This document is the result of a joint effort by Cisco and F5 to detail best practice design and configurations for deploying BIG-IP Local Traffic Manager with Cisco Identity Services Engine. This is a validated solution that has undergone thorough design review and lab testing from both Cisco and F5. This document is intended to serve as a deployment aid for customers and support personnel alike to ensure a successful deployment when integrating these vendor solutions.

Note: Within this document, we describe the recommended method of deployment, and a few different options depending on the level of security and flexibility needed in your environment. These methods are illustrated by examples and include step-by-step instructions for integrating F5 BIG-IP LTM with Cisco ISE as prescribed by best practices to ensure a successful project deployment.

The figure depicts a basic end-to-end Cisco ISE deployment integrated with an F5 BIG-IP Load Balancer. The figure includes key components of the deployment even though they may not be directly involved with the load balancing process. These components include other ISE nodes such as the Policy Administration node (PAN), Monitoring and Troubleshooting node (MnT) and supporting servers and services like Microsoft Active Directory (AD), Lightweight Directory Access Protocol (LDAP), Domain Name System (DNS), Network Time Protocol (NTP), Simple Mail Transfer Protocol (SMTP), and external Sysloggers.

Note: For F5 BIG-IP LTM, the minimum recommended software release is 11.4.1 hotfix HF5 or 11.4.0 hotfix HF6. Additionally, 11.6.0 HF2 incorporates performance enhancements that can improve RADIUS load balancing performance.

There are many ways to insert the F5 BIG-IP LTM load balancer (LB) into the traffic flow for ISE PSN services. The actual traffic flow will depend on the service being load balanced and the configuration of the core components including the NAD, F5 BIG-IP LTM, ISE PSNs, and the connecting infrastructure. The method that has been most tested and validated in successful customer deployments is a fully inline deployment.

In a fully inline deployment, the F5 BIG-IP LTM is either physically or logically inline for all traffic between endpoints/access devices and the PSNs. This includes RADIUS, direct and URL-redirected web services, profiling data, and other communications to supporting services.

The figure below depicts the “physically inline” scenario. The F5 BIG-IP LTM uses different physical adapters for the internal and external interfaces to separate the PSNs from the rest of the network; all traffic to/from the PSNs must pass through the load balancer on different physical interfaces.

In both cases routes must be configured to point to the F5 external interface to reach the PSNs on the internal interface. Additionally, the PSNs must have their default gateway set to the F5’s internal interface.

The sample topology below will be used to illustrate the load-balancing configuration of ISE PSN services using an F5 BIG-IP appliance. The diagram includes the network-addressing scheme used in the detailed configuration steps. Notice in the following illustration that the F5 BIG-IP LTM is deployed fully inline between the ISE PSNs and the rest of the network.

F5 BIG-IP LTM may be configured in Active-Standby and Active-Active high availability modes to prevent single points of failure with the load balancing appliance. Configuration of LTM high availability is beyond the scope of this guide. For additional details on Active-Standby configuration, refer to F5 product documentation on Creating an Active-Standby Configuration Using the Setup Utility. For additional details on Active-Active configuration, refer to Creating an Active-Active Configuration Using the Setup Utility.

When configured for high availability, default gateways and next hop routes will point to the floating IP address on the F5 appliance, but health monitors will be sourced from the locally-assigned IP addresses.

From the ISE admin interface, navigate to Administration > System > Deployment. From the left panel, click the gear icon in the upper right corner as shown to display the Create Node Group option:

When load balancing services to one of many candidate servers, it is critical to ensure the health of each server before forwarding requests to that server. In the case of RADIUS, the F5 BIG-IP LTM includes a health monitor to periodically verify that the RADIUS service is active and correctly responding. It performs this check by simulating a RADIUS client and sending authentication requests to each PSN (the RADIUS Server) with a username and password. Based on the response or lack of response, the BIG-IP LTM can determine the current status of the RADIUS auth service. Therefore, ISE must be configured to accept these requests from the BIG-IP LTM. This section covers the steps to add the BIG-IP LTM as a Network Device (RADIUS client) to the ISE deployment.

Enter the forwarding IP address (Self IP) of the BIG-IP LTM’s Internal interface. This is the source IP address of the RADIUS request as seen by the ISE PSN.

Click the checkbox for the Authentication Settings section and enter a shared secret. This is the password used to secure RADIUS communications between the BIG-IP LTM and the ISE PSN.

F5 BIG-IP LTMs have the ability to treat a failed authentication (RADIUS Access-Reject) as a valid response to the RADIUS health monitor. The fact that ISE is able to provide a response indicates that the service is running. If the monitor deliberately sends incorrect user credentials, then an Access-Reject is a valid response and the server is treated as healthy.
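The health decision described above can be sketched in a few lines of Python. This is only an illustration of the logic, not F5's implementation: the function name and the use of `None` to represent "no response before the timeout" are assumptions made for this example. The packet codes (2 for Access-Accept, 3 for Access-Reject) are the values defined in RFC 2865.

```python
from typing import Optional

ACCESS_ACCEPT = 2  # RADIUS packet code (RFC 2865)
ACCESS_REJECT = 3  # RADIUS packet code (RFC 2865)


def psn_is_healthy(response_code: Optional[int]) -> bool:
    """Return True when the probe received an Access-Accept or Access-Reject.

    response_code is None when no reply arrived before the monitor timeout
    (an assumption of this sketch).
    """
    return response_code in (ACCESS_ACCEPT, ACCESS_REJECT)
```

With this logic, a probe that uses a deliberately invalid account still marks the PSN as up, because the Access-Reject proves that the RADIUS service processed the request.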

If it is desired to have F5 send valid credentials and receive a successful authentication response (RADIUS Access-Accept), then it is necessary to configure the appropriate Identity Store—either internal or external to ISE—with the username and password sent by the BIG-IP LTM.

Note: See the RADIUS Health Monitoring section of this guide for a detailed discussion on RADIUS Monitor considerations and F5 configuration. This procedure has been included here to streamline the ISE configuration steps to support RADIUS monitoring.

Note: Be sure to include the identity store used for validating F5 RADIUS credentials in the Authentication Policy Rule used to authenticate the F5 monitor. In this example, the identity store InternUsers must be included as the ID store for matching load balancer requests.

Best Practice: To ensure that the F5 Monitor account is not used for other purposes that may grant unauthorized access, lock down the ability to authenticate using this account and restrict access granted to this account. As a health probe, no real network access needs to be granted.

Example: Create an ISE Authorization Policy Rule that matches specifically on the F5 IP address or parent ISE Network Device Group and the specific F5 test username, and returns a policy that denies network access such as ACL=deny ip any any.

DNS plays an important role in load balancing ISE web portal services such as the Sponsor Portal and My Devices Portal. Each of these portals can run on every PSN. In order to provide high availability and scaling for these portals, we can deploy F5 to load balance requests to multiple PSNs using a single fully qualified domain name (FQDN). End users can be given a simple and intuitive server name such as sponsor.company.com or guest.company.com that resolves to an F5 Virtual Server IP address. The first step in making this happen is to create entries in the organization’s DNS service for these load-balanced portals.

The employee is given the URL of https://sponsor.company.com for creating new guest accounts. When the URL is entered into the employee’s browser, DNS will resolve the FQDN to the Virtual Server IP on the BIG-IP LTM. The web request is directed to ISE-PSN-3 and the PSN presents its HTTPS certificate to the employee’s browser. The server certificate includes the PSN’s identity, but this name is different than the one the employee attempted to access, so a certificate warning is presented to warn the user of the discrepancy.

Be sure that you include DNS entries for all FQDNs referenced in the server certificate. In the case of load-balanced FQDNs, these entries should resolve to the Virtual Server IP address on the F5 BIG-IP LTM.
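As a rough aid for the check described above, the following Python sketch compares the FQDNs listed in a server certificate against a set of DNS records. Plain dictionaries stand in for the DNS zone and for the list of load-balanced names, and every host name and address in the usage example is hypothetical. It performs no real DNS lookups.

```python
from typing import Dict, List


def find_dns_problems(
    cert_fqdns: List[str],
    dns_records: Dict[str, str],
    vip_by_fqdn: Dict[str, str],
) -> List[str]:
    """List certificate FQDNs that lack a DNS entry or miss their expected VIP.

    dns_records maps FQDN -> resolved IP (a stand-in for the DNS zone).
    vip_by_fqdn maps each load-balanced FQDN -> the Virtual Server IP it
    should resolve to. FQDNs absent from vip_by_fqdn only need to exist.
    """
    problems: List[str] = []
    for fqdn in cert_fqdns:
        resolved = dns_records.get(fqdn)
        expected_vip = vip_by_fqdn.get(fqdn)
        if resolved is None:
            problems.append(f"{fqdn}: no DNS entry")
        elif expected_vip is not None and resolved != expected_vip:
            problems.append(
                f"{fqdn}: resolves to {resolved}, expected VIP {expected_vip}"
            )
    return problems
```

For example, if the certificate lists `sponsor.company.com` and `guest.company.com` but only the first has a record, the function reports the second as missing.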

From the ISE admin interface, navigate to Administration > System > Certificates > Local Certificates and click Add from the right panel to Generate CSR for ISE Server Certificate:

In addition to specific PSN traffic that must be sent directly to the Virtual Server IP address(es) for load balancing, there are a number of flows that do not require load balancing and simply need to be forwarded by the inline F5 appliance. This traffic includes:

As you can see, there are many flows that are not subject to load balancing. To accommodate this traffic in a fully inline deployment, it is necessary to create a Virtual Server on the F5 appliance that will serve as a catch-all for these traffic flows and perform IP forwarding.

A common load balancing option is to have the F5 appliance perform source network address translation, also known as source NAT, or SNAT, on traffic sent to the Virtual IP address. This method can simplify routing since the real servers see the load balancer as the source of all traffic and consequently reply directly to the load balancer’s IP address whether the appliance is fully or partially inline to the traffic flow. Unfortunately, this option is not suitable for use with ISE RADIUS AAA services when Change of Authorization (CoA) is required.

For this reason, SNAT of RADIUS traffic from the NAD is not compatible with RADIUS CoA in an ISE deployment. With SNAT of RADIUS AAA traffic, PSNs see the load balancer IP address as the source of RADIUS requests and treat it as the NAD that manages the client session. As a result, RADIUS CoA requests are sent directly to the BIG-IP LTM and dropped.

There are numerous attributes that F5 can use for persistence including, but not limited to, RADIUS attributes (Calling-Station-ID, Framed-IP-Address, NAS-IP-Address, IETF or Cisco Session ID) or Source IP Address. The source IP address is typically the same as the IP address of the RADIUS client (the NAD) or RADIUS NAS-IP-Address.

This iRule is also based on Calling-Station-Id as the primary persistence attribute but also includes the Framed-IP-Address as a second identifier that allows RADIUS packets that include the Framed-IP-Address but not the Calling-Station-Id to maintain persistence to the same server. It can also serve to stick packets sourced from this IP address to the same PSN. This could be used in an SSL Offload case where clients are redirected to an F5 Virtual Server IP rather than to a specific PSN. By sending the traffic to the F5 for SSL termination, additional security and traffic policies can be applied to the packet before a new connection is established to the real PSN.
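The two-identifier persistence idea can be illustrated outside of an iRule. The Python sketch below is not the iRule from this guide. It only shows the concept: parse the RADIUS attributes, prefer Calling-Station-Id (attribute type 31), fall back to Framed-IP-Address (type 8), and record both keys against the chosen server so that later packets carrying only one of them land on the same PSN. The function names, key prefixes, and the dictionary used as a persistence table are assumptions for illustration.

```python
import socket
import struct
from typing import Callable, Dict, List

CALLING_STATION_ID = 31  # RFC 2865 attribute type
FRAMED_IP_ADDRESS = 8    # RFC 2865 attribute type
HEADER_LEN = 20          # code(1) + identifier(1) + length(2) + authenticator(16)


def parse_attributes(packet: bytes) -> Dict[int, bytes]:
    """Return the first value of each RADIUS attribute type in the packet."""
    attrs: Dict[int, bytes] = {}
    if len(packet) < HEADER_LEN:
        return attrs
    end = min(struct.unpack("!H", packet[2:4])[0], len(packet))
    offset = HEADER_LEN
    while offset + 2 <= end:
        attr_type, attr_len = packet[offset], packet[offset + 1]
        if attr_len < 2 or offset + attr_len > end:
            break  # malformed attribute: stop parsing
        attrs.setdefault(attr_type, packet[offset + 2:offset + attr_len])
        offset += attr_len
    return attrs


def persistence_keys(packet: bytes) -> List[str]:
    """Keys in priority order: Calling-Station-Id first, then Framed-IP-Address."""
    attrs = parse_attributes(packet)
    keys: List[str] = []
    if CALLING_STATION_ID in attrs:
        value = attrs[CALLING_STATION_ID].decode("ascii", "replace").lower()
        keys.append("csid:" + value)
    framed = attrs.get(FRAMED_IP_ADDRESS)
    if framed is not None and len(framed) == 4:
        keys.append("ip:" + socket.inet_ntoa(framed))
    return keys


def select_server(
    packet: bytes, table: Dict[str, str], pick_server: Callable[[], str]
) -> str:
    """Reuse the server of the first known key, else pick one; record all keys."""
    keys = persistence_keys(packet)
    server = next((table[k] for k in keys if k in table), None)
    if server is None:
        server = pick_server()
    for key in keys:
        table[key] = server
    return server
```

Because both keys are stored on the first packet, a later packet that carries only the Framed-IP-Address still resolves to the PSN that handled the original session.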

Fortunately, “When a BIG-IP virtual server receives an IP fragment, the Traffic Management Microkernel (TMM) queues the packet and waits to collect and reassemble the remaining fragments into the original message. TMM does not generate a flow for the fragment until TMM reassembles and processes the entire message. If part of the message is lost, the BIG-IP system discards the fragment.” For more information, see F5 support article SOL9012: The BIG-IP LTM IP fragment processing.

F5 defines a Persistence Timeout, or Time to Live (TTL), that controls the duration that a given persistence entry should be cached. Once expired, a new packet without a matching entry can be load balanced to a different server.
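The effect of the timeout can be shown with a small Python sketch of a persistence table whose entries expire after a fixed TTL. The class and method names are hypothetical, and the sketch deliberately does not model whether a matching packet refreshes the timer. It only demonstrates that a lookup after expiry finds no entry, at which point the packet may be load balanced to a different server.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class PersistenceTable:
    """Minimal key -> server cache with a fixed time to live per entry."""

    def __init__(
        self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic
    ) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the behavior can be tested
        self._entries: Dict[str, Tuple[str, float]] = {}

    def store(self, key: str, server: str) -> None:
        """Cache the server for this key until now + TTL."""
        self._entries[key] = (server, self._clock() + self._ttl)

    def lookup(self, key: str) -> Optional[str]:
        """Return the cached server, or None if absent or expired."""
        entry = self._entries.get(key)
        if entry is None:
            return None
        server, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # expired: forget the entry
            return None
        return server
```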

Note: It is possible to configure separate Virtual Servers with different persistence timeouts. It is also possible to leverage F5 iRules to change the persistence TTL based on a RADIUS attribute like Network Device Group Type or Location.

F5 Monitors are used to perform periodic health checks against node members in a load-balanced pool. These monitors, or probes, validate that a real server is healthy before sending it requests. The term healthy is a relative term which can range in meaning from “Server is IP reachable using ICMP ping” to “Server is actively and successfully responding to simulated application requests; additionally, out-of-band checks validate resources are sufficient to support additional load”.

F5 includes a RADIUS Authentication monitor that will be used for monitoring the health of the ISE PSN servers. It will periodically send a simulated RADIUS Authentication request to each PSN in the load-balanced pool and verify that a valid response is received. A valid response can be either an Access-Accept or an Access-Reject. As covered under the ISE prerequisite configuration section, it is critical that the following be configured on the ISE deployment for the F5 health monitor to succeed:

So how does all of this relate to F5 RADIUS Health Monitors? The goal is to set the F5 monitor timers such that they detect PSN failure and try another PSN before the NAD RADIUS request times out. Otherwise, the F5 BIG-IP LTM will continue to send requests to the same failed PSN until the configured monitor interval is exceeded. For example, if the total NAD RADIUS timeout is 40 seconds, the interval may need to be set to 31 seconds. At the same time, setting an interval of 11 seconds will likely be excessive and simply cause unnecessary RADIUS authentication load on each of the PSNs. If RADIUS timers differ significantly between groups of NADs or NAD types, it is recommended to use the lower settings to accommodate all NADs using the same RADIUS Virtual Servers.
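The timer relationship can be worked through with a little arithmetic. The Python sketch below rests on two assumptions that are not stated in this guide: that the NAD's total wait on one RADIUS server equals its per-attempt timeout multiplied by the number of attempts, and that the monitor timeout follows the "three times the interval plus one second" guideline commonly given in F5 monitor documentation. Under that reading, the 31-second figure above corresponds to a 10-second interval. The function names are hypothetical.

```python
def nad_total_timeout(per_attempt_s: int, attempts: int) -> int:
    """Seconds a NAD waits on one RADIUS server before giving up (assumed model)."""
    return per_attempt_s * attempts


def monitor_timeout(interval_s: int) -> int:
    """Monitor timeout under the 3 * interval + 1 guideline (an assumption here)."""
    return 3 * interval_s + 1


def detects_failure_in_time(interval_s: int, nad_total_s: int) -> bool:
    """True if the monitor marks a PSN down before the NAD request times out."""
    return monitor_timeout(interval_s) < nad_total_s
```

For instance, a NAD configured with a 10-second timeout and 4 attempts waits 40 seconds in total. A 10-second monitor interval gives a 31-second monitor timeout, which fits inside that window, whereas a 13-second interval gives 40 seconds and does not.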

Note: Be sure to take into consideration whether the test RADIUS account used by the F5 monitor is an internal ISE user account, or one that must be authenticated to an external identity store as this will impact total RADIUS response time. For more details on user account selection, refer to the section User Account Selection for RADIUS Probes.

Another decision is based on the security of the user account. It is important that this user account only be used to validate the PSNs are successfully processing RADIUS requests. No access should be available to this account in the event the credentials are leaked and used for access to secured resources. This may be a reason to choose an invalid user account so that an Access-Reject is returned. As noted, the BIG-IP LTM will deem this response as valid in determining health status since it must have been processed by the PSN’s RADIUS server.

An alternative to the above method is to use a valid account, but to be sure that no access privileges are granted to the authenticated user. ISE controls these permissions using the Authorization Policy. The PSN can authenticate the probe user (Access-Accept), but also return a RADIUS Authorization that explicitly denies access. For example, an Access Control List (ACL) that returns ‘deny ip any any’ could be assigned, or an unused/quarantine VLAN. Further, the Authentication and Authorization Policy rule should specifically limit the probe user account to the F5 IP address or other conditions (authentication protocol, service type, network device group, etc.) that limit where the account is expected.

After properly configuring the RADIUS Monitor checks you are overjoyed by the friendly green icons under the F5 Pool List or Pool Member List that signify your success and a healthy RADIUS server farm…

For F5 monitor checks, a simple Collection Filter can be configured based on Device-IP-Address or NAS-IP-Address that is typically the F5’s internal interface IP, or else use the User Name of the probe account as shown in the example.

This section provides the detailed F5 configuration for RADIUS load balancing of ISE PSN servers including the recommended settings and considerations for each component.

The name should match the value configured under Add F5 BIG-IP LTM as a NAD for RADIUS Health Monitoring in the “ISE Configuration Prerequisites” section.

The password should match the value configured under Add F5 BIG-IP LTM as a NAD for RADIUS Health Monitoring in the “ISE Configuration Prerequisites” section.

The RADIUS secret should match the value configured under Add F5 BIG-IP LTM as a NAD for RADIUS Health Monitoring in the “ISE Configuration Prerequisites” section.

Optional. By default, BIG-IP LTM will use the interface IP address (Self IP). If you wish to match the monitor’s RADIUS requests using a different NAS-IP-Address, enter a new value here.

UDP idle timeout should be set based on the RADIUS environment and load balancer resources. High RADIUS activity will consume more F5 appliance resources to maintain connection states. This setting can be increased in networks with lower authentication activity or sufficient appliance capacity. Conversely, the value may need to be tuned lower with higher authentication activity or lower appliance resource capacity.

No requirement to Match Across Servers when Virtual Servers share the same IP address. For more information, see F5 support article SOL5837: Match Across options for session persistence.

Note: The same monitor will be used to verify both RADIUS Auth and Accounting services on the ISE PSN appliances to minimize resource consumption on both F5 and ISE appliances.

The Reselect option ensures established connections are moved to an alternate pool member when a target pool member becomes unavailable. For additional details on failed node handling, refer to the following F5 Support articles:

Best Practice: After making changes to the RADIUS Load Balancing configuration, it may be necessary to clear existing connections to ensure any traffic currently being processed by less-specific Virtual Servers such as Forwarding (IP) hits the new, more-specific Virtual Servers created for RADIUS traffic. Use the tmsh delete sys connection command from the F5 BIG-IP LTM console to clear all existing connections, or use it with parameters to delete specific connections based on protocol (udp/tcp), client/server IP address, or client/server port number.

Best Practice: The use of SNAT for PSN-initiated CoA traffic is a best practice to simplify NAD configuration and facilitate changes in PSN addresses from the F5 appliance rather than changing the configuration on each NAD.

Using a basic NAD CoA configuration without SNAT, CoA packets can be sent directly through the F5 BIG-IP LTM using the IP Forwarding servers configured earlier in this guide. This section details the F5 configuration required to simplify the NAD CoA configuration by Source NATting RADIUS CoA traffic initiated by the ISE PSNs.

Note: In deployments that involve multiple data centers for disaster recovery, it is common that profiling data be sent to both data centers from remote sites. In this scenario, it is recommended to deploy separate load-balanced clusters in each data center. Therefore, it is possible that network devices may require a second target configured for profiling data. Since the profile data sources are usually configured with an IP address, DNS-based solutions like F5’s BIG-IP Global Traffic Manager (GTM) or Cisco’s Global Site Selector (GSS) would not apply. To support a single target, solutions like Anycast with intelligent routing, Cisco IOS Embedded Event Manager (EEM), or service templates may be required to dynamically update targets or route traffic to a single destination.

The DNS probe sends reverse lookup requests to external DNS servers, so while this traffic can technically be load balanced by the F5 BIG-IP LTM, it does not directly impact the PSNs.

The profiling data for DHCP, SNMP Traps, and NetFlow are all UDP-based and also unidirectional. This means that no response traffic is expected from the PSNs when these probes consume the profile data. Consequently, F5 monitors that rely on application-specific responses are not applicable. The use of simple ICMP is suitable to verify that the node is IP reachable. Since most ISE deployments combine Profiling and RADIUS user services, the reuse of the RADIUS monitor is a reasonable option to verify that ISE application services are running. In general, if RADIUS AAA services are not operational, you will likely not want to send profiling data to the node when both services are configured on the PSN.

The sample diagram shows the typical flow for sending DHCP traffic to both a real DHCP Server and an F5 BIG-IP LTM appliance. Unlike the real DHCP Server, the PSNs will never reply to these requests.

This is another case where advanced F5 iRule logic can be very powerful. Through intelligent parsing, an iRule can determine the location of a key DHCP option field and use that as the basis for persistence. Two examples are DHCP option 61 (dhcp-client-identifier) and DHCP option 50 (dhcp-requested-address). The Client Identifier is typically the MAC address of the endpoint whereas Requested Address is typically the client IP address. (Reference: RFC 2132, “DHCP Options and BOOTP Vendor Extensions”)
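To make the parsing step concrete, here is a minimal Python sketch (not an iRule, and not the logic shipped with this guide) that walks the DHCP options field and derives a persistence key. It assumes the standard layout of a 236-byte BOOTP header followed by the 4-byte magic cookie, and it assumes that option 61 carries hardware type 1 (Ethernet) followed by a 6-byte MAC address, which is typical but not guaranteed. The function names and key prefixes are hypothetical.

```python
from typing import Dict, Optional

DHCP_MAGIC_COOKIE = b"\x63\x82\x53\x63"
OPTIONS_OFFSET = 240        # 236-byte BOOTP header + 4-byte magic cookie
OPT_PAD, OPT_END = 0, 255   # single-byte options without a length field
OPT_REQUESTED_ADDRESS = 50
OPT_CLIENT_IDENTIFIER = 61


def parse_dhcp_options(packet: bytes) -> Dict[int, bytes]:
    """Return the first value of each DHCP option found in the packet."""
    if len(packet) < OPTIONS_OFFSET or packet[236:240] != DHCP_MAGIC_COOKIE:
        return {}
    options: Dict[int, bytes] = {}
    i = OPTIONS_OFFSET
    while i < len(packet):
        code = packet[i]
        if code == OPT_END:
            break
        if code == OPT_PAD:
            i += 1
            continue
        if i + 1 >= len(packet):
            break  # truncated: no length byte
        length = packet[i + 1]
        value = packet[i + 2:i + 2 + length]
        if len(value) < length:
            break  # truncated option value
        options.setdefault(code, value)
        i += 2 + length
    return options


def dhcp_persistence_key(packet: bytes) -> Optional[str]:
    """Prefer the client identifier (MAC); fall back to the requested address."""
    options = parse_dhcp_options(packet)
    client_id = options.get(OPT_CLIENT_IDENTIFIER)
    if client_id is not None and len(client_id) == 7 and client_id[0] == 1:
        return "mac:" + "-".join(f"{b:02x}" for b in client_id[1:])
    requested = options.get(OPT_REQUESTED_ADDRESS)
    if requested is not None and len(requested) == 4:
        return "ip:" + ".".join(str(b) for b in requested)
    return None
```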

Best Practice: Be sure to closely monitor any NetFlow records sent to the load balancer for bandwidth and performance impact on both the F5 and ISE appliances. It is recommended that a phased approach be used to better gauge the impact of each new router/switch configured to export NetFlow to the load balancer.

Although configurable, common destination ports for NetFlow export include UDP/9995 and UDP/2055. The Virtual Server configured for NetFlow load balancing may share the same Virtual IP address used by RADIUS load balancing and other profiling services, but the pool members should be configured to use a dedicated PSN interface, each with a unique node IP address. This ensures traffic separation for the NetFlow profiling data on the PSN. If NetFlow bandwidth is a concern on the F5 appliance, then a unique Virtual Server IP address on a separate F5 interface can be configured on the load balancer appliance.

This section provides the detailed F5 configuration for load balancing ISE Profiling data to PSNs including the recommended settings and considerations for each component.

No requirement to Match Across Servers when Virtual Servers share the same IP address. For more information, see F5 support article SOL5837: Match Across options for session persistence.

Note: The same monitor will be used to verify both RADIUS AAA and Profiling services on the ISE PSN appliances to minimize resource consumption on both F5 and ISE appliances.

The Reselect option ensures established connections are moved to an alternate pool member when a target pool member becomes unavailable. For additional details on failed node handling, refer to the following F5 Support articles:

ISE supports a number of web-based services including Admin access, guest services, web authentication, and endpoint compliance assessment, quarantine, provisioning, and remediation. For the purpose of understanding when and how to integrate F5 load balancing, it will help to characterize ISE web services into two general categories:

Note that the redirected web request always follows the PSN that is servicing the RADIUS session. Also note that the web request is sent directly to a specific PSN IP address and not to a Virtual Server IP address on the F5 BIG-IP LTM. If RADIUS is load balanced, then the resulting redirected web service is also load balanced.

In summary, sessionized URL-Redirected web services are implicitly load balanced based on RADIUS connections and do not require any explicit F5 load balancing configuration.

If the ISE PSNs are configured to use a dedicated interface for web services, then all client HTTPS traffic to the PSNs can bypass the F5 BIG-IP LTM. ISE 1.3 and above simplifies the separation of traffic flows by supporting multiple default gateways per interface. This allows traffic received on the web service interface to be sent out the same interface while allowing isolation of RADIUS and management traffic on a different interface. Under ISE 1.2 and earlier releases, only a single default gateway is supported. Therefore, the most effective method to bypass the F5 appliance would be to Source NAT the client traffic on a Layer 3 switch before it reaches the web portal network. The PSNs can then respond to a locally NATted address or be configured with a static route to the SNAT address/network via the web portal interface.

The diagram depicts the deployment of separate PSN interfaces for RADIUS and Web Services. TCP-based traffic from user networks to the web portal network (10.1.91.0/24) and service ports is Source NATted by the Layer 3 switch. This design simplifies the PSN routing config (allows a simple default gateway), maintains path isolation, and allows client web traffic to completely bypass the BIG-IP LTM IP Forwarding server.

See the Load Balancing Sponsor, My Devices, and LWA Portals section for more details on shared versus dedicated PSN interfaces. The section includes an example of using SNAT on the F5 appliance to support HTTPS load balancing for specific ISE web portals while still supporting URL-Redirected HTTPS flows on dedicated PSN interfaces.

With the exception of Admin access, all of these portal services can be load balanced using F5 BIG-IP LTM. Admin client requests should always be sent to the Primary Administration Node (PAN) so load balancing with the Secondary PAN does not apply.

F5 load balancing of Sponsor, My Devices, and LWA can significantly improve user experience, scalability, and redundancy since URLs are no longer limited to a single static value that points to a single PSN. Instead, the URL that is provided to guest sponsors and registrants can resolve to an F5 Virtual Server IP address that can be processed by any one of many PSNs in the load-balanced cluster. Similarly, network access devices that require LWA support (for example, older platforms/versions of the Cisco Wireless LAN Controller) can be configured with a single external web portal URL that is serviced by multiple PSNs behind an F5 VIP.

The diagram depicts a sample traffic flow for load balancing the Sponsor Portal. The same flow applies to My Devices Portal and LWA. The client web request is sent to the F5 VIP and load balanced to an available PSN member in the Virtual Server Pool.

The default HTTPS service port for the ISE Sponsor, My Devices, and LWA Portals is TCP/8443. The port is configurable in ISE so make sure to validate the service port when configuring the Virtual Server in F5 BIG-IP LTM.

Under ISE 1.2 and earlier releases, the PSNs forward traffic based on their static routing tables. The nodes do not automatically send return traffic on the receiving interface. Consequently, if only a single default gateway is configured on each PSN, web return traffic from the PSNs will take the same path as RADIUS and other management traffic. Although it is possible to configure static routes for each destination network to achieve symmetric traffic flows, this process can prove to be very management intensive, error prone, and sometimes impossible based on the addressing scheme of the network. Therefore, if path isolation is required between PSN interfaces and services, then F5 should perform source NAT on web traffic so that PSNs reply on the same interface.

The diagram depicts an example configuration using a dedicated PSN interface for web services. All web portal traffic will automatically be routed to the correct PSN but return traffic will be sent out the management interface, by default. SNAT on the F5 BIG-IP LTM can ensure responses are returned to the F5 interface connected to the portal network.

Note: When deploying a separate, dedicated interface for additional ISE services like web portals or profiling, be sure to configure the upstream Layer 3 switch/router with a static route to the isolated network that points to the F5’s interface IP address as the next hop.

The specific interfaces and ports for ISE web portals and services are configured under the ISE Guest configuration. Verify the ISE settings before configuring the F5 BIG-IP LTM to use specific ISE IP addresses and ports.

Note: To support load balancing to the Sponsor, My Devices, and LWA portals, be sure DNS is properly configured so that these FQDNs resolve to the correct F5 Virtual Server IP address.

To facilitate the Portal FQDN functionality without requiring F5 to perform the interception and translation, it is recommended to configure three Virtual Servers for the Sponsor and/or My Devices portals: one each for the initial HTTP and HTTPS requests, and another for the actual target portal and service port. Using this configuration, the initial portal request will hit the Virtual Server on either TCP/80 or TCP/443 and be redirected by ISE to TCP/8443, for example. Although separate F5 Pools could be configured on each port, we will simplify the backend configuration by using a single pool that services requests on any port.

where LWA_VIP_FQDN is the DNS FQDN assigned to the F5 Virtual Server IP used for LWA and portal_UUID is the universally unique identifier (UUID) of the portal (case sensitive), which can be obtained in the test portal URL of the portal in ISE.

F5 Monitors are used to perform periodic health checks against node members in a load-balanced pool. These monitors, or probes, validate that a real server is healthy before sending it requests. The term healthy is a relative term which can range in meaning from “Server is IP reachable using ICMP ping” to “Server is actively and successfully responding to simulated application requests; additionally, out-of-band checks validate resources are sufficient to support additional load”.

F5 includes an HTTPS monitor that will be used for monitoring the web portal health of the ISE PSN servers. It periodically sends a simulated web request to each PSN in the load-balanced pool and verifies that a valid response is received. In this guide, we will treat a simple “HTTP/1.1 200” as a valid response for the destination portal.

send "GET /sponsorportal/PortalSetup.action?portal=b7e7d773-7bb3-442b-a50b-42837c12248a HTTP/1.1\r\nUser-Agent: BigIP-LTM-Probe/1.0\r\nHost:\r\nConnection: Close\r\n\r\n"

GET /sponsorportal/PortalSetup.action?portal=b7e7d773-7bb3-442b-a50b-42837c12248a HTTP/1.1\r\nUser-Agent: BigIP-LTM-Probe/1.0\r\nHost:\r\nConnection: Close\r\n\r\n
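
As a rough illustration of what this monitor does, the Python sketch below builds the same send string and applies the “HTTP/1.1 200” check to a raw response. The helper names `build_send_string` and `is_healthy` are hypothetical and are not part of any F5 API; on the appliance, the BIG-IP HTTPS monitor performs the actual probe.

```python
def build_send_string(portal_uuid: str,
                      user_agent: str = "BigIP-LTM-Probe/1.0") -> str:
    """Return the raw HTTP request used as the monitor send string.

    Hypothetical helper: it only mirrors the send string shown in the guide.
    """
    return (
        f"GET /sponsorportal/PortalSetup.action?portal={portal_uuid} HTTP/1.1\r\n"
        f"User-Agent: {user_agent}\r\n"
        "Host:\r\n"
        "Connection: Close\r\n"
        "\r\n"
    )


def is_healthy(raw_response: str, receive_string: str = "HTTP/1.1 200") -> bool:
    """Treat the PSN as healthy when the response contains the receive string."""
    return receive_string in raw_response
```

A PSN that answers with any other status line, or not at all, would fail this check and be marked down by the monitor.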

There is no requirement to enable Match Across Servers if the Virtual Servers for web portals share the same IP address. For more information, see F5 support article SOL5837: Match Across options for session persistence.

The focus of the guide has been on the load balancing of ISE PSN services located in the same location and Local Area Network (LAN). In a given campus network, there is typically a single, load-balanced cluster of PSNs all connected through high-speed network connections, most commonly all in the same subnet or connected to the same redundant server switches. F5 BIG-IP LTM is the prime solution for this use case.

RADIUS AAA: Configure NADs with a single RADIUS Server that points to an IP Anycast address. Each F5 BIG-IP LTM appliance can be configured with this same Anycast address as the VIP for RADIUS AAA. Individual PSNs with a dedicated RADIUS interface may also share this same IP address.

DHCP/SNMP Trap Profiling: For DHCP, configure L3 gateways with secondary/tertiary IP Helper statements. For SNMP Traps, configure access devices with secondary/tertiary SNMP Trap hosts. Each entry may point to an F5 Virtual Server IP or individual PSNs.

DHCP/SNMP Trap Profiling: For DHCP, configure L3 gateways with a single IP Helper entry that points to an IP Anycast address. For SNMP Traps, configure access devices with a single SNMP Trap host that points to an IP Anycast address. Each F5 BIG-IP LTM appliance can be configured with this same IP Anycast address as the VIP for DHCP/SNMP Trap Profiling. Individual PSNs with a dedicated profiling interface may also share this same IP address.

HTTPS Portals: Deploy intelligent Domain Name Service to resolve DNS lookups to different IP addresses based on availability, performance, or proximity. The returned address may point to an F5 Virtual Server IP or individual PSNs. Solutions in this space range from simplistic DNS Round-Robin to more sophisticated offerings such as F5’s BIG-IP Global Traffic Manager (GTM) or Cisco’s Global Site Selector (GSS). When deployed with existing LTMs, F5 BIG-IP GTM offers a number of additional management and feature benefits.

HTTPS Portals: Configure DNS with a single portal FQDN for each service that resolves to a single IP Anycast address. Each F5 BIG-IP LTM appliance can be configured with this same Anycast address as the VIP for the specific HTTPS portal. Individual PSNs with a dedicated web portal interface may also share this same IP address. This option does not require advanced DNS capabilities but the F5 BIG-IP GTM can still be leveraged for other DNS feature enhancements.

This section provides high-level recommendations to validate and troubleshoot the integration of Cisco ISE PSNs using F5 BIG-IP LTM for load balancing. It is not intended to be an exhaustive guide on this topic but rather to serve as an aid to jump start troubleshooting efforts and ensure basic configuration and deployment are correct before contacting Cisco or F5 for technical support.

Below is an example taken from the ISE Admin node’s main dashboard that shows Authentication Latency for different PSNs. It reveals trends in RADIUS latency. Additionally, the Alarms panel shows that there have been specific events related to high latency.

Are F5 probes failing? If the health monitor probes fail, then the PSN(s) and possibly the entire virtual server may be removed from service. Verify the probe configuration is correct.

Within the guide we discuss the use of Collection Filters to squelch authentication success events from F5 RADIUS Health Monitors when the system is operational, but you may need to disable suppression for specific endpoints or NADs to troubleshoot individual clients or access device connections.

The following tips can be used to help validate that the general F5 deployment is healthy and that PSNs are operational from the perspective of the F5 BIG-IP LTM appliance.

To view the status of Virtual Servers from the F5 admin interface, navigate to Local Traffic > Virtual Servers > Virtual Server List. Status should be Available (green circle) for pools that use an active application monitor, or else Unknown (blue square) if no service checking is enabled. If Status is Offline (red diamond), a health monitor has failed. If connection limits are set for a node, the status could also be shown as Unavailable (yellow triangle). Status at the Virtual Server level indicates health of the pool as a whole rather than an individual pool member.

For service issues related to specific nodes, it is recommended to review F5 support article SOL2531: Troubleshooting health monitors to ensure monitors provide healthy status and keep the server active in the pool.

For quick viewing of persistence records from the F5 web-based admin interface, navigate to Statistics > Module Statistics > Local Traffic and set Statistics Type to Persistence Records. Optionally use the Search field to filter the output.

Note in the above “Bad Example” that there are two entries for the same endpoint persisted to two different servers. This may be expected when using different persistence algorithms for different Virtual Servers, or if intentionally using different pools for different services. In the case of PSN load balancing, we typically want all traffic for a given endpoint to be sent to the same PSN. Due to variances in the MAC address format in the above example, the F5 BIG-IP LTM treated each entry as a unique endpoint and consequently load balanced the traffic to different PSNs. By normalizing the MAC address to a single format, the endpoint traffic for both flows can be sent to the same PSN as shown in “Good Example”.
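
The normalization step can be sketched in a few lines of Python. This is only an illustration of the idea, not the iRule used in the guide. The function name `normalize_mac` is hypothetical, and the target format (uppercase, hyphen-separated) is an assumption chosen to match the persistence key shown in the tmsh example.

```python
import re


def normalize_mac(mac: str) -> str:
    """Convert a MAC address in any common notation to XX-XX-XX-XX-XX-XX.

    The uppercase, hyphen-separated output is an assumed target format.
    """
    # Drop separators such as ':', '-', and '.' and keep only hex digits.
    hex_digits = re.sub(r"[^0-9A-Fa-f]", "", mac)
    if len(hex_digits) != 12:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    hex_digits = hex_digits.upper()
    return "-".join(hex_digits[i:i + 2] for i in range(0, 12, 2))
```

With this in place, `7c6d.62e3.d505`, `7c:6d:62:e3:d5:05`, and `7C-6D-62-E3-D5-05` all map to the same persistence key, so both flows for the endpoint would be sent to the same PSN.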

In a production network, use of the general statistics report may not be an efficient method to search and view records for a specific endpoint. To quickly view persistence records for a specific Virtual Server, source IP address, or endpoint, use the BIG-IP LTM TMOS Shell (tmsh).

root@(f5)(cfg-sync Standalone)(Active)(/Common)(tmos)# show ltm persistence persist-records virtual ise_radius_auth mode universal key 7C-6D-62-E3-D5-05

In some cases it may be necessary to clear existing persistence records to ensure new traffic is load balanced as expected. To clear persistence records for a specific endpoint, source IP, Virtual Server, pool, or node, use the BIG-IP LTM TMOS Shell (tmsh).

In some cases it may be necessary to clear existing connections to ensure new traffic is load balanced as expected. To clear connections for a specific client, server, or port, use the BIG-IP LTM TMOS Shell (tmsh).

The iRules used in this guide for persistence included a debug logging option to be used only when troubleshooting is required. Enable iRule debug logging options and view F5 traffic logs to verify iRule persistence processing. Example output for the debug options is provided in the guide.

Like ISE, F5 also includes support to capture and analyze packets directly from the F5 admin interface. Additional filtering and analysis is possible using enhanced packet capture tools like Wireshark. For more information on using the tcpdump command on F5, see F5 support article SOL411: Overview of packet tracing with the tcpdump utility.

If the F5 appliance trunks multiple VLANs, note that packet captures may show both ingress and egress packets where MAC addresses change but IP addresses do not. This can sometimes cause confusion when analyzing packet captures.

send "GET /sponsorportal/PortalSetup.action?portal=b7e7d773-7bb3-442b-a50b-42837c12248a HTTP/1.1\r\nUser-Agent: BigIP-LTM-Probe\r\nHost:\r\nConnection: Close\r\n\r\n"

This example shows how F5 scripting logic can be leveraged to parse specific fields in a client DHCP Discover or Request packet and extract the MAC address as the persistence identifier. This allows DHCP requests to be load balanced to individual ISE PSNs on a per-host basis rather than a per-gateway basis (source IP address persistence). This provides better load distribution and, when coupled with RADIUS persistence based on client MAC address (RADIUS Calling-Station-ID), provides optimal profiling collection by ensuring all RADIUS and DHCP traffic for a given endpoint is sent to the same PSN.
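
To make the parsing step concrete, here is a minimal Python sketch that pulls the client MAC address out of a raw DHCP payload. It is not the iRule from the guide, which may read a different field. The sketch assumes the standard BOOTP/DHCP fixed header layout from RFC 2131, where the client hardware address (`chaddr`) starts at byte offset 28. The function name `dhcp_client_mac` is hypothetical.

```python
def dhcp_client_mac(payload: bytes) -> str:
    """Return the client MAC from a DHCP request as XX-XX-XX-XX-XX-XX.

    Assumes the RFC 2131 fixed header: op(1) htype(1) hlen(1) hops(1)
    xid(4) secs(2) flags(2) ciaddr(4) yiaddr(4) siaddr(4) giaddr(4)
    chaddr(16), so chaddr begins at offset 28.
    """
    if len(payload) < 44:
        raise ValueError("payload too short for a BOOTP/DHCP header")
    op, htype, hlen = payload[0], payload[1], payload[2]
    if op != 1:
        # op 1 is BOOTREQUEST; Discover and Request are both client requests.
        raise ValueError("not a client request (BOOTREQUEST)")
    if htype != 1 or hlen != 6:
        raise ValueError("not an Ethernet hardware address")
    chaddr = payload[28:28 + hlen]
    return "-".join(f"{byte:02X}" for byte in chaddr)
```

Because a relayed request keeps the client hardware address intact while its source IP becomes that of the gateway, keying persistence on this value distinguishes hosts behind the same gateway.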

The PSNs are on a dedicated VLAN 99 (subnet 10.1.99.0/24). Each PSN has a default gateway to the F5 load balancer’s internal interface with Self IP 10.1.99.2. The virtual IP (VIP) for load-balanced services (10.1.98.8) is on a separate VLAN 98 (subnet 10.1.98.0/24). The F5 BIG-IP LTM has a default gateway to the upstream network switch at 10.1.98.1. Since the F5 is not advertising internal routes, the network switch must be configured with a static route to the 10.1.99.0/24 network with a next hop set to the Self IP for the F5’s external interface at 10.1.98.2. The table displays the network-addressing scheme for the sample topology.
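
The addressing rules in this sample topology can be sanity-checked with Python's standard `ipaddress` module. The sketch below is only an illustration. The constant names and the `check_topology` function are hypothetical, and the addresses are the ones from the sample topology.

```python
import ipaddress

# Values from the sample topology; the names are illustrative only.
PSN_SUBNET = ipaddress.ip_network("10.1.99.0/24")        # VLAN 99
VIP_SUBNET = ipaddress.ip_network("10.1.98.0/24")        # VLAN 98
F5_INTERNAL_SELF_IP = ipaddress.ip_address("10.1.99.2")  # PSN default gateway
F5_EXTERNAL_SELF_IP = ipaddress.ip_address("10.1.98.2")  # next hop for static route
VIP = ipaddress.ip_address("10.1.98.8")
UPSTREAM_GATEWAY = ipaddress.ip_address("10.1.98.1")     # F5 default gateway


def check_topology() -> list:
    """Return a list of addressing problems; an empty list means consistent."""
    problems = []
    if PSN_SUBNET.overlaps(VIP_SUBNET):
        problems.append("PSN and VIP subnets overlap")
    if F5_INTERNAL_SELF_IP not in PSN_SUBNET:
        problems.append("PSN default gateway is not on the PSN VLAN")
    for name, addr in (("VIP", VIP),
                       ("F5 external Self IP", F5_EXTERNAL_SELF_IP),
                       ("upstream gateway", UPSTREAM_GATEWAY)):
        if addr not in VIP_SUBNET:
            problems.append(f"{name} is not on the VIP VLAN")
    return problems
```

The key point it checks is that the switch's static route to 10.1.99.0/24 uses a next hop on a subnet the switch is directly attached to (VLAN 98), while the PSNs' default gateway is on their own VLAN 99.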
