not supported with g sync lcd panel free sample


If you want smooth gameplay without screen tearing and you want to experience the high frame rates that your Nvidia graphics card is capable of, Nvidia’s G-Sync adaptive sync tech, which unleashes your card’s best performance, is a feature that you’ll want in your next monitor.

To get this feature, you can spend a lot on a monitor with G-Sync built in, like the high-end $1,999 Acer Predator X27, or you can spend less on a FreeSync monitor that has G-Sync compatibility by way of a software update. (As of this writing, there are 15 monitors that support the upgrade.)

However, there are still hundreds of FreeSync models that will likely never get the feature. According to Nvidia, “not all monitors go through a formal certification process, display panel quality varies, and there may be other issues that prevent gamers from receiving a noticeably improved experience.”

But even if you have an unsupported monitor, it may be possible to turn on G-Sync. You may even have a good experience — at first. I tested G-Sync with two unsupported models, and, unfortunately, the results just weren’t consistent enough to recommend over a supported monitor.

The 32-inch AOC CQ32G1 curved gaming monitor, for example, which is priced at $399, presented no issues when I played Apex Legends and Metro: Exodus, at least at first. Then some flickering started appearing during gameplay, though I hadn’t made any changes to the visual settings. I also tested it with Yakuza 0, which, surprisingly, served up the worst performance, even though it’s the least demanding title that I tested. Whether it was in full-screen or windowed mode, the frame rate was choppy.

Another unsupported monitor, the $550 Asus MG279Q, handled both Metro: Exodus and Forza Horizon 4 without any noticeable issues. (It’s easy to confuse the MG279Q for the Asus MG278Q, which is on Nvidia’s list of supported FreeSync models.) In Nvidia’s G-Sync benchmark, there was significant tearing early on, but, oddly, I couldn’t re-create it.

Before you begin, note that in order to achieve the highest frame rates with or without G-Sync turned on, you’ll need to use a DisplayPort cable. If you’re using a FreeSync monitor, chances are good that it came with one. But if not, they aren’t too expensive.

First, download and install the latest driver for your GPU, either from Nvidia’s website or through the GeForce Experience, Nvidia’s Windows 10 app that can tweak graphics settings on a per-game basis. All of Nvidia’s drivers since mid-January 2019 have included G-Sync support for select FreeSync monitors. Even if you don’t own a supported monitor, you’ll probably be able to toggle G-Sync on once you install the latest driver. Whether it will work well after you do turn the feature on is another question.

Once the driver is installed, open the Nvidia Control Panel. On the side column, you’ll see a new entry: Set up G-Sync. (If you don’t see this setting, switch on FreeSync using your monitor’s on-screen display. If you still don’t see it, you may be out of luck.)

Check the box that says “Enable G-Sync Compatible,” then click “Apply” to activate the settings. (The settings page will inform you that your monitor is not validated by Nvidia for G-Sync. Since you already know that is the case, don’t worry about it.)

Check that the resolution and refresh rate are set to their max by selecting “Change resolution” on the side column. Adjust the resolution and refresh rate to the highest-possible option (the latter of which is hopefully at least 144Hz if you’ve spent hundreds on your gaming monitor).

Nvidia offers a downloadable G-Sync benchmark, which should quickly let you know if things are working as intended. If G-Sync is active, the animation shouldn’t exhibit any tearing or stuttering. But since you’re using an unsupported monitor, don’t be surprised if you see some iffy results. Next, try out some of your favorite games. If something is wrong, you’ll realize it pretty quickly.

There’s a good resource to check out on Reddit, where its PC community has created a huge list of unsupported FreeSync monitors, documenting each monitor’s pros and cons with G-Sync switched on. These real-world findings are insightful, but what you experience will vary depending on your PC configuration and the games that you play.


Two of the most common issues with PC gaming are screen tearing and stuttering. Each time your GPU renders a frame, it’s sent to the display, which updates the picture at a certain interval depending on the refresh rate (a 144Hz display, for example, will refresh the image up to 144 times in a second). Screen tearing or stuttering happens when these two steps misalign, either with your GPU holding a frame your monitor isn’t ready for or your monitor trying to refresh with a frame that doesn’t exist.

G-Sync solves that problem by aligning your monitor’s refresh rate to your GPU’s frame render rate, offering smooth gameplay even as frame rates change. For years, G-Sync was a proprietary Nvidia technology that only worked with certain, very expensive displays with an Nvidia-branded module inside. In 2019, though, Nvidia opened its G-Sync technology to some compatible FreeSync displays, offering an adaptive refresh rate to not only a lot more displays, but a lot of cheaper ones, too.

Although there are now displays with the G-Sync Compatible badge on the shelves, they aren’t set to work with G-Sync by default. Here’s how to use G-Sync on a FreeSync monitor.

First, you need to make sure that your hardware setup is going to support G-Sync, or this process isn’t going to work very well. Your monitor needs to be ready, and that requires three important things.

The first thing you need is a compatible monitor. You will want to consult the GeForce list of G-Sync compatible gaming monitors, which are monitors where G-Sync isn’t built-in but is expected to perform quite well. Recent displays from Dell, BenQ, Asus, Acer, LG, Samsung, and more are mostly supported, though you’ll still need to consult Nvidia’s list.

Secondly, you’ll need a GTX 10-series graphics card or better. RTX 20-series and its Super variants work, as do RTX 30-series GPUs. Similarly, the 1660 Super, 1660 Ti, and 1660 work, too. Lastly, you’ll need a DisplayPort connection from this graphics card to your monitor for most displays, though there are some LG models that require HDMI.

Finally, you’ll need to update your GPU drivers. Driver 417.71 brought support for G-Sync in 2019, so you’ll need at least that version, though later versions are preferable for their improved overall performance and support.
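As a rough illustration of the driver requirement, the check below compares a driver version string against 417.71. It is only a sketch: the function name is hypothetical, and it assumes the version is written in the usual major.minor form, as it appears in the Nvidia Control Panel’s system information.

```python
# First driver version with G-Sync Compatible support, per the text above.
MIN_GSYNC_COMPATIBLE_DRIVER = (417, 71)


def driver_supports_gsync_compatible(version: str) -> bool:
    """Return True if a 'major.minor' driver version is at least 417.71.

    Hypothetical helper for illustration; it does not query the driver itself.
    """
    major, minor = version.strip().split(".")
    return (int(major), int(minor)) >= MIN_GSYNC_COMPATIBLE_DRIVER


if __name__ == "__main__":
    for installed in ("399.24", "417.71", "461.09"):
        print(installed, driver_supports_gsync_compatible(installed))
```

Comparing the two parts as integers avoids the trap of comparing version strings as text or as floating-point numbers.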

With a compatible monitor at the ready, head into your monitor settings and make sure that FreeSync (or Adaptive Sync) is turned on. This is necessary for the following steps to work.

With your Nvidia graphics card plugged in and recent drivers installed, you should have access to the Nvidia Control Panel app on your PC. Open it now. Once open, look at the left-hand menu for the Display section. Here, select the option to Set up G-SYNC.

Select your display. There’s probably only one display to pick here, so this isn’t usually a problem unless you have an odd sort of multi-monitor display plan and need to make sure the changes apply to the right monitor.

Perhaps your current monitor is new and upgraded enough to support G-Sync, in which case there is little you need to tweak or correct so that it functions smoothly. As an extra precaution, we recommend executing a graphics test to be positive that you have the desired visuals, at which point you’re free to use it as you see fit. You should note that other monitors may still not enable G-Sync properly. This is common, and you may just have to make one additional adjustment to help your monitor get along properly.

You will use the same Nvidia Control Panel to access the option for 3D Settings, located in the left-hand menu. From here, select Manage 3D Settings, and go to the Global tab. Look for the setting called Monitor Technology, and make sure it is set to the G-Sync compatibility setting.

You don’t have to employ G-Sync for every game, as you can always decide to take it one game at a time. You will want to access Program Settings rather than Global. This allows for some added customization if you prefer certain games on G-Sync and certain games otherwise.


In 2019, Nvidia introduced its G-Sync Compatibility program. It was somewhat shocking because it allowed monitors to don a G-Sync badge without investing in Nvidia’s proprietary hardware. Ever since, monitors new and old, including those that use AMD FreeSync technology, are eligible for G-Sync Compatibility certification if they pass Nvidia’s tests. Additionally, Nvidia’s G-Sync Compatibility program is retroactive and internally funded, complicating the idea of G-Sync monitors carrying a tax.

Since G-Sync Compatibility’s arrival, we’ve tried running G-Sync on all gaming monitors that have arrived in our lab, whether they’re Nvidia-certified or not. The vast majority of FreeSync-only gaming monitors we tested successfully ran G-Sync (and you can learn how in our article How to Run G-Sync on a FreeSync Monitor).

So what’s the deal? Is either a standard G-Sync or G-Sync Compatibility certification truly necessary in order to join Adaptive-Sync with an Nvidia graphics card? Should Nvidia gamers nix a FreeSync-only monitor from their shopping list or is it safe to assume they’ll be able to run G-Sync on it just fine? And is there any risk in running G-Sync on a non-certified display?

It’s easy enough to get G-Sync running on a FreeSync monitor that lacks Nvidia approval. Even Nvidia itself says it’s okay. Vijay Sharma, Nvidia director of product management, told us, though, that the experience may vary but may also be fine.

“Sometimes it might be okay, might be acceptable, satisfactory. Other times it might not. But we leave that choice to the end user,” he told Tom’s Hardware, echoing sentiments shared on Nvidia’s own website, which details how gamers can run G-Sync on monitors without certification while noting that it “may work, it may work partly, or it may not work at all.”

“And it’s easy enough to try,” Sharma added. “Take a monitor that you know is fixed frequency, an old one, 60 Hz, and then go into the video control panel, and turn on G-Sync Compatible and then go play a game. And check -- if the monitor doesn’t like the signal it’s getting it’ll just show a black screen [or something].”

Some monitor vendors also approve giving G-Sync a try. Jason Maryne, product marketing manager for LCD monitors at ViewSonic, which has G-Sync Compatible, G-Sync and FreeSync-only gaming monitors, told us that he’s not aware of any reason gamers shouldn’t try running G-Sync on ViewSonic’s FreeSync monitors.

“Every gamer with a FreeSync monitor should attempt to use G-Sync drivers (if they have an Nvidia graphics card), but their experience may not be as optimal as Nvidia wants it to be,” Maryne told Tom’s Hardware.

“In general, we recommend only using our monitors in the matter that they’ve been tested and certified to maximize performance and the end-user experience, which is why given the current great performance of FreeSync paired with the higher refresh specs of our monitors, there should not be any need to run our monitors in any un-supported modes,” Paul Collas, VP of product at Monoprice, which has FreeSync monitors but no G-Sync Compatible ones, told Tom’s Hardware.

We’ve also encountered some limitations in our testing. You can’t run the feature with HDR content, and we’ve been unable to run overdrive while running G-Sync on a FreeSync-only monitor.

If you want something that’ll fight screen tearing with your Nvidia graphics card right out of the box with satisfactory performance regardless of frame rates, official G-Sync Compatibility has its perks. To get why, it’s first important to understand what real Nvidia-certified G-Sync Compatibility is.

G-Sync Compatibility is a protocol within VESA’s DisplayPort 1.2 spec. That means it won’t work with HDMI connections (barring G-Sync Compatible TVs) or Nvidia cards older than the GTX 10-series. As you can see on Nvidia’s website, G-Sync Compatibility is available across a range of PC monitor brands, and even TVs (LG OLEDs, specifically) carry G-Sync Compatible certification.

Each monitor that gets the G-Sync Compatible stamp not only goes through the vendor’s own testing, as well as testing required to get its original Adaptive-Sync (most likely FreeSync) certification, but also Nvidia testing on multiple samples. Most monitors that apply for G-Sync Compatibility fail -- the success rate is under 10%, according to Nvidia’s Sharma. Earning the certification is about more than just being able to turn G-Sync on in the GeForce Experience app.

In May, Nvidia announced that only 28 (5.6%) of the 503 Adaptive-Sync monitors it tested for its G-Sync Compatibility program passed its tests. Apparently, 273 failed because their variable refresh rate (VRR) range wasn’t at least 2.4:1 (highest refresh rate to lowest refresh rate). At the time, Nvidia said this meant gamers were unlikely to get any of the benefits of VRR. Another 202 failed over image quality problems, such as flickering or blanking. “This could range in severity, from the monitor cutting out during gameplay (sure to get you killed in PvP MP games), to requiring power cycling and Control Panel changes every single time,” Nvidia explained. The remaining 33 monitors failed simply because they were no longer available.

“Some were okay, some were good, but many were just outright bad. And when I say bad, it’s that the visual experience was very poor,” Sharma said. “And people would see things like extreme flicker in games, they’d see corruption on the stream, they’ve had difficulty enabling [VRR]. The supported VRR range would be really small.”

Some of what Nvidia looks for when determining G-Sync Compatibility may be important to you too. Take the first thing Nvidia tests for: VRR range. In a G-Sync Compatible monitor, the maximum refresh rate of its VRR range divided by the lowest supported refresh rate in the VRR range must be 2.4 or higher. For example, a 120 Hz monitor should have a VRR range of 40-120 Hz (120 divided by 40 is 3, which is greater than 2.4). If the minimum went up to 55 Hz, the quotient would be about 2.2, which is lower than Nvidia’s 2.4 minimum, so the monitor would fail.
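The ratio test described above is simple to express in code. The sketch below is illustrative only: the function names are hypothetical, and the 2.4 threshold is the figure quoted in this article rather than something read from an Nvidia tool.

```python
def vrr_ratio(min_hz: float, max_hz: float) -> float:
    """Highest refresh rate of the VRR range divided by the lowest."""
    if min_hz <= 0 or max_hz < min_hz:
        raise ValueError("expected 0 < min_hz <= max_hz")
    return max_hz / min_hz


def meets_vrr_range_requirement(min_hz: float, max_hz: float,
                                required_ratio: float = 2.4) -> bool:
    """True if the VRR range is at least required_ratio:1 (2.4 per the article)."""
    return vrr_ratio(min_hz, max_hz) >= required_ratio


if __name__ == "__main__":
    for low, high in ((40, 120), (55, 120)):
        ratio = vrr_ratio(low, high)
        verdict = "passes" if meets_vrr_range_requirement(low, high) else "fails"
        print(f"{low}-{high} Hz: ratio {ratio:.2f}, {verdict}")
```

Run against the two ranges from the example, a 40-120 Hz range clears the bar and a 55-120 Hz range does not.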

“We’re kind of sticklers on that because when you look at FPS output from games, it’s variable,” Sharma said. “Very few games if any output consistently at a fixed rate … They vary all over the place, depending on how complex the scene is.”

With G-Sync Compatible certification, your monitor’s guaranteed to run Nvidia VRR at the monitor’s maximum refresh rate, but that’s not the case with non-certified monitors, and the issue’s even worse if the monitor’s overclocked.

There should also be no flickering, which happens when the monitor changes brightness levels at different refresh rates, a common characteristic of LCD panels, Sharma said. In our own testing, we’ve found that running G-Sync on a non-certified monitor sometimes results in a lot of flickering in windowed screens.

Nvidia also looks for “corruption artifacts,” according to the exec, and VRR must be enabled by default without having to go into the on-screen display (OSD) or any PC settings.

Of course, Nvidia also has testers take G-Sync Compatible prospects for a test drive. Nvidia will typically look at 2-3 samples per display, but that number can also be as high as 6, depending on the screen and its “characteristics,” as Sharma put it. The process typically takes about 4 weeks for both existing and new monitors, but that timeframe may have increased a bit of late due to the pandemic (Nvidia’s certification process requires in-person testing).

G-Sync has long been associated with a price premium, so we often hear people question if perfectly good monitors are rejected from the G-Sync Compatibility program. Notably, Nvidia’s G-Sync Compatibility program isn’t pay-to-play -- at least not directly. The program is entirely internally funded. As Nvidia’s Sharma put it, “There’s no cost for anybody but Nvidia.”

ViewSonic’s Maryne told us “it can be fairly difficult” to earn G-Sync Compatibility certification. ViewSonic was among the vendors to submit monitors that were rejected in the first batch of G-Sync Compatibility testing.

“One of the key requirements was to have FreeSync enabled by default; (however, most monitors require that you enable FreeSync in the OSD). If not, the monitor was automatically disqualified, even if there weren’t any other issues,” Maryne noted.

As you can see, G-Sync Compatibility with a capital C entails more than just being able to turn G-Sync on. So, your experience running G-Sync on a monitor Nvidia hasn’t certified (because either it was never submitted or it failed the tests) will vary. This makes sense when you think about what exactly certifications are for: creating a standardized baseline of performance.

But it’s possible that a monitor lacking Nvidia’s seal of approval had some form of G-Sync testing performed. Pixio, for example, makes budget gaming monitors and doesn’t have any official G-Sync Compatible or standard G-Sync monitors. That’s not because it doesn’t want to or has never submitted a passing monitor; it’s because Pixio’s never been able to submit a monitor for certification at all.

Kevin Park, operations manager at Pixio, told us that Pixio has tried reaching out to Nvidia but there’s no way to just send in a monitor for certification. All of Pixio’s gaming monitors are FreeSync-certified.

“With FreeSync we’ve always been notified by AMD from the get-go that there’s an open certification process. They reached out to vendors specifically to ensure that the proper steps are taken. Whereas with Nvidia we haven’t seen or heard anything or gotten back from them about that kind of process,” Park told Tom’s Hardware.

Nvidia told us that any monitor vendor can submit a monitor for G-Sync Compatible validation. Vendors that don’t already have a relationship with Nvidia “should reach out to the G-Sync team at Nvidia, and we will work with the vendor to review the technical details and scheduling the validation testing,” a spokesperson said. However, the rep noted that the queue has been quite full, so it may take a while.

Pixio is a smaller vendor and is still looking to understand Nvidia’s G-Sync Compatible certification process in order to get some of its current and/or future monitors certified. In the meantime, Pixio does extensive testing with its gaming monitors so it can tell customers whether it thinks G-Sync will run on it satisfactorily. Since Pixio isn’t working with Nvidia, it holds G-Sync performance to the FreeSync standards it follows.

“If in the future we are able to get in touch with [Nvidia] we would definitely go by their requirements and make sure to do the proper steps ... But we let our consumers know we’re not officially certified by Nvidia but it does work with G-Sync compatibility to our current FreeSync certification process at the very least,” Park said.

Pixio’s G-Sync testing includes an accessible VRR range and testing for tearing, flicker and stutter. This occurs across GTX 10-series cards and on; however, RTX 30-series testing is lesser since the cards are newer. Pixio tests a certain percentage of production units, with tests particularly focused around the monitor’s mainboard. Testing can range from a month to a year, depending on the features and with new models taking longer.

This all helps explain why you sometimes see vendors boldly claim their monitor can handle G-Sync even though they don’t have official Nvidia backing.

If you have an Nvidia graphics card, it’s true that the best gaming monitor for you will have some form of G-Sync. Sure, you can get G-Sync to run on most FreeSync monitors, but for a reliable VRR range and the promise of tear and flicker-free performance out of the box without digging into software, an official G-Sync Compatible certification is the minimum.

If you already have a FreeSync monitor, but are hoping to run G-Sync on it with an Nvidia GPU, there’s no harm in firing up GeForce Experience and enabling it. If you don’t notice any flickering or other artifacts, having to turn on G-Sync manually is a small price to pay.

Alternatively, if you’re buying a new monitor, plan on pairing it with an Nvidia card and are stuck between a FreeSync-only monitor and one with G-Sync Compatibility, weigh your options. Is a wide VRR range important to you? If you don’t have the best graphics card, are running intensive games and/or playing at high resolution, it likely is. Also consider how sensitive you are to flickering. Is this something you’d notice, and would it bother you? And do you plan on using overdrive?

If for some reason you must run G-Sync on an uncertified monitor, there’s a good chance you can get an Adaptive-Sync experience that’s at least okay. The bigger question is how okay “okay” is to you.

It’s difficult to buy a computer monitor, graphics card, or laptop without seeing AMD FreeSync and Nvidia G-Sync branding. Both promise smoother, better gaming, and in some cases both appear on the same display. But what do G-Sync and FreeSync do, exactly – and which is better?

Most AMD FreeSync displays can sync with Nvidia graphics hardware, and most G-Sync Compatible displays can sync with AMD graphics hardware. This is unofficial, however.

The first problem is screen tearing. A display without adaptive sync will refresh at its set refresh rate (usually 60Hz, or 60 refreshes per second) no matter what. If the refresh happens to land between two frames, well, tough luck – you’ll see a bit of both. This is screen tearing.

Screen tearing is ugly and easy to notice, especially in 3D games. To fix it, games started to use a technique called V-Sync that locks the framerate of a game to the refresh rate of a display. This fixes screen tearing but also caps the performance of a game. It can also cause uneven frame pacing in some situations.

Adaptive sync is a better solution. A display with adaptive sync can change its refresh rate in response to how fast your graphics card is pumping out frames. If your GPU sends over 43 frames per second, your monitor displays those 43 frames, rather than forcing 60 refreshes per second. Adaptive sync stops screen tearing by preventing the display from refreshing with partial information from multiple frames but, unlike with V-Sync, each frame is shown immediately.
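To make the tearing problem concrete, here is a toy model of a fixed-refresh display with no V-Sync. It assumes, as a simplification, that the GPU swaps in each new frame the instant it finishes and that a refresh counts as torn whenever a swap lands partway through it. The function name and the model itself are illustrative assumptions, not a description of how any driver measures tearing.

```python
from fractions import Fraction
from math import floor


def torn_refreshes(fps: int, refresh_hz: int, seconds: int = 1) -> int:
    """Count refreshes that show parts of two frames in a simplified model.

    Frames complete at evenly spaced times (frame / fps). A refresh is
    considered torn if a frame swap happens strictly inside it rather than
    exactly on a refresh boundary.
    """
    torn = set()
    for frame in range(1, fps * seconds + 1):
        swap_time = Fraction(frame, fps)      # exact time the new frame arrives
        phase = swap_time * refresh_hz        # position measured in refresh cycles
        if phase.denominator != 1:            # not on a boundary: mid-scanout swap
            torn.add(floor(phase))            # index of the refresh being drawn
    return len(torn)


if __name__ == "__main__":
    print("43 FPS on a fixed 60Hz display:", torn_refreshes(43, 60))
    print("60 FPS on a fixed 60Hz display:", torn_refreshes(60, 60))
```

In this model, a GPU delivering 43 frames per second to a fixed 60Hz display almost never finishes a frame on a refresh boundary, while a perfectly matched 60 FPS stream never tears. Adaptive sync gets the second result at any frame rate within the display’s range, because the display waits for each frame before it refreshes.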

Enthusiasts can offer countless arguments over the advantages of AMD FreeSync and Nvidia G-Sync. However, for most people, AMD FreeSync and Nvidia G-Sync both work well and offer a similar experience. In fact, the two standards are far more similar than different.

All variants of AMD FreeSync are built on the VESA Adaptive Sync standard. The same is true of Nvidia’s G-Sync Compatible, which is by far the most common version of G-Sync available today.

VESA Adaptive Sync is an open standard that any company can use to enable adaptive sync between a device and display. It’s used not only by AMD FreeSync and Nvidia G-Sync Compatible monitors but also other displays, such as HDTVs, that support Adaptive Sync.

AMD FreeSync and Nvidia G-Sync Compatible are so similar, in fact, they’re often cross compatible. A large majority of displays I test with support for either AMD FreeSync or Nvidia G-Sync Compatible will work with graphics hardware from the opposite brand.

AMD FreeSync and Nvidia G-Sync Compatible are built on the same open standard. Which leads to an obvious question: if that’s true, what’s the difference?

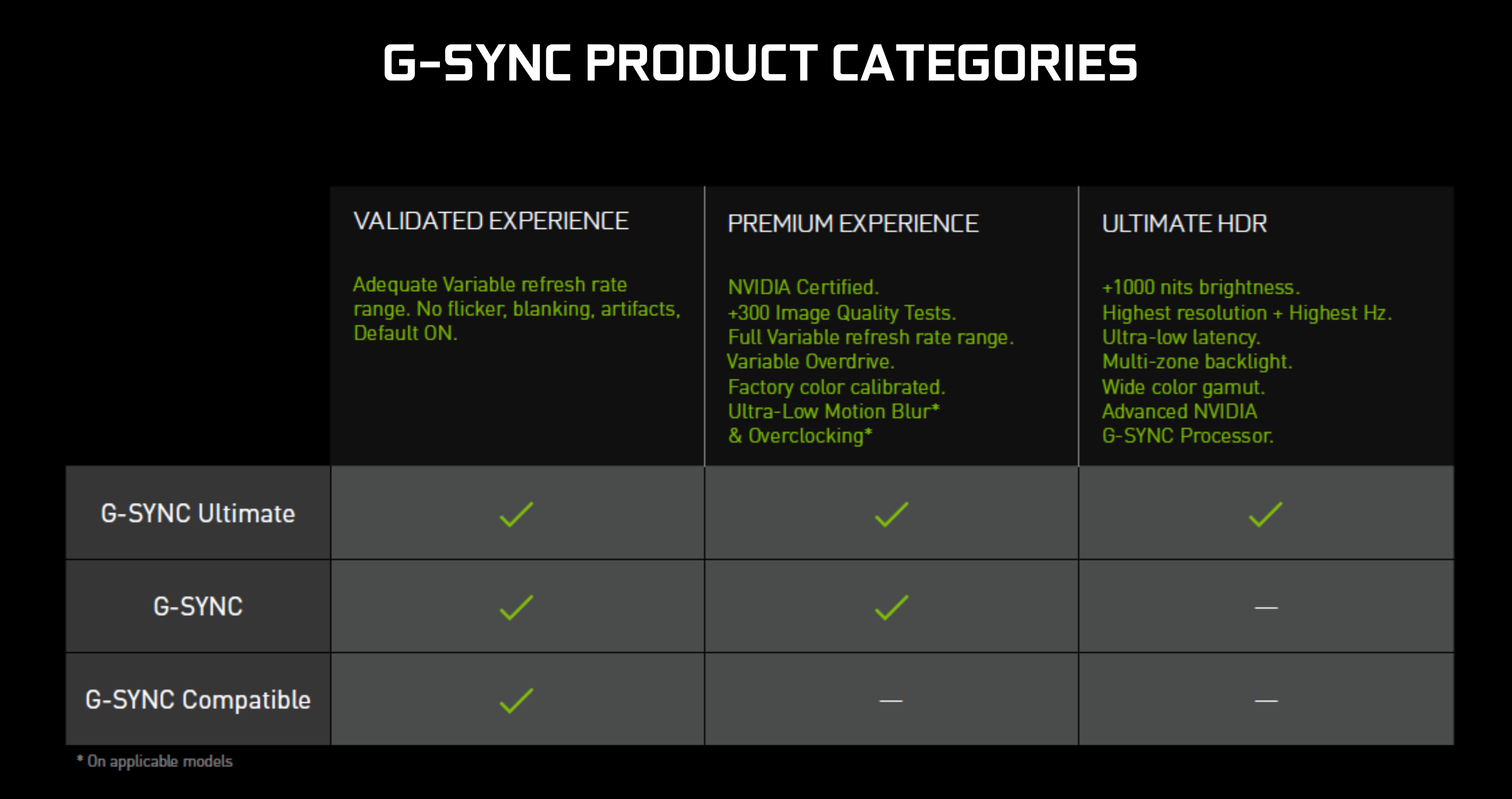

Nvidia G-Sync Compatible, the most common version of G-Sync today, is based on the VESA Adaptive Sync standard. But Nvidia G-Sync and G-Sync Ultimate, the less common and more premium versions of G-Sync, use proprietary hardware in the display.

This is how all G-Sync displays worked when Nvidia brought the technology to market in 2013. Unlike Nvidia G-Sync Compatible monitors, which often (unofficially) work with AMD Radeon GPUs, G-Sync is unique and proprietary. It only supports adaptive sync with Nvidia graphics hardware.

It’s usually possible to switch sides if you own an AMD FreeSync or Nvidia G-Sync Compatible display. If you buy a G-Sync or G-Sync Ultimate display, however, you’ll have to stick with Nvidia GeForce GPUs. (Here’s our guide to the best graphics cards for PC gaming.)

This loyalty does net some perks. The most important is G-Sync’s support for a wider range of refresh rates. The VESA Adaptive Sync specification has a minimum required refresh rate (usually 48Hz, but sometimes 40Hz). A refresh rate below that can cause dropouts in Adaptive Sync, which may allow screen tearing to sneak back in or, in a worst-case scenario, cause the display to flicker.

G-Sync and G-Sync Ultimate support the entire refresh range of a panel – even as low as 1Hz. This is important if you play games that may hit lower frame rates, since Adaptive Sync matches the display refresh rate with the output frame rate.

For example, if you’re playing Cyberpunk 2077 at an average of 30 FPS on a 4K display, that implies a refresh rate of 30Hz – which falls outside the range VESA Adaptive Sync supports. AMD FreeSync and Nvidia G-Sync Compatible may struggle with that, but Nvidia G-Sync and G-Sync Ultimate won’t have a problem.

AMD FreeSync Premium and FreeSync Premium Pro have their own technique of dealing with this situation called Low Framerate Compensation. It repeats frames to double the output such that it falls within a display’s supported refresh rate.
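The frame-repetition idea can be sketched as follows. This is an illustration under stated assumptions, not AMD’s implementation: the function name is hypothetical, the 48-144Hz range in the usage example is made up for the demonstration, and the sketch generalizes “doubling” to the smallest whole-number repeat count that lifts the refresh rate into the supported range.

```python
from math import ceil
from typing import Optional, Tuple


def low_framerate_compensation(fps: float, min_hz: float,
                               max_hz: float) -> Optional[Tuple[int, float]]:
    """Pick how many times to show each frame so the refresh rate fits the range.

    Returns (repeat_count, refresh_hz), or None if no whole-number multiple of
    the frame rate fits inside [min_hz, max_hz].
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    repeat_count = max(1, ceil(min_hz / fps))   # smallest multiple reaching min_hz
    refresh_hz = fps * repeat_count
    if refresh_hz > max_hz:
        return None                             # range too narrow for this frame rate
    return repeat_count, refresh_hz


if __name__ == "__main__":
    for fps in (100, 30, 20):
        print(fps, "FPS ->", low_framerate_compensation(fps, 48, 144))
    print(35, "FPS on a 48-60Hz panel ->", low_framerate_compensation(35, 48, 60))
```

The last case shows why a wide range matters: on a hypothetical 48-60Hz panel, 35 FPS is too slow to display directly and doubling it to 70Hz overshoots the maximum, so there is nothing for the technique to work with.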

Other differences boil down to certification and testing. AMD and Nvidia have their own certification programs that displays must pass to claim official compatibility. This is why not all VESA Adaptive Sync displays claim support for AMD FreeSync and Nvidia G-Sync Compatible.

AMD FreeSync and Nvidia G-Sync include mention of HDR in their marketing. AMD FreeSync Premium Pro promises “HDR capabilities and game support.” Nvidia G-Sync Ultimate boasts of “lifelike HDR.”

This is a bunch of nonsense. Neither has anything to do with HDR, though it can be helpful to understand that some level of HDR support is included in those panels. The most common HDR standard, HDR10, is an open standard from the Consumer Technology Association. AMD and Nvidia have no control over it. You don’t need FreeSync or G-Sync to view HDR, either, even on each company’s graphics hardware.

PC gamers interested in HDR should instead look for VESA’s DisplayHDR certification, which provides a more meaningful gauge of a monitor’s HDR capabilities.

Both standards are plug-and-play with officially compatible displays. Your desktop’s video card will detect that the display is certified and turn on AMD FreeSync or Nvidia G-Sync automatically. You may need to activate the respective adaptive sync technology in your monitor settings, however, though that step is a rarity in modern displays.

Displays that support VESA Adaptive Sync, but are not officially supported by your video card, require you dig into AMD or Nvidia’s driver software and turn on the feature manually. This is a painless process, however – just check the box and save your settings.

AMD FreeSync and Nvidia G-Sync are also available for use with laptop displays. Unsurprisingly, laptops that have a compatible display will be configured to use AMD FreeSync or Nvidia G-Sync from the factory.

A note of caution, however: not all laptops with AMD or Nvidia graphics hardware have a display with Adaptive Sync support. Even some gaming laptops lack this feature. Pay close attention to the specifications.

VESA’s Adaptive Sync is on its way to being the common adaptive sync standard used by the entire display industry. Though not perfect, it’s good enough for most situations, and display companies don’t have to fool around with AMD or Nvidia to support it.

That leaves AMD FreeSync and Nvidia G-Sync searching for a purpose. AMD FreeSync and Nvidia G-Sync Compatible are essentially certification programs that monitor companies can use to slap another badge on a product, though they also ensure out-of-the-box compatibility with supported graphics card. Nvidia’s G-Sync and G-Sync Ultimate are technically superior, but require proprietary Nvidia hardware that adds to a display’s price. This is why G-Sync and G-Sync Ultimate monitors are becoming less common.

My prediction is this: AMD FreeSync and Nvidia G-Sync will slowly, quietly fade away. AMD and Nvidia will speak of them less and less while displays move towards VESA Adaptive Sync badges instead of AMD and Nvidia logos.

If that happens, it would be good news for the PC. VESA Adaptive Sync has already united AMD FreeSync and Nvidia G-Sync Compatible displays. Eventually, display manufacturers will opt out of AMD and Nvidia branding entirely – leaving VESA Adaptive Sync as the single, open standard. We’ll see how it goes.

When buying a gaming monitor, it’s important to compare G-Sync vs FreeSync. Both technologies improve monitor performance by matching the performance of the screen with the graphics card. And there are clear advantages and disadvantages of each: G-Sync offers premium performance at a higher price while FreeSync is prone to certain screen artifacts like ghosting.

So G-Sync versus FreeSync? Ultimately, it’s up to you to decide which is the best for you (with the help of our guide below). Or you can learn more about ViewSonic’s professional gaming monitors here.

In the past, monitor manufacturers relied on the V-Sync standard to ensure consumers and business professionals could use their displays without issues when connected to high-performance computers. As technology became faster, however, new standards were developed, the two main ones being G-Sync and FreeSync.

V-Sync, short for vertical synchronization, is a display technology that was originally designed to help monitor manufacturers prevent screen tearing. This occurs when two different “screens” crash into each other because the monitor’s refresh rate can’t keep pace with the data being sent from the graphics card. The distortion is easy to spot as it causes a cut or misalignment to appear in the image.

This often comes in handy in gaming. For example, GamingScan reports that the average computer game operates at 60 FPS. Many high-end games operate at 120 FPS or greater, which requires the monitor to have a refresh rate of 120Hz to 165Hz. If the game is run on a monitor with a refresh rate that’s less than 120Hz, performance issues arise.

V-Sync eliminates these issues by imposing a strict cap on the frames per second (FPS) reached by an application. In essence, graphics cards could recognize the refresh rates of the monitor(s) used by a device and then adjust image processing speeds based on that information.

Although V-Sync technology is commonly used when users are playing modern video games, it also works well with legacy games. The reason for this is that V-Sync slows down the frame rate output from the graphics cards to match the legacy standards.

Despite its effectiveness at eliminating screen tearing, it often causes issues such as screen “stuttering” and input lag. The former is a scenario where the time between frames varies noticeably, leading to choppiness in image appearances.
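The stutter described above can be illustrated with a simplified model. The sketch below assumes double-buffered V-Sync, where a finished frame has to wait for the next refresh tick before it is shown; the function name and the model are illustrative assumptions, not taken from any driver.

```python
import math


def vsync_present_interval_ms(render_ms: float, refresh_hz: float) -> float:
    """Time between displayed frames under a simplified double-buffered V-Sync model.

    Assumption: a frame can only be shown on a refresh tick, so a frame that
    misses one tick is held until the next one.
    """
    if render_ms <= 0 or refresh_hz <= 0:
        raise ValueError("render_ms and refresh_hz must be positive")
    period_ms = 1000.0 / refresh_hz
    # Number of refresh ticks the frame occupies (at least one).
    # The small epsilon guards against rounding when render_ms equals a tick.
    ticks = max(1, math.ceil(render_ms / period_ms - 1e-9))
    return ticks * period_ms


if __name__ == "__main__":
    for render_ms in (10.0, 16.0, 17.0, 34.0):
        interval = vsync_present_interval_ms(render_ms, 60.0)
        print(f"render {render_ms:5.1f} ms -> shown every {interval:5.1f} ms")
```

In this model a frame that takes 17 ms on a 60Hz display just misses a refresh and is shown at roughly 30 FPS, which is the kind of sudden jump in frame pacing that reads as stutter.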

V-Sync is only useful when the graphics card outputs video at a high frame rate and the display only supports a 60Hz refresh rate (which is common in legacy equipment and non-gaming displays). V-Sync lets the display limit the output of the graphics card so that both devices operate in sync.

Although the technology works well with low-end devices, V-Sync degrades the performance of high-end graphics cards. That’s the reason display manufacturers have begun releasing gaming monitors with refresh rates of 144Hz, 165Hz, and even 240Hz.

While V-Sync worked well with legacy monitors, it often prevents modern graphics cards from operating at peak performance. For example, gaming monitors often have a refresh rate of at least 100Hz. If the graphics card outputs content at low speeds (e.g. 60Hz), V-Sync would prevent the graphics card from operating at peak performance.

Since the creation of V-Sync, other technologies such as G-Sync and FreeSync have emerged to not only fix display performance issues, but also to enhance image elements such as screen resolution, image colors, or brightness levels.

Released to the public in 2013, G-Sync is a technology developed by NVIDIA that synchronizes a user’s display to a device’s graphics card output, leading to smoother performance, especially with gaming. G-Sync has gained popularity in the electronics space because a monitor’s fixed refresh rate rarely matches the rate at which the GPU is able to output frames. Without synchronization, this mismatch results in significant performance issues.

For example, if a graphics card is pushing 50 frames per second (FPS), the display would then switch its refresh rate to 50 Hz. If the FPS count decreases to 40, then the display adjusts to 40 Hz. The typical effective range of G-Sync technology is 30 Hz up to the maximum refresh rate of the display.
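The behaviour in the example above can be sketched as a small function. This is a minimal illustration, assuming a 30Hz floor and a 144Hz maximum; the function name is hypothetical, and what a real display does below its range depends on the monitor and driver.

```python
from typing import Optional


def vrr_refresh_hz(fps: float, min_hz: float = 30.0, max_hz: float = 144.0) -> Optional[float]:
    """Refresh rate a variable-refresh display would pick for a given frame rate.

    Returns None when the frame rate is below the supported range, because
    behaviour there is implementation-specific (assumption of this sketch).
    """
    if fps < min_hz:
        return None
    # Inside the range the refresh rate follows the frame rate;
    # above it, the panel cannot refresh faster than its maximum.
    return min(fps, max_hz)


if __name__ == "__main__":
    for fps in (50, 40, 200, 20):
        print(fps, "FPS ->", vrr_refresh_hz(fps), "Hz")
```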

The most notable benefit of G-Sync technology is the elimination of screen tearing and other common display issues associated with V-Sync equipment. G-Sync equipment does this by manipulating the monitor’s vertical blanking interval (VBI).

VBI represents the interval between the time when a monitor finishes drawing a current frame and moves onto the next one. When G-Sync is enabled, the graphics card recognizes the gap, and holds off on sending more information, therefore preventing frame issues.

To keep pace with changes in technology, NVIDIA developed a more advanced version of G-Sync, called G-Sync Ultimate. The core features that set it apart from standard G-Sync equipment are the built-in R3 module, high dynamic range (HDR) support, and the ability to display 4K quality images at 144Hz.

Although G-Sync delivers exceptional performance across the board, its primary disadvantage is the price. To take full advantage of native G-Sync technologies, users need to purchase a G-Sync-equipped monitor and graphics card. This two-part equipment requirement limits the number of G-Sync devices consumers can choose from. It’s also worth noting that these monitors require the graphics card to support DisplayPort connectivity.

While native G-Sync equipment will likely carry a premium, for the time being, budget-conscious businesses and consumers still can use G-Sync Compatible equipment for an upgraded viewing experience.

Released in 2015, FreeSync is a standard developed by AMD that, similar to G-Sync, is an adaptive synchronization technology for liquid-crystal displays. It’s intended to reduce screen tearing and stuttering triggered by the monitor not being in sync with the content frame rate.

Since this technology uses the Adaptive Sync standard built into the DisplayPort 1.2a standard, any monitor equipped with this input can be compatible with FreeSync technology. With that in mind, FreeSync is not compatible with legacy connections such as VGA and DVI.

The “free” in FreeSync comes from the standard being open, meaning other manufacturers are able to incorporate it into their equipment without paying royalties to AMD. This means many FreeSync devices on the market cost less than similar G-Sync-equipped devices.

As FreeSync is a standard developed by AMD, most of their modern graphics processing units support the technology. A variety of other electronics manufacturers also support the technology, and with the right knowledge, you can even get FreeSync to work on NVIDIA equipment.

Although FreeSync is a significant improvement over the V-Sync standard, it isn’t a perfect technology. The most notable drawback of FreeSync is ghosting. This is when an object leaves behind a bit of its previous image position, causing a shadow-like image to appear.

The primary cause of ghosting in FreeSync devices is imprecise power management. If not enough power is applied to the pixels, images show gaps due to slow movement. On the other hand, when too much power is applied, ghosting occurs.

To overcome those limitations, in 2017 AMD released an enhanced version of FreeSync known as FreeSync 2 HDR. Monitors that meet this standard are required to have HDR support; low framerate compensation capabilities (LFC); and the ability to toggle between standard definition range (SDR) and high dynamic range (HDR) support.

A key difference between FreeSync and FreeSync 2 devices is that with the latter technology, if the frame rate falls below the supported range of the monitor, low framerate compensation (LFC) is automatically enabled to prevent stuttering and tearing.

As FreeSync is an open standard – and has been that way since day one – people shopping for FreeSync monitors have a wider selection than those looking for native G-Sync displays.

If performance and image quality are your top priority when choosing a monitor, then G-Sync and FreeSync equipment come in a variety of offerings to fit virtually any need. The primary difference between the two standards is levels of input lag or tearing.

If you want low input lag and don’t mind tearing, then the FreeSync standard is a good fit for you. On the other hand, if you’re looking for smooth motions without tearing, and are okay with minor input lag, then G-Sync equipped monitors are a better choice.

For the average individual or business professional, G-Sync and FreeSync both deliver exceptional quality. If cost isn’t a concern and you absolutely need top of the line graphics support, then G-Sync is the overall winner.

Choosing a gaming monitor can be challenging; you can read more in our complete guide here. For peak graphics performance, check out ELITE gaming monitors.

Upgrading your computer with the latest technology only enhances your experience if you are able to get the maximum value out of your top-notch gear. It is clear that an AOC FreeSync monitor can stabilise and hone picture quality coming from a PC with an AMD graphics card, but thanks to recent technological improvements, it is now possible to transform an AOC FreeSync monitor into a G-Sync compatible display, working smoothly with GPUs from NVIDIA as well.

The GPU is usually not able to maintain a consistent frame rate, possibly alternating between high spikes and sudden drops in performance. Its frame rate depends on the scenery the GPU has to display. For example, calm scenes in which there isn’t much going on demand less performance than epic, effect-laden boss fights.

When the frame rate of your GPU does not match the frame rate of your monitor, display issues occur: lag, tearing or stuttering when the monitor has to wait for new data or tries to display two different frames as one. To prevent these issues, the GPU and monitor need to be synchronised.

A technology to ensure a stable picture quality is called FreeSync and requires an AMD graphics processor and a FreeSync monitor. NVIDIA’s G-Sync on the other hand depends on the combination of an NVIDIA graphics card and a G-Sync monitor.

With the new generations of NVIDIA graphics cards, it is possible to get the G-Sync features working on specific FreeSync AOC monitors as well. NVIDIA announced a list of certified AOC monitors which are also G-Sync compatible. Even if the AOC product is not on the list, you can still enable G-Sync on any AOC monitor and test the performance.*

Now you should have successfully enabled G-Sync on your AOC FreeSync monitor. The picture quality stays perfect and you can enjoy your gaming session without disruptive image flaws.

* Please refer to NVIDIA website for the complete list of GPUs working with FreeSync monitors and to see which monitors have been officially categorized as G-sync Compatible.

NVIDIA G-SYNC is groundbreaking new display technology that delivers the smoothest and fastest gaming experience ever. G-SYNC’s revolutionary performance is achieved by synchronizing display refresh rates to the GPU in your GeForce GTX-powered PC, eliminating screen tearing and minimizing display stutter and input lag. The result: scenes appear instantly, objects look sharper, and gameplay is super smooth, giving you a stunning visual experience and a serious competitive edge.

Eliminating tearing whilst eliminating input lag, it almost sounds too good to be true. This really is one of those technologies you have to see for yourself.

The GeForce.com team have created a video which you can download that explains and simulates the effects. If you have not had the chance to see G-SYNC in person then this video goes some way to showing you what you are missing.

Tim Sweeney, John Carmack and Johan Andersson

“The biggest leap forward in gaming monitors since we went from standard definition to high-def. If you care about gaming, G-SYNC is going to make a huge difference in the experience.” Tim Sweeney, founder, Epic Games

“Once you play on a G-SYNC capable monitor, you’ll never go back.” John Carmack, architect of id Software’s engine and rocket scientist

“Our games have never looked or played better. G-SYNC just blew me away!” Johan Andersson, DICE’s technical director, and architect of the Frostbite engines

guys i need help ... my alienware monitor aw2518hf started having flickering issues. at first i thought it was the monitor. the problem doesnt happen in games but mostly on browser pages and its totally random. the screen might work perfectly for days and then all of a sudden boom... i cant figure out whats causing it. i changed the display port cable and got a verified VESA DP cable with 19pin because people said that 20pin dp cables shouldnt be used for connecting monitors to pc and things got better but after some days the problem came back just for a shorter amount of time and the flickering was at a smaller degree . when the problem occurred my old second monitor at 60fps which is next to alienware had zero flickering at the same time. i contacted dell and they send me a refurbished screen but the thing is that i have to send back one of the monitors and the problem isnt always happening so i dont know if its a monitor issue or is it a driver gsync issue ?? btw i was using gsync without knowing it right now ive disabled it to see if its gonna happen again but it might take days for the problem to occur again

NOTE: A G-Sync Compatible monitor is an AMD FreeSync monitor that has been validated by Nvidia to provide artifact-free performance when used with selected Nvidia graphics cards. G-Sync Compatible is a trimmed down version of G-Sync. (It does not have features such as ultralow motion blur, overclocking, and variable overdrive.)

NOTE: Using G-Sync with a monitor that is not found in the above list can lead to issues like flickering, blanking, and more. For information about troubleshooting these issues, reference Troubleshooting Flickering Video on Dell Gaming or Alienware Monitors.

NOTE: Ensure that either G-Sync or FreeSync is enabled in the monitor OSD settings. See the User Manual of your Dell monitor to learn how to enable G-Sync or FreeSync on your monitor.

Multiple models of BenQ gaming monitors have official AMD FreeSync implementation. That means FreeSync is guaranteed to work on those monitors, and is especially nice to have in high framerate models that go up to 144Hz. Once more, when we say guaranteed to work we mean with AMD Radeon graphics cards.

But what if you have a GeForce graphics card instead? Here enters the no harm in trying part. Because FreeSync and G-Sync are so similar and so closely related to VESA Adaptive Sync, FreeSync may very well work with your GeForce graphics card. You may not get the more premium aspects (variable refresh rate probably won’t work for HDR gaming content, for example), but the basic screen tearing prevention could operate just fine. You could try turning G-Sync on in the NVIDIA Control Panel and see what happens.

The flip side of this holds true as well. If you have a G-Sync monitor and an AMD graphics card, G-Sync could still function at least on that basic level we mentioned. The hardware and software architecture of the two technologies is so similar, most graphics cards may overlook the difference. On a related note, neither AMD nor NVIDIA are actively blocking each other’s proprietary technologies, so that’s good.

We can say that FreeSync monitors are also “G-Sync possible”. Claiming G-Sync support or compatibility would be going too far. Rather, it would be more correct to explain that G-Sync is very possible on FreeSync displays, and vice versa.

At CES 2019, Nvidia announced that its latest GeForce 417.71 drivers would enable the company’s G-Sync technology to work with monitors designed for AMD FreeSync. That means anyone seeking tear-free gaming without unwanted input lag now has more display options to choose from.

However, if your FreeSync monitor isn’t on the list, that doesn’t necessarily mean it won’t work. Users on Reddit have found that G-Sync can be made to work with many more FreeSync monitors – and that might well include yours. Here’s everything you need to know about getting G-Sync working on a FreeSync monitor.

The first thing you need is a monitor that supports AMD FreeSync or FreeSync 2. If you’re not sure whether yours does, check the manufacturer’s website.

Next, check the onscreen display (OSD) to see if there’s a FreeSync option hidden within the menus; if there is one, turn it on. However, if you don’t find this setting, that doesn’t necessarily mean your monitor doesn’t support FreeSync – some, such as my own Acer XF270HU, don’t display the option in the OSD.

To use G-Sync you need an Nvidia GTX 10-series graphics card or above – and it needs to be plugged into the monitor via a DisplayPort cable, as the G-Sync controller doesn’t support HDMI connections.

Open the Nvidia Control Panel (you can find it by right-clicking on your desktop, or on the GeForce icon in the taskbar). You’ll see a big list of options listed on the left, divided into four groups: 3D Settings, Display, Stereoscopic 3D and Video.

Under Display, you should see a link to “Set up G-Sync”; click this and tick the box labelled “Enable G-Sync”. You’ll want to pick the appropriate mode, too: we prefer to enable G-Sync in full-screen mode only, but it’s up to you. If you’ve got multiple monitors, check you’ve selected the correct one.

Nvidia makes it very easy to confirm that G-Sync is working. In the Display settings of the Nvidia Control Panel you’ll find a tickbox labelled “G-Sync Compatible Indicator”; when this is ticked, a box saying “G-Sync On” appears at the side of any full-screen (or windowed) apps that are using G-Sync.

If you don’t have a convenient G-Sync-enabled game to hand, you can test G-Sync using Nvidia’s free Pendulum Demo. Download and install it, then open it, set the resolution and tick the fullscreen option before launching the demo. You can adjust the sync options using the tickboxes at the top of the screen; if all is well, you should see the G-Sync overlay when G-Sync is enabled.

If your monitor isn’t on Nvidia’s list, it’s not guaranteed to work perfectly with G-Sync. However, if you’re experiencing flickering or a loss of signal, there are a few things you can try.

First, in the Nvidia Control Panel, click on “Manage 3D settings” (near the top of the list), then, in the Global pane, scroll down to find “Monitor technology” and click to set this to “G-Sync Compatible”. Hit Apply and check to see if the problems have been rectified.

If that hasn’t worked, you can try lowering your display’s resolution. Some users have reported that this has fixed problems for them, but you’ll have to decide whether you want to sacrifice detail for smoother gameplay.

Finally, some users on Reddit have found that lowering your FreeSync range using CRU (Custom Resolution Utility) can clear up issues with G-Sync. This sounds quite technical but it’s not too difficult – it simply involves tweaking the range of refresh rates used by the driver. To do this, you’ll need to manually add an “Extension block” within the app, and add the FreeSync option under the “Data blocks”. Extend the range downwards – so, for example, if your monitor supports a FreeSync range of 40Hz-144Hz, try setting it to 30Hz-144Hz.

You might be wondering how well Nvidia’s G-Sync works on a monitor designed for AMD’s competing FreeSync technology. To put it to the test, I spent some time playing Counter-Strike: Global Offensive on the Acer Nitro XV273K – one of the first 12 monitors to officially pass Nvidia’s certification – with both an XFX AMD RX590 Fatboy and an Nvidia GTX 1080.

As one who’s racked up more than 2,000 hours playing competitive CS:GO, I can say with confidence that there was no perceivable difference. You won’t be at a disadvantage when using an Nvidia card on a FreeSync monitor versus those using an AMD GPU and display.

However, it’s worth noting that both FreeSync and G-Sync disable Overdrive on the Acer Nitro XV273K monitor. This makes the monitor respond a tad more slowly than it does with those technologies disabled and Overdrive set to Extreme. Don’t confuse this for input lag, however: G-Sync has no real impact on that. For a more detailed discussion, I’d urge you to read BlurBusters’ analysis of the issue.

Many of the best gaming monitors today come with ‘AMD FreeSync’ technology. AMD likely chose the name ‘FreeSync’ to draw attention to the fact that it’s a free rival to NVIDIA’s G-Sync technology. Both technologies eliminate screen tearing by synchronising your monitor’s refresh rate to your connected GPU’s outputted framerate, but FreeSync doesn’t require any AMD proprietary hardware inside the monitor to work and is in this sense ‘free’.

A few years ago, if you wanted this kind of variable refresh rate (VRR) technology, you had to opt for an expensive G-Sync monitor in combination with one of the best graphics cards from NVIDIA. But then AMD FreeSync hit the market, a tech that uses VESA’s open (i.e., free) Adaptive-Sync standard. In retaliation to AMD’s new free option, NVIDIA gave us ‘G-Sync Compatible’ monitors, which also use Adaptive-Sync.

The result of this muddy history is a market in which there seem to be more VRR technologies than we might know what to do with. Deciding whether FreeSync is worth it comes down to comparing it to its alternatives, and to do that we need to understand what these technologies are and what distinguishes them from one another. But despite how much NVIDIA and AMD seem to want us to think otherwise, these technologies are all fundamentally similar, and the differences between them aren’t too complicated.

This occurs when the game’s framerate, outputted by the GPU to the monitor, doesn’t sync up with the monitor’s refresh rate. If the framerate and refresh rate aren’t synchronised the monitor might start drawing a new frame to the screen before it’s finished drawing the previous one, which leads to the tearing effect.
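To make the mechanism concrete, the sketch below estimates where a tear line would appear if the GPU swapped frames partway through a refresh. It is a deliberately simplified model: it assumes the panel scans rows top to bottom at a constant rate and ignores the blanking interval, and the function name is hypothetical.

```python
def tear_row(swap_time_ms: float, refresh_hz: float, visible_rows: int) -> int:
    """Row at which a tear appears when a new frame arrives mid-scanout.

    Assumptions (simplified): scanout starts at t = 0, proceeds top to bottom
    at constant speed, and takes exactly one refresh period.
    """
    if refresh_hz <= 0 or visible_rows <= 0:
        raise ValueError("refresh_hz and visible_rows must be positive")
    period_ms = 1000.0 / refresh_hz
    # Fraction of the current refresh that had already been drawn at swap time.
    phase = (swap_time_ms % period_ms) / period_ms
    # Rows above this index show the old frame, rows below show the new one.
    return int(phase * visible_rows)


if __name__ == "__main__":
    # A swap 30 ms in, on a 50Hz panel with 1080 rows, lands mid-screen.
    print(tear_row(30.0, 50.0, 1080))
```

A swap that lands exactly on a refresh boundary gives row 0, meaning no visible tear, which is the condition that synchronisation tries to guarantee.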

All VRR tech is designed to combat this problem. It does this by constantly adapting the monitor’s refresh rate to stay synchronised with the GPU’s outputted framerate. (VSync, on the other hand, works the other way around by synchronising and locking the framerate to the monitor’s static refresh rate.)

The difference between different VRR technologies lies in how they attempt to achieve this constant refresh rate synchronisation. A monitor that has Adaptive-Sync capability can have its refresh rate constantly shift to remain synchronised with a game’s frame rate. To use Adaptive-Sync, however, the connected GPU needs to tell the monitor what to do – it needs to tell it what refresh rate it should shift to.

The standards validated by AMD include things such as “very low input lag”, “low flicker”, “low framerate compensation (LFC)” (on FreeSync 2 monitors), HDR support (on FreeSync 2 monitors), and display quality factors such as “luminance, colour space, and monitor performance”. So, while the primary feature of a FreeSync monitor for many gamers is its Adaptive-Sync VRR support, many other qualities of the monitor are checked and validated before it can be given the FreeSync label, meaning a FreeSync monitor has AMD’s stamp of all-round quality.

AMD tells us how to enable FreeSync on all FreeSync monitors when paired with a supported AMD GPU. First, you should ensure that the monitor’s own on-screen display options have AMD FreeSync enabled, anti-blur disabled, and DisplayPort set to 1.2 or higher. Following this, you should open AMD Radeon settings, select Display, and check the AMD FreeSync box to enable it.

Finally, many users have found that limiting your framerate – either in-game or via AMD ‘Chill’ – to a frame or two below your monitor’s maximum refresh rate is the best way to minimise input lag with FreeSync enabled.
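As a rough illustration of that tip, the helper below computes a frame cap slightly under the maximum refresh rate and the frame time it corresponds to. The two-frame margin is simply the upper end of the "frame or two" suggestion above, and the function name is hypothetical.

```python
from typing import Tuple


def frame_cap(max_refresh_hz: int, margin_fps: int = 2) -> Tuple[int, float]:
    """Return (cap in FPS, target frame time in ms) just below the max refresh rate."""
    if margin_fps < 0 or margin_fps >= max_refresh_hz:
        raise ValueError("margin_fps must be between 0 and max_refresh_hz - 1")
    cap = max_refresh_hz - margin_fps
    # Frame time the limiter should aim for at this cap.
    return cap, 1000.0 / cap


if __name__ == "__main__":
    cap, frame_ms = frame_cap(144)
    print(f"Cap at {cap} FPS (about {frame_ms:.2f} ms per frame)")
```

The resulting value is what you would enter in the game's frame limiter or in the driver's frame rate control.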

At first glance, it might seem like there’s an overabundance of VRR options on the market. In fact, in one respect there are only two options: Adaptive-Sync or G-Sync. This is because, as we have seen, the VRR tech that FreeSync uses is Adaptive-Sync, whereas G-Sync uses its own proprietary hardware-enabled VRR tech. Different versions of FreeSync (FreeSync 2 and FreeSync Premium Pro) also rely on Adaptive-Sync, but with different specification standards. Similarly, G-Sync Compatible monitors rely on Adaptive-Sync. At the fundamental level, then, only G-Sync and G-Sync Ultimate monitors use a different VRR technology to Adaptive-Sync.

There are potential benefits to opting for G-Sync over Adaptive-Sync. With NVIDIA’s proprietary scaler module, you’re guaranteed a top quality VRR experience, as has been shown over the years of G-Sync’s implementation. For example, G-Sync’s low framerate compensation (LFC) can extend all the way down to 1Hz (which works via frame duplication), whereas FreeSync monitors often have a higher minimum refresh rate threshold.
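Frame duplication can be sketched as follows. This is a simplified model rather than either vendor's actual algorithm: it assumes each frame is simply repeated a whole number of times until the resulting refresh rate is back inside the panel's supported range. The function name and the example ranges are illustrative.

```python
import math
from typing import Optional, Tuple


def lfc_refresh(fps: float, min_hz: float, max_hz: float) -> Optional[Tuple[int, float]]:
    """Return (repeats per frame, resulting refresh rate), or None if no multiple fits.

    Assumption: the display shows each rendered frame `repeats` times so that
    fps * repeats lands within [min_hz, max_hz].
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps >= min_hz:
        # Already inside (or above) the range: no duplication needed.
        return 1, min(fps, max_hz)
    repeats = math.ceil(min_hz / fps)
    refresh = fps * repeats
    if refresh > max_hz:
        # The range is too narrow for any whole multiple to fit.
        return None
    return repeats, refresh


if __name__ == "__main__":
    print(lfc_refresh(30, 48, 144))  # wide range: frames can be doubled
    print(lfc_refresh(40, 48, 60))   # narrow range: no multiple fits
```

The second call shows why a narrow refresh range matters in this model: doubling 40 FPS would need 80Hz, which a 48Hz to 60Hz panel cannot deliver.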

However, for most use cases – where you won’t be dropping down to 1fps in games, for instance – Adaptive-Sync should give you just as pleasurable of a VRR experience as G-Sync. Both eliminate screen tearing completely within their refresh rate thresholds, and, when configured properly, both keep input delay to an unnoticeable minimum.

Similarly, both FreeSync and G-Sync have high quality specification standards, and the differences in overall standards have levelled out over the years – both AMD’s and NVIDIA’s validation processes are high quality and thorough. This is also true for G-Sync Compatible monitors, which use Adaptive-Sync just like FreeSync instead of a proprietary NVIDIA scaler module.

G-Sync monitors are often a little more expensive thanks to their scaler module, so this is also a factor to consider. But ultimately, whether you opt for a FreeSync, G-Sync, or G-Sync Compatible monitor comes down to two things: first, how does the specific monitor you’re considering compare to others when looking at other factors such as its response time, contrast ratio, and maximum refresh rate; and second, what graphics card do you own?

FreeSync is designed for AMD cards, and G-Sync for NVIDIA ones, and while FreeSync monitors now often work with NVIDIA cards, sticking to the respective GPU brand will be certain to throw up fewer issues and require no workarounds.

Ultimately, though, all modern VRR technologies work well and have high quality specification standards. Because of this, after considering your graphics card’s compatibility, you should primarily focus on whether a monitor offers what you want in terms of resolution, panel type, picture quality, contrast ratio, maximum refresh rate, and response time. If you find a monitor that matches what you want in these respects, whether the monitor is G-Sync, G-Sync Compatible, or FreeSync shouldn’t matter too much providing your graphics card is compatible – all will provide a high quality, tear-free gaming experience with minimal input delay once configured properly.

Take a look at our best gaming monitor recommendations and you'll find pretty much all of them have support for either AMD FreeSync or Nvidia's G-Sync variable refresh rate technology. But what exactly are G-Sync and FreeSync, and why are they so important for gaming? Below, you'll find all the answers to your burning G-Sync and FreeSync questions, including what each one does, how they're different, and which one is best for your monitor and graphics card. I'll also be talking about where Nvidia's G-Sync Compatible standard fits in with all this, as well as what Nvidia's G-Sync Ultimate, AMD's FreeSync Premium and FreeSync Premium Pro specifications bring to the table.

In all their forms, G-Sync and FreeSync are adaptive syncing technologies, meaning they dynamically synchronize your monitor’s refresh rate (how many times the screen updates itself per second) to match the frames-per-second output of your graphics card. This ensures smoothness by preventing screen tearing, which occurs when the monitor attempts to show chunks of several different frames in one image.

Unlike V-Sync, which is a similar technology you'll often find in a game's settings menu, G-Sync and FreeSync don't add input lag, and they don't force your GPU to stick to whatever your monitor's refresh rate is - an approach that causes stuttering with V-Sync. Instead they adjust the refresh rate on the fly.

As such, it's worth using one or the other, but Nvidia and AMD have tweaked their technologies over the years, adding new features and different tiers with varying capabilities. Which is best? It's time to find out.

G-Sync is Nvidia's variable refresh rate technology, and (unsurprisingly) requires an Nvidia graphics card in order to work. That's generally not a problem, unless you were waiting for a lesser-spotted RTX 3080, but an even bigger problem might be how much the best Nvidia G-Sync monitors tend to cost.

You see, whereas FreeSync piggy-backs off a monitor's built-in DisplayPort 1.2 (and, more recently, HDMI) protocols, fully-fledged G-Sync monitors require a proprietary processing chip, which pushes up the price. It's a fixed standard, too, and G-Sync monitors have to undergo over 300 compatibility and image quality tests, according to Nvidia, before they can join the G-Sync club.

This is why you often only find G-Sync on higher-end monitors, as it's simply not cost-effective to include it on cheaper ones. That said, since G-Sync is a fixed standard, you also know exactly what you're getting whenever you buy a G-Sync enabled display: the effectiveness won't be slightly different between monitors, as is the case with FreeSync.

G-Sync Ultimate adds HDR support into the mix. You still get everything described above, but every G-Sync Ultimate screen also gets you the following monitor specifications:

G-Sync Ultimate monitors are much rarer than their standard G-Sync counterparts - naturally, given their higher specs and pricing - but they encompass both regular desktop monitors, such as the Asus ROG Swift PG27UQ and Acer Predator X27, as well as Nvidia's BFGD (or Big Format Gaming Display) screens such as the HP

Ms.Josey
