not supported with g sync lcd panel price

I'm in the process of buying a secondhand Alienware 15 R3 laptop. On my first visit to the seller, I was disappointed to discover that even though the laptop has both an iGPU and a dGPU, there was no way to switch the graphics away from the dGPU (no working MUX switch).

Information on this error message is REALLY sketchy online. Some say that the G-Sync LCD panel is hardwired to the dGPU and that the iGPU is connected to nothing. Some say that the dGPU is connected to the G-Sync LCD through the iGPU. Some say that they got the MUX switch working after a deliberate install order on a clean install: BIOS update, then iGPU drivers, then dGPU drivers.

I suspect that if I connect an external 60Hz IPS monitor to one of the display ports on the laptop and make it the only display, the Fn+F7 key will actually switch the graphics because the display is not a G-Sync LCD panel. Am I right on this?

If I'm right, does that mean that if I purchase this laptop, order a 15-inch Alienware 60Hz IPS screen and swap it with the FHD 120+Hz screen currently inside, I will have MUX switch support and no G-Sync? The price for these screens is not outrageous.

If you want smooth gameplay without screen tearing and you want to experience the high frame rates that your Nvidia graphics card is capable of, Nvidia’s G-Sync adaptive sync tech, which unleashes your card’s best performance, is a feature that you’ll want in your next monitor.

To get this feature, you can spend a lot on a monitor with G-Sync built in, like the high-end $1,999 Acer Predator X27, or you can spend less on a FreeSync monitor that has G-Sync compatibility by way of a software update. (As of this writing, there are 15 monitors that support the upgrade.)

However, there are still hundreds of FreeSync models that will likely never get the feature. According to Nvidia, “not all monitors go through a formal certification process, display panel quality varies, and there may be other issues that prevent gamers from receiving a noticeably improved experience.”

But even if you have an unsupported monitor, it may be possible to turn on G-Sync. You may even have a good experience — at first. I tested G-Sync with two unsupported models, and, unfortunately, the results just weren’t consistent enough to recommend over a supported monitor.

The 32-inch AOC CQ32G1 curved gaming monitor, for example, which is priced at $399, presented no issues when I played Apex Legends and Metro: Exodus, at least at first. Then some flickering started appearing during gameplay, though I hadn’t made any changes to the visual settings. I also tested it with Yakuza 0, which, surprisingly, served up the worst performance, even though it’s the least demanding title that I tested. Whether it was in full-screen or windowed mode, the frame rate was choppy.

Another unsupported monitor, the $550 Asus MG279Q, handled both Metro: Exodus and Forza Horizon 4 without any noticeable issues. (It’s easy to confuse the MG279Q with the Asus MG278Q, which is on Nvidia’s list of supported FreeSync models.) In Nvidia’s G-Sync benchmark, there was significant tearing early on, but, oddly, I couldn’t re-create it.

Before you begin, note that in order to achieve the highest frame rates with or without G-Sync turned on, you’ll need to use a DisplayPort cable. If you’re using a FreeSync monitor, chances are good that it came with one. But if not, they aren’t too expensive.

First, download and install the latest driver for your GPU, either from Nvidia’s website or through the GeForce Experience, Nvidia’s Windows 10 app that can tweak graphics settings on a per-game basis. All of Nvidia’s drivers since mid-January 2019 have included G-Sync support for select FreeSync monitors. Even if you don’t own a supported monitor, you’ll probably be able to toggle G-Sync on once you install the latest driver. Whether it will work well after you do turn the feature on is another question.

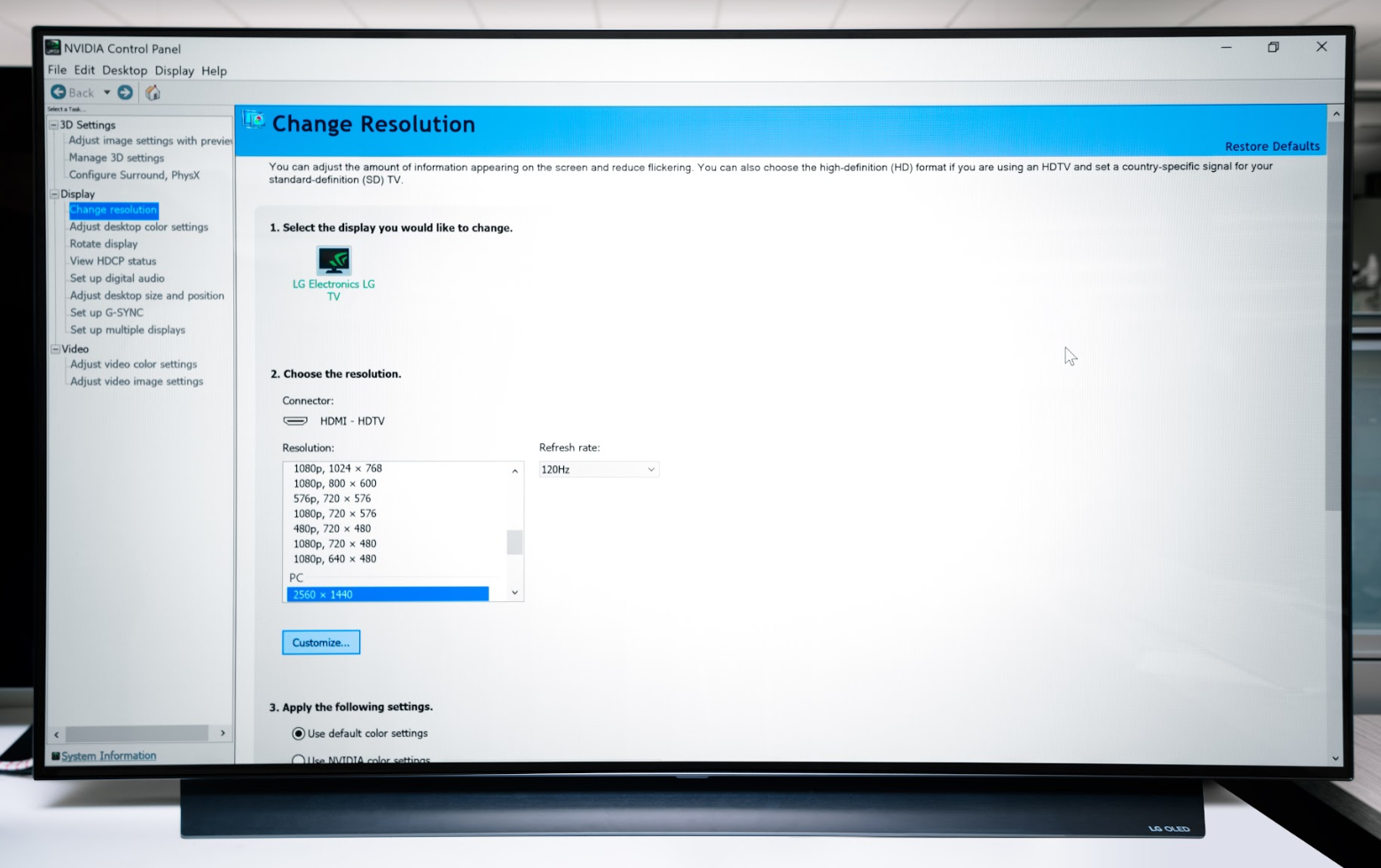

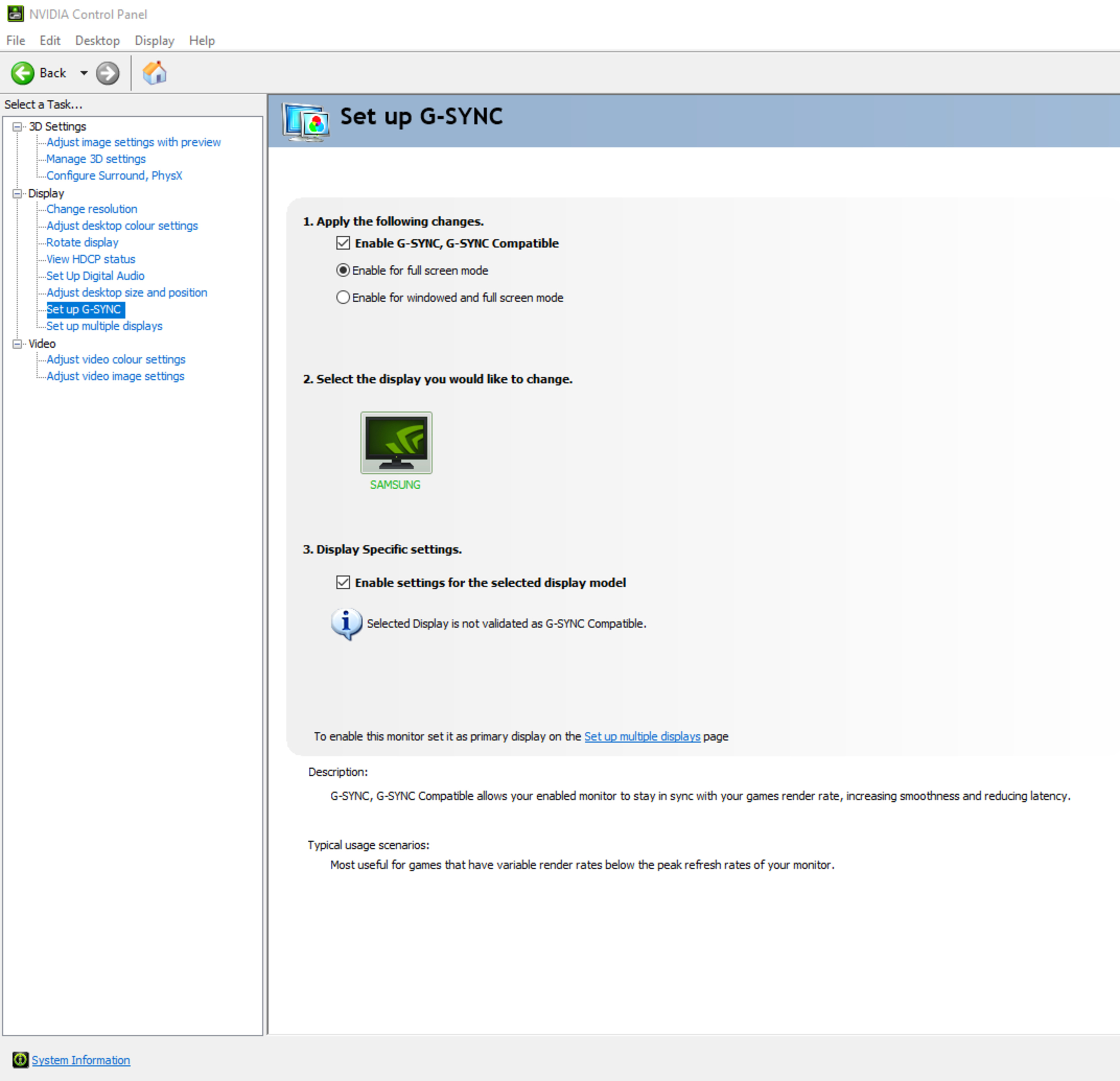

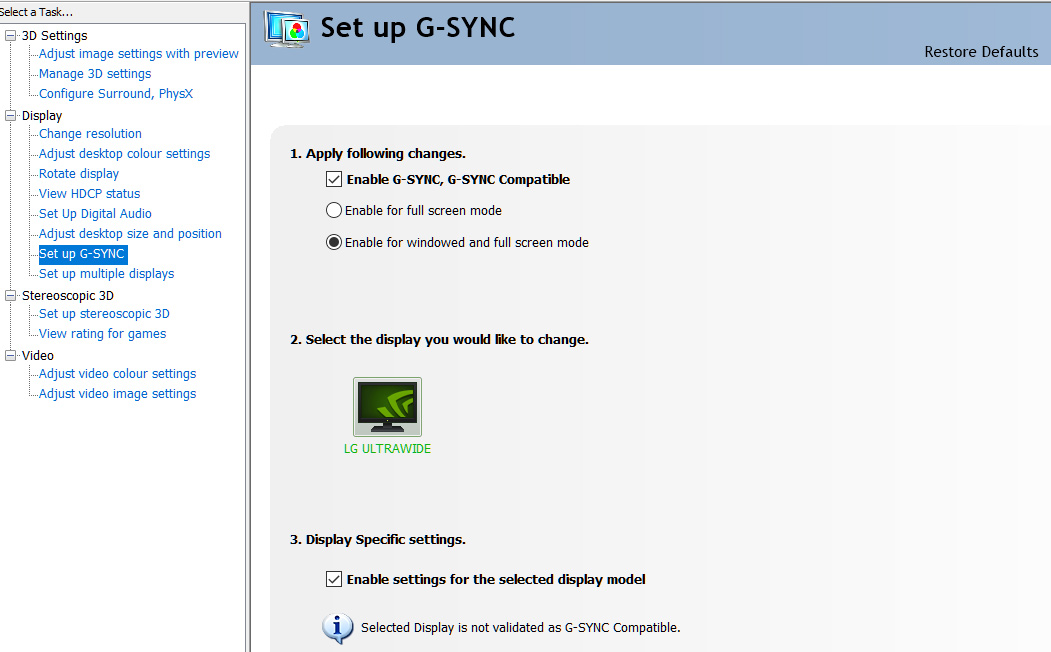

Once the driver is installed, open the Nvidia Control Panel. On the side column, you’ll see a new entry: Set up G-Sync. (If you don’t see this setting, switch on FreeSync using your monitor’s on-screen display. If you still don’t see it, you may be out of luck.)

Check the box that says “Enable G-Sync Compatible,” then click “Apply” to activate the settings. (The settings page will inform you that your monitor is not validated by Nvidia for G-Sync. Since you already know that is the case, don’t worry about it.)

Check that the resolution and refresh rate are set to their max by selecting “Change resolution” on the side column. Adjust the resolution and refresh rate to the highest-possible option (the latter of which is hopefully at least 144Hz if you’ve spent hundreds on your gaming monitor).

Nvidia offers a downloadable G-Sync benchmark, which should quickly let you know if things are working as intended. If G-Sync is active, the animation shouldn’t exhibit any tearing or stuttering. But since you’re using an unsupported monitor, don’t be surprised if you see some iffy results. Next, try out some of your favorite games. If something is wrong, you’ll realize it pretty quickly.

There’s a good resource to check out on Reddit, where its PC community has created a huge list of unsupported FreeSync monitors, documenting each monitor’s pros and cons with G-Sync switched on. These real-world findings are insightful, but what you experience will vary depending on your PC configuration and the games that you play.

Vox Media has affiliate partnerships. These do not influence editorial content, though Vox Media may earn commissions for products purchased via affiliate links. For more information, see our ethics policy.


I got a refurbished Alienware 15 R3 today. When I placed the order, I made sure to look for one that doesn't have a G-Sync display so I can switch off the dedicated GPU to save battery. However, when I got the laptop and tried switching to the internal GPU, it didn't give me the option and only lets me use the dedicated one.

At first I thought that maybe I was sent a laptop with a G-Sync display, but when I checked in Device Manager the display is listed as "Generic PnP display" with no mention of G-Sync. Yet I can't turn off the GPU, and whenever I press Fn+F7 I get the following message: "not supported with g-sync ips display", even though the display is not a G-Sync display.

Make sure the monitor supports Nvidia’s G-Sync technology - a list of supported monitors at the time of this article can be found on Nvidia’s website.

Make sure a DisplayPort cable is being used - G-Sync is only compatible with DisplayPort. It must be a standard DisplayPort cable using no adapters or conversions. HDMI, DVI and VGA are not supported.

Under the Display tab on the left side of the Nvidia Control Panel, choose Set up G-Sync, followed by Enable G-Sync, G-Sync Compatible checkbox. Note: If the monitor has not been validated as G-Sync Compatible, select the box under Display Specific Settings to force G-Sync Compatible mode on. See the warning NOTE at the end of the article before proceeding.

NOTE: If the monitor supports VRR (Variable Refresh Rate) technologies but is not on the list above, use caution before proceeding. It may still work, however there may be issues when using the technology. Known issues include blanking, pulsing, flickering, ghosting and visual artifacts.

It’s difficult to buy a computer monitor, graphics card, or laptop without seeing AMD FreeSync and Nvidia G-Sync branding. Both promise smoother, better gaming, and in some cases both appear on the same display. But what do G-Sync and FreeSync do, exactly – and which is better?

Most AMD FreeSync displays can sync with Nvidia graphics hardware, and most G-Sync Compatible displays can sync with AMD graphics hardware. This is unofficial, however.

The first problem is screen tearing. A display without adaptive sync will refresh at its set refresh rate (usually 60Hz, or 60 refreshes per second) no matter what. If the refresh happens to land between two frames, well, tough luck – you’ll see a bit of both. This is screen tearing.

Screen tearing is ugly and easy to notice, especially in 3D games. To fix it, games started to use a technique called V-Sync that locks the framerate of a game to the refresh rate of a display. This fixes screen tearing but also caps the performance of a game. It can also cause uneven frame pacing in some situations.

Adaptive sync is a better solution. A display with adaptive sync can change its refresh rate in response to how fast your graphics card is pumping out frames. If your GPU sends over 43 frames per second, your monitor displays those 43 frames, rather than forcing 60 refreshes per second. Adaptive sync stops screen tearing by preventing the display from refreshing with partial information from multiple frames but, unlike with V-Sync, each frame is shown immediately.
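To make the 43-frames-on-a-60Hz-display case concrete, here is a minimal Python sketch that counts how many refreshes in one second would show parts of two frames on a fixed-refresh display with V-Sync off. The function name `torn_refreshes` and the timing model are illustrative assumptions: frames are assumed to arrive evenly spaced, and any buffer flip that lands strictly inside a refresh interval is counted as a tear.

```python
from fractions import Fraction
import math


def torn_refreshes(gpu_fps, refresh_hz, seconds=1):
    """Count refresh intervals in which a new frame arrives mid-scanout.

    Simplified model (an assumption for illustration): frames are evenly
    spaced, and a flip strictly inside a refresh interval causes a tear.
    """
    torn = 0
    for k in range(refresh_hz * seconds):
        start = Fraction(k, refresh_hz)
        end = Fraction(k + 1, refresh_hz)
        # Index of the first frame flip that happens strictly after `start`.
        j = math.floor(start * gpu_fps) + 1
        if Fraction(j, gpu_fps) < end:
            torn += 1
    return torn


print(torn_refreshes(43, 60))  # 42: most refreshes mix two frames
print(torn_refreshes(60, 60))  # 0: flips line up with refresh boundaries
```

With adaptive sync the display starts each refresh when a frame arrives, so in this model no flip ever lands in the middle of a scanout.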

Enthusiasts can offer countless arguments over the advantages of AMD FreeSync and Nvidia G-Sync. However, for most people, AMD FreeSync and Nvidia G-Sync both work well and offer a similar experience. In fact, the two standards are far more similar than different.

All variants of AMD FreeSync are built on the VESA Adaptive Sync standard. The same is true of Nvidia’s G-Sync Compatible, which is by far the most common version of G-Sync available today.

VESA Adaptive Sync is an open standard that any company can use to enable adaptive sync between a device and display. It’s used not only by AMD FreeSync and Nvidia G-Sync Compatible monitors but also other displays, such as HDTVs, that support Adaptive Sync.

AMD FreeSync and Nvidia G-Sync Compatible are so similar, in fact, they’re often cross compatible. A large majority of displays I test with support for either AMD FreeSync or Nvidia G-Sync Compatible will work with graphics hardware from the opposite brand.

AMD FreeSync and Nvidia G-Sync Compatible are built on the same open standard. Which leads to an obvious question: if that’s true, what’s the difference?

Nvidia G-Sync Compatible, the most common version of G-Sync today, is based on the VESA Adaptive Sync standard. But Nvidia G-Sync and G-Sync Ultimate, the less common and more premium versions of G-Sync, use proprietary hardware in the display.

This is how all G-Sync displays worked when Nvidia brought the technology to market in 2013. Unlike Nvidia G-Sync Compatible monitors, which often (unofficially) work with AMD Radeon GPUs, G-Sync is unique and proprietary. It only supports adaptive sync with Nvidia graphics hardware.

It’s usually possible to switch sides if you own an AMD FreeSync or Nvidia G-Sync Compatible display. If you buy a G-Sync or G-Sync Ultimate display, however, you’ll have to stick with Nvidia GeForce GPUs. (Here’s our guide to the best graphics cards for PC gaming.)

This loyalty does net some perks. The most important is G-Sync’s support for a wider range of refresh rates. The VESA Adaptive Sync specification has a minimum required refresh rate (usually 48Hz, but sometimes 40Hz). A refresh rate below that can cause dropouts in Adaptive Sync, which may let screen tearing sneak back in or, in a worst-case scenario, cause the display to flicker.

G-Sync and G-Sync Ultimate support the entire refresh range of a panel – even as low as 1Hz. This is important if you play games that may hit lower frame rates, since Adaptive Sync matches the display refresh rate with the output frame rate.

For example, if you’re playing Cyberpunk 2077 at an average of 30 FPS on a 4K display, that implies a refresh rate of 30Hz – which falls outside the range VESA Adaptive Sync supports. AMD FreeSync and Nvidia G-Sync Compatible may struggle with that, but Nvidia G-Sync and G-Sync Ultimate won’t have a problem.

AMD FreeSync Premium and FreeSync Premium Pro have their own technique of dealing with this situation called Low Framerate Compensation. It repeats frames to double the output such that it falls within a display’s supported refresh rate.
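The frame-repeating idea can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not AMD's implementation: the function name `lfc_refresh` and the example 48-144Hz and 40-60Hz ranges are assumptions chosen for the demonstration.

```python
import math


def lfc_refresh(fps, vrr_min_hz, vrr_max_hz):
    """Return (multiplier, refresh_hz) that lifts `fps` into the VRR range.

    Each frame is shown `multiplier` times. Returns None when no whole
    multiple of `fps` fits inside the range (hypothetical helper).
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    # Smallest whole number of repeats that reaches the minimum refresh rate.
    multiplier = max(1, math.ceil(vrr_min_hz / fps))
    refresh_hz = fps * multiplier
    if refresh_hz > vrr_max_hz:
        return None  # the range is too narrow (or fps is above the maximum)
    return multiplier, refresh_hz


print(lfc_refresh(30, 48, 144))  # (2, 60): each frame shown twice
print(lfc_refresh(20, 48, 144))  # (3, 60): each frame shown three times
print(lfc_refresh(35, 40, 60))   # None: 70Hz would exceed the 60Hz maximum
```

The last call shows why a wide refresh range matters: on a narrow 40-60Hz panel, doubling 35 FPS overshoots the maximum, so frame repetition cannot help.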

Other differences boil down to certification and testing. AMD and Nvidia have their own certification programs that displays must pass to claim official compatibility. This is why not all VESA Adaptive Sync displays claim support for AMD FreeSync and Nvidia G-Sync Compatible.

AMD FreeSync and Nvidia G-Sync include mention of HDR in their marketing. AMD FreeSync Premium Pro promises “HDR capabilities and game support.” Nvidia G-Sync Ultimate boasts of “lifelike HDR.”

This is a bunch of nonsense. Neither has anything to do with HDR, though it can be helpful to understand that some level of HDR support is included in those panels. The most common HDR standard, HDR10, is an open standard from the Consumer Technology Association. AMD and Nvidia have no control over it. You don’t need FreeSync or G-Sync to view HDR, either, even on each company’s graphics hardware.

PC gamers interested in HDR should instead look for VESA’s DisplayHDR certification, which provides a more meaningful gauge of a monitor’s HDR capabilities.

Both standards are plug-and-play with officially compatible displays. Your desktop’s video card will detect that the display is certified and turn on AMD FreeSync or Nvidia G-Sync automatically. You may need to activate the respective adaptive sync technology in your monitor settings, however, though that step is a rarity in modern displays.

Displays that support VESA Adaptive Sync, but are not officially supported by your video card, require you to dig into AMD or Nvidia’s driver software and turn on the feature manually. This is a painless process, however – just check the box and save your settings.

AMD FreeSync and Nvidia G-Sync are also available for use with laptop displays. Unsurprisingly, laptops that have a compatible display will be configured to use AMD FreeSync or Nvidia G-Sync from the factory.

A note of caution, however: not all laptops with AMD or Nvidia graphics hardware have a display with Adaptive Sync support. Even some gaming laptops lack this feature. Pay close attention to the specifications.

VESA’s Adaptive Sync is on its way to being the common adaptive sync standard used by the entire display industry. Though not perfect, it’s good enough for most situations, and display companies don’t have to fool around with AMD or Nvidia to support it.

That leaves AMD FreeSync and Nvidia G-Sync searching for a purpose. AMD FreeSync and Nvidia G-Sync Compatible are essentially certification programs that monitor companies can use to slap another badge on a product, though they also ensure out-of-the-box compatibility with supported graphics cards. Nvidia’s G-Sync and G-Sync Ultimate are technically superior, but require proprietary Nvidia hardware that adds to a display’s price. This is why G-Sync and G-Sync Ultimate monitors are becoming less common.

My prediction is this: AMD FreeSync and Nvidia G-Sync will slowly, quietly fade away. AMD and Nvidia will speak of them less and less while displays move towards VESA Adaptive Sync badges instead of AMD and Nvidia logos.

If that happens, it would be good news for the PC. VESA Adaptive Sync has already united AMD FreeSync and Nvidia G-Sync Compatible displays. Eventually, display manufacturers will opt out of AMD and Nvidia branding entirely – leaving VESA Adaptive Sync as the single, open standard. We’ll see how it goes.

I can't really say much other than that I really enjoy this monitor for everything it offers. Not only is the monitor calibrated to an almost perfect level of accuracy, as any manufacturer should do, but it also has zero quality defects (backlight bleed, dead pixels, etc.), something that many manufacturers seem to miss nowadays. In addition, it can be reasonably powered by just a USB 3.0 port on your computer, although you will lose some functionality if you do decide to power it that way: the speakers, and brightness levels above 50 percent. Otherwise it works perfectly well without issue. Currently I am using this monitor with my Inspiron 7559 laptop, since the display that comes with the laptop is mediocre on almost all levels, so this is a huge upgrade all around. I do wish we had the option to adjust response times within the display's OSD menu, which could use a tweak or two. Nevertheless, it's still pretty good all around. Overall I highly recommend this monitor, especially if you have a "gaming laptop" that comes with a mediocre display like mine.

One thing to note when you first obtain this monitor: the display is technically a "TV", at least when viewed in the GPU control panel. This means that you will need to adjust the "color range" setting (limited or full) to get the full range of colors. If you do not adjust this setting, the display may come out very gray looking. Use the Lagom LCD monitor test images website; it should help in adjusting the monitor to the right settings in the GPU control panel.
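As a rough illustration of why a mismatched color range looks gray, the sketch below maps 8-bit limited-range levels (the 16-235 span used for video signals) onto the full 0-255 range. The helper name `limited_to_full` is hypothetical, and the code only demonstrates the arithmetic; it is not what the GPU driver actually runs.

```python
def limited_to_full(level):
    """Map an 8-bit limited-range level (16-235) to full range (0-255)."""
    # Values outside the nominal video range are clamped first.
    clamped = min(max(level, 16), 235)
    # 219 is the width of the limited range (235 - 16).
    return round((clamped - 16) * 255 / 219)


print(limited_to_full(16))   # 0: limited-range black becomes true black
print(limited_to_full(235))  # 255: limited-range white becomes full white
print(limited_to_full(126))  # 128: mid-gray stays near the middle
```

If this expansion is skipped when it should be applied, black is sent as level 16 instead of 0, which is dark gray on a full-range panel. That matches the washed-out look described above.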

Let this review also be a message to display manufacturers of all kinds. Always make sure to calibrate your displays before sending them out into the public AND make sure that the display covers at least 95 percent, if not 99 percent, of sRGB; NOT 60 PERCENT OR LOWER!


NVIDIA GeForce GTX 10 series (Pascal) and higher GPUs support VESA Adaptive-Sync variable refresh rate over the DisplayPort 1.2a standard. If you have a G-SYNC Compatible monitor, VRR features will be enabled automatically, giving you an improved experience in games. If your monitor isn’t listed, you can enable the tech manually from the NVIDIA Control Panel. It may work, it may work partly, or it may not work at all. To give it a try:

9. If the above isn't available, or isn't working, you may need to go to "Manage 3D Settings", click the "Global" tab, scroll down to "Monitor Technology", select "G-SYNC Compatible" in the drop down, and then click "Apply"

NVIDIA GeForce GTX 16 series/RTX 20 series (Turing) and higher will support variable refresh rate over HDMI on displays which support this feature over HDMI 2.1. For the best gaming experience, we recommend a G-SYNC Compatible display. To enable this:

If you don’t own a G-SYNC Compatible TV, but do own a display or TV that only supports variable refresh rates via HDMI 2.1, you can try enabling G-SYNC as detailed above. As these displays and TVs have yet to go through our comprehensive validation process, we can’t guarantee variable refresh rates will work, or work without issues.

There are four different possibilities of results for this test. Each result tells us something different about the monitor, and while native FreeSync monitors can still work with NVIDIA graphics cards, there are a few extra advantages you get with a native G-SYNC monitor too.

No: Some displays simply aren't compatible with NVIDIA's G-SYNC technology as there's screen tearing. This is becoming increasingly rare, as most monitors at least work with G-SYNC.

Compatible (NVIDIA Certified): NVIDIA officially certifies some monitors to work with their G-SYNC compatible program, and you can see the full list of certified monitors here. On certified displays, G-SYNC is automatically enabled when connected to at least a 10-series NVIDIA card over DisplayPort. NVIDIA tests them for compatibility issues and only certifies displays that work perfectly out of the box, but they lack the G-SYNC hardware module found on native G-SYNC monitors.

The simplest way to validate that a display is officially G-SYNC compatible is to check the "Set up G-SYNC" menu from the NVIDIA Control Panel. G-SYNC will automatically be enabled for a certified compatible display, and it'll say "G-SYNC Compatible" under the monitor name. Most of the time, this works only over DisplayPort. With newer GPUs, it's also possible to enable G-SYNC over HDMI with a few monitors and TVs, but these are relatively rare.

Compatible (Tested): Monitors that aren't officially certified but still have the same "Enable G-SYNC, G-SYNC Compatible" setting in the NVIDIA Control Panel get "Compatible (Tested)" instead of "NVIDIA Certified". However, you'll see on the monitor name that there isn't a certification here. There isn't a difference in performance between the two sets of monitors, and there could be different reasons why it isn't certified by NVIDIA, including NVIDIA simply not testing it. As long as the VRR support works over its entire refresh rate range, the monitor works with an NVIDIA graphics card.

Yes (Native): Displays that natively support G-SYNC have a few extra features when paired with an NVIDIA graphics card. They can dynamically adjust their overdrive to match the content, ensuring a consistent gaming experience. Some high refresh rate monitors also support the NVIDIA Reflex Latency Analyzer to measure the latency of your entire setup.

Like with certified G-SYNC compatible monitors, G-SYNC is automatically enabled on Native devices. Instead of listing them as G-SYNC Compatible in the "Set up G-SYNC" page, Native monitors are identified as simply "G-SYNC Capable" below the monitor name. We don't specify if it has a standard G-SYNC certification or G-SYNC Ultimate, as both are considered the same for this testing.

For this test, we ensure G-SYNC is enabled from the NVIDIA Control Panel and use the NVIDIA Pendulum Demo to ensure G-SYNC is working correctly. If we have any doubts, we'll check with a few games to ensure it's working with real content.

If you find the Dell Alienware AW3423DW and the LG 34GP950G-B are too expensive or you don't like the ultrawide format, the market for getting a cheaper G-SYNC monitor is limited. It's easier to find 1080p, 360Hz monitors at a much lower cost than looking for mid-range options, as there aren't many native G-SYNC monitors available with a 1440p resolution and 240Hz refresh rate. Some, like the Dell Alienware AW2721D and the ASUS ROG Swift PG279QM, are impressive gaming monitors, but they're harder to find, so it's easier to get a high refresh rate G-SYNC monitor for gaming.

If you want a G-SYNC monitor on a budget, you'll still have to pay more than budget monitors without native G-SYNC support, as the G-SYNC module comes with a price premium. If that's what you want, the ASUS ROG Swift 360Hz PG259QN is a great gaming option. It has a smaller 25-inch screen and 1080p resolution, but the lower resolution makes it easier for your graphics card to reach its 360Hz max refresh rate, which is ideal if you play games at a high frame rate. You can achieve its max refresh rate with 8-bit signals over DisplayPort connections, but it's limited to 300Hz with 10-bit signals, like in HDR.

Thanks to the fast refresh rate, the response time with 360Hz and 120Hz signals is impressive, as you won't notice any motion blur. It also has a backlight-strobing feature to reduce persistence blur that works within a wide range, but it doesn't work at the same time as VRR, which is normal for most monitors. Luckily, it also has low input lag for a responsive feel while gaming.


If you are a PC gamer, you have probably been advised to turn off G-Sync. That can make G-Sync sound like some sort of virus or malware that harms your PC or gameplay. In fact, G-Sync is an adaptive sync technology that matches your display's refresh rate to your GPU's frame rate to improve what you see on screen.

Then why do people talk about disabling or turning it off? In this article, all the confusion regarding g-sync will be removed. We will learn how to disable g-sync, how to verify the process, the issue with it, and other necessary information and guidelines concerning g-sync.

Whether you are tech-savvy or not, you must have heard about Nvidia. Nvidia Corporation is a famous American multinational technology company. They continuously design advanced graphics processing units (GPU) for both gaming and the professional market.

G-Sync is Nvidia's proprietary adaptive sync technology for gamers and professionals. It is built into supported monitors and TVs and is meant to give you a premium viewing experience.

According to Nvidia, G-Sync eliminates tearing and stuttering, and users of a G-Sync monitor won't experience added input lag either. It supports a range of variable refresh rates (VRR), and its variable overdrive contributes to smooth gameplay.

See how simple that is! Anyone can disable their g-sync option from Nvidia settings by following these steps. It will not take more than 10 seconds to turn off g-sync on your pc. The picture below will help you more to get the idea.

G-Sync does not change your resolution; it synchronizes the display's refresh rate with the GPU. That is thrilling for gamers, but it can also cause trouble: it may limit the frame rate of the game you are playing.

Many competitive gamers do not find G-Sync worth it. In exchange for tear-free output, you may give up uncapped frame rates and the lowest possible input lag.

However, you may have doubts about g-sync being correctly turned off or not. You can verify that as well by taking some measures. Let us show you how to do that by yourself.

From the description of G-Sync, it sounds like an exciting technology. So you may wonder why people suggest disabling it even though it gives you a realistic, stutter-free picture. Let us clear up the confusion.

Because it is a proprietary technology, G-Sync does not work with AMD graphics cards. To take advantage of G-Sync, you have to use a compatible Nvidia graphics card, such as one from the GTX 10 series or the GTX Titan Black.

Besides a compatible GPU, this display technology needs a G-Sync-enabled or Optimus-capable device, and it is tough to find a laptop on the market that offers both technologies. It also calls for a fast, well-equipped system and additional hardware.

You also have to pay extra for smooth G-Sync output, since it requires a powerful graphics card and a G-Sync-compatible monitor. And once you are used to a high-performance PC and an outstanding picture, you may find it hard to go back to other devices.

G-Sync only works up to the monitor's maximum refresh rate, so players commonly cap the frame rate just below it, for example 140 FPS on a 144Hz monitor. This means you cannot use your maximum FPS. Suppose your system can reach 300 FPS: capping it adds around 3-4 ms of input lag. And if you leave the game uncapped on a 144Hz monitor and it runs faster than 144 FPS, G-Sync effectively stops working.
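One way to read the 3-4 ms figure is as the difference in time per frame between the two frame rates. The short Python calculation below shows that arithmetic only; it is an assumed interpretation, and real input lag also depends on the game engine, driver, and display.

```python
def frame_time_ms(fps):
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


capped = frame_time_ms(140)    # frame rate capped just below 144Hz
uncapped = frame_time_ms(300)  # example uncapped frame rate from the text
extra = capped - uncapped

print(f"{capped:.2f} ms vs {uncapped:.2f} ms -> {extra:.2f} ms longer per frame")
```

Each frame at 140 FPS takes about 3.8 ms longer to arrive than at 300 FPS, which lands inside the quoted range.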

So it can be said that G-Sync is for intense gamers rather than casual users. It is more of a luxury for a better viewing experience. That is why most users suggest turning it off.

G-Sync is mainly here to improve what you see on screen. If you want tear-free images, a realistic view, and smooth motion, then G-Sync is for you. But since you are reading this article, you are probably thinking about turning it off.

By turning off G-Sync, you can be sure that you are not missing out on any significant advantage except a slightly smoother picture. Your PC and GPU will run as they usually do at the monitor's fixed refresh rate. They will simply no longer sync the refresh rate to the frame rate, and that is all.

It means you will not get the smoothness benefits of G-Sync. Disabling it has no other side effects. Your gameplay experience will be like that on any other monitor without adaptive sync.

What if you change your mind about turning off G-Sync? Turning it back on is as simple as turning it off. Just follow the steps:

This is how you can enable the G-Sync option again. You also get to choose where G-Sync is enabled: for full-screen mode only, or for both windowed and full-screen mode.

Remember that if you have an official G-Sync monitor, G-Sync should be on by default. If you are not using a monitor like that, you need to turn on G-Sync yourself.

FreeSync is AMD's adaptive sync technology, built on VESA's Adaptive-Sync standard. It helps the monitor remove screen stuttering and tearing, but you need a monitor with a variable refresh rate and a compatible graphics card.

If you do not have a compatible graphics card, you are better off turning FreeSync off. Without a sufficiently low minimum refresh rate, FreeSync does not work properly at low frame rates and can create input lag.

G-Sync is worth it if you can afford an Nvidia GPU. Also, if you are looking for a high-end monitor with a high refresh rate, G-Sync-compatible monitors are a good fit. With G-Sync support, you will get smooth gameplay and visuals.

You should turn off G-Sync if your PC does not have a high-end GPU. It is also expensive, can spoil you for other displays, and requires other costly, fast hardware that affordable computers rarely have.

Call of Duty is optimized for the highest possible FPS, which is where G-Sync helps least. When the monitor's refresh rate and the game's frame rate try to sync at very high frame rates, input lag and screen tearing might occur. This can hurt performance.

So, is G-Sync good for Valorant? The short answer is yes! G-Sync can definitely help improve your gaming experience in Valorant. Here’s a more detailed look at how G-Sync can help you in Valorant:

Screen tearing can be a major issue when playing fast-paced games like Valorant. If you’ve ever experienced screen tearing, you know how annoying it can be. Thankfully, G-Sync can help eliminate screen tearing by syncing the frame rate of your monitor with the graphics card.

Another benefit of G-Sync is improved gaming performance. If you’re looking for a competitive edge in Valorant, G-Sync can definitely give you that extra boost you need. G-Sync can help reduce input lag and make your games run smoother overall.

In addition to improved performance, G-Sync also provides better visuals. This is because G-Sync eliminates screen tearing and stuttering, which can lead to a better visual experience overall.

No, it does not. Turning off g-sync means you will not have excellent picture quality. It does not affect your regular GPU performance at all. Your pc will be running fine like other variable refresh rate monitors. However, You can turn it off manually without any trouble.

FreeSync can be an alternative to g-sync. It is compatible with an AMD graphics card and AMD GPU. It is a cheaper version for AMD users to have a good graphics experience in the FreeSync monitor. It is open-source at a lower cost for the consumer without any special license or chip.

It can be said that g-sync is undoubtedly an advanced technology for the current user of multiple monitors to give you an outstanding visual experience. Questions regarding turning it off arise when one finds that it is nothing but a luxury to the casual users.

This article should be enough to learn how to disable G-Sync. Along the way, you also got to know what G-Sync is and found answers to common questions about it. We hope our guide helps you turn G-Sync off and on manually without any trouble.

When buying a gaming monitor, it’s important to compare G-Sync vs FreeSync. Both technologies improve monitor performance by matching the performance of the screen with the graphics card. And there are clear advantages and disadvantages of each: G-Sync offers premium performance at a higher price while FreeSync is prone to certain screen artifacts like ghosting.

So G-Sync versus FreeSync? Ultimately, it’s up to you to decide which is the best for you (with the help of our guide below).

In the past, monitor manufacturers relied on the V-Sync standard to ensure consumers and business professionals could use their displays without issues when connected to high-performance computers. As technology became faster, however, new standards were developed, the two main ones being G-Sync and FreeSync.

V-Sync, short for vertical synchronization, is a display technology that was originally designed to help monitor manufacturers prevent screen tearing. This occurs when two different “screens” crash into each other because the monitor’s refresh rate can’t keep pace with the data being sent from the graphics card. The distortion is easy to spot as it causes a cut or misalignment to appear in the image.

This often comes in handy in gaming. For example, GamingScan reports that the average computer game operates at 60 FPS. Many high-end games operate at 120 FPS or greater, which requires the monitor to have a refresh rate of 120Hz to 165Hz. If the game is run on a monitor with a refresh rate that’s less than 120Hz, performance issues arise.

V-Sync eliminates these issues by imposing a strict cap on the frames per second (FPS) reached by an application. In essence, graphics cards could recognize the refresh rates of the monitor(s) used by a device and then adjust image processing speeds based on that information.
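The cap described above can be sketched in a few lines. This is a simplified model, not how any particular driver implements it: it assumes classic double-buffered V-Sync and a constant render time per frame, and the function name `vsync_fps` is made up for illustration. Under those assumptions a finished frame has to wait for the next refresh, so the displayed rate snaps to the refresh rate divided by a whole number.

```python
import math


def vsync_fps(render_fps: float, refresh_hz: float) -> float:
    """Displayed frame rate under double-buffered V-Sync (simplified model).

    Each frame occupies a whole number of refresh intervals, so the
    displayed rate is refresh_hz / n for the smallest n that gives the
    GPU enough time to finish a frame.
    """
    if render_fps <= 0 or refresh_hz <= 0:
        raise ValueError("rates must be positive")
    # Number of refresh intervals each frame stays on screen.
    intervals_per_frame = math.ceil(refresh_hz / render_fps)
    return refresh_hz / intervals_per_frame


if __name__ == "__main__":
    for fps in (200, 60, 50):
        print(f"GPU renders {fps} FPS on a 60Hz display: {vsync_fps(fps, 60):.0f} FPS shown")
```

In this model a GPU that could render 200 FPS is held to 60 FPS on a 60Hz display, while one that manages only 50 FPS drops to 30 FPS, which is one way the stutter associated with V-Sync can arise.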

Although V-Sync technology is commonly used when users are playing modern video games, it also works well with legacy games. The reason for this is that V-Sync slows down the frame rate output from the graphics cards to match the legacy standards.

Despite its effectiveness at eliminating screen tearing, it often causes issues such as screen “stuttering” and input lag. The former is a scenario where the time between frames varies noticeably, leading to choppiness in image appearances.

V-Sync is only useful when the graphics card outputs video at a high FPS rate and the display only supports a 60Hz refresh rate (which is common in legacy equipment and non-gaming displays). V-Sync enables the display to limit the output of the graphics card, to ensure both devices are operating in sync.

Although the technology works well with low-end devices, V-Sync degrades the performance of high-end graphics cards. That’s the reason display manufacturers have begun releasing gaming monitors with refresh rates of 144Hz, 165Hz, and even 240Hz.

While V-Sync worked well with legacy monitors, it often prevents modern graphics cards from operating at peak performance. For example, gaming monitors often have a refresh rate of at least 100Hz. If the graphics card outputs content at low speeds (e.g. 60Hz), V-Sync would prevent the graphics card from operating at peak performance.

Since the creation of V-Sync, other technologies such as G-Sync and FreeSync have emerged to not only fix display performance issues, but also to enhance image elements such as screen resolution, image colors, or brightness levels.

Released to the public in 2013, G-Sync is a technology developed by NVIDIA that synchronizes a user’s display to a device’s graphics card output, leading to smoother performance, especially with gaming. G-Sync has gained popularity in the electronics space because monitor refresh rates are often higher than the rate at which the GPU can output frames, and that mismatch results in significant performance issues.

For example, if a graphics card is pushing 50 frames per second (FPS), the display would then switch its refresh rate to 50 Hz. If the FPS count decreases to 40, then the display adjusts to 40 Hz. The typical effective range of G-Sync technology is 30 Hz up to the maximum refresh rate of the display.
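The example above amounts to clamping the panel's refresh rate to the GPU's frame rate within the supported range. A minimal sketch, assuming a 30 Hz lower bound as stated in the text and a hypothetical 144 Hz panel (the function name and the 144 Hz default are illustrative only):

```python
def panel_refresh_hz(gpu_fps: float, min_hz: float = 30.0, max_hz: float = 144.0) -> float:
    """Refresh rate an adaptive-sync panel would run at for a given GPU frame rate.

    Inside the supported range the panel simply follows the GPU. Outside it,
    this sketch clamps to the nearest bound; real hardware may handle very
    low frame rates differently (for example by repeating frames).
    """
    return max(min_hz, min(gpu_fps, max_hz))


if __name__ == "__main__":
    for fps in (50, 40, 200, 20):
        print(f"{fps} FPS -> panel runs at {panel_refresh_hz(fps):.0f} Hz")
```

With these defaults, 50 FPS and 40 FPS map to 50 Hz and 40 Hz as in the text, while 200 FPS is limited to the panel's 144 Hz maximum.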

The most notable benefit of G-Sync technology is the elimination of screen tearing and other common display issues associated with V-Sync equipment. G-Sync equipment does this by manipulating the monitor’s vertical blanking interval (VBI).

VBI represents the interval between the time when a monitor finishes drawing a current frame and moves onto the next one. When G-Sync is enabled, the graphics card recognizes the gap, and holds off on sending more information, therefore preventing frame issues.

To keep pace with changes in technology, NVIDIA developed a newer version of G-Sync, called G-Sync Ultimate. This new standard is a more advanced version of G-Sync. The core features that set it apart from G-Sync equipment are the built-in R3 module, high dynamic range (HDR) support, and the ability to display 4K quality images at 144Hz.

Although G-Sync delivers exceptional performance across the board, its primary disadvantage is the price. To take full advantage of native G-Sync technologies, users need to purchase a G-Sync-equipped monitor and graphics card. This two-part equipment requirement limited the number of G-Sync devices consumers could choose from. It’s also worth noting that these monitors require the graphics card to support DisplayPort connectivity.

While native G-Sync equipment will likely carry a premium, for the time being, budget-conscious businesses and consumers still can use G-Sync Compatible equipment for an upgraded viewing experience.

Released in 2015, FreeSync is a standard developed by AMD that, similar to G-Sync, is an adaptive synchronization technology for liquid-crystal displays. It’s intended to reduce screen tearing and stuttering triggered by the monitor not being in sync with the content frame rate.

Since this technology uses the Adaptive Sync standard built into the DisplayPort 1.2a standard, any monitor equipped with this input can be compatible with FreeSync technology. With that in mind, FreeSync is not compatible with legacy connections such as VGA and DVI.

The “free” in FreeSync comes from the standard being open, meaning other manufacturers are able to incorporate it into their equipment without paying royalties to AMD. This means many FreeSync devices on the market cost less than similar G-Sync-equipped devices.

As FreeSync is a standard developed by AMD, most of their modern graphics processing units support the technology. A variety of other electronics manufacturers also support the technology, and with the right knowledge, you can even get FreeSync to work on NVIDIA equipment.

Although FreeSync is a significant improvement over the V-Sync standard, it isn’t a perfect technology. The most notable drawback of FreeSync is ghosting. This is when an object leaves behind a bit of its previous image position, causing a shadow-like image to appear.

The primary cause of ghosting in FreeSync devices is imprecise power management. If enough power isn’t applied to the pixels, images show gaps due to slow movement. On the other hand when too much power is applied, then ghosting occurs.

To overcome those limitations, in 2017 AMD released an enhanced version of FreeSync known as FreeSync 2 HDR. Monitors that meet this standard are required to have HDR support; low framerate compensation capabilities (LFC); and the ability to toggle between standard definition range (SDR) and high dynamic range (HDR) support.

A key difference between FreeSync and FreeSync 2 devices is that with the latter technology, if the frame rate falls below the supported range of the monitor, low framerate compensation (LFC) is automatically enabled to prevent stuttering and tearing.
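One common way to think about low framerate compensation is frame repetition: when the frame rate drops below the panel's minimum, each frame is shown a whole number of times so the effective refresh rate lands back inside the supported range. The sketch below illustrates that idea only. It is not AMD's actual algorithm, and the function name and the 48 to 144 Hz range are assumptions chosen for the example.

```python
import math


def lfc_refresh(fps: float, min_hz: float, max_hz: float) -> tuple[int, float]:
    """Return (repeat_count, effective_refresh_hz) for a given frame rate.

    Assumes max_hz is at least twice min_hz, so that a whole-number
    multiple of any low frame rate fits inside the supported range.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps >= min_hz:
        # Inside (or above) the range: no repetition needed.
        return 1, min(fps, max_hz)
    # Smallest whole number of repeats that lifts the rate to min_hz or above.
    repeats = math.ceil(min_hz / fps)
    return repeats, fps * repeats


if __name__ == "__main__":
    for fps in (100, 30, 20):
        repeats, hz = lfc_refresh(fps, min_hz=48, max_hz=144)
        print(f"{fps} FPS -> each frame shown {repeats}x, panel at {hz} Hz")
```

On the hypothetical 48 to 144 Hz panel, 20 FPS would be shown with each frame repeated three times, giving an effective 60 Hz refresh.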

As FreeSync is an open standard – and has been that way since day one – people shopping for FreeSync monitors have a wider selection than those looking for native G-Sync displays.

If performance and image quality are your top priority when choosing a monitor, then G-Sync and FreeSync equipment come in a variety of offerings to fit virtually any need. The primary difference between the two standards is levels of input lag or tearing.

If you want low input lag and don’t mind tearing, then the FreeSync standard is a good fit for you. On the other hand, if you’re looking for smooth motions without tearing, and are okay with minor input lag, then G-Sync equipped monitors are a better choice.

For the average individual or business professional, G-Sync and FreeSync both deliver exceptional quality. If cost isn’t a concern and you absolutely need top of the line graphics support, then G-Sync is the overall winner.


The best G-Sync monitors make for a silky smooth gaming experience. This is because a G-Sync monitor will synchronize the frame rate to the output of your graphics card. The end result is a tear-free experience. This is just as great for high frame rates as it is for sub-60fps too, so you're covered for whatever games you love to play.

But what is G-Sync tech? For the uninitiated, G-Sync is Nvidia's name for its frame synchronization technology. It makes use of dedicated silicon in the monitor so it can match your GPU's output to your gaming monitor's refresh rate, for the smoothest gaming experience. It removes a whole load of guesswork in getting the display settings right, especially if you have an older GPU. The catch is that the tech only works with Nvidia GPUs.

G-Sync Ready or G-Sync Compatible monitors can be found, too. They're often cheaper, but the monitors themselves don't have dedicated G-Sync silicon inside them. You can still use G-Sync, but for best results, you want a screen that's certified by Nvidia.

Here's where things might get a little complicated: G-Sync features do work with AMD's adaptive FreeSync tech monitors, but not the other way around. If you have an AMD graphics card, you'll for sure want to check out the best FreeSync monitors along with checking our overall best gaming monitors for any budget.

Brand new gaming monitor technology comes at a premium, and the Asus ROG Swift PG32UQX proves that point. As the world's first Mini-LED gaming monitor, it sets a precedent for both performance and price, delivering extremely impressive specs for an extreme price tag.

The PG32UQX is easily one of the best panels I've used to date. The colors are punchy yet accurate and that insane brightness earns the PG32UQX the auspicious DisplayHDR 1400 certification. However, since these are LED zones and not self-lit pixels like an OLED, you won't get those insane blacks for infinite contrast.

Mini-LED monitors do offer full-array local dimming (FALD) for precise backlight control, though. What that means for the picture we see is extreme contrast from impressive blacks to extremely bright DisplayHDR 1400 spec.

Beyond brightness, you can also expect color range to boast about. The colors burst with life and the dark hides ominous foes for you to slay in your quest for the newest loot.

Of course, at 4K you'll need the equivalent of one of the best gaming PCs to get 144fps. I did get Doom Eternal to cross the 144Hz barrier in 4K HDR using an RTX 3080 and boy was it marvelous.

That rapid 144Hz refresh rate is accompanied by HDMI 2.0 and DisplayPort 1.4 ports. Two USB 3.1 ports join the action, with a further USB 2.0 port sitting on the top of the monitor to connect your webcam.

As for its G-Sync credentials, the ROG Swift delivers G-Sync Ultimate, which is everything a dedicated G-Sync chip can offer in terms of silky smooth performance and support for HDR. So if you want to brag with the best G-Sync gaming monitor around, this is the way to do it. However, scroll on for some more realistic recommendations in terms of price.

OLED has truly arrived on PC, and in ultrawide format no less. Alienware's 34 QD-OLED is one of very few gaming monitors to receive such a stellar score from us, and it's no surprise. Dell has nailed the OLED panel in this screen and it's absolutely gorgeous for PC gaming. Although this monitor isn’t perfect, it is dramatically better than any LCD-based monitor by several gaming-critical metrics. And it’s a genuine thrill to use.

What that 34-inch, 21:9 panel can deliver in either of its HDR modes—HDR 400 True Black or HDR Peak 1000—is nothing short of exceptional. The 3440 x 1440 native resolution image it produces across that gentle 1800R curve is punchy and vibrant. With 99.3% coverage of the demanding DCI-P3 colour space, and fully 1,000 nits brightness, it makes a good go, though that brightness level can only be achieved on a small portion of the panel.

Still, there’s so much depth, saturation and clarity to the in-game image thanks to that per-pixel lighting, but this OLED screen needs to be in HDR mode to do its thing. And that applies to SDR content, too. HDR Peak 1000 mode enables that maximum 1,000 nit performance in small areas of the panel but actually looks less vibrant and punchy most of the time.

HDR 400 True Black mode generally gives the best results. After you jump into the Windows Display Settings menu and crank the SDR brightness up, it looks much more zingy.

Burn-in is the great fear and that leads to a few quirks. For starters, you’ll occasionally notice the entire image shifting by a pixel or two. The panel is actually overprovisioned with pixels by about 20 in both axes, providing plenty of leeway. It’s a little like the overprovisioning of memory cells in an SSD and it allows Alienware to prevent static elements from “burning” into the display over time.

Latency is also traditionally a weak point for OLED, and while we didn’t sense any subjective issue with this 175Hz monitor, there’s little doubt that if your gaming fun and success hinges on having the lowest possible latency, there are faster screens available. You can only achieve the full 175Hz with the single DisplayPort input, too.

The Alienware 34 QD-OLED's response time is absurdly quick at 0.1ms, and it cruised through our monitor testing suite. You really notice that speed in-game, too.

There's no HDMI 2.1 on this panel, however. So it's probably not the best fit for console gaming as a result. But this is PC Gamer, and if you're going to hook your PC up to a high-end gaming monitor, we recommend it be this one.

4K gaming is a premium endeavor. You need a colossal amount of rendering power to hit decent frame rates at such a high resolution. But if you're rocking a top-shelf graphics card, like an RTX 3080, RTX 3090, or RX 6800 XT then this dream can be a reality, at last. While the LG 27GN950-B is a fantastic gaming panel, it's also infuriatingly flawed.

The LG UltraGear is the first 4K, Nano IPS, gaming monitor with 1ms response times, that'll properly show off your superpowered GPU. Coming in with Nvidia G-Sync and AMD’s FreeSync adaptive refresh compatibility, this slick slim-bezel design even offers LG’s Sphere Lighting 2.0 RGB visual theatrics.

And combined with the crazy-sharp detail that comes with the 4K pixel grid, that buttery smooth 144Hz is pretty special.

It does suffer from a little characteristic IPS glow, which appears mostly at the screen extremities when you’re spying darker game scenes, but isn't an issue most of the time. The HDR is a little disappointing as, frankly, 16 edge-lit local dimming zones do not a true HDR panel make.

What is most impressive, however, is the Nano IPS tech that offers a wider color gamut and stellar viewing angles. And the color fidelity of the NanoIPS panel is outstanding.

The LG UltraGear 27GN950-B bags you a terrific panel with exquisite IPS image quality. Despite the lesser HDR capabilities, it also nets beautiful colors and contrast for your games too. G-Sync offers stable pictures and smoothness, and the speedy refresh rate and response times back this up too.

The MSI Optix MPG321UR is kitted out for high-speed 4K gaming, and it absolutely delivers. Although this monitor doesn’t have a physical G-Sync chip despite the price point, it is officially certified and has been tested by Nvidia to hit the necessary standards for G-Sync compatibility. It also offers FreeSync Premium Pro certification, as well as DCI-P3 RGB color space and sRGB.

That makes this a versatile piece of kit, and that 3840 x 2160 resolution is enough to prevent any pixelation across this generous, 32-inch screen. The 16:9 panel doesn't curve, but does offer a professional-level, sub 1ms grey-to-grey (GTG) response rate.

Sadly, there's been no effort to build in any custom variable overdrive features, so you should expect artifacts on fast-moving objects.

Still, the MSI Optix MPG321UR does come with a 600nit peak brightness, and Vesa HDR 600 certification, alongside 97% DCI-P3 colour reproduction capabilities. All this goes toward an amazingly vibrant screen that's almost accurate enough to be used for professional colour grading purposes.

The Optix is one of MSI's more recent flagship models, so you know you're getting serious quality and performance. Its panel looks gorgeous, even at high speeds, managing a 1ms GTG response time.

Though MSI's Optix is missing a physical G-Sync chip, it'll still run nicely with any modern Nvidia GPU, or AMD card if you happen to have one of those lying around.

The Xeneon is Corsair's attempt at breaking into the gaming monitor market. To do that, the company has opted for 32 inches of IPS panel at 1440p resolution. Once again we're looking at a FreeSync Premium monitor that has been certified to work with GeForce cards by Nvidia.

It pretty much nails the sweetspot for real-world gaming, what with 4K generating such immense levels of GPU load and ultrawide monitors coming with their own set of limitations.

The 2,560 by 1,440 pixel native resolution combined with the 32-inch 16:9 aspect panel proportions translate into sub-100DPI pixel density. That’s not necessarily a major problem in-game. But it does make for chunky pixels in a broader computing context.
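The pixel density figure can be checked with a quick calculation: divide the diagonal resolution in pixels by the diagonal size in inches. The helper below is a small illustrative sketch (the function name is made up), using the 2,560 by 1,440 resolution and 32-inch diagonal quoted above.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density from the resolution and the diagonal screen size."""
    # Length of the diagonal measured in pixels (Pythagoras).
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in


if __name__ == "__main__":
    ppi = pixels_per_inch(2560, 1440, 32)
    print(f"32-inch 1440p panel: about {ppi:.1f} pixels per inch")
```

For this panel the result comes out at roughly 92 pixels per inch, consistent with the "sub-100" description.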

Here, you're looking at a swanky cast aluminum stand, which adjusts for height, tilt, and swivel, and is a definite cut above the norm for build quality. The OSD menu UI is clearer and more logical than many, too, and those unusually high levels of polish and refinement extend yet further.

That sub-3ms response, combined with a 165Hz refresh, means the thing isn't a slouch when it comes to gaming capability, though there are certainly more impressive gaming monitors out there.

The two HDMI 2.0 sockets are limited to 144Hz, and the DisplayPort 1.4 interface is predictable enough. But the USB Type-C with power delivery for a single cable connection with charging to a laptop is a nice extra. Or, at least, it would be if the charging power wasn’t limited to a mere 15W, which is barely enough for something like a MacBook Air, let alone a gaming laptop.

The core image quality is certainly good, though. It's punchy, vibrant, and well-calibrated. And while it's quite pricey for a 1440p model, it delivers all it sets out to with aplomb. On the whole, the Corsair Xeneon 32QHD165 doesn’t truly excel at anything, but it's still a worthy consideration in 2022.

Housing Nvidia’s tech alongside a 4K resolution and HDR tech means that this is an absolute beast of a monitor that will give you the best of, well, everything. And by everything, we mean everything.

The XB273K’s gaming pedigree is obvious the second you unbox it: it is a 27-inch, G-Sync compatible, IPS screen, that boasts a 4ms gray-to-gray response rate, and a 144Hz refresh rate. While that may not sound like a heck of a lot compared to some of today's monitors, it also means you can bag it for a little less.

Assassin’s Creed Odyssey looked glorious. This monitor gave up an incredibly vivid showing, and has the crispest of image qualities to boot; no blurred or smudged edges to see and each feature looks almost perfectly defined and graphically identified.

Particular highlights are the way water effects, lighting, reflections and sheens are presented, but there is equal enjoyment to be had from landscape features, the people, and urban elements. All further benefiting from a widespread excellence in color, contrast, shades (and shadows), and tones.

The contrasts are particularly strong with any colors punching through the greys and blacks. However, the smaller details here are equally good, down to clothing detail, skin tone and complexion, and facial expressions once again. There is an immersion-heightening quality to the blacks and grays of the Metro and those games certainly don’t feel five years old on the XB273K.

The buttons to access the menu are easy enough to use, and the main stick makes it particularly simple to navigate. And the ports you have available increase your ability to either plug and go or adapt to your machines’ needs: HDMI, DisplayPort, and five USB 3.0 ports are at your service.

The Predator XB273K is one for those who want everything now and want to future-proof themselves in the years ahead. It might not have the same HDR heights that its predecessor, the X27, had, but it offers everything else for a much-reduced price tag. Therefore, the value it provides is incredible, even if it is still a rather sizeable investment.

The best just got a whole lot better. That’s surely a foregone conclusion for the new Samsung Odyssey Neo G9. After all, the original Odyssey G9 was already Samsung’s tip-top gaming monitor. Now it’s been given the one upgrade it really needed. Yup, the Neo G9 is packing a mini-LED backlight.

Out of the box, it looks identical to the old G9. Deep inside, however, the original G9’s single most obvious shortcoming has been addressed. And then some. The Neo G9 still has a fantastic VA panel. But its new backlight doesn’t just have full-array rather than edge-lit dimming.

It packs a cutting-edge mini-LED tech with no fewer than 2,048 zones. This thing is several orders of magnitude more sophisticated than before. As if that wasn’t enough, the Neo G9’s peak brightness has doubled to a retina-wrecking 2,000 nits. What a beast.

The problem with any backlight-based rather than per-pixel local dimming technology is that compromises have to be made. Put another way, an algorithm has to decide how bright any given zone should be based on the image data. The results are never going to be perfect.

Visible halos around small, bright objects are the sort of issue you expect from full-array dimming. But the Neo G9 has its own, surprisingly crude, backlight-induced image quality issues. Admittedly, they’re most visible on the Windows desktop rather than in-game or watching video.

If you position a bright white window next to an all-black window, the adjacent edge of the former visibly dims. Or let’s say you move a small, bright object over a dark background. The same thing happens. The small, bright object dims. Even uglier, if something like a bright dialogue box pops up across the divide between light and dark elements, the result is a gradient of brightness across the box.

All this applies to both SDR and HDR modes and, on the Windows desktop, it’s all rather messy and distracting. Sure, this monitor isn’t designed for serious content creation or office work. But at this price point, it’s surely a serious flaw.

Still, that 1000R curve, huge 49-inch proportions, and relatively high resolution combine to deliver an experience that few, if any, screens can match. Graphics-heavy titles such as Cyberpunk 2077 or Witcher III are what the G9 does best. In that context, the Samsung Odyssey Neo G9 delivers arguably the best visual experience on the PC today.

In practice, the Neo G9’s mini-LED creates as many problems as it solves. We also can’t help but observe that, at this price point, you have so many options. The most obvious alternative, perhaps, is a large-format 120Hz OLED TV with HDMI 2.1 connectivity.

G-Sync gaming monitor FAQ

What is the difference between G-Sync and G-Sync Compatible?

G-Sync and G-Sync Ultimate monitors come with a bespoke G-Sync processor, which enables a full variable refresh rate range and variable overdrive. G-Sync Compatible monitors don't come with this chip, and that means they may have a more restricted variable refresh rate range.

Fundamentally, though, all G-Sync capable monitors offer a smoother gaming experience than those without any frame-syncing tech.

Should I go for a FreeSync or G-Sync monitor?

In general, FreeSync monitors will be cheaper. It used to be the case that they would only work in combination with an AMD GPU. The same went for G-Sync monitors and Nvidia GPUs. Nowadays, though, it is possible to find G-Sync compatible FreeSync monitors if you're intent on spending less.

Should I go for an IPS, TN or VA panel?

We would always recommend an IPS panel over TN. The clarity of image, viewing angle, and color reproduction are far superior to the cheaper technology, but you'll often find a faster TN for cheaper. The other alternative, less expensive than IPS and better than TN, is VA tech. The colors aren't quite so hot, but the contrast performance is impressive.

Refresh rate: The speed at which the screen refreshes. For example, 144Hz means the display refreshes 144 times a second. The higher the number, the smoother the screen will appear when you play games.
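A refresh rate translates directly into how long each refresh lasts: one second divided by the number of refreshes. The short sketch below (the function name is illustrative) converts a few common refresh rates into milliseconds per refresh.

```python
def refresh_interval_ms(refresh_hz: float) -> float:
    """Time between two screen refreshes, in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


if __name__ == "__main__":
    for hz in (60, 144, 240):
        print(f"{hz}Hz -> {refresh_interval_ms(hz):.2f} ms per refresh")
```

A 144Hz display refreshes roughly every 6.9 ms, compared with about 16.7 ms at 60Hz, which is why motion looks smoother at higher refresh rates.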

V-Sync: Graphics tech that synchronizes a game's framerate with your monitor's refresh rate to help prevent screen tearing, by capping your GPU frame rate at the display's maximum refresh rate. Turn V-Sync on in your games for a smoother experience, but you'll lose information, so turn it off for fast-paced shooters (and live with the tearing). Useful if you have an older model display that can't keep up with a new GPU.

G-Sync: Nvidia's frame syncing tech that works with Nvidia GPUs. It basically allows the monitor to sync up with the GPU. It does this by showing a new frame as soon as the GPU has one ready.

FreeSync: AMD's take on frame syncing uses a similar technique to G-Sync, with the biggest difference being that it uses DisplayPort's Adaptive-Sync technology, which doesn't cost monitor manufacturers anything.

Ghosting: When movement on your display leaves behind a trail of pixels when watching a movie or playing a game, this is often a result of a monitor having slow response times.

Response time: The amount of time it takes a pixel to transition to a new color and back. Often referenced as G2G or Grey-to-Grey. Slow response times can lead to ghosting. A suitable range for a gaming monitor is between 1-4 milliseconds.

TN Panels: Twisted-nematic is the most common (and cheapest) gaming panel. TN panels tend to have poorer viewing angles and color reproduction but have higher refresh rates and response times.

IPS: In-plane switching panels offer the best contrast and color despite having weaker blacks. IPS panels tend to be more expensive and have higher response times.

VA: Vertical Alignment panels provide good viewing angles and have better contrast than even IPS but are still slower than TN panels. They are often a compromise between a TN and IPS panel.

HDR: High Dynamic Range. HDR provides a wider color range than normal SDR panels and offers increased brightness. The result is more vivid colors, deeper blacks, and a brighter picture.

Resolution: The number of pixels that make up a monitor's display, measured by height and width. For example: 1920 x 1080 (aka 1080p), 2560 x 1440 (2K), and 3840 x 2160 (4K).

When shopping for a gaming monitor, you’ll undoubtedly come across a few displays advertising Nvidia’s G-Sync technology. In addition to a hefty price hike, these monitors usually come with gaming-focused features like a fast response time and high refresh rate. To help you know where your money is going, we put together a guide to answer the question: What is G-Sync?

In short, G-Sync is a hardware-based adaptive refresh technology that helps prevent screen tearing and stuttering. With a G-Sync monitor, you’ll notice smoother motion while gaming, even at high refresh

Ms.Josey
