Dual touch screen monitors in Windows 8

Navigating with the corners of the screen with a mouse or with the edges of a screen with touch is part of the core navigation experience for Windows 8. It is important to support these corners and edges the right way on a PC with multiple monitors. Windows 8 does this by making the corners and edges of all attached displays active.

There is no concept of a single main monitor when it comes to the Windows UI and apps. The monitor that you access one of the corners on will be the monitor where that interface will display.

You can easily move a Windows UI app to another monitor by grabbing it along the top edge and dragging it from one monitor to the other. If the app is snapped, it will be snapped on the other monitor as well.

On a PC with multiple monitors, one or more of the screen edges are likely to be shared. For example, two monitors sitting next to each other (where each has the same screen resolution) would share both the top and bottom edge of the screen. Because corners are so important for navigation in Windows 8, we need to be able to easily target each corner, but this is difficult when you can't just throw your mouse in any corner like you can on a single monitor system.

To address this we have created real corners along shared edges, so that if you are targeting the corner, once you get there Windows won't allow you to overshoot the corner accidentally. It does this by extending the corner along shared edges, so if you are moving along the edge and hit the corner (within 6 pixels) the mouse will stop. You can think of it like a 6 pixel wall extending from the shared corner.
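The corner wall described above can be modelled in a few lines. This is only an illustrative sketch, not Windows' actual implementation: it assumes two monitors of equal height sitting side by side, and the function name, the parameters and the `shared_x` convention (the first pixel column of the right-hand monitor) are all made up for the example. Only the 6 pixel figure comes from the text.

```python
CORNER_WALL_PX = 6  # wall length stated in the text


def clamp_cursor_x(old_x, new_x, y, shared_x, screen_height, wall=CORNER_WALL_PX):
    """Return the cursor's x position after applying the corner wall.

    Assumed layout: two side-by-side monitors of equal height, where
    shared_x is the first pixel column of the right-hand monitor.
    """
    # The wall only exists within `wall` pixels of the top or bottom edge.
    in_wall_band = y < wall or y >= screen_height - wall
    if not in_wall_band:
        return new_x  # free movement across the shared edge

    # Moving left to right into the wall: stop on the last left-hand column.
    if old_x < shared_x <= new_x:
        return shared_x - 1
    # Moving right to left into the wall: stop on the first right-hand column.
    if new_x < shared_x <= old_x:
        return shared_x
    return new_x
```

With two 1920 x 1080 monitors, a cursor sliding along the top edge from x = 1900 to x = 1950 stops at 1919, while the same movement halfway down the screen crosses to the other monitor unhindered.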

With multiple monitors attached, Windows 8 will draw the taskbar across each monitor. By default, all taskbar icons are displayed on the main monitor, and the taskbar on all other monitors

You can use the taskbar options to control which icons are displayed on which monitor: all icons on all taskbars; all icons on the main taskbar, with the taskbars on other displays showing only the icons of apps running on that display; or icons only on the taskbar of the display where the app is running.

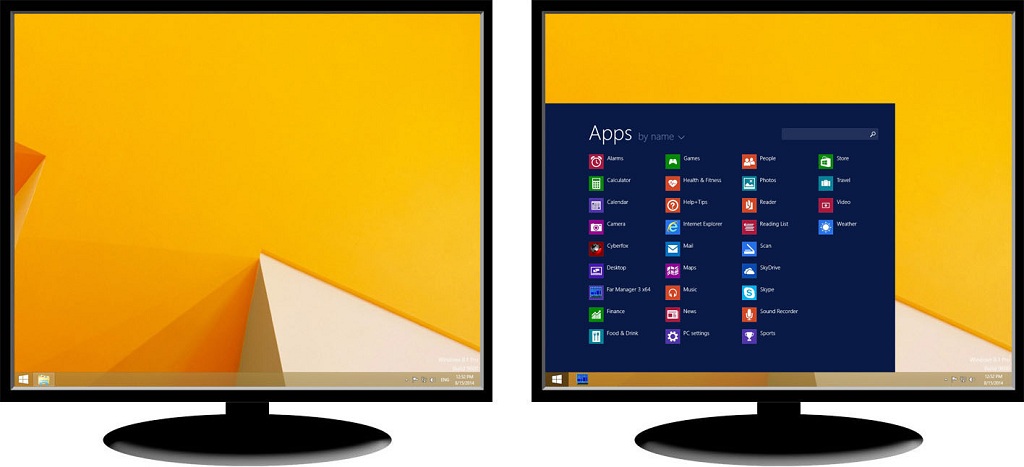

You can now set a different background for each monitor. When selecting a personalization theme, Windows 8 automatically puts a different desktop background on each monitor. You can even set a slide show to cycle through pictures across all monitors, or pick specific background pictures for each monitor.

It is very typical for people to have a multi-monitor setup that consists of different sized and/or oriented monitors. And of course, not all photos look great in both portrait and landscape or on all screen sizes and resolutions. To address this, we've added logic to the slide show that selects the best suited images for each monitor.

You can now span a single panoramic picture across multiple monitors. We are also including a new panoramic theme in the personalization options for Windows 8.

DisplayPort is a new digital standard for connecting monitors to computers. It provides a scalable digital display interface with optional audio and High-bandwidth Digital Content Protection (HDCP). DisplayPort connectors usually resemble USB ports with one side angled.

Digital Visual Interface (DVI) is the digital standard for connecting monitors to computers. DVI connections are usually color-coded with white plastic and labels.

Video Graphics Array (VGA) is the analog standard for connecting monitors to computers. VGA connections are commonly color-coded with blue plastic and labels.

Extend These Displays: This option is recommended when an external monitor is connected to a laptop, so that each monitor can display a different screen independently, which improves user convenience. The relative position of the screens can be set up here; for example, monitor 1 may be set up to be to the left of monitor 2 or vice versa.

This depends on the physical position of the LCD monitor in relation to the laptop. The horizontal line displayed on both monitors can be based on the laptop or external monitor. These are all adjustable options, and all a user need do is drag the monitor of the main screen to the extended monitor.

1. We need to go to Microsoft's official website to download a special panoramic theme. This kind of theme looks incomplete on a single monitor, but it is well suited to a dual-monitor setup. You can click Microsoft Official Web site to download it. Here is a collection of various themes provided by Microsoft. We can choose the theme according to the style. The theme of the panorama is as follows:

2. Select the theme and install it, and the theme will be installed under "Personalization". Before setting the wallpaper, we need to press "Windows + P" to open the "Second Screen" settings, which appear on the right side of the screen. There we can see the ways to connect a screen: "PC screen only", "Duplicate", "Extend" and "Second screen only". To achieve the effect of our panoramic theme, we need to choose the "Extend" option. Figure:

4. To display the panoramic wallpaper on screen, we need to open "desktop background" below and select the "image placement". We will then find the wallpaper merged across the two screens into one extra-long panoramic image, as shown:

Somewhere along the line, when telling the computer which USB device is the touchinput for which monitor, something goes wrong. I think it has something to do with having two touchinput devices on the same internal hub.

This is what I think is happening: you tell Windows to have monitor A linked to touchinput device 1 on port 1 on hub 1, and monitor B linked to touchinput device 1 on port 2 on internal hub 1. Somehow Windows only remembers device 1 on internal hub 1, linking

To fix it, you should find a USB port that is connected to another internal hub. So where monitor A is linked to touchinput device 1 on USB port 1 on internal hub 1, monitor B will be recognized as touchinput 1 on USB port 1 on internal hub 2.

If after the switch your inputs are linked to the wrong monitor, you can use the "Tablet-PC" tool in Windows to point out which input should link to which monitor.

Most of us now have an iPad or some sort of tablet or smartphone. What’s more, we’ve probably tried using it to make beats, play synths, do some field recording or control our studio computer with a swipe-swipe of our fingers. We’re completely at home with the multi-touch screen environment. But we’re also perhaps grumbling at the size of the tablet/phone screen, quickly running out of processing power, and troubled by how best to integrate our devices into a larger studio setup. Conversely, on our desktop and laptop studio machines, we have far more power available and access to all the software tools we could wish for, but often find ourselves reduced to controlling them with a mouse.

Multi-touch technology has been very slow to make any headway in the world of desktop computing, and not just in terms of music production software, by any means. Surprisingly, perhaps, given the ubiquity of the iPad, Apple’s OS X doesn’t support multi-touch, but on ‘the other side’ it’s been available in some form or other since Windows 7. And, with high-quality, 24-inch, 10-point touchscreens now available for a very modest outlay (around £300), Windows 8 maturing through version 8.1, and Windows 9 on the horizon, some developers are now making significant progress. So, maybe it’s finally time to figure out how and where multi-touch-capable software could enhance your own recording studio, whether that be a modest home-studio setup, or something on a grander scale.

2. Infra-red technology creates an optical grid across the screen, and registers a ‘touch’ when the beams are interrupted. This is particularly suited to larger screens. It’s what the Microsoft PixelSense was based on, and it can be found today in Slate Digital’s Raven MTX. It benefits from great accuracy, not having to use annoyingly reflective glass, and the ability to register touches from any object (not just a finger). However, infra-red screens are vulnerable to accidental ‘touches’ from elbows, clothing, insects and so on.
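As a rough illustration of the optical grid idea, the sketch below estimates a single touch position from the beams that are interrupted. It is a simplified model under stated assumptions rather than how any particular screen works: beams are numbered from zero along each axis, only one object touches the screen at a time, and the function name is invented for the example.

```python
def locate_touch(blocked_columns, blocked_rows):
    """Estimate one touch position from interrupted infra-red beams.

    blocked_columns / blocked_rows are lists of beam indices (assumed
    numbering from 0) that currently see no light. Returns (x, y) in beam
    units, or None when either axis has no interrupted beam.
    """
    if not blocked_columns or not blocked_rows:
        return None  # a touch must interrupt beams on both axes
    # Use the centre of the interrupted beams on each axis.
    x = sum(blocked_columns) / len(blocked_columns)
    y = sum(blocked_rows) / len(blocked_rows)
    return (x, y)
```

Nothing in this model cares what interrupted the beams, which is why a sleeve or an insect registers just as readily as a fingertip.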

3. Capacitive technology has risen to the top, primarily through Apple’s use of it. It’s durable, reliable and accurate, with a good resolution (although not as good as a stylus), and the price has come down significantly due to the sheer number of phones and tablets using it. Capacitive screens work by creating a minute electrical field, from which a capacitive object (such as a finger) draws current, creating a voltage drop at that point of the screen. Most phones and tablets now use a variant called Projected Capacitive Touch technology, which essentially doubles up the grid for improved accuracy and tracking, and also supports passive styli and gloved fingers.

Microsoft introduced native multi-touch support with Windows 7, but two things prevented it from really catching on: the expense of the hardware, and the fact that a desktop OS designed primarily for a mouse isn’t particularly suited to being operated with your fingers. In an act of pure genius (or madness, depending on your point of view) Microsoft then designed Windows 8 to offer the user two distinct interfaces: the Windows Start (formerly known as Metro) Modern UI, which is intended for touching, and the standard desktop, for use with a mouse and keyboard. It meant that the OS could be used on multiple devices: tablets, phones, laptops and desktops could all use it, and be touchable and futuristic in every environment.

Unfortunately, for the vast majority of people without a multi-touch interface, it was confusing and a bit frustrating, in that half of the OS couldn’t comfortably be used when all you had was a mouse! Microsoft addressed many of these concerns with Windows 8.1, and further updates have brought the Modern and the Desktop interfaces much closer together. The experience for the mouse and keyboard user is now much more that of an enhanced or augmented desktop, rather than a touch interface that was out of their reach. At the same time, sales of all-in-one computers with multi-touch screens, hybrid laptops and Surface tablets have grown at a healthy rate, and so multi-touch technology is fast becoming ‘normal’ on regular Windows computers.

So what does the Windows App Store offer in terms of music apps? Unfortunately, not a lot! There’s a sprinkling of piano and guitar-strumming apps, and some sample-triggering and remixing ones. There’s a DJ remix app from Magix called Music Maker Jam, which is pretty cool in a DJ-remixing-preset-samples kind of way, and a recording studio from Glouco, which does at least allow you to record via a mic input and sequence the included instruments. However, Windows Store apps have been hampered by two things when it comes to music production. Firstly there’s been no support for MIDI, and secondly, the output latency is only as good as the system’s soundcard using standard Windows drivers.

With Windows 8.1, Microsoft revealed a MIDI API (application programming interface), which for the first time allows a Modern app to use a MIDI input. Image Line took advantage of this in FL Studio Groove, a decent music-making app that lets you sequence, mix and mess about with a range of sample-based instruments and drums — and, of course, you can play the sounds from a MIDI keyboard.

The MIDI API is the first release from a new team of creative people at Microsoft who are working to improve the MIDI and audio aspects of Windows apps for the forthcoming Windows 9. This is unprecedented, and potentially very exciting for music makers. Pete Brown, from Microsoft’s DX Engineering Engagement and Evangelism department, said that they’re doing some serious engineering here, including on audio latency for Universal apps (apps that run across all Windows devices). They’re working with a lot of partners, big names and small, from both hardware and software worlds, to help prioritise, prototype apps, and so on. He tells me they’ve made sure that the approach is aligned with industry needs and requirements, and not just what Microsoft think needs to be done. It’s been the most open development process he’s ever seen, and he says the response from partners so far has been extremely positive.

The first thing to realise is that multi-touch is not mouse emulation, as it was on the old single-touch screens you might find in supermarkets or information kiosks. So, although it sometimes appears as though you’re just mousing about with your finger, it doesn’t always work as expected. In an application not designed specifically for touch control (we’ll call these ‘non-touch’), like Cubase, a single finger can access all the menu items, controls and parameters, just as with a mouse. But with some of the plug-ins you’ll find you can’t play the virtual keyboard unless you pull your finger across the notes, giving you a sort of Stylophone effect.

This problem is more obviously demonstrable in Adobe’s Photoshop: you can select all the tools and menu items, but you can’t actually use your finger on an image — nothing happens! Oddly, if you start with your finger off to the side of the page and then drag it onto the image, it then allows you to draw — but only as long as your finger stays in contact. Adobe say that this is because there’s no touch standard, and they already have their own APIs for use with Wacom’s pen and touchscreen products. They’re also waiting for Apple to join in the game, which, I fear, is something we’re going to hear from a lot of developers of software aimed at creative professionals.

Slight oddities aside, most DAW software actually works very well with single touches on a multi-touch screen. In my tests, Cubase, Pro Tools, Reason, Studio One, Reaper and Tracktion all happily let me poke around to my heart’s content. Ableton Live proved good for launching clips, entering notes and moving regions, but I ran into trouble when attempting to move parameters: once I’d grabbed a control with my finger, the knob or slider would zoom to the maximum or minimum value with the slightest finger movement without letting me easily set any value in between. Fortunately, there’s a fix for this. You have to create an ‘options.txt’ file in the Preferences folder, which lurks in the back end of the dusty reaches of your file system, and add the line “-AbsoluteMouseMode” (more precise information can be found at: www.ableton.com/en/articles/optionstxt-file-live). This allows all of the parameters to be moved much more smoothly. Unfortunately, I’m not aware of similar fixes for Bitwig Studio, which has exactly the same problem, or Digital Performer 8 (DP8), where some plug-ins exhibit this behaviour.
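For anyone who would rather script the Live fix than edit the file by hand, here is a minimal sketch. The only details taken from the text are the file name `options.txt` and the line `-AbsoluteMouseMode`; the function name is made up, and the location of the Preferences folder is deliberately left to the caller because it is not given here (Ableton's article linked above has it).

```python
import tempfile
from pathlib import Path


def ensure_option(options_file, option="-AbsoluteMouseMode"):
    """Add `option` on its own line to options.txt unless it is already there.

    Returns True if the file was changed, False if the option was present.
    """
    path = Path(options_file)
    lines = path.read_text().splitlines() if path.exists() else []
    if option in (line.strip() for line in lines):
        return False
    lines.append(option)
    path.write_text("\n".join(lines) + "\n")
    return True


if __name__ == "__main__":
    # Demo against a throwaway folder. To apply the fix, pass the path of
    # options.txt inside Live's real Preferences folder instead.
    with tempfile.TemporaryDirectory() as folder:
        target = Path(folder) / "options.txt"
        print(ensure_option(target))  # file created, option written
        print(ensure_option(target))  # second call leaves the file alone
```

Existing lines in the file are kept, so the sketch can be run more than once without duplicating the option.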

In the project/session/arrange window, with the exception of Live, Bitwig and DP8, all DAWs responded to the pinch/zoom two-finger gesture to either expand the track height or extend the timeline. Reaper even managed to do both directions at the same time. So, although these programs are not multi-touch compatible, there’s not that much you can’t do with your fingertip, assuming you can get your fudgy finger on the sometimes tiny knobs. You could load Reason, Live or Pro Tools onto a Windows 8 Pro tablet, such as the Microsoft Surface, and get on with making music without having to add a mouse to the equation.

Similarly, with stand-alone plug-ins and instruments, such as Native Instruments Reaktor or the Arturia Mini V, you’ve got single-finger control over all the parameters — the only problem is the Stylophone effect on virtual keyboards described earlier. Even though, especially on a tablet, you can go without a mouse, there’s no reason why you would want to do so on a desktop machine. The beauty with multi-touch on the desktop is that you can use everything. So, you can use your mouse and keyboard as normal, but perhaps when tweaking plug-ins or working closely in the arrange page you can simply reach out and touch it. Which is fantastic!

1. Console View: all the faders and pan, sends and other controls are all gloriously multi-touchable. You can use all your fingers, all at once, and mix to your heart’s content.

Sonar struggles, though, when it comes to consistency. In the Pro Channel expansion to the Console view, the knobs in the EQ, Tube and Compressor respond to a rotary, sort of half-circle finger movement, but the knobs in the rest of the Pro Channel respond with an up and down movement. You can’t control more than one knob at a time, although you can move other controls on the console. Using the visual display on the EQ suffers from the same ‘zooming about’ problem found in Live, though they’ve dealt with this issue in X3 by sliding out a lovely large EQ window, which works perfectly with multi-touch.

Meanwhile, back in the arrange window, nothing is actually touchable! You can’t move any regions, cut them up or edit them in any way. Nor can you add notes to the piano roll or change any automation. All the arrange page things that can be done in the other non-touch-enabled DAWs can’t be done in the touch-enabled Sonar, which is a bit strange. The changes they made to the Pro Channel EQ show exactly what’s required for touch to work effectively: you need big knobs. The Console view is hampered in places by the size and throw of the faders and some of the small controls. Cakewalk have built multi-touch into their existing GUI, and although it works well in some areas it also demonstrates why this might not be the best way to approach it, although, of course, you still have your mouse and keyboard.

StageLight has the standard arrange window, with tracks and a timeline, piano roll and automation, but it also has drum pads, a step sequencer, and a virtual keyboard that you can lock to various preset tunings, making it very easy to play all the right notes. In version 2 they’ve added in some nice-sounding synths and instruments, all with touchy parameters, and they’ve introduced an Ableton Live-style loop arranger, with large clip boxes to poke with your fingers. One very neat feature is that, through its support of VST plug-ins, it includes a multi-touch GUI version of the standard VST parameters window. It’s very simple, with each parameter displaying just a slider and a value, but it hints at what’s possible. Matthew Presley, Product Manager at Open Labs, mentioned how the right-click element of touch — where you hold until a menu appears — is something they’ve found frustrating. In refreshing the interface for version 2, they decided to get around that by creating a ‘Charms’-style toolbar at the side with all the editing tools, including a ‘Duplicate’ button, which takes the finger pain out of copying and pasting. Their core concern was to make it easy, so that people can just get on and make music.

At $10, there’s nothing really to touch StageLight. The pricing model is similar to that used in so many iOS and Android apps, and it’s something we’ll probably see much more of — the standard software is very cheap, or free, and then, through an in-app store, you can purchase additional features as you get more serious. It’s a refreshing change from the ‘Lite’ versions of DAWs we’re so familiar with, where you always wish you could afford the ‘real’ version just to get a little more functionality. StageLight is increasingly being pre-installed on many Dell, Lenovo and Acer Windows 8 tablets, and as kids these days are unlikely ever to possess an actual desktop computer, this might very well be where they start making music.

Ben Loftis, Product Manager for Mixbus, had this to say: “In a touch interface, you must accommodate calibration errors, parallax, and the splay of your finger. You don’t have any haptic feedback. So, if you want an analogue-console experience on a touchscreen, you will need a touchscreen that is larger than the analogue counterpart. But exactly how much larger depends on the hardware and the user. Currently, Mixbus v2 chooses between three sizes, based on your monitor resolution. But v3 will give the user an infinitely variable-scale slider, so we can accommodate more combinations of screen size and resolution. Also, our plug-ins (like the XT series) are arbitrarily scalable: if you stretch the plug-in window, all the knobs get bigger. We think this will be important for touch users, because many existing plug-ins use tiny buttons.”

The grandaddy of digital audio on the PC, Software Audio Workshop (SAW) Studio added multi-touch control to their Software Audio Console (SAC) live-sound mixer application as long ago as 2010. It was tied into the revolutionary 3M multi-touch screens, as favoured by Perceptive Pixel. Unfortunately, it hasn’t got any further than that, and still only supports multi-touch on these rather expensive screens. The layout of SAC lends itself brilliantly to multi-touch and currently works very well with a single touch — but it would be good to see this opened up to more current and cheaper technology.

It turned out that the development libraries they were using (JUCE C++) contained multi-touch features, which were primarily intended for the iPad, and that these had simply translated into the GUI of the VST version. This is true of all their plug-ins. They’ve now released a larger-GUI option for LuSH-101, for people with fat fingers.

I had a similar experience more recently with Arturia’s Spark 2 soft synth. Arturia told me that they hadn’t planned to make it multi-touch, but that they’re very happy that it is. So, the programming languages already exist to allow developers to include multi-touch functionality without specialist add-ons or tools — which means plug-in manufacturers may start to produce their multi-touch GUIs even while the DAW makers drag their feet.

The alternative to direct touch control of the DAW or plug-ins is to use touchscreen technology as a controller. The Jazz Mutant Lemur, the first commercially available multi-touch controller, has now evolved to become an iPad app, and there are now dozens of iPad apps for controlling DAW software via MIDI or OSC — in fact, there are even a few for Android. They’re selling well and there’s obviously a desire and use for it. The lack of haptic feedback (an actual physical knob or fader) doesn’t appear to be a barrier to most users, despite the recent Kickstarter campaign to manufacture knobs that you can stick onto the surface of the iPad (http://sosm.ag/ipad-knob-kickstarter).

James Ivey, Pro Tools Expert hardware editor (www.pro-tools-expert.com), who owns a Slate Pro Raven MTi controller, put to me the case for touchscreen controls over physical faders: “I was using a Euphonix [now Avid] Artist Control and Mix. So I had 12 faders to play with. With the MTi I have unlimited faders — what’s not to like? I really don’t buy into the “Oh, it’s not a real fader or pot” thing. I’m so much faster on the Raven. It’s big, it’s clear and if I don’t like something about the workflow or arrangement I can change it.”

Perhaps more of a barrier, then, is the physical size of the iPad, and the connectivity when away from the cosy security of your home network. With a touchscreen attached directly to your desktop you have none of the connectivity problems, because the screen is right there: attached via HDMI or DVI, it’s part of your system via a virtual MIDI driver. Although Windows tablets may suffer from the same size issues, hybrids, all-in-ones and dumb touchscreens don’t give you a proper console-sized surface to play with either. Probably the most important point is that the controller can ‘be’ anything — knobs, faders, pads, XY controls, you are not stuck to a hardwired configuration.

SmithsonMartin’s Emulator Elite is an awesome crystal-clear, projected capacitive, 10-point touchscreen that folds out into a beautiful sheet of glass. This is then rear-projected upon to create what looks like the ultimate futuristic DJ performance tool. At $15,500, the ‘Elite’ part of its name is apt. However, a rather more reasonable $99 buys you the screen’s core controller software for use with the desktop. CEO Alan Smithson is a DJ and fully admits that 90 percent of their focus is on the DJ market, but the capabilities of Emulator Pro extend far beyond controlling Traktor and offering performance tools.

Emulator comes with its own internal virtual MIDI driver, which makes it a complete doddle to hook up with Cubase, Pro Tools and other such applications. There are a number of different pages, so you could have mixer control on one and plug-in control on another, or you can add controls to container windows which fold down to a button and reopen when you need them.

Emulator Pro runs only in full-screen mode, but that doesn’t mean it has to obscure the DAW: a feature that’s particularly useful for single-screen setups is the ability to ‘cut holes’ out of its GUI, so that the software running beneath is visible through it. That may be useful to reveal meters, a preview screen, or the arrange page, for instance — the possible configurations are endless.

If Emulator Pro is found lacking anywhere, it’s in the depth of the MIDI side of things. Channel and controller numbers are as far as it goes, so it can’t send SysEx commands or emulate a Mackie HUI, for example. However, Shane Felton (of www.alien-touch.com) has been working on an implementation to get 24 channels of Mackie HUI Control into Pro Tools running on his Apple Mac. The result looks not unlike the Slate Raven MTi, and includes many of the same shortcut buttons and controls. He uses Bome’s MIDI Translator to provide the HUI emulation and three virtual MIDI drivers (one for each group of eight faders) that are set up in Pro Tools. The template files are available to download from his web site, though he stresses that it’s a work in progress and would value contributions.
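To make the two ideas in this paragraph concrete, here is a small sketch. The first function shows how 24 mixer channels split across three virtual MIDI ports of eight faders each; the second builds the kind of plain Control Change message (channel plus controller number) that the text says is the limit of Emulator Pro's MIDI side. The function names and the zero-based numbering are assumptions for illustration, and nothing here implements the HUI protocol itself, which in the setup described is handled by Bome's MIDI Translator.

```python
FADERS_PER_PORT = 8  # one virtual MIDI port per group of eight faders
PORT_COUNT = 3       # three ports give the 24 channels mentioned above


def fader_route(channel):
    """Map a mixer channel (0-23) to (port index, fader index on that port)."""
    if not 0 <= channel < FADERS_PER_PORT * PORT_COUNT:
        raise ValueError("channel must be in the range 0..23")
    return divmod(channel, FADERS_PER_PORT)


def control_change(midi_channel, controller, value):
    """Build a standard three-byte MIDI Control Change message."""
    if not 0 <= midi_channel <= 15:
        raise ValueError("MIDI channel must be in the range 0..15")
    if not (0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("controller and value must be in the range 0..127")
    # Status byte 0xB0 marks Control Change; the low nibble is the channel.
    return bytes([0xB0 | midi_channel, controller, value])
```

So the ninth mixer channel (index 8) lands on the first fader of the second port, and a fader move on it would travel as an ordinary three-byte message on that port.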

CopperLan is a networking protocol that connects compatible music software and hardware together. Each device can reveal its parameters by name and be controlled by any other device automatically. It’s a bit like MIDI control, but at a much higher resolution and without all that manual mapping and learning you have to do. There are wrappers for non-compatible plug-ins, but for these you have to manually configure the controls. A CopperLan-compatible touchscreen controller could potentially map itself automatically to whatever CopperLan-compatible plug-in is selected. It can also work internally, without the need for a network, which makes it such an interesting solution for a virtual controller running on the same machine as your DAW.

The DTouch mixer is essentially the fader section of Pro Tools’ mixer window, with cut-outs around the meters so that they shine through. Once the alignment is set up, the design is flawless and you wouldn’t know you were using anything other than the Pro Tools mixer. The toolbar provides all the usual transport controls as well as buttons to activate groups, open selected plug-ins and such like. In the edit window, although no multi-touch controls are overlaid, you get an expanded toolbar full of useful tools and functions. There’s also a load of buttons to which you can assign your own macros. The toolbar allows you to zoom around and perform edits without having to return to the mouse, which is what makes the workflow so effective.

At the time of writing, DTouch was available for Windows 7 only, Pro Tools only, and at a mandatory resolution of 1920 x 1080. However, Devil Technologies were kind enough to let me try their Windows 8.1 beta version, which should be available by the time you read this. One side-effect of the alignment and tight integration is that it’s not very flexible — there’s no ability to edit the controls or create knobs and faders for other things as there is with Emulator and Hollyhock. Instead, its beauty lies in the seamlessness with which it functions alongside the DAW.

I asked the company about the possibility of releasing a generic HUI-based controller, but they tell me they would much prefer to do something that’s designed for the specific DAW — and, encouragingly, they have a Cubase/Nuendo version in the works already. They are also testing out ways to support two screens; currently you have to have everything on the single touchscreen monitor, or the alignments start to shift. Having said that, I tried it over two screens and found that it can work very well, especially on the mix window. But I guess you are back to selecting clips in the edit window with the mouse if you’ve moved it to a non-touch screen.

One unique feature is the ability to incorporate an external, hardware HUI-compatible controller alongside DTouch, to give you the best of all worlds. At 200 Euros it’s more expensive than other, more flexible options I’ve discussed, but it’s a no-nonsense dedicated solution that’s supremely good at what it does.

Let’s get back to the DAW manufacturers. We know the multi-touch support is patchy at the moment, but what of their plans for the future? There appears to be a rough split between software manufacturers for whom multi-touch is already a priority and those who, though interested, are focusing their effort on other priorities. It seems that most of the major firms are the least interested in implementing multi-touch, though. Of course, I should qualify this with the caveat that most major developers don’t like dropping hints about anything that’s unreleased — but Steinberg, for example, went as far as to state that they don’t currently see a market for multi-touch outside the iPad.

The industry’s strong focus on the iPad in recent years is certainly one reason why we see so little development on desktop multi-touch. The other major one is the absence of any lead from Apple into multi-touch support in OS X. It’s not hard to foresee the increasing convergence of iOS and OS X in the medium term, but a fully multi-touch-capable version of OS X still seems a long way off. This, to me, seems a little short-sighted.

James Woodburn, CEO at Tracktion, had this to say: “Eighteen months ago, we were asked regularly when Tracktion would be available for iPad. In fact, we already have a ported version of Tracktion that runs on iOS, but we chose not to release it, as it makes no sense to us to run a full DAW on a limited platform. We would rather design a solution that utilises the iPad’s key benefits and does not expose the limitations. The demand, at least from our user base, for iPad support has really dropped off a cliff in the past 12 months, and demand for PC touch is on the rise, albeit quite slowly.”

The key to unlocking multi-touch on the desktop is in the design and implementation of the interface. DAW software has to perform lots of different and precise tasks, some of which lend themselves to touch, but many more of which would be hampered by the fatness and inaccuracy of human fingers. It just makes no sense to build touch into something that would be better accomplished with a mouse. There are also issues with hands and fingers masking the very controls you’re trying to fiddle with.

Bremmers Audio Design have been working with touchscreens for many years, and in MultitrackStudio they’ve developed a neat pop-up window that materialises a couple of inches above what you have your finger on. That means you can see both the control and the value. Even with this function, trying to edit the score window accurately with a fingertip is truly an exercise in futility — but it’s no problem because that’s why we have a mouse. With the iPad it must all be about touch, but with the desktop you can use each and every tool at your disposal, be it single-touch, multi-touch, gestures, stylus, mouse, keyboard, trackpad, Leap Motion, Kinect, hardware controllers, or something else entirely. The desktop should remain an awesomely creative and versatile place.

Spending time over the last few weeks rummaging around in the world of touch-enabled software, I’ve created a bit of a personal wishlist of features. Let’s hope some developers are reading!

In a DAW, I don’t want to be restricted to touch any more than I want to be restricted to a mouse. I want touch controls to become available when I need them — like the way the Pro Channel EQ slides out in Sonar (their mixer needs to do something similar). I want to be able to pinch/zoom into a region and then draw in automation with my finger; but I don’t necessarily want to have to use my fingers to copy and paste, trim audio or move notes.

Scrolling has to be easy, perhaps gesture-based, so that I don’t have to fudge around trying to finger empty space between controls to move the GUI. But I don’t want to have to return to the mouse just to move the screen a bit. Reason has that neat side-panel that shows a zoomed-out version of the rack — which is perfect for finger scrolling. Once you start adding hardware into the equation, a touch-screen makes for a much less jarring experience than putting your hand back on a mouse. In using Arturia’s Spark 2 with the Spark LE controller, it’s so great to be able to simply tap on the screen to change a parameter, preset or sample — it’s a far more fluid experience than moving from a creative hardware place to that mouse zone. It’s also done my RSI no end of good!

In terms of virtual control, which is perhaps more useful, because you could use it with various different bits of music software, a multi-touch screen holds enormous potential. It could be anything, could control anything. Imagine your 24-inch multi-touch screen set before you like a mixer, but placed physically beneath your main (non-touch) screen, and whenever the focus changes to the mixer, or plug-in or instrument, the touchscreen evolves to display the appropriate controls. Something a bit like Novation’s Automap that automatically pulls out the parameters and lays them out in front of you. CopperLan seems to hint at this sort of power, but requires everyone to be compliant for it to reach its potential. That’s the sort of integration we really need.

Hopefully, as more manufacturers realise the advantages of the desktop platform for multi-touch interfaces, they will use it to enhance our music-making environments. With Windows 9 just around the corner, Universal apps and Microsoft’s new-found interest in music production, there’s very little competition and great buckets of processing power and potential for the software developer, which can only mean great things for us touchy-feely users.

The history of touch technology can be traced back to the touch-sensitive capacitance sensors of early synthesizer pioneers such as Hugh LeCaine and Bob Moog — and it’s interesting to note that Apple’s iPad shares the basis of its touch technology with the humble Theremin! The concept of the multi-touch screen was first realised in the 1980s by Bell Labs, but probably made its way into the public consciousness through sci-fi films and series such as Dillinger’s desk in Disney’s Tron (1982) and Star Trek — The Next Generation (1987-94).

In computing terms, multi-touch refers to the ability of a surface to recognise the presence of more than one point of contact. This is distinct from single-touch interfaces, which essentially emulate the mouse input, and moves us through the world of pinch/zoom and gesture control, with which everyone’s familiar, to the possibility of individual touches creating individual actions and responses simultaneously.
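To make the distinction concrete, here is a minimal sketch of how software might track several contacts independently. The `TouchTracker` class, its method names and the fader coordinates are all hypothetical and do not correspond to any particular touch API; the point is only that each contact carries its own id and state, rather than all touches collapsing into one mouse pointer.

```python
from dataclasses import dataclass, field


@dataclass
class TouchTracker:
    """Tracks every active contact separately, keyed by a contact id."""
    max_points: int = 10                      # assumed panel limit
    active: dict = field(default_factory=dict)

    def down(self, contact_id, x, y):
        # Refuse contacts beyond what the (hypothetical) panel can report.
        if len(self.active) >= self.max_points:
            return False
        self.active[contact_id] = (x, y)
        return True

    def move(self, contact_id, x, y):
        # Only the contact that moved is updated; the others are untouched.
        if contact_id in self.active:
            self.active[contact_id] = (x, y)

    def up(self, contact_id):
        self.active.pop(contact_id, None)


tracker = TouchTracker(max_points=5)
tracker.down(1, 100, 400)   # one finger on a first fader
tracker.down(2, 180, 420)   # a second finger on another fader, held at the same time
tracker.move(1, 100, 350)   # only the first fader moves
```

A single-touch interface would effectively have `max_points=1`, which is why it can do little more than emulate a mouse.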

The technology that we know today evolved out of a few sources: Fingerworks, a gesture-recognition company who pioneered a number of touchscreen products and were bought by Apple in 2005; Jeff Han’s Perceptive Pixel (bought by Microsoft in 2012) who, back in 2006, were demoing vast multi-touch walls and dazzling us with the concept of pinching photos and swiping maps; and the original Microsoft Surface, now called PixelSense, which started development in 2001 and was an interactive table that combined multi-touch capability with real-world object interaction.

It’s remarkable that in 2005 JazzMutant developed their own multi-touch technology to release the Lemur multi-touch OSC controller commercially. In 2007, with the release of the first iPhone and, a few months later, Microsoft’s Surface (PixelSense) 1.0, we had both ends of the multi-touch spectrum spectacularly catered for. But it would take a few more years for that middle space of tablets, hybrid laptops and multi-touch monitors to really find their technology and pricing sweet spots.

This is a very good question and a hard one to answer! The manufacturers are all over the place, with dual-touch being marketed, confusingly for the end user, as multi-touch, and screens designed for Windows 7 being pushed for Windows 8.

Windows 7, which is still favoured by so many music-makers, supports two-point multi-touch out of the box — so gestures and pinch/zoom all work fine. More points are supported with an additional download, but there was not really any part of the Windows 7 OS that made use of it — so your mileage using multi-touch on that platform will be almost entirely down to the software you’re running.

If designed for Windows 8.x, multi-touch monitors must have at least five simultaneous points. Many all-in-one machines meet only this minimum requirement, whereas hybrids and tablets tend to have 10. Quite honestly, having played with multi-touch for music making for a while now, I’ve rarely found myself using more than two touch-points at once, although sometimes I’ve used up to eight when messing about on a mixer to see what I could do. That said, a 10-point screen is more likely to be a projected-capacitance type, and so of a higher quality than screens offering fewer points.

I chose the Acer T232HL for my own use, because at the time it was the only thing available from the new generation of multi-touch screens. Dell kept promising one, but it kept getting delayed and finally came out about six months after I got the Acer. The Acer remains well regarded, particularly for its ability to lay almost flat, so it was a good choice in that respect.

The biggest gripe I have with multi-touch at the moment is with touch latency: my Acer T232HL adds about 10-15ms (an estimate, having tried playing drum pads, and so on). My understanding is that performance in this regard is rather better on tablets such as the Microsoft Surface, but I’ve not had a chance to test that, so whether, for example, it’s better enough for you to play drums without the latency proving a distraction, I can’t yet say. Unfortunately, the published specs won’t help: the ‘Response’ time listed in a monitor’s specification usually refers to the change from black to white, and there’s no documentation on the touch response.

We music makers are a greedy bunch. While the rest of the world is moving to ever smaller and more portable devices, we seem to be accruing acres of screen real estate as we hook up multiple monitors to our DAW computers. One question that arose during the course of my research, therefore, was whether you could use two multi-touch screens concurrently with a single computer. This could give you greater access to more parameters spread across a larger desktop or, perhaps more interestingly, allow two people to work on different screens on the same project — for instance, one controlling the mixer, while another controls virtual instruments or effects.

It was a similar story with music applications: when trying to stretch Sonar X3’s mixing console across both screens, for example, the same issue of which screen held the focus arose. The process for grabbing focus is very obvious when you have the screens at different resolutions, because the console jumps in size as you move it between the screens, and that jump is the screen grabbing focus.

However, if I split different tasks out to different screens, the results were more intuitive. Moving Sonar’s mixing console to one screen and its project window to the other allowed me to use them simultaneously with no trouble at all. So, you could have one person mixing levels while the other triggers loops in the Matrix, all in one project on one computer. The bottom line is that multiple multi-touch monitor setups are feasible for music-making, and different applications can be controlled at the same time from different screens, but I suspect a little trial and error will be required to arrive at the best workflow for each application.

Sorry it took so long to spot this question. The reason you're not seeing anyone talking about the touchscreen function is that it has nothing to do with the screen and everything to do with primary vs secondary. A touchscreen is simply a mouse built into the monitor. The only reason it lines up with your finger is that it's calibrated to do so. If you run multiple monitors with touchscreens, or run one with and one without, neither screen can calibrate properly for both screen areas, and the touch mouse is locked to the primary monitor's logical location.

The only way I've seen this done effectively is to use the touchscreen as primary. We did have a customer try dual touch screens but he had major driver issues and eventually ended up disabling the feature on his secondary monitor.
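A rough sketch of the problem described here, assuming the touch panel reports a normalised position and the system maps it onto one monitor's rectangle in the combined desktop. The `Monitor` type, the `touch_to_desktop` helper and the two 1920 x 1080 rectangles are illustrative assumptions, not an actual driver interface.

```python
from typing import NamedTuple


class Monitor(NamedTuple):
    name: str
    x: int        # left edge in desktop coordinates
    y: int        # top edge in desktop coordinates
    width: int
    height: int


def touch_to_desktop(u, v, target):
    """Map a normalised touch (u, v in 0..1) to a desktop pixel on `target`."""
    px = min(int(u * target.width), target.width - 1)
    py = min(int(v * target.height), target.height - 1)
    return target.x + px, target.y + py


primary = Monitor("primary", 0, 0, 1920, 1080)
secondary = Monitor("secondary", 1920, 0, 1920, 1080)

tap = (0.5, 0.5)                                # centre of the secondary touchscreen
intended = touch_to_desktop(*tap, secondary)    # where the finger actually is
actual = touch_to_desktop(*tap, primary)        # where it lands if touch is tied to the primary
```

With these assumed rectangles the tap meant for the secondary screen ends up in the middle of the primary, which matches the symptom described in the answer.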

If you travel with a laptop and iPad, you need this app. I needed a second screen, but Duet gives me even more. Full gesture support, customizable shortcuts, Touch Bar, tons of resolution options, and very little battery power. How is this all in one app?

I just love this app. Especially when I am travelling for work and working from the company branches. Then I use my iPad as second monitor for Outlook, Lync and other chat while I use the laptop big screen for remote desktop to my workstation at the main office. :)

When it comes to desktop PCs, one thing is for sure: two screens are better than one. That's especially true for the tech expert who has to multitask several graphics-intensive programs simultaneously.

Whatever your need, enabling multiple displays on your PC is a surefire way to increase productivity. Below, we discuss all the steps of setting up multiple monitors on your system in detail.

For one, the aesthetic opportunities of a dual or triple monitor setup are fantastic. Compared to a single display, multi-display arrangements allow you to tailor different screens according to their distinct function and purpose. Where dual or multi-monitor setups excel, however, is in their productivity bump. Most programs—especially those used in professional settings—display poorly when using even half of a complete display.

That's why a dual-monitor setup allows users dramatic productivity increases. You can keep all available tools, menu selections, and information in view without constantly switching between tasks. In other words, alternate displays allow users additional screen real estate. You don't have to sacrifice any particular function to monitor hardware, listen to music, edit graphic elements, analyze data, or write content.

You don't even have to invest in an ultra-high definition display out of the gate to have a decent multi-monitor setup. Older, flat-screen monitors can still do their job rather well in a dual monitor setup. This is especially true when you consider flipping your monitor from landscape to portrait.

If you rotate your desktop on its side, you can use your 24-inch monitor in portrait. This setup can provide plenty of screen real estate, allow for easier reading and scrolling functions, or pose as a live (and endless) news and timeline UI.

Whether you're a novice or a pro, you can benefit from a basic dual monitor setup. Best of all, most graphics cards allow multiple monitor setups out of the box. Besides, multi-monitor configuration couldn't be easier!

Picking a second monitor couldn't be easier, assuming you're already viewing this via a PC monitor. That's because most modern monitors on the market are both flat-screen and high definition (16:9 aspect ratio). This allows users plenty of space either in landscape or portrait mode. For example, a simple 23.8-inch Acer monitor can give you crisp 1080p resolution in vivid color.

Larger screen sizes and display resolutions typically determine higher price points. Yet, the setup for an expensive or budget monitor is exactly the same. For a basic dual-monitor setup, not much more than a 23.8-inch display would be necessary. You can even use your laptop as a second monitor.

More important than your new monitor's dimensions is the proper cable type needed to connect your monitor to its appropriate port. Many confuse one cable type for another, which can be a serious hassle. For a multiple monitor setup, you'll also have to ensure that your graphics card supports multiple monitors in the first place.

HDMI and DisplayPort are the newer types of connections for monitors, while DVI and VGA are older. There are many benefits to these newer cable types, two of which are immediately pertinent: newer cable types offer better image display, and DisplayPorts function as the Swiss army knife of cables by adapting to all the connection types mentioned.

Monitors and displays connect to the PC via its graphics card, otherwise known as a GPU. The GPU handles the graphics processing capabilities of a PC, so you'll naturally have to connect your monitor to your GPU component. Ensure you're connecting your display to the primary graphics card used in your PC, often a dedicated (discrete) GPU, and not the default, integrated graphics ports.

If you have multiple monitors that use DisplayPort but only have one space in your graphics card for that type of connection, you'll have to use a DisplayPort hub with multi-stream transport. The hub will connect to your only available DisplayPort port and allow you to connect as many as three monitors to it. Or you could opt for a monitor with daisy-chain capabilities.

You should see your second display within the settings image. Windows 10 conveniently provides this interface, so users can easily configure multiple displays.

The Display window allows for both X and Y coordinates, meaning monitors don't have to be placed directly beside one another to function. If you're confused about which display your PC refers to, click the Identify button to view which monitor is which.
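One way to picture the X and Y arrangement is as a set of rectangles on a shared desktop. The sketch below is purely illustrative: the dictionary layout, the positions and the helper names `desktop_bounds` and `monitor_at` are assumptions made for the example and are not read from Windows.

```python
def desktop_bounds(monitors):
    """Return (left, top, width, height) of the box enclosing all monitors."""
    left = min(m["x"] for m in monitors)
    top = min(m["y"] for m in monitors)
    right = max(m["x"] + m["w"] for m in monitors)
    bottom = max(m["y"] + m["h"] for m in monitors)
    return left, top, right - left, bottom - top


def monitor_at(monitors, px, py):
    """Return the id of the monitor containing the point, or None for dead space."""
    for m in monitors:
        if m["x"] <= px < m["x"] + m["w"] and m["y"] <= py < m["y"] + m["h"]:
            return m["id"]
    return None


# Monitor 2 sits to the right of monitor 1 and 300 pixels higher (assumed layout).
layout = [
    {"id": 1, "x": 0, "y": 0, "w": 1920, "h": 1080},
    {"id": 2, "x": 1920, "y": -300, "w": 1920, "h": 1200},
]

bounds = desktop_bounds(layout)
```

Because the two rectangles are offset vertically, part of the enclosing box belongs to neither monitor, so `monitor_at(layout, 100, -100)` returns `None` while `monitor_at(layout, 2000, -100)` returns `2`.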

For one, Windows 10 will sometimes display your monitor at a smaller resolution than native to the display. Set your Resolution to the Recommended setting (or higher).

What if you have two PCs with different operating systems and would like to use both of them at the same time? It sounds impossible unless you've heard of Synergy. Synergy is a mouse and keyboard sharing application that allows users to use any combination of Mac, Windows, or Linux PCs simultaneously, seamlessly, with one keyboard and mouse combination.

Synergy is impressive, even for nerds like myself. Setting up a new Linux distro while using your regular PC? You can do that. Have an office setup that uses both Mac and Windows but don't want to spend your time unplugging the mouse and keyboard from one to use the other? Are you the office tech guy and constantly have to correct a coworker's mistakes, but hate walking over to their desk? Synergy does all of this and more.

As trivial as it may sound, part of the fun of a dual or multi-monitor setup is using multiple backgrounds. No longer are you tied down to a stale, single background. Better yet, it's readily available to do in Windows 10!

To use different backgrounds on multiple monitors, open your Background settings window again. Once your window is open, scroll down until you see the Browse button under the Choose your picture category. Click the Browse button and select the image you want to use as a background. Do this for as many backgrounds as you'd like to have.

Once you have your backgrounds slotted, right-click on each thumbnail image. You should see a selection labeled Set for all monitors or Set for monitor X. Select whichever one you'd like.

That's it! While there are third-party applications out there that also allow for a multiple background setup, the easiest and fastest way to get it done is by default. Below is an example of two reflected wallpapers on a dual-monitor setup.

Finally, head to your background settings again and Browse for your wide image. Then, under the Choose a fit option, select Span. That's it! Now you know how one background spanning multiple monitors looks.

This step is an extension of the step we just discussed above. Like wallpapers, you can also combine entire displays so that a window is maximized across all the monitors. While a widescreen might not increase productivity at work, it can be great for gaming or even watching movies.

The steps of doing so can vary depending on the graphics card you have installed on your PC. Our guide to maximizing your window across different monitors covers steps to take for different graphics cards, so make sure you check it out for a great experience.

Now that you know how to configure multiple wallpapers, the natural next step is obvious: video. Setting up a video wallpaper on one or both of your monitors is now a breeze using this well-recommended software from the Steam store: Wallpaper Engine. This, however, is a paid tool.

To get multiple videos on multiple monitors, open Wallpaper Engine. You can open the program after you've launched it on Steam by locating its taskbar icon, right-clicking on it, and selecting Change Wallpaper.

Once you've opened the software, select a monitor (all of which should appear in the software) and select either Change Wallpaper or Remove Wallpaper. You can also extend a single video to span your monitors via the Layout option in this window as well. After you've chosen a display, select Change Wallpaper. In this window, switch to the Workshop tab. This is where you'll download your video background.

Close Wallpaper Engine and restart the process for as many monitors as you'd like. That's it! You now have stunning, crisp video wallpapers on every monitor at your disposal.

Rainmeter is our favorite Windows desktop customization tool. It allows users to create a simple or complex multi-monitor setup easily. If you are completely unaware of Rainmeter's potential, head to the article link below to get up to speed.

Dual monitor setups remind me of solid-state drives. Before users own one, they seem frivolous. After they own one, they become indispensable. Maybe you want to be more productive, or maybe you have a flair for a dramatic PC setup.

With the revival of the “Start Menu” from Windows 8 to Windows 10, this user-friendly desktop UI (user interface) realizes a more operable multi-display function. Let’s take a look at how we can use this multi-display tool on a Windows 10 notebook or desktop PC.

On July 29 2015, Microsoft released its new operating system “Windows 10.” Devices equipped with Windows 7 or Windows 8.1 were given one year to upgrade to Windows 10 for free, and so the migration to the new OS (operating system) Windows 10 is happening much faster than previous Windows operating systems. Even corporate users who emphasize stability over innovation will proceed to slowly migrate towards Windows 10 when Windows 7 support ends in 2020.

When using Windows 10 in your notebook PC or desktop computer you’ll notice one major change – the revival of the desktop UI. This UI was revived after the complete removal of the Start Menu in Windows 8/8.1 - previously present in Windows 7 and earlier - was met with mixed reactions. The latest UI has become much easier to use, with the modern UI “tile format” being integrated with a virtual desktop feature in order to enhance multitasking and workability.

With the new focus on the desktop UI, Windows 10 has naturally improved the display settings. For example, the “multi-display” function (multiple displays connected to one’s PC for simultaneous use) has been greatly improved. Let’s go through some of these surprisingly little-known Windows 10 multi-display functions found on both notebook PCs and desktop PCs.

Example: The expanded display of two EIZO FlexScan EV2455 monitors connected to a desktop PC. Aligning two 24.1" WUXGA (1920 x 1200 pixels) monitors side by side achieves a combined resolution of 3840 x 1200 pixels.
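The arithmetic behind that combined figure can be sketched in a couple of lines. The helper name `combined_resolution` is made up for this example, and it assumes identical monitors arranged in a simple grid with no gaps.

```python
def combined_resolution(width, height, columns=1, rows=1):
    """Total pixel dimensions of identical monitors arranged in a grid."""
    return width * columns, height * rows


# Two 1920 x 1200 (WUXGA) monitors side by side, as in the example above.
side_by_side = combined_resolution(1920, 1200, columns=2)
```

Here `side_by_side` comes out as `(3840, 1200)`: the widths add up while the height stays that of a single panel.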

Setting up a multi-display environment on Windows 10 is incredibly simple. When you connect a second display to your PC, Windows automatically detects the display and displays the desktop UI.

In this case we opened the multi-display function from the desktop UI by selecting the OS “Project” menu. From the taskbar, click on the Action Center (bottom right of screen) and select “Project,” or if you want to use the shortcut keys, press the Windows key and P key and the “Project” menu will appear. There are four types of display methods that can be chosen. If you want to expand the desktop UI over two screens, select the “Extend” option.
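For anyone who wants to script the same choice, the sketch below builds a command line for `DisplaySwitch.exe`, the utility that sits behind the “Project” menu. The four switches shown are those commonly used on Windows 10 and should be treated as an assumption to verify on your own system, since they are not taken from this article; the mode names and the helper functions are invented for the example.

```python
import subprocess
import sys

# Assumed mapping between the four "Project" choices and DisplaySwitch.exe switches.
PROJECT_MODES = {
    "pc_screen_only": "/internal",
    "duplicate": "/clone",
    "extend": "/extend",
    "second_screen_only": "/external",
}


def project_command(mode):
    """Build the command line for a given mode without running it."""
    return ["DisplaySwitch.exe", PROJECT_MODES[mode]]


def apply_project_mode(mode):
    """Run the command; only meaningful on a Windows machine."""
    if sys.platform != "win32":
        raise RuntimeError("DisplaySwitch.exe is only available on Windows")
    subprocess.run(project_command(mode), check=True)
```

Keeping `project_command` separate from `apply_project_mode` means the mapping can be inspected or logged before anything changes on screen.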

Find the “Action Center” icon in the lower right taskbar, and click on the “Project” icon (left image). In the “Project” menu, out of the four options, choose how you want to display your monitors (right image)

From here the two screens’ position relative to each other, display size such as text (expansion rate), display orientation, the previous four display method settings, and the main / sub-display monitor settings can be changed. Additionally, if you cannot see your connected display, click on “detect” to try to find the display (if this doesn’t work we recommend reconnecting the cable and/or restarting your PC).

In the enclosed grey squares [1] and [2], the position of the two monitors relative to each other is displayed. It’s best to drag these two squares to suit the actual position of your monitors.

In the “System > Display” menu the screen position, display size (enlargement ratio), display orientation, display method of the multi-display, and main/sub display can be set.

If you scroll down to the bottom of the “Display” menu there is an “advanced display settings” link. If you click on this, you can set the resolutions of the display monitors. Additionally, if you click on the “Advanced sizing of text and other items” link, you can change the settings for more detailed things like the size of items and text.

As shown above, Windows 10 has a new settings application installed which we recommend you use. But you can also use the “control panel” found in Windows 8 and earlier. To any familiar PC user, the conventional method of using the control panel to display various settings is still possible.

In Windows 10, the Snap Assist function that sticks the window to the edge of the screen is available, and even more convenient. If you drag the window to the left or right of the screen, the window will expand to fill half of the screen. This is also possible in the extended desktop function where two windows can be placed onto the left and right sides of each monitor, making a total of four open windows. This can also be accomplished with the shortcut keys Windows + left or right arrow.

After snapping the window to either the left or right using Snap Assist, the vacant area on the opposite side will list all other available windows that can be selected to fit that space. This is also a new feature of Windows 10.

In Windows 10, after a window has been snapped to either the left or right side using the snap function, the empty area in the opposite side will display all other available windows as thumbnails. Choose one of these windows and it will fill that side of the screen.

Furthermore in Windows 10, if a window is moved to one of the four corners of the screen, it will shrink to 1/4 the size of the screen, so that four windows can be displayed at once. Additionally, in a multi-display environment, if you are displaying too many windows and your desktop has become messy, click and drag the window you want to view and quickly shake it to minimize all other windows. You can also press Windows and Home.
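The half and quarter sizes described above are easy to work out by hand. This sketch assumes the whole screen area is available (it ignores the taskbar) and uses a made-up helper, `snap_rect`, with hypothetical position names; it is not how Windows itself computes the rectangles.

```python
def snap_rect(screen_w, screen_h, position, origin_x=0, origin_y=0):
    """Return (x, y, width, height) of a snapped window on one monitor."""
    half_w, half_h = screen_w // 2, screen_h // 2
    rects = {
        "left": (0, 0, half_w, screen_h),
        "right": (half_w, 0, screen_w - half_w, screen_h),
        "top_left": (0, 0, half_w, half_h),
        "top_right": (half_w, 0, screen_w - half_w, half_h),
        "bottom_left": (0, half_h, half_w, screen_h - half_h),
        "bottom_right": (half_w, half_h, screen_w - half_w, screen_h - half_h),
    }
    x, y, w, h = rects[position]
    # origin_x / origin_y place the rectangle on a monitor elsewhere on the desktop.
    return origin_x + x, origin_y + y, w, h


# Four half-width windows across two side-by-side 1920 x 1200 monitors.
four_windows = [
    snap_rect(1920, 1200, "left"),
    snap_rect(1920, 1200, "right"),
    snap_rect(1920, 1200, "left", origin_x=1920),
    snap_rect(1920, 1200, "right", origin_x=1920),
]
```

Each of the four windows ends up 960 pixels wide and the full 1200 pixels tall, and a corner snap such as `snap_rect(1920, 1200, "top_right")` gives a 960 x 600 quarter.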

The above image shows the difference between the “All taskbars,” “Main taskbar and taskbar where window is open,” and “Taskbar where window is open” settings. The Windows 10 voice-enabled personal assistant “Cortana,” time icons and the notification area will always display on the first monitor.
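The three settings can be summarised as a small rule. The function below is only a model of the behaviour described here, with invented mode names and monitor numbers; it does not query or change any real taskbar setting.

```python
def taskbars_showing(mode, window_monitor, monitors, main):
    """Return the set of monitors whose taskbar shows a window's button."""
    if mode == "all_taskbars":
        return set(monitors)
    if mode == "main_and_where_open":
        return {main, window_monitor}
    if mode == "where_open":
        return {window_monitor}
    raise ValueError(f"unknown taskbar mode: {mode}")


monitors = [1, 2, 3]
# A window open on monitor 2, with monitor 1 as the main display.
shown = taskbars_showing("main_and_where_open", 2, monitors, main=1)
```

With three monitors, the same window would appear on all three taskbars under the first setting, on monitors 1 and 2 under the second, and only on monitor 2 under the third.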

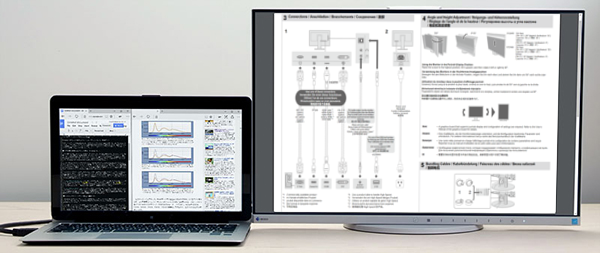

If you connect an external display to a notebook PC, being able to create a large-screen, high resolution dual-display environment can significantly improve one’s work efficiency. These days products with high density pixel displays larger than full HD are becoming more common, but if a notebook PC with a screen size of 13 or 14 inches is displayed on one of these high resolution displays, the screen will end up shrinking so that it’s difficult to read, and so it has to be enlarged by 150% or 200%. Therefore it’s not that resolution = workspace, but rather that your workspace is limited to the size of your screen.

But an external display with a mainstream 23 – 24" full HD (1920 x 1080 pixels) or WUXGA (1920 x 1200 pixels) model, connected to a notebook PC, will display in a similar size to the notebook PC making it familiar to the user, and providing a lot of work space.

For example you could do things like compare multiple pages at once in a web browser; create a graph on a spreadsheet and paste it into a presentation while reading a PDF document; do work on one screen and watch videos or view a social media timeline on the other; play a game on one screen while reading a walk-through on the other, or use an external color management monitor to check for correct colors. Using an external monitor in addition to your notebook PC allows all of these things to be done seamlessly without having to switch between windows.

Example: An EIZO 24.1 inch WUXGA display (FlexScan EV2455) connected to a high-spec 2in1 VAIO Z notebook PC (from here on the examples will display the same set-up). The VAIO Z notebook display has a high definition resolution of 2560 x 1440 pixels, but because the screen is only a “mobile” 13.3 inches, on Windows it is expanded to 200%. Adding this to the FlexScan EV2455’s 24.1 inch 1920 x 1200 pixel display gives a vast area of work space. Of course, because the FlexScan EV2455 has a large screen, its 1920 x 1200 pixels can be displayed at 100% without any enlargement. This makes for comfortable browsing of multiple web pages as shown.
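The point that resolution is not the same as workspace can be checked with a quick calculation. This sketch assumes that scaling simply divides the usable layout area by the scale factor; `effective_workspace` is a name invented for the example.

```python
def effective_workspace(width_px, height_px, scale_percent):
    """Approximate layout area available once display scaling is applied."""
    return width_px * 100 // scale_percent, height_px * 100 // scale_percent


notebook = effective_workspace(2560, 1440, 200)   # 13.3-inch panel at 200%
external = effective_workspace(1920, 1200, 100)   # 24.1-inch panel at 100%
```

Under that assumption the notebook offers a working area of only 1280 x 720, while the external monitor offers its full 1920 x 1200, even though the notebook panel has more physical pixels.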

Example: On a large external display, you can watch an online video while searching for relevant information on your notebook. Of course you can surf the internet on anything, but the large external screen is perfect for enjoying video content.

A word of advice when choosing a monitor to connect to your notebook PC, in a dual display environment — having the two taskbars at the bottom of the screen be uniform makes it easier to use, but a notebook PC’s height cannot be adjusted, so choosing a product that can be easily adjusted is desirable. Furthermore, because a notebook’s display is situated at a fairly low height, an external monitor that can be lowered to the table surface is better.

On the other hand, if you have an external monitor that can be raised quite high, it can be situated on top of the notebook – achieving an extended workspace on a narrow desk. Additionally, if you have an external monitor that is capable of rotating to a vertical (portrait) position, you can take advantage of the long screen by using it for web pages, SNS timelines, and reading documents.

If an LCD display’s height adjustment range is wide, you can create a vertical multi-display environment like this, reducing the required width of your working space. The image gives the example of a VAIO Z and FlexScan EV2455, but if you tilt the screen of the VAIO Z, the FlexScan EV2455 can be made to not overlap as shown; naturally creating two screens.

In our examples we used the EIZO 24.1-inch WUXGA display FlexScan EV2455 because it is a monitor with a height adjustment range of 131 mm and the ability to be vertically rotated, so it can be easily combined with a notebook PC. Additionally, because of the narrow “frameless” design, the black border and bezel (i.e. noise) is minimized as much as possible. It’s easy to appreciate how the visual transition from one screen to the other becomes naturally gentler on the eyes. This monitor will also suit any photo-retouching and content creation by correctly displaying the sRGB color gamut; i.e. displaying colors the same as those found in most notebook PCs.

It should be noted that in Windows 10, the “tablet mode” cannot be used in a multi-display environment. In Windows 8/8.1 a notebook PC could display the modern UI start screen while an external display could display the desktop UI, but in Windows 10 the multi-display environment is restricted to only using the desktop UI. This is one of the revived functions that were found to be most useful in Windows 7.

Because there are no screen size or resolution restrictions like in a notebook PC, the desktop multi-display environment can use a flexible combination of screen sizes and resolutions according to your location, budget or application. If so inclined, using the previous EIZO monitor, a resolution of 5760 x 1080 pixels could be made from 3 monitors, 5760 x 2160 pixels from 6 monitors, and many more variations can be made.

Of course even a non-high-spec environment can find improvement in their work efficiency by using two mainstream 23 – 24 inch Full HD (1920 x 1080 pixels)/WUXGA (1920 x 1200 pixels) monitors, compared to just the one monitor.

An example of how a multi-display environment can be used in the business scene. The left display can display tables and calculations of statistical data, while comparing the graphs, and the right screen can be used to summarize the findings in a document. If this were just one monitor, you would be constantly switching between windows, but with two monitors you can see all the necessary data without needing to switch between windows; improving work efficiency and reducing transcribing errors.

An example of how map-based services can be used. On just one screen, the display range of a map is quite narrow, but with two screens, a map, aerial photo, information about the location, and photos from the location can all be displayed at the same time. You can take advantage of the realism of the large screen by doing virtual tours of tourist destinations.

An example of how the multi-display environment can help with photo re-touching. Rotating one monitor to the vertical position can help with retouching portrait photos, or editing long documents and websites. If you want to take advantage of a vertical screen, you need to choose a monitor that can b

Ms.Josey
