
The theoretical sketch of the wave-particle scheme for the single photon is displayed in Fig. 1. A photon is initially prepared in a polarization state \(\left| {{\psi _0}} \right\rangle = {\rm{cos}}\,\alpha \left| {\rm V} \right\rangle + {\rm{sin}}\,\alpha \left| {\rm{H}} \right\rangle\), where \(\left| {\rm V} \right\rangle\) and \(\left| {\rm{H}} \right\rangle\) are the vertical and horizontal polarization states and α is adjustable by a preparation half-wave plate (not shown in the figure). After crossing the preparation part of the setup of Fig. 1 (see Supplementary Notes 1 and 2 and Supplementary Fig. 1 for details), the photon state is

$$\left| {{\psi _{\rm{f}}}} \right\rangle = {\rm{cos}}\,\alpha \left| {{\rm{wave}}} \right\rangle + {\rm{sin}}\,\alpha \left| {{\rm{particle}}} \right\rangle ,$$

$$\begin{array}{l} \left| {\rm{wave}} \right\rangle = e^{i\phi _1/2}\left( \cos \frac{\phi _1}{2}\left| 1 \right\rangle - i\,\sin \frac{\phi _1}{2}\left| 3 \right\rangle \right),\\ \left| {\rm{particle}} \right\rangle = \frac{1}{\sqrt 2 }\left( \left| 2 \right\rangle + e^{i\phi _2}\left| 4 \right\rangle \right), \end{array}$$

where \(\left| {{\rm{wave}}} \right\rangle\) and \(\left| {{\rm{particle}}} \right\rangle\) operationally represent, respectively, the capacity and the incapacity of the photon to produce interference. In fact, for the \(\left| {{\rm{wave}}} \right\rangle\) state the probability of detecting the photon in the path \(\left| n \right\rangle\) (n = 1, 3) depends on the phase \(\phi_1\): the photon must have traveled along both paths simultaneously (see upper MZI in Fig. 1), revealing its wave behavior. Instead, for the \(\left| {{\rm{particle}}} \right\rangle\) state the probability of detecting the photon in the path \(\left| n \right\rangle\) (n = 2, 4) is 1/2, regardless of the phase \(\phi_2\): thus, the photon must have crossed only one of the two paths (see lower MZI of Fig. 1), showing its particle behavior. Notice that the scheme is designed in such a way that \(\left| {\rm V} \right\rangle\) is mapped onto \(\left| {{\rm{wave}}} \right\rangle\) and \(\left| {\rm{H}} \right\rangle\) onto \(\left| {{\rm{particle}}} \right\rangle\), as can be seen by comparing \(\left| {{\psi _0}} \right\rangle\) with Eq. (1).
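A quick numerical reading of Eqs. (1) and (2) may help. The Python sketch below (the helper names `psi_f` and `path_probabilities` are illustrative, not taken from the paper) writes \(\left| {{\psi _{\rm{f}}}} \right\rangle\) as four complex amplitudes over the paths \(\left| 1 \right\rangle, \dots, \left| 4 \right\rangle\) and evaluates the probability of finding the photon in each path when the paths are observed directly, without BS4 and BS5.

```python
import cmath
import math


def psi_f(alpha, phi1, phi2):
    """Path amplitudes [a1, a2, a3, a4] of |psi_f> = cos(alpha)|wave> + sin(alpha)|particle>.

    Hypothetical helper; the component amplitudes follow Eq. (2).
    """
    e = cmath.exp(1j * phi1 / 2)
    return [
        math.cos(alpha) * e * math.cos(phi1 / 2),               # |1>, wave part
        math.sin(alpha) / math.sqrt(2),                          # |2>, particle part
        -1j * math.cos(alpha) * e * math.sin(phi1 / 2),          # |3>, wave part
        math.sin(alpha) * cmath.exp(1j * phi2) / math.sqrt(2),   # |4>, particle part
    ]


def path_probabilities(amps):
    """Probability of finding the photon in each path |n>, n = 1..4."""
    return [abs(a) ** 2 for a in amps]


# Pure |wave> (alpha = 0): paths 1 and 3 carry cos^2(phi1/2) and sin^2(phi1/2).
print(path_probabilities(psi_f(0.0, math.pi / 3, 1.0)))
# Pure |particle> (alpha = pi/2): paths 2 and 4 each carry 1/2, whatever phi2 is.
print(path_probabilities(psi_f(math.pi / 2, math.pi / 3, 1.0)))
```

Changing `phi1` moves weight between paths 1 and 3 only, while changing `phi2` leaves every path probability untouched, which is the operational distinction between the two components.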

Fig. 1: Conceptual figure of the wave-particle toolbox. A single photon is coherently separated into two spatial modes by means of a polarizing beam-splitter (PBS) according to its initial polarization state (in). A half-wave plate (HWP) is placed after the PBS to obtain equal polarizations in the two modes. One mode is injected into a complete Mach-Zehnder interferometer (MZI) with phase \(\phi_1\), thus exhibiting wave-like behavior. The second mode is injected into a Mach-Zehnder interferometer lacking the second beam-splitter, thus exhibiting particle-like behavior (no dependence on \(\phi_2\)).

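To verify the coherent wave-particle superposition as a function of the parameter α, the wave and particle states have to interfere at the detection level. This goal is achieved by exploiting two symmetric beam-splitters (BS4 and BS5) in which the output paths (modes) are recombined, as illustrated in the detection part of Fig. 1. The probabilities \(P_n\) of detecting the photon at the detectors D\(_n\) (n = 1, 2, 3, 4) are given by Eqs. (3) and (4), and contain the interference terms \({{\cal I}_{\rm{c}}}\) and \({{\cal I}_{\rm{s}}}\).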

We remark that the terms \({{\cal I}_{\rm{c}}}\), \({{\cal I}_{\rm{s}}}\) in the detection probabilities stem exclusively from the interference between the \(\left| {{\rm{wave}}} \right\rangle\) and \(\left| {{\rm{particle}}} \right\rangle\) components of the generated superposition state \(\left| {{\psi _{\rm{f}}}} \right\rangle\) of Eq. (1). This is further evidenced by the appearance, in these interference terms, of the factor \({\cal C} = {\rm{sin}}\,2\alpha\), which is the amount of quantum coherence of \(\left| {{\psi _{\rm{f}}}} \right\rangle\) in the basis {\(\left| {{\rm{wave}}} \right\rangle\), \(\left| {{\rm{particle}}} \right\rangle\)}, theoretically quantified by the standard \(l_1\)-norm of coherence. Consequently, \({{\cal I}_{\rm{c}}}\) and \({{\cal I}_{\rm{s}}}\) are identically zero (independently of the phase values) when the final state of the photon is: (i) \(\left| {{\rm{wave}}} \right\rangle\) (α = 0); (ii) \(\left| {{\rm{particle}}} \right\rangle\) (α = π/2); (iii) a classical incoherent mixture \({\rho _{\rm{f}}} = {\rm{co}}{{\rm{s}}^2}\alpha \left| {{\rm{wave}}} \right\rangle \left\langle {{\rm{wave}}} \right| + {\rm{si}}{{\rm{n}}^2}\alpha \left| {{\rm{particle}}} \right\rangle \left\langle {{\rm{particle}}} \right|\) (which can be theoretically produced by the same scheme starting from an initial mixed polarization state of the photon).
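The \(l_1\)-norm of coherence is the sum of the magnitudes of the off-diagonal elements of the density matrix in the chosen basis. A minimal Python sketch (function names hypothetical) that evaluates it in the {\(\left| {{\rm{wave}}} \right\rangle\), \(\left| {{\rm{particle}}} \right\rangle\)} basis shows that it equals \(\sin 2\alpha\) for \(\left| {{\psi _{\rm{f}}}} \right\rangle\) with 0 ≤ α ≤ π/2, and vanishes for the incoherent mixture \(\rho_{\rm f}\) at every α.

```python
import math


def l1_coherence(rho):
    """l1-norm of coherence: sum of |rho_ij| over all off-diagonal entries."""
    n = len(rho)
    return sum(abs(rho[i][j]) for i in range(n) for j in range(n) if i != j)


def rho_coherent(alpha):
    """|psi_f><psi_f| written in the {|wave>, |particle>} basis (Eq. 1)."""
    c, s = math.cos(alpha), math.sin(alpha)
    return [[c * c, c * s], [s * c, s * s]]


def rho_incoherent(alpha):
    """Classical mixture cos^2(alpha)|wave><wave| + sin^2(alpha)|particle><particle|."""
    c, s = math.cos(alpha), math.sin(alpha)
    return [[c * c, 0.0], [0.0, s * s]]


for alpha in (0.0, math.pi / 8, math.pi / 4, math.pi / 2):
    print(alpha, l1_coherence(rho_coherent(alpha)), math.sin(2 * alpha),
          l1_coherence(rho_incoherent(alpha)))
```

Both density matrices have the same diagonal, so any single-basis population measurement cannot tell them apart; only the off-diagonal terms, and hence the interference terms, do.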

The experimental single-photon toolbox, realizing the proposed scheme of Fig. 1, is displayed in Fig. 2 (see Methods for more details). The implemented layout has the advantage of being interferometrically stable, so it does not require active phase stabilization between the modes. Figure 3 shows the experimental results for the measured single-photon probabilities \(P_n\) as a function of \(\phi_1\), for different values of α. For α = 0, the photon is vertically polarized and entirely reflected by the PBS into path 1, then split at BS1 into two paths, both leading to the same BS3, which allows these two paths to interfere with each other before detection. The photon detection probability at each detector D\(_n\) (n = 1, 2, 3, 4) depends on the phase shift \(\phi_1\): \({P_1}\left( {\alpha = 0} \right) = {P_2}\left( {\alpha = 0} \right) = \frac{1}{2}{\rm{co}}{{\rm{s}}^2}\frac{{{\phi _1}}}{2}\), \({P_3}\left( {\alpha = 0} \right) = {P_4}\left( {\alpha = 0} \right) = \frac{1}{2}{\rm{si}}{{\rm{n}}^2}\frac{{{\phi _1}}}{2}\), as expected from Eqs. (3) and (4). After many such runs an interference pattern emerges, exhibiting the wave-like nature of the photon. By contrast, if initially α = π/2, the photon is horizontally polarized and, as a whole, transmitted by the PBS into path 2, then split at BS2 into two paths (leading, respectively, to BS4 and BS5) which do not interfere anywhere. Hence, the phase shift \(\phi_2\) plays no role in the photon detection probability and each detector has an equal chance to click: \({P_1}\left( {\alpha = \frac{\pi }{2}} \right) = {P_2}\left( {\alpha = \frac{\pi }{2}} \right) = {P_3}\left( {\alpha = \frac{\pi }{2}} \right) = {P_4}\left( {\alpha = \frac{\pi }{2}} \right) = \frac{1}{4}\), as predicted by Eqs. (3) and (4), showing particle-like behavior without any interference pattern. Interestingly, for 0 < α < π/2, the photon simultaneously behaves like a wave and a particle. The coherent, continuous morphing transition from wave to particle behavior as α varies from 0 to π/2 is clearly seen in Fig. 4a, and contrasts with the morphing observed for a mixed incoherent wave-particle state \(\rho_{\rm f}\).
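The detection stage can be sketched numerically as follows. This is a minimal model, not the authors' code: it assumes that BS4 and BS5 act as ideal real 50:50 beam-splitters on the mode pairs (1, 2) and (3, 4), with a sign convention chosen so that the detector outputs are eigenstates of \(\sigma _x^{1234}\) with \(\left\langle {\sigma _x^{1234}} \right\rangle = P_1 - P_2 + P_3 - P_4\). Under that assumption it reproduces the limiting cases quoted above: \(\frac{1}{2}\cos^2(\phi_1/2)\) and \(\frac{1}{2}\sin^2(\phi_1/2)\) for α = 0, and 1/4 at every detector for α = π/2. The function names are hypothetical.

```python
import cmath
import math


def psi_f(alpha, phi1, phi2):
    """Path amplitudes [a1, a2, a3, a4] of |psi_f>, from Eqs. (1)-(2)."""
    e = cmath.exp(1j * phi1 / 2)
    return [
        math.cos(alpha) * e * math.cos(phi1 / 2),
        math.sin(alpha) / math.sqrt(2),
        -1j * math.cos(alpha) * e * math.sin(phi1 / 2),
        math.sin(alpha) * cmath.exp(1j * phi2) / math.sqrt(2),
    ]


def detection_probabilities(amps):
    """P_1..P_4 with BS4 and BS5 inserted.

    Assumed model: BS4 and BS5 are real 50:50 beam-splitters on the mode pairs
    (1, 2) and (3, 4), sending (a + b)/sqrt(2) to the odd-numbered detector
    and (a - b)/sqrt(2) to the even-numbered one.
    """
    a1, a2, a3, a4 = amps
    r = 1 / math.sqrt(2)
    outputs = [r * (a1 + a2), r * (a1 - a2), r * (a3 + a4), r * (a3 - a4)]
    return [abs(o) ** 2 for o in outputs]


# Scan phi1 at alpha = pi/4: wave-like fringes on top of a particle-like offset.
for k in range(5):
    phi1 = k * math.pi / 2
    probs = detection_probabilities(psi_f(math.pi / 4, phi1, 0.0))
    print(round(phi1, 3), [round(p, 3) for p in probs])
```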

When \(\phi_2 = 0\), the coherence of the generated state is also directly quantified by measuring the expectation value of an observable \(\sigma _x^{1234}\), defined in the four-dimensional basis of the photon paths \(\left\{ {\left| 1 \right\rangle ,\left| 2 \right\rangle ,\left| 3 \right\rangle ,\left| 4 \right\rangle } \right\}\) of the preparation part of the setup as a Pauli matrix \(\sigma_x\) between modes (1, 2) and between modes (3, 4). It is then straightforward to show that \(\left\langle {\sigma _x^{1234}} \right\rangle = {\rm{Tr}}\left( {\sigma _x^{1234}{\rho _{\rm{f}}}} \right) = 0\) for any incoherent state \(\rho_{\rm f}\), while \(\sqrt 2 \left\langle {\sigma _x^{1234}} \right\rangle = {\rm{sin}}\,2\alpha = {\cal C}\) for an arbitrary state of the form \(\left| {{\psi _{\rm{f}}}} \right\rangle\) defined in Eq. (1). Insertion of beam-splitters BS4 and BS5 in the detection part of the setup (corresponding to β = 22.5° in the output wave-plate of Fig. 2) rotates the initial basis \(\left\{ {\left| 1 \right\rangle ,\left| 2 \right\rangle ,\left| 3 \right\rangle ,\left| 4 \right\rangle } \right\}\) into a measurement basis of eigenstates of \(\sigma _x^{1234}\), whose expectation value is therefore obtained from the detection probabilities as \(\left\langle {\sigma _x^{1234}} \right\rangle = {P_1} - {P_2} + {P_3} - {P_4}\) (see Supplementary Note 2). As shown in Fig. 4c, d, the observed behavior of \(\sqrt 2 \left\langle {\sigma _x^{1234}} \right\rangle\) as a function of α confirms the theoretical predictions for both coherent \(\left| {{\psi _{\rm{f}}}} \right\rangle\) (Fig. 4c) and mixed (incoherent) \(\rho_{\rm f}\) wave-particle states (Fig. 4d). In the experiment, the latter are obtained by adding a relative time delay between the interferometer paths that is larger than the photon coherence time, which suppresses quantum interference.
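The relations between \(\sigma _x^{1234}\), the coherence \({\cal C}\), and the count-based estimator can be checked with a minimal sketch. It models BS4 and BS5 as ideal real 50:50 splitters on modes (1, 2) and (3, 4) (an assumption, not a detail given in the text), computes \(\left\langle {\sigma _x^{1234}} \right\rangle\) directly from the path amplitudes, and treats the incoherent mixture as the weighted sum of the pure-state values, since an expectation value is linear in \(\rho\). All function names are hypothetical.

```python
import cmath
import math


def psi_f(alpha, phi1, phi2):
    """Path amplitudes [a1, a2, a3, a4] of |psi_f>, from Eqs. (1)-(2)."""
    e = cmath.exp(1j * phi1 / 2)
    return [
        math.cos(alpha) * e * math.cos(phi1 / 2),
        math.sin(alpha) / math.sqrt(2),
        -1j * math.cos(alpha) * e * math.sin(phi1 / 2),
        math.sin(alpha) * cmath.exp(1j * phi2) / math.sqrt(2),
    ]


def detection_probabilities(amps):
    """P_1..P_4, assuming real 50:50 splitters on modes (1, 2) and (3, 4)."""
    a1, a2, a3, a4 = amps
    r = 1 / math.sqrt(2)
    outputs = [r * (a1 + a2), r * (a1 - a2), r * (a3 + a4), r * (a3 - a4)]
    return [abs(o) ** 2 for o in outputs]


def sigma_x_1234(amps):
    """<psi|sigma_x^{1234}|psi>: sigma_x acting within modes (1, 2) and within (3, 4)."""
    a1, a2, a3, a4 = amps
    return 2 * ((a1.conjugate() * a2).real + (a3.conjugate() * a4).real)


def sigma_x_from_counts(P):
    """Estimator quoted in the text: <sigma_x^{1234}> = P1 - P2 + P3 - P4."""
    return P[0] - P[1] + P[2] - P[3]


def sigma_x_mixture(alpha, phi1, phi2):
    """Incoherent mixture: weighted sum of the pure |wave> and |particle> values."""
    wave = sigma_x_1234(psi_f(0.0, phi1, phi2))
    particle = sigma_x_1234(psi_f(math.pi / 2, phi1, phi2))
    return math.cos(alpha) ** 2 * wave + math.sin(alpha) ** 2 * particle


alpha, phi1 = math.pi / 6, 1.4
amps = psi_f(alpha, phi1, 0.0)
print(math.sqrt(2) * sigma_x_1234(amps), math.sin(2 * alpha))
print(sigma_x_from_counts(detection_probabilities(amps)), sigma_x_mixture(alpha, phi1, 0.0))
```

With \(\phi_2 \ne 0\) the direct value acquires an extra factor depending on \(\phi_1\) and \(\phi_2\), which is why the text fixes \(\phi_2 = 0\) for this measurement.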

Fig. 2: Experimental setup for wave-particle states. a Overview of the apparatus for the generation of single-photon wave-particle superposition. A heralded single photon is prepared in an arbitrary linear polarization state through a half-wave plate rotated at an angle α/2 and injected into the wave-particle toolbox. b Overview of the apparatus for the generation of a two-photon wave-particle entangled state. Each photon of a polarization-entangled state is injected into an independent wave-particle toolbox to prepare the output state. c Actual implemented wave-particle toolbox, reproducing the action of the scheme shown in Fig. 1. Top subpanel: top view of the scheme, where red and purple lines represent optical paths lying in two vertical planes. Bottom subpanel: 3-d scheme of the apparatus. The interferometer is composed of beam-displacing prisms (BDP), half-wave plates (HWP), and liquid crystal devices (LC), the latter changing the phases \(\phi_1\) and \(\phi_2\). The output modes are finally separated by means of a polarizing beam-splitter (PBS). The scheme corresponds to the presence of BS4 and BS5 in Fig. 1 for β = 22.5°, while setting β = 0 is equivalent to the absence of BS4 and BS5. The same color code for the optical elements (reported in the figure legend) is employed for the top view and for the 3-d view of the apparatus. d Picture of the experimental apparatus. The green frame highlights the wave-particle toolbox.

Fig. 3: Single-photon probabilities \(P_n\) as a function of \(\phi_1\), for different values of α. a Wave behavior (α = 0). b Particle behavior (α = π/2). c Coherent wave-particle superposition (α = π/4). d Incoherent mixture of wave and particle behaviors (α = π/4). Points: experimental data. Dashed curves: best-fit of the experimental data. Color legend: orange (P1), green (P2), purple (P3), blue (P4).

Fig. 4: Single-photon probabilities \(P_n\) as a function of \(\phi_1\) and of the angle α. Points: experimental data. Surfaces: theoretical expectations. In a, black triangles highlight the position for wave behavior (α = 0), the black circle for particle behavior (α = π/2), and black diamonds for coherent wave-particle superposition behavior (α = π/4). c Coherence measure \(\sqrt 2 \left\langle {\sigma _x^{1234}} \right\rangle\) as a function of α in the coherent case and d for an incoherent mixture (the latter showing no dependence on α). Points: experimental data. Solid curves: theoretical expectations. In all plots, error bars are standard deviations due to the Poissonian statistics of single-photon counting.

The above single-photon scheme constitutes the basic toolbox which can be extended to create a wave-particle entangled state of two photons, as shown in Fig. 2b. Initially, a two-photon polarization maximally entangled state \({\left| \Psi \right\rangle _{{\rm{AB}}}} = \frac{1}{{\sqrt 2 }}\left( {\left| {{\rm VV}} \right\rangle + \left| {{\rm{HH}}} \right\rangle } \right)\) is prepared (the procedure works in general for arbitrary weights, see Supplementary Note 3). Each photon is then sent to one of two identical wave-particle toolboxes which provide the final state

$${\left| \Phi \right\rangle _{{\rm{AB}}}} = \frac{1}{{\sqrt 2 }}\left( {\left| {{\rm{wave}}} \right\rangle \left| {{\rm{wave}}^{\prime}} \right\rangle + \left| {{\rm{particle}}} \right\rangle \left| {{\rm{particle}}^{\prime}} \right\rangle } \right),$$

where the single-photon states \(\left| {{\rm{wave}}} \right\rangle\), \(\left| {{\rm{particle}}} \right\rangle\), \(\left| {{\rm{wave}}^{\prime}} \right\rangle\), \(\left| {{\rm{particle}}^{\prime}} \right\rangle\) are defined in Eq. (2), with the parameters and paths of the corresponding wave-particle toolbox. Using the standard concurrence \(C\) to quantify the amount of entanglement of this state in the two-photon wave-particle basis, one immediately finds \(C = 1\). The generated state \({\left| \Phi \right\rangle _{{\rm{AB}}}}\) is thus a wave-particle maximally entangled state (Bell state) of two photons in separate locations.
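For a pure two-photon state written as \(\sum_{ij} c_{ij}\left| i \right\rangle_{\rm A}\left| j \right\rangle_{\rm B}\), with \(\left| {{\rm{wave}}} \right\rangle\) and \(\left| {{\rm{particle}}} \right\rangle\) playing the role of the two orthogonal qubit states of each photon (they occupy disjoint paths), the concurrence reduces to the standard pure-state formula \(C = 2\left| c_{00}c_{11} - c_{01}c_{10} \right|\). The minimal sketch below (hypothetical function name) applies it to \({\left| \Phi \right\rangle _{{\rm{AB}}}}\) and to a product state. For unequal weights \(\cos\theta\left| {\rm wave} \right\rangle\left| {\rm wave}' \right\rangle + \sin\theta\left| {\rm particle} \right\rangle\left| {\rm particle}' \right\rangle\), the case covered by the "arbitrary weights" remark above, it gives \(\left|\sin 2\theta\right|\).

```python
import math


def concurrence_pure(c):
    """Concurrence C = 2|c00*c11 - c01*c10| of a pure two-qubit state.

    c[i][j] is the amplitude of |i>_A |j>_B, with index 0 = wave and 1 = particle.
    """
    return 2 * abs(c[0][0] * c[1][1] - c[0][1] * c[1][0])


r = 1 / math.sqrt(2)
bell = [[r, 0.0], [0.0, r]]          # |Phi>_AB of Eq. (5)
product = [[1.0, 0.0], [0.0, 0.0]]   # |wave> x |wave'>, a separable state
print(concurrence_pure(bell), concurrence_pure(product))
```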

The output two-photon state is measured after the two toolboxes. The results are shown in Fig. 5. Coincidences between the four outputs of each toolbox are measured by varying \(\phi_1\) and \(\phi _1^{\prime}\). The first set of measurements (Fig. 5a–d) is performed by setting the angles of the output wave-plates (see Fig. 2c) at {β = 0, β′ = 0}, corresponding to removing both BS4 and BS5 in Fig. 1 (no interference between single-photon wave and particle states). In this case, detectors placed at outputs (1, 3) and (1′, 3′) reveal wave-like behavior, while detectors placed at outputs (2, 4) and (2′, 4′) reveal particle-like behavior. As expected, the two-photon probabilities \({P_{n{n^{\prime}}}}\) for the particle detectors remain unchanged while varying \(\phi_1\) and \(\phi _1^{\prime}\), whereas the \({P_{n{n^{\prime}}}}\) for the wave detectors show interference fringes. Moreover, the crossed wave-particle coincidences \({P_{n{n^{\prime}}}}\) give no contribution, owing to the form of the entangled state. The second set of measurements (Fig. 5e–h) is performed by setting the angles of the output wave-plates at {β = 22.5°, β′ = 22.5°}, corresponding to the presence of BS4 and BS5 in Fig. 1 (interference between single-photon wave and particle states). We now observe nonzero contributions across all the probabilities, depending on the specific settings of the phases \(\phi_1\) and \(\phi _1^{\prime}\).

The presence of entanglement in the wave-particle behavior is also assessed by measuring the quantity \({\cal E} = {P_{2{2^{\prime}}}} - {P_{2{1^{\prime}}}}\) as a function of \(\phi_1\), with fixed \(\phi _1^{\prime} = {\phi _2} = \phi _2^{\prime} = 0\). According to the general expressions of the coincidence probabilities (see Supplementary Note 3), \({\cal E}\) is proportional to the concurrence \(C\) and is identically zero (independently of the phase values) if and only if the wave-particle two-photon state is separable (e.g., \(\left| {{\rm{wave}}} \right\rangle \otimes \left| {{\rm{wave}}^{\prime}} \right\rangle\) or a maximal mixture of two-photon wave and particle states). For \({\left| \Phi \right\rangle _{{\rm{AB}}}}\) of Eq. (5) the theoretical prediction is \({\cal E} = \left( {1{\rm{/}}4} \right){\rm{co}}{{\rm{s}}^{\rm{2}}}\left( {{\phi _1}{\rm{/}}2} \right)\), which is confirmed by the results reported in Fig. 5i, j (up to a reduction due to the finite visibility). A further test of the generated wave-particle entanglement is finally performed by directly measuring the expectation value \(\left\langle {\cal W} \right\rangle = {\rm{Tr}}\left( {{\cal W}\rho } \right)\) of a suitable entanglement witness \({\cal W}\), whose definition (see Supplementary Note 3) involves the observable \(\sigma _z^{1234}\), defined as a \(\sigma_z\) Pauli matrix between modes (1, 2) and between modes (3, 4). The measurement basis of \(\sigma _z^{1234}\) is that of the initial paths \(\left\{ {\left| 1 \right\rangle ,\left| 2 \right\rangle ,\left| 3 \right\rangle ,\left| 4 \right\rangle } \right\}\) exiting the preparation part of the single-photon toolbox. It is possible to show that \({\rm{Tr}}\left( {{\cal W}{\rho _{\rm{s}}}} \right) \ge 0\) for any two-photon separable state \(\rho_{\rm s}\), so that \({\rm{Tr}}\left( {{\cal W}{\rho _{\rm{e}}}} \right) < 0\) certifies that the state \(\rho_{\rm e}\) is entangled in the photons' wave-particle behavior (see Supplementary Note 3). The expectation values of \({\cal W}\) are measured in the experiment in terms of the 16 coincidence probabilities \({P_{n{n^{\prime}}}}\). These observations altogether prove the existence of quantum correlations between the wave and particle states of two photons in the entangled state \({\left| \Phi \right\rangle _{{\rm{AB}}}}\).
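The prediction \({\cal E} = (1/4)\cos^2(\phi_1/2)\) can be reproduced with a short simulation of two independent toolboxes acting on \({\left| \Phi \right\rangle _{{\rm{AB}}}}\) of Eq. (5). This is a sketch under stated assumptions, not the analysis of Supplementary Note 3: each output stage at β = 22.5° is modeled as ideal real 50:50 splitters on modes (1, 2) and (3, 4), losses and finite visibility are ignored, and all function names are hypothetical. With these assumptions, the simulated \(P_{22'} - P_{21'}\) matches the quoted expression for every \(\phi_1\).

```python
import cmath
import math

R = 1 / math.sqrt(2)


def wave_amps(phi1):
    """|wave> over preparation modes 1..4 (Eq. 2)."""
    e = cmath.exp(1j * phi1 / 2)
    return [e * math.cos(phi1 / 2), 0j, -1j * e * math.sin(phi1 / 2), 0j]


def particle_amps(phi2):
    """|particle> over preparation modes 1..4 (Eq. 2)."""
    return [0j, R + 0j, 0j, R * cmath.exp(1j * phi2)]


def detect(amps):
    """Detector amplitudes with BS4/BS5 inserted (beta = 22.5 deg).

    Assumed model: real 50:50 splitters on mode pairs (1, 2) and (3, 4).
    """
    a1, a2, a3, a4 = amps
    return [R * (a1 + a2), R * (a1 - a2), R * (a3 + a4), R * (a3 - a4)]


def coincidences(phi1, phi1p, phi2=0.0, phi2p=0.0):
    """P[n][m]: photon A at detector n+1, photon B at detector (m+1)', for Eq. (5)."""
    wa, pa = detect(wave_amps(phi1)), detect(particle_amps(phi2))
    wb, pb = detect(wave_amps(phi1p)), detect(particle_amps(phi2p))
    # Each toolbox acts locally, so every two-photon amplitude is the
    # superposition of products of single-photon amplitudes.
    return [[abs(R * (wa[n] * wb[m] + pa[n] * pb[m])) ** 2 for m in range(4)]
            for n in range(4)]


def entanglement_indicator(phi1):
    """E = P_{22'} - P_{21'} with phi1' = phi2 = phi2' = 0."""
    P = coincidences(phi1, 0.0)
    return P[1][1] - P[1][0]


for k in range(5):
    phi1 = k * math.pi / 4
    print(round(phi1, 3), round(entanglement_indicator(phi1), 4),
          round(0.25 * math.cos(phi1 / 2) ** 2, 4))
```

Replacing the state by the product \(\left| {\rm wave} \right\rangle \otimes \left| {\rm wave}' \right\rangle\) (dropping the particle term in `coincidences`) makes the two probabilities equal, so the sketch also illustrates why a nonzero \({\cal E}\) signals entanglement.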

Fig. 5: Generation of wave-particle entangled superposition with a two-photon state. Measurements of the output coincidence probabilities \({P_{n{n^{\prime}}}}\) of detecting one photon in output mode n of the first toolbox and one in output mode n′ of the second toolbox, for different settings of the phases \(\phi_1\) and \(\phi _1^{\prime}\), including \(\phi_1 = \pi\) and \(\phi _1^{\prime} = \pi\). White bars: theoretical predictions. Colored bars: experimental data. Orange bars: \({P_{n{n^{\prime}}}}\) contributions for detectors D\(_n\) and D\(_{n'}\) linked to wave-like behavior for both photons (in the absence of BS4 and BS5). Cyan bars: \({P_{n{n^{\prime}}}}\) contributions for detectors D\(_n\) and D\(_{n'}\) linked to particle-like behavior for both photons (in the absence of BS4 and BS5). Magenta bars: \({P_{n{n^{\prime}}}}\) contributions for detectors D\(_n\) and D\(_{n'}\) linked to wave-like behavior for one photon and particle-like behavior for the other (in the absence of BS4 and BS5). Darker regions in colored bars correspond to the 1σ error interval, due to the Poissonian statistics of two-photon coincidences. i, j Quantitative verification of wave-particle entanglement. i \({P_{2{2^{\prime}}}}\) (blue) and \({P_{2{1^{\prime}}}}\) (green) and j \({\cal E} = {P_{2{2^{\prime}}}} - {P_{2{1^{\prime}}}}\), as a function of \(\phi_1\) for \(\phi _1^{\prime} = 0\) and {β = 22.5°, β′ = 22.5°}. Error bars are standard deviations due to the Poissonian statistics of two-photon coincidences. Dashed curves: best-fit of the experimental data.

“Electrons, when they were first discovered, behaved exactly like particles or bullets, very simply. Further research showed, from electron diffraction experiments for example, that they behaved like waves. As time went on there was a growing confusion about how these things really behaved ---- waves or particles, particles or waves? Everything looked like both.

This growing confusion was resolved in 1925 or 1926 with the advent of the correct equations for quantum mechanics. Now we know how the electrons and light behave. But what can I call it? If I say they behave like particles I give the wrong impression; also if I say they behave like waves. They behave in their own inimitable way, which technically could be called a quantum mechanical way. They behave in a way that is like nothing that you have seen before. Your experience with things that you have seen before is incomplete. The behavior of things on a very tiny scale is simply different. An atom does not behave like a weight hanging on a spring and oscillating. Nor does it behave like a miniature representation of the solar system with little planets going around in orbits. Nor does it appear to be somewhat like a cloud or fog of some sort surrounding the nucleus. It behaves like nothing you have seen before.

The difficulty really is psychological and exists in the perpetual torment that results from your saying to yourself, "But how can it be like that?" which is a reflection of uncontrolled but utterly vain desire to see it in terms of something familiar. I will not describe it in terms of an analogy with something familiar; I will simply describe it. There was a time when the newspapers said that only twelve men understood the theory of relativity. I do not believe there ever was such a time. There might have been a time when only one man did, because he was the only guy who caught on, before he wrote his paper. But after people read the paper a lot of people understood the theory of relativity in some way or other, certainly more than twelve. On the other hand, I think I can safely say that nobody understands quantum mechanics. So do not take the lecture too seriously, feeling that you really have to understand in terms of some model what I am going to describe, but just relax and enjoy it. I am going to tell you what nature behaves like. If you will simply admit that maybe she does behave like this, you will find her a delightful, entrancing thing. Do not keep saying to yourself, if you can possibly avoid it, "But how can it be like that?" because you will get "down the drain", into a blind alley from which nobody has escaped. Nobody knows how it can be like that.” (Richard Feynman, The Character of Physical Law)

Wave–particle duality is the concept in quantum mechanics that every particle or quantum entity may be described as either a particle or a wave. It expresses the inability of the classical concepts "particle" or "wave" to fully describe the behaviour of quantum-scale objects. As Albert Einstein wrote:

It seems as though we must use sometimes the one theory and sometimes the other, while at times we may use either. We are faced with a new kind of difficulty. We have two contradictory pictures of reality; separately neither of them fully explains the phenomena of light, but together they do.

Through the work of Max Planck, Albert Einstein, Louis de Broglie, Arthur Compton, Niels Bohr, Erwin Schrödinger and many others, current scientific theory holds that all particles exhibit a wave nature and vice versa. This has been verified not only for elementary particles but also for compound particles such as atoms and even molecules. For macroscopic particles, because of their extremely short wavelengths, wave properties usually cannot be detected.
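The scale argument can be made concrete with the de Broglie relation \(\lambda = h/p\). The sketch below uses the non-relativistic momentum p = mv; the two example objects (an electron at \(10^6\) m/s and a 145 g ball at 40 m/s) are illustrative choices, not taken from the text, and the function name is hypothetical.

```python
H = 6.62607015e-34             # Planck constant, J s (exact SI value)
M_ELECTRON = 9.1093837015e-31  # electron mass, kg


def de_broglie_wavelength(mass_kg, speed_m_s):
    """lambda = h / p with non-relativistic momentum p = m v."""
    return H / (mass_kg * speed_m_s)


electron = de_broglie_wavelength(M_ELECTRON, 1.0e6)  # electron at 10^6 m/s
baseball = de_broglie_wavelength(0.145, 40.0)        # 145 g ball at 40 m/s
print(f"electron: {electron:.3e} m, baseball: {baseball:.3e} m")
```

The electron's wavelength comes out below a nanometre, comparable to atomic spacings and therefore observable by diffraction, while the ball's is about 25 orders of magnitude shorter, far beyond any measurable interference.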

Although the use of the wave–particle duality has worked well in physics, the meaning or interpretation has not been satisfactorily resolved; see interpretations of quantum mechanics.

Bohr regarded the "duality paradox" as a fundamental or metaphysical fact of nature. A given kind of quantum object will exhibit sometimes wave, sometimes particle, character, in respectively different physical settings. He saw such duality as one aspect of the concept of complementarity.

Werner Heisenberg considered the question further. He saw the duality as present for all quantic entities, but not quite in the usual quantum mechanical account considered by Bohr. He saw it in what is called second quantization, which generates an entirely new concept of fields that exist in ordinary space-time, causality still being visualizable. Classical field values (e.g. the electric and magnetic field strengths of Maxwell) are replaced by an entirely new kind of field value, as considered in quantum field theory. Turning the reasoning around, ordinary quantum mechanics can be deduced as a specialized consequence of quantum field theory.

Democritus (5th century BC) argued that all things in the universe, including light, are composed of indivisible sub-components. Euclid (4th–3rd century BC) wrote treatises on light propagation and stated the principle of the shortest trajectory of light, including multiple reflections on mirrors, spherical ones among them, while Plutarch (1st–2nd century AD) described multiple reflections on spherical mirrors, discussing the creation of larger or smaller images, real or imaginary, including the case of chirality of the images. At the beginning of the 11th century, the Arab scientist Ibn al-Haytham wrote the first comprehensive Book of Optics, describing reflection, refraction, and the operation of a pinhole lens via rays of light traveling from the point of emission to the eye. He asserted that these rays were composed of particles of light. In 1630, René Descartes popularized the opposing wave description in his treatise on light, The World, showing that the behaviour of light could be re-created by modeling wave-like disturbances in a universal medium, i.e. the luminiferous aether. Beginning in 1670 and progressing over three decades, Isaac Newton developed and championed his corpuscular theory, arguing that the perfectly straight lines of reflection demonstrated light's particle nature, since only particles could travel in such straight lines. He explained refraction by positing that particles of light accelerated laterally upon entering a denser medium. Around the same time, Newton's contemporaries Robert Hooke and Christiaan Huygens, and later Augustin-Jean Fresnel, mathematically refined the wave viewpoint, showing that if light traveled at different speeds in different media, refraction could be easily explained as the medium-dependent propagation of light waves. The resulting Huygens–Fresnel principle was extremely successful at reproducing light's behaviour and was subsequently supported by Thomas Young's discovery of wave interference of light by his double-slit experiment in 1801.

James Clerk Maxwell discovered that he could apply his previously discovered Maxwell's equations, with a slight modification, to describe self-propagating waves of oscillating electric and magnetic fields. It quickly became apparent that visible light, ultraviolet light, and infrared light were all electromagnetic waves of differing frequency.


In 1901, Max Planck published an analysis that succeeded in reproducing the observed spectrum of light emitted by a glowing object. To accomplish this, Planck had to make a mathematical assumption of quantized energy of the oscillators, i.e. atoms of the black body that emit radiation. Einstein later proposed that electromagnetic radiation itself is quantized, not the energy of radiating atoms.

Black-body radiation, the emission of electromagnetic energy due to an object's heat, could not be explained from classical arguments alone. The equipartition theorem of classical mechanics, the basis of all classical thermodynamic theories, stated that an object's energy is partitioned equally among the object's vibrational modes. But applying the same reasoning to the electromagnetic emission of such a thermal object was not so successful. That thermal objects emit light had been long known. Since light was known to be waves of electromagnetism, physicists hoped to describe this emission via classical laws. This became known as the black body problem. Since the equipartition theorem worked so well in describing the vibrational modes of the thermal object itself, it was natural to assume that it would perform equally well in describing the radiative emission of such objects. But a problem quickly arose: if each mode received an equal partition of energy, the short-wavelength modes would consume all the energy. This became clear when plotting the Rayleigh–Jeans law, which, while correctly predicting the intensity of long-wavelength emissions, predicted infinite total energy as the intensity diverges to infinity for short wavelengths. This became known as the ultraviolet catastrophe.

In 1900, Max Planck hypothesized that the frequency of light emitted by the black body depended on the frequency of the oscillator that emitted it, and that the energy of these oscillators increased linearly with frequency (according to E = hf, where h is Planck's constant and f is the frequency). This was not an unsound proposal, considering that macroscopic oscillators operate similarly: when studying five simple harmonic oscillators of equal amplitude but different frequency, the oscillator with the highest frequency possesses the highest energy (though this relationship is not linear like Planck's). By demanding that high-frequency light must be emitted by an oscillator of equal frequency, and further requiring that this oscillator occupy a higher energy than one of lower frequency, Planck avoided the catastrophe: since exciting a high-frequency oscillator requires a larger amount of energy, successively fewer high-frequency oscillators were excited, and less high-frequency light was emitted. And as in the Maxwell–Boltzmann distribution, the low-frequency, low-energy oscillators were suppressed by the onslaught of thermal jiggling from higher energy oscillators, which necessarily increased their energy and frequency.
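The text describes the Rayleigh–Jeans divergence and Planck's resolution only in words. As an illustration, the sketch below compares the standard textbook forms of both spectral radiances per unit wavelength (these formulas are assumed here; they are not written out in the text) at T = 5000 K, an arbitrary example temperature. The ratio is close to 1 at long wavelengths and grows without bound at short ones, which is the ultraviolet catastrophe in numerical form.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def rayleigh_jeans(wavelength, temperature):
    """Classical spectral radiance B_lambda = 2 c k T / lambda^4."""
    return 2 * C * K_B * temperature / wavelength ** 4


def planck(wavelength, temperature):
    """Planck spectral radiance (2 h c^2 / lambda^5) / (exp(h c / (lambda k T)) - 1)."""
    x = H * C / (wavelength * K_B * temperature)
    return 2 * H * C ** 2 / wavelength ** 5 / math.expm1(x)


T = 5000.0
for wl in (1e-3, 1e-5, 1e-6, 3e-7, 1e-7):
    ratio = rayleigh_jeans(wl, T) / planck(wl, T)
    print(f"lambda = {wl:.0e} m: Rayleigh-Jeans / Planck = {ratio:.3g}")
```

`math.expm1` is used instead of `math.exp(x) - 1` so that the long-wavelength limit, where x is small, keeps full precision.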

The most revolutionary aspect of Planck's treatment of the black body is that it inherently relies on an integer number of oscillators in thermal equilibrium with the electromagnetic field. These oscillators give their entire energy to the electromagnetic field, creating a quantum of light, as often as they are excited by the electromagnetic field, absorbing a quantum of light and beginning to oscillate at the corresponding frequency. Planck had intentionally created an atomic theory of the black body, but had unintentionally generated an atomic theory of light, where the black body never generates quanta of light at a given frequency with an energy less than hf. However, once realizing that he had quantized the electromagnetic field, he denounced particles of light as a limitation of his approximation, not a property of reality.

While Planck had solved the ultraviolet catastrophe by using atoms and a quantized electromagnetic field, most contemporary physicists agreed that Planck's "light quanta" represented only flaws in his model. A more complete derivation of black-body radiation would yield a fully continuous and "wave-like" electromagnetic field with no quantization. However, in 1905 Albert Einstein took Planck's black body model to produce his solution to another outstanding problem of the day: the photoelectric effect, wherein electrons are emitted from atoms when they absorb energy from light. Since the existence of electrons had been theorized eight years previously, the phenomenon had been studied with the electron model in mind in physics laboratories worldwide.

In 1902, Philipp Lenard discovered that the energy of these ejected electrons did not depend on the intensity of the incoming light, but instead on its frequency. So if one shines a little low-frequency light upon a metal, a few low-energy electrons are ejected. If one now shines a very intense beam of low-frequency light upon the same metal, a whole slew of electrons are ejected; however, they possess the same low energy, and there are merely more of them. The more light there is, the more electrons are ejected; to get high-energy electrons, by contrast, one must illuminate the metal with high-frequency light. Like black-body radiation, this was at odds with a theory invoking continuous transfer of energy between radiation and matter. However, it can still be explained using a fully classical description of light, as long as matter is quantum mechanical in nature.

If one used Planck's energy quanta, and demanded that electromagnetic radiation at a given frequency could only transfer energy to matter in integer multiples of an energy quantum hf, then the photoelectric effect could be explained very simply. Low-frequency light only ejects low-energy electrons because each electron is excited by the absorption of a single photon. Increasing the intensity of the low-frequency light (increasing the number of photons) only increases the number of excited electrons, not their energy, because the energy of each photon remains low. Only by increasing the frequency of the light, and thus increasing the energy of the photons, can one eject electrons with higher energy. Thus, using Planck's constant h to determine the energy of the photons based upon their frequency, the energy of ejected electrons should also increase linearly with frequency, the gradient of the line being Planck's constant. These results were not confirmed until 1915, when Robert Andrews Millikan produced experimental results in perfect accord with Einstein's predictions.
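Einstein's prediction amounts to the linear relation \(K_{\max} = hf - W\), where W is the minimum energy needed to free an electron from the metal (the work function, a quantity not named in the text). The sketch below generates noise-free values from that relation, with a hypothetical W = 2.3 eV, and recovers h as the slope of an ordinary least-squares line. It illustrates the analysis only; it does not use Millikan's data, and the function names are hypothetical.

```python
H_EV = 4.135667696e-15   # Planck constant in eV s
W = 2.3                  # hypothetical work function, eV (illustrative only)


def max_kinetic_energy(freq_hz, work_function_ev=W):
    """Einstein's relation K_max = h f - W, clipped at zero below threshold."""
    return max(0.0, H_EV * freq_hz - work_function_ev)


def least_squares_line(xs, ys):
    """Ordinary least-squares fit y = m x + b; returns (m, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    m = sxy / sxx
    return m, my - m * mx


# Frequencies above threshold (W / h is about 5.6e14 Hz for W = 2.3 eV).
freqs = [6.0e14, 7.0e14, 8.0e14, 9.0e14, 1.0e15]
energies = [max_kinetic_energy(f) for f in freqs]
slope, intercept = least_squares_line(freqs, energies)
print(slope, intercept)   # slope close to h (in eV s), intercept close to -W
```

Only points above threshold are fitted: below it the clipped values sit at zero and would bend the line.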

While the energy of ejected electrons reflected Planck's constant, the existence of photons was not explicitly proven until the discovery of the photon antibunching effect. This refers to the observation that once a single emitter (an atom, molecule, solid state emitter, etc.) radiates a detectable light signal, it cannot immediately release a second signal until after the emitter has been re-excited. This leads to a statistically quantifiable time delay between light emissions, so detection of multiple signals becomes increasingly unlikely as the observation time dips under the excited-state lifetime of the emitter.

This phenomenon could only be explained via photons. Einstein's "light quanta" would not be called photons until 1925, but even in 1905 they represented the quintessential example of wave–particle duality. Electromagnetic radiation propagates following linear wave equations, but can only be emitted or absorbed as discrete elements, thus acting as a wave and a particle simultaneously.
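Antibunching is usually quantified by the zero-delay second-order correlation function \(g^{(2)}(0) = \langle n(n-1)\rangle / \langle n\rangle^2\), a standard measure that the text does not introduce. The sketch below evaluates it from photon-number distributions: an ideal single emitter gives 0, coherent (Poissonian) light gives 1, and thermal light gives 2. Values below 1 cannot be produced by a classical field, which is why antibunching is taken as evidence for photons. The distributions are truncated numerically, so the last two results are approximate; the function names are hypothetical.

```python
import math


def g2_zero(pn):
    """Zero-delay correlation g2(0) = <n(n-1)> / <n>^2 for a photon-number distribution pn[n]."""
    mean = sum(n * p for n, p in enumerate(pn))
    pairs = sum(n * (n - 1) * p for n, p in enumerate(pn))
    return pairs / mean ** 2


def poisson(mean, n_max=80):
    """Truncated Poisson distribution (coherent light), built by recurrence."""
    p = [math.exp(-mean)]
    for n in range(n_max):
        p.append(p[-1] * mean / (n + 1))
    return p


def thermal(mean, n_max=400):
    """Truncated Bose-Einstein (thermal) distribution."""
    q = mean / (1 + mean)
    return [(1 - q) * q ** n for n in range(n_max + 1)]


single_photon = [0.0, 1.0]   # an ideal single emitter: exactly one photon per window
print(g2_zero(single_photon), g2_zero(poisson(2.0)), g2_zero(thermal(1.0)))
```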

In 1905, Albert Einstein provided an explanation of the photoelectric effect, an experiment that the wave theory of light failed to explain. He did so by postulating the existence of photons, quanta of light energy with particulate qualities.

In the photoelectric effect, it was observed that shining a light on certain metals would lead to an electric current in a circuit. Presumably, the light was knocking electrons out of the metal, causing current to flow. However, using the case of potassium as an example, it was also observed that while a dim blue light was enough to cause a current, even the strongest, brightest red light available with the technology of the time caused no current at all. According to the classical theory of light and matter, the strength or amplitude of a light wave was in proportion to its brightness: a bright light should have been easily strong enough to create a large current. Yet, oddly, this was not so.

Einstein explained this enigma by postulating that electrons can receive energy from an electromagnetic field only in discrete units (quanta or photons): an amount of energy E that was related to the frequency f of the light by

$$E = hf,$$

where h is Planck's constant (6.626 × 10⁻³⁴ J·s). Only photons of a high enough frequency (above a certain threshold value) could knock an electron free. For example, photons of blue light had sufficient energy to free an electron from the metal, but photons of red light did not. One photon of light above the threshold frequency could release only one electron; the higher the frequency of a photon, the higher the kinetic energy of the emitted electron, but no amount of light below the threshold frequency could release an electron. Violating this rule would require extremely high-intensity lasers, which had not yet been invented; intensity-dependent phenomena have since been studied in detail with such lasers.
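The blue-versus-red contrast can be checked with E = hf = hc/λ. In this sketch the wavelengths (450 nm for blue, 700 nm for red) and the 2.3 eV work function, roughly that of potassium, are assumed illustrative values, not figures from the text.

```python
# Sketch: compare single-photon energies E = h*f = h*c/wavelength with an
# assumed work function. Wavelengths and work function are illustrative.
H = 6.626e-34            # Planck's constant, J*s
C = 2.998e8              # speed of light, m/s
EV = 1.602e-19           # joules per electronvolt
WORK_FUNCTION_EV = 2.3   # assumed value, roughly that of potassium


def photon_energy_ev(wavelength_m):
    """Energy of one photon of the given wavelength, in eV."""
    return H * C / wavelength_m / EV


def can_eject(wavelength_m):
    """True if a single photon carries at least the work function."""
    return photon_energy_ev(wavelength_m) >= WORK_FUNCTION_EV


for name, wl in [("blue", 450e-9), ("red", 700e-9)]:
    print(f"{name}: {photon_energy_ev(wl):.2f} eV, ejects electron: {can_eject(wl)}")
```

Brightness changes only the number of photons, not the energy each one carries, so no intensity argument enters `can_eject`: a brighter red beam still fails the per-photon test.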

Propagation of de Broglie waves in 1d—real part of the complex amplitude is blue, imaginary part is green. The probability (shown as the colour opacity) of finding the particle at a given point x is spread out like a waveform; there is no definite position of the particle. As the amplitude increases above zero the curvature decreases, so the amplitude decreases again, and vice versa—the result is an alternating amplitude: a wave. Top: Plane wave. Bottom: Wave packet.

De Broglie's formula was confirmed three years later for electrons with the observation of electron diffraction, as it had been observed with X-rays, in two independent experiments. At the University of Aberdeen, George Paget Thomson passed a beam of electrons through a thin metal film and observed the predicted interference patterns. At Bell Labs, Clinton Joseph Davisson and Lester Halbert Germer guided the electron beam through a crystalline grid in their experiment popularly known as the Davisson–Germer experiment.
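De Broglie's relation λ = h/p shows why crystals can diffract electrons. This non-relativistic sketch computes p = √(2mK) for an electron of kinetic energy K; the 54 eV used below is an illustrative assumption, and the resulting wavelength of a fraction of a nanometre is comparable to atomic spacings in a crystal, just as for X-rays.

```python
import math

H = 6.626e-34       # Planck's constant, J*s
M_E = 9.109e-31     # electron mass, kg
EV = 1.602e-19      # joules per electronvolt


def de_broglie_wavelength(kinetic_energy_ev, mass_kg=M_E):
    """Non-relativistic de Broglie wavelength lambda = h / p, with p = sqrt(2 m K)."""
    momentum = math.sqrt(2 * mass_kg * kinetic_energy_ev * EV)
    return H / momentum


wavelength = de_broglie_wavelength(54.0)   # illustrative 54 eV electron
print(f"lambda = {wavelength * 1e9:.3f} nm")
```

Because λ scales as 1/√K, quadrupling the electron energy halves its wavelength.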

De Broglie was awarded the Nobel Prize for Physics in 1929 for his hypothesis. Thomson and Davisson shared the Nobel Prize for Physics in 1937 for their experimental work.

De Broglie's proposal also predicts particle interferometry. In particular, single-particle interferometry has become a classic for its clarity in expressing the central puzzles of quantum mechanics. Because it demonstrates the fundamental limitation of the ability of the observer to predict experimental results, Richard Feynman called it "a phenomenon which is impossible […] to explain in any classical way, and which has in it the heart of quantum mechanics. In reality, it contains the only mystery [of quantum mechanics]." Giulio Pozzi performed the first experiment of single-particle interferometry using electrons and a biprism (instead of slits), showing that each electron interferes with itself as predicted by quantum theory. Single-particle interference with antimatter (positrons) was later demonstrated at the Positron Laboratory (L-NESS) of Rafael Ferragut in Como (Italy), by a group led by Marco Giammarchi.

Heisenberg originally explained the uncertainty principle as a consequence of the process of measuring: measuring position accurately would disturb momentum and vice versa, offering an example (the "gamma-ray microscope") that depended crucially on the de Broglie hypothesis. It is now thought, however, that this explains the phenomenon only in part, and that the uncertainty also exists in the particle itself, even before the measurement is made.

In fact, the modern explanation of the uncertainty principle, extending the Copenhagen interpretation first put forward by Bohr and Heisenberg, depends even more centrally on the wave nature of a particle. Just as it is nonsensical to discuss the precise location of a wave on a string, particles do not have perfectly precise positions; likewise, just as it is nonsensical to discuss the wavelength of a "pulse" wave traveling down a string, particles do not have perfectly precise momenta that correspond to the inverse of wavelength. Moreover, when position is relatively well defined, the wave is pulse-like and has a very ill-defined wavelength, and thus momentum. And conversely, when momentum, and thus wavelength, is relatively well defined, the wave looks long and sinusoidal, and therefore it has a very ill-defined position.

De Broglie himself had proposed a pilot wave construct to explain the observed wave–particle duality. In this view, each particle has a well-defined position and momentum, but is guided by a wave function derived from Schrödinger's equation. The pilot wave theory was initially rejected because it generated non-local effects when applied to systems involving more than one particle. Non-locality, however, soon became established as an integral feature of quantum theory and David Bohm extended de Broglie's model to explicitly include it.

In the resulting representation, also called the de Broglie–Bohm theory or Bohmian mechanics, the wave–particle duality vanishes: the wave behaviour is explained by the particle's motion being subject to a guiding equation or quantum potential.

"This idea seems to me so natural and simple, to resolve the wave–particle dilemma in such a clear and ordinary way, that it is a great mystery to me that it was so generally ignored." (J. S. Bell)

Since the demonstrations of wave-like properties in photons and electrons, similar experiments have been conducted with neutrons and protons. Among the most famous early matter-wave experiments are those of Estermann and Otto Stern in 1929, which diffracted beams of helium atoms and hydrogen molecules from crystal surfaces.

A dramatic series of experiments emphasizing the action of gravity in relation to wave–particle duality was conducted in the 1970s using the neutron interferometer. Neutrons, one of the components of the atomic nucleus, provide much of the mass of a nucleus and thus of ordinary matter. In the neutron interferometer, they act as quantum-mechanical waves directly subject to the force of gravity. While the results were not surprising, since gravity was known to act on everything, including light (see tests of general relativity and the Pound–Rebka falling photon experiment), the self-interference of the quantum-mechanical wave of a massive fermion in a gravitational field had never been experimentally confirmed before.

In 1999, researchers from the University of Vienna reported the diffraction of C60 fullerenes. Fullerenes are comparatively large and massive objects, with an atomic mass of about 720 u. The de Broglie wavelength of the incident beam was about 2.5 pm, whereas the diameter of the molecule is about 1 nm, about 400 times larger. In 2012, these far-field diffraction experiments were extended to phthalocyanine molecules and their heavier derivatives, which are composed of 58 and 114 atoms respectively. In these experiments the build-up of the interference patterns could be recorded in real time and with single-molecule sensitivity.
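The picometre wavelength quoted for C60 follows from λ = h/(mv). The beam speed is not given in the text; the 220 m/s below is an assumed, illustrative value chosen to show how a wavelength of a few picometres arises for a molecule of 60 carbon atoms (about 720 u).

```python
H = 6.626e-34          # Planck's constant, J*s
U = 1.6605e-27         # atomic mass unit, kg
C60_MASS = 720 * U     # 60 carbon atoms of about 12 u each
SPEED = 220.0          # assumed beam speed in m/s (illustrative, not from the text)

wavelength = H / (C60_MASS * SPEED)   # de Broglie relation lambda = h / (m v)
diameter = 1e-9                       # molecular diameter from the text, about 1 nm
print(f"lambda = {wavelength * 1e12:.2f} pm")
print(f"diameter / lambda = {diameter / wavelength:.0f}")
```

The ratio of the molecule's size to its wavelength comes out near the factor of 400 mentioned above; a faster beam would shorten the wavelength proportionally.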

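In 2003, the Vienna group also demonstrated the wave nature of tetraphenylporphyrin, a flat biodye molecule, using a near-field Talbot–Lau interferometer. In the same interferometer they also observed interference fringes for C60F48, a fluorinated buckyball with a mass of about 1600 u, composed of 108 atoms. Molecules this large are complex enough to give experimental access to aspects of the quantum–classical interface, such as certain decoherence mechanisms.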

Whether objects heavier than the Planck mass (about the weight of a large bacterium) have a de Broglie wavelength is theoretically unclear and experimentally unreachable; above the Planck mass a particle's Compton wavelength would be smaller than the Planck length and its own Schwarzschild radius, a scale at which current theories of physics may break down or need to be replaced by more general ones.
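The Planck-mass argument can be made concrete with standard constants (CODATA values typed in by hand). This sketch uses the reduced Compton wavelength ħ/(mc); the text does not say which convention it means, and the ordinary Compton wavelength h/(mc) is larger by 2π, which does not change the order-of-magnitude picture. At exactly the Planck mass, ħ/(mc) equals the Planck length and the Schwarzschild radius 2Gm/c² is twice the Planck length; for heavier objects the Compton wavelength falls below both.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
C = 2.99792458e8         # speed of light, m/s
G = 6.67430e-11          # Newtonian gravitational constant, m^3 kg^-1 s^-2

PLANCK_MASS = math.sqrt(HBAR * C / G)        # about 2.18e-8 kg
PLANCK_LENGTH = math.sqrt(HBAR * G / C**3)   # about 1.6e-35 m


def reduced_compton_wavelength(mass):
    """hbar / (m c), in metres."""
    return HBAR / (mass * C)


def schwarzschild_radius(mass):
    """2 G m / c^2, in metres."""
    return 2 * G * mass / C**2


for factor in (0.1, 1.0, 10.0):
    m = factor * PLANCK_MASS
    print(f"m = {factor:>4} m_P: compton/l_P = {reduced_compton_wavelength(m) / PLANCK_LENGTH:.2f}, "
          f"r_s/l_P = {schwarzschild_radius(m) / PLANCK_LENGTH:.2f}")
```

The Compton wavelength shrinks as 1/m while the Schwarzschild radius grows as m, so the two scales cross near the Planck mass.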

Wave–particle duality is deeply embedded into the foundations of quantum mechanics. In the formalism of the theory, all the information about a particle is encoded in its wave function, a complex-valued function roughly analogous to the amplitude of a wave at each point in space. This function evolves according to the Schrödinger equation. For particles with mass this equation has solutions that follow the form of the wave equation. Propagation of such waves leads to wave-like phenomena such as interference and diffraction. Particles without mass, like photons, have no solutions of the Schrödinger equation. Instead of a particle wave function that localizes mass in space, a photon wave function can be constructed from Einstein kinematics to localize energy in spatial coordinates.

The particle-like behaviour is most evident in phenomena associated with measurement in quantum mechanics. Upon measuring the location of the particle, the particle will be forced into a more localized state, as given by the uncertainty principle. When viewed through this formalism, the measurement of the wave function will randomly lead to wave function collapse to a sharply peaked function at some location. For particles with mass, the likelihood of detecting the particle at any particular location is given by the squared amplitude of the wave function there (the probability density). The measurement will return a well-defined position, and is subject to Heisenberg's uncertainty principle.
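The rule that detection probability follows the squared amplitude can be illustrated with a normalized Gaussian wave function. In this sketch the Gaussian shape and its unit width are assumptions chosen for illustration; the probability of finding the particle in an interval is the integral of |ψ|² over it, evaluated with a simple midpoint rule. For one standard deviation either side of the centre it agrees with the closed-form Gaussian value erf(1/√2), about 0.683.

```python
import math

SIGMA = 1.0   # assumed width of the packet (arbitrary units)


def psi(x, sigma=SIGMA):
    """Normalized real Gaussian amplitude whose |psi|^2 has standard deviation sigma."""
    return (2 * math.pi * sigma**2) ** -0.25 * math.exp(-x**2 / (4 * sigma**2))


def probability(a, b, n=10_000):
    """P(a <= x <= b) as the integral of |psi|^2, via the midpoint rule."""
    dx = (b - a) / n
    return sum(psi(a + (i + 0.5) * dx) ** 2 for i in range(n)) * dx


print(probability(-SIGMA, SIGMA))     # numerical integral of |psi|^2
print(math.erf(1 / math.sqrt(2)))     # closed-form Gaussian value for comparison
```

Integrating over a wide interval returns essentially 1, which is the normalization condition that makes |ψ|² a probability density.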

Following the development of quantum field theory, the ambiguity disappeared. The field permits solutions that follow the wave equation, which are referred to as the wave functions. The term particle is used to label the irreducible representations of the Lorentz group that are permitted by the field. An interaction as in a Feynman diagram is accepted as a calculationally convenient approximation where the outgoing legs are known to be simplifications of the propagation and the internal lines are for some order in an expansion of the field interaction. Since the field is non-local and quantized, the phenomena that previously were thought of as paradoxes are explained. Within the limits of the wave–particle duality, the quantum field theory gives the same results.

Below is an illustration of wave–particle duality as it relates to de Broglie's hypothesis and Heisenberg's uncertainty principle, in terms of the position and momentum space wavefunctions for one spinless particle with mass in one dimension. These wavefunctions are Fourier transforms of each other.

The more localized the position-space wavefunction, the more likely the particle is to be found with the position coordinates in that region, and correspondingly the momentum-space wavefunction is less localized so the possible momentum components the particle could have are more widespread.

Conversely, the more localized the momentum-space wavefunction, the more likely the particle is to be found with those values of momentum components in that region, and correspondingly the less localized the position-space wavefunction, so the position coordinates the particle could occupy are more widespread.
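The Fourier trade-off can be checked numerically. This sketch works with the wavenumber k (momentum p = ħk) and builds a Gaussian wave packet whose width is an assumed parameter; it computes the momentum-space amplitude with a direct, slow but dependency-free Fourier sum, then measures both spreads. The grid sizes are arbitrary choices. For a Gaussian the product Δx·Δk comes out at 1/2, the minimum allowed, so Δx·Δp = ħ/2, and narrowing the packet in x widens it in k.

```python
import cmath
import math


def spread(values, weights):
    """Standard deviation of `values` under non-negative (unnormalized) `weights`."""
    total = sum(weights)
    mean = sum(v * w for v, w in zip(values, weights)) / total
    return math.sqrt(sum((v - mean) ** 2 * w for v, w in zip(values, weights)) / total)


def gaussian_spreads(sigma_x, n=400, half_width=20.0):
    """Return (delta_x, delta_k) for a Gaussian packet with position spread sigma_x."""
    dx = 2 * half_width / n
    xs = [-half_width + j * dx for j in range(n)]
    # psi(x) = exp(-x^2 / (4 sigma^2)); |psi|^2 then has standard deviation sigma.
    psi = [math.exp(-x * x / (4 * sigma_x**2)) for x in xs]

    # Momentum-space amplitude by a direct Fourier sum: phi(k) = sum psi(x) e^{-ikx} dx.
    dk = math.pi / half_width
    ks = [(m - n // 2) * dk for m in range(n)]
    phi = [sum(p * cmath.exp(-1j * k * x) for p, x in zip(psi, xs)) * dx for k in ks]

    delta_x = spread(xs, [abs(p) ** 2 for p in psi])
    delta_k = spread(ks, [abs(f) ** 2 for f in phi])
    return delta_x, delta_k


for sigma in (0.5, 1.0, 2.0):
    delta_x, delta_k = gaussian_spreads(sigma)
    print(f"sigma={sigma}: dx={delta_x:.4f}, dk={delta_k:.4f}, product={delta_x * delta_k:.4f}")
```

A direct sum is used instead of an FFT library only to keep the sketch self-contained; any FFT gives the same spreads.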

Position x and momentum p wavefunctions corresponding to quantum particles. The colour opacity of the particles corresponds to the probability density of finding the particle with position x or momentum component p.

Top: If wavelength λ is unknown, so are momentum p, wave-vector k and energy E (de Broglie relations). Because the particle is more localized in position space, Δx is small and Δpx is correspondingly large.

Wave–particle duality is an ongoing conundrum in modern physics. Most physicists accept wave–particle duality as the best explanation for a broad range of observed phenomena; however, it is not without controversy. Alternative views are also presented here. These views are not generally accepted by mainstream physics, but serve as a basis for valuable discussion within the community.

The pilot wave model was originally developed by Louis de Broglie and further developed by David Bohm into the hidden variable theory. The phrase “hidden variable” is misleading, since the variable in question is the positions of the particles. In this model the particles are guided in a deterministic fashion by the pilot wave. The wave in question is the wavefunction obeying Schrödinger’s equation. Bohm’s formulation is intended to be classical, but it has to incorporate a distinctly non-classical quantum potential.

Bohm’s original purpose (1952) “was to show that an alternative to the Copenhagen interpretation is at least logically possible.” Bohm began working with Basil Hiley in 1961, when both were at Birkbeck College (University of London). Bohm and Hiley then wrote extensively on the theory, and it gained a wider audience. This idea is held by a significant minority within the physics community.

Carver Mead, an American scientist and professor at Caltech, said that the duality can be replaced by a "wave-only" view. In his book Collective Electrodynamics: Quantum Foundations of Electromagnetism (2000), Mead purports to analyze the behaviour of electrons and photons purely in terms of electron wave functions, and attributes the apparent particle-like behaviour to quantization effects and eigenstates. According to reviewer David Haddon:

Mead has cut the Gordian knot of quantum complementarity. He claims that atoms, with their neutrons, protons, and electrons, are not particles at all but pure waves of matter. Mead cites as the gross evidence of the exclusively wave nature of both light and matter the discovery between 1933 and 1996 of ten examples of pure wave phenomena, including the ubiquitous laser of CD players, the self-propagating electrical currents of superconductors, and the Bose–Einstein condensate of atoms.

This double nature of radiation (and of material corpuscles) ... has been interpreted by quantum-mechanics in an ingenious and amazingly successful fashion. This interpretation ... appears to me as only a temporary way out...

The many-worlds interpretation (MWI) is sometimes presented as a waves-only theory, including by its originator, Hugh Everett who referred to MWI as "the wave interpretation".

Hegerfeldt's theorem, which appears to demonstrate the incompatibility of the existence of localized discrete particles with the combination of the principles of quantum mechanics and special relativity, has also been used to support the conclusion that reality must be described solely in terms of field-based formulations.

Still in the days of the old quantum theory, a pre-quantum-mechanical version of wave–particle duality was pioneered by William Duane and developed by others, including Alfred Landé. Duane explained the diffraction of X-rays by a crystal solely in terms of their particle aspect. The deflection of the trajectory of each diffracted photon was explained as due to quantized momentum transfer from the spatially regular structure of the diffracting crystal.

It has been argued that there are never exact particles or waves, but only some compromise or intermediate between them. For this reason, in 1928 Arthur Eddington proposed the name "wavicle" to describe the objects, although it is not regularly used today. One consideration is that zero-dimensional mathematical points cannot be observed. Another is that the formal representation of such points, the Dirac delta function, is unphysical, because it cannot be normalized. Parallel arguments apply to single-frequency wave states. Roger Penrose states:

Such "position states" are idealized wavefunctions in the opposite sense from the momentum states. Whereas the momentum states are infinitely spread out, the position states are infinitely concentrated. Neither is normalizable [...].

Although it is difficult to draw a line separating wave–particle duality from the rest of quantum mechanics, it is nevertheless possible to list some applications of this basic idea.

Wave–particle duality is exploited in electron microscopy, where the short wavelengths associated with electrons can be used to view objects much smaller than can be resolved with visible light.
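The size of the advantage can be estimated from the electron's de Broglie wavelength. This sketch assumes a 100 kV accelerating voltage and takes 500 nm as a representative visible wavelength (both illustrative choices, not figures from the text). At such voltages the electron is mildly relativistic, so the momentum uses p² = 2mK(1 + K/(2mc²)).

```python
import math

H = 6.626e-34          # Planck's constant, J*s
M_E = 9.109e-31        # electron rest mass, kg
E_CHARGE = 1.602e-19   # elementary charge, C
C = 2.998e8            # speed of light, m/s


def electron_wavelength(voltage, relativistic=True):
    """de Broglie wavelength (m) of an electron accelerated through `voltage` volts."""
    kinetic = E_CHARGE * voltage
    p_squared = 2 * M_E * kinetic
    if relativistic:
        # Relativistic momentum: p^2 = 2 m K (1 + K / (2 m c^2))
        p_squared *= 1 + kinetic / (2 * M_E * C**2)
    return H / math.sqrt(p_squared)


lam = electron_wavelength(100e3)   # assumed 100 kV accelerating voltage
print(f"electron: {lam * 1e12:.2f} pm, visible (500 nm) / electron = {500e-9 / lam:.0f}")
```

The electron wavelength is shorter than visible light by a factor of roughly a hundred thousand, although in practice lens aberrations, not wavelength, limit electron-microscope resolution.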

Similarly, neutron diffraction uses neutrons with a wavelength of about 0.1 nm, the typical spacing of atoms in a solid, to determine the structure of solids.
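Inverting λ = h/(mv) shows what a 0.1 nm neutron looks like as a particle. This sketch takes the wavelength from the text and standard constants, and computes the corresponding speed and kinetic energy K = p²/(2m).

```python
H = 6.626e-34      # Planck's constant, J*s
M_N = 1.675e-27    # neutron mass, kg
EV = 1.602e-19     # joules per electronvolt

wavelength = 0.1e-9                                   # about 0.1 nm, as in the text
speed = H / (M_N * wavelength)                        # from lambda = h / (m v)
energy_ev = H**2 / (2 * M_N * wavelength**2) / EV     # K = p^2 / (2 m)
print(f"speed = {speed:.0f} m/s, kinetic energy = {energy_ev * 1e3:.1f} meV")
```

Such a neutron moves at only a few kilometres per second with a kinetic energy of tens of meV, far less than an X-ray photon of the same wavelength carries.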

R. Eisberg & R. Resnick (1985). Quantum Physics of Atoms, Molecules, Solids, Nuclei, and Particles (2nd ed.). John Wiley & Sons. pp. 59–60. ISBN 978-0-471-87373-0. For both large and small wavelengths, both matter and radiation have both particle and wave aspects.... But the wave aspects of their motion become more difficult to observe as their wavelengths become shorter.... For ordinary macroscopic particles the mass is so large that the momentum is always sufficiently large to make the de Broglie wavelength small enough to be beyond the range of experimental detection, and classical mechanics reigns supreme.

Camilleri, K. (2009). Heisenberg and the Interpretation of Quantum Mechanics: the Physicist as Philosopher, Cambridge University Press, Cambridge UK, ISBN 978-0-521-88484-6.

Berryman, Sylvia (2016), "Democritus", in Zalta, Edward N. (ed.), The Stanford Encyclopedia of Philosophy (Winter 2016 ed.), Metaphysics Research Lab, Stanford University, retrieved 2022-07-17

Thorn, J. J.; Neel, M. S.; Donato, V. W.; Bergreen, G. S.; Davies, R. E.; Beck, M. (2004). "Observing the quantum behaviour of light in an undergraduate laboratory". American Journal of Physics. 72 (9): 1210. Bibcode:2004AmJPh..72.1210T. doi:10.1119/1.1737397.

Zhang, Q (1996). "Intensity dependence of the photoelectric effect induced by a circularly polarized laser beam". Physics Letters A. 216 (1–5): 125–128. Bibcode:1996PhLA..216..125Z. doi:10.1016/0375-9601(96)00259-9.

Donald H Menzel, "Fundamental formulas of Physics", vol. 1, p. 153; Gives the de Broglie wavelengths for composite particles such as protons and neutrons.

Feynman, Richard P.; Robert B. Leighton; Matthew Sands (1965). The Feynman Lectures on Physics, Vol. 3. Addison-Wesley. pp. 1.1–1.8. ISBN 978-0201021189.

Merli, P G; Missiroli, G F; Pozzi, G (1976). "On the statistical aspect of electron interference phenomena". American Journal of Physics. 44 (3): 306–307. Bibcode:1976AmJPh..44..306M. doi:10.1119/1.10184.

Sala, S.; Ariga, A.; Ereditato, A.; Ferragut, R.; Giammarchi, M.; Leone, M.; Pistillo, C.; Scampoli, P. (2019). "First demonstration of antimatter wave interferometry". Science Advances. 5 (5): eaav7610. Bibcode:2019SciA....5.7610S. doi:10.1126/sciadv.aav7610. PMID 31058223.

Arndt, Markus; O. Nairz; J. Voss-Andreae; C. Keller; G. van der Zouw; A. Zeilinger (14 October 1999). "Wave–particle duality of C60". Nature. 401 (6754): 680–682. Bibcode:1999Natur.401..680A. doi:10.1038/44348. PMID 18494170. S2CID 4424892.

Juffmann, Thomas; et al. (25 March 2012). "Real-time single-molecule imaging of quantum interference". Nature Nanotechnology. 7 (5): 297–300. Bibcode:2012NatNa...7..297J. doi:10.1038/nnano.2012.34. PMID 22447163. S2CID 5918772.

Hackermüller, Lucia; Stefan Uttenthaler; Klaus Hornberger; Elisabeth Reiger; Björn Brezger; Anton Zeilinger; Markus Arndt (2003). "The wave nature of biomolecules and fluorofullerenes". Phys. Rev. Lett. 91 (9): 090408. Bibcode:2003PhRvL..91i0408H. doi:10.1103/PhysRevLett.91.090408. PMID 14525169. S2CID 13533517.

Brezger, Björn; Lucia Hackermüller; Stefan Uttenthaler; Julia Petschinka; Markus Arndt; Anton Zeilinger (2002). "Matter-wave interferometer for large molecules". Phys. Rev. Lett. 88 (10): 100404. Bibcode:2002PhRvL..88j0404B. doi:10.1103/PhysRevLett.88.100404. PMID 11909334. S2CID 19793304.

Hornberger, Klaus; Stefan Uttenthaler; Björn Brezger; Lucia Hackermüller; Markus Arndt; Anton Zeilinger (2003). "Observation of Collisional Decoherence in Interferometry". Phys. Rev. Lett. 90 (16): 160401. Bibcode:2003PhRvL..90p0401H. doi:10.1103/PhysRevLett.90.160401. PMID 12731960. S2CID 31057272.

Hackermüller, Lucia; Klaus Hornberger; Björn Brezger; Anton Zeilinger; Markus Arndt (2004). "Decoherence of matter waves by thermal emission of radiation". Nature. 427 (6976): 711–714. Bibcode:2004Natur.427..711H. doi:10.1038/nature02276. PMID 14973478. S2CID 3482856.

Eibenberger, S.; Gerlich, S.; Arndt, M.; Mayor, M.; Tüxen, J. (2013). "Matter–wave interference of particles selected from a molecular library with masses exceeding 10 000 amu". Physical Chemistry Chemical Physics. 15 (35): 14696–14700. Bibcode:2013PCCP...1514696E. doi:10.1039/c3cp51500a. PMID 23900710. S2CID 3944699.

Couder, Yves; Fort, Emmanuel (2006). "Single-Particle Diffraction and Interference at a Macroscopic Scale". Physical Review Letters. 97 (15): 154101. Bibcode:2006PhRvL..97o4101C. doi:10.1103/PhysRevLett.97.154101. PMID 17155330.

Eddi, A.; Fort, E.; Moisy, F.; Couder, Y. (2009). "Unpredictable Tunneling of a Classical Wave-Particle Association". Physical Review Letters. 102 (24): 240401. Bibcode:2009PhRvL.102x0401E. doi:10.1103/PhysRevLett.102.240401. PMID 19658983.

Bohm, David (1952). "A Suggested Interpretation of the Quantum Theory in Terms of 'Hidden' Variables, I and II". Physical Review. 85 (2): 166–179. doi:10.1103/PhysRev.85.166.

Buchanan, M. (2019). "In search of lost memories". Nat. Phys. 15 (5): 29–31. Bibcode:2019NatPh..15..420B. doi:10.1038/s41567-019-0521-9. S2CID 155305346.

Afshar, S.S.; et al. (2007). "Paradox in Wave Particle Duality". Found. Phys. 37 (2): 295. Bibcode:2007FoPh...37..295A. doi:10.1007/s10701-006-9102-8. S2CID 2161197.

Kastner, R (2005). "Why the Afshar experiment does not refute complementarity". Studies in History and Philosophy of Science Part B: Studies in History and Philosophy of Modern Physics. 36 (4): 649–658. Bibcode:2005SHPMP..36..649K. doi:10.1016/j.shpsb.2005.04.006. S2CID 119438183.

Steuernagel, Ole (2007-08-03). "Afshar's Experiment Does Not Show a Violation of Complementarity". Foundations of Physics. 37 (9): 1370–1385. Bibcode:2007FoPh...37.1370S. doi:10.1007/s10701-007-9153-5. ISSN 0015-9018. S2CID 53056142.

Jacques, V.; Lai, N. D.; Dréau, A.; Zheng, D.; Chauvat, D.; Treussart, F.; Grangier, P.; Roch, J.-F. (2008-01-01). "Illustration of quantum complementarity using single photons interfering on a grating". New Journal of Physics. 10 (12): 123009. Bibcode:2008NJPh...10l3009J. doi:10.1088/1367-2630/10/12/123009. ISSN 1367-2630. S2CID 2627030.

See section VI(e) of Everett's thesis: The Theory of the Universal Wave Function, in Bryce Seligman DeWitt, R. Neill Graham, eds, The Many-Worlds Interpretation of Quantum Mechanics, Princeton Series in Physics, Princeton University Press (1973), ISBN 0-691-08131-X, pp. 3–140.

Horodecki, R. (1981). "De broglie wave and its dual wave". Phys. Lett. A. 87 (3): 95–97. Bibcode:1981PhLA...87...95H. doi:10.1016/0375-9601(81)90571-5.

Jabs, Arthur (2016). "A conjecture concerning determinism, reduction, and measurement in quantum mechanics". Quantum Studies: Mathematics and Foundations. 3 (4): 279–292. doi:10.1007/s40509-016-0077-7. S2CID 32523066.

Heisenberg, W. (1930). The Physical Principles of the Quantum Theory, translated by C. Eckart and F.C. Hoyt, University of Chicago Press, Chicago, pp. 77–78.

H. Nikolic (2007). "Quantum mechanics: Myths and facts". Foundations of Physics. 37 (11): 1563–1611. Bibcode:2007FoPh...37.1563N. doi:10.1007/s10701-007-9176-y. S2CID 9613836.

R. Nave. "Wave–Particle Duality" (Web page). HyperPhysics. Georgia State University, Department of Physics and Astronomy. Retrieved December 12, 2005.

Just what is the true nature of light? Is it a wave or perhaps a flow of extremely small particles? These questions have long puzzled scientists. Let's travel through history as we study the matter.

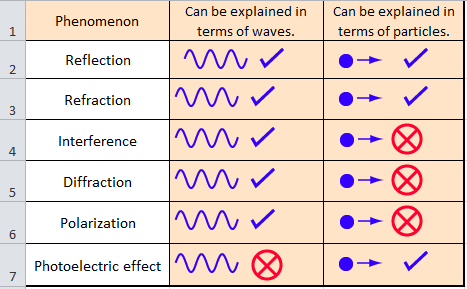

Around 1700, Newton concluded that light was a group of particles (corpuscular theory). Around the same time, there were other scholars who thought that light might instead be a wave (wave theory). Light travels in a straight line, and therefore it was only natural for Newton to think of it as extremely small particles that are emitted by a light source and reflected by objects. The corpuscular theory, however, cannot explain wave-like light phenomena such as diffraction and interference. On the other hand, the wave theory cannot explain why electrons are ejected from metal exposed to light (a phenomenon called the photoelectric effect, discovered at the end of the 19th century). In this manner, the great physicists have continued to debate and demonstrate the true nature of light over the centuries.

Known for his Law of Universal Gravitation, English physicist Sir Isaac Newton (1643 to 1727) realized that light had frequency-like properties when he used a prism to split sunlight into its component colors. Nevertheless, he thought that light was a particle because the periphery of the shadows it created was extremely sharp and clear.

The wave theory, which maintains that light is a wave, was proposed around the same time as Newton's theory. In work published in 1665, Italian physicist Francesco Maria Grimaldi (1618 to 1663) described the phenomenon of light diffraction and pointed out that it resembles the behavior of waves. Then, in 1678, Dutch physicist Christiaan Huygens (1629 to 1695) established the wave theory of light and announced Huygens' principle.

Some 100 years after the time of Newton, French physicist Augustin-Jean Fresnel (1788 to 1827) asserted that light waves have an extremely short wavelength and gave a mathematical treatment of light interference. In 1815, he devised physical laws for light reflection and refraction as well. He also hypothesized that space is filled with a medium known as ether, because waves need something to transmit them. In 1817, English physicist Thomas Young (1773 to 1829) calculated light's wavelength from an interference pattern, determining not only that the wavelength is 1 μm (one millionth of a meter) or less, but also that light is a transverse wave. At that point, the particle theory of light fell out of favor and was replaced by the wave theory.
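Reading a wavelength off an interference pattern rests on the small-angle double-slit relation λ = d·Δy/L, where d is the slit separation, Δy the spacing between bright fringes and L the distance to the screen. The measurement values below are hypothetical, chosen only to show how a sub-micrometre wavelength drops out of millimetre-scale quantities.

```python
def wavelength_from_fringes(slit_separation, fringe_spacing, screen_distance):
    """Small-angle double-slit relation: lambda = d * delta_y / L (all in metres)."""
    return slit_separation * fringe_spacing / screen_distance


# Hypothetical measurement: slits 0.25 mm apart, screen 1.0 m away,
# bright fringes 2.2 mm apart.
lam = wavelength_from_fringes(0.25e-3, 2.2e-3, 1.0)
print(f"lambda = {lam * 1e9:.0f} nm")   # well below 1 micrometre
```

Because d and Δy are both fractions of a millimetre while L is about a metre, the result lands in the hundreds of nanometres, consistent with the bound stated above.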

The next theory was provided by the brilliant Scottish physicist James Clerk Maxwell (1831 to 1879). In 1864, he predicted the existence of electromagnetic waves, which had not yet been observed, and from this prediction came the concept of light as a wave, or more specifically, a type of electromagnetic wave. Until that time, the magnetic field produced by magnets and electric currents and the electric field generated between the two parallel metal plates of a charged capacitor were considered to be unrelated to one another. Maxwell changed this thinking when, in 1861, he presented Maxwell's equations: four equations of electromagnetic theory showing that magnetic fields and electric fields are inextricably linked. This led to the introduction of electromagnetic waves other than visible light into light research, which had previously focused only on visible light.

The term electromagnetic wave tends to bring to mind the waves emitted from cellular telephones, but electromagnetic waves are, more generally, waves produced by electricity and magnetism. They arise wherever electric currents change or radio waves propagate. Maxwell's equations, which clearly revealed the existence of such electromagnetic waves, were announced in 1861 and became the most fundamental law of electromagnetics. These equations are not easy to understand, but let's take an in-depth look, because they concern the true nature of light.

Maxwell's four equations have become the most fundamental law in electromagnetics. The first equation formulates Faraday's Law of Electromagnetic Induction, which states that changing magnetic fields generate electric fields, which can drive an electric current.

The second equation is called the Ampere-Maxwell Law. It combines Ampere's Law, which states that an electric current flowing through a wire produces a magnetic field around itself, with Maxwell's addition that a changing electric field also gives rise to something that behaves like an electric current (a displacement current), and this too creates a magnetic field around itself. The term displacement current is actually a crucial point.

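The third equation is Gauss's Law for the electric field, stating that electric charges are the sources of electric fields.

The fourth equation is Gauss's Law for the magnetic field, stating that a magnetic field has no source (magnetic monopole) equivalent to an electric charge.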
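For reference, Maxwell's four equations can be written compactly in modern vector notation (SI units, fields in vacuum with charge density ρ and current density J). This is the later vector form, not Maxwell's original notation, listed in the order the text uses: Faraday's law, the Ampere-Maxwell law (whose last term is the displacement current), and the two Gauss laws.

$$\begin{aligned}
\nabla \times \mathbf{E} &= -\frac{\partial \mathbf{B}}{\partial t} && \text{(1) Faraday's law} \\
\nabla \times \mathbf{B} &= \mu_0 \mathbf{J} + \mu_0 \varepsilon_0 \frac{\partial \mathbf{E}}{\partial t} && \text{(2) Ampere-Maxwell law} \\
\nabla \cdot \mathbf{E} &= \frac{\rho}{\varepsilon_0} && \text{(3) Gauss's law} \\
\nabla \cdot \mathbf{B} &= 0 && \text{(4) Gauss's law for magnetism}
\end{aligned}$$

In empty space (ρ = 0, J = 0), taking the curl of (1) and substituting (2) gives the wave equation ∇²E = μ₀ε₀ ∂²E/∂t², whose waves travel at 1/√(μ₀ε₀).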

If you take two parallel metal plates (electrodes) and connect one to the positive pole and the other to the negative pole of a battery, you will create a capacitor. With direct current (DC), charge simply accumulates on the two metal plates, and no current flows between them. However, if you connect a rapidly changing alternating current (AC) source, electric current will start to flow in the wires leading to the two electrodes. Electric current is a flow of electrons, but between these two electrodes there is nothing but space, and thus no electrons flow across the gap.

Maxwell wondered what this could mean. Then it came to him that applying an AC voltage to the electrodes generates a changing electric field in the space between them, and this changing electric field acts like an electric current. This is what we mean by the term displacement current.

A most unexpected conclusion can be drawn from the idea of a displacement current. In short, electromagnetic waves can exist. This also led to the discovery that in space there are not only objects that we can see with our eyes, but also intangible fields that we cannot see. The existence of fields was revealed for the first time. Combining Maxwell's equations yields the wave equation, and the solution of that equation describes a wave in which electric fields and magnetic fields give rise to each other while traveling through space.

The form of electromagnetic waves was expressed in a mathematical formula. Magnetic fields and electric fields are inextricably linked, and there is also an entity called an electromagnetic field that is solely responsible for bringing them into existence.

Now let's take another look at the capacitor. Applying an AC voltage between two metal electrodes produces a changing electric field in space, and this changing field acts as a displacement current, so that current flows in the circuit through the electrodes. At the same time, the displacement current produces a changing magnetic field around itself according to the second of Maxwell's equations (Ampere-Maxwell Law).

The resulting changing magnetic field creates an electric field around itself according to the first of Maxwell's equations (Faraday's Law of Electromagnetic Induction). Because a changing electric field creates a magnetic field and a changing magnetic field creates an electric field, electromagnetic waves, in which an electric field and a magnetic field alternately appear, are created in the space between the two electrodes and travel into their surroundings. Antennas that emit electromagnetic waves are built on this principle.

Maxwell calculated the speed at which the waves revealed by his equations, i.e. electromagnetic waves, travel. He found that this speed is simply one over the square root of the product of the electric permittivity of vacuum and the magnetic permeability of vacuum. When he substituted "1/(4π × 9 × 10⁹)" for the electric permittivity of vacuum and "4π × 10⁻⁷" for the magnetic permeability of vacuum, both of which were known values at the time, his calculation yielded about 3 × 10⁸ m/s. This matched the previously measured speed of light. This led Maxwell to confidently state that light is a type of electromagnetic wave.
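The calculation can be repeated in a few lines. This sketch evaluates c = 1/√(ε₀μ₀) twice: once with the rounded constants quoted in the text (ε₀ written as 1/(4π × 9 × 10⁹) F/m) and once with the more precise CODATA 2018 values, which are an addition for comparison.

```python
import math

# Rounded values in the form quoted in the text (SI units).
EPS0_ROUNDED = 1 / (4 * math.pi * 9e9)   # F/m, from 1/(4*pi*eps0) = 9e9
MU0_ROUNDED = 4 * math.pi * 1e-7         # H/m

# More precise CODATA 2018 values, for comparison.
EPS0 = 8.8541878128e-12                  # F/m
MU0 = 1.25663706212e-6                   # H/m


def wave_speed(eps, mu):
    """Speed of electromagnetic waves in vacuum, c = 1 / sqrt(eps * mu)."""
    return 1 / math.sqrt(eps * mu)


print(f"rounded constants: {wave_speed(EPS0_ROUNDED, MU0_ROUNDED):.4e} m/s")
print(f"CODATA constants:  {wave_speed(EPS0, MU0):.4e} m/s")
```

The rounded constants give exactly 3 × 10⁸ m/s because the factors of 4π cancel, while the precise values reproduce 2.998 × 10⁸ m/s.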

Ms.Josey
