refresh rate on lcd monitors quotation


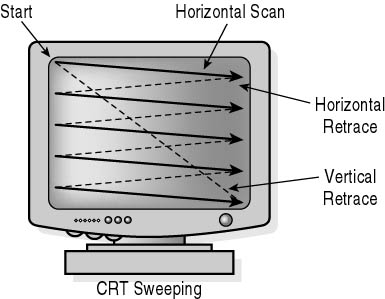

The refresh rate (or "vertical refresh rate", "vertical scan rate", terminology originating with the cathode ray tubes) is the number of times per second that a raster-based display device displays a new image. This is independent from frame rate, which describes how many images are stored or generated every second by the device driving the display.

On cathode ray tube (CRT) displays, higher refresh rates produce less flickering, thereby reducing eye strain. In other technologies such as liquid-crystal displays, the refresh rate affects only how often the image can potentially be updated.

Non-raster displays may not have a characteristic refresh rate. Vector displays, for instance, do not trace the entire screen, only the actual lines comprising the displayed image, so refresh speed may differ by the size and complexity of the image data.

Raster-scan CRTs by their nature must refresh the screen, since their phosphors will fade and the image will disappear quickly unless refreshed regularly.

In a CRT, the vertical scan rate is the number of times per second that the electron beam returns to the upper left corner of the screen to begin drawing a new frame. It is controlled by the vertical blanking signal generated by the video controller, and is partially limited by the monitor's maximum horizontal scan rate.

The refresh rate can be calculated from the horizontal scan rate by dividing the scanning frequency by the number of horizontal lines, plus some amount of time to allow for the beam to return to the top. By convention, this is a 1.05x multiplier. For instance, a monitor with a horizontal scanning frequency of 96 kHz at a resolution of 1280 × 1024 results in a refresh rate of 96,000 ÷ (1024 × 1.05) ≈ 89 Hz (rounded down).
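The calculation above can be sketched in a few lines of Python. The function name and the default `overhead` parameter are illustrative choices, not part of any standard API; the 1.05 factor is the conventional allowance for vertical retrace mentioned in the text.

```python
import math


def crt_refresh_rate_hz(horizontal_scan_hz: float,
                        visible_lines: int,
                        overhead: float = 1.05) -> int:
    """Estimate the vertical refresh rate of a CRT, rounded down.

    overhead is the conventional 1.05x multiplier that leaves time
    for the beam to return to the top of the screen.
    """
    total_line_times = visible_lines * overhead
    return math.floor(horizontal_scan_hz / total_line_times)


# 96 kHz horizontal scan rate at 1280 x 1024
print(crt_refresh_rate_hz(96_000, 1024))  # 89
```

Running the same monitor at fewer lines raises the achievable refresh rate, which is why lower resolutions typically allow higher refresh rates on a CRT.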

CRT refresh rates have historically been an important factor in videogame programming. In early videogame systems, the only time available for computation was during the vertical blanking interval, during which the beam is returning to the top corner of the screen and no image is being drawn. The vertical blanking interval remains relevant in modern systems, where frame updates are synchronized to it to avoid screen tearing.

Unlike CRTs, where the image will fade unless refreshed, the pixels of liquid-crystal displays retain their state for as long as power is provided, and consequently there is no intrinsic flicker regardless of refresh rate. However, refresh rate still determines the highest frame rate that can be displayed, and despite there being no actual blanking of the screen, the vertical blanking interval is still a period in each refresh cycle when the screen is not being updated, during which the image data in the host system's frame buffer can be updated.

On smaller CRT monitors (up to about 15 in or 38 cm), few people notice any discomfort between 60–72 Hz. On larger CRT monitors (17 in or 43 cm or larger), most people experience mild discomfort unless the refresh is set to 72 Hz or higher. A rate of 100 Hz is comfortable at almost any size. However, this does not apply to LCD monitors. The closest equivalent to a refresh rate on an LCD monitor is its frame rate, which is often locked at 60 fps. But this is rarely a problem, because the only part of an LCD monitor that could produce CRT-like flicker—its backlight—typically operates at around a minimum of 200 Hz.

Different operating systems set the default refresh rate differently. Microsoft Windows 95 and Windows 98 (First and Second Editions) set the refresh rate to the highest rate that they believe the display supports. Windows NT-based operating systems, such as Windows 2000 and its descendants Windows XP, Windows Vista and Windows 7, set the default refresh rate to a conservative rate, usually 60 Hz. Some fullscreen applications, including many games, now allow the user to reconfigure the refresh rate before entering fullscreen mode, but most default to a conservative resolution and refresh rate and let you increase the settings in the options.

Old monitors could be damaged if a user set the video card to a refresh rate higher than the highest rate supported by the monitor. Some models of monitors display a notice that the video signal uses an unsupported refresh rate.

Some LCDs support adapting their refresh rate to the current frame rate delivered by the graphics card. Two technologies that allow this are FreeSync and G-Sync.

When LCD shutter glasses are used for stereo 3D displays, the effective refresh rate is halved, because each eye needs a separate picture. For this reason, it is usually recommended to use a display capable of at least 120 Hz, because divided in half this rate is again 60 Hz. Higher refresh rates result in greater image stability, for example 72 Hz non-stereo is 144 Hz stereo, and 90 Hz non-stereo is 180 Hz stereo. Most low-end computer graphics cards and monitors cannot handle these high refresh rates, especially at higher resolutions.

For LCD monitors the pixel brightness changes are much slower than CRT or plasma phosphors. Typically LCD pixel brightness changes are faster when voltage is applied than when voltage is removed, resulting in an asymmetric pixel response time. With 3D shutter glasses this can result in a blurry smearing of the display and poor depth perception, due to the previous image frame not fading to black fast enough as the next frame is drawn.

This gif animation shows a rudimentary comparison of how motion varies with 4Hz, 12Hz, and 24Hz refresh rates. Entire sequence has a frame rate of 24Hz.

The development of televisions in the 1930s was determined by a number of technical limitations. The AC power line frequency was used for the vertical refresh rate for two reasons. The first reason was that the television's vacuum tube was susceptible to interference from the unit's power supply, including residual ripple. This could cause drifting horizontal bars (hum bars). Using the same frequency reduced this, and made interference static on the screen and therefore less obtrusive. The second reason was that television studios would use AC lamps, so filming at a different frequency would cause strobing. Producers therefore had little choice but to run sets at 60 Hz in America and 50 Hz in Europe. These rates formed the basis for the systems used today: 60 Hz System M (almost always used with NTSC color coding) and 50 Hz System B/G (almost always used with PAL or SECAM color coding). This accident of chance gave European sets higher resolution, in exchange for lower frame rates. Compare System M (704 × 480 at 30i) and System B/G (704 × 576 at 25i). However, the lower refresh rate of 50 Hz introduces more flicker, so sets that use digital technology to double the refresh rate to 100 Hz are now very popular. (See Broadcast television systems.)

Another difference between 50 Hz and 60 Hz standards is the way motion pictures (film sources as opposed to video camera sources) are transferred or presented. 35 mm film is typically shot at 24 frames per second (fps). For PAL 50 Hz this allows film sources to be easily transferred by accelerating the film by about 4%, from 24 to 25 fps. The resulting picture is therefore smooth, but there is a small upward shift in the pitch of the audio. NTSC sets display both 24 fps and 25 fps material without any speed shifting by using a technique called 3:2 pulldown, but at the expense of introducing unsmooth playback in the form of telecine judder.
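The size of the PAL speed-up and the resulting pitch shift can be worked out directly. This is a minimal sketch: the function name is hypothetical, and the semitone figure assumes the audio is simply played faster with no pitch correction applied.

```python
import math


def pal_speedup(film_fps: float = 24.0, playback_fps: float = 25.0):
    """Return (percent faster, pitch shift in semitones) for a film speed-up."""
    ratio = playback_fps / film_fps
    percent_faster = (ratio - 1.0) * 100.0
    # Uncorrected audio rises in pitch by 12 * log2(ratio) semitones.
    semitones = 12.0 * math.log2(ratio)
    return percent_faster, semitones


percent, semitones = pal_speedup()
print(f"{percent:.2f}% faster, pitch up {semitones:.2f} semitones")

# A 120-minute film runs shorter when played at 25 fps.
print(f"{120 * 24 / 25:.1f} minutes")
```

The shift is a little under three quarters of a semitone, which is why most viewers do not notice it.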

Similar to some computer monitors and some DVDs, analog television systems use interlace, which decreases the apparent flicker by painting first the odd lines and then the even lines (these are known as fields). This doubles the refresh rate, compared to a progressive scan image at the same frame rate. This works perfectly for video cameras, where each field results from a separate exposure – the effective frame rate doubles, there are now 50 rather than 25 exposures per second. The dynamics of a CRT are ideally suited to this approach, fast scenes will benefit from the 50 Hz refresh, the earlier field will have largely decayed away when the new field is written, and static images will benefit from improved resolution as both fields will be integrated by the eye. Modern CRT-based televisions may be made flicker-free in the form of 100 Hz technology.

Many high-end LCD televisions now have a 120 or 240 Hz (current and former NTSC countries) or 100 or 200 Hz (PAL/SECAM countries) refresh rate. The rate of 120 was chosen as the least common multiple of 24 fps (cinema) and 30 fps (NTSC TV), and allows for less distortion when movies are viewed due to the elimination of telecine (3:2 pulldown). For PAL at 25 fps, 100 or 200 Hz is used as a fractional compromise of the least common multiple of 600 (24 × 25). These higher refresh rates are most effective from a 24p-source video output (e.g. Blu-ray Disc), and/or scenes of fast motion.
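The least common multiples quoted above are easy to check. The helper below is a small illustrative function written with `math.gcd`, not something taken from the source.

```python
from math import gcd


def lcm(a: int, b: int) -> int:
    """Least common multiple of two positive integers."""
    return a * b // gcd(a, b)


print(lcm(24, 30))  # 120: cinema and NTSC TV
print(lcm(24, 25))  # 600: cinema and PAL TV
```

A 600 Hz panel would show both 24 fps and 25 fps material without uneven frame repetition, which is why 100 Hz and 200 Hz are described as a compromise for PAL regions.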

As movies are usually filmed at a rate of 24 frames per second, while television sets operate at different rates, some conversion is necessary. Different techniques exist to give the viewer an optimal experience.

The combination of content production, playback device, and display device processing may also introduce unnecessary artifacts. A display device producing a fixed 60 fps rate cannot display a 24 fps movie at an even, judder-free rate. Usually, a 3:2 pulldown is used, giving slightly uneven movement.

While common multisync CRT computer monitors have been capable of running at even multiples of 24 Hz since the early 1990s, recent "120 Hz" LCDs have been produced for the purpose of having smoother, more fluid motion, depending upon the source material, and any subsequent processing done to the signal. In the case of material shot on video, improvements in smoothness just from having a higher refresh rate may be barely noticeable.

In the case of filmed material, as 120 is an even multiple of 24, it is possible to present a 24 fps sequence without judder on a well-designed 120 Hz display (i.e., so-called 5-5 pulldown). If the 120 Hz rate is produced by frame-doubling a 60 fps 3:2 pulldown signal, the uneven motion could still be visible (i.e., so-called 6-4 pulldown).
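The pulldown patterns described above can be illustrated by counting how many refresh cycles each source frame is held on screen. This is a simplified sketch with a hypothetical function name; it assumes progressive display and ignores interlaced fields, and real displays may start the pattern at a different phase.

```python
def cadence(source_fps: int, refresh_hz: int, frames: int) -> list[int]:
    """Number of refresh cycles each of the first `frames` source frames is shown."""
    counts = []
    for i in range(frames):
        first_refresh = (i * refresh_hz) // source_fps
        next_first_refresh = ((i + 1) * refresh_hz) // source_fps
        counts.append(next_first_refresh - first_refresh)
    return counts


# 24 fps film on a 60 Hz display: alternating holds (3:2 pulldown pattern)
print(cadence(24, 60, 4))    # [2, 3, 2, 3]

# 24 fps film on a native 120 Hz display: every frame held equally (5-5)
print(cadence(24, 120, 4))   # [5, 5, 5, 5]

# Frame-doubling the 60 Hz pulldown signal keeps the unevenness (6-4)
print([2 * n for n in cadence(24, 60, 4)])  # [4, 6, 4, 6]
```

The uneven hold times in the first and last cases are what the eye perceives as judder.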

Additionally, material may be displayed with synthetically created smoothness with the addition of motion interpolation abilities to the display, which has an even larger effect on filmed material.

Monitors already do report their maximum refresh rate to your PC. Your computer then generally sends new frames at this maximum refresh rate, whether new data is available or not.

In theory we could only send updates when pixels change, but in practice there isn't much reason to do so. The whole hardware chain has to support updating every pixel on every frame all the time, so it's highly efficient at doing so. This is a dedicated high-bandwidth link, so you're not going to gain much at all by reducing the throughput across the link. In fact, you'd probably end up spending more money and energy on hardware to encode and decode the delta frames on each end of the link. It just makes more sense to send the whole frame each time, especially for applications like games and movies, where 100% of the pixels are going to change every frame anyway.

As for your variable framerate idea, this is another can of worms. The entire display chain, from applications to the operating system to the graphics card through the monitor, is built on the concept that updates happen at a fixed, pre-defined interval. Changing this to a variable interval would require that we throw all of that out. Furthermore, how do you decide what framerate to use at any given moment? Suppose your monitor can update at 100Hz maximum, and you open a 60fps video in a window. Does that then force everything else to update at the 60fps refresh rate even if it wants to update the monitor at 100Hz or 90Hz? If you're updating a portion of the screen at 60fps, then you no longer have any evenly-divisible time slices in which to do your 100Hz equivalent updates.

A much better solution is to just embrace the ubiquitous fixed-framerate system we have now and increase the refresh rate of our monitors to 120Hz or more. This maintains backwards compatibility for older monitors, but gives us small enough time steps that we can display any effective framerate we want. 120Hz is an even multiple of 24fps, 30fps, and 60fps and it's fast enough that your eyes would never notice anything was amiss on other frame rates.
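To make the "evenly divisible time slices" point concrete, here is a rough sketch that checks how many refresh cycles each content frame occupies. The function names are made up for this example.

```python
from fractions import Fraction


def refreshes_per_frame(refresh_hz: int, content_fps: int) -> Fraction:
    """Exact number of refresh cycles per content frame."""
    return Fraction(refresh_hz, content_fps)


def divides_evenly(refresh_hz: int, content_fps: int) -> bool:
    """True if every content frame can be held for the same whole number of refreshes."""
    return refresh_hz % content_fps == 0


for fps in (24, 30, 60):
    print(fps, refreshes_per_frame(120, fps), divides_evenly(120, fps))

# 60 fps video on a 100 Hz monitor: 5/3 refreshes per frame, so holds are uneven
print(60, refreshes_per_frame(100, 60), divides_evenly(100, 60))
```

At 120Hz each of the common rates maps to a whole number of refreshes (5, 4, and 2), whereas 60fps on 100Hz would need frames held for a mix of one and two refreshes.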


The refresh rate of a monitor or TV is the maximum number of times the image on the screen can be drawn, or refreshed, per second. The refresh rate is measured in hertz.

The picture on a screen is not truly static. Instead, the image is "redrawn" over and over so quickly (typically 60, 75, 85, or 100 or more times per second) that the human eye perceives it as a static image or a smooth video.

This means that the difference between a 60 Hz and 120 Hz monitor, for example, is that the 120 Hz one can create the image twice as fast as the 60 Hz monitor.

In a CRT, an electron gun sits behind the glass of the monitor and fires a beam of electrons at the phosphor-coated screen, which glows to produce an image. The beam starts at the very top left corner of the screen and then quickly fills it with the image, line by line across the face and then downward until it reaches the bottom, after which the electron gun moves back to the top left and starts the whole process over again.

While the electron gun is in one place, another portion of the screen may be blank as it waits for the new image. However, due to how fast the screen is refreshed with the light of the new image, you don't see this.

If the refresh rate of a monitor is set too low, you may be able to notice the "redrawing" of the image, which we perceive as a flicker. Monitor flickering is unpleasant to look at and can quickly lead to eye strain and headaches.

The refresh rate setting can be changed to reduce this flickering effect. See our How to Change a Monitor's Refresh Rate Setting in Windows guide for instructions on doing this in all versions of Windows.

All LCD monitors support a refresh rate that is typically over the threshold that normally causes flicker (usually 60 Hz) and they don't go blank between refreshes as CRT monitors do.

The highest possible refresh rate isn't necessarily better. Setting the refresh rate over 120 Hz, which some video cards support, may have an adverse effect on your eyes as well. Keeping a monitor's refresh rate set at 60 Hz to 90 Hz is best for most.

Attempting to adjust a CRT monitor's refresh rate to one that's higher than the specifications of the monitor may result in an "Out of Frequency" error and leave you with a blank screen. Try starting Windows in Safe Mode and then changing the monitor refresh rate setting to something more appropriate.

Three factors determine the maximum refresh rate: the monitor's resolution (lower resolutions typically support higher refresh rates), the video card's maximum refresh rate, and the monitor's maximum refresh rate.

You can change the refresh rate on your monitor through your display settings, though it may be limited by the monitor, graphics card, and screen resolution.

Your display's refresh rate is a measure of how frequently the on-screen image is updated. Refresh rate is measured in hertz (Hz), which indicates the number of times per second something occurs. So a 60Hz display is one that refreshes 60 times per second.

Most people don't need to worry much about refresh rate, especially when using a computer for ordinary productivity applications. That's because computer displays typically have a refresh rate of 60Hz (the default for many monitors), and that value is perfectly adequate almost all the time. It's fast enough that there's no obvious blurring or "image tearing" when there's on-screen motion. Below this value, at 30Hz for example, you might see some stuttering or jitteriness when things are moving on the screen.
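One way to get a feel for these numbers is to convert a refresh rate into the time each image stays on screen. The helper name below is just for illustration.

```python
def refresh_interval_ms(refresh_hz: float) -> float:
    """Time between screen updates, in milliseconds."""
    return 1000.0 / refresh_hz


for hz in (30, 60, 144, 240, 360):
    print(f"{hz:>3} Hz -> {refresh_interval_ms(hz):.2f} ms per refresh")
```

At 60Hz the image updates roughly every 16.7 ms, while at 30Hz each image lingers for about 33 ms, which is long enough for motion to start looking choppy.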

Higher refresh rates are of interest to gamers, though. Because many video games rely on fast-moving visuals, many computer displays are optimized for gaming with very high refresh rates — 144Hz, 240Hz, and even 360Hz. For gaming, the higher the refresh rate the better.

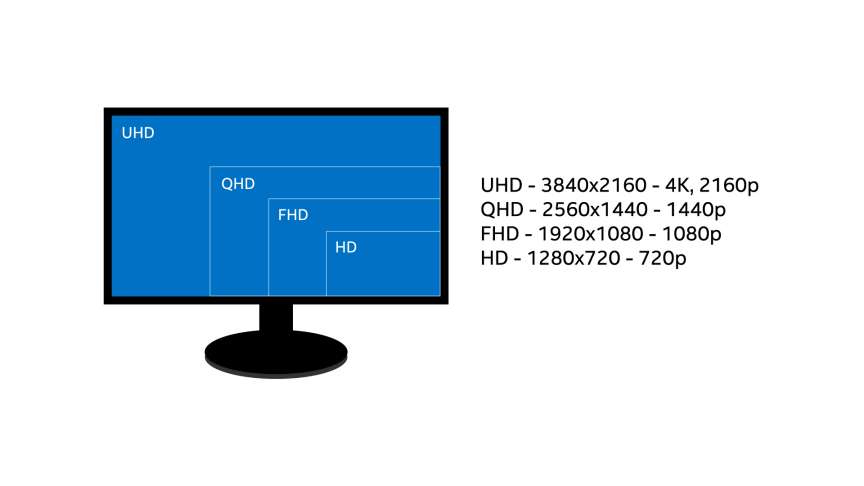

There's also a relationship between screen resolution and refresh rate. Because a computer only has so much bandwidth for the display, you might find that at higher resolutions, your display can't run at its maximum refresh rate. If you are adjusting these settings, you might need to make a choice between, for example, an HD (1920x1080) display at 144Hz or a 4K (3840x2160) resolution at 60Hz.
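A rough way to see the trade-off is to multiply out the pixels sent per second. This sketch is a simplification: it ignores blanking intervals and link-level overhead, and the 24 bits per pixel figure is an assumption for illustration rather than a property of any particular display.

```python
def pixel_rate(width: int, height: int, refresh_hz: int) -> int:
    """Visible pixels sent per second (blanking intervals ignored)."""
    return width * height * refresh_hz


def data_rate_gbps(width: int, height: int, refresh_hz: int,
                   bits_per_pixel: int = 24) -> float:
    """Approximate uncompressed video data rate in gigabits per second."""
    return pixel_rate(width, height, refresh_hz) * bits_per_pixel / 1e9


for name, (w, h, hz) in {"HD at 144Hz": (1920, 1080, 144),
                         "4K at 60Hz": (3840, 2160, 60)}.items():
    print(f"{name}: {pixel_rate(w, h, hz):,} pixels/s, "
          f"about {data_rate_gbps(w, h, hz):.1f} Gbit/s")
```

Under these assumptions, 4K at 60Hz already needs more throughput than HD at 144Hz, so pushing 4K to 144Hz would require well over twice the bandwidth again.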

Depending on your monitor or laptop display, you might be able to adjust the refresh rate. Some displays aren't running at their highest refresh rate by default, for example, and it's adjustable in the system settings. If you try to increase the refresh rate but it won't go as high as you think the monitor is designed to go, you might need to lower the resolution to make a higher value become available.

How to change the refresh rate on your PC monitor

You can open the refresh rate settings on any Windows PC, though whether you can change it, and what values you can set, depends on your graphics card, monitor or laptop display, and the resolution you are running.

Click the Start button and click "Settings."

Click the link "Advanced display settings." On the Advanced display settings page, you can see details about your monitor, including the currently set refresh rate.

Finally, in the Monitor Settings section, you can click the Screen refresh rate dropdown and choose a different refresh rate. Click "OK" to save this setting.

How to change the refresh rate on your Mac monitor

If you have a MacBook Pro (16-inch 2019 model) or a Pro Display XDR monitor for your Mac, you can adjust the refresh rate. Here's how:

Click the Apple menu, then choose "System Preferences."

Dave Johnson is a technology journalist who writes about consumer tech and how the industry is transforming the speculative world of science fiction into modern-day real life. Dave grew up in New Jersey before entering the Air Force to operate satellites, teach space operations, and do space launch planning. He then spent eight years as a content lead on the Windows team at Microsoft. As a photographer, Dave has photographed wolves in their natural environment; he's also a scuba instructor and co-host of several podcasts. Dave is the author of more than two dozen books and has contributed to many sites and publications including CNET, Forbes, PC World, How To Geek, and Insider.

To show moving images, a television has to change out the pixels displayed on the screen. This is what we mean when we say the television refreshes the image: it has to draw images in pixels so quickly that the human eye can't detect the process. If televisions didn't refresh the pixels, they could only display a still image. That's not good TV.

The standard television refresh rate is 60 hertz. That means the screen displays an image 60 times every second. An interlaced television will refresh the odd and even lines 30 times a second each in an alternating pattern. Even at this rate, we don't notice the screen refreshing because it's too fast for us to detect.

Early LCD high-definition televisions had great resolution but experienced some problems when displaying fast-moving images on screen. Action movies and sporting events in particular gave early LCD sets problems. The images tended to blur as they moved across the screen. Plasma screens didn't have the same problem, giving that format the advantage when it came to high-speed television content.

The solution to the LCD problem was to increase the refresh rate. A few years ago, the first 120 hertz sets showed consumers that by doubling the refresh rate, the set could reduce the blurring effect. By early 2009, sets with a 240 hertz or higher refresh rate were either on store shelves or scheduled for release.

The higher refresh rates indicate that the televisions refresh the screen more often each second. Whether the faster rate has a noticeable effect on the viewer's experience is subjective. A viewer may not be able to tell the difference between a set refreshing at 120 hertz and one with a 240 hertz refresh rate.

Some HDTV sets interpolate frames. Basically, the television analyzes two adjacent frames of content and creates a frame to fill the gap between the two in order to smooth out the movement.

Computer monitors often have a "maximum refresh rate" listed in their technical specifications. This number, measured in hertz (Hz), determines how many times the screen is redrawn each second. Typical refresh rates for CRT monitors include 60, 75, and 85 Hz. Some monitors support refresh rates of over 100 Hz.

The higher the refresh rate, the less image flicker you will notice on the screen. Typically a refresh rate of less than 60 Hz will produce noticeable flicker, meaning you can tell the screen is being redrawn instead of seeing a constant image. If the refresh rate is too slow, this flicker can be hard on your eyes and may cause them to tire quickly. As if sitting at a computer for several hours wasn't hard enough!

To avoid flicker, you should set your monitor to use the maximum refresh rate possible. This setting is found in the Monitors control panel in Windows and the Displays system preference in Mac OS X. While 60 Hz is considered a good refresh rate, some people will find that 85 Hz is significantly better.

The maximum refresh rate is determined by three factors: 1) the rate your video card supports, 2) the rate your monitor supports, and 3) the resolution your monitor is set at. Lower resolutions (e.g. 800x600) typically support higher refresh rates than higher resolutions (e.g. 1600x1200).

If you have an LCD monitor, you may not be able to adjust the refresh rate. This is because most LCD monitors come with a standard refresh rate that is well above the "flicker" point. LCD monitors produce less flicker than CRT monitors because the pixels on an LCD screen hold their state between refreshes, whereas the phosphors on a CRT begin to fade almost immediately.

A cathode ray tube is nothing more than a vacuum tube with an anode and a cathode. It just has the anode and cathode in different places and also has a phosphor on one end. Inside there is an amplifier and a high-voltage flyback transformer.

To make things simple (which will put me on the verge of being told where I'm wrong), I'm going to explain how it displays the image. The signal, whether it is VGA, DVI, or BNC, analog or digital, is sent to the amplifier, where it is amplified, split, and processed, then sent to the flyback transformer for control and on through the electron gun. The electron gun is a single point that electrons fire off of; they fly through a vacuum (so that there aren't any ions to absorb them) before hitting a particular phosphor at one point in time, which depends on what frequency the aperture grille is at and what frequency the electrons hit it at, among other things. When an electron hits a given phosphor, then depending on the quantum state, how big the forbidden gap is, and what threshold frequency the electrons have when they strike the phosphor, a photon is released toward the opposite side of the screen, spinning into the atmosphere before hitting your corneas and cinching the hell out of them. Since the electron gun can only point at a certain group of phosphors (depending on screen res) at a given time, it has to strobe back and forth, using finely tuned electromagnets to push and pull the stream of electrons.

I can't thoroughly explain this without naming a certain res. Let's use 800 by 600. Once the gun has moved across 800 times and down 600 times from the top left of the screen and come back to the very top left, it has completed one cycle, or Hz. Phosphors have the tendency to decay from the state they were in when the electron stream hit them. They tend to go back down to their natural state below the photoelectron threshold. Usually by the time the electron gun is at the bottom of the screen, the top of the screen is already 70 percent decayed. To compensate, we can turn up the refresh rate! A higher refresh rate means the phosphors are more stable on a particular color. It also means the screen updates from the video card more often, for a smoother image. A CRT can change frames midway with a smooth transition from one frame to the next; this is why we can appreciate those extra headshots from a CRT at 100 Hz. This is not vsync; that is where the video card starts drawing a certain frame before the last one is finished. This happens at a much faster rate: the electron gun is halfway down, the video has no vsync problems, and it changes midway, so the electron gun draws a portion of one frame and then draws the last portion from the new frame. (Too complicated to explain.) Just don't argue that it's vsync; it's not. Vsync can happen across 3-4 frames, whereas these transitions happen, I must say, within 1 Hz of the 60 Hz (or whatever you have defaulted). This is that flicker you see. If you turn up your resolution, then it's harder for the deflectors to push and pull your electron stream steadily, so you have to turn it down so there is no blurring! Or use more of everything electronics-wise, like the FW900, and have hellacious back problems.

The reason that CRTs produce more accurate colors is that the phosphor creates the photon, unlike an LCD, which blocks them. When you turn up your refresh rate you have less of the flicker that hurts your eyes, and you get exactly what your video card is producing at that moment (depending on your connection lag). Also, you can take certain pins off your cable (nothing new) and be able to run at any resolution your video card can handle, although you may bring your CRT down to as little as 9 Hz, which is not very good for your monitor, I assure you. Yes, we have had high definition on CRTs for years, and even more so than your 720p and 1080p stuff.

LCDs work by having a backlight that can be dynamic or static. They work by deflecting light. Every particular color that humans can see has a particular frequency (and so do those we can't see). To keep things simple: an LCD uses a crystal that oscillates back and forth to counteract the color. The frequency it is oscillating at determines what color the white backlight changes to as it comes through. The crystal is at its fastest whenever it is showing black (I think it may be white, but the process and the point are still the same).

Let's take a video card producing frames at 80 fps and a display running at 60 Hz. A CRT would let you push 80 fps at 60 Hz; it would just flicker. An LCD would not: it is limited by the 60 Hz cap, and because one frame is one Hz, 60 per second, it frame skips. Also, during that time the crystals are oscillating at, say, 2 ms. The crystals themselves can transition 4-6 times within each frame on gray-to-gray and 1-2 times on black-to-white. Let's say that we had a solid 2 ms on every color, a 10 ms frame, and a 60 Hz signal. You could theoretically change 5 times within that 10 ms frame and not have any blurring. But the crystals are limited to 60 Hz input. Let's say we were to up the screen to 120 Hz. What would happen under these conditions? It would take the cap off the crystals; they wouldn't be waiting for a signal at 60 Hz to tell them to change color, so they would change at a faster rate. Maybe not at 120 Hz, but maybe somewhere around the equivalent of a CRT at 72-80 Hz. And if you were to overdrive them even more than the manufacturers are doing now, you could get even closer to a 120 Hz screen.

You silly consumers, a 60 Hz signal has nothing to do with the rate at which a crystal can oscillate. They don't want to change them as of yet; it's marketing. All they would have to do is change the thickness of the crystal being cut. Simple as that. Have you ever noticed that the 120 Hz screens are snappy, then slow, snappy, then slow, with all kinds of problems? The pixels themselves can do 120 Hz. Hell, that's easy: a crystal in a sound card oscillates from 20 to 20,000 Hz, doesn't it? Or correct me if I'm wrong, please do! They are snappy because the signal is 60 Hz and they are producing 120 Hz off of this signal. How can you double your fps without a scaler chip, if there isn't something driving it directly? The scaler chips used in today's monitors are exactly what is keeping us from hitting 120 Hz screens. You think that because you can see the pixels (screen-door effect) it is limited to 60 Hz? No way. That is so freaking slow. What you need is a monitor with a good scaling chip. I have the L227WTG-PF and you wouldn't tear it from my cold dead hands.

OK, so yes, you can have your monitor go faster than 60 Hz; it's that manufacturers need to produce monitors without scaler chips, and the chips need to be built into the video cards. Yes, my monitor can do 75 Hz and I have no frame skipping. Have you ever noticed that the image gets smoother whenever you have a lower response time, no matter if it's set at 60 Hz or not? Well, once you get down to around 2 ms it can't go any faster than 60 Hz. That's why we are stuck with no progress below 2 ms response time: we are waiting for scaler chips that can go faster than 60 Hz, maybe a 120 Hz scaler chip, and then we can go to 1 ms. So you guys and gals who have monitors at 6 ms, don't even attempt to go past 60 Hz; you are nowhere near the 2 ms border. You won't notice a difference! But those of us who are lucky enough to have an L227WTG-PF, and are proud of it, can enjoy 1:1 pixel mapping at all resolutions, no yellow-tinged whites, and a 2 ms response time. By the way, TN panels from the last 2 years are way better than they were years ago. And even though it has a viewing angle like all TNs, its ability to give me clear games and deep vivid colors is far superior, to me, to an H-IPS that is cap-limited at around 50 Hz. When you pull those pins out, your scaler chip and EDID don't know what you are doing, which lets you take advantage of your pixels' response time, not your fps cap. You can have up to 5 transitions within 1 frame on an LCD, but the scaler chip and firmware frame-cut it.

I understand 1 frame is about 17 ms, so theoretically a 2 ms crystal can change 8 times within each frame, if all colors had the same transition time. At 60 Hz, 60 x 17 equals 1020 ms, so if you were to divide this by a 2 ms crystal you would get 510 transitions per second if we didn't have a cap on the crystal. But since the pixels are all updated at once each frame, we have some crystals already finished transitioning and others not. Whether the scaler chip were programmed to allow individual pixels to change, instead of the panel as a whole, would make a big difference. This is why we have an fps cap at 60 Hz and why people changing to 75 Hz are getting better results. If the scaler lets the signal bypass to the pixels, then you get a smoother picture; if it doesn't, then you're just pumping 75 Hz to a scaler that brings it down to 60 Hz and skips frames. Luckily my monitor doesn't and allows it to pass! People who have too high a response time can't take advantage. And for those people who have tried it on their monitors using DVI, try switching back to VGA to see if you can get a smoother image. I can on both DVI and VGA, but some can only on VGA.

Accurate stimulus presentation is vital to research in vision, and the biggest limitations are imposed by the device used to display stimuli (Krantz, 2000; Bukhari and Kurylo, 2008). In early times, accurate timing of visual stimuli was achieved by specialized apparatus such as tachistoscopes (Mollon and Polden, 1978). Later, these expensive devices were replaced by cheaper and broadly available cathode-ray tube (CRT) monitors, which could be controlled conveniently and flexibly via personal computers (PCs; Brainard, 1989). These systems allowed researchers to adjust a much larger number of stimulus’ parameters and are partly responsible for the expansion of the research in vision.

Nevertheless, CRT monitors are not perfect as they continue to pose several limitations for researchers. First, the light is not presented continuously, but discretely in flashes. In CRT monitors each pixel of the screen changes from bright to dark at a certain vertical refresh rate (60 Hz or higher), producing constant flicker. This fast flicker is largely fused by our visual system (Kristofferson, 1967; Andrews et al., 1996; Carmel et al., 2006), but not necessarily completely, as it can affect electrophysiological recordings (Wollman and Palmer, 1995; Krolak-Salmon et al., 2003; Williams et al., 2004). This technology, based on non-continuous light sources, makes an exact calculation of the stimulus presentation time difficult (Bridgeman, 1998). For example, if an image is presented nominally for four screen frames with a 100-Hz refresh rate, the total time span over which the screen is emitting light does not correspond to 4 × 10 = 40 ms, but to a shorter period of time, the exact duration of which depends on the duration of each flash, which in turn depends on the phosphor decay characteristics of a particular screen. Another disadvantage of CRT monitors is the lack of independence between neighboring pixels (Pelli, 1997). This can be illustrated by presenting the finest possible grating (i.e., one-pixel raster of black and white stripes) with either horizontal or vertical orientation, in which cases the luminance can differ dramatically (Pelli, 1997; Krantz, 2000). Moreover, CRT monitors produce electromagnetic radiation and hence often induce noise in electrophysiological recordings. This noise is correlated to the stimulus and hence cannot be averaged out. Finally, CRT monitors are becoming increasingly difficult to purchase due to the shrinkage of the market for such products.

There are several possible replacements of CRT monitors in experiments, such as those based on digital light projection or mechanical shutters (Packer et al., 2001; Wiens et al., 2004; Fischmeister et al., 2010). However, these solutions need complex setups and their costs are considerable, which limits their application. More general solutions for visual stimulation may be based on liquid crystal display (LCD) technology. LCD monitors present images continuously, i.e., without flicker, generate the luminance of the neighboring pixels independently, produce negligible electromagnetic noise, and are broadly available. Unfortunately, so far, these monitors could not be used widely for research in vision because they lacked the speed and accuracy mandatory for scientific investigation of the visual system. These monitors were sluggish in responding to the changes dictated by the computer software and were unable to set the intensity of illumination with sufficient reliability (Wiens et al., 2004). These two disadvantages rendered LCD monitors not applicable for most studies in vision. However, as the technology for LCD monitors has been improving steadily, one can expect LCD monitors to catch up eventually with CRTs also with respect to timing and luminance accuracy. We report here that at least one recently introduced LCD model has sufficient speed, and temporal and luminance accuracy adequate for most research purposes in vision.

The timing and intensity of luminance changes on the screen were controlled by a general-purpose PC using the specialized software ActiveSTIM (www.ActiveSTIM.com), which enables creating simple scripts for visual stimulation in conventional programming languages such as LabView, Matlab, C++, etc. The PC was equipped with a modern, but inexpensive, display card (NVIDIA Quadro FX580), which was used to drive, one at a time, the three monitors that were tested: (i) a new model of 22′′ 120 Hz LCD monitor (SAMSUNG 2233RZ), backlit by a cold cathode fluorescent lamp and developed for 3D gaming purposes, which was our candidate for a new lab monitor for visual stimulation, referred to as LCD-1. The performance of that monitor was compared to (ii) our current visual stimulation monitor, a 21′′ CRT (ViewSonic P227f, CRT), and (iii) an older LCD model (DELL 3007WFPt, LCD-2), which we knew did not satisfy the needs for visual stimulation.

The settings of brightness, contrast, and gamma mode for all monitors were kept constant during the test. In LCD-1, these values were set to 100, 75, and 1, respectively. In the CRT, brightness and contrast were set to 0 and 88, respectively. This monitor did not have an option for setting the gamma mode. In LCD-2 the default setup was used as there was no numeric indicator of the intensity of the luminance set manually. All the tests were taken with the same amount of ambient light. The LCD monitors were always used with their native resolutions, 1680 × 1050 for LCD-1 and 2560 × 1600 for LCD-2. The resolution of the CRT was 1024 × 768, unless specified otherwise.

A lux probe from a luxmeter (Voltcraft, LX-1108) was used to measure the luminance delivered by the monitors. The sensor of the probe is shaped as a semi-hemisphere surrounded by a black plastic light shield (see Figure A1 in Appendix). The sensor was fixed to the screen with adhesive tape during measurements and always covered by a lid when no measurements took place. To measure average luminance produced by the images over multiple screen frames, the value from the sensor was read by an electronic readout device supplied with the probe, returning values directly in lux. To investigate frame-to-frame dynamic changes of the screen luminance, we used our system for the acquisition of electrophysiological data to first amplify (30×) the voltage generated by the sensor and then record the signal with high sampling frequency (10 kHz). The timing of stimuli was controlled by the phase change of a digital signal (trigger) sent by ActiveSTIM via a digital IO board (National Instruments, NI-6503). The pulses were sent at the beginning of each measurement, and were recorded by the acquisition system. We also tested how the luminance was affected by changes in the viewing angles for a viewer positioned in front of the center of the screen and viewing either the center or the periphery of the screen.

All the generated images had square form and their size (∼7 cm × 7 cm) exceeded well the screen area sampled by the sensor. In the first test, the measurements of the luminance during sustained illumination of the screen, the used image was either uniform white or consisted of full contrast (i.e., white/black) high-frequency grating with the widths of the stripes spanning a single pixel. The grating was oriented either vertically or horizontally. The lux values for white squares and gratings were measured at nine locations on the screen: the center, the four corners, and the four midpoints between the corners. On each location, the luminance produced by grating and uniform white images was measured twice, in the following order: vertical, horizontal, uniform, uniform, horizontal, and vertical. These measurements were made with 60 Hz refresh rate on all three monitors and with 120 Hz on LCD-1 and CRT (LCD-2 does not support refresh rates higher than 60 Hz). In addition to the resolution 1024 × 768, at 120 Hz the CRT monitor was tested also with another higher resolution (1280 × 1024), closer to those of the two LCDs.

To investigate the effect of a change in a viewing angle, the luminance of the screen was set constant and a chroma-meter (Konica Minolta, model CS-100A) was positioned at the distance of 57 cm facing the center of the monitor, which is a relatively small viewing distance for a typical experiment in vision and hence, it estimated the upper boundary of the magnitude of the effects of different viewing angles. The luminance values were measured by rotating the chroma-meter along one horizontal and the vertical axis to point to five locations on the screen: the center of the screen and the four centers of the four edges of the screen. The performance was compared between CRT and LCD-1 at 120 Hz.

In LCD-1 and CRT we measured also the maximum luminance contrast and the resolution of the generated luminance. The maximum luminance contrast was defined as the ratio between the difference and the sum of the maximum and the minimum luminance [RGB values (255 255 255) and (0 0 0), respectively; i.e., (max − min)/(max + min)]. To investigate the resolution of the generated luminance, we attempted making luminance changes in the steps much finer than those provided by individual RGB values in the default mode. To this end, we re-defined the color-lookup table to split three luminance levels (RGB values, 100, 101, and 102) into 21 luminance levels. The luminance levels generated by the two monitors were then measured for the white and separately, for each of the three color channels. The color-lookup table was set back to default for all other tests.
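The lookup-table manipulation described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name and the normalized 0 to 1 output scale are assumptions, and the sketch only computes the requested intensities without writing them to any graphics hardware.

```python
def fine_gray_ramp(first_level=100, last_level=102, steps_per_level=10, max_level=255):
    """Return requested intensities (0..1) that subdivide [first_level, last_level].

    With the defaults, the span of three default RGB levels (100, 101, 102)
    is split into 21 requested levels spaced 1/10 of a default step apart.
    Hypothetical helper; how the values are loaded into a color-lookup table
    depends on the stimulation software and is not shown here.
    """
    n_entries = (last_level - first_level) * steps_per_level + 1
    return [
        (first_level + i / steps_per_level) / max_level
        for i in range(n_entries)
    ]


if __name__ == "__main__":
    ramp = fine_gray_ramp()
    print(len(ramp), ramp[0], ramp[-1])
```

An 8-bit display pipeline would be expected to quantize these 21 requested values back onto the three original levels, which is the behavior the test is designed to reveal.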

In the second test we measured accurately the time of rise and fall of illumination when an image was presented for a limited amount of time (number of screen frames). A white square (RGB 255, 255, 255) appeared in the center of the display, which returned back to black (RGB 0, 0, 0) after a duration of either 1, 2, 3, 6, 10, 15, 20, or 30 frames of screen refresh. Each of these eight durations was presented in a block consisting of 100 repetitions, starting with a block of 1-frame and ending with a block of 30-frame long images. Thus, there were 800 measurements in total, each lasting 600 ms. LCD-1 and CRT were tested at 60, 100, and 120 Hz refresh rates, and LCD-2, only at 60 Hz.
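As a rough illustration of this block design, the sketch below enumerates the presentations and adds up the recording time. The constant and function names are invented for the example; only the durations, the repetition count, and the 600 ms measurement length come from the text.

```python
DURATIONS_FRAMES = [1, 2, 3, 6, 10, 15, 20, 30]  # stimulus durations in frames
REPETITIONS = 100                                # repetitions per block
MEASUREMENT_MS = 600                             # length of one recording


def build_schedule():
    """List of (duration_in_frames, repetition_index), blocked by duration."""
    return [
        (frames, rep)
        for frames in DURATIONS_FRAMES
        for rep in range(REPETITIONS)
    ]


def nominal_duration_ms(frames, refresh_hz):
    """Nominal stimulus duration: number of frames times the frame period."""
    return frames * 1000.0 / refresh_hz


if __name__ == "__main__":
    schedule = build_schedule()
    total_s = len(schedule) * MEASUREMENT_MS / 1000.0
    print(len(schedule), "measurements,", total_s / 60.0, "minutes")
    print("longest stimulus at 60 Hz:", nominal_duration_ms(30, 60), "ms")
```

The total comes to 800 measurements and 480 s of recording, and the longest stimulus (30 frames at 60 Hz, nominally 500 ms) fits inside a single 600 ms measurement.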

In the third test we investigated luminance delivered by the monitors when the luminance of the presented image changed randomly at each frame of screen refresh. To this end, a square at the center of the screen took all the 256 different shades of gray (from RGB 0, 0, 0 to RGB 255, 255, 255) in a random order, each shade lasting for only one frame. 100 such sequences were shown, each randomized anew. LCD-1 and CRT were tested at 60 and 100 Hz (LCD-2 was not tested).
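A sequence generator for this test might look like the sketch below. It is an assumption-laden illustration: the function name and the optional seed are not from the source, and Python's standard `random` module stands in for whatever randomization the stimulation software actually used.

```python
import random


def gray_sequences(n_sequences=100, n_levels=256, seed=None):
    """Return n_sequences independent random orderings of the gray levels.

    Each inner list contains every level 0..n_levels-1 exactly once, so each
    shade is shown for exactly one frame per sequence.
    """
    rng = random.Random(seed)  # seed is optional, for reproducible examples
    sequences = []
    for _ in range(n_sequences):
        levels = list(range(n_levels))
        rng.shuffle(levels)    # randomized anew for every sequence
        sequences.append(levels)
    return sequences


if __name__ == "__main__":
    seqs = gray_sequences(seed=1)
    print(len(seqs), len(seqs[0]))
```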

Finally, the second and the third tests were conducted also for LCD-1 mimicking the flickering flashes of a CRT. Here, LCD-1 was run at 120 Hz but every second screen frame was always set to full black, resembling the luminance flicker of a 60-Hz CRT monitor.
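The frame interleaving used for the mimicked CRT can be sketched in a few lines. The function name is hypothetical, and gray levels are represented as plain integers rather than actual frame buffers.

```python
def mimic_crt(stimulus_frames, black=0):
    """Insert a full-black frame after every stimulus frame.

    Shown at 120 Hz, the returned sequence changes its stimulus content at
    60 Hz, with each stimulus frame followed by one black frame.
    """
    interleaved = []
    for level in stimulus_frames:
        interleaved.extend([level, black])
    return interleaved


if __name__ == "__main__":
    print(mimic_crt([255, 255, 128]))
```

Each stimulus frame then occupies one 120 Hz frame (about 8.3 ms) out of every 16.7 ms, which is what produces the CRT-like flicker.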

To account for possible DC drifts of the recording system, the baseline luminance (i.e., full black) in the second and third tests was subtracted from all signals prior to further analyses. The baseline luminance was taken from the recordings 60–10 ms prior to the occurrence of digital trigger. Since different monitors differed in absolute luminance generated, it was necessary to normalize the measured values to the maximum (i.e., full white) for each monitor. The maximum luminance for the normalization reference was determined as the peak luminance within a presentation period that lasted 30 frames (at 60 Hz) and that was averaged across 100 repetitions.
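The baseline correction and normalization steps can be illustrated with the following sketch. It assumes a trace stored as a list of samples recorded at 10 kHz with the trigger position known as a sample index; the function names and this data layout are assumptions made for the example.

```python
SAMPLE_RATE_HZ = 10_000  # sampling frequency stated in the Methods


def ms_to_samples(ms, sample_rate_hz=SAMPLE_RATE_HZ):
    """Convert a duration in milliseconds to a whole number of samples."""
    return round(ms * sample_rate_hz / 1000)


def baseline_correct(trace, trigger_index, sample_rate_hz=SAMPLE_RATE_HZ):
    """Subtract the mean of the window 60 to 10 ms before the trigger."""
    start = trigger_index - ms_to_samples(60, sample_rate_hz)
    stop = trigger_index - ms_to_samples(10, sample_rate_hz)
    if start < 0:
        raise ValueError("trace must contain at least 60 ms before the trigger")
    window = trace[start:stop]
    baseline = sum(window) / len(window)
    return [value - baseline for value in trace]


def normalize(trace, full_white_peak):
    """Express a baseline-corrected trace as a fraction of the full-white peak."""
    return [value / full_white_peak for value in trace]


if __name__ == "__main__":
    raw = [0.5] * 1000 + [2.5] * 100          # toy trace: offset of 0.5, step to 2.5
    corrected = baseline_correct(raw, trigger_index=1000)
    print(normalize(corrected, full_white_peak=2.0)[-1])
```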

The onset time of illumination was defined as the time at which the luminance rose to half of its maximum. We defined the offset time in a similar way: the point at which the luminance reduced to half of its maximum in the last frame. To quantify the properties of the luminance rise and fall, we fit the following exponential function:

to the regions of the measured luminance curves that preceded the onset times and that followed the offset times, respectively. The smaller the time constant c, the steeper are the rising/falling slopes, and thus the monitor is closer to the ideal performance. These measures are also related to the magnitude of the motion blur produced by the screen (Watson, 2010). The duration of illumination was defined then simply as the interval between the onset time and offset time.
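The half-maximum definitions and the role of the time constant can be illustrated with a small sketch. Two assumptions are made loudly here: the onset and offset are taken as the first and last samples at or above half of the maximum (no interpolation between samples), and the fitted flank is modeled as a plain decay y = a·exp(−t/c), which is only one plausible form since the authors' exact function is not reproduced in this text. All function names are hypothetical.

```python
import math


def half_max_times(trace, sample_rate_hz=10_000):
    """Return (onset_ms, offset_ms, duration_ms) from half-maximum crossings.

    Expects a baseline-corrected trace. Onset is the first sample at or above
    half of the maximum, offset is the last such sample.
    """
    half = max(trace) / 2.0
    above = [i for i, value in enumerate(trace) if value >= half]
    if not above:
        raise ValueError("trace never reaches half of its maximum")
    ms_per_sample = 1000.0 / sample_rate_hz
    onset, offset = above[0], above[-1]
    return onset * ms_per_sample, offset * ms_per_sample, (offset - onset) * ms_per_sample


def decay_time_constant_ms(times_ms, values):
    """Estimate c in y = a * exp(-t / c) by least squares on ln(y).

    Assumed model, for illustration only; all values must be positive.
    """
    logs = [math.log(v) for v in values]
    n = len(times_ms)
    mean_t = sum(times_ms) / n
    mean_l = sum(logs) / n
    covariance = sum((t - mean_t) * (l - mean_l) for t, l in zip(times_ms, logs))
    variance = sum((t - mean_t) ** 2 for t in times_ms)
    slope = covariance / variance          # slope equals -1 / c
    return -1.0 / slope


if __name__ == "__main__":
    square = [0.0] * 100 + [1.0] * 167 + [0.0] * 100   # ideal one-frame pulse at 60 Hz
    print(half_max_times(square))
    t = [0.0, 0.5, 1.0, 1.5, 2.0]
    print(decay_time_constant_ms(t, [math.exp(-x / 1.2) for x in t]))
```

A smaller fitted c means the flank is steeper, in line with the interpretation given above.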

In the third test with random sequences of the shades of gray, the time period over which each frame was illuminated (i.e., the period between the onset and offset time) was determined with methods similar to those in other tests, except that the luminance did not change from full black to a given level of gray but rather, in most cases, from other shades of gray. Hence, the duration of illumination could not be determined accurately for each level of gray, the dark ones being least accurate. As a result, the illumination periods were first determined solely on the basis of the full white frames for which we used the darkest available shades of the preceding and succeeding frames. Within this time window we then calculated the mean luminance for all frames, including those with other levels of gray. Finally, for each level of gray (i.e., each target luminance), these individual averages were normalized relative to the gross average obtained across all 100 repetitions.

We first investigated the consistency of brightness across different locations on the screen. The brightness of a full white statically presented image varied across locations in all three tested monitors. Typically, luminance was highest at the center of the screen. LCD-1, our candidate for a new visual stimulation monitor, exhibited least variability of all tested monitors, while LCD-2, an older model used for comparison, showed the largest variability (Figure 1A). The tested CRT monitor had also variability larger than LCD-1. In addition, as would be expected for back-lit LCDs, these monitors had the advantage of high constancy across different refresh rates, which was problematic for the CRT monitor. Therefore, although LCD-1 does not illuminate the screen with perfect homogeneity, it is much closer to this goal than other tested monitors. This disadvantage of the CRT monitor was compensated by its better luminance stability as a function of changes in viewing angles. When luminance was measured from distance and under an angle by a chroma-meter, the drop of luminance in the corners of the screen was much larger for LCD than for CRT. Consequently, the total drop of generated peripheral luminance about equaled for the two monitors, in one monitor being caused primarily by a reduction in the total amount of light generated (CRT) and in the other by the viewing angle (LCD).

Figure 1. Spatial reliability of generated stimuli. (A) Variability of luminance across screen location. Diamonds: for each monitor and each setting (x-axis), luminance measures on the screen shown for nine different locations (eight along the borders and one in the center). Open circles: for CRT and LCD-1 at 120 Hz, the luminance measured from a viewing distance of 57 cm and from different angles, shown for five locations (the four centers of the borders and one screen center). For easy comparison, the luminance at the center locations was always taken as a norm (i.e., it has been assigned the value of 1.0), others being shown as proportions of the value at the center. Red: the new LCD model tested for the possible suitability for research in vision. (B) Luminance produced by fine (one-pixel wide stripes) gratings of different orientations. The luminance produced by gratings is shown relative to the expectation defined as 1/2 of the luminance produced by a white square (indicated by the horizontal line). Each data point is the normalized luminance averaged over nine locations. Error bar: 95% confidence interval. (C) Test of fine changes in luminance for the levels of gray. To examine the luminance resolution of the monitors, the color-lookup table was modified such that the luminance steps between adjacent RGB values were reduced to 1/10 of those in the default color-lookup table, as shown by the solid line. Hence, the RGB range in the x-axis covered a very small luminance span with fine changes that corresponded to the span of only three RGB values (100–102) of the original lookup table. y-Axis: the luminance normalized to the range measured in this test, which corresponds to about 2.4% of the total luminance range of the monitor.

The independence between neighboring pixels was confirmed for LCD monitors. Horizontally oriented black/white gratings produced in all cases luminance that corresponded accurately to 1/2 of the luminance of a fully white square, as expected (Figure 1B). Gratings oriented vertically produced also identical results when rendered on LCDs. However, when rendered on the CRT, consistent with previous reports (Pelli, 1997; Krantz, 2000), the luminance was reduced relative to the horizontal grating. This reduction became more severe with higher refresh rates and higher screen resolutions. In our most strenuous test (120 Hz refresh rate, 1280 × 1024 resolution), the luminance of the vertical grating got reduced on the CRT to ∼40% of that produced by the horizontal grating (Figure 1B). Thus, only LCD monitors, but not the CRTs, generate the luminance of the neighboring pixels independently.

Theoretically, the highest possible value of luminance contrast is 1, which would require the monitor to produce full black (i.e., zero luminance) with the RGB values (0 0 0). The minimal and maximal luminances for the CRT monitor were 0.7 lx and 145.6 lx, respectively and for LCD-1, 1.3 lx and 236.6 lx, respectively. Thus the contrasts were 99.0 and 98.9% for CRT and LCD-1, respectively. This indicates no substantial difference between the maximum contrasts of these two monitors.
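The reported contrasts follow directly from the definition (max − min)/(max + min) given in the Methods. The short sketch below recomputes them from the measured values; the function name is chosen for the example.

```python
def luminance_contrast(l_min, l_max):
    """Contrast defined as (max - min) / (max + min)."""
    return (l_max - l_min) / (l_max + l_min)


if __name__ == "__main__":
    crt = luminance_contrast(0.7, 145.6)    # CRT, values in lx
    lcd1 = luminance_contrast(1.3, 236.6)   # LCD-1, values in lx
    print(f"CRT:   {100 * crt:.1f}%")       # prints 99.0%
    print(f"LCD-1: {100 * lcd1:.1f}%")      # prints 98.9%
```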

The test of luminance resolution indicated that LCD-1 generated a different value of luminance for each RGB value, but not finer. By modifying the color-lookup table, we requested 21 levels of gray from the monitors within the very narrow range of luminance change corresponding to only three RGB levels of the original setup. In that setting, the monitors were still able to produce only about three levels (Figure 1C), as would be expected from 8-bit color depth screen. The three groups of measured luminance values (filled circles in Figure 1C) suggest that this limitation was imposed by digital technology. The results were similar for the measurements of individual color channels (Figure A2 in Appendix). In contrast, CRT monitor did not produce clear groups of luminance values as the values of luminance generated by this monitor deviated from the ideal in an irregular way (open triangles in Figure 1C). This would be expected from analog technology. In conclusion, both monitors seem to generate luminance levels accurately enough to achieve 8-bit color depth, each achieving this goal with a different technical solution.

Our second and more important set of tests was about precise temporally resolved measurements of the changes in luminance (see Methods). Repeated generations of an image enabled us to assess the reliability of the generated luminance. LCD-1 and CRT performed both excellently, as they produced identical luminance traces across repetitions. To illustrate this point, in Figure 2A, the non-averaged luminance traces of each of the 100 repetitions are shown superimposed for all tested monitors. As can be seen, no noticeable variability exists for LCD-1 and CRT. In contrast, LCD-2 exhibited problems in delivered luminance. The traces had an oscillatory component of ∼200 Hz which, at the maximum luminance, accounted for ∼11% of the total luminance. This oscillatory component was not locked to the refresh rate of the monitor, resulting in a thick region of overlap in Figure 2A. Therefore, only the newer LCD model repeats the generated luminance trace exactly.

Figure 2. Precise timing of the changes in luminance. (A) Overlay of 100 traces of luminance measurements for a presentation of a full white stimulus, shown for three actual monitors and one mimicked monitor (mimicked CRT; every other frame of LCD-1 is set to full black). The measurements are made in all cases with 60 Hz refresh rate. The trace of LCD-1 is delayed relative to the CRT mimicked on LCD-1 because in the former case the monitor ran at 60 Hz while in the latter case its real underlying refresh rate was 120 Hz. The time 0 ms is the moment at which a digital pulse was sent. (B–E) Luminance traces averaged across 100 repetitions and produced by the different monitors at 60 Hz when stimuli of different durations are presented (1–30 frames; color coded). Zoom-in: detailed view for the first frames of the presentation.

Consistent with the precisely overlapping traces in Figure 2A for LCD-1 and CRT, the quantitative measures of the precision (i.e., error defined by 95% confidence interval) were also excellent for the onset time of the stimulus, for the time it needs to rise from the minimum to its maximum, and for the overall duration over which the stimulus was presented. These errors were less than 0.02 ms for all tested refresh rates.

Some of the advantages of the CRT monitor came from its flickering nature, the return to full black at each screen frame contributing to, e.g., the high consistency of the total generated luminance at each frame. Hence, when relevant, we also show the performance of LCD-1 when it mimics the flicker of the CRT monitor by using 120 Hz refresh rate to mimic a 60-Hz flicker-based generation of an image. These results are indicated as “Mimicked CRT” and are discussed at the end of the Section “Results.”

We also measured the stability of the peak intensity, i.e., the maximum luminance within a single presentation, across 100 presentations of a stimulus. LCD-1 and CRT had low errors of less than 0.04%. LCD-2, although not performing as well as the other two monitors, had nevertheless relatively low error of up to 0.11% of the maximum. Probably, all these errors would be acceptable for most research applications in vision.

The monitors were also similarly consistent when we used the luminance traces to calculate the average intensity of luminance generated along each stimulus presentation. The upper bound of the error across repeated presentations was around 0.07, 0.02, and 0.11% for CRT, LCD-1, and LCD-2, respectively. Therefore, all three monitors can be described as generally reliable in producing luminance across repeated presentations, with the exception that LCD-2 had an oscillatory component not locked to the refresh rate, as described above.

Ideally, an image should appear on the screen and disappear immediately. In other words, the luminance should change with a square-wave function. Unfortunately, the technology is not at that level and every monitor requires time to change from one luminance level to another, here referred to as rising and falling times. According to our measurement of the time constant of rising times, CRT monitors outperformed both LCDs (0.2–0.3 ms for CRT vs. 1.1 ms for LCD-1 and 1.5 ms for LCD-2, respectively). Therefore, the CRT built up luminance faster than the LCDs. However, CRT was not generally better in changing the luminance, as the opposite was the case for the time constant of falling time, which was longer for the phosphor of CRTs (1.7–1.9 ms) as compared to the modern liquid crystals (1.2 ms; see Figure 3A for details). Therefore, CRTs and LCDs can be considered about even in this test, one being faster in building luminance, and the other in returning back to full black.

Figure 3. (A) The rising and falling times obtained for different monitors by fitting exponential functions to luminance traces. Filled circles: rising times; Open circles: falling times. (B) The duration over which stimuli are presented relative to the nominal duration specified by the number of screen frames, investigated at 60 Hz refresh rate. y-Axis: measured duration normalized relative to the nominal duration. (C) The amount of light delivered by the stimuli relative to the nominal amount computed from the number of screen frames, investigated at 120 Hz refresh rate. y-Axis: the total amount of luminance generated per frame normalized relative to that obtained by the longest presentation (30 frames).

Rising and falling times determine also the total time window over which a stimulus is presented. We compared how well the true duration of illumination corresponded to the nominal one computed by multiplying the number of presented frames with the nominal duration of the frame (1/refresh rate). The true duration corresponded always better to the nominal ones for LCDs than for the CRT, the latter being especially inaccurate with short nominal presentation times (one or two screen frames; see Figure 3B). The main reason for the inaccuracy of the CRT is that the effective time over which light has been generated is relatively short (e.g., only ∼3 ms).
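A toy model helps to see why short presentations are the least accurate on a CRT. It assumes, purely for illustration, that each frame emits light for a fixed flash of about 3 ms starting at the beginning of the frame, so that the measured duration runs from the onset of the first flash to the end of the last one. The function name and the model itself are assumptions, not the authors' analysis.

```python
def relative_duration(n_frames, refresh_hz, flash_ms):
    """Measured duration divided by nominal duration under the toy flash model.

    Nominal duration:  n_frames * frame period.
    Measured duration: (n_frames - 1) frame periods plus one flash length.
    """
    frame_ms = 1000.0 / refresh_hz
    measured_ms = (n_frames - 1) * frame_ms + flash_ms
    return measured_ms / (n_frames * frame_ms)


if __name__ == "__main__":
    for n in (1, 2, 3, 6, 10, 15, 20, 30):
        print(n, round(relative_duration(n, 60, 3.0), 3))
```

Under these assumptions a one-frame stimulus at 60 Hz covers only about 18% of its nominal 16.7 ms, while a 30-frame stimulus covers about 97% of its nominal 500 ms.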

An important related question is whether a monitor can reach 100% of its maximum intensity within a single frame of presentation. This was the case for none of the tested monitors. The error was especially large for higher refresh rates and for the CRT monitor, which, at 120 Hz, delivered 4.28% smaller peak luminance at the first frame than at a later frame in which the maximum had been reached (the traces are shown in Figure A3 in Appendix). This should be compared to only 0.43% reduction in peak luminance at the first frame for LCD-1 running at 60 Hz (Figure 2C). Fortunately, these problems seem relevant only for the first one or two frames. With longer stimulus durations, these errors vanished quickly for both LCD-1 and CRT (already with >3 frames to <0.20%).

LCD-2 also delivered luminance inconsistently over time. As our recording progressed over the period of ∼8 min (from 1-frame to 30-frame stimuli), the luminance produced by the light source gradually reduced in total by about 4% (see zoom-in in Figure 2B). Therefore, the light source of that monitor is not necessarily stable, and one may have to ensure that the monitor is on for a certain period of time (e.g., several tens of minutes) before it can be used for delivering visual stimuli. LCD-1 and CRT did not suffer from this problem (zoom-ins in Figures 2C–E).

Although LCD-1 was better than CRT in reaching maximum intensity at the first frame, it was worse in the total amount of luminance generated at the first frame when compared to later frames (Figure 3C). If the same image is shown for different numbers of frames, ideally, the amount of light delivered would be proportional to the number of frames, e.g., the light energy of a one-frame presentation would be one-tenth of that of a ten-frame presentation. The results indicated that CRT was the closest to this goal. A comparison between blocks with the shortest duration (only one frame) and the longest duration (30 frames) led to a discrepancy of up to 20% in LCD-1, compared to only 2% in CRT.

Our final set of questions was about possible temporal dependences between frames in the delivered luminance by displaying images of varying shades of gray sequentially one frame after another. For both LCD-1 and CRT monitors, the luminance generated at the current screen frame depended to a degree on the luminance of the preceding frame. High preceding luminance always increased the luminance of the current frame (Figures 4A,B; also Figure A4 in Appendix) and vice versa, low luminance in the preceding frame reduced the luminance of the current frame. In LCD, this was a carry-over effect due to the non-zero time needed to switch from one luminance level to the other, as can be seen in Figure 4A. In the CRT, this carry-over effect (Figure 4B) occurred perhaps due to insufficiently quick change in voltage in the emission guns. Note that in LCD-1, toward the end of the frame also a small reversal of luminance occurred: high preceding luminance tended to reduce slightly the luminance generated at the very end of the current frame. The coefficients of variation (target luminance vs. actual luminance) are shown for all monitors and all target luminance values in Figure 4D. As one can see, LCD-1 performed better than CRT for lower target luminance (RGB values <∼80, 80, 80), and this reversed for higher target luminance (e.g., RGB value >120, 120, 120), CRT generating luminance more accurately than LCD-1. Notice also that in most cases, with higher refresh rate the actual luminance varied also more.
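For readers unfamiliar with the measure, a coefficient of variation is commonly computed as the standard deviation divided by the mean. The sketch below applies that common definition to the per-frame mean luminance values obtained for one target gray level; whether the authors used exactly this form (for example, population versus sample standard deviation) is an assumption, and the numbers in the example are made up.

```python
from statistics import mean, pstdev


def coefficient_of_variation(values):
    """Population standard deviation divided by the mean of the values."""
    m = mean(values)
    if m == 0:
        raise ValueError("coefficient of variation is undefined for zero mean")
    return pstdev(values) / m


if __name__ == "__main__":
    # Hypothetical normalized luminance values for one target gray level,
    # measured after bright and after dark preceding frames.
    after_bright = [0.52, 0.53, 0.52]
    after_dark = [0.48, 0.47, 0.48]
    print(coefficient_of_variation(after_bright + after_dark))
```

A stronger carry-over from the preceding frame spreads the measured values further apart and therefore raises the coefficient for that target luminance.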

Figure 4. The effects of the preceding screen frame on the generated luminance. (A–C) Traces of measured luminance for the specified RGB value (150, 150, 150), obtained following either high luminance of the preceding frames (green) or low (red). Ten traces are shown for each color. All plots are shown for refresh rates of 60 Hz. Gray box: the actual duration of illumination during the frame as defined in our analyses (see Methods). (D) The summary of the results for all different values of target luminance and for all monitors and all tested refresh rates, shown as coefficients of variation. The target luminance is shown normalized relative to the maximum (RGB 255, 255, 255). Vertical dashed line: the target luminance used for plots in [(A–C) RGB 150, 150, 150].

Finally, the mimicked CRT exhibited the advantages of both LCD-1 and CRT. This monitor had very accurate onset, rising, and falling times, inherited from LCD-1 (see Figure 2E). The same held for the actual duration of the stimulation, which was generated more accurately than by the CRT monitor (see Figure 3B). Also, this mimicked monitor had good average intensity of generated luminance due to the repeatable return to full black, a property of the CRT (Figure 2E). In Figures 4C,D one can see that this resulted in very low temporal dependence on luminance generated in the preceding frame, the coefficient of variation being especially favorable with low target luminance.

In general, a researcher in vision needs a monitor that creates stimuli as close as possible to their formal specification. The duration and intensity of the stimuli should linearly scale with the values specified in the software and the values should remain constant across contextual and other variables, such as the immediately preceding stimuli, stimulus position on the screen, size, or orientation of the stimulus, etc.

Our results indicate that a new model of LCD can satisfy most of these needs and that in m
