
Even though you are buying two of the "exact same" modules, there is no guarantee that they will work together. Manufacturers don't guarantee compatibility when you mix modules or add one RAM module to another, even when they appear to be the "exact same" model.

Intel's upcoming Raptor Lake non-K CPUs are reportedly up to 64 percent faster than their Alder Lake counterparts, according to a hardware leaker who shared some performance numbers.

As shared by @momomo_us on Twitter, ASRock has built a new accessory for PC builders that allows you to turn your PC chassis' side panel into an LCD monitor. The gadget is a 13.3" side panel kit designed to be taped to the inside of your see-through side panel, giving users an additional display for monitoring system resources and temperatures, or for use as a secondary monitor altogether.

The screen is a 16:9 aspect ratio 1080P IPS 60Hz display, measuring 13.3 inches diagonally. This screen is the equivalent of a laptop display. It uses the same connection method as laptops, featuring an embedded DisplayPort (eDP) connector.

Unfortunately, this presents a problem for most PC users. The connector was originally designed specifically for mobile and embedded PC solutions, meaning it is not available on standard desktop motherboards or graphics cards.

As a result, only ASRock motherboards support the side panel, and only a few models at best, with fewer than ten motherboards featuring the eDP connector. The list includes the following motherboards: Z790 LiveMixer, Z790 Pro RS/D4, Z790M-ITX WiFi, Z790 Steel Legend WiFi, Z790 PG Lightning, Z790 Pro RS, Z790 PG Lightning/D4, H610M-ITX/eDP, and B650E PG-ITX WiFi.

Sadly, adapters aren't a solution either, since eDP to DP (or any other display output) adapters don't exist today. Furthermore, creating an adapter is problematic because eDP runs both power and video signals through a single cable.

It's a shame this accessory won't get mainstream popularity due to these compatibility issues. But for the few users with the correct motherboard, this side panel kit can provide a full secondary monitor that takes up no additional space on your desk. The only sacrifice you'll make is blocking all the shiny RGB lighting inside your chassis.

A review posted at EXPreview gives us our first look at Chinese GPU maker Moore Threads' new mid-range gaming competitor, the MTT S80 graphics card. This GPU is one of Moore Threads' most powerful graphics cards to date, packing a triple-fan cooler and theoretically competing with Nvidia's RTX 3060 and RTX 3060 Ti, some of the best graphics cards available, even close to two years after they first launched.

For the uninitiated, Moore Threads is a Chinese GPU manufacturer that was established just two years ago, in 2020. The company has reportedly tapped some of the most experienced minds in the GPU industry, hiring experts and engineers from Nvidia, Microsoft, Intel, Arm and others. Moore Threads' aim is to produce domestic (for China) GPU solutions completely independent from Western nations. These are supposed to be capable of 3D graphics, AI training, inference computing, and high-performance parallel computing, and will be used in China's consumer and government sectors.

The MTT S80 graphics card was developed with Moore Threads' "Chunxiao" GPU architecture, which supports FP32, FP16, and INT8 (integer) precision compute, and is compatible with the company's MUSA computing platform. The architecture also employs a full video engine with H.264, H.265 (HEVC), and AV1 codec support, capable of handling video encoding and decoding at up to 8K.

The GPU operates with a target board power rating of 255W, powered by both a PCIe 5.0 x16 slot and a single 8-pin EPS12V power connector. Yes, it's using a CPU EPS12V connector rather than an 8-pin PEG (PCI Express Graphics) connector. That's because the EPS12V can deliver up to 300W, and for users that lack an extra EPS12V connector, the card includes a dual 8-pin PEG to single 8-pin EPS12V adapter. And for the record, that's more power than even the RTX 3070 requires.
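The power figures above can be sanity-checked with a little arithmetic. The sketch below takes the 255W board power and the 300W EPS12V ceiling from the text, and assumes the commonly cited 75W limit for a PCIe x16 slot, which the review does not state. All names are illustrative.

```python
# Rough power-delivery check for the MTT S80.
PCIE_SLOT_WATTS = 75      # assumption: typical PCIe x16 slot limit, not from the review
EPS12V_WATTS = 300        # from the text: what one 8-pin EPS12V connector can deliver
BOARD_POWER_WATTS = 255   # from the text: target board power


def power_headroom(board_power, *sources):
    """Return the total available power minus the board power, in watts."""
    return sum(sources) - board_power


headroom = power_headroom(BOARD_POWER_WATTS, PCIE_SLOT_WATTS, EPS12V_WATTS)
print(f"Available: {PCIE_SLOT_WATTS + EPS12V_WATTS}W, headroom: {headroom}W")
```

Under these assumptions the EPS12V connector alone would cover the rated board power, with the slot adding further margin.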

Display outputs consist of three DisplayPort 1.4a connectors and a single HDMI 2.1 port, the same as what you'll find on most Nvidia GeForce GPUs from the RTX 40- and 30-series families. The graphics card has a silver-colored shroud, accented by matte black designs surrounding the right and left fans. The cooler uses a triple-fan design, with two larger outer fans and a smaller central fan. The card is 286mm long and two slots wide.

Earlier reports on the Chunxiao GPU suggest it can achieve FP32 performance similar to that of an RTX 3060 Ti. The 3060 Ti has theoretical throughput of 16.2 teraflops, while the Chunxiao has a theoretical 14.7 TFLOPS. That's a bit lower than Nvidia, but it does have twice the VRAM capacity, so the hardware at least appears capable of competing with Nvidia's RTX 3060 Ti.

Unfortunately, according to EXPreview, the MTT S80 suffers from very poor driver optimizations. While the MTT S80 is aimed at the RTX 3060 Ti in terms of raw compute performance, in gaming benchmarks Nvidia's RTX 3060 reportedly outpaces the MTT S80 by a significant margin. The problem with the testing is that no comparative charts are provided for the games with RTX 3060 or other GPUs; only text descriptions of the actual gaming performance are provided, while the charts are for an odd mix of titles.

EXPreview ran its benchmarks on an Intel test rig with a Core i7-12700K, Asus TUF B660M motherboard, RTX 3060 12GB Strix, 16GB of DDR4 memory, and an 850W PSU running Windows 10 21H2.

The MTT S80 managed to come out on top in some of the synthetic tests, like PCIe bandwidth and pure fill rates. That's understandable on the PCIe link, as the S80's PCIe 5.0 x16 configuration should vastly outpace the RTX 3060's PCIe 4.0 x16 spec. In the OCL Bandwidth Test, the S80 averaged 28.7GB/s read and 42.8GB/s write speeds. The RTX 3060 managed 18.3GB/s and 14.2GB/s respectively. In 3DMark06's texturing tests, the MTT S80 hit 134.8 GTexels per second in the Single-Texturing Fill Rate test, and 168.5 in the Multi-Texturing test. The RTX 3060 was much slower in the Single-Texturing test, with 59.9 GTexels/s, but came back with 177.3 GTexels/s in the Multi-Texturing test.
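To put those synthetic results side by side, here is a minimal sketch that expresses each S80 score as a multiple of the RTX 3060 score. The numbers are the ones quoted from the review; the dictionary layout and function name are just for illustration.

```python
# Synthetic results quoted from the EXPreview review: (MTT S80, RTX 3060).
results = {
    "OCL read (GB/s)": (28.7, 18.3),
    "OCL write (GB/s)": (42.8, 14.2),
    "Single-texturing (GTexels/s)": (134.8, 59.9),
    "Multi-texturing (GTexels/s)": (168.5, 177.3),
}


def speedup(s80, rtx3060):
    """Return the S80 result as a multiple of the RTX 3060 result."""
    return s80 / rtx3060


for test, (s80, rtx) in results.items():
    print(f"{test}: S80 is {speedup(s80, rtx):.2f}x the RTX 3060")
```

The S80's lead is largest in the write-bandwidth test (about 3x) and disappears in multi-texturing, where it lands slightly below the RTX 3060.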

But the review does mention driver problems, texture corruption, and other issues. It concludes with, "Compatibility needs to be improved and the future can be expected," according to Google Translate. That's hardly surprising for a new GPU company. Hopefully future "reviews" of the MTT S80 will actually show more real-world comparisons with modern games rather than old tests that have little meaning in today's market.

... His PC is entry level, no overclocking: a simple, low-wattage PC. Advising him to change the power supply just because it is not on your tier list is wrong. It is not a faulty power supply and it should work fine. His whole PC draws less than 250 watts at max load, and he is using a 650-watt Corsair power supply, not some no-name Chinese power supply.

Don't rush to buy a power supply. First, clean all the dust out of your system very well. Then check the cables, remove one memory stick, and see if the problem persists. Also monitor the GPU and CPU temps while gaming.

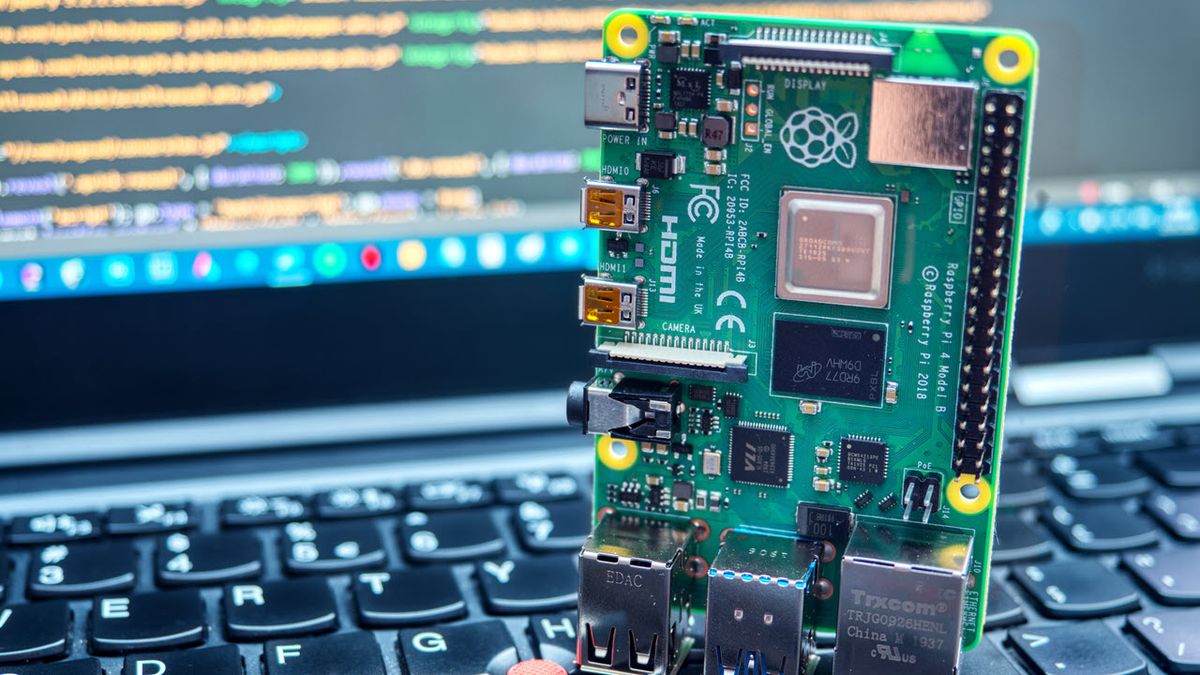

Raspberry Pis are still in short supply. If you are lucky enough to grab a Raspberry Pi for as little as $5 (for the Raspberry Pi Zero) or, more likely, from $35 (for the Raspberry Pi 4 1GB), you'll need a few extra products to make the most of it. There's a whole world of accessories that help you make the most of the Raspberry Pi's GPIO. These accessories have been around since the Raspberry Pi was released, when it had just 26 GPIO pins. Using special add-on boards, we can take advantage of the more recent Raspberry Pi's 40-pin GPIO to control and interact with electronic components and create diverse projects in robotics, machine learning and IoT, or even our own home server.

As with any computer, you’ll need a way to enter data and a way to see the interface, which usually means getting a keyboard, a mouse and a monitor. However, you can opt for a headless Raspberry Pi install, which allows you to remote control the Pi from your PC. In that case, the minimum requirements are:

microSD card: You need a card of at least 8GB, but the best Raspberry Pi microSD cards have 32GB or more. When you first set up a Raspberry Pi, you need to “burn” the OS onto it by using a PC, another Raspberry Pi or even a phone with a microSD card reader.

Power supply: For the Raspberry Pi 4, you need a USB-C power source that provides at least 3 amps / 5 volts, but for other Raspberry Pis, you need a micro USB connection that offers at least 2.5 amps and the same 5 volts. Your power supply provides power to both the Pi and any attached HATs and USB devices, so always look for supplies that can provide a higher amperage at 5 volts as this will give you a little headroom to safely power your projects.

Electronic parts: You can make great projects and have a lot of fun with motors, sensors, transistors and other bits and bobs. Just don't forget your breadboard!

The top overall choice on our round-up of the best Raspberry Pi Cases, the Argon Neo combines great looks with plenty of flexibility and competent passive cooling. This mostly-aluminum (bottom is plastic) case for the Raspberry Pi 4 features a magnetic cover that slides off to provide access to the GPIO pins with enough clearance to attach a HAT, along with the ability to connect cables to the camera and display ports. The microSD card slot, USB and micro HDMI out ports are easy to access at all times.

It doesn't come cheap, but the official Raspberry Pi High Quality camera offers the best image quality of any Pi camera by far, along with the ability to mount it on a tripod. The 12-MP camera doesn't come with a lens, but supports any C or CS lens, which means you can choose from an entire ecosystem of lenses, with prices ranging from $16 up to $50 or more and a variety of focal lengths and F-stop settings. We tested the High Quality camera with two lenses, one designed for close-up shots and the other for more distant ones, and the image quality was a massive improvement over the standard Raspberry Pi camera.

If you need a Raspberry Pi camera, but don't want to spend more than $50 on the high quality module and then have to bring your own lens, the official Raspberry Pi Camera Module V2 is the one to get. This 8-MP camera uses a Sony IMX219 sensor that gives it really solid image quality, records video at up to 1080p, 30 fps and is a big improvement over the 5-MP OmniVision OV5647 that was in the V1 camera.

Whether you want to control your Raspberry Pi from the couch or you have it on a table and don't want to waste space, getting one of the best wireless keyboards is a good idea. It's particularly helpful to have a wireless keyboard with a pointing device so you don't need to also drag around a mouse.

Lenovo's ThinkPad TrackPoint Keyboard II is the best keyboard for Raspberry Pi thanks to its excellent key feel, multiple connectivity options and built-in TrackPoint pointing stick. The keyboard looks and types just like those on Lenovo's ThinkPad line of business laptops, offering plenty of tactile feedback and 1.8mm of key travel, which is deep for a non-mechanical keyboard. The TrackPoint pointing stick sits between the G and H keys, allowing you to navigate around the Raspberry Pi's desktop without even lifting your hands off the home row.

If you're going to use a Raspberry Pi 4, you need a USB-C power supply that offers at least 3 amps of juice with a 5-volt output. We've found that the best USB-C laptop chargers are capable of delivering this kind of power (albeit often with 4.8 - 4.9 volts, which still works), but if you don't have a powerful charger handy or need one just for your Pi, the official Raspberry Pi power supply is your best choice.

Rated for 5.1 volts at 3 amps, the official Raspberry Pi 4 power supply has good build quality and a nice design. Available in black or white, it's a small rectangle, emblazoned with the Raspberry Pi logo and a strong, built-in Type-C cable that's 59 inches (1.5m) long. Unlike some third-party competitors, it doesn't come with an on / off switch, but it is compatible with cheap on / off adapters you can attach to the end. You may find competitors for a few dollars less, but the official Raspberry Pi 4 power supply is a sure thing.
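Since power is just volts times amps, the supply ratings above are easy to compare. The following is a minimal sketch: the supply figures come from the text, while the per-device current draws are made-up placeholders, not measurements.

```python
# Power = volts x amps. Supply ratings come from the text; the loads are hypothetical.
def watts(volts, amps):
    """Return power in watts for a given voltage and current."""
    return volts * amps


def has_headroom(supply_amps, load_amps):
    """Return True if the supply can source the summed current of all loads."""
    return sum(load_amps) <= supply_amps


official_pi4_supply = watts(5.1, 3.0)   # official Raspberry Pi 4 supply
minimum_pi4_supply = watts(5.0, 3.0)    # minimum recommended for a Pi 4

# Hypothetical current draw in amps: the Pi itself, a HAT, and a USB drive.
loads = [1.2, 0.3, 0.9]
print(f"Official supply: {official_pi4_supply:.1f}W")
print("Enough current:", has_headroom(3.0, loads))
```

Swap in the real current draw of your own HATs and USB devices to see how much margin a given supply leaves.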

The Raspberry Pi's 40 GPIO pins are arguably its most important feature. Using these pins (see our GPIO pinout), you can attach an entire universe of electronics, including motors, sensors and lights. There's a huge ecosystem of add-on boards, appropriately called HATs (hardware attached on top), that plug directly into the GPIO pins and match the layout of the Pi. These add-on boards give you all kinds of added functionality, from LED light matrixes to touch screens and motor controllers for robotics projects.

If you're using a Raspberry Pi 4, you definitely need some kind of cooling, whether it's a heat sink, an aluminum case with passive cooling built in or, best of all, a fan. The Pimoroni Fan Shim is powerful, easy to install and unobtrusive. You just push it down onto the leftmost side of your GPIO pin header and it does a fantastic job of cooling your Pi. You can even use a Pimoroni Fan Shim on a Raspberry Pi 4 that's been overclocked all the way to 2.1 GHz, without seeing any throttling.

Unless you've specifically configured yours to boot from an SSD (see our article on How to Boot Raspberry Pi from USB), every Raspberry Pi uses a microSD card as its primary storage drive. We maintain a list of the Best microSD cards for Raspberry Pi and have chosen the 32GB Silicon Power 3D NAND card as the top choice.

Unless you're hosting a media server or have a ridiculous amount of ROMs on a game emulator, a 32GB microSD card provides more than enough storage for Raspberry Pi OS and a ton of applications. The operating system and preloaded applications take up far less than 8GB by themselves.

Each of the Raspberry Pi's 40 GPIO pins has a different function, so it's hard to keep track of which does what. For example, some of the pins provide I2C communication while others offer power and others are just for grounding. You can look at a GPIO pinout guide such as ours, but sometimes it's just easier to put the list of functions right on top of the pins.

While most of the earlier Raspberry Pi models have a single, full-size HDMI port, the Raspberry Pi 4 has dual micro HDMI ports that can each output to a monitor at up to 4K resolution. While there's a good chance you already have one or more HDMI cables lying around the house, most of us don't have micro HDMI cables, because it's a rarely used connector.

You can use your Raspberry Pi as a game emulator, a server or a desktop PC, but the real fun begins when you start connecting electronics to its GPIO pins. Of course, to even get started playing with GPIO connectors, you need some interesting things to connect to them such as lights, sensors and resistors (see resistor color codes).

The market is filled with electronics kits that come with a slew of LED lights, resistors, jumper cables, buttons and other bits and bobs you need to get started. Most importantly, all of these kits come with at least one breadboard, a white plastic surface filled with holes you can use to route and test circuits, no soldering required.

In order to write Raspberry Pi OS (or a different OS) to a microSD card, you'll need some kind of microSD card reader that you can attach to your PC. Just about any make or model will do as long as it reads SDHC and SDXC cards and, preferably, connects via USB 3.0. I've been using the Jahovans X USB 3.0 card reader, which currently goes for $5.99, for almost a year now and it has worked really well.

The Raspberry Pi 400’s big feature is that it is a Raspberry Pi 4 inside of a keyboard. This new layout introduced a challenge: the GPIO is now on the rear of the case, breaking compatibility with Raspberry Pi HATs. But with the Flat HAT Hacker we can restore the functionality and delve into a rich world of first- and third-party add-ons for robotics, science projects and good old blinking LEDs! In our review we found that the board is easy to install and requires no additional software. If you have a Raspberry Pi 400, this is a no-brainer purchase.

Whether you're shopping for one of the best Raspberry Pi accessories or one that didn't quite make our list, you may find savings by checking out the latest SparkFun promo codes, Newegg promo codes, Amazon promo codes or Micro Center coupons.

Two Windows BSODs, VIDEO_TDR_FAILURE and VIDEO_TDR_TIMEOUT_DETECTED, both relate to video timeout detection and recovery (TDR). Both are triggered when, as the MS Doc file for VIDEO_TDR_TIMEOUT_DETECTED says, “…the display driver failed to respond in a timely fashion.”

Simply put, when a graphics issue makes a Windows PC freeze or hang, and users try to reboot by pressing the power button, either of these BSODs may appear. As the VIDEO portion of the stop code string indicates, these BSODs almost always originate from a display device driver problem of one kind or another.

When a GPU – either a standalone graphics card, or a graphics processor integrated into a CPU – gets overly busy processing intensive graphics operations, these BSODs can occur. They happen most often during game play, or while other graphics-heavy applications, such as CAD or 3D rendering tools, are running.

The detection and recovery process is formally called timeout detection and recovery, abbreviated TDR. The default timeout value is 2 seconds. If this timeout elapses, the TDR process for video cards and integrated graphics processors (such as Intel’s Iris Xe or numbered UHD models) uses an OS-based GPU scheduler to call the display miniport driver's DxgkDdiResetFromTimeout function. This function, in turn, reinitializes the device driver and resets the GPU. The stop code for VIDEO_TDR_TIMEOUT_DETECTED indicates that driver reinitialization and GPU reset succeeded. The stop code for VIDEO_TDR_FAILURE indicates that either or both of these operations failed. In fact, the latter stop code can also occur if five or more TDR events occur in a period of 60 seconds or less.
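The "five or more events in 60 seconds" rule is a sliding-window check. The sketch below only illustrates that rule as described here; it is not how Windows implements TDR, and the function and constant names are hypothetical.

```python
from collections import deque

TDR_EVENT_LIMIT = 5      # from the text: five or more TDR events...
TDR_WINDOW_SECONDS = 60  # ...in a period of 60 seconds or less


def exceeds_tdr_limit(event_times, limit=TDR_EVENT_LIMIT, window=TDR_WINDOW_SECONDS):
    """Return True if `limit` or more events fall within any `window`-second span.

    `event_times` is an iterable of timestamps in seconds.
    """
    recent = deque()
    for t in sorted(event_times):
        recent.append(t)
        # Drop events that are more than `window` seconds older than the newest one.
        while t - recent[0] > window:
            recent.popleft()
        if len(recent) >= limit:
            return True
    return False


print(exceeds_tdr_limit([0, 10, 20, 30, 40]))   # five events in 40 seconds
print(exceeds_tdr_limit([0, 20, 40, 60, 80]))   # never more than four per window
```

The first call returns True and the second returns False, because in the second case no 60-second span contains five events.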

The most common cause of TDR issues is a video driver problem. In many cases, updating your display driver to the most current version will properly support the TDR process. This summarily ends related BSODs.

Thus, it’s the first thing I recommend you try for video drivers on an affected system. For older video devices (2014 or older) it may be smart to research drivers online. Why? Because for older video devices, rolling back drivers to specific, known, good, working versions is often the right fix.

If driver replacement or update doesn’t help, hardware-related issues are often at fault. The Microsoft docs files for both TDR stop codes specifically mention the following hardware issues “that impact the ability of the video card to operate properly:”

“Over-clocked components such as the motherboard.” Obviously, a GPU overclocking tool such as MSI Afterburner also counts here. Revert all overclocked settings to manufacturer defaults and see if that helps. If so, and you’re determined to overclock, you can advance and test overclock settings in small increments until things start failing again, then back off one level.

“Incorrect component compatibility and settings (especially memory configuration and timings)” is really a subset of the preceding item. If you’re using non-standard or accelerated (e.g. XPG) memory settings and timings, return them to the memory module maker’s factory defaults, too.

“Insufficient system cooling” often occurs when intense graphics or CPU activity drives devices outside recommended operating temperature ranges. This means you must check to make sure fans are working properly, clear out any dust buildup, make sure case vents aren’t blocked, and so on. Sometimes, going with one of the best CPU coolers (switching from forced air to liquid coolers with more and larger fans too) can help. I use the excellent Core Temp utility to monitor temps on my systems constantly; plenty of other good temp monitoring tools and utilities are available. Use them!

“Insufficient system power” can indicate a failing Power Supply Unit (PSU) or one whose power output is unable to handle demand at peak system loads (as when playing games, or running graphically-intense applications). If you’ve recently added one or more new, higher-powered GPUs and haven’t upgraded your PSU, this could be a likely culprit. Power Supply Calculators like those discussed in this Tom’s Forum thread can help you determine if your PSU has enough oomph to service your system’s components (I usually add 100 to calculator results to make SURE there's enough juice available).

“Defective parts (memory modules, motherboards, etc.)” obviously includes graphics devices as well, since the problem lies somewhere in the system components that enable graphics output on a PC, including memory, motherboard, CPU, GPU, cables, and displays. This calls for old-fashioned device troubleshooting. I recommend working your way in from the display to the system case, starting with video cables first, display(s) next, GPU(s) after that, and only then start worrying about a failing CPU or motherboard. Those last two may start throwing other BSODs, and will help clue you in to potentially bigger problems than graphics.
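The PSU rule of thumb above can be written down as a small check. This is a sketch only: it assumes the "add 100" margin means 100 watts, and the wattage figures in the example are hypothetical.

```python
SAFETY_MARGIN_WATTS = 100  # assumption: the "add 100" in the text means 100 watts


def recommended_psu_watts(calculator_watts, margin=SAFETY_MARGIN_WATTS):
    """Return the PSU calculator estimate plus a safety margin."""
    return calculator_watts + margin


def psu_is_sufficient(psu_watts, calculator_watts, margin=SAFETY_MARGIN_WATTS):
    """Return True if the PSU rating covers the estimate plus the margin."""
    return psu_watts >= recommended_psu_watts(calculator_watts, margin)


# Hypothetical build: a calculator estimates 480W at peak load.
print(recommended_psu_watts(480))     # 580
print(psu_is_sufficient(550, 480))    # False
print(psu_is_sufficient(650, 480))    # True
```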

Use the WinDbg debugger to get more information about the stop code. This can often be helpful in getting to root causes. I prefer using the NirSoft BlueScreenView tool myself, because it usually points directly to the faulting module (which for TDR errors will often be a graphics driver, such as the Nvidia nvloddmkm.sys or something similar). But the debugger can take you down to levels of detail beyond that for systems with multiple graphics cards or complex video output chains.

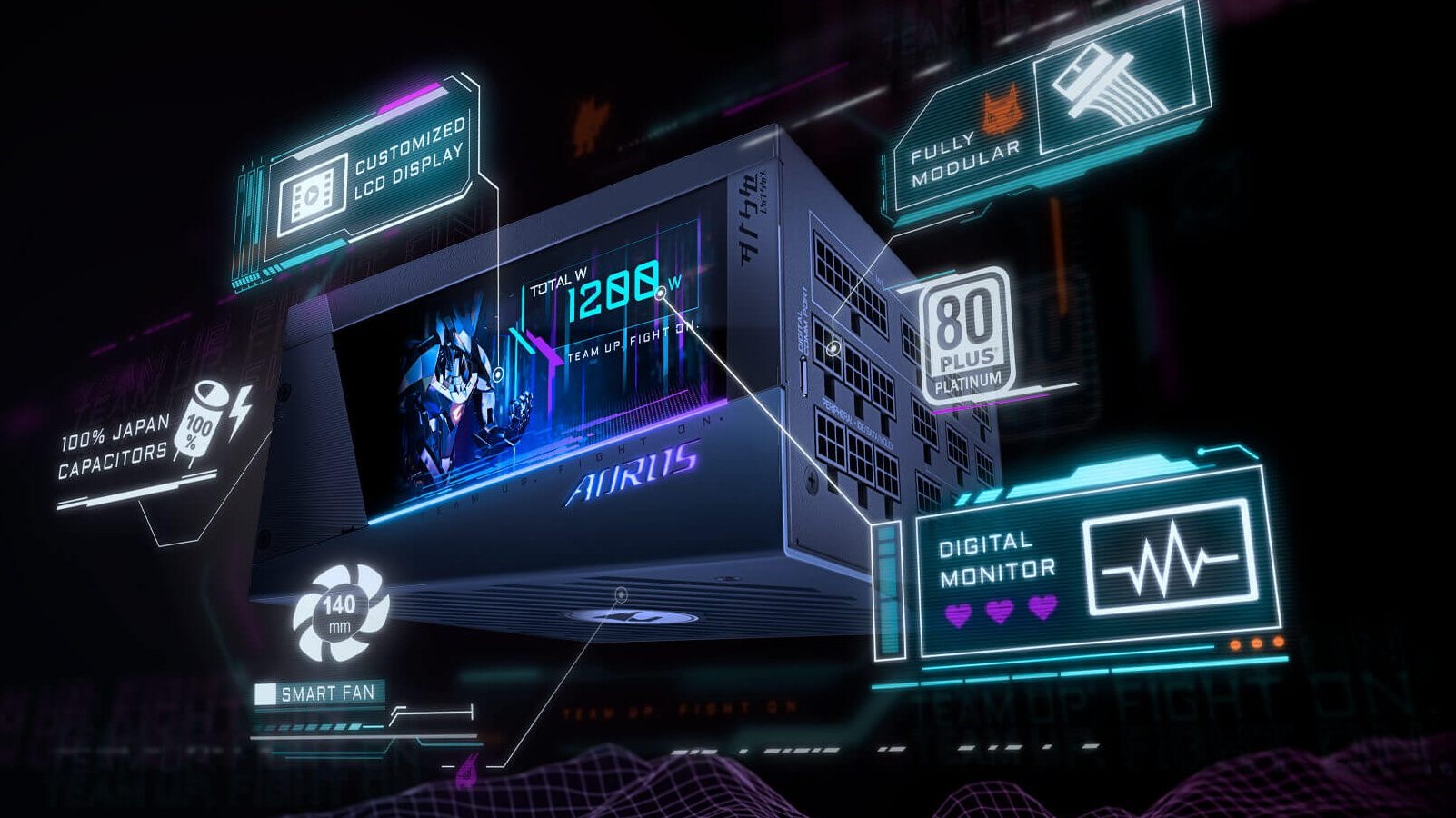

You can never have enough screens, even if some of them are inside of your case. Gigabyte's new Aorus P1200W power supply features a full-color LCD screen, which can display custom text, pictures, GIFs, and even videos. Yes, just imagine watching your favorite movies on the side of your PSU!

This isn't the first time we've seen a power supply with a screen slapped on it. The ASUS ROG Thor has one, but it only displays power draw, not your favorite films. Of course, the more practical use case for a screen on a PSU is showing stats such as your fan speed or temperature.

Unfortunately, Gigabyte hasn't listed the exact size or resolution of the screen, nor do we know what its refresh rate will be. Could one even play games on it? I guess we'll have to find out.

Designed to compete with the best power supplies, the P1200W features all of the bells and whistles most high-end power supplies come with: an 80-Plus Platinum rating, fully modular cables, 140mm fan, an input current of 15-7.5A, full-range input voltage, >16ms hold up time, active PFC, and Japanese capacitors.

The P1200W brings a lot in a small package, with one 24-pin and 10-pin connector, two CPU EPS-12v power connectors, six peripheral connectors, and six PCIe connectors, and of course, the LCD screen.

Intel has been hyping up Xe Graphics for about two years, but the Intel Arc Alchemist GPU will finally bring some needed performance and competition from Team Blue to the discrete GPU space. This is the first "real" dedicated Intel GPU since the i740 back in 1998, or technically, a proper discrete GPU after the Intel Xe DG1 paved the way last year. The competition among the best graphics cards is fierce, and Intel's current integrated graphics solutions basically don't even rank on our GPU benchmarks hierarchy (UHD Graphics 630 sits at 1.8% of the RTX 3090 based on just 1080p medium performance).

The latest announcement from Intel is that the Arc A770 is coming October 12, starting at $329. That's a lot lower on pricing than what was initially rumored, but then the A770 is also coming out far later than originally intended. With Intel targeting better than RTX 3060 levels of performance, at a potentially lower price and with more VRAM, things are shaping up nicely for Team Blue.

Could Intel, purveyor of low performance integrated GPUs ("the most popular GPUs in the world"), possibly hope to compete? Yes, it can. Plenty of questions remain, but with the official China-first launch of Intel Arc Alchemist laptops and the desktop Intel Arc A380 now behind us, plus plenty of additional details of the Alchemist GPU architecture, we now have a reasonable idea of what to expect. Intel has been gearing up its driver team for the launch, fixing compatibility and performance issues on existing graphics solutions, hopefully getting ready for the US and "rest of the world" launch. Frankly, there's nowhere to go from here but up.

The difficulty Intel faces in cracking the dedicated GPU market can't be overstated. AMD's Big Navi / RDNA 2 architecture has competed with Nvidia's Ampere architecture since late 2020. While the first Xe GPUs arrived in 2020, in the form of Tiger Lake mobile processors, and Xe DG1 showed up by the middle of 2021, neither one can hope to compete with even GPUs from several generations back. Overall, Xe DG1 performed about the same as Nvidia's GT 1030 GDDR5, a weak-sauce GPU hailing from May 2017. It also delivered a bit more than half the performance of 2016's GTX 1050 2GB, despite having twice as much memory.

The Arc A380 did better, but it still only managed to match or slightly exceed the performance of the GTX 1650 (GDDR5 variant) and RX 6400. Video encoding hardware was a high point at least. More importantly, the A380 is potentially about a quarter of the performance of the top-end Arc A770, so there's still hope.

Intel has a steep mountain to ascend if it wants to be taken seriously in the dedicated GPU space. Here's the breakdown of the Arc Alchemist architecture, a look at the announced products, and some Intel-provided benchmarks, all of which give us a glimpse into how Intel hopes to reach the summit. Truthfully, we're just hoping Intel can make it to base camp, leaving the actual summiting for the future Battlemage, Celestial, and Druid architectures. But we'll leave those for a future discussion.

Intel's Xe Graphics aspirations hit center stage in early 2018, starting with the hiring of Raja Koduri from AMD, followed by chip architect Jim Keller and graphics marketer Chris Hook, to name just a few. Raja was the driving force behind AMD's Radeon Technologies Group, created in November 2015, along with the Vega and Navi architectures. Clearly, the hope is that he can help lead Intel's GPU division into new frontiers, and Arc Alchemist represents the results of several years' worth of labor.

There's much more to building a good GPU than just saying you want to make one, and Intel has a lot to prove. Here's everything we know about the upcoming Intel Arc Alchemist, including specifications, performance expectations, release date, and more.

We'll get into the details of the Arc Alchemist architecture below, but let's start with the high-level overview. Intel has two different Arc Alchemist GPU dies, covering three different product families: the 700-series, 500-series, and 300-series. The first letter of the model name denotes the architecture, so the A in A770 stands for Alchemist, and the future Battlemage parts will likely be named Arc B770 or similar.

Here are the specifications for the various desktop Arc GPUs that Intel has revealed. All of the figures are now more or less confirmed, except for A580 power.

These are Intel's official core specs on the full large and small Arc Alchemist chips. Based on the wafer and die shots, along with other information, we expect Intel to enter the dedicated GPU market with products spanning the entire budget to high-end range.

Intel has five different mobile SKUs, the A350M, A370M, A550M, A730M, and A770M. Those are understandably power constrained, while for desktops there will be (at least) A770, A750, A580, and A380 models. Intel also has Pro A40 and Pro A50 variants for professional markets (still using the smaller chip), and we can expect additional models for that market as well.

The Arc A300-series targets entry-level performance, the A500 series goes after the midrange market, and A700 is for the high-end offerings, though we'll have to see where they actually land in our GPU benchmarks hierarchy when they launch. Arc mobile GPUs along with the A380 were available in China first, but the desktop A580, A750, and A770 should be full world-wide launches. Releasing the first parts in China wasn't a good look, especially since one of Intel's previous "China only" products was Cannon Lake, with the Core i3-8121U that basically only just saw the light of day before getting buried deep underground.

As shown in our GPU price index, the prices of competing AMD and Nvidia GPUs have plummeted this year. Intel would have been in great shape if it had managed to launch Arc at the start of the year with reasonable prices, which was the original plan (actually, late 2021 was at one point in the cards). Many gamers might have given Intel GPUs a shot if they were priced at half the cost of the competition, even if they were slower.

Intel has provided us with reviewer's guides for both its mobile Arc GPUs and the desktop Arc A380. As with any manufacturer-provided benchmarks, you should expect the games and settings used were selected to show Arc in the best light possible. Intel tested 17 games for laptops and desktops, but the game selection isn't even identical, which is a bit weird. It then compared performance with two mobile GeForce solutions, and the GTX 1650 and RX 6400 for desktops. There's a lot of missing data, since the mobile chips represent the two fastest Arc solutions, but let's get to the actual numbers first.

We'll start with the mobile benchmarks, since Intel used its two high-end models for these. Based on the numbers, Intel suggests its A770M can outperform the RTX 3060 mobile, and the A730M can outperform the RTX 3050 Ti mobile. The overall scores put the A770M 12% ahead of the RTX 3060, and the A730M was 13% ahead of the RTX 3050 Ti. However, looking at the individual game results, the A770M was anywhere from 15% slower to 30% faster, and the A730M was 21% slower to 48% faster.

That's a big spread in performance, and tweaks to some settings could have a significant impact on the fps results. Still, overall the list of games and settings used here looks pretty decent. However, Intel used laptops equipped with the older Core i7-11800H CPU on the Nvidia cards, and then used the latest and greatest Core i9-12900HK for the A770M and the Core i7-12700H for the A730M. There's no question that the Alder Lake CPUs are faster than the previous generation Tiger Lake variants, though without doing our own testing we can't say for certain how much CPU bottlenecks come into play.

There's also the question of how much power the various chips used, as the Nvidia GPUs have a wide power range. The RTX 3050 Ti can run at anywhere from 35W to 80W (Intel used a 60W model), and the RTX 3060 mobile has a range from 60W to 115W (Intel used an 85W model). Intel's Arc GPUs also have a power range, from 80W to 120W on the A730M and from 120W to 150W on the A770M. While Intel didn't specifically state the power level of its GPUs, it would have to be higher in both cases.

Switching over to the desktop side of things, Intel provided the above A380 benchmarks. Note that this time the target is much lower, with the GTX 1650 and RX 6400 budget GPUs going up against the A380. Intel still has higher-end cards coming, but here's how it looks in the budget desktop market.

Even with the usual caveats about manufacturer provided benchmarks, things aren"t looking too good for the A380. The Radeon RX 6400 delivered 9% better performance than the Arc A380, with a range of -9% to +31%. The GTX 1650 did even better, with a 19% overall margin of victory and a range of just -3% up to +37%.

And look at the list of games: Age of Empires 4, Apex Legends, DOTA 2, GTAV, Naraka Bladepoint, NiZhan, PUBG, Warframe, The Witcher 3, and Wolfenstein Youngblood? Some of those are more than five years old, several are known to be pretty light in terms of requirements, and in general that"s not a list of demanding titles. We get the idea of going after esports competitors, sort of, but wouldn"t a serious esports gamer already have something more potent than a GTX 1650?

Keep in mind that Intel potentially has a part that will have four times as much raw compute, which we expect to see in an Arc A770 with a fully enabled ACM-G10 chip. If drivers and performance don't hold it back, such a card could still theoretically match the RTX 3070 and RX 6700 XT, but drivers are very much a concern right now.

Where Intel's earlier testing showed the A380 falling behind the 1650 and 6400 overall, our own testing gives it a slight lead. Game selection will of course play a role, and the A380 trails the faster GTX 1650 Super and RX 6500 XT by a decent amount despite having more memory and theoretically higher compute performance. Perhaps there's still room for further driver optimizations to close the gap.

Over the past decade, we've seen several instances where Intel's integrated GPUs have basically doubled in theoretical performance. Despite the improvements, Intel frankly admits that integrated graphics solutions are constrained by many factors: Memory bandwidth and capacity, chip size, and total power requirements all play a role.

While CPUs that consume up to 250W of power exist (Intel's Core i9-12900K and Core i9-11900K both fall into this category), competing CPUs that top out at around 145W are far more common (e.g., AMD's Ryzen 5900X or the Core i7-12700K). Plus, integrated graphics have to share all of those resources with the CPU, which means the GPU is typically limited to about half of the total power budget. In contrast, dedicated graphics solutions have far fewer constraints.

Consider the first generation Xe-LP Graphics found in Tiger Lake (TGL). Most of the chips have a 15W TDP, and even the later-gen 8-core TGL-H chips only use up to 45W (65W configurable TDP). Except TGL-H also cut the GPU budget down to 32 EUs (Execution Units), where the lower power TGL chips had 96 EUs. The new Alder Lake desktop chips also use 32 EUs, though the mobile H-series parts get 96 EUs and a higher power limit.

The top AMD and Nvidia dedicated graphics cards like the Radeon RX 6900 XT and GeForce RTX 3080 Ti have a power budget of 300W to 350W for the reference design, with custom cards pulling as much as 400W. Intel doesn't plan to go that high for its reference Arc A770/A750 designs, which target just 225W, but we'll have to see what happens with the third-party AIB cards. Gunnir's A380 increased the power limit by 23% compared to the reference specs, so a similar increase on the A700 cards could mean a 275W power limit.

Intel may be a newcomer to the dedicated graphics card market, but it's by no means new to making GPUs. Current Alder Lake (as well as the previous generation Rocket Lake and Tiger Lake) CPUs use the Xe Graphics architecture, the 12th generation of graphics updates from Intel.

The first generation of Intel graphics was found in the i740 and 810/815 chipsets for socket 370, back in 1998-2000. Arc Alchemist, in a sense, is second-gen Xe Graphics (i.e., Gen13 overall), and it's common for each generation of GPUs to build on the previous architecture, adding various improvements and enhancements. The Arc Alchemist architecture changes are apparently large enough that Intel has ditched the Execution Unit naming of previous architectures and the main building block is now called the Xe-core.

To start, Arc Alchemist will support the full DirectX 12 Ultimate feature set. That means the addition of several key technologies. The headline item is ray tracing support, though that might not be the most important in practice. Variable rate shading, mesh shaders, and sampler feedback are also required, all of which are also supported by Nvidia's RTX 20-series Turing architecture from 2018, if you're wondering. Sampler feedback helps to optimize the way shaders work on data and can improve performance without reducing image quality.

The Xe-core contains 16 Vector Engines (formerly or sometimes still called Execution Units), each of which operates on a 256-bit SIMD chunk (single instruction multiple data). The Vector Engine can process eight FP32 instructions simultaneously, each of which is traditionally called a "GPU core" in AMD and Nvidia architectures, though that's a misnomer. Other data types are supported by the Vector Engine, including FP16 and DP4a, but it's joined by a second new pipeline, the XMX Engine (Xe Matrix eXtensions).

Xe-core represents just one of the building blocks used for Intel's Arc GPUs. Like previous designs, the next level up from the Xe-core is called a render slice (analogous to an Nvidia GPC, sort of) that contains four Xe-core blocks. In total, a render slice contains 64 Vector and Matrix Engines, plus additional hardware. That additional hardware includes four ray tracing units (one per Xe-core), geometry and rasterization pipelines, samplers (TMUs, aka Texture Mapping Units), and the pixel backend (ROPs).

The above block diagrams may or may not be fully accurate down to the individual block level. For example, looking at the diagrams, it would appear each render slice contains 32 TMUs and 16 ROPs. That would make sense, but Intel has not yet confirmed those numbers (even though that's what we used in the above specs table).

The ray tracing units (RTUs) are another interesting item. Intel detailed their capabilities and says each RTU can do up to 12 ray/box BVH intersections per cycle, along with a single ray/triangle intersection. There's dedicated BVH hardware as well (unlike on AMD's RDNA 2 GPUs), so a single Intel RTU should pack substantially more ray tracing power than a single RDNA 2 ray accelerator or maybe even an Nvidia RT core. Except, the maximum number of RTUs is only 32, where AMD has up to 80 ray accelerators and Nvidia has 84 RT cores. But Intel isn't really looking to compete with the top cards this round.

Finally, Intel uses multiple render slices to create the entire GPU, with the L2 cache and the memory fabric tying everything together. Also not shown are the video processing blocks and output hardware, and those take up additional space on the GPU. The maximum Xe HPG configuration for the initial Arc Alchemist launch will have up to eight render slices. Ignoring the change in naming from EU to Vector Engine, that still gives the same maximum configuration of 512 EU/Vector Engines that's been rumored for the past 18 months.

Intel includes 2MB of L2 cache per render slice, so 4MB on the smaller ACM-G11 and 16MB total on the ACM-G10. There will be multiple Arc configurations, though. So far, Intel has shown one with two render slices and a larger chip used in the above block diagram that comes with eight render slices. Given how much benefit AMD saw from its Infinity Cache, we have to wonder how much the 16MB cache will help with Arc performance. Even the smaller 4MB L2 cache is larger than what Nvidia uses on its GPUs, where the GTX 1650 only has 1MB of L2 and the RTX 3050 has 2MB.
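To make the building-block hierarchy easier to follow, here is a minimal Python sketch that derives the unit counts for the two chips from the per-slice figures quoted above (four Xe-cores per render slice, 16 Vector Engines and one ray tracing unit per Xe-core, 2MB of L2 per render slice). The class and property names are our own, purely for illustration, and are not anything Intel publishes.

```python
from dataclasses import dataclass

# Per-unit figures as quoted in the text above.
XE_CORES_PER_SLICE = 4
VECTOR_ENGINES_PER_XE_CORE = 16
RT_UNITS_PER_XE_CORE = 1
L2_MB_PER_SLICE = 2


@dataclass(frozen=True)
class ArcChip:
    """Hypothetical helper: derives unit counts from the render slice count."""
    name: str
    render_slices: int

    @property
    def xe_cores(self) -> int:
        return self.render_slices * XE_CORES_PER_SLICE

    @property
    def vector_engines(self) -> int:
        return self.xe_cores * VECTOR_ENGINES_PER_XE_CORE

    @property
    def rt_units(self) -> int:
        return self.xe_cores * RT_UNITS_PER_XE_CORE

    @property
    def l2_cache_mb(self) -> int:
        return self.render_slices * L2_MB_PER_SLICE


acm_g10 = ArcChip("ACM-G10", render_slices=8)
acm_g11 = ArcChip("ACM-G11", render_slices=2)

for chip in (acm_g10, acm_g11):
    print(chip.name, chip.xe_cores, chip.vector_engines,
          chip.rt_units, chip.l2_cache_mb)
```

With eight render slices this gives 32 Xe-cores, 512 Vector Engines, 32 RTUs, and 16MB of L2; with two render slices it gives 8 Xe-cores, 128 Vector Engines, 8 RTUs, and 4MB of L2, matching the figures in the text.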

While it doesn't sound like Intel has specifically improved throughput on the Vector Engines compared to the EUs in Gen11/Gen12 solutions, that doesn't mean performance hasn't improved. DX12 Ultimate includes some new features that can also help performance, but the biggest change comes via boosted clock speeds. We've seen Intel's Arc A380 clock at up to 2.45 GHz (boost clock), even though the official Game Clock is only 2.0 GHz. A770 has a Game Clock of 2.1 GHz, which yields a significant amount of raw compute.

The maximum configuration of Arc Alchemist will have up to eight render slices, each with four Xe-cores, 16 Vector Engines per Xe-core, and each Vector Engine can do eight FP32 operations per clock. Double that for FMA operations (Fused Multiply Add, a common matrix operation used in graphics workloads), then multiply by a 2.1 GHz clock speed, and we get the theoretical performance in GFLOPS:

8 (render slices) * 4 (Xe-cores) * 16 (Vector Engines) * 8 (FP32 ops) * 2 (FMA) * 2.1 (GHz) = 17,203.2 GFLOPS
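As a quick check on that arithmetic, the following Python sketch multiplies out the same factors. The function name and parameters are our own, and the A380 inputs (128 Vector Engines at the 2.0 GHz Game Clock) are taken from figures quoted elsewhere in this article; real-world clocks will vary.

```python
def theoretical_fp32_gflops(vector_engines: int, clock_ghz: float,
                            fp32_per_engine: int = 8,
                            ops_per_fma: int = 2) -> float:
    """Peak FP32 throughput: engines * lanes * 2 ops per FMA * clock (GHz)."""
    return vector_engines * fp32_per_engine * ops_per_fma * clock_ghz


# Full ACM-G10: 8 render slices * 4 Xe-cores * 16 Vector Engines = 512.
a770_gflops = theoretical_fp32_gflops(8 * 4 * 16, 2.1)

# A380 (ACM-G11): 2 render slices * 4 Xe-cores * 16 Vector Engines = 128.
a380_gflops = theoretical_fp32_gflops(2 * 4 * 16, 2.0)

print(f"A770: {a770_gflops / 1000:.1f} TFLOPS")
print(f"A380: {a380_gflops / 1000:.1f} TFLOPS")
```

The results work out to roughly 17.2 TFLOPS for the full chip and 4.1 TFLOPS for the A380. These are paper numbers only and say nothing about delivered game performance.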

Obviously, gigaflops (or teraflops) on its own doesn't tell us everything, but nearly 17.2 TFLOPS for the top configurations is nothing to scoff at. Nvidia's Ampere GPUs still theoretically have a lot more compute. The RTX 3080, as an example, has a maximum of 29.8 TFLOPS, but some of that gets shared with INT32 calculations. AMD's RX 6800 XT by comparison "only" has 20.7 TFLOPS, but in many games, it delivers similar performance to the RTX 3080. In other words, raw theoretical compute absolutely doesn't tell the whole story. Arc Alchemist could punch above (or below!) its theoretical weight class.

Still, let's give Intel the benefit of the doubt for a moment. Arc Alchemist comes in below the theoretical level of the current top AMD and Nvidia GPUs, but if we skip the most expensive "halo" cards, it looks competitive with the RX 6700 XT and RTX 3060 Ti. On paper, Intel Arc A770 could even land in the vicinity of the RTX 3070 and RX 6800, assuming drivers and other factors don't hold it back.

Theoretical compute from the XMX blocks is eight times higher than the GPU's Vector Engines, except that we'd be looking at FP16 compute rather than FP32. That's similar to what we've seen from Nvidia, although Nvidia also has a "sparsity" feature where zero multiplications (which can happen a lot) get skipped, since the answer's always zero.

Intel also announced a new upscaling and image enhancement algorithm that it's calling XeSS: Xe Superscaling. Intel didn't go deep into the details, but it's worth mentioning that Intel hired Anton Kaplanyan. He worked at Nvidia and played an important role in creating DLSS before heading over to Facebook to work on VR. It doesn't take much reading between the lines to conclude that he's likely doing a lot of the groundwork for XeSS now, and there are many similarities between DLSS and XeSS.

XeSS uses the current rendered frame, motion vectors, and data from previous frames and feeds all of that into a trained neural network that handles the upscaling and enhancement to produce a final image. That sounds basically the same as DLSS 2.0, though the details matter here, and we assume the neural network will end up with different results.

Intel did provide a demo using Unreal Engine showing XeSS in action (see below), and it looked good when comparing 1080p upscaled via XeSS to 4K against the native 4K rendering. Still, that was in one demo, and we'll have to see XeSS in action in actual shipping games before rendering any verdict.

XeSS also has to compete against AMD's new and "universal" upscaling solution, FSR 2.0. While we'd still give DLSS the edge in terms of pure image quality, FSR 2.0 comes very close and can work on RX 6000-series GPUs, as well as older RX 500-series, RX Vega, GTX going all the way back to at least the 700-series, and even Intel integrated graphics. It will also work on Arc GPUs.

The good news with DLSS, FSR 2.0, and now XeSS is that they should all take the same basic inputs: the current rendered frame, motion vectors, the depth buffer, and data from previous frames. Any game that supports any of these three algorithms should be able to support the other two with relatively minimal effort on the part of the game's developers, though politics and GPU vendor support will likely factor in as well.

More important than how it works will be how many game developers choose to use XeSS. They already have access to both DLSS and AMD FSR, which target the same problem of boosting performance and image quality. Adding a third option, from the newcomer to the dedicated GPU market no less, seems like a stretch for developers. However, Intel does offer a potential advantage over DLSS.

XeSS is designed to work in two modes. The highest performance mode utilizes the XMX hardware to do the upscaling and enhancement, but of course, that would only work on Intel's Arc GPUs. That's the same problem as DLSS, except with zero existing installation base, which would be a showstopper in terms of developer support. But Intel has a solution: XeSS will also work, in a lower performance mode, using DP4a instructions (four INT8 instructions packed into a single 32-bit register).
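For readers unfamiliar with DP4a, the idea can be sketched in a few lines of Python: four signed 8-bit values are packed into each 32-bit word, and a single operation computes their dot product and adds it to an accumulator. This is a software illustration only, with helper names of our own choosing. The lane order is an assumption, and the sketch does not model the 32-bit wraparound of a real hardware accumulator.

```python
def pack_int8x4(values):
    """Pack four signed 8-bit integers into one 32-bit word (lane 0 = low byte)."""
    if len(values) != 4:
        raise ValueError("expected exactly four values")
    word = 0
    for lane, value in enumerate(values):
        if not -128 <= value <= 127:
            raise ValueError("value does not fit in a signed 8-bit integer")
        word |= (value & 0xFF) << (8 * lane)
    return word


def unpack_int8x4(word):
    """Recover the four signed 8-bit lanes from a 32-bit word."""
    lanes = []
    for lane in range(4):
        byte = (word >> (8 * lane)) & 0xFF
        lanes.append(byte - 256 if byte >= 128 else byte)
    return lanes


def dp4a(a_word, b_word, accumulator):
    """Four-way INT8 dot product of two packed words, added to the accumulator."""
    a_lanes = unpack_int8x4(a_word)
    b_lanes = unpack_int8x4(b_word)
    return accumulator + sum(x * y for x, y in zip(a_lanes, b_lanes))


a = pack_int8x4([1, -2, 3, -4])
b = pack_int8x4([5, 6, -7, 8])
result = dp4a(a, b, 10)  # 10 + (5 - 12 - 21 - 32)
print(result)
```

Because one instruction handles four multiply-adds, INT8 neural network inference can run reasonably quickly on GPUs that lack dedicated matrix hardware, which is what makes the fallback mode practical.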

The big question will still be developer uptake. We'd love to see similar quality to DLSS 2.x, with support covering a broad range of graphics cards from all competitors. That's definitely something Nvidia is still missing with DLSS, as it requires an RTX card. But RTX cards already make up a huge chunk of the high-end gaming PC market, probably around 90% or more (depending on how you quantify high-end). So Intel basically has to start from scratch with XeSS, and that makes for a long uphill climb.

Intel has confirmed Arc Alchemist GPUs will use GDDR6 memory. Most of the mobile variants are using 14Gbps speeds, while the A770M runs at 16Gbps and the A380 desktop part uses 15.5Gbps GDDR6. The future desktop models will use 16Gbps memory on the A750 and A580, while the A770 will use 17.5Gbps GDDR6.

There will be multiple Xe HPG / Arc Alchemist solutions, with varying capabilities. The larger chip, which we've focused on so far, has eight 32-bit GDDR6 channels, giving it a 256-bit interface. Intel has confirmed that the A770 can be configured with either 8GB or 16GB of memory. Interestingly, the mobile A730M trims that down to a 192-bit interface and the A550M uses a 128-bit interface. However, the desktop models will apparently all stick with the full 256-bit interface, likely for performance reasons.

The smaller Arc GPU only has a 96-bit maximum interface width, though the A370M and A350M cut that to a 64-bit width, while the A380 uses the full 96-bit option and comes with 6GB of GDDR6.
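Bus width and memory speed combine into peak memory bandwidth in a simple way: multiply the interface width in bits by the per-pin data rate in Gbps, then divide by eight to convert bits to bytes. The short Python sketch below applies that to the desktop configurations described above. The function name is our own, and the A750 entry assumes the 256-bit interface the text says the desktop models will apparently use.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: (bus width in bits * Gbps per pin) / 8."""
    return bus_width_bits * data_rate_gbps / 8


# (bus width in bits, GDDR6 speed in Gbps) as quoted in the text.
desktop_cards = {
    "A770": (256, 17.5),
    "A750": (256, 16.0),  # assumes the full 256-bit interface
    "A380": (96, 15.5),
}

bandwidth = {name: memory_bandwidth_gbs(width, rate)
             for name, (width, rate) in desktop_cards.items()}

for name, gbs in bandwidth.items():
    print(f"{name}: {gbs:.0f} GB/s")
```

That works out to 560 GB/s for the A770, 512 GB/s for the A750, and 186 GB/s for the A380. These are peak theoretical figures; effective bandwidth also depends on cache behavior and memory compression.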

Raja showed a wafer of Arc Alchemist chips at Intel Architecture Day. By snagging a snapshot of the video and zooming in on the wafer, the various chips on the wafer are reasonably clear. We've drawn lines to show how large the chips are, and based on our calculations, it looks like the larger Arc die will be around 24x16.5mm (~396mm^2), give or take 5–10% in each dimension. Other reports state that the die size is actually 406mm^2, so we were pretty close.
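To show how much wiggle room that "give or take" leaves, here is a small Python sketch that turns the per-dimension tolerance into an area range. The function name is our own, and the sketch makes the simplifying assumption that both dimensions are off by the same fraction in the same direction, which gives the widest possible range.

```python
def die_area_range(width_mm: float, height_mm: float, tolerance: float):
    """Return (low, nominal, high) area in mm^2 for a per-dimension tolerance."""
    nominal = width_mm * height_mm
    low = nominal * (1 - tolerance) ** 2
    high = nominal * (1 + tolerance) ** 2
    return low, nominal, high


REPORTED_AREA_MM2 = 406  # die size cited from other reports

for tolerance in (0.05, 0.10):
    low, nominal, high = die_area_range(24, 16.5, tolerance)
    inside = low <= REPORTED_AREA_MM2 <= high
    print(f"+/-{tolerance:.0%}: {low:.0f} to {high:.0f} mm^2 "
          f"(nominal {nominal:.0f}), reported size inside range: {inside}")
```

Even at the tighter 5% tolerance the range runs from about 357 to 437 mm^2, so the reported 406mm^2 figure sits comfortably inside the estimate.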

That's not a massive GPU (Nvidia's GA102, for example, measures 628mm^2 and AMD's Navi 21 measures 520mm^2), but it's also not small at all. AMD's Navi 22 measures 335mm^2, and Nvidia's GA104 is 393mm^2, so ACM-G10 is larger than AMD's chip and similar in size to the GA104, but made on a smaller manufacturing process. Still, putting it bluntly: Size matters.

Besides the wafer shot, Intel also provided these two die shots for Xe HPG. The larger die has eight clusters in the center area that would correlate to the eight render slices. The memory interfaces are along the bottom edge and the bottom half of the left and right edges, and there are four 64-bit interfaces, for 256-bit total. Then there's a bunch of other stuff that's a bit more nebulous, for video encoding and decoding, display outputs, etc.

The smaller die has two render slices, giving it just 128 Vector Engines. It also only has a 96-bit memory interface (the blocks in the lower-right edges of the chip), which could put it at a disadvantage relative to other cards. Then there's the other "miscellaneous" bits and pieces, for things like the QuickSync Video Engine. Obviously, performance will be substantially lower than the bigger chip.

While the smaller chip appears to be slower than all the current RTX 30-series GPUs, it does put Intel in an interesting position. The A380 checks in at a theoretical 4.1 TFLOPS, which means it ought to be able to compete with a GTX 1650 Super, with additional features like AV1 encoding/decoding support that no other GPU currently has. 6GB of VRAM also gives Intel a potential advantage, and on paper the A380 ought to land closer to the RX 6500 XT than the RX 6400.

That's not currently the case, according to Intel's own benchmarks as well as our own testing (see above), but perhaps further tuning of the drivers could give a solid boost to performance. We certainly hope so, but let's not count those chickens before they hatch.

This is hopefully a non-issue at this stage, as the potential profits from cryptocurrency mining have dropped off substantially in recent months. Still, some people might want to know if Intel's Arc GPUs can be used for mining. Publicly, Intel has said precisely nothing about mining potential and Xe Graphics. However, given the data center roots for Xe HP/HPC (machine learning, High-Performance Compute, etc.), Intel has certainly at least looked into the possibilities mining presents, and its Bonanza Mining chips are further proof Intel isn't afraid of engaging with crypto miners. There's also the above image (for the entire Intel Architecture Day presentation), with a physical Bitcoin and the text "Crypto Currencies."

Generally speaking, Xe might work fine for mining, but the most popular algorithms for GPU mining (Ethash mostly, but also Octopus and Kawpow) have performance that's predicated almost entirely on how much memory bandwidth a GPU has. For example, Intel's fastest Arc GPUs will use a 256-bit interface. That would yield similar bandwidth to AMD's RX 6800/6800 XT/6900 XT as well as Nvidia's RTX 3060 Ti/3070, which would, in turn, lead to performance of around 60-ish MH/s for Ethereum mining.

There's also at least one piece of mining software that now has support for the Arc A380. While in theory the memory bandwidth would suggest an Ethereum hashrate of around 20-23 MH/s, current tests only showed around 10 MH/s. Further tuning of the software could help, but by the time the larger and faster Arc models arrive, Ethereum should have undergone "The Merge" and transitioned to a full proof of stake algorithm.

If Intel had launched Arc in late 2021 or even early 2022, mining performance might have been a factor. Now, the current crypto-climate suggests that, whatever the mining performance, it won't really matter.

The core specs for Arc Alchemist are shaping up nicely, and the use of TSMC N6 and a 406mm^2 die with a 256-bit memory interface all point to a card that should be competitive with the current mainstream/high-end GPUs from AMD and Nvidia, but well behind the top performance models.

As the newcomer, Intel needs the first Arc Alchemist GPUs to come out swinging. As we discussed in our Arc A380 review, however, there's much more to building a good graphics card than hardware. That's probably why Arc A380 launched in China first, to get the drivers and software ready for the faster offerings as well as the rest of the world.

Alchemist represents the first stage of Intel's dedicated GPU plans, and there's more to come. Along with the Alchemist codename, Intel revealed codenames for the next three generations of dedicated GPUs: Battlemage, Celestial, and Druid. Now we know our ABCs, next time won't you build a GPU with me? Those might not be the most awe-inspiring codenames, but we appreciate the logic of going in alphabetical order.

Tentatively, with Alchemist using TSMC N6, we might see a relatively fast turnaround for Battlemage. It could use TSMC's N5 process and ship in 2023, which would perhaps be wise, considering we expect to see Nvidia's Lovelace RTX 40-series GPUs and AMD's RX 7000-series RDNA 3 GPUs in the next few months. Shrink the process, add more cores, tweak a few things to improve throughput, and Battlemage could put Intel on even footing with AMD and Nvidia. Or it could arrive woefully late (again) and deliver less performance.

The bottom line is that Intel has its work cut out for it. It may be the 800-pound gorilla of the CPU world, but it has stumbled and faltered even there over the past several years. AMD's Ryzen gained ground, closed the gap, and took the lead up until Intel finally delivered Alder Lake and desktop 10nm ("Intel 7" now) CPUs. Intel's manufacturing woes are apparently bad enough that it turned to TSMC to make its dedicated GPU dreams come true.

As the graphics underdog, Intel needs to come out with aggressive performance and pricing, and then iterate and improve at a rapid pace. And please don't talk about how Intel sells more GPUs than AMD and Nvidia. Technically, that's true, but only if you count incredibly slow integrated graphics solutions that are at best sufficient for light gaming and office work. Then again, a huge chunk of PCs and laptops are only used for office work, which is why Intel has repeatedly stuck with weak GPU performance.

We now have hard details on all the Arc GPUs, and we've tested the desktop A380. We even have Intel's own performance data, which was less than inspiring. Had Arc launched in Q1 as planned, it could have carved out a niche. The further it slips into 2022, the worse things look.

Again, the critical elements are going to be performance, price, and availability. The latter is already a major problem, because the ideal launch window was last year. Intel's Xe DG1 was also pretty much a complete bust, even as a vehicle to pave the way for Arc, because driver problems appear to persist. Arc Alchemist sets its sights far higher than the DG1, but every month that passes those targets become less and less compelling.

We should find out how the rest of Intel's discrete graphics cards stack up to the competition in October. Can Intel capture some of the mainstream market from AMD and Nvidia? Time will tell, but we're still hopeful Intel can turn the current GPU duopoly into a triopoly in the coming years, if not with Alchemist, then perhaps with Battlemage.

The best graphics cards are the beating heart of any gaming PC, and everything else comes second. Without a powerful GPU pushing pixels, even the fastest CPU won't manage much. While no one graphics card will be right for everyone, we'll provide options for every budget and mindset below. Whether you're after the fastest graphics card, the best value, or the best card at a given price, we've got you covered.

Where our GPU benchmarks hierarchy ranks all of the cards based purely on performance, our list of the best graphics cards looks at the whole package. Price, availability, performance, features, and efficiency are all important, though the weighting becomes more subjective. Factoring in all of those aspects, these are the best graphics cards that are currently available.

The long, dark night of GPU shortages and horrible prices is coming to an end... sort of. The recently launched GeForce RTX 4090 unfortunately sold out immediately, and we've seen scalpers and profiteers offering cards at significantly higher prices. The same happened with the GeForce RTX 4080, though prices are quickly approaching MSRP. Now the AMD Radeon RX 7900 XTX and 7900 XT are following suit after selling out almost immediately.

We'll need to see what happens in the next month or two, because brand-new top tier cards almost always sell out at launch. But with cryptocurrency GPU mining profitability in the toilet (nearly every GPU would cost more in electricity than you could possibly make from any current coin), and signs that graphics card prices were coming down, we hoped to see better prices than we're now finding. There was also talk of an excess of supply for now-previous-gen cards, though either that's dried up or demand has gone back up. Most major GPUs are in stock, but while AMD's prices are below MSRP and have stayed mostly flat since Black Friday, Nvidia GPUs seem to be trending back up.

Besides the new AMD and Nvidia cards, we also tested and reviewed the Intel Arc A770 and Intel Arc A750, two newcomers that target the midrange value sector and deliver impressive features and performance, assuming you don't happen to run a game where the drivers hold Arc back. We've added the A770 16GB model to our list, along with the A380 as a budget pick. AMD's RX 7900 XTX has also supplanted the RX 6950 XT as the top pick for a high-performance AMD card.

Our list consists almost entirely of current generation cards (or "previous" generation now that the RTX 4090/4080 and RX 7900 cards have arrived). There's one exception with the RTX 2060. That's slightly faster than the newer RTX 3050, and while it "only" has 6GB VRAM, the current price makes up for that. Unfortunately, prices have trended upward over the past month or two, possibly due to the holiday shopping fervor.

We sorted the above table in order of performance, considering both regular and DXR performance, which is why the RTX 4090 sits at the top. We also factor in 1080p, 1440p, and 4K performance for our standard benchmarks, though it's worth noting that the 4090 can't really flex its muscle until at least 1440p. Our subjective rankings below factor in price, power, and features colored by our own opinions. Others may offer a slightly different take, but all of the cards on this list are worthy of your consideration.

For some, the best graphics card is the fastest card, pricing be damned. Nvidia's GeForce RTX 4090 caters to precisely this category of user. It's also the debut of Nvidia's brand-new Ada Lovelace architecture, and as such will represent the most potent card Nvidia has to offer... at least until the inevitable RTX 4090 Ti shows up.

If you were disappointed that the RTX 3090 Ti was only moderately faster (~30%) than an RTX 3080 in most workloads, RTX 4090 will have something more to offer. Across our standard suite of gaming benchmarks, it was 55% faster than the 3090 Ti on average. Fire up a game with heavy ray tracing effects, and that lead grows to 78%! AMD's new RX 7900 XTX can't touch it either, as the 4090 is 25% faster in traditional rasterization games and just over double the performance in ray tracing games.

Let's be clear about something: You really need a high refresh rate 4K monitor to get the most out of the RTX 4090. At 1440p its advantage over a 3090 Ti shrinks to 30%, and only 16% at 1080p. It's also just 7% faster than AMD's 6950 XT at 1080p ultra and actually trails it slightly at 1080p medium, though it's still more than double the performance at 1080p in demanding ray tracing games.

It's not just gaming performance, either. In professional content creation workloads like Blender, Octane, and V-Ray, the RTX 4090 is about 80% faster than the RTX 3090 Ti. With Blender, it's over three times faster than the RX 7900 XTX. And don't even get us started on artificial intelligence tasks. In Stable Diffusion testing, besides being difficult to even get things running on AMD GPUs (we had to resort to Linux), the RTX 4090 was eight times faster than the RX 6950 XT, and there are numerous other AI workloads that currently only run on Nvidia GPUs. In other words, Nvidia knows a thing or two about professional applications, and the only potential problem is that it locks improved performance in some apps (like those in SPECviewperf) to its true professional cards, i.e. the RTX 6000 48GB.

AMD's RDNA 3 response to Ada Lovelace might be a better value, at least if you're only looking at rasterization games, but for raw performance the RTX 4090 reigns as the current champion. You might also want a CPU and power supply upgrade to get the most out of the 4090. And there's still the matter of finding RTX 4090 cards in stock: they sold out almost immediately at launch, and scalpers (and companies following their example) have pushed prices well above $2,000 for the time being. Hopefully prices come down quickly this round, but it's been two months since launch and that hasn't happened yet.

The Red Team King is dead; long live the Red Team King! AMD's Radeon RX 7900 XTX has supplanted the previous generation RX 6950 XT at the top of the charts, with a price bump to match. Ostensibly priced at $999, it sold out almost immediately, and now we'll need to wait for supply to catch up to demand. Still, there's good reason for the demand, as the 7900 XTX comes packing AMD's latest RDNA 3 architecture.

That gives the 7900 XTX a lot more potential compute, and you get 33% more memory as well. Compared to the 6950 XT, on average the new GPU is 32% faster in our rasterization test suite and 42% faster in ray tracing games. And it delivers that performance boost without dramatically increasing power use or graphics card size. The second string RX 7900 XT falls behind by 15% as well, so saving $100 for the lesser 7900 doesn't make a lot of sense.

AMD remains a potent solution for anyone that doesn't care much about ray tracing, and when you see the massive hit to performance for relatively mild gains in image fidelity, we can understand why many feel that way. Still, the number of games with RT support continues to grow, and many of those also support Nvidia's DLSS technology, something AMD hasn't fully countered even if FSR2 comes relatively close. If you want the best DXR/RT experience right now, Nvidia still wins hands down.

AMD's GPUs can also be used for professional tasks, but here things get a bit hit and miss. Certain apps in the SPECviewperf suite run great on AMD hardware; others come up short, and if you want to do AI or deep learning research, there's no question Nvidia's cards are a far better pick. But for this generation, the RX 7900 XTX is AMD's fastest option, and it definitely packs a punch.

The Radeon RX 6600 takes everything good about the 6600 XT and then scales it back slightly. It's about 15% slower overall, just a bit behind the RTX 3060 as well (in non-RT games), but in our testing it was still 30% faster than the RTX 3050. It's also priced to move, with the least expensive cards starting at just over $200, like this ASRock RX 6600 card at Newegg.

Nvidia's GeForce RTX 3080 with the now previous generation Nvidia Ampere architecture continues to be a great option, though it's now lost some of its luster. The recommendation also applies to all RTX 3080 cards, including the RTX 3080 Ti and RTX 3080 12GB, depending on current prices. Right now, the best deals we can find are $730 for the 10GB card, while the 12GB and 3080 Ti have shot up to well o
