
As shared by @momomo_us on Twitter, ASRock has built a new accessory for PC builders that allows you to turn your PC chassis' side panel into an LCD monitor. The gadget is a 13.3" side panel kit designed to be attached to the inside of your see-through side panel, giving users an additional display for monitoring system resources and temperatures, or for use as a secondary monitor altogether.

The screen is a 16:9 aspect ratio 1080P IPS 60Hz display, measuring 13.3 inches diagonally. This screen is the equivalent of a laptop display. It uses the same connection method as laptops, featuring an embedded DisplayPort (eDP) connector.

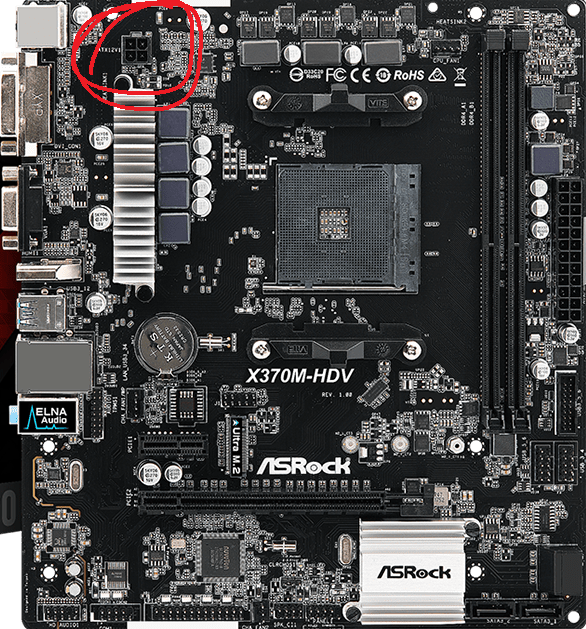

Unfortunately, this presents a problem for most PC users. The connector was originally designed specifically for mobile and embedded PC solutions, meaning it is not available on standard desktop motherboards or graphics cards.

As a result, only ASRock motherboards support the side panel, and only a few models at that, with fewer than ten motherboards featuring the eDP connector. The list includes the following motherboards: Z790 LiveMixer, Z790 Pro RS/D4, Z790M-ITX WiFi, Z790 Steel Legend WiFi, Z790 PG Lightning, Z790 Pro RS, Z790 PG Lightning/D4, H610M-ITX/eDP, and B650E PG-ITX WiFi.

Sadly, adapters aren't a solution either, since eDP to DP (or any other display output) adapters don't exist today. Furthermore, creating an adapter is problematic because eDP runs both power and video signals through a single cable.

It's a shame this accessory won't get mainstream popularity due to these compatibility issues. But for the few users with the correct motherboard, this side panel kit can provide a full secondary monitor that takes up no additional space on your desk. The only sacrifice you'll make is blocking all the shiny RGB lighting inside your chassis.

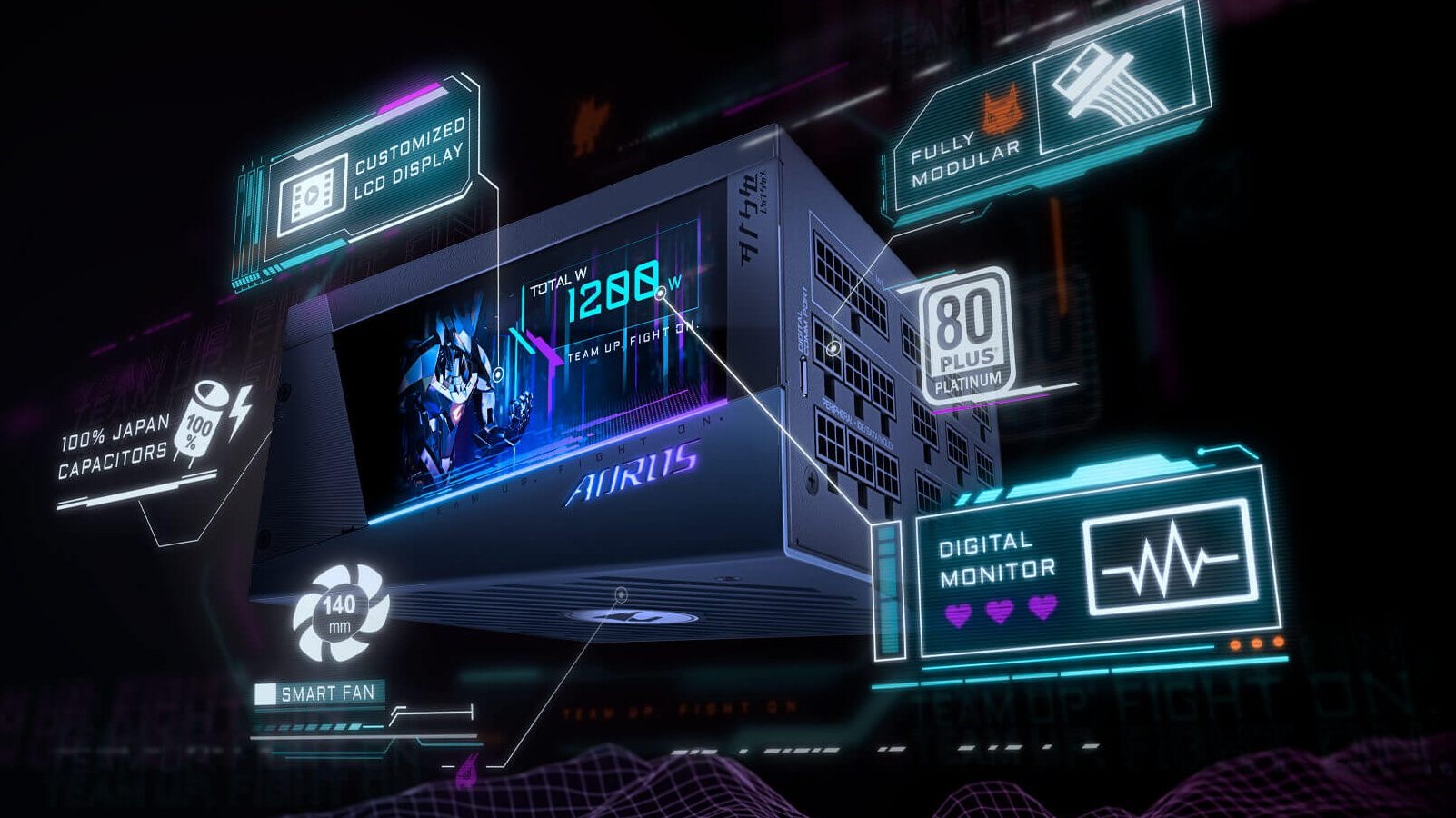

You can never have enough screens, even if some of them are inside your case. Gigabyte's new Aorus P1200W power supply features a full-color LCD screen that can display custom text, pictures, GIFs, and even videos. Yes, just imagine watching your favorite movies on the side of your PSU!

This isn't the first time we've seen a power supply with a screen slapped on it. The ASUS ROG Thor has one, but it only displays power draw, not your favorite films. Of course, the more practical use case for a screen on a PSU is showing stats such as your fan speed or temperature.

Unfortunately, Gigabyte hasn't listed the exact size or resolution of the screen, nor do we know what its refresh rate will be. Could one even play games on it? I guess we'll have to find out.

Designed to compete with the best power supplies, the P1200W features all of the bells and whistles most high-end power supplies come with: an 80-Plus Platinum rating, fully modular cables, 140mm fan, an input current of 15-7.5A, full-range input voltage, >16ms hold up time, active PFC, and Japanese capacitors.

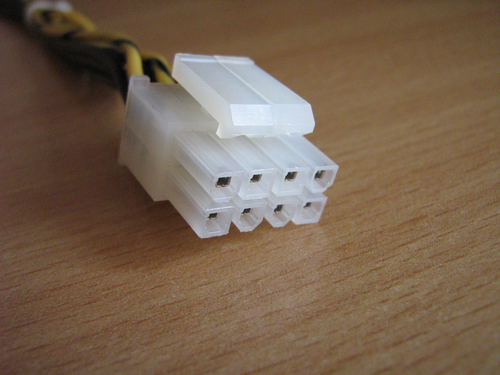

The P1200W brings a lot in a small package, with one 24-pin and 10-pin connector, two CPU EPS-12v power connectors, six peripheral connectors, and six PCIe connectors, and of course, the LCD screen.

The launch of the Nvidia RTX 40-series and Ada Lovelace GPUs has been greeted with a range of emotions. Excitement from some, disbelief from others, and outright scorn from many. In building a GPU that promises to outperform the best graphics cards, Nvidia has gone big: big and expensive. AMD, on the other hand, is using a more cost-effective technology that could make its upcoming RDNA cards a more appealing, more affordable choice.

While we're not privy to Nvidia's Bill of Materials (BOM), the high price of Nvidia's GPUs is largely due to the company's refusal to embrace "Moore's Law 2.0" and look to things like chiplets. AMD started beating Intel on CPUs when it switched to chiplets, especially on cost, and now it's about to do the same for GPUs. Putting the analog memory interfaces on an older process with RDNA 3 is a brilliant approach, since analog scales very poorly with newer process nodes. The same goes for cache.

Looking at the AD102 die shots Nvidia has posted so far, we know the die size is 608mm^2. That's only slightly smaller than GA102 at 628mm^2, but now Nvidia is on a cutting-edge TSMC 4N process node instead of Samsung 8N. Pricing on the wafers definitely shot up, and we had multiple stories in the past year about TSMC raising prices. That's all coming to bear.

The AD102 die pictured below (a rendering, but it's the best we've got) shows some clear details of how and why Nvidia's latest tour de force costs more than the previous generation chips. Flip through the gallery for annotated versions of the image.

The twelve Graphics Processing Clusters (GPCs) are easily distinguishable from the rest of the chip, and each of those has 12 Streaming Multiprocessors (SMs). All of the GPCs and SMs together take up about 45% of the total die area. Where does the rest go?

The twelve 32-bit GDDR6X memory controllers use up most of the outside edge of the die, with the PCIe x16 connector using about a third of the bottom edge. The memory controllers and related circuitry take up a hefty 17% of the die area, give or take. But that's not the only part of the memory subsystem, as Nvidia has a much larger L2 cache than on previous designs.

You can see the six 16MB chunks of L2 in the center section of the die, with some related routing and other circuitry (ROPs?) around it. The L2 cache blocks are at least 15% of the total die area, while the entire center portion of the die (L2 plus other logic) takes up 25% of the total. The remainder of the die at the bottom is dedicated to things like the dual NVENC encoders, the PCIe interface and physical display interfaces. It's about 7% of the total, and then there are a few other miscellaneous bits scattered around that take up the last ~6% of the die.

The point of discussing those die areas is to help put things in perspective. Nvidia, with its monolithic approach on the AD102 chip, has dedicated roughly 33% of the total die area just to memory interfaces and L2 cache. AMD's MCD (Memory Chiplet Die) approach used with its Radeon RX 7000-series and RDNA 3 GPUs will apparently move nearly all of that off the main chiplet, and it will reportedly use TSMC N6 instead of TSMC N5, reducing price and improving yields at the same time.
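As a quick sanity check on the area budget described above, the following sketch adds up the approximate shares quoted in the text. The dictionary keys and function name are illustrative, and the percentages are the article's eyeballed estimates from a rendering, not measured values.

```python
# Approximate AD102 die-area shares quoted in the text (percent of total die).
AREA_SHARES = {
    "GPCs and SMs": 45,
    "memory controllers": 17,
    "center section (L2 cache plus other logic)": 25,
    "bottom edge (NVENC, PCIe, display)": 7,
    "miscellaneous": 6,
}

# The L2 cache is described as "at least 15%", a subset of the center section.
L2_CACHE_SHARE = 15


def memory_subsystem_share(shares, l2_share):
    """Memory controllers plus L2 cache, as a percentage of the die."""
    return shares["memory controllers"] + l2_share


total = sum(AREA_SHARES.values())
memory_share = memory_subsystem_share(AREA_SHARES, L2_CACHE_SHARE)

print(f"accounted for: {total}% of the die")
print(f"memory interfaces + L2 cache: at least {memory_share}%")
```

Because the L2 figure is a lower bound, the computed sum is a floor for the memory subsystem's share, which is consistent with the "roughly 33%" quoted above.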

If TSMC 4N costs more than twice as much per wafer as Samsung 8N, that means AD102 costs more than twice as much per chip as the previous generation GA102 and the RTX 3090. Gordon Mah Ung of PC World asked Nvidia CEO Jensen Huang during a Q&A session about pricing. I'll go ahead and quote it directly to put things in context.

Gordon: "[RTX] 4000 is finally here, which for you I'm sure feels like a huge launch. The reaction universally I'm seeing out there is, 'Oh, my God. It costs so much money.' Is there anything you would like to say to the community regarding pricing on the new generational parts? As well as, can they expect to see better pricing at some point and basically address all the loud screams that I'm seeing everywhere?"

Jensen: "First of all, a 12 inch wafer is a lot more expensive today than it was yesterday. And it's not a little bit more expensive, it is a ton more expensive. Moore's law is dead. And the ability for Moore's law to deliver the same performance, half the cost every year and a half is over. It's completely over. And so the idea that the chip is going to go down in cost over time, unfortunately is a story of the past." (Emphasis added.)

Of course, there's a lot more to building a graphics card than just the GPU. Memory, PCB, VRMs, PMICs, capacitors, and all sorts of other bits are involved. Prices on many of those have increased over the past two years as well. Nvidia also has to put a lot of effort into the research and development of GPUs and related technologies. And the final design has to take all of that into account and then ultimately turn into a successful product.

One more interesting thing about the RTX 40-series announcement is that Nvidia has revealed three different graphics card models, and each one uses a different GPU. Again, that sort of approach has to increase costs, and it means Nvidia also needs to figure out how to best allocate its wafer orders. The AD102 chip in the RTX 4090 is the new halo part with a large die and all the trimmings. AD103 cuts down the memory interface and the core counts, and then AD104 cuts them down even further.

We can do the same for the RTX 4080 12GB. The 4080 16GB offers 21% more compute, 33% more memory capacity, 42% more memory bandwidth, but only uses 12% more power. It also costs 33% more. Both of the RTX 4080 models look overpriced and underpowered compared to what we've seen in previous Nvidia architectures, where the halo cards cost substantially more while only moderately increasing performance.

When RTX 30-series launched, Nvidia started with the RTX 3090 and 3080. Both used the GA102 chip, just with fewer cores enabled on the 3080. Next came the RTX 3070 and 3060 Ti, both of which used the GA104 chip. Eventually, Nvidia would add GA106 to the family, used in the RTX 3060 and 3050 — and there was GA107 for the mobile RTX 3050 Ti and 3050, but that never came to desktops. Ultimately, just looking at the desktop cards, Nvidia had three different GPUs that powered ten different graphics cards. Now, Nvidia has announced three cards using three GPUs, and it has to figure out how to balance the number of each chip.

AMD, in contrast, appears set to announce perhaps a single core GPU on November 3, which will use chiplets. Current information says the GCD (GPU Chiplet Die) will measure just 308mm^2, about half the size of AD102, and it will link up with up to six MCDs (Memory Chiplet Dies) that are all relatively small (38mm^2). The GCD is about the same size as AD104 (294.5mm^2), and if rumors are correct, AMD's Navi 31 will be packing up to 12,288 GPU shader cores, 60% more than Nvidia's RTX 4080 12GB in roughly the same size chip.

Nvidia's Justin Walker, Senior Director of Product Management, said, "The 4080 12GB is a really high performance GPU. It delivers performance considerably faster than a 3080 12GB... it's faster than a 3090 Ti, and we really think it's deserving of an 80-class product."

Frankly, that's a crap answer. Of course it's faster! It's a new chip and a new architecture; it's supposed to be faster. Remember when the GTX 1070 came out and it was faster than a 980 Ti? I guess that wasn't "deserving" of an 80-class product name. Neither was the RTX 2070 when it matched the 1080 Ti, or the 3070 when it matched the 2080 Ti.

Based on Nvidia's benchmarks, it's going to be a mixed bag. The first three games in the above chart on the left don't use DLSS or DLSS 3. The RTX 4080 12GB is often tied with or slightly slower than an RTX 3090 Ti when DLSS 3 and ray tracing aren't part of the equation. How often that will be the case in future games is far more difficult to predict.

In a lot of ways, the RTX 40-series launch so far feels very reminiscent of the RTX 20-series launch. Nvidia is once again hyping up ray tracing and DLSS, only we're now in round three of that story. The RT hardware is far more capable, DLSS 3 is supposed to be a lot better as well, but will all the big games support both technologies to sufficient levels? Undoubtedly the answer is no; some will, some won't.

Meanwhile, generational pricing has increased (again), and the specs on some of the models certainly look questionable. RTX 4080 12GB feels far too much like it really should have been the RTX 4070 right now, and Nvidia could have started tacking on Ti and Super or whatever to create other models.

The RTX 3080 10GB will apparently still linger on with a $699 MSRP for the time being. That certainly can't last, not when an eventual RTX 4070 will inevitably displace it on performance and features. But Nvidia and its partners need the unwitting to buy up the existing inventory of RTX 30-series cards, for the highest prices they can still get, before they're ready to move down the stack to the rest of the Ada Lovelace lineup.

We're still waiting for full details on AMD's RDNA 3 lineup as well, and as discussed above, the design decisions AMD made, specifically with GPU chiplets, should result in lower prices that Nvidia can't possibly match. AMD may not take the outright performance crown, but if it can come close while undercutting Nvidia's prices, it might not matter. Add to that Intel's Arc A770 and A750, which could launch in the near future as well. We'll see who emerges as the victor in the GPU market in the next couple of months.

Hong Kong's Minisforum has launched the Elitemini B550. The firm announced the product on Twitter today, prepared an overview video, and published a pre-sale product page with a configurator, including US pricing. Minisforum sees two significant selling points to its AMD Ryzen powered NUC-alike. Firstly, it uses socketed AM4 processors, and secondly, buyers get an external GPU dock to use the best graphics cards. However, there are several more attractions and drawbacks.

The Minisforum Elitemini B550 is a small and easily portable PC compared to the Mac Mini and Intel NUC (and several others). It measures 20.859 x 24.088 x 12.813 cm (approximately 8.3 x 9.4 x 5 inches) according to the product pages. Still, the dimensions quoted in the product video are quite a bit smaller, a sizable sizing error.

The design of the Elitemini isn't very appealing, but it looks functional, with plenty of air vents and plenty of ports (all at the rear). One good way to hide it is via its VESA mount base, with screws provided.

The flexibility on offer lets you forgive the styling somewhat. You can buy this machine with your choice of the AMD Ryzen 7 5700G or Ryzen 7 4700G (to save $100), both 8C/16T APUs from different generations. However, you won't be stuck with your choice, as this is a socketed AM4 CPU. You can change it later. Due to this, it is a little sad that the "Barebone" option without RAM or SSD does not have a cheaper version without the processor.

Inside the case, you will have access to dual SODIMM slots for up to 64GB DDR4-3200 RAM, and there are twin M.2 2280 SATA/NVMe SSD slots. If you use a newer gen processor, you can use NVMe PCIe 4.0. The other user-upgradable component is a single 2.5 inch SATA SSD/HDD. A Wi-Fi and Bluetooth card is already on board, so you don’t have to worry about that.

Ports on the back of the Elitemini B550 include: RJ45 2.5 Gigabit Ethernet Port × 1, USB3.2 Port × 4 (Gen2), USB-C Port × 1 (Gen2), HDMI (4K@60Hz) × 2, DisplayPort (4K@60Hz) × 1, 3.5mm Headphone Jack (Green), LINE OUT × 2, Clear CMOS × 1, 3.5mm MIC Jack × 1. So, that is triple displays without adding an external GPU. The power button is on the rear too.

Included in your Minisforum Elitemini B550 purchase is a somewhat minimal GPU dock. It doesn’t enclose the GPU you choose to fit. Depending on your choice of graphics card, it might extend considerably past the dimensions of the host machine. If you don’t like this idea, choose a GPU that is 24cm or shorter.

If you choose to use the external GPU dock, you won’t be able to VESA mount the Minisforum Elitemini B550. In the pictures of the GPU attached, the desktop case is on its side, with the GPU extension effectively on its underside where its feet and mounting holes are.

The external GPU will not get power from the 19V power brick supplied with the Elitemini B550. It requires an external ATX or SFF power supply (not included). Having a PSU on the desk/table next to this system adds more unwanted clutter to your setup. A positive of adding the desktop power supply is that it should be able to support more powerful CPUs, but remember, the cooling system is only suitable for up to 65W.

On the surface, this sounds like a great alternative and highly portable mini system (it even comes with a carry case); however, making full use of its USPs adds a lot of bulk, mess, and clutter to your desktop. Perhaps an SFF PSU could somehow be bolted to the construction to make things neater. On the other hand, if you want a small system with a desktop graphics card, it might be better to get/build a Mini ITX system, and then everything will be neatly enclosed in a suitable stylish chassis.

On November 3, AMD revealed key details of its upcoming RDNA 3 GPU architecture and the Radeon RX 7900-series graphics cards. It was a public announcement that the whole world was invited to watch. Shortly after the announcement, AMD took press and analysts behind closed doors to dig a little deeper into what makes RDNA 3 tick — or is it tock? No matter.

We're allowed to talk about the additional RDNA 3 details and other briefings AMD provided now, which almost certainly has nothing to do with Nvidia's impending launch of the RTX 4080 on Wednesday. (That's sarcasm, just in case it wasn't clear. This sort of thing happens all the time with AMD and Nvidia, or AMD and Intel, or even Intel and Nvidia now that Team Blue has joined the GPU race.)

AMD's RDNA 3 architecture fundamentally changes several of the key design elements for GPUs, thanks to the use of chiplets. And that's as good of a place to start as any. We've also got separate articles covering AMD's Gaming and ISV Relations, Software and Platform details, and the Radeon RX 7900 Series Graphics Cards.

Navi 31 consists of two core pieces, the Graphics Compute Die (GCD) and the Memory Cache Dies (MCDs). There are similarities to what AMD has done with its Zen 2/3/4 CPUs, but everything has been adapted to fit the needs of the graphics world.

For Zen 2 and later CPUs, AMD uses an Input/Output Die (IOD) that connects to system memory and provides all of the necessary functionality for things like the PCI Express interface, USB ports, and more recently (Zen 4) graphics and video functionality. The IOD then connects to one or more Core Compute Dies (CCDs, alternatively "Core Complex Dies," depending on the day of the week) via AMD's Infinity Fabric, and the CCDs contain the CPU cores, cache, and other elements.

A key point in the design is that typical general computing algorithms — the stuff that runs on the CPU cores — will mostly fit within the various L1/L2/L3 caches. Modern CPUs up through Zen 4 only have two 64-bit memory channels for system RAM (though EPYC Genoa server processors can have up to twelve DDR5 channels).

GPUs have very different requirements. Large caches can help, but GPUs also really like having gobs of memory bandwidth to feed all the GPU cores. For example, even the beastly EPYC 9654 with a 12-channel DDR5 configuration "only" delivers up to 460.8 GB/s of bandwidth. The fastest graphics cards like the RTX 4090 can easily double that.
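The 460.8 GB/s figure falls out of simple arithmetic on channel count, channel width, and transfer rate. Here is a minimal sketch of that calculation; the DDR5-4800 speed is an assumption (the text does not state the memory speed), and the function name is illustrative.

```python
def peak_bandwidth_gbs(channels, bits_per_channel, megatransfers_per_s):
    """Peak theoretical memory bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_transfer = channels * bits_per_channel // 8
    return bytes_per_transfer * megatransfers_per_s / 1000


# Assumption: DDR5-4800 (4800 MT/s) on every channel.
DDR5_SPEED = 4800

epyc_genoa = peak_bandwidth_gbs(12, 64, DDR5_SPEED)   # 12-channel server CPU
dual_channel = peak_bandwidth_gbs(2, 64, DDR5_SPEED)  # typical desktop CPU

print(f"12-channel DDR5-{DDR5_SPEED}: {epyc_genoa:.1f} GB/s")
print(f" 2-channel DDR5-{DDR5_SPEED}: {dual_channel:.1f} GB/s")
```

The same function applied to a two-channel desktop configuration shows how far a mainstream CPU sits below even that server figure, which is why GPUs lean on much wider, faster memory interfaces.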

In other words, AMD needed to do something different for GPU chiplets to work effectively. The solution ends up being almost the reverse of the CPU chiplets, with memory controllers and cache being placed on multiple smaller dies while the main compute functionality resides in the central GCD chiplet.

The GCD houses all the Compute Units (CUs) along with other core functionality like video codec hardware, display interfaces, and the PCIe connection. The Navi 31 GCD has up to 96 CUs, which is where the typical graphics processing occurs. But it also has an Infinity Fabric along the top and bottom edges (linked via some sort of bus to the rest of the chip) that then connects to the MCDs.

The MCDs, as the name implies (Memory Cache Dies), primarily contain the large L3 cache blocks (Infinity Cache), plus the physical GDDR6 memory interface. They also need to contain Infinity Fabric links to connect to the GCD, which you can see in the die shot along the center facing edge of the MCDs.

The GCD uses TSMC's N5 node and packs 45.7 billion transistors into a 300mm^2 die. The MCDs meanwhile are built on TSMC's N6 node, each packing 2.05 billion transistors on a chip that's only 37mm^2 in size. Cache and external interfaces are some of the elements of modern processors that scale the worst, and we can see that overall the GCD averages 152.3 million transistors per mm^2, while the MCDs only average 55.4 million transistors per mm^2.
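The density figures can be reproduced directly from the transistor counts and die sizes quoted above. This is a small sketch with illustrative names; the inputs are the numbers from the text.

```python
def density_mtr_per_mm2(transistors_billion, area_mm2):
    """Average transistor density in millions of transistors per mm^2."""
    return transistors_billion * 1000 / area_mm2


gcd_density = density_mtr_per_mm2(45.7, 300)  # Graphics Compute Die, TSMC N5
mcd_density = density_mtr_per_mm2(2.05, 37)   # Memory Cache Die, TSMC N6

print(f"GCD: {gcd_density:.1f} MTr/mm^2")
print(f"MCD: {mcd_density:.1f} MTr/mm^2")
print(f"GCD is {gcd_density / mcd_density:.2f}x denser than an MCD")
```

The gap reflects both the node difference and the fact that the MCDs are dominated by cache and physical interfaces, which pack far less densely than logic.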

One potential concern with a chiplet approach on GPUs is how much power all of the Infinity Fabric links require, since external chips almost always use more power. As an example, the Zen CPUs have an organic substrate interposer that's relatively cheap to make, but it consumes 1.5 pJ/b (picojoules per bit). Scaling that up to a 384-bit interface would have consumed a fair amount of power, so AMD worked to refine the interface with Navi 31.

The result is what AMD calls the high performance fanout interconnect. The image above doesn't quite explain things clearly, but the larger interface on the left is the organic substrate interconnect used on Zen CPUs. To the right is the high performance fanout bridge used on Navi 31, "approximately to scale."

You can clearly see the 25 wires used for the CPUs, while the 50 wires used on the GPU equivalent are packed into a much smaller area, so you can't even see the individual wires. It's about 1/8 the height and width for the same purpose, meaning about 1/64 the total area. That, in turn, dramatically cuts power requirements, and AMD says all of the Infinity Fanout links combined deliver 3.5 TB/s of effective bandwidth while only accounting for less than 5% of the total GPU power consumption.

There's a quick interesting aside here: all the Infinity Fabric logic on both the GCD and MCDs takes up a decent amount of die space. Looking at the die shot, the six Infinity Fabric interfaces on the GCD use about 9% of the die area, while the interfaces are around 15% of the total die size on the MCDs.

Related to this, we know that certain aspects of a chip design scale better with process shrinks. External interfaces, like the GDDR6 physical interface, have almost stopped scaling. Cache also tends to scale poorly. What will be interesting to see is if AMD's next-generation GPUs (Navi 4x / RDNA 4) leverage the same MCDs as RDNA 3 while shifting the GCD presumably to the future TSMC N3 node.

That takes care of the chiplet aspect of the design, so now let's go into the architecture changes to the various parts of the GPU. These can be broadly divided into four areas: general changes to the chip design, enhancements to the GPU shaders (Stream Processors), updates to improve ray tracing performance, and improvements to the matrix operation hardware.

AMD says that RDNA 3 has been architected to reach speeds of 3 GHz. The official boost clocks on the reference 7900 XTX / XT are well below that mark, but we also feel AMD's reference designs focused more on maximizing efficiency. Third-party AIB cards could very well bump up power limits, voltages, and clock speeds quite a bit. Will we see 3 GHz out-of-factory overclocks? Perhaps, so we'll wait and see.

According to AMD, RDNA 3 GPUs can hit the same frequency as RDNA 2 GPUs while using half the power, or they can hit 1.3 times the frequency while using the same power. Of course, ultimately, AMD wants to balance frequency and power to deliver the best overall experience. Still, given we see higher power limits on the 7900 XTX, we should also expect that to come with a decent bump to clock speeds and performance.

Another point AMD makes is that it has improved silicon utilization by approximately 20%. In other words, there were functional units on RDNA 2 GPUs where parts of the chip were frequently sitting idle even when the card was under full load. Unfortunately, we don't have a good way to measure this directly, so we'll take AMD's word on this, but ultimately this should result in higher performance.

Outside of the chiplet stuff, many of the biggest changes occur within the Compute Units (CUs) and Workgroup Processors (WGPs). These include updates to the L0/L1/L2 cache sizes, more SIMD32 registers for FP32 and matrix workloads, and wider and faster interfaces between some elements.

AMD's Mike Mantor presented the above and the following slides, which are dense! He basically talked non-stop for the better part of an hour, trying to cover everything that's been done with the RDNA 3 architecture, and that wasn't nearly enough time. The above slide covers the big-picture overview, but let's step through some of the details.

RDNA 3 comes with an enhanced Compute Unit pair — the dual CUs that became the main building block for RDNA chips. A cursory look at the above might not look that different from RDNA 2, but then notice that the first block for the scheduler and Vector GPRs (general purpose registers) says "Float / INT / Matrix SIMD32" followed by a second block that says "Float / Matrix SIMD32." That second block is new for RDNA 3, and it basically means double the floating point throughput.

You can choose to look at things in one of two ways: Either each CU now has 128 Stream Processors (SPs, or GPU shaders), and you get 12,288 total shader ALUs (Arithmetic Logic Units), or you can view it as 64 "full" SPs that just happen to have double the FP32 throughput compared to the previous generation RDNA 2 CUs.

This is sort of funny because some places are saying that Navi 31 has 6,144 shaders, and others are saying 12,288 shaders, so I specifically asked AMD's Mike Mantor, the Chief GPU Architect and the main guy behind the RDNA 3 design, whether it was 6,144 or 12,288. He pulled out a calculator, punched in some numbers, and said, "Yeah, it should be 12,288." And yet, in some ways, it's not.

AMD's own slides in a different presentation (above) say 6,144 SPs and 96 CUs for the 7900 XTX, and 84 CUs with 5,376 SPs for the 7900 XT, so AMD is taking the approach of using the lower number. However, raw FP32 compute (and matrix compute) has doubled. Personally, it makes more sense to me to call it 128 SPs per CU rather than 64, and the overall design looks similar to Nvidia's Ampere and Ada Lovelace architectures. Those now have 128 FP32 CUDA cores per Streaming Multiprocessor (SM), but also 64 INT32 units.
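The two competing shader counts are just the same CU count multiplied by two different per-CU figures. A minimal sketch, with illustrative constant and function names:

```python
# Per-CU figures from the text: AMD's slides count 64 Stream Processors per CU,
# while counting every FP32 ALU (the doubled SIMD32 blocks) gives 128 per CU.
SPS_PER_CU_OFFICIAL = 64
FP32_ALUS_PER_CU = 128


def shader_counts(compute_units):
    """Return (official SP count, FP32 ALU count) for a given number of CUs."""
    return (
        compute_units * SPS_PER_CU_OFFICIAL,
        compute_units * FP32_ALUS_PER_CU,
    )


for name, cus in (("RX 7900 XTX", 96), ("RX 7900 XT", 84)):
    official, alus = shader_counts(cus)
    print(f"{name}: {cus} CUs -> {official:,} SPs (official) or {alus:,} FP32 ALUs")
```

Either number is defensible; which one is "right" depends on whether you count full Float/INT/Matrix lanes or every FP32-capable lane.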

Along with the extra 32-bit floating-point compute, AMD also doubled the matrix (AI) throughput as the AI Matrix Accelerators appear to at least partially share some of the execution resources. New to the AI units is BF16 (brain-float 16-bit) support, as well as INT4 WMMA Dot4 instructions (Wave Matrix Multiply Accumulate), and as with the FP32 throughput, there's an overall 2.7x increase in matrix operation speed.

That 2.7x appears to come from the overall 17.4% increase in clock-for-clock performance, plus 20% more CUs and double the SIMD32 units per CU. (But don't quote me on that, as AMD didn't specifically break down all of the gains.)

The caches, and the interfaces between the caches and the rest of the system, have all received upgrades. For example, the L0 cache is now 32KB (double RDNA 2), and the L1 caches are 256KB (double RDNA 2 again), while the L2 cache increased to 6MB (1.5x larger than RDNA 2).

The L3 cache, also called the Infinity Cache, did shrink relative to Navi 21. It's now 96MB vs. 128MB. However, the L3 to L2 link is now 2.25x wider (2304 bytes per clock), so the total throughput is much higher. In fact, AMD gives a figure of 5.3 TB/s: 2304 B/clk at a speed of 2.3 GHz. The RX 6950 XT only had a 1024 B/clk link to its Infinity Cache (maximum), and RDNA 3 delivers up to 2.7x the peak interface bandwidth.

Note that these figures are only for the fully configured Navi 31 solution in the 7900 XTX. The 7900 XT has five MCDs, dropping down to a 320-bit GDDR6 interface and 1920 B/clk links to the combined 80MB of Infinity Cache. We will likely see lower-tier RDNA 3 parts that further cut back on interface width and performance, naturally.
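The Infinity Cache link numbers above can be reproduced with a few lines of arithmetic. This sketch assumes the link width divides evenly across the MCDs, which is what the 2304 and 1920 B/clk figures imply; the names are illustrative.

```python
FULL_LINK_BYTES_PER_CLK = 2304   # six MCDs (RX 7900 XTX)
FULL_MCD_COUNT = 6
RDNA2_LINK_BYTES_PER_CLK = 1024  # RX 6950 XT maximum, per the text


def link_width(mcd_count):
    """L3-to-L2 link width in bytes per clock, assuming an even split per MCD."""
    per_mcd = FULL_LINK_BYTES_PER_CLK // FULL_MCD_COUNT
    return per_mcd * mcd_count


def peak_bandwidth_tbs(bytes_per_clk, clock_ghz):
    """Peak link bandwidth in TB/s for a given width and clock."""
    return bytes_per_clk * clock_ghz / 1000


xtx_width = link_width(6)
xt_width = link_width(5)
xtx_bandwidth = peak_bandwidth_tbs(xtx_width, 2.3)
width_ratio = xtx_width / RDNA2_LINK_BYTES_PER_CLK

print(f"7900 XTX: {xtx_width} B/clk, {xtx_bandwidth:.1f} TB/s at 2.3 GHz")
print(f"7900 XT:  {xt_width} B/clk")
print(f"link is {width_ratio:.2f}x wider than RDNA 2")
```

The 2.25x figure is purely the width ratio; the larger 2.7x bandwidth claim also depends on the clock difference between generations, which the text does not break down.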

Finally, there are now up to six 64-bit GDDR6 interfaces for a combined 384-bit link to the GDDR6 memory. The VRAM also clocks at 20 Gbps (vs 18 Gbps on the later 6x50 cards and 16 Gbps on the original RDNA 2 chips) for a total bandwidth of 960 GB/s.

It's interesting how much the gap between GDDR6 and GDDR6X has narrowed with this generation, at least for shipping configurations. AMD's 960 GB/s on the RX 7900 XTX is only 5% less than the 1008 GB/s of the RTX 4090, whereas back in 2020 the RX 6900 XT was only pushing 512 GB/s compared to the RTX 3090's 936 GB/s.
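For reference, the VRAM bandwidth figures follow from bus width times per-pin data rate. A quick sketch: the 384-bit at 20 Gbps and 16 Gbps figures come from the text, while the 256-bit bus for RDNA 2 and the 20 Gbps speed for the 7900 XT are assumptions added here for illustration.

```python
def gddr_bandwidth_gbs(bus_width_bits, gbps_per_pin):
    """Peak VRAM bandwidth in GB/s from bus width and per-pin data rate."""
    return bus_width_bits * gbps_per_pin / 8


rx_7900_xtx = gddr_bandwidth_gbs(384, 20)  # six 64-bit interfaces at 20 Gbps
rx_7900_xt = gddr_bandwidth_gbs(320, 20)   # assumption: same 20 Gbps memory
rx_6900_xt = gddr_bandwidth_gbs(256, 16)   # assumption: 256-bit bus at 16 Gbps

RTX_4090_GBS = 1008  # figure quoted in the text
gap_percent = (RTX_4090_GBS - rx_7900_xtx) / RTX_4090_GBS * 100

print(f"RX 7900 XTX: {rx_7900_xtx:.0f} GB/s")
print(f"RX 7900 XT:  {rx_7900_xt:.0f} GB/s")
print(f"RX 6900 XT:  {rx_6900_xt:.0f} GB/s")
print(f"gap to RTX 4090: {gap_percent:.1f}%")
```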

Ray tracing on the RDNA 2 architecture always felt like an afterthought, something tacked on to meet the required feature checklist for DirectX 12 Ultimate. AMD's RDNA 2 GPUs lack dedicated BVH traversal hardware, opting to do some of that work via other shared units, and that's at least partially to blame for their weak performance.

RDNA 2 Ray Accelerators could do up to four ray/box intersections per clock, or one ray/triangle intersection. By way of contrast, Intel's Arc Alchemist can do up to 12 ray/box intersections per RTU per clock, while Nvidia doesn't provide a specific number but has up to two ray/triangle intersections per RT core on Ampere and up to four ray/triangle intersections per clock on Ada Lovelace.

It's not clear if RDNA 3 actually improves those figures directly or if AMD has focused on other enhancements to reduce the number of ray/box intersections performed. Perhaps both. What we do know is that RDNA 3 will have improved BVH (Bounding Volume Hierarchy) traversal that will increase ray tracing performance.

RDNA 3 also has 1.5x larger VGPRs, which means 1.5x as many rays in flight. There are other stack optimizations to reduce the number of instructions needed for BVH traversal, and specialized box sorting algorithms (closest first, largest first, closest midpoint) can be used to extract improved efficiency.

Overall, thanks to the new features, higher frequency, and increased number of Ray Accelerators, AMD says RDNA 3 should deliver up to a 1.8x performance uplift for ray tracing compared to RDNA 2. That should narrow the gap between AMD and Nvidia Ampere. Still, Nvidia also seems to have doubled down on its ray tracing hardware for Ada Lovelace, so we wouldn't count on AMD delivering equivalent performance to RTX 40-series GPUs.

Finally, RDNA 3 has tuned other elements of the architecture related to the command processor, geometry, and pixel pipelines. There's also a new Dual Media Engine with support for AV1 encode/decode, AI-enhanced video decoding, and the new Radiance Display Engine.

That last seems to be a result of increasing the number of ROPs (Render Outputs) from 128 on Navi 21 to 192 on Navi 31. That makes sense, as there's also a 50% increase in memory channels, and AMD would want to scale other elements in step with that.

The Dual Media Engine should bring AMD up to parity with Nvidia and Intel on the video side of things, though we'll have to test to see how quality and performance compare. We know from our Arc A380 video encoding tests that Intel generally delivered the best performance and quality, Nvidia wasn't far behind, and AMD was a relatively distant third on the quality front. Unfortunately, we haven't been able to test Nvidia's AV1 support yet, but we're looking forward to checking out both of the new AMD and Nvidia AV1 implementations.

AMD also gains at least a few points for including DisplayPort 2.1 support. Intel also has DP2 support on its Arc GPUs, but it tops out at 40 Gbps (UHBR 10), while AMD can do 54 Gbps (UHBR 13.5). AMD's display outputs can drive up to 4K at 229 Hz without compression for 8-bit color depths, or 187 Hz with 10-bit color. Display Stream Compression can more than double that, allowing for 4K and 480 Hz or 8K and 165 Hz, not that we're anywhere near having displays that actually support such speeds.
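A rough way to see why UHBR 13.5 matters for those uncompressed modes is to compare link payload against raw pixel data. The sketch below assumes four lanes, 128b/132b channel encoding for UHBR modes, and counts active pixels only (no blanking or protocol overhead), so it is a lower bound on what a real display timing needs rather than an exact budget.

```python
LANES = 4  # assumption: a full four-lane DisplayPort link


def raw_link_gbps(gbps_per_lane):
    """Raw link rate across all lanes, in Gbps."""
    return LANES * gbps_per_lane


def payload_gbps(gbps_per_lane):
    """Approximate usable rate, assuming 128b/132b channel encoding."""
    return raw_link_gbps(gbps_per_lane) * 128 / 132


def active_pixel_gbps(width, height, refresh_hz, bits_per_component):
    """Uncompressed RGB data rate for active pixels only, in Gbps."""
    return width * height * refresh_hz * bits_per_component * 3 / 1e9


uhbr10 = payload_gbps(10)
uhbr13_5 = payload_gbps(13.5)
mode_8bit = active_pixel_gbps(3840, 2160, 229, 8)
mode_10bit = active_pixel_gbps(3840, 2160, 187, 10)

print(f"UHBR 10:   {raw_link_gbps(10):.0f} Gbps raw, ~{uhbr10:.1f} Gbps payload")
print(f"UHBR 13.5: {raw_link_gbps(13.5):.0f} Gbps raw, ~{uhbr13_5:.1f} Gbps payload")
print(f"4K 229 Hz, 8-bit:  ~{mode_8bit:.1f} Gbps of pixel data")
print(f"4K 187 Hz, 10-bit: ~{mode_10bit:.1f} Gbps of pixel data")
```

Under these assumptions both quoted modes fit within a UHBR 13.5 link but exceed what UHBR 10 can carry without compression.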

Realistically, we have to wonder how important DP2.1 UHBR 13.5 will be with the RDNA 3 graphics cards. You"ll need a new monitor that supports DP2.1 first of all, and second, there"s the question of how much better something like 4K 180 Hz looks with and without DSC — because DP1.4a can still handle that resolution with DSC while UHBR 13.5 could do it without DSC.

For those who want the full collection of slides on the RDNA 3 architecture, you can flip through them in the above gallery. Overall, it sounds like an impressive feat of engineering and we"re eager to see how the graphics cards based on the RDNA 3 GPUs stack up.

As we"ve noted before, we feel like there"s a good chance AMD can compete quite well against Nvidia"s RTX 4080 card, which launches on November 16. On the other hand, it seems quite unlikely that AMD will be able to go head-to-head against the bigger RTX 4090 in most games.

Simple math provides plenty of food for thought. With 12,288 FP32 shaders running at 2.5 GHz vs. Nvidia's 16,384 shaders at 2.52 GHz, Nvidia clearly has the raw compute advantage: AMD's 61 teraflops vs. Nvidia's 83 teraflops. As noted, adding more FP32 units makes AMD's RDNA 3 seem more like Ampere and Ada Lovelace, so there's a reasonable chance that real-world gaming performance will match up more closely with the teraflops. Memory bandwidth at least looks pretty close and the difference probably shouldn't matter too much.
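The teraflops figures follow from the shader counts and clocks. Here is a minimal sketch of that arithmetic, assuming the usual convention that each shader completes one fused multiply-add (counted as two floating-point operations) per clock; that factor of two and the function name are assumptions, not something the text spells out.

```python
def fp32_teraflops(shaders: int, clock_ghz: float, flops_per_clock: int = 2) -> float:
    """Peak FP32 throughput in teraflops.

    Assumes one fused multiply-add per shader per clock, counted as 2 FLOPs.
    shaders x GHz x 2 gives gigaflops, so divide by 1000 for teraflops.
    """
    return shaders * clock_ghz * flops_per_clock / 1000.0


amd = fp32_teraflops(12288, 2.5)      # Navi 31 figures from the text
nvidia = fp32_teraflops(16384, 2.52)  # RTX 4090 figures from the text

print(f"AMD:    {amd:.1f} TFLOPS")
print(f"Nvidia: {nvidia:.1f} TFLOPS")
print(f"Nvidia advantage: {nvidia / amd:.2f}x")
```

These are theoretical peaks only; as the surrounding text notes, real-world gaming performance depends on much more than raw compute.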

Beyond raw compute, we"ve got transistor counts and die sizes. Nvidia has built monolithic dies with its AD102, AD103, and AD104 GPUs. The largest has 76.3 billion transistors in a 608mm^2 chip. Even if AMD were doing a monolithic 522mm^2 chip with 58 billion transistors, we"d expect Nvidia to have some advantages. Still, the GPU chiplet approach means some of the area and transistors get used on things not directly related to performance.

Meanwhile, Nvidia's penultimate Ada chip, the AD103 used in the RTX 4080, falls on the other side of the fence. Against its 256-bit interface, 45.9 billion transistors, and 368.6mm^2 die size, Navi 31 should have some clear advantages, both with the RX 7900 XTX and the slightly lower tier 7900 XT. And don't even get us started on the AD104 with 35.8 billion transistors and a 294.5mm^2 die. There's no way the "unlaunched" RTX 4080 12GB was going to keep pace with an RX 7900 XT, not without DLSS3 being a major part of the story.

But there"s more to performance than paper specs. Nvidia invests more transistors into features like DLSS (Tensor cores) and now DLSS3 (the Optical Flow Accelerator), and ray tracing hardware. AMD seems more willing to give up some ray tracing performance while boosting the more common use cases. We"ll see how the RTX 4080 performs in just a couple of days, and then we"ll need to wait until December to see AMD"s RX 7900 series response.

For those who aren"t interested in graphics cards costing $900 or more, 2023 will be when we get RTX 4070 and lower-tier Ada Lovelace parts, and we"ll likely get RX 7800, 7700, and maybe even 7600 series offerings from AMD. Navi 32 is rumored to use the same MCDs, but with a smaller GCD, while further out, Navi 33 will supposedly be a monolithic die still built on the N6 node.

Based on what we"ve seen and heard so far, the future RTX 4070 and RX 7800 will likely deliver similar performance to the previous generation RTX 3090 and RX 6950 XT, hopefully at substantially lower prices and while using less power. Check back next month for our full reviews of AMD"s first and fastest RDNA 3 graphics cards.

The power supply unit (PSU) is the most important part of every electronic device, including, of course, computers. It is the heart of your system since it feeds energy to the other components. Consequently, if the PSU fails, everything else fails with it. This is the reason most experienced technicians start a failure investigation from the PSU before proceeding to the rest of the components. And, consequently, this is why you should pay extra attention to your choice of PSU and not make a decision based exclusively on price. After all, a good PSU will do its job for quite a long time, far outlasting the rest of your expensive system components.

To properly review a PSU, expensive equipment is required, and the reviewer needs to know not only how to operate it, but also have sufficient knowledge about electronics and a PSU"s design. The knowledge part is especially crucial since not even the most expensive equipment can make a good PSU review if the reviewer doesn"t know how to properly use it and what tests to conduct with it.

In our reviews, we examine the PSU"s performance, noise, and temperature ratings, along with the build quality of the units. We also judge the PSU"s individual components, cables, connectors, and even product specifications and packaging while providing a performance per dollar comparison.

So I'm pretty sure I'm already at the end of my build; all it needs is a little more cable management. But before finishing, I wanted to make sure my tower turned on, so I connected my power supply and my monitor, etc. Except when I pressed the power button, nothing turned on except a red light next to the power button. What does this mean? I did an outside build, so everything is functioning at a basic level. Also, this happened before when I tried booting it, but the red light was dimmer and it turned out I had plugged the front system panel connectors in wrong, but now I'm sure they're in right. Also, I bent one of the pins on my 24-pin main power supply connector, but I bent it back and it seems fine.

It's hard to build a computer right now, because many of the key parts are sold out everywhere you look. If you can get a quality desktop prebuilt, it may be worth springing for it just to get the components you want. The MSI Aegis RS 11th ($1,999 to start, $2,499 as tested) delivers the latest with Intel's 11th Gen Rocket Lake and an Nvidia GeForce RTX 3080. If those are the parts you're looking for, this PC should be in your consideration.

MSI isn't using a weird, proprietary chassis that's hard to open; this is made of standardized parts, just mostly MSI-branded ones. That does mean that when parts are easier to buy, this is a PC you'll be able to upgrade and grow with.

The MSI Aegis RS 11th's gaming performance is strong, which makes it one of the best gaming PCs, but Rocket Lake's modest core count holds it back in productivity workloads.

How much you like the design of the Aegis RS will rely heavily on how much you like one of MSI's existing PC cases, the MPG Gungnir 110R, a mid-tower chassis with both tempered glass and black aluminum. The front is split between the two, making it look like the Two-Face of computer cases. Considering our review unit had three RGB fans up front, I didn't love that they were half covered up. It's a weird design choice.

The rest of it, however, is far more conservative. The left side panel is tempered glass, which lets you see your components, while the right side is opaque and covers up the cable management. There are two dust filters: a magnetic one on top of the case, and a second one in front of the intake fans.

There are three 120mm intake fans on the front. There's another on the rear, as exhaust, but it also cools the radiator on the MSI Coreliquid 120 liquid cooler for the CPU. I would like to see the radiator mounted up top, where there is room for one up to 240mm in size, and have a regular exhaust fan in the back, since there is no obstacle to that with this case. (In fact, I wouldn't mind a beefier cooler for this processor, too.) Still, unlike many custom chassis we've seen lately, this one doesn't seem to have particular issues with where to put fans.

The front three fans and CPU cooler have RGB lighting, which can be controlled with a button labeled "LED" on the top of the case, or with a module in the MSI Center software.

Internally, the Aegis is easy to update or repair, because it"s built just like a PC you might put together yourself. There aren"t any weird custom chassis tricks or hidden parts. MSI makes the case, power supply, graphics card, motherboard and liquid cooler as separate components. There"s nothing proprietary about this that you couldn"t change or update later.

You can get to most of the parts by removing the tempered glass side panel. It"s held into the back of the chassis with two thumb screws, so no tools are needed. There"s a handle to pull it straight back from the case. One highlight here is a sled for a 2.5-inch drive for easy extra storage. The RAM and M.2 SSD are easy enough to access without moving anything.

The right side panel comes off the same way as the glass door, and it"s the easiest (only, really) way to access the HDD and the PSU, which are hidden beneath a shroud. The case"s RGB controller is also back here, and there"s another 2.5-inch drive sled.

I"m not going to say the cable management is beautiful compared to some other prebuilts, but it"s functional enough (I honestly probably don"t have the patience to do any better) and, unlike some cases, you can easily access it.

I played a bit of Control on the Aegis RS, which I like to try because of how well it integrates ray tracing and stresses even the most powerful components. I ran it at 4K with the high preset and medium ray tracing.

On the Shadow of the Tomb Raider benchmark (highest settings), the game ran at 147 fps in 1080p and 57 fps at 4K. It was beaten in both only by the Alienware Aurora R11 (149 fps at 1080p, 64 fps at 4K) with an RTX 3090.

In Grand Theft Auto V (very high settings), the Aegis RS had superior 1080p performance at 163 fps and ran in 4K at 54 fps. The Aurora won out in 4K, while the Omen and iBuypower both had identical 4K performance to the Aegis.

This is the first pre-built desktop we"ve reviewed with the Intel Core i7-11700K. MSI has paired it with 16GB of RAM, a 1TB PCIe Gen 4 SSD and a 2TB HDD. The CPU has 8 cores and 16 threads. Its competitors, though, pose a threat, as many high-end PCs come with Core i9 processors that have more cores. It should be noted that even the Core i9 Rocket Lake has just 8 cores, so this isn"t a limitation of testing the Core i7.

On Geekbench 5, an overall performance benchmark, the Aegis RS 11th notched a single-core score of 1,676 and a multi-core score of 10,102. While that was the highest single-core score, the other three desktops had higher multi-core scores. All of those used Intel Core i9-10900K chips, which have 10 cores rather than the 8 cores in the 11700K.

The PCIe Gen 4 SSD in the Aegis didn"t show off. It transferred 25GB of files at a rate of 635.3 MBps, just edging past the iBuypower and falling far short of the Omen (978 MBps) and Aurora (1,201.87 MBps).

On our Handbrake video editing test, the MSI Aegis RS 11th transcoded a 4K video to 1080p in 5 minutes and 19 seconds. That"s faster than the iBuypower, but the Aurora and Omen both beat the Aegis" time by five seconds.

MSI includes a keyboard and mouse in the box, which are good enough to use if you don"t have a lot else lying around, but that you may want to replace if you already have favorite peripherals.

The mouse, the Clutch GM08, has rubberized grips on the side, though it felt a bit narrow for my wide claw grip. Still, there are some higher-end features here, including adjustable weights (two 3-gram weights and one 5-gram weight) to make the mouse lighter or heavier. It has a PixArt PAW351 sensor that goes up to 4,200 DPI. The mouse has a DPI switch button that lets you adjust sensitivity, and has two buttons on the left side of the mouse, but they aren't programmable in MSI Center. It typically sells separately for around $20, so don't get your hopes up too much, but it gets the job done in a pinch. The red LED light can't be changed in MSI's software, either.

The keyboard, the Vigor GK30, is the same one that came with the MSI Aegis Ti5 I recently reviewed. It's just OK. The keyboard, which MSI suggests is "mechanical-like," has keys that are stiff and not quite clicky. There's perhaps too much RGB lighting in a sea around the keys. The lights can't be controlled in MSI's software, either, but can be customized with buttons on the keyboard.

This is the first MSI PC that's crossed my desk with MSI Center, the company's replacement for its two previous Swiss Army knife applications, Dragon Center (for gaming) and Creator Center (for, well, creating).

MSI Center, though, seems barebones. Sure, it has an optional light/dark mode switcher, which is nice, and you can still see CPU and GPU temperatures and usage, and there are still different usage scenarios to choose from, though they're buried behind menus. But some features from Dragon Center are nowhere to be found, including one-click optimization for games, while Mystic Light and the LAN manager are now optional modules to add on. This feels like it's in beta; there's an area to "downlaod, update or uninstall" (MSI's typo, not mine).

We reviewed the Aegis RS with a new Intel Core i7-11700K "Rocket Lake" processor, 16GB of RAM, an MSI RTX 3080 Ventus 3X OC GPU, a 1TB PCIe Gen 4 SSD and a 2TB, 7,200-rpm HDD. When the system becomes widely available in mid-April, it will run for $2,499.

When we were reviewing this model, MSI told us that the RS 11th series would start at $1,999. It didn't have completely finalized specs, but suggested the base model would have an RTX 3070 and a 650W power supply and ditch the HDD. Several configurations may continue to utilize a Z490 motherboard and then transition to Z590 as supply levels out. The Aegis RS series is expected to top out at a Core i9-11900K, RTX 3090, 32GB of RAM, an 850W power supply and a 240mm CPU cooler, going possibly as high as $3,899.

If you"re looking for the latest and greatest, the MSI Aegis RS 11th delivers you the most recent parts from Intel and Nvidia (at least, as long as it"s in stock).

Intel"s Core i7 Rocket Lake and the Nvidia GeForce RTX 3080 in our review configuration worked potently together. In productivity, though, Rocket Lake"s limited core count didn"t stand up to some competition, which affects some workloads.

MSI needs to add polish to its MSI Center utility. If you use the app to monitor CPU usage, check temperatures or change RGB colors, it will feel a bit like a beta. If you prefer other applications, you may not notice.

As a whole package, the Aegis RS 11th is a powerful gaming rig with few frills. If you need a PC to play games, this will stand up, even in 4K with the right settings.

The suitcase-style box protects the MG278Q with plenty of rigid Styrofoam, which should prevent damage from all but the most extreme shipping abuse. The base and upright go together with a captive bolt and snap onto the panel for a tool-less installation. The shiny bits are protected from scratches by peel-off film.

The accessory bundle is quite complete with one each of DVI, HDMI and DisplayPort cables. An IEC power cord sends the juice to an internal power supply. You also get a USB 3.0 cable to connect the monitor"s internal two-port hub. To help you get started there"s a printed quick guide with a full user"s manual on CD.

The MG278Q"s front bezel is quite narrow at only half-an-inch around the sides and top and slightly wider at the bottom. It"s a great choice for multi-screen setups. The only identifying marks are an Asus logo at the bottom center and HDMI and DisplayPort symbols at the lower left. On the lower-right side are small printed icons denoting the control button functions. The keys are around back along with a joystick for OSD navigation. The anti-glare layer is quite aggressive at blocking reflections, but still provides good clarity and detail for text and graphics.

Asus has provided a quality stand with the MG278Q. It offers full tilt, height and swivel adjustments with very firm movements. You can also rotate the panel to portrait mode. In this photo you can see the panel is of average slimness with a large flat area housing the mount. The upright has a small handle-like protrusion at the bottom for cable management. Styling cues are subtle and consist of some polished areas and bits of red trim.

The angular shape of the chassis continues around back where there are no curves to be found. The upright unsnaps to reveal 100mm VESA-compatible bolt holes. The control buttons are visible here along with the super-convenient menu joystick finished in red. The power bulge handles ventilation chores well. We detected no excess heat during our time with the MG278Q. The top vent strip contains two small speakers which fire directly upwards. Like most monitors, they"re tinny and weak with nothing in the way of bass. They are reasonably clear however, and will work fine for general computing tasks.

Video inputs are all digital with two HDMI, one DisplayPort 1.2 and one DVI. FreeSync only works over DisplayPort with a compatible AMD graphics board. 2560x1440 at 144Hz will work over both DisplayPort and HDMI 1 while HDMI 2 is limited to 1920x1080 at 120Hz. On the left there are USB 3.0 upstream and downstream ports; there are none on the sides. On the right are analog audio jacks, including one input and one headphone output.

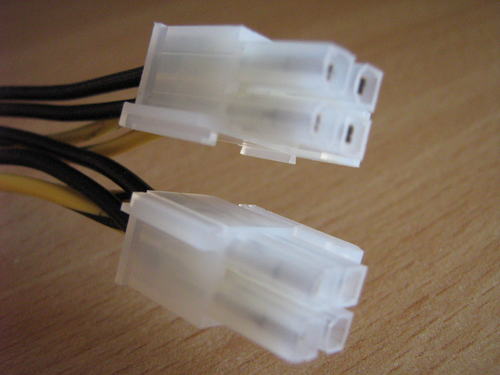

Thank you to everyone for the input, I appreciate it. I really liked the Asi card recommended by scatterforce, but can't reasonably get my hands on one in Canada. Also, when I looked at the specs on my motherboard, HP states the PCIe x16 slot is only rated for 25 watts. This concerns me. I know that people are running a 1050ti in those slots, which draws up to 75 watts, so the 25 watt limit must be a suggestion. However, as my son, and not I, will use this, I am concerned about creating an electrical hazard for him. So this is what I plan to do (you might think I am creating a larger hazard). Rather than overpaying for a low profile 1660 or 1050ti card, which are not in stock anywhere close to me anyway, I will use a $20 adapter from Amazon to hook up an ATX PSU to my proprietary motherboard. I got a nice modular 600 watt version for 80 bucks on Amazon with built-in LED lighting. I spent another 30 bucks on extension cables so I can place it outside of the case if need be. I did consider powering a GPU from SATA connections, but did not find anyone doing that, so thought it might be risky. So at this point I am spending some cash, but figure we will likely build a gaming rig when he is older and could use this PSU again. So I am into new power for about 140 bucks tax in. Hopefully it pans out.

Next I need to choose a GPU. If the PSU is outside of the case I will have lots of options to mount inside, or I might place that outside too, or modify the case as bubba showed. I plan to get a full sized GPU so I can plug it into the new PSU rather than drawing all power from the motherboard. I figure at least then we could wait until there is new stock of GPUs so we don't get hosed on price, and if we did another build in the future we could use this GPU. I would use a riser to relocate the GPU.

A graphics processing unit (GPU) is a specialized electronic circuit designed to manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device. GPUs are used in embedded systems, mobile phones, personal computers, workstations, and game consoles.

In the 1970s, the term "GPU" originally stood for graphics processor unit and described a programmable processing unit independently working from the CPU and responsible for graphics manipulation and output. Sony used the term (now standing for graphics processing unit) in reference to the PlayStation console's Toshiba-designed Sony GPU in 1994. The term was popularized by Nvidia in 1999, who marketed the GeForce 256 as "the world's first GPU", presenting it as a "single-chip processor with integrated transform, lighting, triangle setup/clipping, and rendering engines". ATI Technologies coined the term "visual processing unit" or VPU with the release of the Radeon 9700 in 2002.

Arcade system boards have been using specialized graphics circuits since the 1970s. In early video game hardware, the RAM for frame buffers was expensive, so video chips composited data together as the display was being scanned out on the monitor.

A specialized barrel shifter circuit was used to help the CPU animate the framebuffer graphics for various 1970s arcade games from Midway and Taito. The Namco Galaxian arcade system in 1979 used specialized graphics hardware supporting RGB color, multi-colored sprites and tilemap backgrounds. The Galaxian hardware was widely used during the golden age of arcade video games, by game companies such as Namco, Centuri, Gremlin, Irem, Konami, Midway, Nichibutsu, Sega and Taito.

In the home market, the Atari 2600 in 1977 used a video shifter called the Television Interface Adaptor. Atari 8-bit computers (1979) had ANTIC, a video processor which interpreted instructions describing a "display list": the way the scan lines map to specific bitmapped or character modes and where the memory is stored (so there did not need to be a contiguous frame buffer). 6502 machine code subroutines could be triggered on scan lines by setting a bit on a display list instruction. ANTIC also supported vertical and horizontal scrolling independent of the CPU.

The NEC µPD7220 was the first implementation of a PC graphics display processor as a single Large Scale Integration (LSI) integrated circuit chip, enabling the design of low-cost, high-performance video graphics cards such as those from Number Nine Visual Technology. It became the best-known GPU up until the mid-1980s. A VLSI (very large-scale integration) metal-oxide-semiconductor (NMOS) graphics display processor for PCs, it supported up to 1024x1024 resolution and laid the foundations for the emerging PC graphics market. It was used in a number of graphics cards and was licensed for clones such as the Intel 82720, the first of Intel's graphics processing units. Several Williams Electronics arcade games released in 1982 contain custom blitter chips for operating on 16-color bitmaps.

In 1984, Hitachi released ARTC HD63484, the first major CMOS graphics processor for PC. The ARTC was capable of displaying up to 4K resolution when in monochrome mode, and it was used in a number of PC graphics cards and terminals during the late 1980s. In 1985, the Commodore Amiga featured a custom graphics chip, with a blitter unit accelerating bitmap manipulation, line draw, and area fill functions. Also included was a coprocessor with its own simple instruction set, capable of manipulating graphics hardware registers in sync with the video beam (e.g. for per-scanline palette switches, sprite multiplexing, and hardware windowing), or driving the blitter. In 1986, Texas Instruments released the TMS34010, the first fully programmable graphics processor. This chip became the basis of the Texas Instruments Graphics Architecture ("TIGA") Windows accelerator cards.

In 1987, the IBM 8514 graphics system was released as one of the first video cards for IBM PC compatibles to implement fixed-function 2D primitives in electronic hardware. Sharp's X68000, released in 1987, used a custom graphics chipset and eventually served as a development machine for Capcom's CP System arcade board. Fujitsu later competed with the FM Towns computer, released in 1989 with support for a full 16,777,216 color palette. Polygonal 3D graphics boards were introduced in arcades with the Namco System 21 and Taito Air System.

IBM's proprietary Video Graphics Array (VGA) display standard was introduced in 1987, with a maximum resolution of 640×480 pixels. In November 1988, NEC Home Electronics announced its creation of the Video Electronics Standards Association (VESA) to develop and promote a Super VGA (SVGA) computer display standard as a successor to IBM's proprietary VGA display standard. Super VGA enabled graphics display resolutions up to 800×600 pixels, about 56% more pixels than VGA's 640×480.

In 1991, S3 Graphics introduced the S3 86C911, which its designers named after the Porsche 911 as an indication of the performance increase it promised. By 1995, all major PC graphics chip makers had added 2D acceleration support to their chips. Fixed-function Windows accelerators had surpassed expensive general-purpose graphics coprocessors in Windows performance, and these coprocessors faded away from the PC market.

Throughout the 1990s, 2D GUI acceleration continued to evolve. As manufacturing capabilities improved, so did the level of integration of graphics chips. Additional application programming interfaces (APIs) arrived for a variety of tasks, such as Microsoft"s WinG graphics library for Windows 3.x, and their later DirectDraw interface for hardware acceleration of 2D games within Windows 95 and later.

In the early- and mid-1990s, real-time 3D graphics were becoming increasingly common in arcade, computer, and console games, which led to increasing public demand for hardware-accelerated 3D graphics. Early examples of mass-market 3D graphics hardware can be found in arcade system boards such as the Sega Model 1, Namco System 22, and Sega Model 2, and the fifth-generation video game consoles such as the Saturn, PlayStation and Nintendo 64. Arcade systems such as the Sega Model 2 and SGI Onyx-based Namco Magic Edge Hornet Simulator in 1993 were capable of hardware T&L (transform, clipping, and lighting) years before appearing in consumer graphics cards. Some systems used DSPs to accelerate transformations. Fujitsu, which worked on the Sega Model 2 arcade system, began working on integrating T&L into a single LSI solution for use in home computers in 1995. The first hardware T&L GPU on home video game consoles was the Nintendo 64's Reality Coprocessor, released in 1996. Mitsubishi released the 3Dpro/2MP, a fully featured GPU capable of transformation and lighting, for workstations and Windows NT desktops; ATi utilized it for their FireGL 4000 graphics card, released in 1997.

In the PC world, notable failed first tries for low-cost 3D graphics chips were the S3 ViRGE, ATI Rage, and Matrox Mystique. These chips were essentially previous-generation 2D accelerators with 3D features bolted on. Many were even pin-compatible with the earlier-generation chips for ease of implementation and minimal cost. Initially, performance 3D graphics were possible only with discrete boards dedicated to accelerating 3D functions (and lacking 2D GUI acceleration entirely) such as the PowerVR and the 3dfx Voodoo. However, as manufacturing technology continued to progress, video, 2D GUI acceleration and 3D functionality were all integrated into one chip. Rendition's Vérité chipsets were among the first to do this well enough to be worthy of note. In 1997, Rendition went a step further by collaborating with Hercules and Fujitsu on a "Thriller Conspiracy" project which combined a Fujitsu FXG-1 Pinolite geometry processor with a Vérité V2200 core to create a graphics card with a full T&L engine years before Nvidia's GeForce 256. This card, designed to reduce the load placed upon the system's CPU, never made it to market.

OpenGL appeared in the early '90s as a professional graphics API, but originally suffered from performance issues which allowed the Glide API to step in and become a dominant force on the PC in the late '90s. DirectX became popular among Windows game developers during the late '90s. Unlike OpenGL, Microsoft insisted on providing strict one-to-one support of hardware. The approach made DirectX less popular as a standalone graphics API initially, since many GPUs provided their own specific features, which existing OpenGL applications were already able to benefit from, leaving DirectX often one generation behind. (See: Comparison of OpenGL and Direct3D.)

Over time, Microsoft began to work more closely with hardware developers and started to target the releases of DirectX to coincide with those of the supporting graphics hardware. Direct3D 5.0 was the first version of the burgeoning API to gain widespread adoption in the gaming market, and it competed directly with many more-hardware-specific, often proprietary graphics libraries, while OpenGL maintained a strong following. Direct3D 7.0 introduced support for hardware-accelerated transform and lighting (T&L) for Direct3D, while OpenGL had this capability already exposed from its inception. 3D accelerator cards moved beyond being just simple rasterizers to add another significant hardware stage to the 3D rendering pipeline. The Nvidia GeForce 256 brought hardware T&L to consumer-level cards and set the precedent for later pixel shader and vertex shader units, which were far more flexible and programmable.

Nvidia was first to produce a chip capable of programmable shading: the GeForce 3. Used in the Xbox console, it competed with the PlayStation 2, which used a custom vector unit for hardware accelerated vertex processing (commonly referred to as VU0/VU1). The earliest incarnations of shader execution engines used in Xbox were not general purpose and could not execute arbitrary pixel code. Vertices and pixels were processed by different units which had their own resources, with pixel shaders having much tighter constraints (as they are executed at much higher frequencies than vertex shaders). Pixel shading engines were actually more akin to a highly customizable function block and didn't really "run" a program. Many of these disparities between vertex and pixel shading were not addressed until much later with the Unified Shader Model.

By October 2002, with the introduction of the ATI Radeon 9700, a Direct3D 9.0 accelerator, pixel and vertex shaders could implement looping and lengthy floating point math, and were quickly becoming as flexible as CPUs, yet orders of magnitude faster for image-array operations. Pixel shading is often used for bump mapping, which adds texture, to make an object look shiny, dull, rough, or even round or extruded.

With the introduction of the Nvidia GeForce 8 series and its new generic stream processing units, GPUs became more generalized computing devices. Today, parallel GPUs have begun making computational inroads against the CPU, and a subfield of research, dubbed GPU Computing or GPGPU for General Purpose Computing on GPU, has found its way into fields as diverse as machine learning, oil exploration, scientific image processing, linear algebra, statistics, 3D reconstruction and even stock options pricing determination. GPGPU at the time was the precursor to what is now called a compute shader (e.g. CUDA, OpenCL, DirectCompute) and actually abused the hardware to a degree by treating the data passed to algorithms as texture maps and executing algorithms by drawing a triangle or quad with an appropriate pixel shader. This obviously entails some overheads since units like the Scan Converter are involved where they aren't really needed (nor are tr

Ms.Josey
