Seeed LCD displays for Raspberry Pi free sample

With the development of technology, we now get most of our everyday information through mobile phones. Phones have replaced traditional books and newspapers: people read e-books and browse the web on them while commuting on the subway. However, a phone screen is not suited to reading text for long periods, and the blue light emitted by a backlit LCD can tire the eyes. An e-ink screen is different. Although its colors are limited, it does not emit light of its own but reflects ambient light, so it is easier on the eyes, and the brighter the surroundings, the clearer the display. That makes it a good choice for the e-ink calendar I want to design.

To get the local weather and time, the powerful Raspberry Pi 3B+ makes a good main controller: it not only has built-in WiFi but also supports development in Python.

The display, of course, is an e-ink screen. After comparing price and performance, I chose the 2.7" Triple-Color E Ink Display for Raspberry Pi from Seeed.

Since the official library is written in C++ and there is no Python library, I looked through the available information and found similar Python driver code. Because the hardware differs, we ported that driver to our hardware by changing the pin numbers. The steps are as follows:

Step 2: Run `cd ~`, then open the epdconfig.py file with `nano ~/e-Paper/Raspberry\ Pi/python3/lib/epdconfig.py` and change the four pin numbers to `RST_PIN = 13`, `DC_PIN = 6`, `CS_PIN = 5` and `BUSY_PIN = 19`.
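If you prefer to script the change, here is a minimal sketch. It assumes each pin is assigned on its own line in epdconfig.py in the form `NAME = number` (possibly indented inside a class), so check your copy of the file first. `set_pins` and `patch_file` are hypothetical helpers, not part of the driver.

```python
import re

# Pin numbers from the step above.
NEW_PINS = {"RST_PIN": 13, "DC_PIN": 6, "CS_PIN": 5, "BUSY_PIN": 19}


def set_pins(source, pins=NEW_PINS):
    """Return `source` with each `NAME = <int>` assignment set to the new value."""
    for name, value in pins.items():
        # Anchored at the start of a line (re.MULTILINE), allowing indentation,
        # so a name cannot match inside a longer identifier.
        pattern = r"^([ \t]*" + re.escape(name) + r"[ \t]*=[ \t]*)\d+"
        source, count = re.subn(pattern, r"\g<1>" + str(value), source,
                                flags=re.MULTILINE)
        if count == 0:
            raise ValueError("no assignment found for " + name)
    return source


def patch_file(path, pins=NEW_PINS):
    """Rewrite the file at `path` in place (hypothetical helper)."""
    with open(path) as f:
        text = f.read()
    with open(path, "w") as f:
        f.write(set_pins(text, pins))
```

For example, `patch_file(os.path.expanduser("~/e-Paper/Raspberry Pi/python3/lib/epdconfig.py"))`; the backslash before the space is only needed in the shell.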

For detailed steps on installing the dependencies, please refer to Inky-Calendar. Below I briefly describe my design process. First, the device needs to collect weather data from the cloud, and OpenWeatherMap is well suited to this.
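A minimal sketch of the weather request using only Python's standard library. It assumes OpenWeatherMap's current-weather endpoint and your own API key; the calendar project itself may request other data, such as forecasts, and read it differently.

```python
import json
import urllib.parse
import urllib.request

# OpenWeatherMap current-weather endpoint (API version 2.5).
API_URL = "https://api.openweathermap.org/data/2.5/weather"


def build_url(city, api_key, units="metric"):
    """Build the request URL for the current weather in `city`."""
    query = urllib.parse.urlencode({"q": city, "appid": api_key, "units": units})
    return API_URL + "?" + query


def summarize(payload):
    """Keep only the fields a small display needs from the JSON response."""
    return {
        "temp": payload["main"]["temp"],
        "humidity": payload["main"]["humidity"],
        "description": payload["weather"][0]["description"],
    }


def fetch_weather(city, api_key):
    """Download and summarize the current weather (needs network access)."""
    with urllib.request.urlopen(build_url(city, api_key), timeout=10) as resp:
        return summarize(json.load(resp))
```

Calling `fetch_weather("London", "YOUR_API_KEY")` returns a small dict with the temperature, humidity and a text description, ready to be drawn on the display.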

Step 2: Set the OpenWeatherMap-related parameters in the Settings.py file provided by Seeed_Elink_Raspberry_calendar and save it to the corresponding folder.

Step 3: Run the commands below to see the corresponding weather information: `git clone https://github.com/hansonCc/Seeed_Elink_Raspberry_calendar.git`, then `cd ~/Seeed_Elink_Raspberry_calendar/python3`, then `python3 Calendar.py`.

Next, the collected time and weather information must be shown on the 2.7" Triple-Color E Ink Display for Raspberry Pi. To build the GUI I wanted, I used the Inky-Calendar layout as a model. The result looks like this:

The Wio Terminal from Seeed Studio is a development device that can be used standalone or together with, for example, a Raspberry Pi. It can display simple data such as memory information or CPU usage, or even act as a third screen for the Pi.

In addition, the Wio Terminal includes an accelerometer, an SD card slot, a microphone, a buzzer, buttons, and much more. All of these modules can be read and programmed. In this tutorial, we focus on sending various system data from the Raspberry Pi to the Wio Terminal over the serial interface and displaying it.
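On the Raspberry Pi side, the system data could be gathered with the standard library as in this sketch. The semicolon-separated message format is a hypothetical one chosen for illustration: it must match whatever the Wio Terminal code parses, and the tutorial's own format may differ.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field: value in kB}."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            info[key.strip()] = int(fields[0])
    return info


def memory_used_percent(info):
    """Share of RAM in use, based on MemTotal and MemAvailable."""
    total = info["MemTotal"]
    return 100.0 * (total - info["MemAvailable"]) / total


def read_cpu_temp(path="/sys/class/thermal/thermal_zone0/temp"):
    """CPU temperature in degrees C; the kernel reports millidegrees."""
    with open(path) as f:
        return int(f.read().strip()) / 1000.0


def build_message(load, mem_pct, temp_c):
    """Hypothetical wire format: 'load;mem%;temp' terminated by a newline."""
    return "{:.2f};{:.1f};{:.1f}\n".format(load, mem_pct, temp_c)
```

To send it, pyserial could be used, e.g. `serial.Serial("/dev/ttyACM0", 115200).write(msg.encode())` with `msg = build_message(os.getloadavg()[0], mem_pct, read_cpu_temp())`. The device path and baud rate are assumptions and must match your setup and the `Serial.begin()` call on the Wio Terminal.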

The code is transferred to the Wio Terminal with the Arduino IDE, which we also use for the Arduino and ESP8266. If you haven't installed it yet, start there. Next, download the Seeed Studio LCD library from GitHub (Code > Download ZIP).

Additional boards are now listed under "Tools". Finally, select "Tools" > "Board" > "Seeed SAMD …" > "Seeeduino Wio Terminal". Also adjust the other settings if they differ from those shown here:

Lines 111-144: loop() is called repeatedly in an endless loop, and this is where the rendering happens. It waits for a message on the serial port and extracts the data from it. Then three screens are shown one after the other, each with a 2-second delay.

From line 146: the render functions for the individual screens. The Wio Terminal's LCD functions are called through tft, which can also be used to draw graphics.

Now upload the code to the device. When the upload is complete, connect the Wio Terminal to the Raspberry Pi and start both. At first, a white screen with "Starting …" is displayed (as defined in the HomeScreen function).

In my opinion, the Wio Terminal from Seeed Studio is a very interesting tool: it can be used on its own for certain projects (e.g. with MQTT) or to extend a Raspberry Pi with a display, sensors, buttons, and much more. For all its other functions, the wiki page also has code examples that can be loaded directly into the Arduino IDE. You could of course operate all those sensors and modules separately, but then you wouldn't have everything in one compact, small housing.

The only drawback I noticed: you need an additional stacking header if you want to plug the terminal onto the GPIO pins; otherwise it won't fit on top of a Raspberry Pi 4.

I am thinking of buying a Pico for a project I'm working on, but I can't figure out how to use an I2C LCD screen with it. On the product page for the Pico there is a picture of the Pico with a screen attached to it:

My question is: what is the simplest way to program a 16x2 LCD screen like the one in the picture with MicroPython, and how would I go about doing so? Thanks in advance for any advice.

There are C examples for exactly that display in the pico-examples tree, and MicroPython should also be pretty easy. The main issue is making sure you have a 3.3 V device rather than a 5 V one, or using level shifters to compensate.

The modules I use have a jumper for the 5 V feed to the backlight. Pull the jumper and feed the display with 5 V from the Pi. The electronics can run on 3V3 and take the I2C lines from the Pi, and the unit will work perfectly.

I found a datasheet for a 2004A LCD display that gave character code 253 for °, but I haven't been able to work out how to send it (LCD.display(253, c=16, l=1) just displays "253").

I don't know how to do this yet, but I could look into it. So it's a degree (°) symbol you are trying to display? Normally I would just use an asterisk for that.

The lcd_api.py file specifies several methods for putting characters: lcd.putstr(), lcd.putchar() and lcd.custom_char(). I don't know how they work yet, but you could look at that file and the comments attached to the commands.
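Two approaches seem worth trying, assuming the lcd_api.py file mentioned above behaves like the one in dhylands' python_lcd, where putchar() writes ord(char) to the display. First, lcd.putchar(chr(253)) should send the raw code 253, if that really is the degree sign in your display's character ROM. Second, you can define the symbol yourself with custom_char(location, charmap), where charmap holds eight 5-bit row patterns. The bitmap below is one such definition; the usage lines are comments because they need an `lcd` object created by that library on the Pico.

```python
# Degree sign as a 5x8 custom character: one byte per pixel row, top to bottom.
# Only the low 5 bits of each byte are used (bit 4 = leftmost pixel).
DEGREE = bytearray([
    0b00110,
    0b01001,
    0b01001,
    0b00110,
    0b00000,
    0b00000,
    0b00000,
    0b00000,
])

# Usage on the Pico (assumes `lcd` was created by the I2C LCD library):
# lcd.custom_char(0, DEGREE)   # store the glyph in CGRAM slot 0
# lcd.putstr("Temp: 21")
# lcd.putchar(chr(0))          # character codes 0-7 print the CGRAM glyphs
# lcd.putstr("C")
#
# Or, if the ROM really has a degree sign at code 253:
# lcd.putchar(chr(253))
```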

I took the address from i2c.scan() and used it in the code: i2c.writeto(39, "hello"). But nothing happens. If I connect another I2C LCD and run i2c.scan(), I get exactly the same address, 39. If I connect two I2C LCDs, only the one address, 39, is reported.

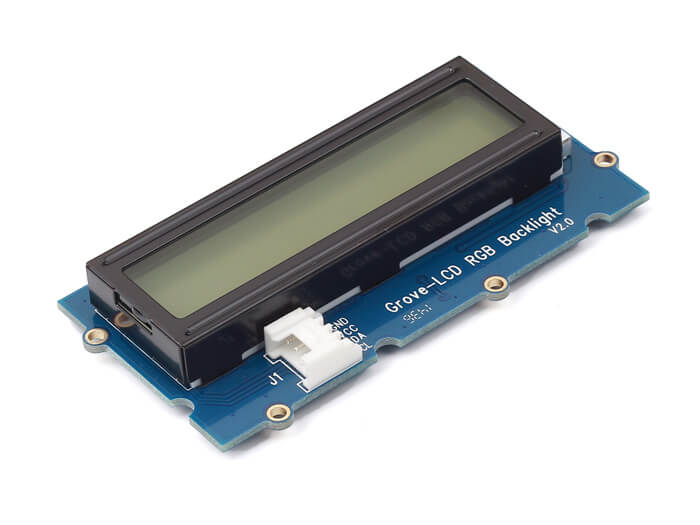

Hey Andrew, those code examples are actually designed for the Seeed Studio Grove RGB LCD. It requires a sequence of hex codes to initialize the display and has a different I2C controller. If you can tell me which LCD you have or attach a picture of it, I can help you with the code you would need. As I commented previously, most of the I2C LCD backpacks sold on Amazon use a PCF8574, and they can be controlled by the code I linked on GitHub.
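This also explains why i2c.writeto(39, "hello") shows nothing: a PCF8574 backpack simply copies each byte it receives onto its eight output pins, so the LCD has to be driven in 4 bit mode, one nibble at a time, with the E line pulsed for each nibble. The sketch below computes those bytes. The pin mapping is an assumption (it is the common one on cheap backpacks, but boards vary), and a real driver such as the library linked in this thread also sends an initialisation sequence first.

```python
# Assumed PCF8574 pin mapping (common on cheap backpacks, but not universal):
# P0 = RS, P1 = RW (kept low for writes), P2 = E, P3 = backlight, P4..P7 = D4..D7.
RS = 0x01
ENABLE = 0x04
BACKLIGHT = 0x08


def frames_for_byte(value, is_data=True, backlight=True):
    """Return the PCF8574 bytes that send one 8-bit value in 4-bit mode.

    Each nibble is written twice, first with E high and then with E low,
    because the HD44780 latches the data lines on the falling edge of E.
    """
    flags = (RS if is_data else 0) | (BACKLIGHT if backlight else 0)
    frames = []
    for nibble in (value >> 4, value & 0x0F):  # high nibble first
        base = (nibble << 4) | flags
        frames += [base | ENABLE, base]
    return bytes(frames)


# On the Pico this might be sent with i2c.writeto(39, frames_for_byte(ord("A"))),
# but only after the display has been switched to 4-bit mode (not shown here).
```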

To answer the question you originally asked as well: the address is in decimal (39 is 0x27 in hex), and you would have to change it on one of the displays to tell which one you are communicating with.
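On a PCF8574 backpack, the address is set by the chip's A0-A2 pins: the PCF8574 has base address 0x20 and the three pins supply the low three bits, while the PCF8574A variant starts at 0x38. On typical backpacks these pins are pulled high and brought out to solder jumpers, which is why an unmodified board reports 0x27 (39). A small helper, hypothetical and assuming that layout:

```python
PCF8574_BASE = 0x20   # PCF8574:  addresses 0x20..0x27
PCF8574A_BASE = 0x38  # PCF8574A: addresses 0x38..0x3F


def backpack_address(a0, a1, a2, variant_a=False):
    """7-bit I2C address for the logic levels on pins A0..A2 (1 = high)."""
    base = PCF8574A_BASE if variant_a else PCF8574_BASE
    return base | (a2 << 2) | (a1 << 1) | a0
```

So bridging the A0 jumper on one of the two boards (pulling A0 low) should move that display to 38 (0x26), after which i2c.scan() ought to report both addresses.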

A BIG THANK YOU, Tyler: between you and dhylands, I have managed to get the Pico to drive the LCD. Where I was going wrong was assuming that all I2C LCDs are the same. The chapter on I2C in "Getting Started with MicroPython on Raspberry Pi Pico" makes no mention that you need a specific I2C LCD (which is expensive and difficult to get hold of).

The code they publish just won't work with any other LCD. So here is what I have learnt: most I2C LCDs run at 5 V, that is to say the data coming into the LCD has to be at 5 V. The Pico's data lines are at 3.3 V, so you need an additional bit of hardware, a 3.3 V to 5 V level translator. You also need some additional code: https://github.com/t-622/rpi-pico-i2c-lcd

I had the same problem. Sadly, the serial LCD used in the book is not your usual Hitachi character LCD with an I2C backpack on it. It is a Sparkfun product with an AVR microcontroller that handles the I2C interface and also takes care of the complexity of programming the Hitachi display. I discovered this the hard way, after some happy hours of sending instructions to the LCD and getting unusual results. I've used the cheap I2C backpacks in quite a few projects on different hardware over the years, but this is the first time I've had problems. Given that I'm a beginner at Python (though not at programming), I initially thought it was me.

It's also worth noting that the SerLCD is quite hard to find in the U.K. Mouser U.K. have it, but it is £22.82, about four times the price of a standard LCD and backpack from Amazon.

I'm going to give the library mentioned above a try with my collection of elderly Hitachi LCDs. If anyone wants to understand how they work, I commend "How to use intelligent LCDs" by Julyan Ilett, which was published in "Everyday Practical Electronics" in 1997. It's in two parts, and the PDF is free from a number of sites.

Once I'd found a missing "import machine" statement in the test script, T622's library works very well. Many thanks. I'm using a cheap I2C backpack. I'm powering the LCD from a separate 5 volt supply and connecting the data lines directly to the Pico without a voltage converter, but with a common earth. I'm pretty sure the I2C hardware specifications permit 3V3 for the data lines. Either way, mine works.

I bought an LCD2004A from Amazon and tried the code in the "Getting Started with MicroPython on Raspberry Pi Pico" book. I had no luck, so I went looking for drivers and found one that didn't work with the Pico; I messed about with it a little and managed to get it to work.

The Seeed Studio 7" display has a resolution of 720 × 1280 px and a wide-viewing-angle IPS panel. It works with the Raspberry Pi 3B+, 3B, 2B, 1B+, 1B, 1A, Zero and Zero W via HDMI and USB cables.

The screen is small, lightweight and easy to carry, and it is compatible with most platforms that have HDMI. In addition, the display has an adjustable backlight, controlled with the buttons on the back.

To run the screen, connect it to the Raspberry Pi with the supplied cables (HDMI and micro USB). The screen works with the latest Raspbian operating system without installing any drivers. However, for the picture to display properly, you should change the resolution in the configuration file: open config.txt and add the following statements at the end.

Note that for non-3D GUI code no extra definitions are required in the CMakeLists.txt file. For wireframe OpenGL GUI code the following is needed to set some #define values:

The VEE_* lines are optional. By default, the virtual EEPROM code uses a payload size of 4 bytes, but the calibration code works with a size of 1, 2, 3 or 4 bytes, so this can be set to whatever size suits the application if it will store other values. If no other values will be saved, leave it at 4, as that is the most efficient size. The VEE_VALID_BUFFER_MARKER should be different for each application so that values left in the Pico by a previous project are not misinterpreted.

If you plan on using an LCD with your Raspberry Pi, there’s a good chance you’ll need to program it in Python at some point. Python is probably the most popular programming language for coding on the Raspberry Pi, and many of the projects and examples you’ll find are written in Python.

In this tutorial, I'll show you how to connect your LCD and program it in Python, using the RPLCD library. I'll start by showing you how to connect it in either 8 bit mode or 4 bit mode. Then I'll explain how to install the library, and provide examples for printing and positioning text, clearing the screen, and controlling the cursor. I'll also give you examples for scrolling text, creating custom characters, printing data from a sensor, and displaying the date, time, and IP address of your Pi.

You can also connect the LCD via I2C, which uses only two wires, but it requires some extra hardware. Check out our article, How to Setup an I2C LCD on the Raspberry Pi to see how.

There are two ways to connect the LCD to your Raspberry Pi: in 4 bit mode or 8 bit mode. 4 bit mode uses 6 GPIO pins, while 8 bit mode uses 10. Since it uses fewer pins, 4 bit mode is the most common method, but I'll explain how to set up and program the LCD both ways.

Each character and command is sent to the LCD as a byte (8 bits) of data. In 8 bit mode, the byte is sent all at once through 8 data wires, one bit per wire. In 4 bit mode, the byte is split into two sets of 4 bits – the upper bits and lower bits, which are sent one after the other over 4 data wires.
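To make the difference concrete, here is a sketch of how one byte maps onto the data pins in each mode. It only computes pin levels; the E-pin strobing and timing that a real driver such as RPLCD handles are left out.

```python
def to_nibbles(value):
    """Split one byte into (upper, lower) halves, in the order 4 bit mode sends them."""
    return (value >> 4) & 0x0F, value & 0x0F


def pin_levels(nibble):
    """Logic levels for data pins D4..D7 (bit 0 of the nibble goes to D4)."""
    return [(nibble >> bit) & 1 for bit in range(4)]


def pin_levels_8bit(value):
    """Logic levels for D0..D7 when the whole byte is sent at once."""
    return [(value >> bit) & 1 for bit in range(8)]


# 'H' is 0x48: 4 bit mode puts 0x4 and then 0x8 on D4..D7,
# while 8 bit mode puts all eight bits on D0..D7 in one go.
upper, lower = to_nibbles(ord("H"))
```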

Theoretically, 8 bit mode transfers data about twice as fast as 4 bit mode, since the entire byte is sent all at once. However, the LCD driver takes a relatively long time to process the data, so no matter which mode is being used, we don’t really notice a difference in data transfer speed between 8 bit and 4 bit modes.

If this is your first time writing and running a Python program, you might want to read How to Write and Run a Python Program on the Raspberry Pi, which will explain everything you need to know to run the examples below.

The RPLCD library can be installed from the Python Package Index (PyPI) with pip. It might already be installed on your Pi; if not, enter `pip install RPLCD` at the command prompt to install it.

The example programs below use the Raspberry Pi’s physical pin numbers, not the BCM or GPIO numbers. I’m assuming you have your LCD connected the way it is in the diagrams above, but I’ll show you how to change the pin connections if you need to.

Let's start with a simple program that displays "Hello world!" on the LCD. If your LCD is a different size than the 16×2 I'm using (like a 20×4), change the number of columns and rows in line 2 of the code: cols= sets the number of columns, and rows= sets the number of rows. You can also change the pins used for the LCD's RS, E, and data pins. The data pins are set as pins_data=[D0, D1, D2, D3, D4, D5, D6, D7] in 8 bit mode, or pins_data=[D4, D5, D6, D7] in 4 bit mode.

The text can be positioned anywhere on the screen using lcd.cursor_pos = (ROW, COLUMN). The rows are numbered starting from zero, so the top row is row 0, and the bottom row is row 1. Similarly, the columns are numbered starting at zero, so for a 16×2 LCD the columns are numbered 0 to 15. For example, the code below places “Hello world!” starting at the bottom row, fourth column:
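Under the hood, a driver for an HD44780-compatible LCD turns a cursor position into a "set DDRAM address" command (0x80 plus the address). The sketch below shows that translation; the row offsets are the ones commonly used for these displays and are an assumption for other controllers. For the example above, (1, 3) on a 16×2 LCD works out to 0xC3.

```python
def ddram_command(row, col, cols=16):
    """Set-DDRAM-address command byte for cursor position (row, col).

    Assumes the usual HD44780 layout: rows 0 and 1 start at 0x00 and 0x40,
    and on 4-line displays rows 2 and 3 continue them at offset `cols`.
    """
    offsets = (0x00, 0x40, cols, 0x40 + cols)
    if not 0 <= col < cols:
        raise ValueError("column out of range")
    return 0x80 | (offsets[row] + col)
```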

The RPLCD library provides several functions for controlling the cursor. You can have a block cursor, an underline cursor, or a blinking cursor. Use the following functions to set the cursor:

Text will automatically wrap to the next line if the length of the text is greater than the column length of your LCD. You can also control where the text string breaks to the next line by inserting \n\r where you want the break to occur. The code below will print “Hello” to the top row, and “world!” to the bottom row.

This program will print the IP address of your ethernet connection to the LCD. To print the IP of your WiFi connection, just change eth0 in line 19 to wlan0:
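One way to get the address, not necessarily the one the original program uses, is to parse the output of the `ip addr show` command. The parser is kept separate so it can be tested without a network interface; the returned string can then be passed to lcd.write_string().

```python
import subprocess


def parse_ipv4(ip_output):
    """Return the first IPv4 address in `ip addr show` output, or None."""
    for line in ip_output.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0] == "inet":
            return fields[1].split("/")[0]  # drop the prefix length, e.g. /24
    return None


def get_ip(interface="eth0"):
    """Query an interface with the `ip` command (use "wlan0" for WiFi)."""
    result = subprocess.run(["ip", "addr", "show", interface],
                            capture_output=True, text=True)
    return parse_ipv4(result.stdout)
```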

Each character on the LCD is a 5×8 array of pixels. You can create any pattern or character you can think of and display it on the screen as a custom character. Check out this website for an interactive tool that creates the bit array used to define custom characters.

First we define the character in lines 4 to 12 of the code below. Then we use the function lcd.create_char(0-7, NAME) to store the character in the LCD's CGRAM memory. Up to 8 characters (0-7) can be stored at a time. To print the custom character, we use lcd.write_string(unichr(0)), where the number in unichr() is the memory location (0-7) defined in lcd.create_char(). Note that unichr() only exists in Python 2; in Python 3, use chr() instead.
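If you'd rather not work out the bit values by hand, a small helper can turn "ASCII art" into the tuple of eight row values that lcd.create_char() takes. The helper and the smiley pattern are illustrative, not part of RPLCD.

```python
def glyph_from_art(rows):
    """Convert 8 strings of 5 chars ('#' = pixel on) into a 5x8 bitmap tuple."""
    if len(rows) != 8 or any(len(r) != 5 for r in rows):
        raise ValueError("expected 8 rows of 5 characters")
    # Leftmost character becomes bit 4, rightmost becomes bit 0.
    return tuple(int("".join("1" if c == "#" else "0" for c in r), 2) for r in rows)


SMILEY = glyph_from_art([
    ".....",
    ".#.#.",
    ".#.#.",
    ".....",
    "#...#",
    ".###.",
    ".....",
    ".....",
])
```

With RPLCD, `lcd.create_char(0, SMILEY)` stores it in slot 0, and `lcd.write_string(chr(0))` prints it under Python 3.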

To demonstrate how to print data from a sensor, here’s a program that displays the temperature from a DS18B20 Digital Temperature Sensor. There is some set up to do before you can get this to work on the Raspberry Pi, so check out our tutorial on the DS18B20 to see how.

In general, you take the input variable from your sensor and convert it to an integer to perform any calculations. Then convert the result to a string, and output the string to the display using lcd.write_string(sensor_data()):
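Here is a sketch of that integer-to-string path for the DS18B20, assuming the 1-Wire kernel driver is set up as in that tutorial, so each sensor appears as a w1_slave file under /sys/bus/w1/devices/. The parsing is separated from the file access so it can be tested without a sensor; the original program may organize this differently.

```python
def parse_w1_slave(text):
    """Temperature in degrees C from a DS18B20 w1_slave file, or None on a bad read.

    The first line ends in YES when the CRC check passed; the second line
    carries the reading as an integer in thousandths of a degree ("t=23125").
    """
    lines = text.strip().splitlines()
    if len(lines) < 2 or not lines[0].endswith("YES"):
        return None
    _, sep, raw = lines[1].partition("t=")
    if not sep:
        return None
    return int(raw) / 1000.0


def format_temp(celsius):
    """String for the LCD, e.g. 'Temp: 23.1 C'."""
    return "Temp: {:.1f} C".format(celsius)
```

The result of format_temp() can be passed straight to lcd.write_string().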

Well, that about covers most of what you’ll need to get started programming your LCD with Python. Try combining the programs to get some interesting effects. You can display data from multiple sensors by printing and clearing the screen or positioning the text. You can also make fun animations by scrolling custom characters.

The world of micro-controllers and maker creations is a vast and ever-growing one that can be super intimidating to newcomers. Should you start with an Arduino? A Raspberry Pi? What’s an ESP something something? How do you add a screen? Sensors? The team at Seeed Studio are trying to make things easier and their Wio Terminal looks like a nice option for those who want to start with a screen and some sensors.

I’ve got some crazy schemes in mind for the Wio Terminal, which we’ll be experimenting with in an upcoming Dev Diner Twitch stream once it ships (should be shipping mid-April!). So, what is this curious device?

“Wio Terminal is a simple and tiny device to build I/O with the physical world. It is an ATSAMD51-based microcontroller with wireless connectivity supported by Realtek RTL8720DN.” — Seeed Studio

In other words — it’s a really nice and easy way to have an Arduino/Raspberry Pi/MicroPython project with a screen, accelerometer, microphone, buzzer and all sorts of things in one neatly packaged device!

Fully Open Source Manufacturing – Injection Molding of Wio Terminal: the Seeed Studio team are publicising each phase of the development of the Wio Terminal! In this post, you can see their injection moulding process.

Thank you to Seeed Studio for providing a bunch of info and a lovely coupon code! If you’d like to get a free Wio Link with your Wio Terminal, order before April 15th and use the code FREEWIO.

Each successive generation of Raspberry Pi has brought something new to the table. The latest release, the Raspberry Pi 4, is no exception, upgrading the low-cost single-board computer to include true gigabit Ethernet connectivity, a high-performance 64-bit central processor, more powerful graphics processor, and up to 4GB of RAM.

Even with these impressive-for-the-price specifications, though, there’s something the Raspberry Pi can’t easily do unaided: deep learning and other artificial intelligence workloads. With an explosion of interest in AI-at-the-edge, though, there’s a market for Raspberry Pi add-ons which offer to fill in the gap - and the Grove AI HAT is just such a device, billed by creator Seeed Studio as ideal for AI projects in fields from hobbyist robotics to the medical industry.

Before getting into the nitty gritty of the Grove AI HAT itself, there’s something that makes it stand out from competition like Google’s Coral AI Accelerator - a USB-connected variant of the company’s in-house Tensor Processing Unit (TPU) chips, designed to accelerate deep-learning performance on any device with a USB port - and that’s the instruction set architecture (ISA) in use. Where a lot of accelerator devices - known in the early days of computing as co-processors, or coppers - are either based on graphics processing unit (GPU) technology or proprietary chip designs, the Grove AI HAT uses something different: RISC-V.

Originally developed at the University of California, Berkeley, RISC-V - pronounced “risk five,” with RISC standing for “reduced instruction set computing” - isn’t very old: the first version of the ISA was released in 2010. It’s generating considerable interest, though, and for a very good reason: it’s free-as-in-speech, licensed in such a way that anyone is able to build a processor from the specification, modified or otherwise, and sell it. With licenses for proprietary ISAs like x86 and Arm - holding the majority shares of the mainstream computing and smartphone markets respectively - costing millions of dollars in fees plus royalties on top, that’s a tempting proposition.

Early adopters of RISC-V were, naturally, academics. In the years since its public release, though, it has won considerable support in the industry: Western Digital, which has released its own RISC-V core under a permissive license, uses RISC-V for storage processing products; Nvidia for logic chips in its graphics processing products plus as an IO core for its high-performance prototype research processor RC18; Rambus has baked RISC-V into some of its security products; and human-machine interface specialist Synaptics has begun shifting to RISC-V parts too.

RISC-V, its proponents claim, scales remarkably well. Implementations of the ISA are being developed by teams around the world for everything from ultra-low-power embedded microcontrollers to many-core chips aimed at high-performance computing (HPC). Some are released under permissive licenses, just like the ISA itself; others remain proprietary, a freedom of choice permitted by the terms of the RISC-V license.

The reason for the modern history lesson: the Grove AI HAT is based on the RISC-V ISA, courtesy of a Kendryte K210 system-on-chip hidden under a metal heatspreader. This SoC includes two 64-bit RISC-V processor cores running at 400MHz or 600MHz depending on user preference and a custom “Kendryte Processing Unit” (KPU) co-processor which offers a claimed performance of 230 billion matrix multiplication operations per second (GMULps).

The Kendryte K210 has then been taken on by a company called Sipeed, which built what it calls the Maix M1 AI Module around it. This unlocks the functionality of the K210 SoC and adds a few features of its own, providing access to a fast Fourier transform accelerator and an audio co-processor capable of driving eight microphones simultaneously.

It’s this module that Seeed Studio, the third company to be involved in the development of this particular product, has taken to power the Grove AI HAT itself. Seeed’s contribution: a carrier board for the module which connects to a Raspberry Pi via the GPIO header and includes six Grove-format connectors for external hardware - of which one offers digital input/output (IO), one pulse-width modulated (PWM) IO, one access to an I²C bus, one a UART bus, and two analogue-to-digital conversion (ADC). There’s also a connector for an LCD display, and another for a camera, plus a USB Type-C connector, and a single built-in microphone.

The hardware side of installation is straightforward: a bundled booster header slides through a passthrough header on the Grove AI HAT, then mates with the Raspberry Pi’s GPIO header. Mounting holes matching those of a full-size Raspberry Pi board are included in the HAT’s design, but standoffs are not supplied; the full-size HAT rests on the USB ports, however, remaining fairly stable in use.

The booster header’s pins are lengthy and provide access to the unused GPIO pins for adding additional hardware, which is handy. What’s less handy is the documentation, which fails to make it clear which pins are used by the AI HAT and which are available for use with alternative hardware.

The documentation does, however, walk through the installation of the software required to use the Grove AI HAT: the Arduino IDE. The first two example projects on the Seeed Studio site are based purely on using the Grove aspect of the HAT, turning it into a RISC-V-based microcontroller. They also reveal a somewhat surprising aspect of the HAT: unlike most Raspberry Pi add-on boards, it’s entirely functional as a standalone microcontroller board.

Using the Grove AI HAT with the Arduino IDE is no more difficult than using any other non-standard Arduino-compatible board: a custom board definition is loaded into the IDE’s settings screen, then the complete toolchain downloaded. Programs written for the Grove AI HAT in the Arduino IDE look, by and large, no different from any other Arduino program, and are uploaded in the same way: via the USB port, which both powers the board and provides two-way data communication.

The only AI-focused project for the Grove AI HAT, at the time of writing, starts off in a promising manner: it details how to use the board as an actual Raspberry Pi add-on, rather than a standalone development board, to detect faces in a video stream and provide a running tally. It’s fairly basic stuff, but the Sipeed Maix M1 AI Module isn’t exactly a powerhouse: the board only includes 6MB of static RAM plus an additional 2MB dedicated to the KPU co-processor.

Anyone who has bought the bare board is in for a nasty surprise: the project mandates the use of a custom camera module and small LCD panel - optional extras priced at $7.60 and $6.90 respectively - which connect to the ports on the Grove AI HAT itself. Without these, there’s no way to complete the build - which leads onto the next issue.

For all that it’s sold as a HAT - an acronym standing for Hardware Attached on Top, a standard put together by the Raspberry Pi Foundation which mandates both the shape and size of a given board along with the presence of an EEPROM through which the board can communicate its capabilities to the Raspberry Pi - the Grove AI HAT is remarkably disconnected from the Raspberry Pi itself. The reason the face-recognition project requires a special camera is that the HAT has no way to access the video feed from a Raspberry Pi Camera Module hooked into the Pi’s CSI connector; the LCD panel, meanwhile, is needed as there’s no way to feed video from the Grove AI HAT’s own camera connector to the Raspberry Pi’s display connectors.

The disconnect doesn’t only exist in hardware, either. At the time of writing, Kendryte - responsible for the K210 SoC Sipeed used to build the Maix M1 AI Module which Seeed Studio then used to build the Grove AI HAT - hadn’t released a toolchain for Arm processors. The result: while you can install the Arduino IDE on the Raspberry Pi, you can’t compile any programs for the K210 nor upload them to the Grove AI HAT. This is made clear in the face recognition project instructions, where programming of the HAT needs to take place on a traditional x86 desktop or laptop prior to its connection to the Raspberry Pi.

The actual AI aspect, in fact, comes straight from Kendryte’s GitHub repository. Seeed Studio’s documentation doesn’t even attempt to describe how it works: instead, it concentrates purely on how to compile and upload the pre-written face recognition project to the Grove AI HAT. A separate instruction page details how to use a modified version of the project with a custom counter program that runs on the Raspberry Pi - and which is supplied as a precompiled binary, making it entirely useless as a means of learning how to write your own programs for the Grove AI HAT.

The best you can say about the demo application is that it’s functional: it does, indeed, recognize faces as they are presented to the camera, ringing them in the live video feed on the LCD and notifying the Raspberry Pi that another face has been seen so it can increment its counter. It’s just that’s all it does: you can’t transfer a still or video image from the HAT to the Raspberry Pi, and with no storage and only 8MB total RAM on the HAT expanding the project to do something useful like record a clip of visitors or match faces to a recognition database isn’t an option. In short, it’s more a face counter than a facial recognition program.

Without an Arm toolchain from Kendryte, there’s no way to program the Grove AI HAT from a Raspberry Pi. The only AI-focused project on Seeed’s site handily demonstrates the limitations even when you’re happy to program the HAT from a different computer, too: the Raspberry Pi is left doing nothing other than incrementing a counter, oblivious to the video feed or AI workload being carried out on the Grove AI HAT.

There’s also no way to access the K210 directly from the Raspberry Pi, so the Grove AI HAT can’t be used as a true accelerator or co-processor, nor to accelerate common workloads like TensorFlow and Caffe. Seeed’s engineers say they’re looking into the possibility, but it’s likely to hinge on Kendryte deciding to develop an Arm-compatible toolchain, and even then it will be sorely constrained by the slow peak throughput available from the Raspberry Pi’s GPIO header.

As a standalone development board, the Grove AI HAT is more interesting. Divorced from any promise of bringing AI acceleration to the Raspberry Pi, the Grove AI HAT is a low-cost board - though don’t forget to factor in the proprietary camera and LCD panel add-ons - with some very interesting hardware on it. It’s not the only K210-based board out there, though, and certainly not the cheapest - even from Seeed Studio itself - and has no on-board networking for communication with the outside world.

It’s the documentation that’s the real let-down, however. There’s no attempt made to walk the user through actually creating their own AI programs, simply downloading and running someone else’s - and providing the Raspberry Pi half of the face recognition project as a precompiled binary means you can’t even take a look at the code and try to figure it out yourself, short of reverse-engineering it.

For anyone looking to accelerate traditional deep-learning workloads on the Raspberry Pi, the Grove AI HAT is useless; look into Google’s admittedly considerably more expensive Coral USB Accelerator instead, or even abandon the Raspberry Pi platform and move to Nvidia’s AI-focused Jetson Nano.

Ms.Josey