
Riel, H. et al. Tuning the emission characteristics of top-emitting organic light-emitting devices by means of a dielectric capping layer: an experimental and theoretical study. J. Appl. Phys. 94, 5290–5296 (2003).

Cheng, D. W. et al. Design of an optical see-through head-mounted display with a low f-number and large field of view using a freeform prism. Appl. Opt. 48, 2655–2668 (2009).

Benitez, P. et al. Advanced freeform optics enabling ultra-compact VR headsets. In Proc. SPIE 10335, Digital Optical Technologies (SPIE, Germany, 2017).

Gagnon, H. C. et al. Gap affordance judgments in mixed reality: testing the role of display weight and field of view. Front. Virtual Real. 2, 654656 (2021).

Chang, K. D. et al. A hybrid simulated method for analyzing the optical efficiency of a head-mounted display with a quasi-crystal OLED panel. Opt. Express 22, A567–A576 (2014).

Käläntär, K. A directional backlight with narrow angular luminance distribution for widening the viewing angle for an LCD with a front-surface light-scattering film. J. Soc. Inf. Disp. 20, 133–142 (2012).

Hoffman, D. M., Stepien, N. N. & Xiong, W. The importance of native panel contrast and local dimming density on perceived image quality of high dynamic range displays. J. Soc. Inf. Disp. 24, 216–228 (2016).

Kikuchi, S. et al. Thin mini-LED backlight using reflective mirror dots with high luminance uniformity for mobile LCDs. Opt. Express 29, 26724–26735 (2021).

Song, S. J. et al. Deep-learning-based pixel compensation algorithm for local dimming liquid crystal displays of quantum-dot backlights. Opt. Express 27, 15907–15917 (2019).

Deng, M. Y. et al. Reducing power consumption of active-matrix mini-LED backlit LCDs by driving circuit. IEEE Trans. Electron Devices 68, 2347–2354 (2021).

Chang, C. L. et al. Toward the next-generation VR/AR optics: a review of holographic near-eye displays from a human-centric perspective. Optica 7, 1563–1578 (2020).

Isomae, Y. et al. Design of 1-μm-pitch liquid crystal spatial light modulators having dielectric shield wall structure for holographic display with wide field of view. Opt. Rev. 24, 165–176 (2017).

Isomae, Y. et al. Alignment control of liquid crystals in a 1.0-μm-pitch spatial light modulator by lattice-shaped dielectric wall structure. J. Soc. Inf. Disp. 27, 251–258 (2019).

Moser, S., Ritsch-Marte, M. & Thalhammer, G. Model-based compensation of pixel crosstalk in liquid crystal spatial light modulators. Opt. Express 27, 25046–25063 (2019).

Persson, M., Engström, D. & Goksör, M. Reducing the effect of pixel crosstalk in phase only spatial light modulators. Opt. Express 20, 22334–22343 (2012).

Shi, L. et al. Near-eye light field holographic rendering with spherical waves for wide field of view interactive 3D computer graphics. ACM Trans. Graph. 36, 236 (2017).

Lavrentovich, M. D., Sergan, T. A. & Kelly, J. R. Switchable broadband achromatic half-wave plate with nematic liquid crystals. Opt. Lett. 29, 1411–1413 (2004).

He, Z., Nose, T. & Sato, S. Diffraction and polarization properties of a liquid crystal grating. Jpn. J. Appl. Phys. 35, 3529–3530 (1996).

Yi, Y. et al. Alignment of liquid crystals by topographically patterned polymer films prepared by nanoimprint lithography. Appl. Phys. Lett. 90, 163510 (2007).

Schadt, M., Seiberle, H. & Schuster, A. Optical patterning of multi-domain liquid-crystal displays with wide viewing angles. Nature 381, 212–215 (1996).

Lee, Y. H., Zhan, T. & Wu, S. T. Enhancing the resolution of a near-eye display with a Pancharatnam–Berry phase deflector. Opt. Lett. 42, 4732–4735 (2017).

Martínez-Corral, M. & Javidi, B. Fundamentals of 3D imaging and displays: a tutorial on integral imaging, light-field, and plenoptic systems. Adv. Opt. Photonics 10, 512–566 (2018).

Chigrinov, V. G., Kozenkov, V. M. & Kwok, H. S. Photoalignment of Liquid Crystalline Materials: Physics and Applications (Hoboken: John Wiley & Sons, 2008).

Schadt, M. et al. Surface-induced parallel alignment of liquid crystals by linearly polymerized photopolymers. Jpn. J. Appl. Phys. 31, 2155–2164 (1992).

Bai, B. F. et al. Optimization of nonbinary slanted surface-relief gratings as high-efficiency broadband couplers for light guides. Appl. Opt. 49, 5454–5464 (2010).

Äyräs, P., Saarikko, P. & Levola, T. Exit pupil expander with a large field of view based on diffractive optics. J. Soc. Inf. Disp. 17, 659–664 (2009).

Gu, Y. C. et al. Holographic waveguide display with large field of view and high light efficiency based on polarized volume holographic grating. IEEE Photonics J. 14, 7003707 (2022).

Shi, Z. J., Chen, W. T. & Capasso, F. Wide field-of-view waveguide displays enabled by polarization-dependent metagratings. In Proc. SPIE 10676, Digital Optics for Immersive Displays 1067615 (SPIE, France, 2018).

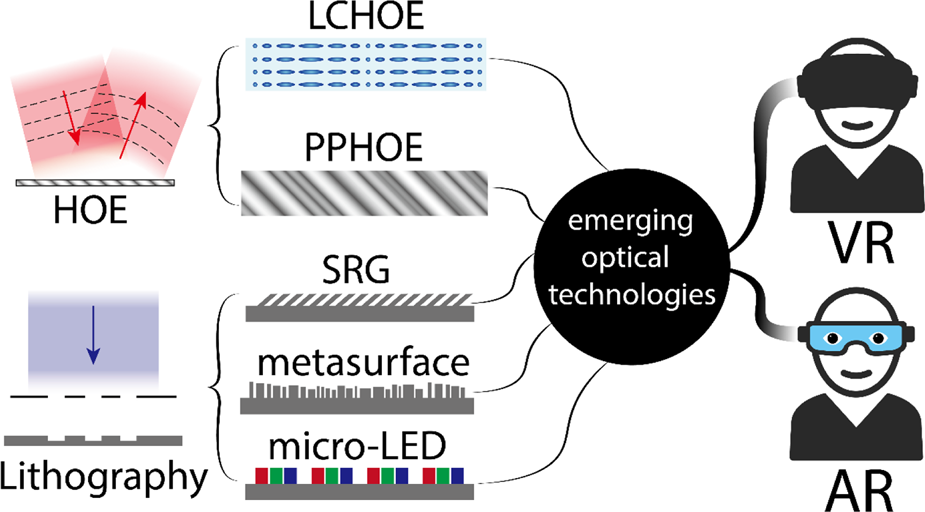

Unlike VR displays, which have a relatively fixed optical configuration, AR displays come in a vast number of architectures. Therefore, instead of organizing the discussion around individual challenges, a more appropriate way to review AR displays is to introduce each architecture separately and discuss its associated engineering challenges. An AR display usually consists of a light engine and an optical combiner. The light engine serves as the display image source, while the combiner delivers the displayed images to the viewer’s eye and at the same time transmits the environment light. Some performance parameters, like frame rate and power consumption, are mainly determined by the light engine. Parameters like FoV, eyebox, and MTF depend primarily on the combiner optics. Moreover, attributes like image brightness, overall efficiency, and form factor are influenced by both the light engine and the combiner. In this section, we first discuss the light engine, reviewing the latest advances in micro-LED on chip and comparing them with existing microdisplay systems. Then, we introduce two main types of combiners: the free-space combiner and the waveguide combiner.

The light engine determines several essential properties of the AR system, such as image brightness, power consumption, frame rate, and basic etendue. Several types of microdisplays have been used in AR, including micro-LED, micro-organic-light-emitting-diodes (micro-OLED), liquid-crystal-on-silicon (LCoS), digital micromirror device (DMD), and laser beam scanning (LBS) based on micro-electromechanical systems (MEMS). We first describe the working principles of these devices and then analyze their performance. For readers more interested in the final performance parameters than in the details, Table 1 provides a comprehensive summary.

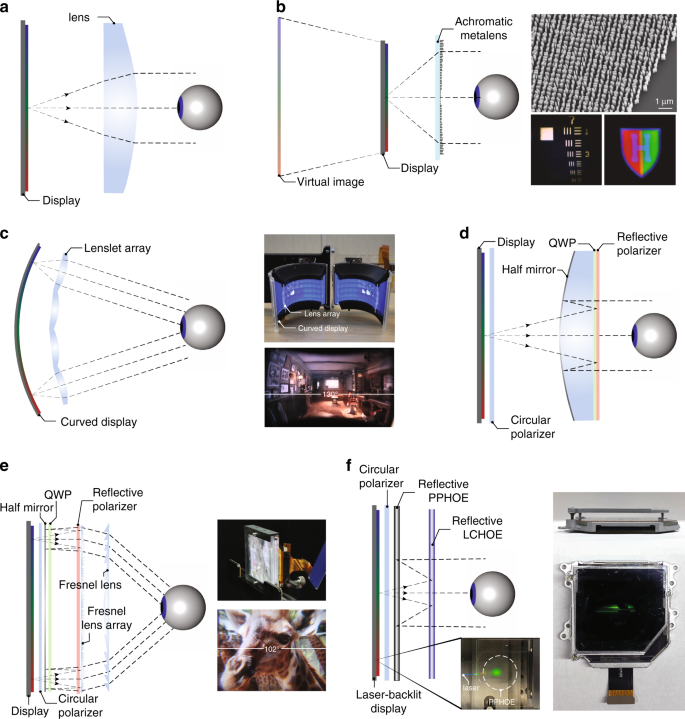

Micro-LED and micro-OLED are self-emissive display devices. They are usually more compact than LCoS and DMD because no illumination optics is required. The fundamentally different material systems of LED and OLED lead to different approaches to achieving full-color displays. Due to the “green gap” in LEDs, red LEDs are manufactured on a different semiconductor material from green and blue LEDs. Therefore, achieving full color in high-resolution-density microdisplays is quite a challenge for micro-LEDs. Among the several solutions under research, there are two main approaches. The first is to combine three separate red, green, and blue (RGB) micro-LED microdisplay panels with a trichroic prism (Fig. 7a).

Fig. 7: a RGB micro-LED microdisplays combined by a trichroic prism. b QD-based micro-LED microdisplay. c Micro-OLED display with 4032 PPI. Working principles of d LCoS, e DMD, and f MEMS-LBS display modules. Panels a–f reprinted from previously published work.

Another solution is to assemble color-conversion materials like quantum dots (QDs) on top of blue or ultraviolet (UV) micro-LEDs (Fig. 7b). The quantum dot color filter (QDCF) on top of the micro-LED array is mainly fabricated by inkjet printing or photolithography.

White OLED (WOLED) microdisplays use color filters to generate color images. Because no patterned deposition of organic materials is needed, a resolution density of up to 4000 PPI has been achieved (Fig. 7c). However, compared to RGB OLEDs, the color filters in WOLEDs absorb about 70% of the emitted light, which limits the maximum brightness of the microdisplay. To improve the efficiency and peak brightness of WOLED microdisplays, Sony proposed in 2019 to apply newly designed cathodes (InZnO) and microlens arrays to OLED microdisplays, which increased the peak brightness from 1600 nits to 5000 nits.

LCoS and DMD are light-modulating displays that generate images by controlling the reflection of each pixel. For LCoS, light modulation is achieved by manipulating the polarization state of the output light through independent control of the liquid crystal reorientation in each pixel (Fig. 7d). Both phase-only and amplitude modulators have been employed. DMD is an amplitude modulation device; modulation is achieved by controlling the tilt angle of bistable micromirrors (Fig. 7e). To generate an image, both LCoS and DMD rely on an illumination system with an LED or laser as the light source. For LCoS, a color image can be generated either by RGB color filters on the LCoS (with white LEDs) or by color-sequential addressing (with RGB LEDs or lasers). However, LCoS requires a linearly polarized light source. For an unpolarized LED light source, a polarization recycling system is usually employed.

MEMS-based LBS forms an image by scanning a collimated laser beam in two dimensions (Fig. 7f). Different gray levels are achieved by pulse width modulation (PWM) of the laser diodes. In practice, 2D scanning can be achieved either through a 2D scanning mirror or through two 1D scanning mirrors with an additional focusing lens after the first mirror. The small size of the MEMS mirror offers a very attractive form factor. At the same time, the output image has a large depth of focus (DoF), which is ideal for projection displays. One shortcoming, though, is that the small system etendue often hinders its application in some traditional display systems.

There are several important parameters for a light engine, including image resolution, brightness, frame rate, contrast ratio, and form factor. The resolution requirement (>2K) is similar for all types of light engines, and improvements in resolution usually come from the manufacturing process. Thus, here we focus on the remaining four parameters.

Image brightness usually refers to the measured luminance of a light-emitting object. This measurement, however, may not be accurate for a light engine, because the light from the engine only forms an intermediate image that is not directly viewed by the user. Moreover, focusing solely on the brightness of a light engine could be misleading for a wearable display system like AR. Data projectors with thousands of lumens are available today, but their power consumption is too high for a battery-powered wearable AR display. Therefore, a more appropriate way to evaluate a light engine’s brightness is its luminous efficacy (lm/W), obtained by dividing the final output luminous flux (lm) by the input electric power (W). For a self-emissive device like micro-LED or micro-OLED, the luminous efficacy is directly determined by the device itself. However, for LCoS and DMD, the overall luminous efficacy should take into account the luminous efficacy of the light source, the efficiency of the illumination optics, and the efficiency of the employed spatial light modulator (SLM). For a MEMS LBS engine, the efficiency of the MEMS mirror can be considered unity, so the luminous efficacy basically equals that of the employed laser sources.
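The efficacy bookkeeping described above can be sketched as a simple product of stage efficiencies. This is a minimal illustration, assuming each stage simply scales the flux; the function name `overall_efficacy` and all numerical values are hypothetical placeholders, not figures from Table 1.

```python
def overall_efficacy(source_lm_per_w: float, *stage_efficiencies: float) -> float:
    """Overall luminous efficacy (lm/W) of a light engine.

    The source efficacy is multiplied by the optical efficiency (0..1) of each
    downstream stage, e.g. the illumination optics and the SLM.
    """
    result = source_lm_per_w
    for eta in stage_efficiencies:
        if not 0.0 <= eta <= 1.0:
            raise ValueError(f"stage efficiency must lie in [0, 1], got {eta}")
        result *= eta
    return result


# Hypothetical values, for illustration only.
LED_EFFICACY = 100.0    # lm/W, assumed LED source
ILLUMINATION_EFF = 0.5  # assumed illumination optics (incl. polarization recycling)
SLM_EFF = 0.6           # assumed LCoS panel efficiency

lcos = overall_efficacy(LED_EFFICACY, ILLUMINATION_EFF, SLM_EFF)
print(f"LCoS engine: {lcos:.1f} lm/W")  # 100 * 0.5 * 0.6 -> 30.0 lm/W

# MEMS-LBS: the mirror efficiency is treated as unity, so the engine
# efficacy equals that of the laser source.
LASER_EFFICACY = 20.0   # lm/W, assumed
print(f"MEMS-LBS engine: {overall_efficacy(LASER_EFFICACY, 1.0):.1f} lm/W")
```

For a self-emissive micro-LED or micro-OLED panel, the same function would be called with no extra stages, since its efficacy is set by the device itself.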

As mentioned earlier, each light engine has a different scheme for generating color images. Therefore, we list the luminous efficacy of each scheme separately for a more inclusive comparison. For micro-LEDs, the situation is more complicated because, as previous studies have shown, the external quantum efficiency (EQE) depends on the chip size.

Another aspect of a light engine is the frame rate, which determines the volume of information it can deliver per unit time. A high volume of information is vital for constructing a 3D light field to solve the vergence-accommodation conflict (VAC). For micro-LEDs, the device response time is around several nanoseconds, which allows for visible light communication with bandwidth up to 1.5 Gbit/s.

Form factor is another crucial aspect of light engines for near-eye displays. Among self-emissive displays, both micro-OLEDs and QD-based micro-LEDs can achieve full color with a single panel, so they are quite compact. A micro-LED display with separate RGB panels naturally has a larger form factor. In applications requiring a direct-view full-color panel, the extra combining optics may also increase the volume. It should be pointed out, however, that the combining optics may not be necessary for some applications like waveguide displays, because the exit pupil expansion (EPE) process makes the system insensitive to the spatial positions of the input RGB images. Therefore, the form factor of using three RGB micro-LED panels is medium. For LCoS and DMD with RGB LEDs as the light source, the form factor would be larger due to the illumination optics. Still, if a lower luminous efficacy can be accepted, a smaller form factor can be achieved by using simpler optics.

Finally, contrast ratio (CR) also plays an important role in the observed images. Self-emissive micro-LEDs and micro-OLEDs can reach a CR of about 10⁶:1, because their pixels can be turned off completely. A laser beam scanner can also achieve a CR of 10⁶:1, because the laser can be turned off completely in the dark state. On the other hand, LCoS and DMD are reflective displays, and their CR is around 2000:1 to 5000:1.

The performance parameters of different light engines are summarized in Table 1. Micro-LEDs and micro-OLEDs have similar levels of luminous efficacy, but micro-OLEDs still face burn-in and lifetime issues when driven at high current, which to some extent hinders their use as high-brightness image sources. Micro-LEDs are still under active development, and improvements in luminous efficacy can be expected as the fabrication process matures. Both devices have nanosecond response times and can potentially achieve a high frame rate with a well-designed integrated circuit. The frame rate of the driving circuit ultimately determines the motion picture response time.

The term ‘free-space’ generally refers to the case in which light propagates freely in space, as opposed to a waveguide that traps light by total internal reflection (TIR). The combiner can be a partial mirror, as commonly used in AR systems based on traditional geometric optics. Alternatively, the combiner can be a reflective holographic optical element (HOE). The strong chromatic dispersion of an HOE necessitates a laser source, which usually leads to a Maxwellian-type system.

Several systems based on geometric optics are illustrated in Fig. 8. The simplest design uses a single freeform half-mirror (Fig. 8a), which can achieve a large FoV (up to 90°). Fig. 8b shows the birdbath design. Compared to the single-combiner design, the birdbath design has extra optics on the display side, which provide room for aberration correction. The integrated beam splitter provides a folded optical path, which reduces the form factor to some extent. Another way to fold the optical path is to use a TIR prism. Cheng et al. designed a freeform TIR prism (Fig. 8c) offering a diagonal FoV of 54° and an exit pupil diameter of 8 mm. All the surfaces are freeform, which offers excellent image quality. To cancel the optical power for the transmitted environment light, a compensator is added to the TIR prism. The whole system has a well-balanced performance among FoV, eyebox, and form factor. To free up the space in front of the viewer’s eye, relay optics can be used to form an intermediate image near the combiner (Fig. 8d). Although this design offers more optical surfaces for aberration correction, the extra lenses also add to the system weight and form factor.

Overall, AR displays based on traditional geometric optics have a relatively simple design with a decent FoV (~60°) and eyebox (8 mm) (Table S2). To estimate the efficiency of geometric combiners, we assume a half-mirror transmittance of 50% and an efficiency of 50% for the other optics. The resulting combiner efficiency is about 4200 nit/lm, which is high compared with waveguide combiners. Nonetheless, further shrinking the system size or improving system performance ultimately runs into the etendue conservation issue. In addition, it is hard for AR systems with traditional geometric optics to achieve a configuration resembling normal flat glasses, because the half-mirror has to be tilted to some extent.
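The nit/lm figure can be made concrete with a simple model in which the delivered flux is spread uniformly over the eyebox area and the FoV solid angle, since 1 nit = 1 lm/(sr·m²). This is a sketch under stated assumptions: the 8 mm × 8 mm square eyebox and 60° × 60° rectangular FoV are hypothetical choices, because the text does not specify the geometry behind its estimate, so the result agrees with the quoted ~4200 nit/lm only in order of magnitude.

```python
import math


def rect_solid_angle_sr(fov_h_deg: float, fov_v_deg: float) -> float:
    """Solid angle (sr) of a rectangular FoV with full angles fov_h x fov_v."""
    a = math.radians(fov_h_deg) / 2
    b = math.radians(fov_v_deg) / 2
    return 4 * math.asin(math.sin(a) * math.sin(b))


def combiner_efficiency_nit_per_lm(optical_eff: float, eyebox_w_mm: float, eyebox_h_mm: float,
                                   fov_h_deg: float, fov_v_deg: float) -> float:
    """Luminance at the eye (nit) per lumen of light-engine output, assuming the
    delivered flux is spread uniformly over the eyebox area and FoV solid angle.
    1 nit = 1 cd/m^2 = 1 lm / (sr * m^2)."""
    area_m2 = (eyebox_w_mm * 1e-3) * (eyebox_h_mm * 1e-3)
    return optical_eff / (area_m2 * rect_solid_angle_sr(fov_h_deg, fov_v_deg))


ETA = 0.5 * 0.5  # 50% half-mirror x 50% for the other optics, as assumed in the text
value = combiner_efficiency_nit_per_lm(ETA, 8.0, 8.0, 60.0, 60.0)  # hypothetical geometry
print(f"{value:.0f} nit/lm")  # about 3865 nit/lm for this geometry
```

Because the efficiency scales inversely with eyebox area times FoV solid angle, enlarging either one at fixed optical efficiency lowers the nit/lm value proportionally.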

The Maxwellian view, proposed by James Clerk Maxwell (1860), refers to imaging a point light source in the eye pupil (Fig. 9a). Because the image of the point source is much smaller than the eye pupil, the retinal image is always in focus regardless of the eye lens’s focus. For AR display applications, the point source is usually a laser with narrow angular and spectral bandwidths. LED light sources can also build a Maxwellian system by adding an angular filtering module.
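Why a narrow beam stays in focus can be checked with the standard geometric-optics estimate that the angular blur (in radians) is roughly the beam diameter at the pupil (in meters) times the accommodation error (in diopters). A minimal sketch, assuming that small-angle approximation and ignoring diffraction; the 0.5 mm beam width and 2 D defocus are hypothetical values.

```python
import math

ARCMIN_PER_RAD = 180 / math.pi * 60


def blur_arcmin(beam_diameter_mm: float, defocus_diopters: float) -> float:
    """Approximate angular diameter (arcmin) of the geometric retinal blur.

    Small-angle estimate: blur (rad) ~ beam diameter at the pupil (m)
    x accommodation error (diopters). Diffraction is ignored.
    """
    return beam_diameter_mm * 1e-3 * abs(defocus_diopters) * ARCMIN_PER_RAD


DEFOCUS_D = 2.0  # hypothetical: eye focused at 0.5 m, image at infinity
for label, d_mm in [("full 4 mm eye pupil", 4.0), ("0.5 mm Maxwellian beam", 0.5)]:
    print(f"{label}: {blur_arcmin(d_mm, DEFOCUS_D):.1f} arcmin blur")
```

The blur shrinks in proportion to the beam width (eightfold here), which is why the image appears sharp at any accommodation state; for very thin beams, diffraction, which this estimate omits, sets the lower limit.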

Fig. 9: a Schematic of the working principle of Maxwellian displays. Maxwellian displays based on b SLM and laser diode light source and c MEMS-LBS with a steering mirror as additional modulation method. Generation of depth cues by d computational digital holography and e scanning of steering mirror to produce multiple views. Panels b–e adapted from previously published work.

To modulate the light, an SLM like LCoS or DMD can be placed in the light path, as shown in Fig. 9b. Alternatively, an LBS system can be used (Fig. 9c), where the intensity modulation occurs in the laser diode itself. Besides operating in a normal Maxwellian view, both implementations offer additional degrees of freedom for light modulation.

For an SLM-based system, there are several options for arranging the SLM pixels. Besides forming a normal Maxwellian view, the SLM can display a hologram that generates depth cues (Fig. 9d), which is often referred to as computer-generated holography (CGH). To better understand this feature, we again exploit the concept of etendue. The laser light source can be considered to have a very small etendue due to its excellent collimation. Therefore, the system etendue is provided by the SLM. The micron-sized pixel pitch of the SLM sets a maximum diffraction angle, which, multiplied by the SLM size, equals the system etendue. By varying the display content on the SLM, the final exit pupil size can be changed accordingly. In the case of a large-DoF Maxwellian view, the exit pupil size is small, accompanied by a large FoV. For the holographic display mode, the reduced DoF requires a larger exit pupil with a dimension close to that of the eye pupil, but the FoV is reduced accordingly due to etendue conservation. Another common concern with CGH is the computation time. Achieving a real-time CGH rendering flow with excellent image quality is quite a challenge. Fortunately, recent advances in algorithms are easing this difficulty.
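The etendue argument can be illustrated numerically in one dimension. Under collimated illumination, a pixel pitch p gives a maximum diffraction angle with sin θ_max = λ/(2p); multiplying the full spread 2 sin θ_max by the SLM width N·p gives an etendue of N·λ, independent of the pitch. Conserving that etendue at the exit pupil, D · 2 sin(FoV/2) = N·λ, exposes the tradeoff between exit pupil size and FoV. This is a simplified sketch: the 4096-pixel width and 532 nm wavelength are hypothetical, and 2D effects and aberrations are ignored.

```python
import math


def slm_etendue_1d_mm(num_pixels: int, wavelength_nm: float) -> float:
    """1D etendue (mm, i.e. length x sine of angle) of a pixelated SLM under
    collimated illumination.

    Width N*p times the full diffraction spread 2*sin(theta_max) = lambda/p;
    the pixel pitch p cancels, leaving N * lambda.
    """
    return num_pixels * wavelength_nm * 1e-6  # nm -> mm


def fov_deg(etendue_mm: float, exit_pupil_mm: float) -> float:
    """Full FoV (deg) allowed by etendue conservation: D * 2*sin(FoV/2) = G."""
    s = min(etendue_mm / (2 * exit_pupil_mm), 1.0)  # clamp: cannot exceed a hemisphere
    return math.degrees(2 * math.asin(s))


G = slm_etendue_1d_mm(4096, 532.0)  # hypothetical 4096-pixel-wide SLM at 532 nm
for pupil_mm in (2.0, 4.0, 6.0):
    print(f"exit pupil {pupil_mm:.0f} mm -> FoV {fov_deg(G, pupil_mm):.1f} deg")
```

Doubling the exit pupil roughly halves the FoV (exactly halving its sine), which is the Maxwellian-versus-holographic tradeoff described above.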

For an LBS-based system, the additional modulation can be achieved by integrating a steering module, as demonstrated by Jang et al. (Fig. 9e). However, there is a tradeoff between the number of viewpoints and the final image frame rate, because the total frames are divided equally among the viewpoints. Boosting the frame rate of MEMS-LBS systems by a factor equal to the number of views (e.g., 9 for a 3 × 3 array) may be challenging.
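The viewpoint/frame-rate tradeoff is simple arithmetic when frames are time-multiplexed equally among viewpoints. A minimal sketch; the 60 Hz target rate and the helper names are hypothetical.

```python
def per_view_rate_hz(native_rate_hz: float, views_x: int, views_y: int) -> float:
    """Frame rate each viewpoint receives when native frames are split
    equally among a views_x-by-views_y array of viewpoints."""
    return native_rate_hz / (views_x * views_y)


def required_native_rate_hz(target_rate_hz: float, views_x: int, views_y: int) -> float:
    """Native frame rate needed so that every viewpoint still gets target_rate_hz."""
    return target_rate_hz * views_x * views_y


print(f"{per_view_rate_hz(60, 3, 3):.2f} Hz per view")       # -> 6.67 Hz per view
print(f"{required_native_rate_hz(60, 3, 3):.0f} Hz native")  # -> 540 Hz native
```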

Maxwellian-type systems offer several advantages. The system efficiency is usually very high because nearly all the light is delivered into the viewer’s eye. The system FoV is determined by the f/# of the combiner, and a large FoV (~80° horizontally) can be achieved.

To generate multiple viewpoints, one can modulate either the incident light or the combiner. Recall that a viewpoint is the image of the light source. Duplicating or shifting the light source therefore achieves pupil duplication or steering, respectively, as illustrated in Fig. 10a. Several schemes of light modulation are depicted in Fig. 10b–e. An array of light sources can be generated with multiple laser diodes (Fig. 10b); turning on all of them or only one achieves pupil duplication or steering, respectively. A light source array can also be produced by projecting light onto an array-type photopolymer HOE (PPHOE) (Fig. 10c). Apart from direct adjustment of the light sources, modulating the light along its path can also effectively steer or duplicate the light sources. Using a mechanical steering mirror, the beam can be deflected (Fig. 10d), which is equivalent to shifting the light source position. Other devices like a grating or beam splitter can also serve as a ray deflector or splitter (Fig. 10e).

Fig. 10: a Schematic of duplicating (or shifting) viewpoints by modulation of incident light. Light modulation by b multiple laser diodes, c HOE lens array, d steering mirror, and e grating or beam splitters. f Pupil duplication with multiplexed PPHOE. g Pupil steering with LCHOE. Panels c and e–g reproduced from previously published work.

Nonetheless, one problem of the light-source duplication/shifting methods for pupil duplication/steering is that the aberrations in peripheral viewpoints are often serious. Modulating the combiner instead can avoid this problem, for example with a multiplexed PPHOE (Fig. 10f): wavefronts of several viewpoints can be recorded into one PPHOE sample. Three viewpoints with a separation of 3 mm were achieved. However, slight ghost images and gaps can be observed in the viewpoint transition. For a PPHOE to achieve pupil steering, the multiplexed PPHOE needs to record different focal points with different incident angles. If the holograms have no angular crosstalk, the viewpoint can be steered with an additional device that changes the incident angle of the light. Alternatively, Xiong et al. demonstrated pupil steering with liquid crystal HOEs (LCHOEs) (Fig. 10g). The polarization-sensitive nature of LCHOEs allows a polarization converter (PC) to select which LCHOE functions. When the PC is off, the incident right-handed circularly polarized (RCP) light is focused by the right-handed LCHOE. When the PC is turned on, the RCP light is first converted to left-handed circularly polarized (LCP) light and passes through the right-handed LCHOE; it is then focused by the left-handed LCHOE into another viewpoint. Adding more viewpoints requires stacking more pairs of PCs and LCHOEs, which can be done compactly with thin glass substrates. In addition, pupil duplication only requires stacking multiple low-efficiency LCHOEs. For both PPHOEs and LCHOEs, because the hologram for each viewpoint is recorded independently, the aberrations can be eliminated.

Recently, another type of display closely related to the Maxwellian view, called the pin-light display, has been proposed (Fig. 11a). Each pin-light source is a Maxwellian view with a large DoF. When the eye pupil is no longer placed near the source point as in the Maxwellian view, each image source can only form an elemental view with a small FoV on the retina. However, if the image source array is arranged properly, the elemental views can be integrated to form a large FoV. Depending on the specific optical architecture, pin-light displays can take different forms. In the initial feasibility demonstration, Maimone et al. used a waveguide plate as the light source (Fig. 11b). The light inside the waveguide plate is extracted by etched divots, forming a pin-light source array. A transmissive SLM (LCD) is placed behind the waveguide plate to modulate the light intensity and form the image. The display has an impressive FoV of 110° thanks to the large range of scattering angles. However, placing the LCD directly in front of the eye brings issues of insufficient resolution density and diffraction of background light.

Fig. 11: a Schematic drawing of the working principle of pin-light display. b Pin-light display utilizing a pin-light source and a transmissive SLM. c An example of pin-mirror display with birdbath optics. d SWD system with LBS image source and off-axis lens array. Panels b and d reprinted from previously published work.

To avoid these issues, architectures using pin-mirrors have been proposed; Fig. 11c shows an example with birdbath optics. In this case, the pin-mirrors replace the original beam splitter in the birdbath and can thus shrink the system volume, while at the same time providing large-DoF pin-light images. Nonetheless, such a system may still face the etendue conservation issue. Meanwhile, the pin-mirrors cannot be too small, or diffraction degrades the resolution density. Their influence on the see-through background should therefore also be considered in the system design.

To overcome the etendue limitation and improve see-through quality, Xiong et al. proposed a scanning waveguide display (SWD) (Fig. 11d). The system uses an LBS as the image source. The collimated scanned laser rays are trapped in the waveguide and encounter an array of off-axis lenses. Upon each encounter, a lens out-couples the laser rays and forms a pin-light source. SWD has the merits of good see-through quality and large etendue. A large FoV of 100° was demonstrated with the help of an ultra-low f/# lens array based on LCHOE. However, some issues, like insufficient image resolution density and image non-uniformity, remain to be overcome. Further improving the system may require optimizing the Gaussian beam profile and adding an EPE module.

Overall, pin-light systems inherit the large DoF of the Maxwellian view. With an adequate number of pin-light sources, the FoV and eyebox can be expanded accordingly. Nonetheless, across the different implementations, a common issue of pin-light systems is image uniformity. The overlapped region of elemental views has a higher light intensity than the non-overlapped region, and the situation becomes even more complicated when the pupil size changes dynamically. In theory, the displayed image can be pre-processed to compensate for the optical non-uniformity, but that would require knowledge of the precise pupil location (and possibly size) and therefore an accurate eye-tracking module.

Besides free-space combiners, another common architecture in AR displays is the waveguide combiner. The term ‘waveguide’ indicates that the light is trapped in a substrate by TIR. One distinctive feature of a waveguide combiner is the EPE process, which effectively enlarges the system etendue. In the EPE process, a portion of the trapped light is coupled out of the waveguide at each TIR bounce, which enlarges the effective eyebox. According to the features of the couplers, we divide waveguide combiners into two types, diffractive and achromatic, as described in the following.

As the name implies, diffractive-type waveguides use diffractive elements as couplers. The in-coupler is usually a diffractive grating, and the out-coupler in most cases is also a grating with the same period as the in-coupler, but it can also be an off-axis lens with a small curvature to generate an image with finite depth. Three major diffractive couplers have been developed: surface relief gratings (SRGs), photopolymer gratings (PPGs), and liquid crystal gratings (grating-type LCHOEs, also known as polarization volume gratings (PVGs)). Some general guidelines for coupler design are that the in-coupler should have a relatively high efficiency and the out-coupler should have a uniform light output. A uniform light output usually requires a low-efficiency coupler, with extra degrees of freedom for local modulation of the coupling efficiency. Both the in-coupler and the out-coupler should have an adequate angular bandwidth to accommodate a reasonable FoV. In addition, the out-coupler should be optimized to avoid undesired diffraction, including the outward diffraction of TIR light and the diffraction of environment light into the user’s eyes, referred to as light leakage and rainbow, respectively. Suppressing these unwanted diffractions should also be considered in the waveguide design optimization, along with performance parameters like efficiency and uniformity.
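The need for locally modulated, mostly low out-coupling efficiency can be seen from a lossless 1D model in which the guided light meets the out-coupler N times. For equal output at every exit, the k-th event (counting from 0) must extract 1/(N − k) of the light still in the guide, so the efficiency starts low and rises along the propagation direction. This is a sketch under idealized assumptions (no absorption, no leakage, full extraction at the last event, which practical designs usually avoid); the function names are illustrative.

```python
def uniform_outcoupling_efficiencies(n_exits: int) -> list[float]:
    """Per-event out-coupling efficiencies that give equal output at each of
    n_exits out-coupling events in a lossless guide.

    Before event k (0-based), a fraction (n_exits - k) / n_exits of the light
    remains; extracting 1 / n_exits of the input therefore needs 1 / (n_exits - k).
    """
    return [1.0 / (n_exits - k) for k in range(n_exits)]


def output_profile(efficiencies: list[float]) -> list[float]:
    """Fraction of the in-coupled light leaving at each out-coupling event."""
    remaining, out = 1.0, []
    for eta in efficiencies:
        out.append(remaining * eta)
        remaining *= 1.0 - eta
    return out


graded = uniform_outcoupling_efficiencies(5)
print([round(e, 3) for e in graded])                     # [0.2, 0.25, 0.333, 0.5, 1.0]
print([round(p, 3) for p in output_profile(graded)])     # [0.2, 0.2, 0.2, 0.2, 0.2]
print([round(p, 3) for p in output_profile([0.2] * 5)])  # [0.2, 0.16, 0.128, 0.102, 0.082]
```

The last line shows why a constant efficiency is not enough: the output decays geometrically along the guide, whereas the graded profile keeps every exit equally bright.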

The basic working principles of diffractive waveguide-based AR systems are illustrated in Fig. 12. For SRG-based waveguides (Fig. 12a), the in-coupler can be either a transmissive type or a reflective type. For PPG-based waveguides (Fig. 12b), the small angular bandwidth of a high-efficiency transmissive PPG prohibits its use as an in-coupler. Therefore, both the in-coupler and out-coupler are usually reflective types. The gradient efficiency can be achieved by space-variant exposure to control the local index modulation. PVG-based waveguides (Fig. 12c) also prefer reflective PVGs as in-couplers, because transmissive PVGs are much more difficult to fabricate due to the LC alignment issue. In addition, the angular bandwidth of transmissive PVGs in the Bragg regime is not large enough to support a decent FoV.

The above discussion describes the basic working principle of 1D EPE. Nonetheless, for 1D EPE to produce a large eyebox, the exit pupil of the original image must be large in the unexpanded direction, which poses design challenges for light engines. Therefore, 2D EPE is favored in practical applications. To extend EPE to two dimensions, two consecutive 1D EPEs can be used (Fig. 13a). The first 1D EPE occurs in the turning grating, where the light is duplicated in the y direction and then turned into the x direction. The light rays then encounter the out-coupler and are expanded in the x direction. To better understand the 2D EPE process, the k-vector diagram (Fig. 13b) can be used. For light propagating in air with wavenumber k0, the possible k values in the x and y directions (kx and ky) fall within the circle of radius k0. When the light is trapped by TIR, kx and ky lie outside the circle of radius k0 and inside the circle of radius nk0, where n is the refractive index of the substrate. kx and ky stay unchanged during TIR and change only in each diffraction process. The central red box in Fig. 13b indicates the possible k values within the system FoV. At the in-coupler, the grating k-vector is added to the k values, shifting them into the TIR region. The turning grating then applies another k-vector and shifts the k values to near the x-axis. Finally, the out-coupler shifts the k values back to the free-propagation region in air. One observation is that the size of the red box is mostly limited by the width of the TIR band. To accommodate a larger FoV, the outer boundary of the TIR band needs to be expanded, which amounts to increasing the waveguide refractive index. Another important fact is that when kx and ky are near the outer boundary, the uniformity of the output light becomes worse. This is because the light propagation angle in the waveguide approaches 90°, so the spatial distance between two consecutive TIRs becomes so large that the out-coupled beams are separated to an unacceptable degree. The range of k values usable in practice is therefore narrowed further.
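A one-dimensional slice of the k-diagram shows both effects discussed above: the in-coupled k values must land in the TIR band 1 < |kx/k0| < n, and the lateral distance between consecutive TIR bounces on the same face, 2t·tan θ, grows rapidly as the propagation angle θ approaches 90°. A minimal sketch, assuming a single wavelength, first-order diffraction, and hypothetical parameters (n = 1.8, 0.5 mm thickness, 532 nm light, 380 nm grating period).

```python
import math


def in_guide_kx(sin_in: float, wavelength_nm: float, period_nm: float, order: int = 1) -> float:
    """Normalized in-plane wavevector kx/k0 after the in-coupler (grating equation)."""
    return sin_in + order * wavelength_nm / period_nm


def is_guided(kx_norm: float, n: float) -> bool:
    """Light is trapped by TIR when 1 < |kx/k0| < n."""
    return 1.0 < abs(kx_norm) < n


def bounce_pitch_mm(kx_norm: float, n: float, thickness_mm: float) -> float:
    """Lateral distance between consecutive TIR bounces on the same face: 2 t tan(theta)."""
    if not is_guided(kx_norm, n):
        raise ValueError("ray is not guided by TIR")
    theta = math.asin(abs(kx_norm) / n)  # propagation angle inside the substrate
    return 2 * thickness_mm * math.tan(theta)


# Hypothetical 1D example.
N_SUB, THICKNESS_MM, WAVELENGTH_NM, PERIOD_NM = 1.8, 0.5, 532.0, 380.0

for field_deg in (-15, 0, 15, 20, 25):
    kx = in_guide_kx(math.sin(math.radians(field_deg)), WAVELENGTH_NM, PERIOD_NM)
    if is_guided(kx, N_SUB):
        pitch = bounce_pitch_mm(kx, N_SUB, THICKNESS_MM)
        print(f"field {field_deg:+d} deg: kx/k0 = {kx:.3f}, bounce pitch {pitch:.2f} mm")
    else:
        print(f"field {field_deg:+d} deg: kx/k0 = {kx:.3f}, outside the TIR band")
```

With these numbers the bounce pitch grows more than fourfold between the −15° and +20° fields, and the +25° field falls outside the TIR band altogether.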

Fig. 13: a Schematic of 2D EPE based on two consecutive 1D EPEs. Gray/black arrows indicate light in air/TIR. Black dots denote TIRs. b k-diagram of the two-1D-EPE scheme. c Schematic of 2D EPE with a 2D hexagonal grating. d k-diagram of the 2D-grating scheme.

Aside from two consecutive 1D EPEs, 2D EPE can also be implemented directly with a 2D grating (Fig. 13c). The hexagonal grating provides k-vectors in six directions. In the k-diagram (Fig. 13d), after in-coupling, the k values are distributed into six regions due to multiple diffractions. Out-coupling occurs simultaneously with pupil expansion. Besides a concise out-coupler configuration, the 2D EPE scheme offers more design freedom than two 1D EPEs because the local grating parameters can be adjusted in a 2D manner. The higher design freedom has the potential to reach better output-light uniformity, but at the cost of a higher computational demand for optimization. Furthermore, the unslanted grating geometry usually leads to large light leakage and possibly low efficiency. Adding slant to the geometry helps alleviate the issue, but the associated fabrication may be more challenging.

Finally, we discuss the generation of full-color images. One important point is that although diffractive gratings are used here, the final image generally has no color dispersion, even with a broadband light source like an LED. This is easiest to see in the 1D EPE scheme: the in-coupler and out-coupler have opposite k-vectors, which cancel each other’s color dispersion. In the 2D EPE schemes, the k-vectors always form a closed loop from the in-coupled light to the out-coupled light, so the color dispersion vanishes likewise. The real issue with using a single waveguide for full-color images lies in FoV and light uniformity. Different colors propagate at different angles, which results in different out-coupling conditions for each color. More specifically, if the red and blue channels use the same in-coupler, the propagation angle of the red light is larger than that of the blue light. The red light in the peripheral FoV is therefore more prone to the large-angle non-uniformity issue mentioned above. To obtain a decent FoV and light uniformity, two or three waveguide layers with different grating pitches are usually adopted.
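The closed-loop argument can be checked numerically for the two-1D-EPE layout of Fig. 13a. A minimal sketch, assuming a hypothetical geometry in which the in-coupler vector points along +y, the turning grating (period Λ/√2, oriented at 45°) turns the light into +x, and the out-coupler vector points along −x, so the three grating vectors sum to zero. Because grating vectors are fixed in absolute units while k0 = 2π/λ varies, the in-guide angle depends on wavelength, but the exit direction does not. The index 1.8 and the 380 nm period are assumed values.

```python
import math

N_SUB = 1.8         # hypothetical substrate index
PERIOD_NM = 380.0   # hypothetical in-coupler / out-coupler period
G = 2 * math.pi / PERIOD_NM  # grating vector magnitude in rad/nm

# Grating vectors (rad/nm) in the order the light meets them; they sum to zero.
GRATINGS = [
    ("in-coupler", (0.0, G)),
    ("turning grating", (G, -G)),
    ("out-coupler", (-G, 0.0)),
]


def trace(wavelength_nm: float, sin_x: float = 0.0, sin_y: float = 0.0):
    """Follow the in-plane wavevector through the gratings.

    Returns the exit direction (kx/k0, ky/k0) and, for each grating,
    the normalized in-plane magnitude |k|/k0 right after it.
    """
    k0 = 2 * math.pi / wavelength_nm
    kx, ky = k0 * sin_x, k0 * sin_y
    after = []
    for name, (gx, gy) in GRATINGS:
        kx, ky = kx + gx, ky + gy
        after.append((name, math.hypot(kx, ky) / k0))
    return (kx / k0, ky / k0), after


for lam in (460.0, 530.0, 630.0):
    (sx, sy), after = trace(lam, sin_x=0.1)
    angle = math.degrees(math.asin(after[0][1] / N_SUB))  # in-guide propagation angle
    print(f"{lam:.0f} nm: in-guide angle {angle:.1f} deg, "
          f"exit direction ({sx:.3f}, {sy:.3f})")
```

All three wavelengths leave in the direction they entered, while the red light travels at a much steeper angle inside the guide than the blue light, which is the uniformity concern raised above.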

Regarding system performance, the eyebox is generally large enough (~10 mm) to accommodate different users’ IPDs and alignment shifts during operation. A parameter of major concern for a waveguide combiner is its FoV. From the k-vector analysis, we can conclude that its theoretical upper limit is set by the waveguide refractive index. However, light and color uniformity also limit the effective FoV, i.e., the range beyond which image-quality degradation becomes unacceptable. Current diffractive waveguide combiners generally achieve a FoV of about 50°. To further increase the FoV, a straightforward method is to use a waveguide with a higher refractive index. Another is to tile the FoV, either by directly stacking multiple waveguides or by using polarization-sensitive couplers.
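The statement that the refractive index sets the FoV ceiling can be made concrete for the simplest case. In k-space, a guided ray must satisfy 1 < |kx|/k0 < n·sin(θmax), where θmax is the steepest guided angle the design tolerates; a symmetric FoV centered in that band therefore has a full width of 2·asin((n·sin(θmax) − 1)/2). The sketch below assumes a 1D EPE, a single wavelength and a symmetric FoV; the 75° cap on the guided angle is an illustrative assumption, and 2D EPE or tiled designs are not captured by this formula.

```python
import math

def max_fov_1d_deg(n, max_guided_angle_deg=90.0):
    """Upper bound on the full FoV (degrees) of a 1D diffractive waveguide.

    Guided light needs 1 < |kx|/k0 < n*sin(theta_max); centering a symmetric
    FoV inside that band gives FoV = 2*asin(band_width / 2).
    Assumes one wavelength and a 1D in-coupler/out-coupler pair.
    """
    band_width = n * math.sin(math.radians(max_guided_angle_deg)) - 1.0
    if band_width <= 0.0:
        return 0.0  # no guided band at all
    half_width = min(band_width / 2.0, 1.0)  # cannot exceed +/-90 deg in air
    return 2.0 * math.degrees(math.asin(half_width))

for n in (1.5, 1.7, 1.9, 2.0):
    print(f"n = {n}: {max_fov_1d_deg(n):5.1f} deg at the grazing limit, "
          f"{max_fov_1d_deg(n, 75.0):5.1f} deg with guided angle <= 75 deg")
```

At the grazing-angle limit this gives roughly 29° for n = 1.5 and 60° for n = 2.0. Serving several colors with one grating shrinks the usable band further, which is consistent with the multi-layer approach described above.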

Other parameters specific to waveguides include light leakage, see-through ghosts and rainbow artifacts. Light leakage refers to out-coupled light that exits toward the environment, as depicted in Fig. 14a. Besides lowering efficiency, leakage gives the user an unnatural “bright-eye” appearance and raises privacy concerns. Optimizing the grating structure, such as the geometry of an SRG, may reduce the leakage. A see-through ghost forms when environment light undergoes consecutive in-coupling and out-coupling by the out-coupler grating, as sketched in Fig. 14b. After this process, a real object at a finite depth may produce a ghost image shifted in both FoV and depth. Generally, an out-coupler with higher efficiency suffers more from see-through ghosts. Rainbow is caused by the diffraction of environment light into the user’s eye, as sketched in Fig. 14c. Color dispersion occurs in this case because there is no k-vector cancellation. The k-diagram offers deeper insight into how the rainbow forms. Here, we take the EPE structure in Fig. 13a as an example. As depicted in Fig. 14d, after diffraction by the turning grating and the out-coupler grating, the k values are distributed in two circles shifted from the origin by the grating k-vectors. Some of the diffracted light can enter the see-through FoV and form a rainbow. A straightforward way to reduce the rainbow is to use a higher-index substrate. A higher refractive index expands the outer boundary of the k-diagram, which can then accommodate larger grating k-vectors. The enlarged k-vectors “push” the two circles outward, shrinking their overlap with the see-through FoV. Alternatively, an optimized grating structure can also reduce the rainbow effect by suppressing unwanted diffraction.
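The “push outward” argument can be illustrated with a 1D simplification of Fig. 14d. Treat the out-coupler as a grating with normalized k-vector g = λ/Λ; environment light crossing the slab from air to air then exits with sin θout = sin θenv − g, because the slab index cancels. The sketch measures how wide a band of real environment angles (|sin θenv| ≤ 1) lands inside the see-through FoV. It ignores the turning grating, the opposite diffraction order (which behaves symmetrically) and diffraction efficiency, and it assumes a hypothetical design rule that places g in the middle of the guided band (1, n). All of these are illustrative assumptions.

```python
import math

def rainbow_band(grating_k, see_through_fov_deg):
    """Width, in sin(theta) units, of environment light that one transmissive
    diffraction order steers into the see-through FoV.

    grating_k: grating k-vector normalized to k0 (lambda / pitch).
    Uses sin(theta_out) = sin(theta_env) - grating_k for air-to-air transmission.
    """
    s = math.sin(math.radians(see_through_fov_deg / 2.0))
    # Environment angles mapping to |sin(theta_out)| <= s, clipped to real angles.
    lo = max(-1.0, grating_k - s)
    hi = min(1.0, grating_k + s)
    return max(0.0, hi - lo)

def centered_grating_k(n):
    """Hypothetical design rule: put the grating k-vector mid-way in the band (1, n)."""
    return (1.0 + n) / 2.0

for n in (1.6, 1.8, 2.0):
    g = centered_grating_k(n)
    print(f"n = {n}: g = {g:.2f}, rainbow band = {rainbow_band(g, 90.0):.3f}")
```

For an assumed 90° see-through FoV, the band shrinks from about 0.41 to about 0.21 (in sin θ units) as n goes from 1.6 to 2.0. This mirrors the qualitative claim that larger grating k-vectors move the diffracted circles away from the see-through FoV.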

Achromatic waveguide combiners use achromatic elements as couplers, which has the advantage of producing a full-color image with a single waveguide. A typical example of an achromatic element is a mirror. A waveguide with partial mirrors as the out-coupler is often referred to as a geometric waveguide (Fig. 15a). The in-coupler in this case is usually a prism, which avoids the color dispersion that a diffractive in-coupler would otherwise introduce. The mirrors couple out the TIR light consecutively to produce a large eyebox, similar to a diffractive waveguide. Thanks to the excellent optical properties of mirrors, a geometric waveguide usually delivers better image quality than its diffractive counterparts in terms of MTF and color uniformity. Still, the spatially discontinuous mirror configuration also leaves gaps in the eyebox, which may be alleviated by using a dual-layer structure. A geometric waveguide display with five partial mirrors (Fig. 15b) exhibits a remarkable FoV of 50° by 30° (Fig. 15c) and an exit pupil of 4 mm with a 1D EPE. To achieve 2D EPE, architectures similar to that in Fig. 13a can be used by integrating a turning mirror array as the first 1D EPE module. The k-vector diagrams in Fig. 13b, d cannot be used here because the in-plane (x-y) k values are no longer conserved in the in-coupling and out-coupling processes. Still, some general conclusions remain valid, such as a higher refractive index leading to a larger FoV and a gradient out-coupling efficiency improving light uniformity.
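The remark that a gradient out-coupling efficiency improves uniformity has a simple closed form in the idealized case. If N partial mirrors are lossless and each should emit the same share of the guided power, mirror i (counting from 1 on the in-coupler side) needs a reflectance of 1/(N − i + 1). The sketch below assumes no absorption, no scattering and no polarization or angle dependence in the coatings; real mirrors are never this ideal.

```python
def uniform_reflectances(num_mirrors):
    """Reflectance per mirror so that each one out-couples an equal share
    of the guided power (idealized, lossless mirrors)."""
    return [1.0 / (num_mirrors - i) for i in range(num_mirrors)]

def out_coupled_powers(reflectances, p_in=1.0):
    """Follow the guided power past each partial mirror in turn."""
    powers, remaining = [], p_in
    for r in reflectances:
        powers.append(remaining * r)   # share reflected toward the eye
        remaining *= 1.0 - r           # share transmitted to the next mirror
    return powers

refl = uniform_reflectances(5)        # five mirrors, as in Fig. 15b
print([round(r, 3) for r in refl])
print([round(p, 3) for p in out_coupled_powers(refl)])
```

In this idealization the last mirror comes out fully reflective, which would block the see-through view. A practical design would cap the reflectances and accept that some light is lost at the end of the waveguide, trading efficiency for transparency.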

a Schematic of the system configuration. b Geometric waveguide with five partial mirrors. c Image photos demonstrating system FoV. Adapted from b, c ref.

The fabrication of a geometric waveguide involves coating mirrors on cut-apart pieces and bonding them back together, which may result in a high cost, especially for the 2D EPE architecture. Another way to implement an achromatic coupler is to use a multiplexed PPHOE (Fig. 16a). To understand the working principle, we can use the diagram in Fig. 16b. The law of reflection states that the angle of reflection equals the angle of incidence. Translated into k-vector language, this means a mirror can apply a k-vector of any length along its surface normal, while the k-vector length of the reflected light always equals that of the incident light. This constrains the k-vector triangle to be isosceles, and a simple geometric deduction shows that this condition reproduces the law of reflection. A general grating, however, behaves very differently. For simplicity, we consider only the main diffraction order. Owing to the basic diffraction law, the grating can only apply a k-vector with a fixed kx. For light with a different incident angle, it must apply a different kz to produce diffracted light whose k-vector length equals that of the incident light. For a grating with a broad angular bandwidth such as an SRG, the range of kz is wide, forming a long vertical line in Fig. 16b. For a PPG with a narrow angular bandwidth, the line is short and resembles a dot. If multiple such dots are distributed along the oblique line corresponding to a mirror, the resulting multiplexed PPGs can imitate the behavior of a tilted mirror. Such a PPHOE is sometimes referred to as a skew mirror. However, multiplexing many PPGs poses a bigger challenge in device fabrication. Recently, Utsugi et al. demonstrated an impressive skew-mirror waveguide based on 54 multiplexed PPGs (Fig. 16c, d). The display exhibits an effective FoV of 35° by 36°. In the peripheral FoV, some non-uniformity remains (Fig. 16e) due to the out-coupling gap, which is an inherent feature of flat-type out-couplers.
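The k-space picture of Fig. 16b can be reproduced numerically. For a mirror, the k-vector added on reflection is K = k_out − k_in = −2(k_in·n̂)n̂, which always points along the mirror normal n̂ but changes length with the incident angle. The sketch below computes, for a few incident angles, the grating vector a multiplexed PPG would need to supply (Bragg condition k_out = k_in + K) to mimic a mirror tilted by an assumed 30°. It works in a 2D x-z slice with a normalized k0 = 1 and treats each PPG as an ideal single-vector grating; both are simplifying assumptions.

```python
import math

def reflect(k, nhat):
    """Law of reflection in k-space: flip the component along the unit normal."""
    d = k[0] * nhat[0] + k[1] * nhat[1]
    return (k[0] - 2.0 * d * nhat[0], k[1] - 2.0 * d * nhat[1])

def skew_mirror_gratings(angles_deg, tilt_deg, k0=1.0):
    """Grating vector (Kx, Kz) each multiplexed PPG must supply so that light
    at each incident angle leaves as if reflected by a mirror tilted by tilt_deg."""
    t = math.radians(tilt_deg)
    nhat = (math.sin(t), math.cos(t))  # unit normal of the tilted mirror
    vectors = []
    for a_deg in angles_deg:
        a = math.radians(a_deg)
        k_in = (k0 * math.sin(a), k0 * math.cos(a))
        k_out = reflect(k_in, nhat)
        vectors.append((k_out[0] - k_in[0], k_out[1] - k_in[1]))
    return nhat, vectors

nhat, ks = skew_mirror_gratings(range(-10, 11, 5), tilt_deg=30.0)
for K in ks:
    cross = K[0] * nhat[1] - K[1] * nhat[0]   # ~0 when K is parallel to nhat
    print(f"|K| = {math.hypot(*K):.4f}, cross with normal = {cross:.1e}")
```

Every required vector is parallel to the mirror normal and differs only in length, so the PPGs sit as dots along one oblique line in k-space. A finite set of such dots can only approximate the continuous line of a true mirror.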

a System configuration. b Diagram demonstrating how multiplexed PPGs resemble the behavior of a mirror. Photos showing c the system and d image. e Picture demonstrating effective system FoV. Adapted from c–e ref.

Table 2 summarizes the performance of different AR combiners. Combining the luminous efficacy in Table 1 with the combiner efficiency in Table 2 gives a comprehensive estimate of the total luminance efficiency (nit/W) for different types of systems. Generally, Maxwellian-type combiners with pupil steering have the highest luminance efficiency when paired with laser-based light engines such as laser-backlit LCoS/DMD or MEMS-LBS. Geometric optical combiners offer well-balanced image performance, but further shrinking the system size remains a challenge. Diffractive waveguides have a relatively low combiner efficiency, which can be offset by an efficient light engine such as MEMS-LBS. Further development of couplers and EPE schemes would also improve system efficiency and FoV. Achromatic waveguides have a decent combiner efficiency, and their single-layer design enables a smaller form factor. With advances in fabrication processes, they may become strong contenders to the diffractive waveguides widely used today.

Brelyon’s Ultra Reality monitor offers metaverse-style immersion by projecting panoramic, cinema-scale virtual images with added depth. As you lean in to peer inside, you’ll see an image that appears to float 5 feet in the distance with a 101-degree field of view.

The monitor I tried is a prototype: it had a 4K LG LCD screen with a 24:9 aspect ratio and a 60-hertz refresh rate. Brelyon has a partnership with LG to bring 5K OLED screens to future models, along with a 144-hertz refresh rate aimed at gaming.

"We engineer the wavefront of the light to give you a sense of depth. Unlike conventional autostereoscopic displays that sample the light field in angle to give shallow stereoscopic (or left-eye, right-eye) depth cue, we sample the light field in the wavefront domain to provide deep monocular or single-eye depth," wrote Heshmet in an email.

He went on to note: "These monocular depth layers require much more accurate crafting of light and are engineered to pan across multiple horopters. Because of this phenomenon, the brain fills in the gaps and gets a 3D sensation."

That means, according to Heshmet, that "although technically the content is 2D and no re-rendering is needed, different parts of the image are set to be at slightly different monocular depths, which gives that pleasant sense of immersion -- that feeling of looking through a window."

Ahead of the demo, I asked if I could bring my personal computer to try some video editing or plug in a Nintendo Switch and play Mario Kart, but unfortunately the company was unable to accommodate my tech. Instead, I tried a couple of preset demos: a video with movie clips, video game recordings and scenic views.

Brelyon’s first batch of monitors will be geared toward enterprise users and is due out later this year; those enterprise units might ship by the fourth quarter of 2022. Initially, there will be two models, the 60-hertz model I tried and a 144-hertz model, costing somewhere between $5,000 and $7,500.

Science fiction movies like the original Star Wars depict volumetric displays showing 3D objects that appear to occupy real space, just as in life. You can examine the top, bottom and sides of displayed objects when you move your head, bringing them dramatically closer to the appearance of physical reality.

In reality, such a display would be a game-changer for anyone who designs 3D objects: industrial designers, architects, engineers, game developers and computer graphics artists for motion pictures. Such a display would also constitute a breakthrough for detailed scientific visualization of 3D objects in biochemistry, geology, Geographic Information Systems and other disciplines.

Sony’s Spatial Reality Display works with a PC and a 3D computer graphics platform to deliver glasses-free stereoscopic 3D viewing and images that pivot immediately in response to head movement. The result is visual magic: an overwhelming impression of 3D reality. Even jaded design and computer graphics professionals are blown away.

A breakthrough for viewing 3D computer graphics, the ELF-SR1 Spatial Reality Display consists of a 15.6” diagonal 4K LCD panel angled in the supplied stand at 45°. The panel, which has over 8 million pixels, displays independent left- and right-eye images, each with about half the pixels. A micro optical lens in front of the LCD panel separates the two images and directs each to the correct eye. This creates the powerful illusion of a 3D scene that extends in front of the screen surface. While impressive, the effect becomes even more compelling as you move your head. The system immediately pivots the 3D scene in response to movement, maintaining the illusion of real 3D objects. You’re free to look around, exploring the scene from different angles.

The system follows eye movement down to the millisecond and the image pivots in sync as you tilt or move your head up and down, left and right—even forward and back. The result is an overpowering sensation of 3D reality.

The secret is an eye sensing camera built into the bezel of the display. This outputs video data via USB 3.2 to a Windows® 10 PC. An advanced real-time rendering algorithm in Sony’s Spatial Reality Display Runtime Software interprets the eye sensing data and directs supported game engines to immediately pivot the scene according to your head motions.
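Sony does not publish its rendering algorithm, but the standard way to make a flat screen behave like a window onto a 3D scene is head-coupled, off-axis perspective: each eye gets an asymmetric viewing frustum whose edges pass through the physical screen edges. The sketch below shows that generic technique, not Sony’s implementation. It assumes a screen centered at the origin in the z = 0 plane, tracked eye positions in meters at positive z, and hypothetical screen dimensions of roughly 0.345 m × 0.194 m (about a 15.6-inch 16:9 panel). It ignores the 45° tilt of the actual panel, which would require first transforming the eye position into the screen’s coordinate frame.

```python
from dataclasses import dataclass

@dataclass
class Frustum:
    """Near-plane extents, in the form a glFrustum-style projection expects."""
    left: float
    right: float
    bottom: float
    top: float
    near: float

def off_axis_frustum(eye, screen_w, screen_h, near):
    """Asymmetric frustum for an eye at (ex, ey, ez) looking at a screen
    centered on the origin in the z = 0 plane (ez > 0 is in front of it)."""
    ex, ey, ez = eye
    if ez <= 0.0:
        raise ValueError("eye must be in front of the screen (ez > 0)")
    scale = near / ez  # similar triangles: project screen edges onto the near plane
    return Frustum(
        left=(-screen_w / 2.0 - ex) * scale,
        right=(screen_w / 2.0 - ex) * scale,
        bottom=(-screen_h / 2.0 - ey) * scale,
        top=(screen_h / 2.0 - ey) * scale,
        near=near,
    )

# Hypothetical values: ~15.6-inch 16:9 screen, eyes 0.5 m away, 64 mm IPD.
W, H, NEAR, IPD = 0.345, 0.194, 0.1, 0.064
head = (0.05, 0.0, 0.5)  # head moved 5 cm to the right
for name, dx in (("left eye", -IPD / 2), ("right eye", IPD / 2)):
    print(name, off_axis_frustum((head[0] + dx, head[1], head[2]), W, H, NEAR))
```

Each eye’s view matrix must also be translated to that eye’s tracked position. Recomputing both every frame from fresh tracking data is what makes the scene appear to pivot as the head moves.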

The Spatial Reality Display is supported by two of the world’s top 3D content production platforms: Unity® and Unreal® Engine*1. In this way, the Spatial Reality Display is ready to work with a large library of existing virtual reality content.

*1 We recommend using "high resolution, quality images" created with Unity or Unreal Engine 4 software. A computer with a recommended CPU of Intel Core i7-9700K @ 3.60 GHz or faster and a graphics card such as an NVIDIA GeForce RTX 2070 SUPER or faster is required. Only Windows 10 (64-bit) is supported.

Unique in configuration and brimming with proprietary technology, Sony’s Spatial Reality Display empowers you to see what could never be seen before. For designers, researchers and content creation professionals, the benefits are profound.

Glasses-free Stereoscopic 3D of exceptional quality. Some 3D displays are prone to Left/Right ghosting, also known as crosstalk, where each eye sees some of the image intended for the other. The micro optical lens is meticulously engineered to minimize crosstalk. The result is a convincing 3D effect that has won rave reviews from discerning professionals.

Down-to-the-millisecond response to head motion with six degrees of freedom. The Spatial Reality Display sustains the powerful illusion of a solid volumetric scene by adjusting the view as you move your head. The system senses and smoothly responds to your head motion with six degrees of freedom. There are three axes of motion: up/down, left/right and forward/back up to the limits of the display’s viewing “cone.” Within limits, the display can also accommodate roll (tilting your head left and right), pitch (tilting your head up and down) and yaw (pivoting your head left and right). Time delay (latency) between your head motion and the resulting display adjustment would degrade the illusion. For maximum effect, the system operates in real time, with millisecond response and high accuracy. Sony accomplishes these goals with hardware, firmware and software advances including a high-speed camera, sophisticated face detection and eye sensing technology and a proprietary real-time rendering algorithm.

High resolution. The Spatial Reality Display has an uncanny ability to convey object details and textures thanks to a 4K (3840 x 2160) native display panel featuring over 8 million pixels total. To optimize the 3D immersive experience, Sony applies a unique masked bezel edge. About half the pixels are delivered to each eye.

Viewing comfort and convenience. Where VR headsets isolate the user from the computer keyboard and input devices, the Spatial Reality Display functions as a secondary desktop monitor. It works alongside traditional 2D monitors empowering you to seamlessly transition between 2D and 3D evaluation. And while some users can experience nausea or discomfort with VR headsets, the Spatial Reality Display minimizes discomfort.

Accommodates existing workflows. The Spatial Reality Display will fit right in wherever professionals create 3D computer graphics. The system works with a Windows® 10 PC fitted with the CPU and GPU power typical of 3D computer graphics houses. Sony provides plug-in support for two of the world’s top 3D content production platforms: Unity and Unreal Engine. The ELF-SR1 can also display existing content already authored for Virtual Reality, either directly or with minor modification, depending on the content.

Since the first public demonstrations at CES 2020, the Spatial Reality Display has generated intense interest across many professions. Feedback from customer trials with evaluation samples has given us a preliminary glimpse at the potential markets.

Automotive design. Car companies are recognizing the potential of this technology. “At Volkswagen, we’ve been evaluating Sony’s Spatial Reality Display from its early stages, and we see considerable usefulness and multiple applications throughout the ideation and design process, and even with training,” commented Frantisek Zapletal, Virtual Engineering Lab US, Volkswagen Group of America, Inc.

Industrial design. Spatial Reality represents a completely new way for designers to preview their 3D creations. After working with a prototype, product designer So Morimoto said, “When I check my designs, I often change the angle of light to check the texture. I naturally tilt my head while I’m looking at things and what is amazing is that this display precisely reflects those natural movements.” Product designer and mechanical engineer Tatsuhito Aono said, “Even when working remotely like between Tokyo and Shanghai… we could share designs with this kind of presence.”

Architecture. Architects are constantly striving to communicate 3D concepts. “This really feels like a step toward remotely communicating in shapes and being able to send ‘things’ to many different people,” said Keisuke Toyota, co-founder of noiz, an architecture practice in the Meguro section of Tokyo.

Game development. Compatible with the Unity and Unreal Engine platforms that so many game developers are already using, the Spatial Reality Display is a natural fit for both conventional 2D and VR projects. You can readily evaluate 3D attributes without leaving your work environment.

Entertainment/content creation. We sent a sample to The Mill, a leading production studio with offices in London, New York, Los Angeles, Chicago, Berlin and Bangalore. According to Dan Philips, executive producer of emerging technology, “You’re literally looking at magic happen on the screen, wondering how it’s working. Every single person I’ve seen observing this display is just like, ‘I’ve never seen anything like it.’” Sony Pictures Entertainment and Columbia Pictures subsidiary Ghost Corps worked on Ghostbusters: Afterlife. Says Eric Reich, brand and franchise executive at Ghost Corps, “The display offers a new approach to visualizing concepts and characters, making understanding the finished product that much easier.”

Scientific visualization. Whether you’re trying to understand complex proteins, mineral deposits, or structural stress, there are applications where two dimensions just aren’t enough. The Spatial Reality Display enables powerful 3D visualization without the need for cumbersome VR headsets or the complexity of CAVE automatic virtual environments.

In the words of company co-founder Akio Morita, “We do what others don’t.” The Spatial Reality Display is an expression of that ambition. Of course, the display also reflects Sony’s technological leadership in three fields.

The Spatial Reality Display represents a first-of-its-kind integration of all these world-class technologies. This is a compelling 3D experience you won’t find anywhere else.

As most professionals reading this document know, humans rely on depth perception every time we drive a car, reach for a pencil or hit a tennis ball. Because depth perception is so fundamental, our visual system draws on a broad range of cues. These include many cues evident in 2D monitors and projectors and even visible in Renaissance painting: occlusion, relative size and aerial perspective. 2D moving pictures provide another cue: camera motion parallax.

Binocular disparity. Thanks to the spatial separation between eyes, the left eye image is slightly different from the right. This one cue is solely responsible for the difference between 2D and 3D cinema presentation. In this conceptual view of superimposed left and right images in 3D cinema, the spoon has disparity to appear in front of the screen, the mug has zero disparity to appear at the screen and the apple has negative disparity to appear behind the screen.

Head motion occlusion. In real life, your motion changes the way foreground objects obstruct background objects. Scene 2 simulates moving your head to the right, revealing more of the apple.

Head motion parallax. It’s not just occlusion. Scene 2 simulates moving your viewpoint up and to the right. This shifts the entire perspective and reveals the top of the mug.

VR headsets have gained traction for gaming and selected consumer and professional applications. For all their advantages, they place the user into an isolated environment divorced from the working world. You need to take off the headset to accomplish everything from most design tasks to answering emails and picking up the phone. The Spatial Reality Display acts like a second computer monitor, enabling you to instantly switch from 3D evaluation to all the other tasks that make up your day.

CAVE automatic virtual environments continue to be the gold standard in 3D viewing. We’re proud that leading CAVE installations have selected Sony’s SXRD® 3D video projectors. However, CAVE viewing is not for everyone. You need a dedicated room – a substantial investment in real estate – in addition to the cost of multiple projectors, displays and CGI computers. CAVE also requires meticulous engineering, precise installation, careful calibration and 3D glasses. While CAVE is ideal for well-endowed research facilities, it’s out of reach for everyone else.

In addition, CAVE, like VR headsets, is not a good fit with the everyday work environment. While Spatial Reality literally fits on a desk, CAVE viewing takes researchers away from their desks altogether.

At first glance, Sony’s Spatial Reality Display appears to serve the same function as commercially available multi-view 3D monitors. A deeper dive reveals important differences and suggests substantially different applications and possibilities. While Sony’s Spatial Reality Display serves one user at a time, multi-view 3D monitors can serve several users simultaneously. Naturally, this capability involves significant tradeoffs.

Step-wise rendering. According to Sony’s survey of commercial products as of February 2021, multi-view displays typically offer 45 views. In comparison, the PC in Sony’s Spatial Reality system can render literally hundreds of views, each precisely calculated for the user’s exact position. With just 45 views, the image changes in discrete steps as you move your head. Step-wise rendering can be moderated by allowing each eye of the user to see two or more adjacent views, but that entails considerable blurring on parts of the image that extend in front and behind the display’s “zero parallax plane.” Step-wise rendering can also be moderated by intentional blurring during content creation. But once again, this sacrifices image detail.

Limited resolution. Using the example of 45 views, each user typically sees 2/45 of the display’s native resolution. This means more than 95% of the display pixels are devoted to views you’re not seeing. The engineers of multi-view 3D displays thus face a challenging tradeoff. On one hand, they can maximize resolution. On the other, they can provide lots of views. They can’t do both.
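The resolution arithmetic behind this tradeoff is easy to check. The sketch below assumes, purely for comparison, that a 45-view display uses the same 3840 × 2160 panel as the Spatial Reality Display; the text does not give the multi-view panel’s resolution, and real multi-view optics may not split pixels this evenly.

```python
def multiview_budget(h_px, v_px, views, views_seen=2):
    """Pixels per view and the fraction of panel pixels one viewer sees
    (views_seen views spread across both eyes)."""
    total = h_px * v_px
    per_view = total / views
    seen = views_seen / views
    return per_view, seen, 1.0 - seen

per_view, seen, unseen = multiview_budget(3840, 2160, views=45)
print(f"{per_view:,.0f} pixels per view; viewer sees {seen:.1%}, unused {unseen:.1%}")

# Tracked single-viewer design: about half the panel per eye, per the text.
print(f"{3840 * 2160 // 2:,} pixels per eye")
```

Under this assumption a viewer gets about 184,000 pixels per view, compared with roughly 4.1 million per eye when the whole panel serves one tracked viewer.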

No response to up/down or forward/back head motion. According to Sony’s survey of published claims, you cannot change your view of the object by moving vertically, toward the display or away. The view changes in response to side-to-side head movement only.

The global virtual reality market size was valued at USD 11.64 billion in 2021. The market is projected to grow from USD 16.67 billion in 2022 to USD 227.34 billion by 2029, exhibiting a CAGR of 45.2% during the forecast period. The impact of the global COVID-19 pandemic has been unprecedented and staggering, with the market experiencing lower-than-anticipated demand across all regions compared to pre-pandemic levels. Based on our analysis, the global market declined by 42.2% in 2020 compared to 2019.
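The quoted growth rate follows from the standard compound annual growth rate formula, CAGR = (end / start)^(1/years) − 1. The sketch below simply re-derives it from the 2022 and 2029 figures stated above as a consistency check; it adds no new data.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

rate = cagr(16.67, 227.34, 2029 - 2022)   # USD billions, from the text
print(f"Implied CAGR 2022-2029: {rate:.1%}")
```

The result rounds to the stated 45.2%; the 2021 value of USD 11.64 billion does not enter this calculation.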

The study includes devices and software such as Quest 2, Google Cardboard, Microsoft HoloLens 2, PlayStation Headset and Move Motion Controller, Unity Virtual Reality Development Software, among others. Virtual reality provides simulated experiences to customers and has vast applications in industries such as retail, healthcare, automotive, gaming, and entertainment, among others. Industries are adopting this immersive technology to conduct virtual training, assistance, marketing, engineering and maintenance, design, and simulation activities with their employees and workers. Advancements in 5G technology and infrastructure are also driving the growth of the virtual reality market. The key market players are extensively focused on offering advanced hardware and content to improve customer experience. For instance,

In April 2022, Nikon Corporation announced a collaboration with Microsoft Corporation to develop and design 3D content and virtual reality solutions. The new partnership is agreed upon under a new subsidiary, Nikon Creates Corporation, which aims to enhance the content creation space.

The production and manufacturing plants of components, devices, equipment, and other hardware products have been severely impacted owing to the coronavirus outbreak worldwide. The temporary shutdown of industries, limited number of in-house staff, sealed country borders, and more have significantly impacted the supply of components and the delivery of final products. Thus, initially, in 2020, owing to the limited stock of virtual reality products and services, the market witnessed a small dip in its year-on-year trend, and this has impacted the overall global market.

However, in many industrial applications, the technology showed real potential to help during the pandemic crisis. Demand for VR technology increased in healthcare and in other industries such as education, gaming, entertainment, manufacturing, retail, military, and defense, among others. For instance, in the initial phase of 2020, the Kansas City University Center for Medical Education Innovation integrated immersive technology into the training of medical students using fully immersive simulations. Thus, amid the pandemic, VR demand in commercial industries increased significantly, and its advancements have expanded its potential for future applications.

As per the virtual reality statistics and industry experts’ analysis, the healthcare industry is expected to witness significant disruption across the industry with VR applications. The technology has showcased potential in improving the healthcare provisions, patient care system, planned surgeries, and medical training, among others.

Its simulated experience of the real-world environment is expected to boost demand in the healthcare industry. Robot-assisted VR is helping healthcare providers during surgeries. It also provides surgical training to develop the required skills.

The concept of live virtual entertainment is evolving worldwide. Today, people prefer at-home entertainment and are adopting technology to access live events or shows. Because the technology offers an immersive, real-world-like environment, demand for it in live music concerts, live events, and sports is growing significantly.

In April 2022, the rock band Foo Fighters held a concert on a Meta virtual stage. The concert offered 180-degree live video through the Meta Quest headset.

The technology demands robust hardware components and high computing power for the proper functioning of devices. However, the majority of current desktop accessories are not compatible with applications. Similarly, most head-mounted displays are wireless and consume high energy, requiring high-energy batteries for long-duration operations. In industries such as gaming and entertainment, it is necessary to have devices with long battery life. Further, the highly connected devices and components require high-speed network bandwidth such as 5G. Thus, lack of suitable speed and power for operating the system might challenge the market growth.

The hardware segment is expected to dominate the global virtual reality market share during the forecast period. The key players are highly investing in the launch and innovation of hardware. For instance, in May 2021, Axon launched its VR-based wireless simulator to provide hands-on training-focused solutions.

The software segment is likely to gain traction owing to the rising demand for immersive technology. The increasing application such as gaming, entertainment, live events, and more is enhancing the software demand. The content segment is likely to witness steady growth during the forecast period due to increasing demand for interactive environment virtual sessions. The industries are demanding new content for applications such as marketing, training, entertainment, gaming, and other sections.

HMDs are expected to lead the market as they offer the most immersive environment. With technological development, demand for smartphone-connected virtual reality headsets is growing thanks to low-cost options such as Google’s cardboard-based headsets. The adoption of HMDs is growing significantly in industrial applications such as training, marketing, entertainment, and more. For instance, in April 2021, Varjo and VRM Switzerland were officially qualified by the European Union Aviation Safety Agency for virtual aviation training. Together, the companies built a headset with human-eye resolution for pilot training.

VR simulators are increasingly being adopted for training, design, and prototyping across industries because they help reduce expenses. For instance, in March 2019, Nissan collaborated with HaptX to provide a realistic virtual car-design simulator to reduce time and investment. Similarly, with increasing demand, the gaming and entertainment industries are widely adopting treadmills and haptic gloves, which is likely to ensure a highly immersive environment for customers. Therefore, the adoption of VR glasses for training and education is expected to grow during the forecast period.

The gaming and entertainment segments are expected to dominate the market during the forecast period. The existing and new players are highly investing in developing content, software, and hardware specifically for gaming purposes. The rising demand for theme parks, virtual museums, and gaming arcades is further boosting the market growth. Similarly, the retail and education industries are steadily adopting technology for various applications. This is likely to boost the VR market in the near future.

In the automotive industry, the technology mainly serves the training and education of engineers and technicians. Similarly, the use of VR simulators for test drives is boosting customer engagement in showrooms. For instance, in September 2020, Mercedes-Benz announced a partnership with Microsoft Corporation to use its HoloLens 2 mixed-reality headset and Dynamics 365 Remote Assist to improve its customer experience.

North America is expected to dominate the market share over the forecast period. The significant presence of key players in the U.S. is driving the market growth in the region. Also, various start-ups are entering the

Ms.Josey
