prepare png file for arduino tft display factory

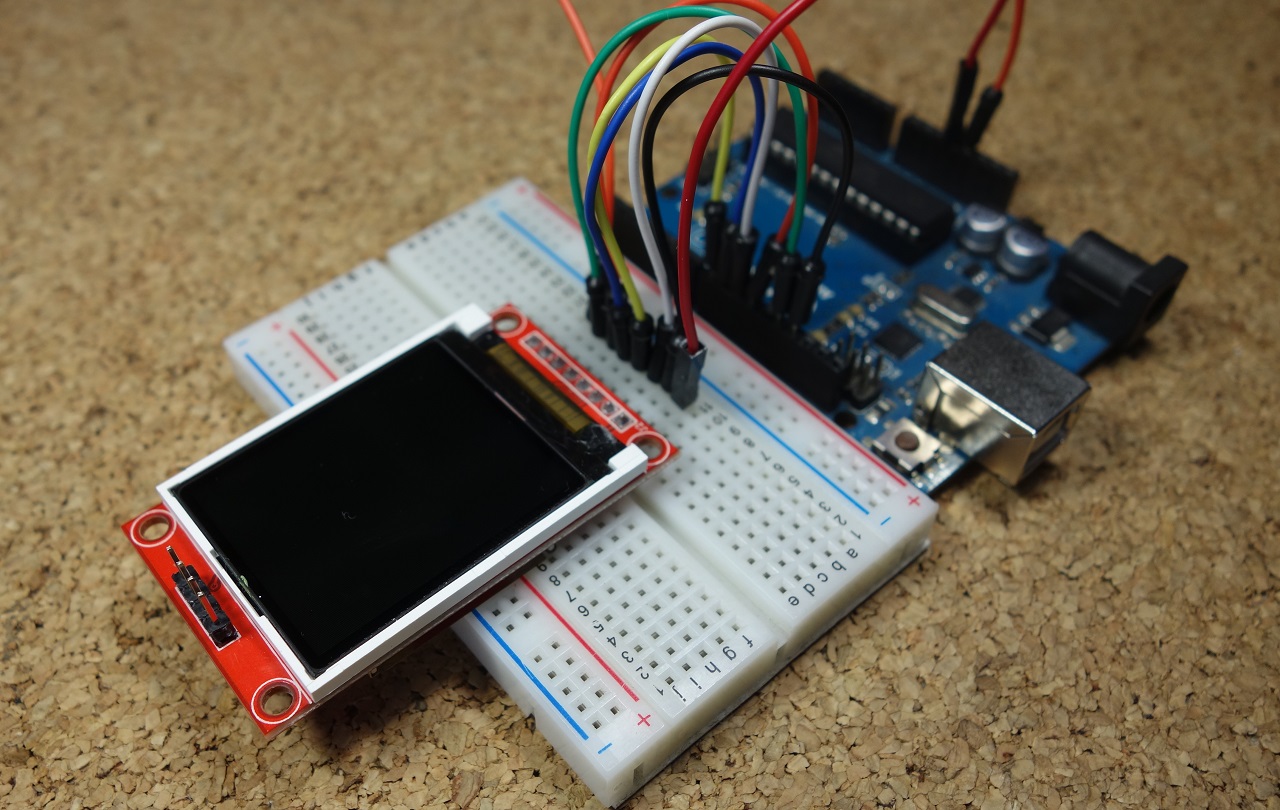

I found the TFT screen and Uno on Banggood.com about a month ago, and over the weekend I was messing with the pair and found the tftbmp draw code in the demo. I extended it with the ability to read any BMP file on the SD card, so all you do is put your bitmaps on the SD card and plug it in. Having to add/edit/recompile/reload the Uno every time is BS. Here is my code:


I got a 7-inch TFT LCD display (800x480 pixels, capacitive touch screen) to use with an Arduino DUE. It's an ER-TFTM070-4V2.1 display that came with a shield for a DUE or Mega2560 and uses an SSD1963 controller. I got it working so that it ran the examples in the UTFT library, displaying sine waves, moire patterns, etc. I found some files of UTFT fonts and started putting together an interface for a medical ventilator; it now displays the major parameters using three different fonts simultaneously, and looks cool so far.

The Arduino DUE runs on 3.3V, of course, and has a voltage regulator on board so I can feed it 9VDC. It runs well with that and the display looks as good as I can make it (which isn't saying much, my artistic talents are mediocre at best).

I'd like to have it display a small picture (150x100 pixels) in one part of the display. The picture is available in any of several formats (BMP, GIF, PNG, JPG, etc.).


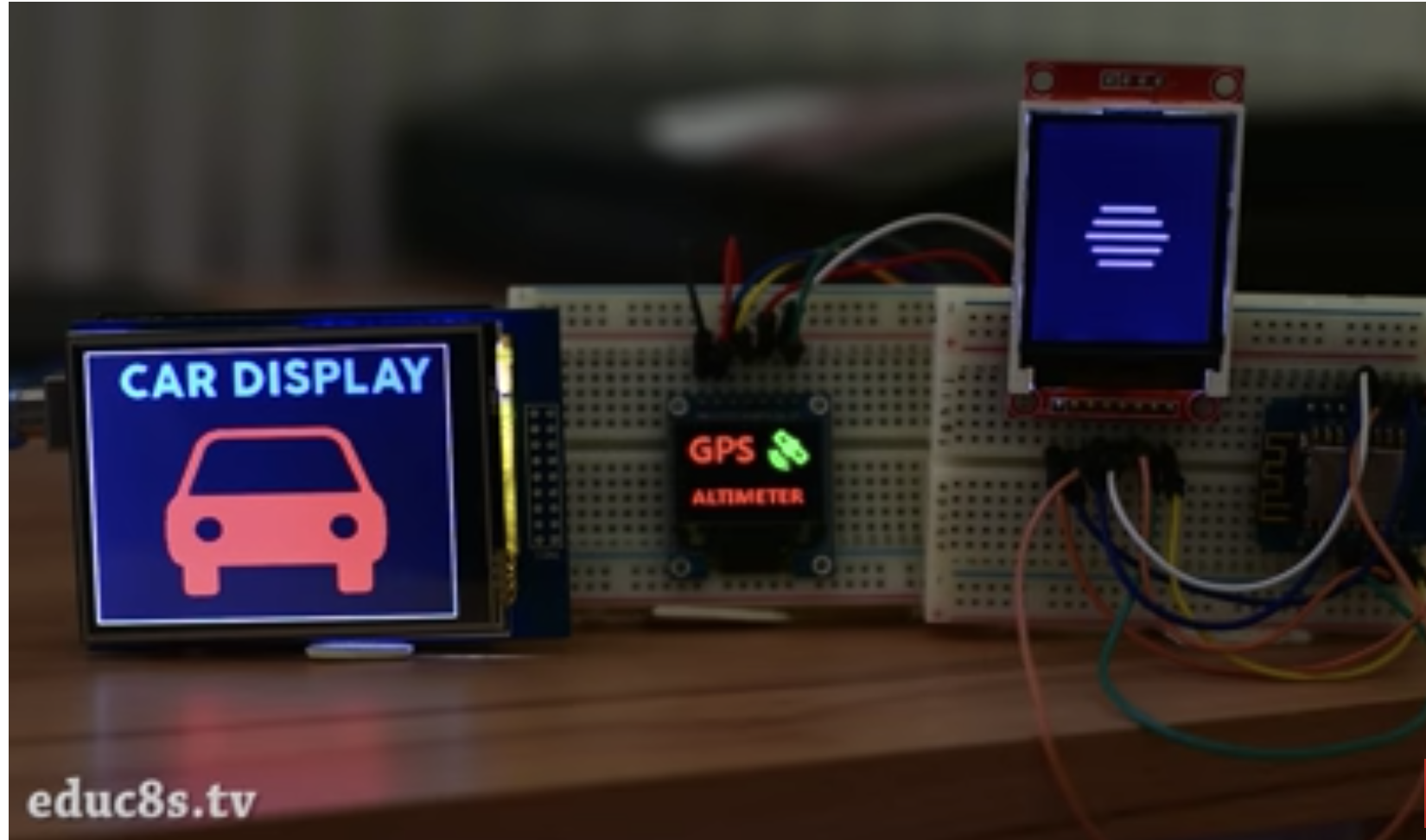

In this article, you will learn how to use TFT LCDs with Arduino boards. Everything from basic commands to professional designs and techniques is explained here.

In electronics projects, creating an interface between the user and the system is very important. This interface can be created by displaying useful data and a menu, and by making the system easy to access. A beautiful design is also very important.

Several components can achieve this: LEDs, 7-segment displays, character and graphic displays, and full-color TFT LCDs. The right component for your project depends on the amount of data to be displayed, the type of user interaction, and the processor capacity.

TFT LCD is a variant of a liquid-crystal display (LCD) that uses thin-film-transistor (TFT) technology to improve image qualities such as addressability and contrast. A TFT LCD is an active matrix LCD, in contrast to passive matrix LCDs or simple, direct-driven LCDs with a few segments.

In Arduino-based projects, the processor frequency is low. So it is not possible to display complex, high definition images and high-speed motions. Therefore, full-color TFT LCDs can only be used to display simple data and commands.

In this article, we have used libraries and advanced techniques to display data, charts, menus, etc. with a professional design. This can move your project presentation to a higher level.

The size of the display affects your project parameters. A bigger display is not always better. If you want to display high-resolution images and signs, you should choose a large display with a higher resolution. But it decreases the speed of your processing, needs more space, and also needs more current to run.

After choosing the right display, it's time to choose the right controller. If you want to display characters, text, numbers, and static images and the speed of the display is not important, the ATmega328 Arduino boards (such as the Arduino UNO) are a proper choice. If your code is large, the UNO may not be enough, and you can use the Arduino Mega2560 instead. And if you want to show high-resolution images and high-speed motion, you should use an ARM-core Arduino board such as the Arduino DUE.

In electronics/computer hardware a display driver is usually a semiconductor integrated circuit (but may alternatively comprise a state machine made of discrete logic and other components) which provides an interface function between a microprocessor, microcontroller, ASIC or general-purpose peripheral interface and a particular type of display device, e.g. LCD, LED, OLED, ePaper, CRT, Vacuum fluorescent or Nixie.

The display driver will typically accept commands and data using an industry-standard general-purpose serial or parallel interface, such as TTL, CMOS, RS232, SPI, I2C, etc. and generate signals with suitable voltage, current, timing and demultiplexing to make the display show the desired text or image.

LCD manufacturers use different drivers in their products. Some of them are popular and some are obscure. To run your display easily, you should use an Arduino LCD library and add it to your code; otherwise, running the display may be very difficult. There are many free libraries on the internet, but the important point is their compatibility with the LCD's driver: the driver of your LCD must be supported by your library. In this article, we use the Adafruit GFX library and the MCUFRIEND KBV library and example code. You can download them from the following links.

You must add the library and then upload the code. If this is the first time you run an Arduino board, don't worry. Just follow these steps: go to www.arduino.cc/en/Main/Software and download the software for your OS, then install the IDE as instructed.

With these two functions, you can find out the resolution of the display. Just add them to the code and store their return values in uint16_t variables. Then print the values to the serial port with Serial.println();. First add Serial.begin(9600); in setup().

First, you should convert your image to hex code. Download the software from the following link. If you don't want to change the settings of the software, you must invert the colors of the image, mirror it horizontally, and rotate it 90 degrees counterclockwise. Now add it to the software and convert it. Open the exported file and copy the hex code to the Arduino IDE. x and y are the location of the image, and sx and sy are its size. You can change the color of the image in the last argument.

Upload your image and download the converted file that the UTFT libraries can process. Now copy the hex code to the Arduino IDE. x and y are the location of the image, and sx and sy are its size.

In this template, we converted a .jpg image to a .c file and added it to the code, wrote a string, and used the fade code to display it. Then we used the scroll code to move the screen left. Download the .h file and add it to the folder of the Arduino sketch.

In this template, we used the sin(); and cos(); functions to draw arcs with our desired thickness and displayed a number with the text printing function. Then we converted an image to hex code, added it to the code, and displayed the image with the bitmap function. Then we used the draw lines function to change the style of the image. Download the .h file and add it to the folder of the Arduino sketch.

In this template, we created a function that accepts numbers as input and displays them as a pie chart. We just use the draw arc and filled circle functions.
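The core of such a pie chart function is turning the input numbers into arc sweep angles. A minimal sketch of that step in plain C++ follows; the function name `sliceAngles` is hypothetical and is not taken from the template, and the drawing calls themselves are left out.

```cpp
#include <numeric>
#include <vector>

// Converts raw values into arc sweep angles (in degrees) that sum to 360.
// Hypothetical helper for illustration; not part of the original template.
std::vector<double> sliceAngles(const std::vector<double>& values) {
    const double total = std::accumulate(values.begin(), values.end(), 0.0);
    std::vector<double> angles;
    if (total <= 0.0) {
        return angles;  // nothing to draw
    }
    angles.reserve(values.size());
    for (double v : values) {
        angles.push_back(360.0 * v / total);
    }
    return angles;
}
```

Each slice would then be drawn as an arc that starts where the previous one ended and spans the returned angle.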

In this template, we added a converted image to the code and then used two arcs, one black and one white, to create the volume pointer. Download the .h file and add it to the folder of the Arduino sketch.

In this template, we added a converted image and used the arc and print functions to create this gauge. Download the .h file and add it to the folder of the Arduino sketch.

    while (a < b) {
      Serial.println(a);
      j = 80 * (sin(PI * a / 2000));
      i = 80 * (cos(PI * a / 2000));
      j2 = 50 * (sin(PI * a / 2000));
      i2 = 50 * (cos(PI * a / 2000));
      tft.drawLine(i2 + 235, j2 + 169, i + 235, j + 169, tft.color565(0, 255, 255));
      tft.fillRect(200, 153, 75, 33, 0x0000);
      tft.setTextSize(3);
      tft.setTextColor(0xffff);
      if ((a/20)>99)

    while (b < a) {
      j = 80 * (sin(PI * a / 2000));
      i = 80 * (cos(PI * a / 2000));
      j2 = 50 * (sin(PI * a / 2000));
      i2 = 50 * (cos(PI * a / 2000));
      tft.drawLine(i2 + 235, j2 + 169, i + 235, j + 169, tft.color565(0, 0, 0));
      tft.fillRect(200, 153, 75, 33, 0x0000);
      tft.setTextSize(3);
      tft.setTextColor(0xffff);
      if ((a/20)>99)
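The two loops compute the ends of a short radial line from the step counter a: one point at radius 80 and one at radius 50 around the centre (235, 169), with one step equal to PI / 2000 radians. The geometry can be isolated in plain C++ as a rough sketch. The names `Point` and `needlePoint` are hypothetical, and rounding with `std::lround` is a choice made here; the variable types in the original loop are not shown, so its rounding behaviour may differ.

```cpp
#include <cmath>

// Screen coordinates of one end of the needle segment.
struct Point {
    int x;
    int y;
};

// Centre of the gauge, taken from the drawLine() calls in the loop.
constexpr int kCenterX = 235;
constexpr int kCenterY = 169;
constexpr double kPi = 3.14159265358979323846;

// Returns the point at distance `radius` from the centre for step `a`.
// One step is PI / 2000 radians, as in the original expressions.
Point needlePoint(int a, int radius) {
    const double angle = kPi * a / 2000.0;
    return {kCenterX + static_cast<int>(std::lround(radius * std::cos(angle))),
            kCenterY + static_cast<int>(std::lround(radius * std::sin(angle)))};
}
```

Drawing the needle for a given step then amounts to one line from `needlePoint(a, 50)` to `needlePoint(a, 80)`; drawing the same line in black erases it, which is what the second loop does.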

In this template, we display simple images one after another very quickly with the bitmap function, so you can make your own animation with this trick. Download the .h file and add it to the folder of the Arduino sketch.

In this template, we just display some images with the RGBbitmap and bitmap functions. Just write the code for the touchscreen and use this template. Download the .h file and add it to the folder of the Arduino sketch.

The playback speed of all the GIF files has been edited: we made them faster or slower for better understanding. The actual speed of motion depends on the speed of your processor, the type of code, and the size and thickness of the elements in the code.

Voltage type: the input voltage is selectable between 5 V and 3.3 V. Because the TFT can only work at 3.3 V, a 5 V input on VIN must be stepped down to 3.3 V by the on-board voltage regulator IC. When the input voltage is 3.3 V, you need to short J2 with a zero-ohm resistor, which bypasses the voltage regulator IC and powers the module directly.

When most people hear the term "JPEG decoding," they will usually assume that it's something really difficult, something that requires lots of processing power and complicated mathematics, something that is impossible, or at least impractical, on relatively cheap and slow 8-bit microcontroller platforms like the Arduino. In this article, we'll learn how to take a JPEG photo using an Arduino-controlled camera, turn the photo into lots and lots of pixels, and transmit all of them via the serial port to our PC, or wherever we like!

Even though what is described above is entirely possible, it is worth mentioning why exactly we are going to all the trouble of decoding a JPEG photo. After all, there's an SD module listed in the hardware requirements above, and you might ask, "Can we just store the photo on the SD card as a photo.jpeg file?" Sure, that is actually an important part of the entire process, but try to look at this from a different perspective: what if we want to send that photo somewhere using a slow, somewhat unreliable connection? If we simply chopped up the JPEG photo into packets and sent them via a slow connection, we would risk that some of them might get corrupted, while others might get lost entirely. When that happens, we most likely won't be able to restore the original photo from the corrupted data.

However, when we decode the JPEG into a bitmap and then send the actual pixels, we risk nothing. If some of the data gets corrupted or lost during the transmission, we will still have an image, only with the corrupted data somehow discolored, misplaced, or simply missing. Granted, it's not the same picture we originally started with, but it still carries most of the original information and is still "human-readable". Now that we know why we're doing this, let's take a look at how we can approach this method.

Before we start decoding JPEG photos, we first have to take the photos. Our ultimate goal is to take a photo, store it on an SD card, and then send it somewhere, so let's start with a simple setup that will allow us to do this.

Since we need quite a bit of RAM to decode the photos, we'll be using an Arduino Mega. There's also an added bonus in the form of four separate hardware serial ports on the Mega, so we can use port Serial1 to communicate with the camera and port Serial to communicate with our PC.

You probably noticed there's a simple resistor voltage divider on the camera RX line. This is because the logic level of the VC0706 chip is 3.3 V (even though the supply voltage is 5 V), but the logic level of the Arduino Mega is 5 V. Here's some friendly advice: always use at least a voltage divider on the RX line when interfacing a 5 V Arduino with 3.3 V modules. It's much quicker than waiting until a new module arrives. The SD card reader is connected directly via the SPI interface.

Now that the hardware is set up, we need to get the code sorted out. Since the library for SD cards is already a part of the standard Arduino IDE installation, we can check the SD card off the list.

Now, the Arduino will take a picture every 10 seconds or so until we run out of space on the SD card. But since the photos are typically around 48 kB, and I'm currently using a 2 GB SD card, we have enough space for more than 43,000 photos. It seems reasonable to say that we don't need that many. But now that we have some photos taken, we can move on to the fun stuff: turning them from JPEG-compressed hard-to-manage gibberish into a simple array of pixels!

Before we start decoding, let's take a quick look at how exactly the picture data are stored inside a JPEG file. If you don't really care about this, please feel free to skip the next three paragraphs. If you actually know a thing or two about graphics and compression (unlike me), you could skip this part as well. The following text is simplified to an extent.

When we talk about storing any sort of picture data, there are two basic approaches: lossless or lossy compression. The difference between the two is clear: when an image is encoded using lossless compression, PNG for example, every pixel at the end of the process is exactly the same as when you started. This is great for things like computer graphics, but unfortunately, it comes at the cost of increased file size. On the other hand, with lossy compression like JPEG, we lose some details but the resulting file size is much smaller.

The way this is achieved in JPEG can be somewhat challenging to grasp since it involves a little something called “discrete cosine transformation”, but the main principle is actually pretty simple. First, the picture is converted from RGB color space into YCbCr. We all know RGB color space – it stores colors as values of red (R), green (G) and blue (B). YCbCr is quite different – it uses luminance (Y – basically the original image in grayscale), blue-difference chroma component (Cb – “blueness” of the picture) and red-difference chroma component (Cr – “redness” of the picture).

The way JPEG achieves the reduction in file size is actually closely related to the way human eyes process colors. Take a look at the three pictures of the Y, Cb and Cr components in the above picture. Which one looks more like the original picture? That's right, the grayscale one! This is because the human eye is much more sensitive to luminance than to the other two components. JPEG compression uses this in a very clever way that allows it to reduce the amount of information in the Cb and Cr components while keeping the original Y component. This leads to a picture that is much smaller than the original file, and because most of the discarded information was in the components human eyes aren't too sensitive to, you can barely notice the difference between a compressed picture and an uncompressed one.
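To make the color-space step concrete, here is a small sketch of the RGB to YCbCr conversion in plain C++. It uses the full-range coefficients commonly used with JPEG (the JFIF convention); the names `YCbCr`, `clampToByte` and `rgbToYCbCr` are made up for this illustration and do not come from any library mentioned in the article.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// One pixel in the YCbCr color space, 8 bits per component.
struct YCbCr {
    std::uint8_t y;
    std::uint8_t cb;
    std::uint8_t cr;
};

// Rounds to the nearest integer and limits the result to 0..255.
static std::uint8_t clampToByte(double v) {
    return static_cast<std::uint8_t>(std::min(255L, std::max(0L, std::lround(v))));
}

// Full-range RGB -> YCbCr conversion with the usual JFIF coefficients.
YCbCr rgbToYCbCr(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    return {clampToByte(0.299 * r + 0.587 * g + 0.114 * b),
            clampToByte(128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b),
            clampToByte(128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b)};
}
```

Note that any gray pixel (equal R, G and B) ends up with Cb and Cr at the neutral value 128, which is why the Y component alone looks like a grayscale copy of the picture.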

Now let's run code that does the actual magic of turning a JPEG into an array of pixels. Fortunately, there is a library that does exactly that: Bodmer's JPEGDecoder (available on GitHub), which is based on the excellent picojpeg library by Rich Geldreich (also on GitHub). Even though JPEGDecoder was originally written to display images on a TFT display, with a few minor tweaks it will work just fine for us.

Using the library is fairly simple: we give it the JPEG file, and the library will start generating arrays of pixels, so-called Minimum Coded Units, or MCUs for short. Here, the MCU is a block of 16 by 8 pixels. The functions in the library return the color of each pixel as a 16-bit value: the upper 5 bits are the red value, the middle 6 are green, and the lower 5 are blue. Now we can send these values over any sort of communication channel we like. I'm going to use the serial port so that we can easily receive the data later. The following Arduino sketch decodes an image, then sends the 16-bit RGB value for each pixel in the MCU and repeats this for all the MCUs in the image file.
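The 16-bit layout described above (5 bits red, 6 bits green, 5 bits blue) can be unpacked on the receiving side with a few shifts and masks. The following is a minimal sketch in plain C++; `Rgb888` and `unpackRgb565` are hypothetical names, and expanding to 8 bits by replicating the high bits is one common choice, not something prescribed by the library.

```cpp
#include <cstdint>

// One pixel with 8 bits per color channel.
struct Rgb888 {
    std::uint8_t r;
    std::uint8_t g;
    std::uint8_t b;
};

// Splits a 16-bit RGB565 pixel into 8-bit red, green and blue values.
Rgb888 unpackRgb565(std::uint16_t pixel) {
    const std::uint8_t r5 = (pixel >> 11) & 0x1F;  // upper 5 bits
    const std::uint8_t g6 = (pixel >> 5) & 0x3F;   // middle 6 bits
    const std::uint8_t b5 = pixel & 0x1F;          // lower 5 bits
    // Replicate the high bits into the low bits so full scale maps to 255.
    return {static_cast<std::uint8_t>((r5 << 3) | (r5 >> 2)),
            static_cast<std::uint8_t>((g6 << 2) | (g6 >> 4)),
            static_cast<std::uint8_t>((b5 << 3) | (b5 >> 2))};
}
```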

Header packet: This packet starts with the string "$ITHDR" and contains basic information about the image we will be sending: height and width in pixels, the number of MCUs per row and per column, and finally the original filename. For each image we want to send, we will send one header packet.
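As an illustration of what goes into such a header, the sketch below computes the MCU counts and assembles a header line in plain C++. Only the "$ITHDR" prefix and the list of fields come from the description above; the comma separators, the field order, and the function names `mcuCount` and `buildHeader` are assumptions made for this example.

```cpp
#include <string>

// Number of MCUs needed to cover `pixels`, rounding up for partial blocks.
int mcuCount(int pixels, int mcuSize) {
    return (pixels + mcuSize - 1) / mcuSize;
}

// Builds a header line for one image.
// ASSUMPTION: comma-separated fields in this order; the real packet layout
// is not specified in the text beyond the "$ITHDR" prefix and field list.
std::string buildHeader(int width, int height, int mcuWidth, int mcuHeight,
                        const std::string& filename) {
    return "$ITHDR," + std::to_string(width) + "," + std::to_string(height) +
           "," + std::to_string(mcuCount(width, mcuWidth)) +
           "," + std::to_string(mcuCount(height, mcuHeight)) +
           "," + filename;
}
```

For a 640 by 480 image with 16 by 8 MCUs this gives 40 MCUs per row and 60 per column.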

Now we have a code that will decode and send picture files, but there’s still one core feature missing: right now, there’s nothing listening for those data at the other end. This means it’s time to start up Processing again!

I covered a little bit of Processing in Arduino Hexapod PART 3: REMOTE CONTROL to write an app that allowed us to easily control the hexapod. For a quick refresher: Processing is a Java-based language that is primarily focused on drawing stuff. This makes it perfect for what we need to do, which is displaying pixels! This program does just that.

When you run this program with the Arduino connected, and then press any key on your keyboard, you will (hopefully) see the dull, boring gray background being gradually replaced by the image that was originally stored on the SD card. And since the replacement is done pixel by pixel, the entire process has a sort of old-school, dial-up-modem style of loading the image!

The original image is 640 pixels wide and 480 pixels tall, for a total of 307,200 pixels. Each of these pixels is represented by a 2-byte color value, which makes a total of 614,400 bytes, or 600 kilobytes, to transfer. This leaves us with a final speed of about 10 kB/s, so the whole transfer takes roughly a minute. That's not that terrible for a "protocol" that we just made up on the go, is it? It also shows you why image compression is so useful. The original JPEG file was only around 48 kB, while the decoded bitmap takes 600 kB. If we were to transfer the JPEG file, we would be done with it in less than 5 seconds, even when using our extremely simple "protocol." Of course, we most likely wouldn't be able to retrieve any data if the transfer failed, which is something that cannot happen now.
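The arithmetic behind these figures is easy to reproduce. The helpers below are a small sketch in plain C++ with made-up names (`bitmapBytes`, `transferSeconds`); they follow the text in treating one kilobyte as 1024 bytes and in using 10 kB/s as the transfer speed.

```cpp
#include <cstdint>

// Size in bytes of an uncompressed bitmap.
std::uint32_t bitmapBytes(std::uint32_t width, std::uint32_t height,
                          std::uint32_t bytesPerPixel) {
    return width * height * bytesPerPixel;
}

// Time in seconds needed to move `kilobytes` at `kilobytesPerSecond`.
double transferSeconds(double kilobytes, double kilobytesPerSecond) {
    return kilobytes / kilobytesPerSecond;
}
```

With these, `bitmapBytes(640, 480, 2)` gives 614,400 bytes (600 kB), `transferSeconds(600.0, 10.0)` gives 60 seconds for the decoded bitmap, and `transferSeconds(48.0, 10.0)` stays under 5 seconds for the JPEG file.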

Finally, we have proven what this article started with: processing images on Arduino is possible and can even be useful in certain situations. We can now snap pictures using a serial camera, decode them, send them over a serial port and then receive them on the other side! Consider this article your short intro to image processing on Arduino.

As usual, there are a lot of things that can be improved. One major addition could be to encrypt our messages using AES, which is fairly easy to implement, even on Arduino. Security is usually dangerously overlooked on Arduino, so we might focus a bit more on that in another project.

Whatever you are currently celebrating, Christmas, Hanukkah, Jul, Samhain, Festivus, or any other end-of-the-civil-year festivities, I wish you a good time! This December 25th edition of the Nextion Sunday Blog won't be loaded with complex mathematical theory or hyper-efficient but difficult to understand code snippets. It's about news and information. Please read below...

After two theory-loaded blog posts about handling data array-like in strings (Strings, arrays, and the less known sp(lit)str(ing) function and Strings & arrays - continued), which you are highly recommended to read before continuing here if you haven't already, it's high time to see how things work in practice! We'll use a string variable as a lookup table containing the data of one single wave period and add this repeatedly to a waveform component until it's full.

A few weeks ago, I wrote this article about using a text variable as an array, either an array of strings or an array of numbers, using the covx conversion function in addition for the latter, to extract single elements with the help of the spstr function. It's a convenient and almost "one fits all" solution for most use cases, and many of the demo projects or the sample code attached to the Nextion Sunday Blog articles made use of it, sometimes even without mentioning it explicitly since it's almost self-explanatory. Then, I got a message from a reader, writing: "... Why then didn't you use it for the combined sine / cosine lookup table in the flicker free turbo gauge project?"

105 editions of the Nextion Sunday Blog in a little over two years: time to look back and forth at the same time. Was all the stuff I wrote about interesting for my readers? Is it possible at all to satisfy everybody (hobbyists, makers, and professionals) at the same time? Are people (re-)using the many, many HMI demo projects and code snippets? Is anybody interested in the explanation of all the underlying basics, like the algorithms for calculating square roots and trigonometric functions with Nextion's purely integer-based language? Are optimized code snippets which save a few milliseconds here and there helpful to other developers?

Looking through the different Nextion user groups on social networks, the Nextion user forum, and a few not so official but Nextion-related forums can be surprising. Sometimes, Nextion newbies ask questions or have issues although the required function is well documented (in a condensed manner for the experienced developer, I admit) on the Nextion Instruction Set page, accessible through the menu of this website. On top of that, there is for sure one of my more than 100 Sunday blog articles which deals not only with that function, but often goes even beyond the usual usage of it. Apparently, I should sometimes move away from always trying to push the limits and listen to the "back to the roots!" calls by my potential readers...

Do you remember the (almost) full-screen, flicker-free and ultra-rapid gauge we designed in June? And this without using the built-in Gauge component? If not, it's time to read this article first, to understand today's improvements. The June 2022 version does its job perfectly: the needle movement is quick and smooth, and other components can be added close to the outer circle without flickering, since there is no background which constantly needs to be redrawn. But there was a minor and purely aesthetic weak point: the needle was a 1px thin line, sometimes difficult to see. Thus, a short time after publishing, some readers contacted me and asked if there was a way to make the needle thicker, at least 2 pixels.

The display is driven by a ST7735R controller ( ST7735R-specifications.pdf (2.1 MB) ), can be used in a “slow” and a “fast” write mode, and is 3.3V/5V compatible.

Adafruit_ST7735 is the library we need to pair with the graphics library for hardware specific functions of the ST7735 TFT Display/SD-Card controller.

In the file dialog, select the downloaded ZIP file and the library will be installed automatically (requires Arduino 1.0.5 or newer). Restarting your Arduino software is recommended, as it will make the examples visible in the examples menu.

The easiest way to remedy this is by extracting the GitHub ZIP file. Place the files in a directory with the proper library name (Adafruit_GFX, Adafruit_ST7735 or SD) and zip the folder (Adafruit_GFX.zip, Adafruit_ST7735.zip, SD.zip). Now the Arduino software can read and install the library automatically for you.

Basically, besides the obvious backlight, we tell the controller first what we are talking to with the CS pins. CS(TFT) selects data to be for the Display, and CS(SD) to set data for the SD-Card. Data is written to the selected device through SDA (display) or MOSI (SD-Card). Data is read from the SD-Card through MISO.

So when using both display and SD-Card, and utilizing the Adafruit libraries with a SainSmart display, you will need to connect SDA to MOSI, and SCL to SCLK.

As mentioned before, the display has a SLOW and a FAST mode, each serving its own purpose. Do some experiments with both speeds to determine which one works for your application. Of course, which Arduino pins you need for other purposes plays a role in this decision as well.

Note: Adafruit displays can have different colored tabs on the transparent label on your display. You might need to adapt your code if your display shows a slight, odd shift. I noticed that my SainSmart display (green tab) behaves best with the code for the black tab; try them out to see which one works best for yours.

Low Speed display is about 1/5 of the speed of High Speed display, which makes it only suitable for particular purposes, but at least the SPI pins of the Arduino are available.

After connecting the display in the Low Speed configuration, you can load the first example from the Arduino software ("File" > "Examples" > "Adafruit_ST7735"; I recommend starting with "graphicstest").

Below are the code parts for a LOW SPEED display (pay attention to the highlighted lines). Keep in mind that the names of the pins in the code are based on the Adafruit display:

The SD-Card needs to be FAT-16 or FAT-32 formatted, single partition, and the BMP file needs to be placed in the root (ie. not in a directory or anything like that).

You can name your BMP file “parrot.bmp” or modify the Sketch to have the proper filename (in “spitftbitmap” line 70, and in “soft_spitftbitmap” line 74).
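Before pixels can be drawn, a sketch has to read a few fields from the start of the BMP file. The following plain C++ example shows those fields being read from a byte buffer using the standard BMP layout (14-byte file header followed by a 40-byte BITMAPINFOHEADER). The names `BmpInfo` and `parseBmpHeader` are hypothetical, and accepting only 24-bit uncompressed files is an assumption about what a simple loader like the example sketches can handle.

```cpp
#include <cstddef>
#include <cstdint>

// Fields of interest from a BMP file header.
struct BmpInfo {
    bool valid;                 // true for 24-bit, uncompressed files
    std::uint32_t pixelOffset;  // where the pixel data starts in the file
    std::int32_t width;
    std::int32_t height;        // positive means rows are stored bottom-up
    std::uint16_t bitsPerPixel;
};

// BMP stores multi-byte numbers in little-endian order.
static std::uint16_t readLe16(const std::uint8_t* p) {
    return static_cast<std::uint16_t>(p[0] | (p[1] << 8));
}

static std::uint32_t readLe32(const std::uint8_t* p) {
    return static_cast<std::uint32_t>(p[0]) |
           (static_cast<std::uint32_t>(p[1]) << 8) |
           (static_cast<std::uint32_t>(p[2]) << 16) |
           (static_cast<std::uint32_t>(p[3]) << 24);
}

// Parses the first 54 bytes of a BMP file held in `data`.
BmpInfo parseBmpHeader(const std::uint8_t* data, std::size_t size) {
    BmpInfo info{false, 0, 0, 0, 0};
    if (size < 54 || data[0] != 'B' || data[1] != 'M') {
        return info;  // too short or missing the "BM" signature
    }
    info.pixelOffset = readLe32(data + 10);
    info.width = static_cast<std::int32_t>(readLe32(data + 18));
    info.height = static_cast<std::int32_t>(readLe32(data + 22));
    info.bitsPerPixel = readLe16(data + 28);
    const std::uint32_t compression = readLe32(data + 30);
    info.valid = (info.bitsPerPixel == 24 && compression == 0);
    return info;
}
```

If your image does not show up, checking that it was saved as a 24-bit uncompressed BMP is a reasonable first step.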

    #define SD_CS    4   // Chip select line for SD card
    #define TFT_CS   10  // Chip select line for TFT display
    #define TFT_DC   9   // Data/command line for TFT
    #define TFT_RST  8   // Reset line for TFT (or connect to +5V)

As you have seen before the Adafruit_GFX library (supported by the Adafruit_ST7735 library) makes this easy for us – More information can be found at the GFX Reference page.

To use such a color in your Arduino sketch: the first two characters of the hex code represent RED, the next two represent GREEN, and the last two represent BLUE. Add '0x' in front of each of these hex values when using them ('0x' designates a hexadecimal value).
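Since the display works with 16-bit colors, the three 8-bit values have to be packed into a single 5-6-5 value at some point. The sketch below shows that packing in plain C++ so it can be tried on a PC. The names `color565` and `hexTo565` are local helpers written for this example; they mirror the usual RGB565 packing and are not a claim about the exact library API.

```cpp
#include <cstdint>

// Packs 8-bit red, green and blue into one 16-bit RGB565 value:
// 5 bits red, 6 bits green, 5 bits blue.
std::uint16_t color565(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    return static_cast<std::uint16_t>(((r & 0xF8) << 8) |
                                      ((g & 0xFC) << 3) |
                                      (b >> 3));
}

// Splits a 0xRRGGBB value into its three bytes and packs them as RGB565.
std::uint16_t hexTo565(std::uint32_t rrggbb) {
    return color565(static_cast<std::uint8_t>((rrggbb >> 16) & 0xFF),
                    static_cast<std::uint8_t>((rrggbb >> 8) & 0xFF),
                    static_cast<std::uint8_t>(rrggbb & 0xFF));
}
```

For example, `hexTo565(0xFF0000)` yields `0xF800`, the 16-bit value for pure red.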

This function is used to indicate what corner of your display is considered (0,0), which in essence rotates the coordinate system 0, 90, 180 or 270 degrees.

However, if your application needs your screen sideways, then you’d want to rotate the screen 90 degrees, effectively changing the display from a 128×160 pixel (WxH) screen to a 160×128 pixel display. Valid values are: 0 (0 degrees), 1 (90 degrees), 2 (180 degrees) and 3 (270 degrees).
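The effect of the rotation value on the usable width and height can be written down in a few lines. This is a plain C++ sketch with hypothetical names (`Size`, `rotatedSize`); it models only the dimension swap, not the change of the (0,0) corner.

```cpp
// Width and height of the drawing area in pixels.
struct Size {
    int width;
    int height;
};

// Returns the logical screen size for a rotation value of 0..3.
// Odd values (90 and 270 degrees) swap width and height.
Size rotatedSize(Size native, int rotation) {
    if ((rotation & 3) % 2 == 1) {
        return {native.height, native.width};
    }
    return native;
}
```

With a native size of 128×160, rotation values 1 and 3 give 160×128, while 0 and 2 keep 128×160.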

Based on these functions, I created a little demo to show what they do. Either download the file or just copy the code and paste it into an empty Arduino sketch.

tft.print("Lorem ipsum dolor sit amet, consectetur adipiscing elit. Curabitur adipiscing ante sed nibh tincidunt feugiat. Maecenas enim massa, fringilla sed malesuada et, malesuada sit amet turpis. Sed porttitor neque ut ante pretium vitae malesuada nunc bibendum. Nullam aliquet ultrices massa eu hendrerit. Ut sed nisi lorem. In vestibulum purus a tortor imperdiet posuere. ");


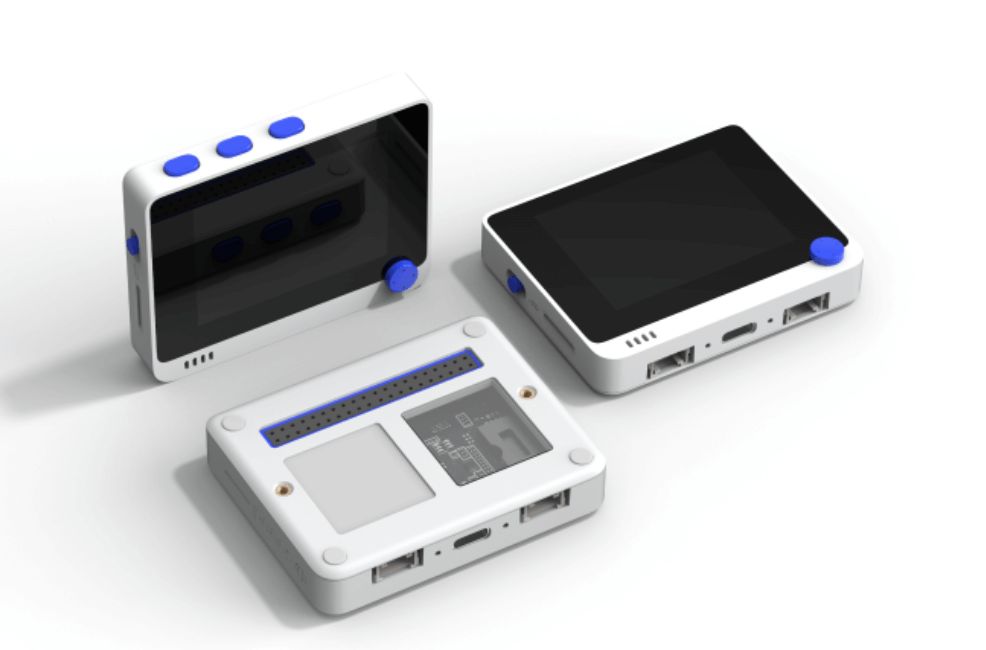

Through the UART / I2C port, HuskyLens can connect to Arduino and micro:bit to help you make very creative projects without playing with complex algorithms.

Short press the "learning button" to learn the specified object; long press the "learning button" to continuously learn the specified object from different angles and distances; if HuskyLens has learned the object before, short press the "learning button" to make it forget.

Before using this product, it is strongly recommended to upgrade the HuskyLens firmware to the latest version to get the latest functions and the most stable experience. If your HuskyLens already has the latest firmware, no update is required. We recommend uploading the firmware on Windows 10 using the HuskyLens Uploader, since it has a GUI and is easy to use.

Run the HuskyLens Uploader, a small black cmd window will pop up first, and after a while, the interface window will appear, then click the "Select File" button to load the firmware.

Click the "Upload" button. Wait about 5 minutes to complete the uploading. The firmware file is large, so it may take a little bit time. After the uploading is completed, the prompt text "Uploading" will disappear and the HuskyLens screen will light up. Please do not close the interface window and the small black cmd window during uploading.

When you don"t operate the Huskylens for a period of time, the menu on the screen will automatically hide. This duration time can be adjusted from 1-100 seconds. The default value is 10 seconds.

2. Face Learning: Point the “+” symbol at a face, short press the "learning button" to learn the face. If the same face is detected by HuskyLens, a blue frame with words "Face: ID1" will be displayed on the screen,which indicates that HuskyLens has learned the face and can recognize it now.

Keep pressing the “learning button”, point HuskyLens" "+" symbol at different angles of the face. During this process, a yellow frame with words "Face: ID1" will be displayed on the screen, which indicates HuskyLens is learning the face. Please point the yellow frame at different angles of the same person"s face, such as front face and side face (or multiple photos of the same person), to enter all angles of this person"s face.

Then you can release the "learning button" to finish the learning. When HuskyLens detects the learned face, a blue frame with the words "Face: ID1" will be displayed. HuskyLens can now recognize the face from different angles.

If there is no “+” symbol in the center of the screen, it means that HuskyLens has already learned a face in the current function and is now detecting it. If you want HuskyLens to learn a new face, you have to make it forget the learned face first.

The learned face information will be automatically saved. When HuskyLens detects the learned face, this face will be selected by a blue frame and identified as face: ID1. The size of the frame will change with the size of the face, and the face will be tracked automatically.

If there is no “+” symbol in the center of the screen, it means that the HuskyLens has already learned the face in the current function. If you want to recognize another face, or re-enter face information, you need to delete the current face information.

When HuskyLens is in the face recognition mode, short press the "learning button" and the screen will display "click again to forget". Before the countdown ends, short press the "learning button" again to delete the learned face information. The yellow "+" symbol is then displayed, and you can let HuskyLens learn a new face.

Dial the function button until "Learn Multiple" is displayed, then short press the function button and dial to the right to turn on the "Learn Multiple" switch; the progress bar turns blue and the square icon on the progress bar moves to the right. Then short press the function button to confirm this parameter.

Point the “+” symbol at the face and long press the "learning button" to learn the face of the first person. Then release the "learning button". A blue frame with the words "Face: ID1" will be displayed if HuskyLens detects the same face, and the message "Click again to continue! Click other button to finish" will be displayed. If you want to learn the face of another person, short press the "learning button" before the countdown ends. If not, short press the "function button" before the countdown ends, or do not press any button and let the countdown end.

In this chapter, we will learn the next face continuously. So we need to short press the "learning button" before the countdown ends. Then we can let HuskyLens learn the face of the second person. The same as the steps to recognize the first face, point the “+” symbol at the second face, long press the "learning button" to learn the face of the second person. Then release the "learning button", a blue frame with words "Face: ID2" will be displayed if HuskyLens detects the same face.

If there is no “+” symbol in the center of the screen before learning, it means that HuskyLens has already learned a face and is now detecting faces. If you want HuskyLens to learn a new face, you need to make it forget the learned face first.

The learned face information will be saved automatically. When HuskyLens detects a learned face among multiple faces, the learned face will be selected with a frame and identified by the message face: IDx. For example, when HuskyLens detects the learned face of the first person, it will be selected with a blue frame and identified as face: ID1; when HuskyLens detects the learned face of the second person, it will be selected with a yellow frame and identified as face: ID2; and so on.

Point HuskyLens at the target object and adjust the distance until the object is inside the yellow frame in the center of the screen. Then long press the "learning button" to learn the object from various angles and distances. During the learning process, the yellow frame with the words "Learning: ID1" will be displayed on the screen.

When tracking the object, the yellow words “Learning: ID1” will be displayed, indicating that HuskyLens is tracking the object while learning. This setting improves the object tracking ability.

Object recognition cannot distinguish between objects of the same type. For example, it can recognize that something is a cat, but it cannot recognize what kind of cat it is. This differs from face recognition, where all people are one type but individual faces can be distinguished.

Dial the function button until "Learn Multiple" is displayed, then short press the function button and dial to the right to turn on the "Learn Multiple" switch; the progress bar turns blue and the square icon on the progress bar moves to the right. Then short press the function button to confirm this parameter.

Point the “+” symbol at the object, then short press the “learning button”. The color of the frame changes from white to blue, and the name of the object and its ID (ID1) appear on the screen, together with the message "Click again to continue! Click other button to finish". If you want to mark the next object, short press the "learning button" before the countdown ends. If not, short press the "function button" before the countdown ends, or do not press any button and let the countdown end.

When encountering the learned objects, they will be selected by the color frame, and the name and ID number will be displayed. When encountering new ones, the selection frame is white.

The ID number is related to the order of marking objects. For example, if a dog is marked for the first time and a cat is marked for the second time, when the dog is recognized, the words "dog: ID1" will be displayed on the screen; and when the cat is recognized, the words "cat: ID2" will be displayed on the screen.
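
The ordering rule above can be mirrored on a host computer. The following is a minimal Python sketch, not HuskyLens firmware or library code: `assign_ids` and `screen_text` are hypothetical helpers that number labels in the order they were marked, assuming each label is marked only once.

```python
def assign_ids(marked_in_order):
    """Map each label to an ID that follows the marking order (first is ID1).

    Hypothetical helper for illustration; assumes every label appears once.
    """
    return {name: index for index, name in enumerate(marked_in_order, start=1)}


def screen_text(name, ids):
    """Build the text shown for a recognized object, e.g. 'dog: ID1'."""
    return f"{name}: ID{ids[name]}"


ids = assign_ids(["dog", "cat"])
print(screen_text("dog", ids))  # dog: ID1
print(screen_text("cat", ids))  # cat: ID2
```

Marking the cat first would swap the two IDs, which is why the marking order matters when a program later reacts to a specific ID.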

Dial the function button until "Learn Multiple" is displayed, then short press the function button and dial to the right to turn on the "Learn Multiple" switch; the progress bar turns blue and the square icon on the progress bar moves to the right. Then short press the function button to confirm this parameter.

Point the “+” symbol at the first color block, and long press the “learning button”. A yellow frame will be displayed on the screen, indicating that HuskyLens is learning the color. At this time, adjust the distance and angle between HuskyLens and the color block, so that HuskyLens learns the color block at various distances and angles. Then release the "learning button" to complete learning the first color block. The message "Click again to continue! Click other button to finish" will be displayed. If you want to learn other color blocks, short press the "learning button" before the countdown ends. If not, short press the "function button" before the countdown ends, or do not press any button and let the countdown end.

When encountering the same or similar color blocks, color frames with IDs will be automatically displayed on the screen, and the size of each frame is the same as the size of its color block.

The ID number is related to the order of learned color. For example, if a yellow block is marked for the first time and a green block is marked for the second time, when the yellow block is recognized, the words "Color: ID1" will be displayed on the screen, and when the green block is recognized, the words "Color: ID2" will be displayed on the screen.

Point the "+" symbol at the target balloon, then press the "learning button" to learn the color of the balloon, and release the "learning button" to complete the learning. You can see that the color of the balloon is recognized, but balloons with similar colors may not be identified.

When recognizing color blocks of similar colors, the recognition accuracy can be adjusted by setting the threshold. For example, in the figure above, some yellow balloons with similar colors are not recognized, so the threshold may be set too high. In the parameter setting of the color recognition function, there is the "Block Sized Threshold" parameter. The lower the value of this parameter, the lower the accuracy, but the more similar color blocks can be recognized. As shown in the figure below, when the threshold is 20, only one yellow ball can be recognized, and when the threshold is 0, all three yellow balls can be recognized. Please adjust the threshold according to the actual effect, so that the recognition accuracy is within your acceptable range.

Dial the function button until "Learn Multiple" is displayed, then short press the function button and dial to the right to turn on the "Learn Multiple" switch; the progress bar turns blue and the square icon on the progress bar moves to the right. Then short press the function button to confirm this parameter.

Point the “+” symbol at the first tag, and press the “learning button”. A yellow frame with the words "Tag:ID1" will be displayed on the screen, indicating that HuskyLens is learning the tag. Then release the "learning button" to complete learning the first tag. The message "Click again to continue! Click other button to finish" will be displayed. If you want to learn other tags, short press the "learning button" before the countdown ends. If not, short press the "function button" before the countdown ends, or do not press any button and let the countdown end.

When encountering the learned tag, some color frames with IDs will be automatically displayed on the screen. The size of the frames changes with the size of the tags, and the frames automatically track these tags.

This function can learn multiple photos of different objects, and then use the built-in machine learning algorithm for training. After the training is completed, when the learned objects appear again in front of the HuskyLens camera, HuskyLens can recognize them and display their ID numbers. The more photos of the same object HuskyLens learns, the more accurate the recognition can be.

Dial the function button until "Learn Multiple" is displayed, then short press the function button and dial to the right to turn on the "Learn Multiple" switch; the progress bar turns blue and the square icon on the progress bar moves to the right. Then short press the function button to confirm this parameter.

Point the large frame at the first target object (the worker with a helmet on the left in the picture above), and long press the “learning button”. A yellow frame with the words "Learning XX/30 ID:1" will be displayed on the screen, indicating that HuskyLens is learning the object. Adjust the distance and angle so that HuskyLens learns the object at various distances and angles. Then release the "learning button" to complete learning the first object. The message "Click again to continue! Click other button to finish" will be displayed. If you want to learn other objects, short press the "learning button" before the countdown ends. If not, short press the "function button" before the countdown ends, or do not press any button and let the countdown end.

In this chapter, you need to continue to learn the next object (the worker without a helmet on the right in the above picture), so press the "learning button" before the countdown ends, and then point the large frame at the second target object, long press the "learning button" to complete the learning of the second object. And so on.

When HuskyLens encounters the learned object again, its ID number will be displayed on the screen. As shown in the figure below, when HuskyLens recognizes that the worker is wearing a helmet, the screen displays ID1, and if there is no helmet, it displays ID2.

Answer: No. In the object classification function, the position of the frame is fixed, and its x and y center coordinates on the screen remain unchanged, so it cannot give the relative position of the object on the screen. But you can learn different positions of objects as different IDs, and judge the position by ID. For example, in automatic vehicles, learn the ID 1, 2, and 3 as on the left, middle, and right sides of the road. By judging the ID, you can know the position of the automatic vehicles relative to the road.
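
The workaround in the answer above amounts to a lookup from ID to position. A minimal Python sketch follows, assuming IDs 1, 2 and 3 were learned with the vehicle on the left, middle and right of the road; `POSITION_BY_ID` and `position_from_id` are illustrative names, not part of any HuskyLens library.

```python
# Assumed mapping: each ID was learned with the vehicle at this road position.
POSITION_BY_ID = {1: "left", 2: "middle", 3: "right"}


def position_from_id(object_id):
    """Return the road position learned for this ID, or 'unknown'."""
    return POSITION_BY_ID.get(object_id, "unknown")


for object_id in (1, 2, 3, 4):
    print(object_id, position_from_id(object_id))
```

An ID outside the learned set falls back to "unknown", so the calling program can decide how to handle a view it was never taught.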

You can change the name that corresponds to an ID, for example: change “Face:ID1” to “Jack:ID1”, and “Color:ID1” to “Red:ID1”. This function is used to name a person or an object and to make the recognition result easier to read. All 6 algorithms support customizing the ID name except line tracking. Only English names are supported.
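
The renaming rule can be pictured as a small substitution step. The Python sketch below is only an illustration running on a host computer: `make_label` is a hypothetical helper, and treating "English names" as ASCII-only strings is an assumption, not a documented rule.

```python
def make_label(default_name, object_id, custom_names):
    """Return 'Name:IDn', using a custom name for the ID when one is set.

    custom_names maps an ID to its custom name (hypothetical structure).
    """
    custom = custom_names.get(object_id)
    if custom is not None and not custom.isascii():
        # Assumption: "English names only" is approximated as ASCII-only.
        raise ValueError("only English (ASCII) names are supported")
    name = custom if custom else default_name
    return f"{name}:ID{object_id}"


print(make_label("Face", 1, {1: "Jack"}))   # Jack:ID1
print(make_label("Color", 1, {1: "Red"}))   # Red:ID1
print(make_label("Face", 2, {1: "Jack"}))   # Face:ID2
```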

The onboard screen can be used to display texts anywhere on itself. It supports English characters, numbers and symbols. The recognition results and data from sensors can be directly displayed on the screen. All 7 algorithms support the customized text.

Like a digital camera, HUSKYLENS can take photos or screenshots, and save them on an SD card. With an onboard SD card slot on HUSKYLENS, you can just plug in the SD card and use it. The screenshots contain the texts, frames displayed on the screen, while the photos contain only the image.

Please format the SD card as FAT32. Taking and saving photos and screenshots takes some time, so it is recommended to leave at least 0.5 seconds between captures.
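
One way to respect the 0.5 second spacing from a controlling program is a simple throttle. The Python sketch below is an assumption-laden illustration: `CaptureThrottle` is a hypothetical class, and the actual capture command sent to HUSKYLENS is left out.

```python
import time


class CaptureThrottle:
    """Allow a capture only if enough time has passed since the last one."""

    def __init__(self, min_interval_s=0.5, clock=time.monotonic):
        self.min_interval_s = min_interval_s
        self._clock = clock          # injectable so the logic can be tested
        self._last_capture = None

    def try_capture(self):
        """Return True if a capture may be triggered now, else False."""
        now = self._clock()
        if (self._last_capture is not None
                and now - self._last_capture < self.min_interval_s):
            return False             # too soon, skip this request
        self._last_capture = now
        return True


throttle = CaptureThrottle()
if throttle.try_capture():
    print("trigger photo or screenshot here")
```

Rejected requests do not reset the timer, so a button held down still produces at most one capture per interval.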

Press button A of micro:bit or pull down the Arduino Pin A0, then HUSKYLENS will take a screenshot and save it in SD card. Take out the SD card and insert it into a computer, then you can view the screenshot taken by HUSKYLENS.

HUSKYLENS supports taking multiple objects learned from the same algorithm as one data model. The SD card can be used to save the model. The model can be reloaded even if all the data from the current algorithm is deleted, allowing HUSKYLENS to automatically learn the data in the model. By saving the model, HUSKYLENS can be used for multiple scenes in one algorithm. For example, in the object classification algorithm, learn rock-paper-scissors as one model and garbage classification as one model. Then, through model switching, the required functions can be quickly realized to avoid repeating learning.

This function requires an SD card, which needs to be formatted as FAT32. All 7 algorithms, each of which can save 5 models, support SD card saving and loading models.

1. Learn new objects: Let HUSKYLENS learn several new objects. For example: in the object classification function, learn several animals with different ID numbers in turn.

After the model is exported to the SD card, read the SD card with the computer, and you can see the model data file with suffix .conf in the "HUSKYLENS" folder. The file names saved by different algorithms are different. Shown as below:

Tip: According to the names of the files, we can know which algorithm function the exported model data file belongs to. The pictures learned in each model cannot be viewed.

3. Import model: When the model data file is already on the SD card, we can import it directly into HUSKYLENS. For example, in the object classification function, if the model data file of object classification is already on the SD card, just select "Import from SD Card" in the parameter setting interface of the secondary menu of the object classification function, and select any slot from 0 to 4 to load the model into HUSKYLENS.
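
The slot numbering described above (5 models per algorithm, numbered 0 to 4, across 7 algorithms) can be checked before a program asks for an import or export. This Python sketch is illustrative only; the constant and function names are hypothetical.

```python
ALGORITHM_COUNT = 7        # "All 7 algorithms" per the text
MODELS_PER_ALGORITHM = 5   # each algorithm can save 5 models (slots 0 to 4)


def is_valid_slot(slot):
    """Return True if slot is one of the model slots 0..4."""
    return isinstance(slot, int) and 0 <= slot < MODELS_PER_ALGORITHM


def total_model_capacity():
    """Number of models that can be stored across all algorithms."""
    return ALGORITHM_COUNT * MODELS_PER_ALGORITHM


print(is_valid_slot(4))          # True
print(is_valid_slot(5))          # False
print(total_model_capacity())    # 35
```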

In this way, micro:bit, Arduino, and other mainboards can be used to automatically trigger the saving or loading of model files. The following code block can be used to achieve this function.

In addition to manually pressing the "learn button" to learn the target object, learning can also be triggered programmatically. This function enables micro:bit, Arduino, and other mainboards to automatically control the learning of HUSKYLENS.

Demonstration: Press button A on the mainboard of micro:bit or pull down the Arduino Pin A0 to let HUSKYLENS learn the object once. The object’s ID is 1.

In object classification mode, aim HUSKYLENS at the following three images in turn. Press button A of micro:bit or pull down the Arduino Pin A0, then HUSKYLENS will learn these three images as objects:ID1 in turn. When HUSKYLENS recognizes any of these three images again, it displays object:ID1 on the screen, indicating that the three workers are wearing safety hats.

Here you can customize the name of ID1, such as safe:ID1. You can also customize the text on the screen, overlaying relevant information to indicate that the worker is wearing a safety hat. With this information, the recognition results are easy to understand.

Unzip the file, then copy the folder to the "libraries" folder of the Arduino IDE. Then check whether the folder name is "HUSKYLENS". If not, please rename it to "HUSKYLENS". Please note that the library folder name must be HUSKYLENS.

In this project, HuskyLens is connected to an Arduino mainboard. The Arduino Uno reads the position data of the object from HuskyLens, and the serial monitor prints the data, so that you can read the position of the object in real time.

Open the serial monitor of Arduino IDE, then you will get the position data of the object, same as the results in UART mode. Please refer to the previous chapter, which will not be repeated here.
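
To make the idea of "position data" concrete, here is a small Python sketch of how one reading could be turned into a line of text. It runs on a host computer and does not talk to HuskyLens; the `ObjectPosition` fields and the output format are assumptions for illustration, and the real library output may look different.

```python
from dataclasses import dataclass


@dataclass
class ObjectPosition:
    """One hypothetical position reading for a recognized object."""
    x_center: int
    y_center: int
    width: int
    height: int
    object_id: int


def format_position(p):
    """Format a reading as a single line, similar to a serial print."""
    return (f"ID={p.object_id} xCenter={p.x_center} yCenter={p.y_center} "
            f"width={p.width} height={p.height}")


print(format_position(ObjectPosition(160, 120, 40, 60, 1)))
```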

Mind+ is a Scratch 3.0-based programming tool, which allows you to build a program by dragging and snapping coding blocks. With tons of tutorials, sample projects and a large community, it is one of the best tools for you to learn programming from absolutely zero!

This chapter demonstrates how to connect HuskyLens to the micro:bit board and have the micro:bit read the face recognition results from HuskyLens. If HuskyLens recognizes you (the learned face), the dot-matrix screen of the micro:bit displays a smiling face; otherwise it displays a crying face.

The following picture is only for reference when wiring. The R and T pins of HuskyLens (their functions are SCL and SDA here) are connected to the SCL (P19) and SDA (P20) pins of the micro:bit respectively. The communication protocol between HuskyLens and the micro:bit is I2C.

Tips: HuskyLens draws a heavy current, up to 320mA or more at 3.3V. The micro:bit board cannot supply enough power on its own, so an external power supply is required. You can connect the external power supply to the external power connector of the micro:bit expansion board or to the USB connector of HuskyLens.

If no COM port is found or you are using the micro:bit for the first time, please click the "Install Serialport Driver" button to install the driver with one click.

When HuskyLens recognizes your face, the dot-matrix screen on the micro:bit board shows a smiling face. If it is not your face, or no face appears, it displays a crying face.
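
The decision described above is a single membership test. The Python sketch below shows only that logic on a host computer; `icon_for` is a hypothetical helper, and reading the IDs from HuskyLens and drawing on the dot-matrix screen are not shown.

```python
def icon_for(detected_ids, learned_id=1):
    """Pick the icon to show: 'smile' if the learned face ID is present."""
    return "smile" if learned_id in detected_ids else "cry"


print(icon_for([1]))   # smile: the learned face (ID1) is in view
print(icon_for([2]))   # cry: a different face
print(icon_for([]))    # cry: no face at all
```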

Microsoft MakeCode is a free, open source platform for creating engaging computer science learning experiences that support a progression path into real-world programming. Click here to view the MakeCode for micro:bit.

This chapter demonstrates how to connect HuskyLens to the micro:bit board and have the micro:bit read the face recognition results from HuskyLens. If HuskyLens recognizes you (the learned face), the dot-matrix screen of the micro:bit displays a smiling face; otherwise it displays a crying face.

The following picture is only for reference when wiring. The R and T pins of HuskyLens (their functions are SCL and SDA here) are connected to the SCL (P19) and SDA (P20) pins of the micro:bit respectively. The communication protocol between HuskyLens and the micro:bit is I2C.

Tips: HuskyLens draws a heavy current, up to 320mA or more at 3.3V. The micro:bit board cannot supply enough power on its own, so an external power supply is required. You can connect the external power supply to the external power connector of the micro:bit expansion board or to the HuskyLens USB connector.

When HuskyLens recognizes your face, the dot-matrix screen on the micro:bit board shows a smiling face. If it is not your face, or no face appears, it displays a crying face.

A. The firmware you downloaded is not correct; that is, the file is incomplete or corrupted (e.g. the correct firmware is up to 9MB, but the file you downloaded is only 66KB). This may be caused by an unstable network or other issues. Please download it from GitHub again.
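
A quick size check before uploading can catch this kind of truncated download. The Python sketch below is a rough heuristic only: the 1 MB cutoff is an assumed value chosen to separate the 66KB and 9MB examples above, not an official figure, and the function names are hypothetical.

```python
import os

# Assumed cutoff for illustration; it is not an official minimum size.
MIN_EXPECTED_BYTES = 1_000_000


def looks_incomplete(size_bytes, min_expected_bytes=MIN_EXPECTED_BYTES):
    """Return True if a firmware file of this size is suspiciously small."""
    return size_bytes < min_expected_bytes


def firmware_file_looks_incomplete(path):
    """Apply the size check to a file on disk."""
    return looks_incomplete(os.path.getsize(path))


print(looks_incomplete(66 * 1024))         # True, like the 66KB example
print(looks_incomplete(9 * 1024 * 1024))   # False, like the 9MB example
```

A file that passes this check can still be corrupted, so downloading again remains the fix if the upload fails.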

Ms.Josey
