linux tft lcd driver hdmi free sample

In these videos, the SPI (GPIO) bus is identified as the bottleneck. SPI-based displays update over a serial data bus, transmitting one bit per clock cycle. A 320x240x16bpp display hence requires an SPI bus clock rate of 73.728MHz to achieve a full 60fps refresh rate. Few SPI LCD controllers can communicate this fast in practice; most are constrained to e.g. a 16-50MHz SPI bus clock speed, which caps the maximum update rate significantly. Can we do anything about this?
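
The arithmetic behind that 73.728MHz figure can be checked with a short sketch. The function names below are illustrative only and are not part of fbcp-ili9341. The calculation ignores command and addressing overhead on the bus, so real-world rates would be somewhat lower.

```python
def required_spi_clock_hz(width, height, bits_per_pixel, fps):
    """SPI moves one bit per clock, so the clock rate equals bits per second."""
    return width * height * bits_per_pixel * fps


def max_full_frame_fps(width, height, bits_per_pixel, spi_clock_hz):
    """Upper bound on full-frame refreshes per second at a given bus clock."""
    return spi_clock_hz / (width * height * bits_per_pixel)


# Clock needed for a full 60fps refresh of a 320x240 16bpp display.
print(required_spi_clock_hz(320, 240, 16, 60) / 1e6, "MHz")

# Full-frame rates reachable at some typical controller clock limits.
for clock_mhz in (16, 32, 50):
    fps = max_full_frame_fps(320, 240, 16, clock_mhz * 1e6)
    print(f"{clock_mhz} MHz -> {fps:.1f} fps")
```

At a 16-50MHz bus clock, a full-frame update therefore tops out at roughly 13-41fps, which is why sending only changed pixels matters.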

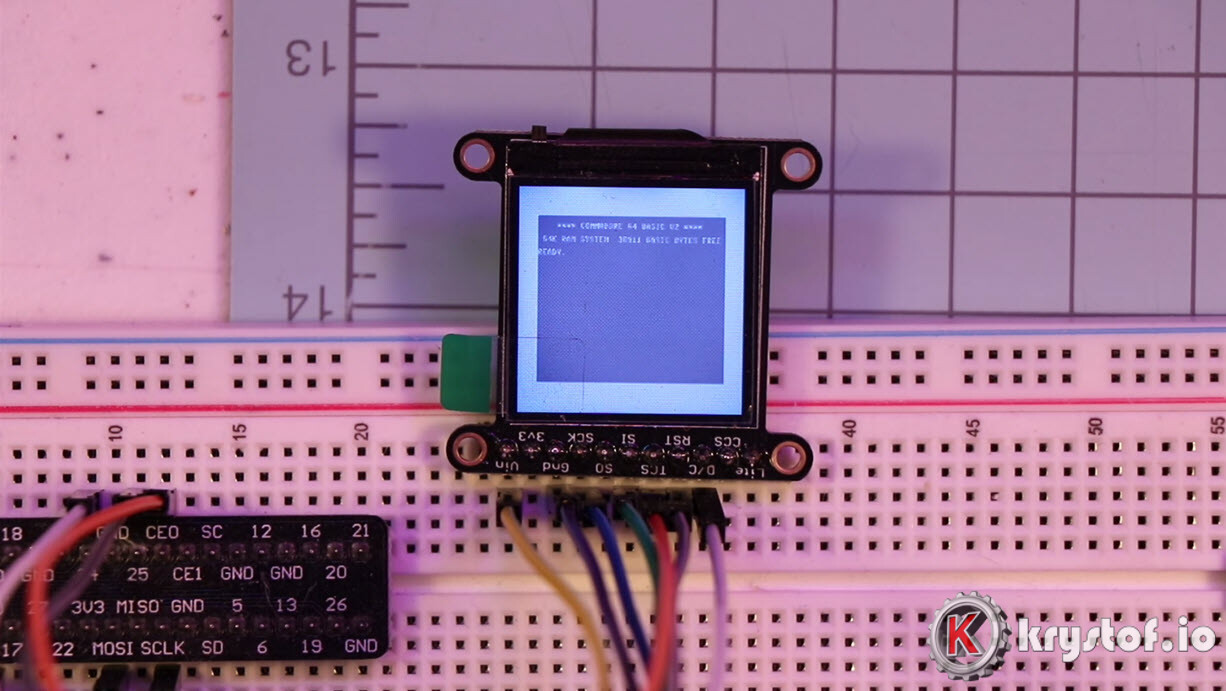

The fbcp-ili9341 project started out as a display driver for the Adafruit 2.8" 320x240 TFT w/ Touch screen for Raspberry Pi display that utilizes the ILI9341 controller. On that display, fbcp-ili9341 can achieve a 60fps update rate, depending on the content that is being displayed. Check out these videos for examples of the driver in action:

Good old interlacing is added into the mix: if the number of pixels that need updating is detected to be more than the SPI bus can handle, the driver adaptively resorts to an interlaced update, uploading even and odd scanlines on alternating frames. Once the number of pending pixels returns to a manageable amount, progressive updating resumes. This effectively doubles the maximum display update rate. (If you do not like the visual appearance that interlacing causes, it is easy to disable by uncommenting the line #define NO_INTERLACING in config.h.)
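
As a rough illustration of the idea, the decision could look like the sketch below. This is a hypothetical simplification: the function names, the per-frame bit budget and the threshold are assumptions made for this example, and the driver's actual heuristics are not reproduced here.

```python
def choose_update_mode(pending_pixels, bits_per_pixel, spi_clock_hz, target_fps):
    """Pick interlaced mode when the pending data exceeds one frame's bus budget."""
    budget_bits = spi_clock_hz / target_fps  # bits the bus can move per frame
    if pending_pixels * bits_per_pixel > budget_bits:
        return "interlaced"
    return "progressive"


def scanlines_to_send(height, frame_index, mode):
    """Progressive sends every line; interlaced alternates even and odd lines."""
    if mode == "progressive":
        return list(range(height))
    return list(range(frame_index % 2, height, 2))


# A full 320x240 redraw at an assumed 40MHz bus does not fit in a 60fps budget.
print(choose_update_mode(320 * 240, 16, 40e6, 60))
# A small dirty region does fit.
print(choose_update_mode(10_000, 16, 40e6, 60))
```

Each interlaced frame carries half the scanlines, which is where the doubling of the maximum update rate comes from.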

Not all boards are actively tested, so YMMV, especially on older boards. (Bug fixes welcome; use https://elinux.org/RPi_HardwareHistory to identify which board you are running on.)

This driver does not utilize the notro/fbtft framebuffer driver, so that needs to be disabled if active. That is, if your /boot/config.txt file has lines that look something like dtoverlay=pitft28r, ..., dtoverlay=waveshare32b, ... or dtoverlay=flexfb, ..., those should be removed.

This program does not utilize the default SPI driver either, so a line such as dtparam=spi=on in /boot/config.txt should also be removed so that it will not cause conflicts.

If you have been running the existing fbcp driver, make sure to stop it first, e.g. via sudo pkill fbcp (while in an SSH prompt or connected to an HDMI display); the two cannot run at the same time. If /etc/rc.local or /etc/init.d contains an entry to start fbcp at boot, that directive should be deleted.

-DPIRATE_AUDIO_ST7789_HAT=ON: If specified, targets a Pirate Audio 240x240, 1.3inch IPS LCD display HAT for Raspberry Pi with ST7789 display controller

-DKEDEI_V63_MPI3501=ON: If specified, targets a KeDei 3.5 inch SPI TFTLCD 480*320 16bit/18bit version 6.3 2018/4/9 display with MPI3501 display controller.

-DGPIO_TFT_DATA_CONTROL=number: Specifies/overrides which GPIO pin to use for the Data/Control (DC) line on the 4-wire SPI communication. This pin number is specified in BCM pin numbers. If you have a 3-wire SPI display that does not have a Data/Control line, set this value to -1, i.e. -DGPIO_TFT_DATA_CONTROL=-1 to tell fbcp-ili9341 to target 3-wire ("9-bit") SPI communication.

-DGPIO_TFT_RESET_PIN=number: Specifies/overrides which GPIO pin to use for the display Reset line. This pin number is specified in BCM pin numbers. If omitted, it is assumed that the display does not have a Reset pin, and is always on.

-DGPIO_TFT_BACKLIGHT=number: Specifies/overrides which GPIO pin to use for the display backlight line. This pin number is specified in BCM pin numbers. If omitted, it is assumed that the display does not have a GPIO-controlled backlight pin, and is always on. If setting this, also see the #define BACKLIGHT_CONTROL option in config.h.

Here is a full example of what to type to build and run, if you have the Adafruit 2.8" 320x240 TFT w/ Touch screen for Raspberry Pi with ILI9341 controller:

If the size of the default HDMI output /dev/fb0 framebuffer differs from the resolution of the display, the source video size will by default be rescaled to fit to the size of the SPI display. fbcp-ili9341 will manage setting up this rescaling if needed, and it will be done by the GPU, so performance should not be impacted too much. However if the resolutions do not match, small text will probably appear illegible. The resizing will be done in aspect ratio preserving manner, so if the aspect ratios do not match, either horizontal or vertical black borders will appear on the display. If you do not use the HDMI output at all, it is probably best to configure the HDMI output to match the SPI display size so that rescaling will not be needed. This can be done by setting the following lines in /boot/config.txt:
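
The aspect-ratio-preserving fit described above can be sketched as follows. This is an illustrative calculation only; the function name is hypothetical, and in the driver the actual scaling is done by the GPU.

```python
def fit_preserving_aspect(src_w, src_h, dst_w, dst_h):
    """Return (out_w, out_h, border_x, border_y) for a letterboxed fit.

    Integer cross-multiplication avoids floating point rounding surprises.
    """
    if dst_w * src_h <= dst_h * src_w:
        # Source is relatively wider: width fills the display, bars top/bottom.
        out_w = dst_w
        out_h = src_h * dst_w // src_w
    else:
        # Source is relatively taller: height fills the display, bars left/right.
        out_h = dst_h
        out_w = src_w * dst_h // src_h
    return out_w, out_h, (dst_w - out_w) // 2, (dst_h - out_h) // 2


# 1080p HDMI framebuffer shown on a 320x240 SPI display.
print(fit_preserving_aspect(1920, 1080, 320, 240))
# A matching 4:3 mode fills the display with no borders.
print(fit_preserving_aspect(640, 480, 320, 240))
```

With a 16:9 source on a 4:3 panel, a quarter of the panel's rows end up as black borders, which is one more reason to match the HDMI mode to the SPI display.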

These lines hint native applications about the default display mode, and let them render at the native resolution of the TFT display. This can however prevent the use of the HDMI connector if the HDMI-connected display does not support such a small resolution. As a compromise, if both HDMI and SPI displays are to be used at the same time, some other compatible resolution such as 640x480 can be used. See the Raspberry Pi HDMI documentation for the available options.

On the other hand, it is desirable to control how much CPU time fbcp-ili9341 is allowed to use. The default build settings are tuned to maximize the display refresh rate at the expense of power consumption on Pi 3B. On Pi Zero, the opposite is done, i.e. by default the driver optimizes for battery saving instead of maximal display update speed. The following options can be controlled to balance between these two:

The main option for trading CPU usage against performance is #define ALL_TASKS_SHOULD_DMA in config.h. Enabling this option will greatly reduce CPU usage. If this option is disabled, SPI bus utilization is maximized but CPU usage can be up to 80%-120%. When this option is enabled, CPU usage is generally up to around 15%-30%. Maximal CPU usage occurs when watching a video or playing a fast-moving game. If nothing is changing on the screen, CPU consumption of the driver should go down to about 0-5%. By default #define ALL_TASKS_SHOULD_DMA is enabled for Pi Zero, but disabled for Pi 3B.

The CMake option -DUSE_DMA_TRANSFERS=ON should always be enabled for good low CPU usage. If DMA transfers are disabled, the driver will run in Polled SPI mode, which generally utilizes a full dedicated single core of CPU time. If DMA transfers are causing issues, try adjusting the DMA send and receive channels to use for SPI communication with -DDMA_TX_CHANNEL=

The option #define RUN_WITH_REALTIME_THREAD_PRIORITY can be enabled to make the driver run at realtime process priority. This can lock up the system, but it is still made available for advanced experimentation.

This does not mean that overall input-to-display latency in games would be so immediate. Briefly testing a NES emulated game in Retropie suggests a total latency of about 60-80 msecs. This latency is caused by the NES game emulator overhead and extra latency added by Linux, DispmanX and GPU rendering, and GPU framebuffer snapshotting. (If you ran fbcp-ili9341 as a static library bypassing DispmanX and the GPU stack, directly linking your GPIO input and application logic into fbcp-ili9341, you would be able to get down to just a few msecs of overall latency, as shown in the above GPIO input video.)

Interestingly, fbcp-ili9341 is about 33msecs faster than a cheap 3.5" KeDei HDMI display. I do not know if this is a result of the KeDei HDMI display specifically introducing extra latency, or if all HDMI displays connected to the Pi would have similar latency overhead. An interesting question is also how SPI would compare with DPI connected displays on the Pi.

To get tearing-free updates, you should use a DPI display, or a good quality HDMI display. Beware that cheap small 3.5" HDMI displays such as KeDei do also tear - that is, even if they are controlled via HDMI, they don't actually seem to implement VSYNC timed internal operation.

Having no vsync is not all bad though, since with the lack of vsync, SPI displays have the opportunity to obtain smoother animation on content that is not updating at 60Hz. It is possible that content on the SPI display will stutter even less than what DPI or HDMI displays on the Pi can currently provide (although I have not been able to test this in detail, except for the KeDei case above).

The main option that affects smoothness of display updates is the #define USE_GPU_VSYNC line in config.h. If this is enabled, then the internal Pi GPU HDMI vsync clock is used to drive frames onto the display. The Pi GPU clock runs at a fixed rate that is independent of the content. This rate can be discovered by running tvservice -s on the Pi console, and is usually 59Hz or 60Hz. If your application renders at this rate, animation will look smooth, but if not, there will be stuttering. For example playing a PAL NES game that updates at 50Hz with HDMI clock set at 60Hz will cause bad microstuttering in video output if #define USE_GPU_VSYNC is enabled.

If USE_GPU_VSYNC is disabled, then a busy spinning GPU frame snapshotting thread is used to drive the updates. This will produce smoother animation in content that does not maintain a fixed 60Hz rate. Especially in OpenTyrian, a game that renders at a fixed 36fps and has slowly scrolling scenery, the stuttering caused by USE_GPU_VSYNC is particularly visible. Running on Pi 3B without USE_GPU_VSYNC enabled produces visually smoother looking scrolling on an Adafruit 2.8" ILI9341 PiTFT set to update at 119Hz, compared to enabling USE_GPU_VSYNC on the same setup. Without USE_GPU_VSYNC, the dedicated frame polling loop thread "finds" the 36Hz update rate of the game, and then pushes pixels to the display at this exact rate. This works nicely since SPI displays disregard vsync - the result is that frames are pushed out to the SPI display immediately as they become available, instead of pulling them at a fixed 60Hz rate like HDMI does.
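
To see why a fixed 60Hz pull rate stutters on 36fps or 50fps content, the sketch below counts how many vsync periods each content frame stays on screen. It is a simplified model, not driver code: it assumes each frame is picked up at the first vsync tick at or after the moment it is produced, and the function name is illustrative.

```python
def vsync_display_durations(content_fps, vsync_hz, frames):
    """For each content frame, count the vsync periods it remains visible."""
    # Frame i is ready at time i / content_fps and is shown at tick
    # ceil(i * vsync_hz / content_fps), computed here with integer math.
    ticks = [
        (i * vsync_hz + content_fps - 1) // content_fps
        for i in range(frames + 1)
    ]
    return [ticks[i + 1] - ticks[i] for i in range(frames)]


print(vsync_display_durations(36, 60, 6))  # OpenTyrian-style 36fps content
print(vsync_display_durations(50, 60, 5))  # PAL 50Hz content
print(vsync_display_durations(60, 60, 3))  # content matching the vsync rate
```

In this model, 36fps content is held for a repeating 2, 2, 1 pattern of vsync periods instead of a constant duration, which shows up as uneven scrolling. Pushing frames out as they arrive avoids that quantization.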

The codebase captures screen framebuffers by snapshotting via the VideoCore vc_dispmanx_snapshot() API, and the obtained pixels are then routed on to the SPI-based display. This kind of polling is performed, since there does not exist an event-based mechanism to get new frames from the GPU as they are produced. The result is inefficient and can easily cause stuttering, since different applications produce frames at different paces. Ideally the code would ask the VideoCore API to receive finished frames in callback notifications immediately after they are rendered, but this kind of functionality does not exist in the current GPU driver stack. In the absence of such event delivery mechanism, the code has to resort to polling snapshots of the display framebuffer using carefully timed heuristics to balance between keeping latency and stuttering low, while not causing excessive power consumption. These heuristics keep continuously guessing the update rate of the animation on screen, and they have been tuned to ensure that CPU usage goes down to 0% when there is no detected activity on screen, but it is certainly not perfect. This GPU limitation is discussed at raspberrypi/userland#440. If you'd like to see fbcp-ili9341 operation reduce latency, stuttering and power consumption, please throw a (kind!) comment or a thumbs up emoji in that bug thread to share that you care about this, and perhaps Raspberry Pi engineers might pick the improvement up on the development roadmap. If this issue is resolved, all of the #define USE_GPU_VSYNC, #define SAVE_BATTERY_BY_PREDICTING_FRAME_ARRIVAL_TIMES and #define SELF_SYNCHRONIZE_TO_GPU_VSYNC_PRODUCED_NEW_FRAMES hacks from the previous section could be deleted from the driver, hopefully leading to a best of all worlds scenario without drawbacks.

At the moment fbcp-ili9341 is only likely to work on 32-bit OSes, on Raspbian/Ubuntu/Debian family of distributions, where Broadcom and DispmanX libraries are available. 64-bit operating systems do not currently work (see issue #43). It should be possible to port the driver to 64-bit and other OSes, though the amount of work has not been explored.

The fbcp part in the name means framebuffer copy; specifically for the ILI9341 controller. fbcp-ili9341 is not actually a framebuffer copying driver, it does not create a secondary framebuffer that it would copy bytes across to from the primary framebuffer. It is also no longer a driver only for the ILI9341 controller. A more appropriate name might be userland-raspi-spi-display-driver or something like that, but the original name stuck.

Enable the option #define DISPLAY_ROTATE_180_DEGREES in config.h. This should rotate the SPI display to show up the other way around, while keeping the HDMI connected display orientation unchanged. Another option is to utilize a /boot/config.txt option display_rotate=2, which rotates both the SPI output and the HDMI output.

Yes, both work fine. For the Linux command line terminal, the /dev/tty1 console should be set to output to Linux framebuffer 0 (/dev/fb0). This is the default mode of operation and there do not exist other framebuffers in a default distribution of Raspbian, but if you have manually changed things with the con2fbmap command in your installation, you may have inadvertently altered this configuration. Run con2fbmap 1 to see which framebuffer the /dev/tty1 console is outputting to; it should print console 1 is mapped to framebuffer 0. Type con2fbmap 1 0 to reset console 1 back to outputting to framebuffer 0.

If fbcp-ili9341 does not support your display controller, you will have to write support for it. fbcp-ili9341 does not have a "generic SPI TFT driver routine" that might work across multiple devices, but needs specific code for each. If you have the spec sheet available, you can ask for advice, but please do not request to add support to a display controller "blind", that is not possible.

Perhaps. This is a more recent experimental feature that may not be as stable, and there are some limitations, but 3-wire ("9-bit") SPI display support is now available. If you have a 3-wire SPI display, i.e. one that does not have a Data/Control (DC) GPIO pin to connect, configure it via CMake with directive -DGPIO_TFT_DATA_CONTROL=-1 to tell fbcp-ili9341 that it should be driving the display with 3-wire protocol.

No. Those are completely different technologies altogether. It should be possible to port the driver algorithm to work on I2C however, if someone is interested.

At the moment one cannot utilize the XPT2046/ADS7846 touch controllers while running fbcp-ili9341, so touch is mutually incompatible with this driver. In order for fbcp-ili9341 to function, you will need to remove all dtoverlays in /boot/config.txt related to touch.

Yes, fbcp-ili9341 shows the output of the HDMI display on the SPI screen, and both can be attached at the same time. A HDMI display does not have to be connected however, although fbcp-ili9341 operation will still be affected by whatever HDMI display mode is configured. Check out tvservice -s on the command line to check what the current DispmanX HDMI output mode is.

At the moment fbcp-ili9341 has been developed to only display the contents of the main DispmanX GPU framebuffer over to the SPI display. That is, the SPI display will show the same picture as the HDMI output does. There is no technical restriction that requires this though, so if you know C/C++ well, it should be a manageable project to turn fbcp-ili9341 to operate as an offscreen display library to show a completely separate (non-GPU-accelerated) image than what the main HDMI display outputs. For example you could have two different outputs, e.g. a HUD overlay, a dashboard for network statistics, weather, temps, etc. showing on the SPI while having the main Raspberry Pi desktop on the HDMI.

This suggests that the power line or the backlight line might not be properly connected. Or if the backlight connects to a GPIO pin on the Pi (and not a voltage pin), then it may be that the pin is not in correct state for the backlight to turn on. Most of the LCD TFT displays I have immediately light up their backlight when they receive power. The Tontec one has a backlight GPIO pin that boots up high but must be pulled low to activate the backlight. OLED displays on the other hand seem to stay all black even after they do get power, while waiting for their initialization to be performed, so for OLEDs it may be normal for nothing to show up on the screen immediately after boot.

If the backlight connects to a GPIO pin, you may need to define -DGPIO_TFT_BACKLIGHT=

This suggests the same as above: increase the SPI bus divisor, or troubleshoot by disabling DMA. If DMA is found to be the culprit, try changing the DMA channels. Double check that /boot/config.txt does not have any dtoverlays for other SPI display drivers or touch screen controllers, and that it does NOT have a dtparam=spi=on line in it - fbcp-ili9341 does not use the Linux kernel SPI driver.

Double check the Data/Command (D/C) GPIO pin physically, and in CMake command line. Whenever fbcp-ili9341 refers to pin numbers, they are always specified in BCM pin numbers. Try setting a higher -DSPI_BUS_CLOCK_DIVISOR= value to CMake. Make sure no other fbcp programs or SPI drivers or dtoverlays are enabled.

fbcp-ili9341 needs a few megabytes of GPU memory to function if DMA transfers are enabled. The gpu_mem boot config option dictates how much of the Pi's memory area is allocated to the GPU. By default this is 64MB, which has been observed to not leave enough memory for fbcp-ili9341 if HDMI is run at 1080p. If this error happens, try increasing GPU memory to e.g. 128MB by adding a line gpu_mem=128 in /boot/config.txt.

All the ILI9341 displays work nice and super fast at ~70-80MHz. My WaveShare 3.5" 320x480 ILI9486 display runs really slow compared to its pixel resolution, ~32MHz only. See fbcp-ili9341 ported to ILI9486 WaveShare 3.5" (B) SpotPear 320x480 SPI display for a video of this display in action. Adafruit's 320x480 3.5" HX8357D PiTFT is ~64% faster in comparison.

The Tontec MZ61581 controller based 320x480 3.5" display on the other hand can be driven insanely fast at up to 140MHz! These seem to be quite hard to come by though and they are expensive. Tontec seems to have gone out of business and for example the domain itontec.com from which the supplied instructions sheet asks to download original drivers from is no longer registered. I was able to find one from eBay for testing.

Port fbcp-ili9341 to work as a static code library that one can link to another application for CPU-based drawing directly to display, bypassing inefficiencies and latency of the general purpose Linux DispmanX/graphics stack.

This driver is licensed under the MIT License. See LICENSE.txt. In nonlegal terms, it's yours for both free and commercial projects, DIY packages, kickstarters, Etsys and Ebays, and you don't owe back a dime. Feel free to apply and derive as you wish.

After execution, the driver will be installed. The system will automatically restart, and the display screen will rotate 90 degrees to display and touch normally.

( " XXX-show " can be changed to the corresponding driver, and " 90 " can be changed to 0, 90, 180 and 270, respectively representing rotation angles of 0 degrees, 90 degrees, 180 degrees, 270 degrees)

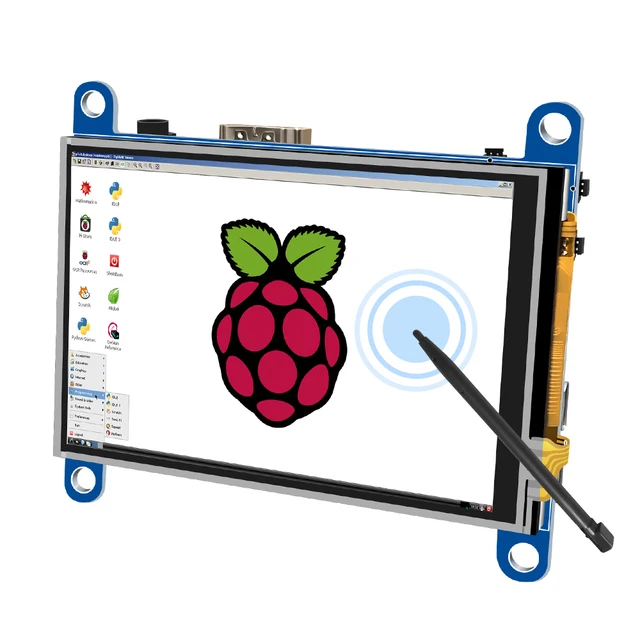

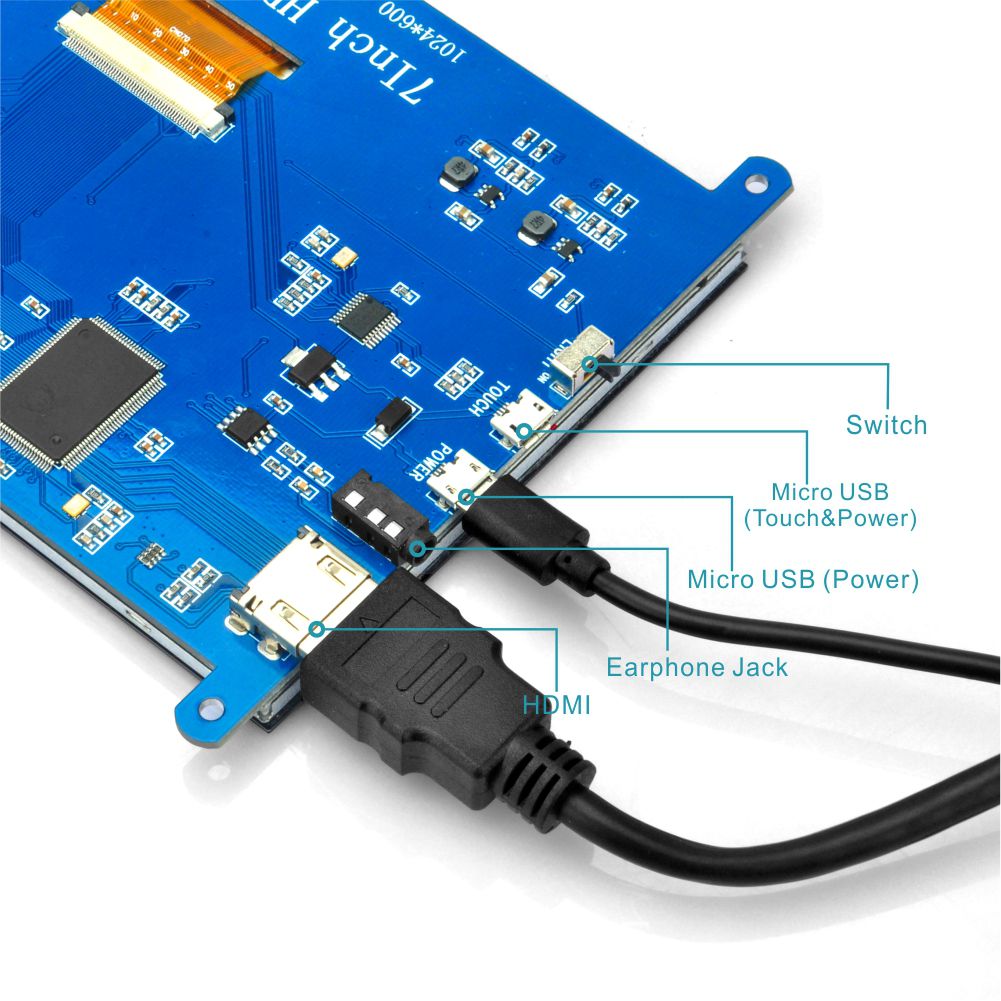

Insert the TF card into the Raspberry Pi and connect the Raspberry Pi to the LCD with an HDMI cable. Connect a USB cable to one of the four USB ports of the Raspberry Pi, and connect the other end to the USB port of the LCD. Then supply power to the Raspberry Pi. If both display and touch work, the driver was installed successfully (please use a full 2A power supply).

Compatible and Direct-connect with any revision of Raspberry Pi (If you are using a Raspberry Pi Zero / Zero 2 W, an additional HDMI cable is required).

Power: turns the backlight on/off. If you will not use the LCD for a long time, you can turn off the backlight with this button to reduce power consumption.

Since the first-generation Raspberry Pi was released, Waveshare has been designing, developing, and producing various fantastic touch LCDs for the Pi. Unfortunately, there are quite a few pirated/knock-off products on the market. They're usually poor copies of our early hardware revisions and come with no support service.

Friendly Reminder: Past deliveries included a CD with an outdated driver. Please ignore the CD; we won't include it in future deliveries. Download the latest driver from this page.

This 5 inch TFT Display with Touch Screen is a mini panel-mountable HDMI monitor. It is small and simple, but you can use this display with any computer that has HDMI output, and the shape makes it easy to attach to an electronic product. Although this common 800x480 HDMI display is made for the Raspberry Pi, it can be used elsewhere as well.

Our 5 inch screen supports Raspbian, Ubuntu Mate, Kali Linux and Retropie systems for Raspberry Pi. If you use it on a PC or other devices, the touch function is not available.

Next, we will teach you how to install the driver for your Raspberry Pi OS. If there is no system on your SD card, please refer to the official Raspberry Pi tutorial.

After I published my $1 MCU write-up, several readers suggested I look at application processors — the MMU-endowed chips necessary to run real operating systems like Linux. Massive shifts over the last few years have seen internet-connected devices become more featureful (and hopefully, more secure), and I’m finding myself putting Linux into more and more places.

This article is targeted at embedded engineers who are familiar with microcontrollers but not with microprocessors or Linux, so I wanted to put together something with a quick primer on why you’d want to run embedded Linux, a broad overview of what’s involved in designing around application processors, and then a dive into some specific parts you should check out — and others you should avoid — for entry-level embedded Linux systems.

If my mantra for the microcontroller article was that you should pick the right part for the job and not be afraid to learn new software ecosystems, my argument for this post is even simpler: once you’re booted into Linux on basically any of these parts, they become identical development environments.

That makes chips running embedded Linux almost a commodity product: as long as your processor checks off the right boxes, your application code won’t know if it’s running on an ST or a Microchip part — even if one of those is a brand-new dual-core Cortex-A7 and the other is an old ARM9. Your I2C drivers, your GPIO calls — even your V4L-based image processing code — will all work seamlessly.

As a result, the boards I built for this review are akin to the notes from your high school history class or a recording you made of yourself practicing a piece of music to study later. So while I’ll post pictures of the boards and screenshots of layouts to illustrate specific points, these aren’t intended to serve as reference designs or anything; the whole point of the review is to get you to a spot where you’ll want to go off and design your own little Linux boards. Teach a person to fish, you know?

Coming from microcontrollers, the first thing you’ll notice is that Linux doesn’t usually run on Cortex-M, 8051, AVR, or other popular microcontroller architectures. Instead, we use application processors — popular ones are the Arm Cortex-A, ARM926EJ-S, and several MIPS iterations.

The biggest difference between these application processors and a microcontroller is quite simple: microprocessors have a memory management unit (MMU), and microcontrollers don’t. Yes, you can run Linux without an MMU, but you usually shouldn’t: Cortex-M7 parts that can barely hit 500 MHz routinely go for double or quadruple the price of faster Cortex-A7s. They’re power-hungry: microcontrollers are built on larger processes than application processors to reduce their leakage current. And without an MMU and generally-low clock speeds, they’re downright slow.

When your microcontroller project outgrows its super loop and the random ISRs you’ve sprinkled throughout your code with care, there are many bare-metal tasking kernels to turn to — FreeRTOS, ThreadX (now Azure RTOS), RT-Thread, μC/OS, etc. By an academic definition, these are operating systems. However, compared to Linux, it’s more useful to think of these as a framework you use to write your bare-metal application inside. They provide the core components of an operating system: threads (and obviously a scheduler), semaphores, message-passing, and events. Some of these also have networking, filesystems, and other libraries.

Comparing bare-metal RTOSs to Linux simply comes down to the fundamental difference between these and Linux: memory management and protection. This one technical difference makes Linux running on an application processor behave quite differently from your microcontroller running an RTOS.((Before the RTOS snobs attack with pitchforks, yes, there are large-scale, well-tested RTOSes that are usually run on application processors with memory management units. Look at RTEMS as an example. They don’t have some of the limitations discussed below, and have many advantages over Linux for safety-critical real-time applications.))

Because Linux-capable application processors have a memory management unit, *alloc() calls execute swiftly and reliably. Physical memory is only reserved (faulted in) when you actually access a memory location. Memory fragmentation is much less an issue since Linux frees and reorganizes pages behind the scenes. Plus, switching to Linux provides easier-to-use diagnostic tools (like valgrind) to catch bugs in your application code in the first place. And finally, because applications run in virtual memory, if your app does have memory bugs in it, Linux will kill it — leaving the rest of your system running. ((As a last-ditch kludge, it’s not uncommon to call your app in a superloop shell script to automatically restart it if it crashes without having to restart the entire system.))
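
The restart-on-crash kludge mentioned in the footnote is usually a shell loop. The sketch below shows the same idea in Python instead, purely as an illustration: the function name, the restart limit and the stand-in crashing command are all assumptions made for this example.

```python
import subprocess
import sys


def run_with_restarts(cmd, max_restarts):
    """Re-launch cmd until it exits cleanly or the restart budget is spent."""
    exit_codes = []
    for _ in range(max_restarts + 1):
        result = subprocess.run(cmd)  # blocks until the child process exits
        exit_codes.append(result.returncode)
        if result.returncode == 0:
            break  # clean exit, no restart needed
    return exit_codes


# Stand-in for an application that always crashes with exit status 1.
crashing_app = [sys.executable, "-c", "import sys; sys.exit(1)"]
print(run_with_restarts(crashing_app, max_restarts=2))
```

Only the crashed process is relaunched; the kernel and every other process keep running, which is the point the paragraph makes about virtual memory isolation.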

Running something like lwIP under FreeRTOS on a bare-metal microcontroller is acceptable for a lot of simple applications, but application-level network services like HTTP can be a burden to implement reliably. Stuff that seems simple to a desktop programmer, like a WebSockets server that can accept multiple simultaneous connections, can be tricky to implement in bare-metal network stacks. Because C doesn’t have good programming constructs for asynchronous calls or exceptions, code tends to contain either a lot of weird state machines or tons of nested branches, and the problems that occur are horrible to debug. In Linux, you get a first-class network stack, plus tons of rock-solid userspace libraries that sit on top of that stack and provide application-level network connectivity. Plus, you can use a variety of high-level programming languages that make it easier to handle the asynchronous nature of networking.

Somewhat related is the rest of the standards-based communication / interface frameworks built into the kernel. I2S, parallel camera interfaces, RGB LCDs, SDIO, and basically all those other scary high-bandwidth interfaces seem to come together much faster when you’re in Linux. But the big one is USB host capabilities. On Linux, USB devices just work. If your touchscreen drivers are glitching out and you have a client demo to show off in a half-hour, just plug in a USB mouse until you can fix it (I’ve been there before). Product requirements change and now you need audio? Grab a $20 USB dongle until you can respin the board with a proper audio codec. On many boards without Ethernet, I just use a USB-to-Ethernet adapter to allow remote file transfer and GDB debugging. Don’t forget that, at the end of the day, an embedded Linux system is shockingly similar to your computer.

While secure boot isn’t available on every application processor reviewed here, it’s much more common. There are still vulnerabilities that get disclosed from time to time, but my non-expert opinion is that the implementations seem much more robust than on Cortex-M parts: boot configuration data and keys are stored in one-time-programmable memory that is not accessible from non-privileged code. Network security is also more mature and easier to implement using the Linux network stack and cryptography support, and OP-TEE provides a ready-to-roll secure environment for many parts reviewed here.

Imagine that you needed to persist some configuration data across reboot cycles. Sure, you can use structs and low-level flash programming code, but if this data needs to be appended to or changed in an arbitrary fashion, your code would start to get ridiculous. That’s why filesystems (and databases) exist. Yes, there are embedded libraries for filesystems, but these are way clunkier and more fragile than the capabilities you can get in Linux with nothing other than ticking a box in menuconfig. And databases? I’m not sure I’ve ever seen an honest attempt to run one on a microcontroller, while there’s a limitless number available on Linux.

In a bare-metal environment, you are limited to a single application image. As you build out the application, you’ll notice things get kind of clunky if your system has to do a few totally different things simultaneously. If you’re developing for Linux, you can break this functionality into separate processes, where you can develop, debug, and deploy separately as separate binary images.

Bare-metal MCU development is primarily done in C and C++. Yes, there are interesting projects to run Python, Javascript, C#/.NET, and other languages on bare metal, but they’re usually focused on implementing the core language only; they don’t provide a runtime that is the same as a PC. And even their language implementation is often incompatible. That means your code (and the libraries you use) have to be written specifically for these micro-implementations. As a result, just because you can run MicroPython on an ESP32 doesn’t mean you can drop Flask on it and build up a web application server. By switching to embedded Linux, you can use the same programming languages and software libraries you’d use on your PC.

In Linux, there is a hard separation between userspace calls and the underlying hardware driver code. One key advantage of this is how easy it is to move from one hardware platform to another; it’s not uncommon to only have to change a couple of lines of code to specify the new device names when porting your code.

Yes, you can poke GPIO pins, perform I2C transactions, and fire off SPI messages from userspace in Linux, and there are some good reasons to use these tools during diagnosing and debugging. Plus, if you’re implementing a custom I2C peripheral device on a microcontroller, and there’s very little configuration to be done, it may seem silly to write a kernel driver whose only job is to expose a character device that basically passes on whatever data directly to the I2C device you’ve built.

But if you’re interfacing with off-the-shelf displays, accelerometers, IMUs, light sensors, pressure sensors, temperature sensors, ADCs, DACs, and basically anything else you’d toss on an I2C or SPI bus, Linux already has built-in support for this hardware that you can flip on when building your kernel and configure in your DTS file.

Sleep-mode power consumption. First, the good news: active mode power consumption of application processors is quite good when compared to microcontrollers. These parts tend to be built on smaller process nodes, so you get more megahertz for your ampere than the larger processes used for Cortex-M devices. Unfortunately, embedded Linux devices have a battery life that’s measured in hours or days, not months or years.

Boot time. Embedded Linux systems can take several seconds to boot up, which is orders of magnitude longer than a microcontroller’s start-up time. Alright, to be fair, this is a bit of an apples-to-oranges comparison: if you were to start initializing tons of external peripherals, mount a filesystem, and initialize a large application in an RTOS on a microcontroller, it could take several seconds to boot up as well. While boot time is a culmination of tons of different components that can all be tweaked and tuned, the fundamental limit is caused by application processors’ inability to execute code from external flash memory; they must copy it into RAM first ((unless you’re running an XIP kernel)).

Responsiveness. By default, Linux’s scheduler and resource system are full of unbounded latencies that under weird and improbable scenarios may take a long time to resolve (or may actually never resolve). Have you ever seen your mouse lock up for 3 seconds randomly? There you go. If you’re building a ventilator with Linux, think carefully about that. To combat this, there’s been a PREEMPT_RT patch for some time that turns Linux into a real-time operating system with a scheduler that can basically preempt anything to make sure a hard-real-time task gets a chance to run.

Also, when many people think they need a hard-real-time kernel, they really just want their code to be low-jitter. Coming from Microcontrollerland, it feels like a 1000 MHz processor should be able to bit-bang something like a 50 kHz square wave consistently, but it can’t. The Linux scheduler is going to give you something on the order of ±10 µs of jitter for interrupts, not the ±10 ns jitter you’re used to on microcontrollers. This can be remedied too, though: while Linux gobbles up all the normal ARM interrupt vectors, it doesn’t touch FIQ, so you can write custom FIQ handlers that execute completely outside of kernel space.
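To put numbers on that, here’s a back-of-the-envelope sketch in Python. It is only arithmetic on the order-of-magnitude figures quoted above, not a measurement of any particular board, and the function names are made up for illustration.

```python
# Compare edge jitter against the half-period of a bit-banged square wave.
# The jitter figures are the rough ones quoted in the text, not measurements.

def half_period_us(freq_hz):
    """Time between successive edges of a square wave, in microseconds."""
    return 1e6 / (2.0 * freq_hz)

def jitter_fraction(freq_hz, jitter_us):
    """Worst-case edge jitter as a fraction of the time between edges."""
    return jitter_us / half_period_us(freq_hz)

SQUARE_WAVE_HZ = 50_000   # 50 kHz square wave
LINUX_JITTER_US = 10.0    # roughly +/-10 us under the stock Linux scheduler
MCU_JITTER_US = 0.010     # roughly +/-10 ns on a microcontroller

print(half_period_us(SQUARE_WAVE_HZ))                    # 10.0
print(jitter_fraction(SQUARE_WAVE_HZ, LINUX_JITTER_US))  # 1.0
print(jitter_fraction(SQUARE_WAVE_HZ, MCU_JITTER_US))
```

A 50 kHz square wave toggles every 10 µs, so ±10 µs of jitter is as large as the interval between edges: an edge can land anywhere up to where the next one was supposed to be. On the microcontroller, the same ratio works out to about a tenth of a percent.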

Figuring out system requirements for your software frameworks can be rather unintuitive. For example, doing a multi-touch-capable finger-painting app in Qt 5 is actually much less of a resource hog than running a simple backend server for a web app written in a modern stack using a JIT-compiled language. Many developers familiar with traditional Linux server/desktop development assume they’ll just throw a .NET Core web app on their rootfs and call it a day — only to discover that they’ve completely run out of RAM, or their app takes more than five minutes to launch, or they discover that Node.js can’t even be compiled for the ARM9 processor they’ve been designing around.

Slower ARM9 cores are for simple headless gadgets written in C/C++. Yes, you can run basic, animation-free low-resolution touch linuxfb apps with these, but blending and other advanced 2D graphics technology can really bog things down. And yes, you can run very simple Python scripts, but in my testing, even a “Hello, World!” Flask app took 38 seconds from launch to actually spitting out a web page to my browser on a 300 MHz ARM9. Yes, obviously once the Python file was compiled, it was much faster, but you should primarily be serving up static content using lightweight HTTP servers whenever possible. And, no, you can’t even compile Node.js or .NET Core for these architectures. These also tend to boot from small-capacity SPI flash chips, which limits your framework choices.

I know that there are lots of people — especially hobbyists but even professional engineers — who have gotten to this point in the article and are thinking, “I do all my embedded Linux development with Raspberry Pi boards — why do I need to read this?” Yes, Raspberry Pi single-board computers, on the surface, look similar to some of these parts: they run Linux, you can attach displays to them, do networking, and they have USB, GPIO, I2C, and SPI signals available.

And for what it’s worth, the BCM2711 mounted on the Pi 4 is a beast of a processor and would easily best any part in this review on that measure. Dig a bit deeper, though: this processor has video decoding and graphics acceleration, but not even a single ADC input. It has built-in HDMI transmitters that can drive dual 4k displays, but just two PWM channels. This is a processor that was custom-made, from the ground up, to go into smart TVs and set-top boxes — it’s not a general-purpose embedded Linux application processor, so it isn’t generally suited for embedded Linux work.

It might be the perfect processor for your particular project, but it probably isn’t; forcing yourself to use a Pi early in the design process will over-constrain things. Yes, there are always workarounds to the aforementioned shortcomings — like I2C-interfaced PWM chips, SPI-interfaced ADCs, or LCD modules with HDMI receivers — but they involve external hardware that adds power, bulk, and cost. If you’re building a quantity-of-one project and you don’t care about these things, then maybe the Pi is the right choice for the job, but if you’re prototyping a real product that’s going to go into production someday, you’ll want to look at the entire landscape before deciding what’s best.

This article is all about getting an embedded application processor booting Linux — not building an entire embedded system. If you’re considering running Linux in an embedded design, you likely have some combination of Bluetooth, WiFi, Ethernet, TFT touch screen, audio, camera, or low-power RF transceiver work going on.

If you’re coming from the MCU world, you’ll have a lot of catching up to do in these areas, since the interfaces (and even architectural strategies) are quite different. For example, while single-chip WiFi/BT MCUs are common, very few application processors have integrated WiFi/BT, so you’ll typically use external SDIO- or USB-interfaced chipsets. Your SPI-interfaced ILI9341 TFTs will often be replaced with parallel RGB or MIPI models. And instead of burping out tones with your MCU’s 12-bit DAC, you’ll be wiring up I2S audio CODECs to your processor.

Processor vendors vigorously encourage reference design modification and reuse for customer designs. I think most professional engineers are more concerned with getting Rev A hardware that boots up than with playing around with optimization, so many custom Linux boards I see are spitting images of off-the-shelf EVKs.

Most MPUs can boot from SPI NOR flash, SPI NAND flash, parallel flash, or MMC (for use with eMMC or MicroSD cards). Because of its organization, NOR flash memory has better read speeds but worse write speeds than NAND flash. SPI NOR flash memory is widely used for tiny systems with up to 16 MB of storage, but above that, SPI NAND and parallel-interfaced NOR and NAND flash become cheaper. Parallel-interfaced NOR flash used to be the ubiquitous boot media for embedded Linux devices, but I don’t see it deployed as much anymore, even though it can sometimes be found at half the price of SPI flash. My only explanation for its unpopularity is that no one likes wasting lots of I/O pins on parallel memory.

Unlike MCU-based designs, on an embedded Linux system, you absolutely, positively, must have a console UART available. Linux’s entire tracing architecture is built around logging messages to a console, as is the U-Boot bootloader.

That doesn’t mean you shouldn’t also have JTAG/SWD access, especially in the early stage of development when you’re bringing up your bootloader (otherwise you’ll be stuck with printf() calls). Having said that, if you actually have to break out your J-Link on your embedded Linux board, it probably means you’re having a really bad day. While you can attach a debugger to an MPU, getting everything set up correctly is extremely clunky when compared to debugging an MCU. Prepare to relocate symbol tables as your code transitions from SRAM to main DRAM memory. It’s not uncommon to have to muck around with other registers, too (like forcing your CPU out of Thumb mode). And on top of that, I’ve found that some U-Boot ports remux the JTAG pins (either due to alternate functionality or to save power), and the JTAG chains on some parts are quite complex and require using less-commonly used pins and features of the interface. Oh, and since you have an underlying Boot ROM that executes first, JTAG adapters can screw that up, too.

When most people think of DDR routing, length-tuning is the first thing that comes to mind. If you use a decent PCB design package, setting up length-tuning rules and laying down meandered routes is so trivial to do that most designers don’t think anything of it — they just go ahead and length-match everything that’s relatively high-speed — SDRAM, SDIO, parallel CSI / LCD, etc. Other than adding a bit of design time, there’s no reason not to maximize your timing margins, so this makes sense.

For the data groups, DDR3 uses on-die termination (ODT), configurable for 40, 60, or 120 ohm on memory chips (and usually the same or similar on the CPU) along with adjustable output impedance drivers. ODT is only enabled on the receiver’s end, so depending on whether you’re writing data or reading data, ODT will either be enabled on the memory chip, or on the CPU.

When building embedded Linux systems, we need to start by compiling all the off-the-shelf software we plan on running — the bootloader, kernel, and userspace libraries and applications. We’ll have to write and customize shell scripts and configuration files, and we’ll also often write applications from scratch. It’s really a totally different development process, so let’s talk about some prerequisites.

If you want to build a software image for a Linux system, you’ll need a Linux system. If you’re also the person designing the hardware, this is a bit of a catch-22 since most PCB designers work in Windows. While Windows Subsystem for Linux will run all the software you need to build an image for your board, WSL currently has no ability to pass through USB devices, so you won’t be able to use hardware debuggers (or even a USB microSD card reader) from within your Linux system. And since WSL2 is Hyper-V-based, once it’s enabled, you won’t be able to launch VMware, which uses its own hypervisor((Though a beta version of VMware will address this)).

Consequently, I recommend users skip over all the newfangled tech until it matures a bit more, and instead just spin up an old-school VMware virtual machine and install Linux on it. In VMware you can pass through your MicroSD card reader, debug probe, and even the device itself (which usually has a USB bootloader).

Building images is a computationally heavy and highly-parallel workload, so it benefits from large, high-wattage HEDT/server-grade multicore CPUs in your computer — make sure to pass as many cores through to your VM as possible. Compiling all the software for your target will also eat through storage quickly: I would allocate an absolute minimum of 200 GB if you anticipate juggling between a few large embedded Linux projects simultaneously.

While your specific project will likely call for much more software than this, these are the five components that go into every modern embedded Linux system((Yes, there are alternatives to these components, but the further you move away from the embedded Linux canon, the more you’ll find yourself on your own island, scratching your head trying to get things to work.)):

A cross toolchain, usually GCC + glibc, which contains your compiler, binutils, and C library. This doesn’t actually go into your embedded Linux system, but rather is used to build the other components.

As you’re reading through this, don’t get overwhelmed: if your hardware is reasonably close to an existing reference design or evaluation kit, someone has already gone to the trouble of creating default configurations for you for all of these components, and you can simply find and modify them. As an embedded Linux developer doing BSP work, you’ll spend way more time reading other people’s code and modifying it than you will be writing new software from scratch.

Just like with microcontroller development, when working on embedded Linux projects, you’ll write and compile the software on your computer, then remotely test it on your target. When programming microcontrollers, you’d probably just use your vendor’s IDE, which comes with a cross toolchain — a toolchain designed to build software for one CPU architecture on a system running a different architecture. As an example, when programming an ATTiny1616, you’d use a version of GCC built to run on your x64 computer but designed to emit AVR code. With embedded Linux development, you’ll need a cross toolchain here, too (unless you’re one of the rare types coding on an ARM-based laptop or building an x64-powered embedded system).

Unfortunately, our CPU’s boot ROM can’t directly load our kernel. Linux has to be invoked in a specific way to obtain boot arguments and a pointer to the device tree and initrd, and it also expects that main memory has already been initialized. Boot ROMs also don’t know how to initialize main memory, so we would have nowhere to store Linux. Also, boot ROMs tend to just load a few KB from flash at the most — not enough to house an entire kernel. So, we need a small program that the boot ROM can load that will initialize our main memory and then load the entire (usually-multi-megabyte) Linux kernel and then execute it.

U-Boot has to know a lot of technical details about your system. There’s a dedicated board.c port for each supported platform that initializes clocks, DRAM, and relevant memory peripherals, along with initializing any important peripherals, like your UART console or a PMIC that might need to be configured properly before bringing the CPU up to full speed. Newer board ports often store at least some of this configuration information inside a Device Tree, which we’ll talk about later. Some of the DRAM configuration data is often autodetected, allowing you to change DRAM size and layout without altering the U-Boot port’s code for your processor ((If you have a DRAM layout on the margins of working, or you’re using a memory chip with very different timings than the one the port was built for, you may have to tune these values)). You configure what you want U-Boot to do by writing a script that tells it which device to initialize, which file/address to load into which memory address, and what boot arguments to pass along to Linux. While these can be hard-coded, you’ll often store these names and addresses as environment variables (the boot script itself can be stored as a bootcmd environment variable). So a large part of getting U-Boot working on a new board is working out the environment.
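As a rough illustration of what “working out the environment” looks like, here’s a small Python sketch that holds a U-Boot-style environment in a dictionary and expands the `${...}` references in bootcmd. The variable names kernel_addr_r, fdt_addr_r, bootargs, and bootcmd, and the fatload and bootz commands, are standard U-Boot ones; the load addresses, file names, MMC device numbers, and console name are placeholders I made up, so don’t copy them onto a real board.

```python
import re

# Hypothetical environment: addresses, file names, and device numbers are
# placeholders for illustration, not values from any real board port.
ENV = {
    "kernel_addr_r": "0x42000000",
    "fdt_addr_r": "0x43000000",
    "bootargs": "console=ttyS0,115200 root=/dev/mmcblk0p2 rootwait",
    "bootcmd": (
        "fatload mmc 0:1 ${kernel_addr_r} zImage; "
        "fatload mmc 0:1 ${fdt_addr_r} board.dtb; "
        "bootz ${kernel_addr_r} - ${fdt_addr_r}"
    ),
}

def expand(command, env):
    """Substitute ${name} references the way the boot script would see them."""
    return re.sub(r"\$\{(\w+)\}", lambda m: env.get(m.group(1), ""), command)

def render_env(env):
    """Render the environment as plain key=value lines, one variable per line."""
    return "\n".join(f"{key}={value}" for key, value in env.items()) + "\n"

print(expand(ENV["bootcmd"], ENV))
print(render_env(ENV))
```

The point of keeping addresses in variables is that moving the kernel to a different load address, or booting from a different device, means changing one variable rather than editing the script itself.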

Here’s the headline act. Once U-Boot turns over the program counter to Linux, the kernel initializes itself, loads its own set of device drivers((Linux does not call into U-Boot drivers the way that an old PC operating system like DOS makes calls into BIOS functions.)) and other kernel modules, and calls your init program.

To get your board working, the necessary kernel hacking will usually be limited to enabling filesystems, network features, and device drivers — but there are more advanced options to control and tune the underlying functionality of the kernel.

Turning drivers on and off is easy, but actually configuring these drivers is where new developers get hung up. One big difference between embedded Linux and desktop Linux is that embedded Linux systems have to manually pass the hardware configuration information to Linux through a Device Tree file or platform data C code, since we don’t have EFI or ACPI or any of that desktop stuff that lets Linux auto-discover our hardware.

We need to tell Linux the addresses and configurations for all of our CPU’s fancy on-chip peripherals, and which kernel modules to load for each of them. You may think that’s part of the Linux port for our CPU, but in Linux’s eyes, even peripherals that are literally inside our processor— like LCD controllers, SPI interfaces, or ADCs — have nothing to do with the CPU, so they’re handled totally separately as device drivers stored in separate kernel modules.

And then there are all the off-chip peripherals on our PCB. Sensors, displays, and basically all other non-USB devices need to be manually instantiated and configured. This is how we tell Linux that there’s an MPU6050 IMU attached to I2C0 with an address of 0x68, or an OV5640 image sensor attached to a MIPI D-PHY. Many device drivers have additional configuration information, like a prescaler factor, update rate, or interrupt pin use.

The old way of doing this was manually adding C structs to a platform_data C file for the board, but the modern way is with a Device Tree, which is a configuration file that describes every piece of hardware on the board in a weird quasi-C/JSONish syntax. Each logical piece of hardware is represented as a node that is nested under its parent bus/device; its node is adorned with any configuration parameters needed by the driver.
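To make the nesting concrete, here’s a minimal Python sketch that renders a node-under-its-parent-bus structure as DTS-style text, using the MPU6050-at-0x68 case as the example. The render_node helper is hypothetical, the `&i2c0` label depends entirely on your SoC’s device tree, and although "invensense,mpu6050" is the compatible string used by the mainline driver as far as I know, check the binding documentation for your kernel version before relying on it.

```python
def render_node(name, props, children=(), indent=0):
    """Return the lines of one device tree node, with children nested inside it."""
    pad = "\t" * indent
    lines = [f"{pad}{name} {{"]
    for key, value in props.items():
        if value is None:
            lines.append(f"{pad}\t{key};")            # boolean (presence-only) property
        else:
            lines.append(f"{pad}\t{key} = {value};")  # value is already DTS-formatted
    for child in children:
        # Each child is a (name, props) or (name, props, children) tuple.
        lines.extend(render_node(*child, indent=indent + 1))
    lines.append(f"{pad}}};")
    return lines

# The IMU is a child of the I2C bus it hangs off; "reg" carries its bus address.
imu = ("imu@68", {"compatible": '"invensense,mpu6050"', "reg": "<0x68>"})

# "&i2c0" is an assumed label; the real one comes from your SoC's .dtsi file.
lines = render_node("&i2c0", {"status": '"okay"'}, [imu])
print("\n".join(lines))
```

The output is a bus node containing a `status` property and an indented `imu@68` child with its own `compatible` and `reg` properties, which is the shape the kernel uses to match a driver to the device and tell it where the device lives.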

A DTS file is not compiled into the kernel, but rather, into a separate .dtb binary blob file that you have to deal with (save to your flash memory, configure U-Boot to load, etc)((OK, I lied. You can actually append the DTB to the kernel so U-Boot doesn’t need to know about it. I see this done a lot with simple systems that boot from raw Flash devices.)). I think beginners have a reason to be frustrated at this system, since there are basically two separate places you have to think about device drivers: Kconfig and your DTS file. If these get out of sync, it can be frustrating to diagnose, since you won’t get a compilation error if your device tree contains nodes that there are no drivers for, or if your kernel is built with a driver that isn’t actually referenced in the DTS file, or if you misspell a property or something (since all bindings are resolved at runtime).

Once Linux has finished initializing, it runs init. This is the first userspace program invoked on start-up. Our init program will likely want to run some shell scripts, so it’d be nice to have a sh we can invoke. Those scripts might touch or echo or cat things. It looks like we’re going to need to put a lot of userspace software on our root filesystem just to get things to boot — now imagine we want to actually login (getty), list a directory (ls), configure a network (ifconfig), or edit a text file (vi, emacs, nano, vim, flamewars ensue).

BusyBox configuration is obvious and uses the same Kconfig-based system that Linux and U-Boot use. You simply tell it which packages (and options) you wish to build the binary image with. There’s not much else to say — though a minor “gotcha” for new users is that the lightweight versions of these tools often have fewer features and don’t always support the same syntax/arguments.

Linux requires a root filesystem; it needs to know where the root filesystem is and what filesystem format it uses, and this parameter is part of its boot arguments.
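As a small sketch of what that looks like in practice, here’s a Python helper that assembles a kernel command line. The root=, rootfstype=, rootwait, and console= parameters are standard kernel options; the device and console names in the example call are assumptions that depend on your board and boot media.

```python
def build_bootargs(root_dev, fstype, console=None, rootwait=True):
    """Assemble a kernel command line that names the root filesystem and its format."""
    args = []
    if console:
        args.append(f"console={console}")  # where kernel messages go
    args.append(f"root={root_dev}")        # block device holding the rootfs
    args.append(f"rootfstype={fstype}")    # filesystem format on that device
    if rootwait:
        args.append("rootwait")            # wait for slow media (e.g. SD) to appear
    return " ".join(args)

# Hypothetical board: second partition of the first MMC device, formatted ext4.
print(build_bootargs("/dev/mmcblk0p2", "ext4", console="ttyS0,115200"))
# console=ttyS0,115200 root=/dev/mmcblk0p2 rootfstype=ext4 rootwait
```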

If the preceding section made you dizzy, don’t worry: there’s really no reason to hand-configure and hand-compile all of that stuff individually. Instead, everyone uses build systems — the two big ones being Yocto and Buildroot — to automatically fetch and compile a full toolchain, U-Boot, Linux kernel, BusyBox, plus thousands of other packages you may wish, and install everything into a target filesystem ready to deploy to your hardware.

Yes, on their own, both U-Boot and Linux have defconfigs that do the heavy lifting. For example, by using a U-Boot defconfig, someone has already done the work for you in configuring U-Boot to initialize a specific boot media and boot off it (including setting up the SPL code, activating the appropriate peripherals, and writing a reasonable U-Boot environment and boot script).

But the build system default configurations go a step further and integrate all these pieces together. For example, assume you want your system to boot off a MicroSD card, with U-Boot written directly at the beginning of the card, followed by a FAT32 partition containing your kernel and device tree, and an ext4 root filesystem partition. U-Boot’s defconfig will spit out the appropriate bin file to write to the SD card, and Linux’s defconfig will spit out the appropriate vmlinuz file, but it’s the build system itself that will create a MicroSD image, write U-Boot to it, create the partition scheme, format the filesystems, and copy the appropriate files to them. Out will pop an “image.sdcard” file that you can write to a MicroSD card.

Buildroot started as a bunch of Makefiles strung together to test uClibc against a pile of different commonly-used applications to help squash bugs in the library. Today, the infrastructure is the same, but it’s evolved to be the easiest way to build embedded Linux images.

By using the same Kconfig system used in Linux, U-Boot, and BusyBox, you configure everything — the target architecture, the toolchain, Linux, U-Boot, target packages, and overall system configuration — by simply running make menuconfig. It ships with tons of canned defconfigs that let you get a working image for your dev board by loading that config and running make. For example, make raspberrypi3_defconfig && make will spit out an SD card image you can use to boot your Pi off of.

Buildroot can also pass you off to the respective Kconfigs for Linux, U-Boot, or BusyBox — for example, running make linux-menuconfig will invoke the Linux menuconfig editor from within the Buildroot directory. I think beginners will struggle to know what is a Buildroot option and what is a Linux kernel or U-Boot option, so be sure to check in different places.

And, honestly, for run-and-gun projects, you probably won’t even bother creating an official board or defconfig; you’ll just hack at the existing ones. We can do this because Buildroot is crafty in lots of good ways designed to make it easy to make stuff work. For starters, most of the relevant settings are part of the defconfig file that can easily be modified and saved, so for very simple projects, you won’t have to make further modifications. Think about toggling on a device driver: in Buildroot, you can invoke Linux’s menuconfig, modify things, save that config back to disk, and update your Buildroot config file to use your local Linux config, rather than the one in the source tree. Buildroot knows how to pass out-of-tree DTS files to the compiler, so you can create a fresh DTS file for your board without even having to put it in your kernel source tree or create a machine or anything. And if you do need to modify the kernel source, you can hardwire the build process to bypass the specified kernel and use an on-disk one (which is great when doing active development).

The chink in the armor is that Buildroot is brain-dead at incremental builds. For example, if you load your defconfig, make, and then add a package, you can probably just run make again and everything will work. But if you change a package option, running make won’t automatically pick that up, and if there are other packages that need to be rebuilt as a result of that upstream dependency, Buildroot won’t rebuild those either. You can use the make [package]-rebuild target, but you have to understand the dependency graph connecting your different packages. Half the time, you’ll probably just give up and do make clean && make ((Just remember to save your Linux, U-Boot, and BusyBox configuration modifications first, since they’ll get wiped out.)) and end up rebuilding everything from scratch, which, even with the compiler cache enabled, takes forever. Honestly, Buildroot is the principal reason that I upgraded to a Threadripper 3970X during this project.
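“Understanding the dependency graph” boils down to a reverse-dependency walk: find everything that depends, directly or transitively, on the package you changed. Here’s a minimal Python sketch of that idea. The package graph is made up for illustration, and this is not how Buildroot itself tracks dependencies; it is just the bookkeeping you’d otherwise do in your head.

```python
from collections import deque

def packages_to_rebuild(depends_on, changed):
    """Return `changed` plus every package that depends on it, directly or not."""
    # Invert the graph: for each package, record who depends on it.
    dependents = {}
    for pkg, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(pkg)

    # Breadth-first walk outward from the changed package.
    seen = {changed}
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for pkg in dependents.get(current, ()):
            if pkg not in seen:
                seen.add(pkg)
                queue.append(pkg)
    return seen

# Hypothetical package graph: each key lists the packages it depends on.
DEPENDS_ON = {
    "zlib": [],
    "openssl": ["zlib"],
    "libcurl": ["openssl", "zlib"],
    "my-app": ["libcurl"],
    "busybox": [],
}

print(sorted(packages_to_rebuild(DEPENDS_ON, "openssl")))
# ['libcurl', 'my-app', 'openssl']
```

Note that this only tells you which packages are affected, not the order to rebuild them in; you’d still need to rebuild dependencies before the things that use them.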

There are many layers in the official Yocto repos. Layers can be licensed and distributed separately, so many companies maintain their own “Yocto layers” (e.g., meta-atmel), and the big players actually maintain their own distribution that they build with Yocto. TI’s ProcessorSDK is built using their Arago Project infrastructure, which is built on top of Yocto. The same goes for ST’s OpenSTLinux Distribution. Even though Yocto distributors make heavy use of Google’s repo tool, getting a set of all the layers necessary to build an image can be tedious, and it’s not uncommon for me to run into strange bugs that occur when different vendors’ layers collide.

But here’s where Yocto falls flat for me as a hardware person: it has absolutely no interest in helping you build images for the shiny new custom board you just made. It is not a tool for quickly hacking together a kernel/U-Boot/rootfs during the early stages of prototyping (say, during this entire blog project). It wasn’t designed for that, so architectural decisions they made ensure it will never be that. It’s written in a very software-engineery way that values encapsulation, abstraction, and generality above all else. It’s not hard-coded to know anything, so you have to modify tons of recipes and create clunky file overlays whenever you want to do even the simplest stuff. It doesn’t know what DTS files are, so it doesn’t have a “quick trick” to compile Linux with a custom one. Even seemingly mundane things — like using menuconfig to modify your kernel’s config file and save that back somewhere so it doesn’t get wiped out — become ridiculous tasks. Just read through Section 1 of this Yocto guide to see what it takes to accomplish the equivalent of Buildroot’s make linux-savedefconfig((Alright, to be fair: many kernel recipes are set up with a hardcoded defconfig file inside the recipe folder itself, so you can often just manually copy over that file with a generated defconfig file from your kernel build directory — but this relies on your kernel recipe being set up this way)). Instead, if I plan on having to modify kernel configurations or DTS files, I usually resort to the nuclear option: copy the entire kernel somewhere else and then set the kernel recipe’s SRC_URI to that.

It may not seem like a big distinction when you’re getting started, but Yocto builds a Linux distribution, while Buildroot builds a system image. Yocto knows what each software component is and how those components depend on each other. As a result, Yocto can build a package feed for your platform, allowing you to remotely install and update software on your embedded product just as you would a desktop or server Linux instance. That’s why Yocto thinks of itself not as a Linux distribution, but as a tool to build Linux distributions. Whether you use that feature or not is a complicated decision — I think most embedded Linux engineers prefer to do whole-image updates at once to ensure there’s no chance of something screwy going on. But if you’re building a huge project with a 500 MB root filesystem, pushing images like that down the tube can eat through a lot of bandwidth (and annoy customers with “Downloading….” progress bars).

Allwinner F1C200s: a 400 MHz ARM9 SIP with 64 MB (or 32 MB for the F1C100s) of DDR SDRAM, packaged in an 88-pin QFN. Suitable for basic HMI applications with a parallel LCD interface, built-in audio codec, USB port, one SDIO interface, and little else.

Nuvoton NUC980: 300 MHz ARM9 SIP available in a variety of QFP packages and memory configurations. No RGB LCD controller, but has an oddly large number of USB ports and controls-friendly peripherals.

Rockchip RK3308: A quad-core 1.3 GHz Cortex-A35 that’s a much newer design than any of the other parts reviewed. Tailor-made for smart speakers, this part has enough peripherals to cover general embedded Linux work while being one of the easiest Rockchip parts to design around.

The Microchip, NXP, ST, and TI parts are what I would consider general-purpose MPUs: designed to drop into a wide variety of industrial and consumer connectivity, control, and graphical applications. They have 10/100 ethernet MACs (obviously requiring external PHYs to use), a parallel RGB LCD interface, a parallel camera sensor interface, two SDIO interfaces (typically one used for storage and the other for WiFi), and up to a dozen each of UARTs, SPI, I2C, and I2S interfaces. They often have extensive timers and a dozen or so ADC channels. These parts are also packaged in large BGAs that ball-out 100 or more I/O pins that enable you to build larger, more complicated systems.

On the other hand, the Allwinner and Rockchip parts are much more purpose-built for consumer goods — usually very specific consumer goods. With a built-in Ethernet PHY and a parallel and MIPI camera interface, the V3s is obviously designed as an IP camera. The F1C100s — a part with no Ethernet but with a hardware video decoder — is built for low-cost video playback applications. The A33 — with LVDS / MIPI display support, GPU acceleration, and no Ethernet — is for entry-level Android tablets. None of these parts have more than a couple UART, I2C, or SPI interfaces, and you might get a single ADC input and PWM channel on them, with no real timer resources available. But they all have built-in audio codecs — a feature not found anywhere else — along with hardware video decoding (and, in some cases, encoding). Unfortunately, with Allwinner, you always have to put a big asterisk by these hardware peripherals, since many of them will only work when using the old kernel that Allwinner distributes — along with proprietary media encoding/decoding libraries. Mainline Linux support will be discussed more for each part separately.

But, being Nuvoton, this chip has some (mostly good) weirdness up its sleeve. Unlike the other mainstream parts that were packaged in ~270 ball BGAs, the NUC980 comes in 216-pin, 128-pin, and even 64-pin QFP packages. I’ve never had issues hand-placing 0.8mm pitch BGAs, but there’s definitely a delight that comes from running Linux on something that looks like it could be a little Cortex-M microcontroller.

Another weird feature of this chip is that in addition to the 2 USB high-speed ports, there are 6 additional “host lite” ports that ru
