gamma correction for lcd monitors for sale

Problems like extremely poor display of shadow areas, blown-out highlights, or images prepared on Macs appearing too dark on Windows computers are often due to gamma characteristics. In this session, we'll discuss gamma, which has a significant impact on color reproduction on LCD monitors. Understanding gamma is useful in both color management and product selection. Users who value picture quality are advised to check this information.

* Below is the translation from the Japanese of the ITmedia article "Is the Beauty of a Curve Decisive for Color Reproduction? Learning About LCD Monitor Gamma" published July 13, 2009. Copyright 2011 ITmedia Inc. All Rights Reserved.

The term gamma comes from the third letter of the Greek alphabet, written Γ in upper case and γ in lower case. The word gamma occurs often in everyday life, in terms like gamma rays, the star called Gamma Velorum, and gamma-GTP. In computer image processing, the term generally refers to the brightness of intermediate tones (gray).

Let's discuss gamma in a little more detail. In a PC environment, the hardware used when working with color includes monitors, printers, and scanners. When using these devices connected to a PC, we input and output color information to and from each device. Since each device has its own unique color handling characteristics (or tendencies), color information cannot be output exactly as input. The color handling characteristics that arise in input and output are known as gamma characteristics.

While certain monitors can also handle color at 10 bits per RGB color ($2^{10} = 1024$ tones per channel), or $1024^3$ (approximately 1.07 billion) colors, operating system and application support for such monitors has lagged. Currently, some 16.77 million colors ($256^3$), with eight bits per RGB color, is the standard color environment for PC monitors.
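These color counts follow from simple arithmetic: n bits give 2^n tones per channel, and three independent channels give (2^n)^3 colors. A short Python sketch to check the figures:

```python
def color_count(bits_per_channel: int) -> int:
    """Number of RGB colors for a given bit depth per channel."""
    levels = 2 ** bits_per_channel  # tones available for each of R, G and B
    return levels ** 3              # every combination of the three channels

for bits in (8, 10, 12):
    # 8-bit: 16,777,216; 10-bit: 1,073,741,824; 12-bit: 68,719,476,736
    print(f"{bits}-bit: {2 ** bits} tones/channel, {color_count(bits):,} colors")
```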

When a PC and a monitor exchange color information, the ideal is a relationship in which the eight-bit color information per RGB color input from the PC to the monitor can be output accurately—that is, a 1:1 relationship for input:output. However, since gamma characteristics differ between PCs and monitors, color information is not transmitted according to a 1:1 input:output relationship.

How colors ultimately look depends on the relationship resulting from the gamma values (γ) that numerically represent the gamma characteristics of each hardware device. If the color information input is represented as x and output as y, both normalized to the range 0 to 1, the relationship applying the gamma value can be represented by the equation $y = x^{\gamma}$.

Gamma characteristics are represented by the equation $y = x^{\gamma}$. At the ideal gamma value of 1.0, y = x; but since each monitor has its own unique gamma characteristics (gamma values), y generally doesn't equal x. The above graph depicts a curve adjusted to the standard Windows gamma value of 2.2. The standard gamma value for the Mac OS is 1.8.
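A minimal Python sketch of this relationship, assuming 8-bit input codes normalized to 0..1 and an idealized display that follows the power law exactly (real panels deviate from it, as discussed later in this article):

```python
def apply_gamma(code: int, gamma: float, max_code: int = 255) -> float:
    """Relative output luminance for an input code, using y = x ** gamma."""
    x = code / max_code   # normalize the 8-bit input to the range 0..1
    return x ** gamma     # normalized output, also in 0..1

# A mid-gray input (code 128) comes out darker as gamma rises.
for gamma in (1.0, 1.8, 2.2):
    print(f"gamma {gamma}: code 128 -> {apply_gamma(128, gamma):.3f} of full brightness")
```

For the same input, the lower exponent yields more light, which is why an image shown at gamma 1.8 looks brighter overall than at 2.2.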

Ordinarily, the nature of monitor gamma is such that intermediate tones tend to appear dark. To compensate, the data signal is sent with its intermediate tones already brightened, so that the overall input:output balance approaches 1:1 and color information is exchanged accurately. Balancing color information to match device gamma characteristics in this way is called gamma correction.

A simple gamma correction system. If we account for monitor gamma characteristics and input color information with gamma values adjusted accordingly (i.e., color information with intermediate tones brightened), color handling approaches the y = x ideal. Since gamma correction generally occurs automatically, users usually obtain correct color handling on a PC monitor without much effort. However, the precision of gamma correction varies from manufacturer to manufacturer and from product to product (see below for details).
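The correction described above can be sketched in a few lines of Python. This assumes an idealized display that follows $y = x^{\gamma}$ exactly, with no quantization: raising the signal to the power 1/γ beforehand brightens the intermediate tones and cancels the display's curve.

```python
def encode(linear: float, display_gamma: float) -> float:
    """Gamma-correct a linear value (0..1) before it is sent to the display."""
    return linear ** (1.0 / display_gamma)   # brightens intermediate tones

def display(signal: float, display_gamma: float) -> float:
    """Idealized monitor response: output = signal ** gamma."""
    return signal ** display_gamma

GAMMA = 2.2
for intended in (0.0, 0.18, 0.5, 1.0):
    shown = display(encode(intended, GAMMA), GAMMA)
    print(f"intended {intended:.2f} -> signal {encode(intended, GAMMA):.3f} -> shown {shown:.2f}")
```

In practice the correction is applied to quantized 8-bit values and the real panel does not follow a pure power law, so the result only approaches y = x.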

In most cases, if a computer runs the Windows operating system, we can achieve close to ideal colors by using a monitor with a gamma value of 2.2. This is because Windows assumes a monitor with a gamma value of 2.2, the standard gamma value for Windows. Most LCD monitors are designed based on a gamma value of 2.2.

The standard monitor gamma value for the Mac OS is 1.8. The same concept applies as in Windows. We can obtain color reproduction approaching the ideal by connecting a Mac to a monitor configured with a gamma value of 1.8.

An example of the same image displayed at gamma values of 2.2 (photo at left) and 1.8 (photo at right). At a gamma value of 1.8, the overall image appears brighter. The LCD monitor used is EIZO's 20-inch wide-screen EV2023W FlexScan model (ITmedia site).

To equalize color handling in mixed Windows and Mac environments, it's a good idea to standardize the gamma values between the two operating systems. Changing the gamma value in the Mac OS is easy, but Windows provides no equivalent standard feature. Since Windows users perform color adjustments through the graphics card driver or separate color-adjustment software, changing the gamma value can be an unexpectedly complex task. If the monitor used in a Windows environment offers a feature for adjusting gamma values, obtaining accurate results will likely be easier.

If we know that a certain image was created in a Mac OS environment with a gamma value of 1.8, or if an image received from a Mac user appears unnaturally dark, changing the monitor gamma setting to 1.8 should show the image with the colors intended by the creator.

Eizo Nanao's LCD monitors allow users to configure the gamma value from the OSD menu, making this procedure easy. In addition to the default gamma value of 2.2, one can choose from multiple settings, including the Mac OS standard of 1.8.

To digress slightly, standard gamma values differ between Windows and Mac OS for reasons related to the design concepts and histories of the two operating systems. Windows adopted a gamma value corresponding to television (2.2), while the Mac OS adopted a gamma value corresponding to commercial printers (1.8). The Mac OS has a long history of association with commercial printing and desktop publishing applications, for which 1.8 remains the basic gamma value, even now. On the other hand, a gamma value of 2.2 is standard in the sRGB color space, the standard for the Internet and for digital content generally, and in Adobe RGB, whose use has expanded for wide-gamut printing.

Given the proliferating use of color spaces like sRGB and Adobe RGB, plans call for the latest Mac OS, Mac OS X 10.6 Snow Leopard, scheduled for release by Apple in September 2009, to switch from a default gamma value of 1.8 to 2.2. A gamma value of 2.2 is expected to become the future mainstream for Macs.

Earlier, we mentioned that the standard gamma value in a Windows environment is 2.2 and that many LCD monitors can be adjusted to a gamma value of 2.2. However, due to the individual tendencies of LCD monitors (or the LCD panels installed in them), it's hard to achieve a smooth 2.2 gamma curve.

Traditionally, LCD panels have featured S-shaped gamma curves, with ups and downs here and there and curves that diverge by RGB color. This phenomenon is particularly marked for dark and light tones, often appearing to the eye of the user as tone jumps, color deviations, and color breakdown.

The internal gamma correction feature incorporated into LCD monitors that emphasize picture quality allows such irregularities in the gamma curve to be corrected to approach the ideal of $y = x^{\gamma}$. Device specs provide one especially useful clue to whether a monitor has an internal gamma correction feature: a monitor very likely supports internal gamma correction if its maximum number of colors is listed at roughly one billion (10-bit) or 68 billion (12-bit), or if the specs state that the look-up table (LUT) is 10- or 12-bit.

An internal gamma correction feature increases the gradation of colors (multi-gradation) and reallocates them. While the input from a PC to an LCD monitor consists of eight bits per RGB color, within the LCD monitor this is expanded to 10 bits (about 1.07 billion colors) or 12 bits (about 68.7 billion colors). The optimal color at eight bits per RGB color (about 16.77 million colors) is then identified by referring to the LUT and displayed on screen. This corrects irregularities in the gamma curve and deviations in each RGB color, bringing the on-screen output closer to the ideal of $y = x^{\gamma}$.

Let's look at a little more information on the LUT. The LUT is a table containing the results of certain calculations performed in advance. The results for certain calculations can be obtained simply by referring to the LUT, without actually performing the calculations. This accelerates processing and reduces the load on a system. The LUT in an LCD monitor identifies the optimal eight-bit RGB colors from multi-gradation color data of 10 or more bits.
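To make the idea concrete: instead of evaluating a power function for every pixel, a 256-entry table can be computed once and then indexed. This is a minimal Python sketch assuming a plain 2.2 power-law correction; the table inside a real monitor holds panel-specific values derived from measurements.

```python
GAMMA = 2.2  # assumed correction exponent for this illustration

def correct_direct(code: int) -> int:
    """Gamma-correct one 8-bit value by evaluating the power function each time."""
    return round(255 * (code / 255) ** (1 / GAMMA))

# Precompute the results once: one table entry per possible 8-bit input.
LUT = [correct_direct(code) for code in range(256)]

def correct_with_lut(code: int) -> int:
    """Same result, obtained by a single table lookup instead of a calculation."""
    return LUT[code]

pixels = [0, 64, 128, 192, 255]
print([correct_with_lut(p) for p in pixels])
```

For an image with millions of pixels, the power function is evaluated only 256 times; every other pixel costs one lookup.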

An overview of an internal gamma correction feature. Eight-bit RGB color information input from the PC is subjected to multi-gradation to 10 or more bits. This is then remapped to the optimal eight-bit RGB tone by referring to the LUT. Following internal gamma correction, the results approach the ideal gamma curve, dramatically improving on-screen gradation and color reproduction.

Eizo Nanao's LCD monitors proactively employ internal gamma correction features. In models designed especially for high picture quality and in some models in the ColorEdge series designed for color management, eight-bit RGB input signals from the PC are subjected to multi-gradation, and calculations are performed at 14 or 16 bits. A key reason for performing calculations at bit counts higher than the LUT bit count is to improve gradation still further, particularly the reproduction of darker tones. Users seeking high-quality color reproduction should probably choose a model like these.

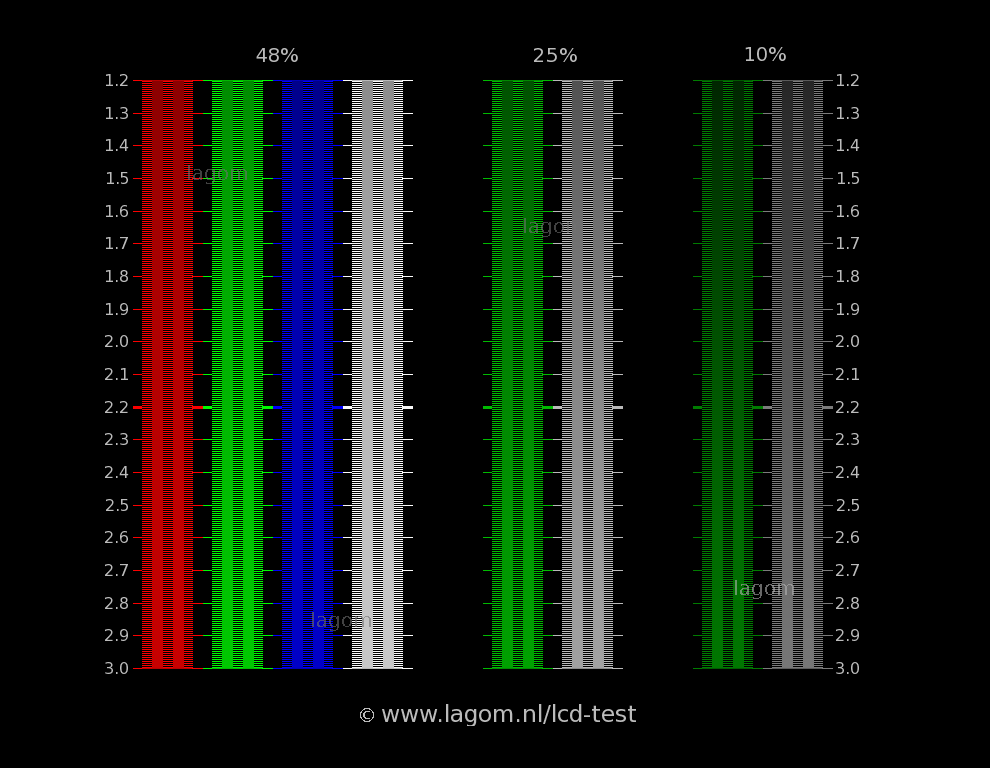

In conclusion, based on this session's discussion, we've prepared image patterns that make it easy to check the gamma value of an LCD monitor. Looking directly at your LCD monitor, move back slightly from the screen and gaze at the test images with your eyes half-closed. Visually compare the square outlines and the stripes around them, looking for the pattern in which they appear to have the same tone of gray (brightness). The pattern in which the square frame and the surrounding stripes appear closest in brightness indicates the approximate gamma value to which the monitor is currently set.
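Charts of this kind usually rely on a simple principle, sketched below in Python. It assumes the common design of a solid gray area next to fine alternating black and white lines; we don't know the exact code values used in these particular images. The stripes average to 50% luminance whatever the monitor's gamma, while a solid gray of code v displays $(v/255)^{\gamma}$, so the matching gray depends on γ.

```python
def matching_gray(gamma: float) -> int:
    """8-bit solid gray whose light output equals the average of 50/50 black-white stripes."""
    # Fine black/white stripes average to 50% luminance whatever the gamma,
    # while a solid code v displays (v / 255) ** gamma.
    return round(255 * 0.5 ** (1 / gamma))

def patch_luminance(code: int, monitor_gamma: float) -> float:
    """Relative luminance of a solid gray patch on a monitor with the given gamma."""
    return (code / 255) ** monitor_gamma

for g in (1.8, 2.0, 2.2, 2.4):
    print(f"gamma {g}: solid gray {matching_gray(g)} matches the stripes")

patch = matching_gray(2.2)
print(patch_luminance(patch, 2.5) < 0.5)  # a higher-gamma monitor shows this patch darker
print(patch_luminance(patch, 1.8) > 0.5)  # a lower-gamma monitor shows it brighter
```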

Based on a gamma value of 2.2, if the square frame appears dark, the LCD monitor's gamma value is low. If the square frame appears bright, the gamma value is high. You can adjust the gamma value by changing the LCD monitor's brightness settings or by adjusting brightness in the driver menu for the graphics card.

Naturally, it's even easier to adjust the gamma if you use a model designed for gamma value adjustments, like an EIZO LCD monitor. For even better color reproduction, you can set the gamma value and optimize color reproduction by calibrating your monitor.

Monitors for color grading offer the precise colors and performance required to get movies and photos looking exactly the way you want them. Typical consumer-grade monitors display a relatively limited array of colors and do so without much concern for accuracy or consistency. That doesn’t work in a professional setting. The best monitors for color grading allow creative pros to dial in their color, brightness, and a bevy of other settings that can have a profound effect on how your final products look.

Few segments in the consumer electronics market require consumers to navigate more complex feature sets and spec lists than monitors. Here are some essential terms and features to understand before making a new monitor purchase.

Monitors have grown in recent years. While 24 inches was very commonly considered a fairly large monitor just a few years ago, that now falls on the small side of the spectrum. More commonly, 27-inch and even 32-inch monitors dominate the space. When it comes to choosing a size, consider how much room you have on your desk as well as how far you’ll be sitting from your displays. If you like to sit right up on your monitor, then you’re going to expend a lot of neck muscle energy trying to observe everything on a 32-inch screen. Personally, I think 27 inches hits a very sweet spot for my setup. A pair of 27-inch screens works just right for me.

When you make a purchase, remember that you’re going to need to display both the content you’re editing as well as the tools built into the editing software. If you’re planning to get two monitors, make sure you have your whole setup planned in your head before making a purchase.

While 4K resolution is all but essential when it comes to TVs, it’s not necessarily mandatory in monitors just yet. There are some truly great 1440p displays out there with fantastic color performance and excellent brightness that more than make up for fewer pixels.

Creatives running a single-monitor setup may want to shell out the extra money for a 4K display. That leaves room on the screen for the media as well as the editing software UI. Once those tools and panels start taking over a lot of screen real estate, it can be tricky to get a really good look at the clips you’re actually editing together without toggling back and forth between full screen and window mode.

This is where monitors for color grading separate themselves from the mass market offerings. There are four main color spaces to consider when choosing a monitor for color grading: sRGB, Rec. 709, DCI-P3, and Rec. 2020. Each of those represents a set of colors and a few other variables that ensure your display can accurately recreate the picture you’d get from a typical TV, movie projector, or other display tech.

Rec. 709 and sRGB are the smallest and most common spaces. Monitors will often indicate what percentage of the colors from those spaces they can replicate. DCI-P3 is meant more for cinema projection technology, so fewer monitors offer full coverage there. Rec. 2020 is the widest of the gamuts and it’s still relegated to high-end production and playback situations.
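To put rough numbers on "smallest" and "widest," here is a Python sketch that compares the area of each gamut triangle in the CIE 1931 xy chromaticity diagram, using the published red, green and blue primaries of each standard (Adobe RGB is included because several monitors below quote coverage for it). Treat it as a crude proxy only: xy area is not the "percent coverage" figure on spec sheets, which measures overlap, and some vendors compute in the u'v' diagram instead.

```python
# CIE 1931 xy chromaticities of the red, green and blue primaries.
PRIMARIES = {
    "sRGB / Rec. 709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "Adobe RGB":       [(0.640, 0.330), (0.210, 0.710), (0.150, 0.060)],
    "DCI-P3":          [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec. 2020":       [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}

def triangle_area(points):
    """Shoelace formula for the area of a gamut triangle in the xy plane."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2

reference = triangle_area(PRIMARIES["sRGB / Rec. 709"])
for name, pts in PRIMARIES.items():
    area = triangle_area(pts)
    print(f"{name:16s} area {area:.4f}  ({area / reference:.0%} of sRGB)")
```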

While many of the monitors on this list come calibrated out of the box, you’ll likely want an external calibration tool that you can run on a regular basis. Some very high-end monitors include their own calibration hardware that takes readings of the screen’s color and applies that information to create custom color profiles to keep the on-screen picture as accurate as possible.

Without delving too deeply into the types of LCD displays, you’re generally looking for a model with an IPS (in-plane switching) panel. You’ll find various flavors of IPS out there on the market as new ones emerge, but this tech basically ensures that the screen will provide a solid viewing angle and consistent color and brightness performance all the way across the display. Some typical screens get brighter or darker around the edges and lose too much contrast if you’re not looking at them dead-on.

I’ll be clear about this up-front: This is a consumer-grade monitor, but it has the specs and overall qualities it needs for pro work. From a color standpoint, it covers more than 100 percent of the sRGB and Rec. 709 color spaces, which is impressive for a monitor this affordable. It also offers 4K resolution and comes in a 32-inch version if you want to go bigger than 27 inches.

It comes calibrated straight from the factory, but creatives can add an extra layer of accuracy with an external calibration device. The Dualview mode allows you to see two different versions of the same image on screen at the same time. So, if you want to see how it will look in two different color spaces, you don’t need to flip back and forth.

There are a few trade-offs. It’s not a particularly bright monitor, so it will work best if you keep your work environment relatively dim. It does have an anti-glare screen, however, so that helps it battle reflections better than some other models.

If you’re looking to keep as much money in your gear budget as possible, this 27-inch 4K screen comes at a surprisingly low price. It still offers 4K resolution and in-plane switching tech. It covers 99 percent of the sRGB color space, which is more than monitors at this price point typically offer.

You will make some sacrifices when it comes to brightness and connectivity, but this is a great display for those who are just starting out or maybe even need a second monitor but don’t want to spend as much as they did on their main screen.

For some setups, a single large screen can work better than two smaller displays. This 31.5-inch model (which puts it in the 32-inch class) offers 4K resolution, so it can make great use of all that real estate. You can keep an HD version of your media in the playback window while surrounding it with your editing tools.

It has more than just size going for it. Dell’s high-end display covers 99 percent of the sRGB and Rec. 709 color spaces. It also covers 95 percent of the DCI-P3 space, which is up there with even pricier screens.

Connect a laptop via USB-C and this screen can deliver up to 90 W of power, which is enough to provide juice for electricity-hungry MacBook Pros. This display has standard mounting sockets on the back, so you could put two or even three of them together if you wanted to make an ultimate editing station.

Compared to higher-end pro models, this 24-inch screen is actually relatively affordable. Its color support goes beyond anything else on this list to cover the entirety of the Rec. 709 and sRGB spaces. Beyond that, it covers 99 percent of the Adobe RGB space and 98 percent of the DCI-P3 space. That’s great if you’re working on paid professional editing work.

At just 24 inches, it’s relatively small, but you can make that work in a variety of ways. You can keep this as a second monitor for previewing your media while keeping all of your tools and palettes on the other screen.

To ensure that the color stays super-accurate, the monitor has a built-in calibration tool that you can use to measure real-world performance. It can then automatically tweak performance to stay where it should be.

With this kind of color performance, bigger displays with more resolution can easily push north of $5,000, so don’t let this display’s relatively high price throw you.

ViewSonic makes some of the best monitors around. I use a version of this display every day for my work and have for years. I bought it because it hits a sweet spot of size, resolution, features, and cost.

For a monitor under $1,000, it covers a surprising portion of the color spaces. It handles 99 percent of Adobe RGB and 96 percent of the DCI-P3 space. That’s plenty for pro photo and video work. You can connect to the monitor with a single USB-C cable, which delivers both data and power, so it’s an ideal companion for a laptop like a MacBook Pro.

At 27 inches, it’s not huge. And at 1440p, it doesn’t offer massive resolution. But, it’s great if you want to buy a pair of monitors without spending a truly wild amount of money.

If you want something that’s going to be consistent and accurate, you shouldn’t expect to pay anything less than $350 on the very low end. On the high-end, they can easily climb up over $5,000 if you want something that’s ready for studio-grade work.

However much you spend, we recommend also investing in a calibration tool that can help ensure those colors stay accurate over time. Changes in the monitor’s backlight and LCD panel can cause shifts over time and you want to stay ahead of that.

Some high-end monitors include built-in calibration tools that take hardware readings of the on-screen image and tweak the display’s performance for accurate colors. Those models are few and far between, however. You’re likely going to want to buy a color calibration system of your own. I have used Datacolor’s Spyder gear for years and it has worked well for me.

The best way to check a monitor’s color really is with a hardware solution. You want something that will take a real measurement of the on-screen performance and then give your computer feedback about how to dial it in. Again, I recommend the Datacolor Spyder equipment, but there are others out there. Eyeballing it will only get you so far and mistakes can be costly in professional settings.

I have been evaluating and reviewing monitors for Popular Photography and other publications for years. I regularly perform professional photography (and, to a lesser extent, video) jobs that require careful color reproduction. In order to choose the best monitors for color grading, I relied on personal experience, as well as user reviews, editorial feedback, spec comparisons, and information from friends in the industry.

While this list doesn’t represent much of the super-high-end, those models typically fall outside the scope for most people. This list is largely composed of high-end consumer-grade or pro-level monitors meant for everyday use in addition to their creative work applications.

If you’re looking for a solid all-around monitor that can absolutely handle pro photo and video work, the BenQ PD2700U is a heck of a deal. It’s big enough and has high-enough resolution to satisfy everyday tasks. Plus, its color performance is on par with pricier models. Whatever you get, make sure to keep it calibrated and well cared for, because even the best display won’t treat your content right if you abuse it.

Your monitor’s gamma tells you its pixels’ luminance at every brightness level, from 0 to 100%. Lower gamma makes shadows look brighter and can result in a flatter, washed-out image, where it’s harder to see brighter highlights. Higher gamma can make it harder to see details in shadows. Some monitors offer different gamma modes, allowing you to tweak image quality to your preference. The standard for the sRGB color space, however, is a value of 2.2.

Gamma is important because it affects the appearance of dark areas, like blacks and shadows and midtones, as well as highlights. Monitors with poor gamma can either crush detail at various points or wash it out, making the entire picture appear flat and dull. Proper gamma leads to more depth and realism and a more three-dimensional image.

The image gallery below (courtesy of BenQ) shows an image with the 2.2 gamma standard compared to that same image with low gamma and with high gamma.

Typically, if you are running on the Windows operating system, the most accurate color is achieved with a gamma value of 2.2 (for Mac OS, the ideal gamma value is 1.8). So when testing monitors, we strive for a gamma value of 2.2. A monitor’s range of gamma values indicates how far the lowest and highest values differ from the 2.2 standard (the smaller the difference, the better).

In our monitor reviews, we’ll show you gamma charts like the one above, with the x axis representing different brightness levels. The yellow line represents the 2.2 gamma target. The closer the gray line conforms to the yellow line, the better.
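As a rough illustration of how a per-level gamma chart and a gamma range can be derived, here is a Python sketch that estimates gamma at each brightness step as log(relative luminance) / log(stimulus). The measurement values are invented for illustration, and the method ignores the display's black level, which real review tools may handle differently.

```python
import math

# Invented example readings: (stimulus as a fraction of full signal, luminance in cd/m^2).
MEASUREMENTS = [(0.1, 1.2), (0.2, 5.5), (0.3, 13.4), (0.4, 25.0), (0.5, 41.0),
                (0.6, 61.5), (0.7, 86.0), (0.8, 114.0), (0.9, 147.0), (1.0, 182.0)]

def gamma_per_level(data):
    """Gamma at each level, estimated as log(relative luminance) / log(stimulus).

    Luminance is normalized to the brightest (100%) reading; black level is ignored.
    """
    white = max(lum for _, lum in data)
    return [(x, math.log(lum / white) / math.log(x)) for x, lum in data if 0 < x < 1]

def deviation_from(target, gammas):
    """Largest absolute difference between any measured level and the target gamma."""
    return max(abs(g - target) for _, g in gammas)

levels = gamma_per_level(MEASUREMENTS)
values = [g for _, g in levels]
print(f"gamma range {min(values):.2f}-{max(values):.2f}, "
      f"max deviation from 2.2: {deviation_from(2.2, levels):.2f}")
```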

With the SVX-4096 LCD controller board you can have your own gamma correction curve lookup tables together with custom on-screen menu entries to select them.

Being able to calibrate a display system is essential for some applications such as viewing medical images on a DICOM compliant monitor. On the SVX-4096 you can provide us (Digital View) with the look-up table data and we will create a custom labeled on-screen menu entry or RS-232 command to call up those settings. Alternatively we can calibrate your chosen panel kit as an engineering service.

Software, Controller Utility: It is worth having a look at the Controller Utility application, which provides a user interface for modifying the gamma curve. It is a free download for Microsoft Windows and provides access to many other controller functions in addition to gamma curve adjustment.

DICOM note: DICOM (Digital Imaging and Communications in Medicine) is a standard covering the distribution and viewing of medical images. The SVX-4096 DICOM support takes the form of calibration using a color analyzer to build a gamma curve LUT (Lookup Table) for a specified LCD panel and then code this into the firmware of the SVX-4096.

The DICOM LUT is then available as a setting within the OSD commands of the controller. The important point is that this is LCD panel model specific though the good news is that many LCD panels these days meet the criteria for calibration.

Many statements on here are incorrect. Gamma in the signal path is a desired benefit, and a design choice by early video engineers to reduce perceived noise in transmission.

All vacuum tubes, CRTs included, exhibit various non-linearities (see the Langmuir-Child law). CRTs can vary from a "gamma" of 1.5 to over 3.5 (when driven by a voltage signal) depending on various design differences. The nonlinearities were less of an issue with monochrome but became more critical with color, so the NTSC specified a signal gamma of 1/2.2. CRT design and supporting circuits adjust the actual gamma from the Langmuir-Child law (commonly understood as 1.5, but typically higher with CRTs due to a number of factors) to a level in line with the human perception "gamma" of ~2.5. For NTSC, the television set was assumed to have a gamma target of ~2.4,** while PAL indicated ~2.8.

The higher gamma in the old analog broadcast signal standards is specifically there to reduce perceived noise, based on human perception being non-linear. In this use case, the non-linearity is exploited to hide noise through the "companding" effect of gamma-encoding the signal. This is quite academic.

There are a few ways that CRT TV & Monitor design could have been altered to achieve linearity as opposed to a gamma-type curve, but a gamma curve in analog broadcasting reduced apparent noise by 30 dB. Gamma was desirable then AS IT IS NOW.

Gamma is needed even if an LCD monitor could be used in a linear (gamma 1.0) way. The claims here that gamma is no longer needed are complete bunk, and fail to understand the current purpose of applying a pre-emphasis curve.

The computer monitors we use are still either 8 or 10 bit FOR DISPLAY, so all linear images still need to be gamma-adjusted before being sent to the monitor. Why?

Most "good" monitors are just 8 bit per chan, and many are just "6 bit internal" meaning they take an 8 bit per chan image and display as 6 bit per channel. How can they make an acceptable image?

10 bit per channel monitors are rare and expensive (like my NEC PA271W). My NEC can take a 10 bit signal, and uses a 14 bit internal LUT for profiling. But 10 bits is still not enough for linear!

Gamma or some form of preemph/deemph curve is required even for 10 bit. 12 bit is the bare minimum for reasonable linear display, and even then is unacceptable for the feature film industry.
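The bit-depth argument above can be checked with a little arithmetic. This Python sketch assumes an idealized pure power-law display and picks 1% of peak white as an arbitrary "deep shadow" threshold, then counts how many code values land below it for linear versus gamma 2.2 encoding.

```python
def codes_below(bits: int, gamma: float, threshold: float = 0.01) -> int:
    """Count code values whose decoded luminance falls below `threshold` of peak white.

    gamma=1.0 models linear encoding; gamma=2.2 models a typical gamma-encoded signal.
    """
    max_code = 2 ** bits - 1
    return sum(1 for code in range(max_code + 1) if (code / max_code) ** gamma < threshold)

for bits, gamma in [(8, 1.0), (10, 1.0), (12, 1.0), (8, 2.2)]:
    label = "linear" if gamma == 1.0 else f"gamma {gamma}"
    print(f"{bits}-bit {label}: {codes_below(bits, gamma)} codes below 1% luminance")
```

Under these assumptions it reports 3, 11 and 41 shadow codes for 8-, 10- and 12-bit linear encoding, against 32 for 8-bit gamma 2.2 encoding: linear data needs roughly 12 bits to give the shadows the tonal steps that 8-bit gamma-encoded data already has.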

DCI was created for theaters and is its own closed ecosystem, with no reliance on old technologies like CRT. If there was some "advantage" to using a linear (gamma 1.0) space, it would have been used, but it is not.

** While the NTSC specified a signal gamma of 1/2.2, TVs were expected to have a gamma of 2.4, for a system gamma gain. It is useful to point out that Rec709 (HDTV) and sRGB are identical except for the transfer curve. Interestingly, Rec709 (via BT.1886) specifies a "physical display gamma" of 2.4 (i.e., the gamma of the monitor itself), and sRGB monitors are typically set at 2.4 or higher (surveys show most users set them at 2.5 and up). But the SIGNAL gamma is different: approx. 1/2.2 for sRGB and approx. 1/2.0 for Rec709. In both cases, there is an intentional system gamma gain based on the expected viewing environment.

With most display systems, the gamma correction is applied in the video card (by downloading a custom LUT into the card). Some of the higher-end monitors (high-end NEC and Eizo monitors) can apply a correction LUT internally inside the monitor. The advantage of this is that these internal LUTs generally have a higher bit depth (typically 10 or 12 bits) than a correction applied via the display card (8 bits).
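A rough way to see why LUT bit depth matters: the Python sketch below builds a hypothetical correction LUT for all 256 input codes (making a display with native gamma 2.2 behave like gamma 2.4, an arbitrary example) and counts how many distinct output levels survive at 8, 10 and 12 bits of LUT output. Inputs that collapse onto the same output level show up on screen as banding. It assumes the higher-bit output actually reaches the panel, as in the monitor-internal case described above.

```python
def correction_lut(out_bits: int, native_gamma: float = 2.2, target_gamma: float = 2.4):
    """Hypothetical correction LUT: make a native-2.2 display behave like 2.4.

    For each 8-bit input code x, the display must be driven at d with
    d ** native_gamma == x ** target_gamma, i.e. d = x ** (target / native).
    """
    max_out = 2 ** out_bits - 1
    return [round(max_out * (code / 255) ** (target_gamma / native_gamma))
            for code in range(256)]

for bits in (8, 10, 12):
    lut = correction_lut(bits)
    print(f"{bits}-bit LUT: {len(set(lut))} distinct output levels for 256 inputs")
```

With 8-bit output some neighboring dark codes merge, while at 10 and 12 bits all 256 input levels remain distinct.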

As it seems from FreddyNZ's calibration image, my LCD monitor is totally "screwed up".

As freddyNZ mentioned, the correction LUT isn't automatically calculated, as it depends upon the individual characteristics of the display (CRT or LCD) being used. If you're using a hardware display calibrator, it starts off by loading a linear LUT into the video card and then proceeds to measure the characteristics of the display. It then calculates a correction LUT that will bring the display to a known gamma and color temperature and loads that into the video card (or the monitor, if supported). After calibrating the display to a known set of values, it then proceeds to characterize the color characteristics of the display. This data is used to build the ICC profile for the display. Note that if you use a display that doesn't support an internal correction LUT (that's most of us), the correction LUT gets loaded into the video card when the OS boots up. This is usually done by a small LUT loader utility that runs at startup, reads the correction LUT from the default display profile, and loads it into the video card.
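The calibration steps described above (load a linear LUT, measure, compute a correction LUT) can be sketched in Python for a single channel. Everything about the display here is hypothetical: `measured_response` stands in for colorimeter readings, and real calibration software works per RGB channel, also targets a white point, and stores the result in the display profile.

```python
import bisect

def measured_response(drive: float) -> float:
    """Hypothetical measured luminance (0..1) of an uncalibrated display at a drive level."""
    return 0.6 * drive ** 2.0 + 0.4 * drive ** 3.0

# "Measure" the display at every 8-bit drive level while the video card LUT is linear.
readings = [measured_response(d / 255) for d in range(256)]

def build_correction_lut(target_gamma: float = 2.2):
    """For each input code, pick the drive level whose reading best matches the target."""
    lut = []
    for code in range(256):
        target = (code / 255) ** target_gamma
        i = bisect.bisect_left(readings, target)   # readings rise monotonically
        # Step back if the lower neighbour is at least as close to the target luminance.
        if i > 0 and (i == 256 or target - readings[i - 1] <= readings[i] - target):
            i -= 1
        lut.append(i)
    return lut

lut = build_correction_lut()
print(lut[:8], lut[128], lut[-1])
```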

Do you happen to know if a correction LUT made by a hardware calibrator is stored inside the ICC profile you mention? Or are the monitor's ICC profile and the correction LUT two totally different things?

"When the data is saved, after being gamma corrected to 1.8, that gamma correction stays with the file. However, most file formats (GIF, JPEG) don't have any way to tell a user the gamma correction that has already been applied to image data. Therefore, the user must guess and gamma correct until he is satisfied with how it looks. The Targa and PNG file formats do encode the exact gamma information, removing some of the guesswork. The 3D modeling program, 3D Studio, actually takes advantage of this information!

Gamma correction, then, can be done on file data directly (the individual bits in the file are changed to reflect the correction). This is what is meant by the File Gamma or "gamma of a file." On the other hand gamma correction can be done as post processing on file data. In the latter case, the data in the file is unchanged, but between reading the file and displaying the data on your monitor, the data is gamma corrected for display purposes. Ideally, if one knows the File Gamma and their own System Gamma, they can determine the gamma correction needed (if any) to accurately display the file on their system."
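In PNG, this file gamma lives in the gAMA chunk: a 4-byte big-endian unsigned integer equal to the image gamma times 100,000, so 45455 means data encoded with an exponent of about 1/2.2. A minimal Python sketch that reads it with only the standard library; it skips CRC checks and ignores every other chunk, so treat it as an illustration rather than a full PNG parser.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

def png_file_gamma(data: bytes):
    """Return the file gamma stored in a PNG's gAMA chunk, or None if absent.

    The gAMA payload is an unsigned 32-bit integer equal to gamma * 100000.
    """
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        if ctype == b"gAMA":
            (value,) = struct.unpack(">I", data[pos + 8:pos + 12])
            return value / 100000
        if ctype in (b"IDAT", b"IEND"):   # gAMA must appear before the image data
            return None
        pos += 12 + length                # skip length, type, payload and CRC
    return None
```

A viewer that honors this value can undo or adapt the stored correction for the current display, which is the "post processing" case described in the quote.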

So are gamma-corrected values often stored in image files by image editing software? And do any programs really take this into account when showing such an image file, by lowering the midtones of the image before sending it to the video card? Is this "file gamma" really a common problem in photo editing, or a very rare exception?

The effect of gamma correction on an image: The original image was taken to varying powers, showing that powers larger than 1 make the shadows darker, while powers smaller than 1 make dark regions lighter.

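Gamma correction, or gamma, is a nonlinear operation used to encode and decode luminance or tristimulus values in video or still image systems. In the simplest cases it is defined by the power-law expression $V_{\text{out}} = A\,V_{\text{in}}^{\gamma}$, where the non-negative input value $V_{\text{in}}$ is raised to the power $\gamma$ and multiplied by the constant $A$. In the common case of $A = 1$, inputs and outputs are typically in the range 0 to 1.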

Gamma encoding of images is used to optimize the usage of bits when encoding an image, or the bandwidth used to transport an image, by taking advantage of the non-linear manner in which humans perceive light and color. Human perception of brightness (lightness), under common illumination conditions (neither pitch black nor blindingly bright), follows an approximate power function (which has no relation to the gamma function), with greater sensitivity to relative differences between darker tones than between lighter tones, consistent with the Stevens power law for brightness perception. If images are not gamma-encoded, they allocate too many bits or too much bandwidth to highlights that humans cannot differentiate, and too few bits or too little bandwidth to shadow values that humans are sensitive to and that would require more bits or bandwidth to maintain the same visual quality. Gamma encoding of floating-point images is not required (and may be counterproductive), because the floating-point format already provides a piecewise linear approximation of a logarithmic curve.

Although gamma encoding was developed originally to compensate for the input–output characteristic of cathode ray tube (CRT) displays, that is not its main purpose or advantage in modern systems. In CRT displays, the light intensity varies nonlinearly with the electron-gun voltage. Altering the input signal by gamma compression can cancel this nonlinearity, such that the output picture has the intended luminance. However, the gamma characteristics of the display device do not play a factor in the gamma encoding of images and video: images and video need gamma encoding to maximize the visual quality of the signal, regardless of the gamma characteristics of the display device.

Analogously, digital cameras record light using electronic sensors that usually respond linearly. In the process of rendering linear raw data to conventional RGB data (e.g. for storage into JPEG image format), color space transformations and rendering transformations will be performed. In particular, almost all standard RGB color spaces and file formats use a non-linear encoding (a gamma compression) of the intended intensities of the primary colors of the photographic reproduction. In addition, the intended reproduction is almost always nonlinearly related to the measured scene intensities, via a tone reproduction nonlinearity.

Gamma can be visualized as the slope of the input–output curve when plotted on logarithmic axes. For a power-law curve, this slope is constant, but the idea can be extended to any type of curve, in which case gamma (strictly speaking, "point gamma") is defined as the slope of the curve in any particular region.
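Written as a formula, the point gamma of a transfer curve Vout = f(Vin) is its log–log derivative. For the simple power law Vout = A·Vin^γ, the constant A drops out and the point gamma equals γ everywhere:

$$\gamma_\text{point}(V_\text{in}) = \frac{d \log V_\text{out}}{d \log V_\text{in}} = \frac{V_\text{in}}{V_\text{out}}\,\frac{dV_\text{out}}{dV_\text{in}}$$

$$\frac{V_\text{in}}{A\,V_\text{in}^{\gamma}}\cdot A\,\gamma\,V_\text{in}^{\gamma-1} = \gamma$$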

When a photographic film is exposed to light, the result of the exposure can be represented on a graph showing log of exposure on the horizontal axis, and density, or negative log of transmittance, on the vertical axis. For a given film formulation and processing method, this curve is its characteristic or Hurter–Driffield curve.

Output to CRT-based television receivers and monitors does not usually require further gamma correction. The standard video signals that are transmitted or stored in image files incorporate gamma compression matching the gamma expansion of the CRT (although it is not the exact inverse).

For television signals, gamma values are fixed and defined by the analog video standards. CCIR System M and N, associated with NTSC color, use gamma 2.2; the rest (systems B/G, H, I, D/K, K1 and L) associated with PAL or SECAM color, use gamma 2.8.

In most computer display systems, images are encoded with a gamma of about 0.45 and decoded with the reciprocal gamma of 2.2. A notable exception, until the release of Mac OS X 10.6 (Snow Leopard) in September 2009, were Macintosh computers, which encoded with a gamma of 0.55 and decoded with a gamma of 1.8. In any case, binary data in still image files (such as JPEG) are explicitly encoded (that is, they carry gamma-encoded values, not linear intensities), as are motion picture files (such as MPEG). The system can optionally further manage both cases, through color management, if a better match to the output device gamma is required.

Plot of the sRGB standard gamma-expansion nonlinearity in red, and its local gamma value (slope in log–log space) in blue. The local gamma rises from 1 to about 2.2.

The sRGB color space standard used with most cameras, PCs, and printers does not use a simple power-law nonlinearity as above, but has a decoding gamma value near 2.2 over much of its range, as shown in the plot. Below a compressed value of 0.04045 or a linear intensity of 0.00313, the curve is linear (encoded value proportional to intensity), so γ = 1. The dashed black curve behind the red curve is a standard γ = 2.2 power-law curve, for comparison.
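The local gamma in the plot can be reproduced numerically. This sketch assumes the piecewise sRGB decoding curve with the constants of IEC 61966-2-1 (threshold 0.04045, slope 12.92, offset 0.055, scale 1.055, exponent 2.4) and estimates the slope in log–log space with a central difference; the helper names are illustrative.

```python
import math


def srgb_to_linear(v: float) -> float:
    """Decode an sRGB-encoded value in [0, 1] to linear intensity."""
    if v <= 0.04045:
        return v / 12.92                      # linear segment near black
    return ((v + 0.055) / 1.055) ** 2.4       # offset power-law segment


def local_gamma(decode, v: float, h: float = 1e-4) -> float:
    """Slope of the decoding curve in log-log space at v (central difference)."""
    lo, hi = v * math.exp(-h), v * math.exp(h)
    return (math.log(decode(hi)) - math.log(decode(lo))) / (2 * h)


if __name__ == "__main__":
    for v in (0.02, 0.1, 0.25, 0.5, 0.75, 0.99):
        print(f"encoded {v:.2f}: local gamma {local_gamma(srgb_to_linear, v):.3f}")
```

On the power segment the slope works out analytically to 2.4·V/(V + 0.055), so it climbs from exactly 1 in the linear segment to about 2.16 at mid-scale and about 2.27 at full scale, hovering near the 2.2 of the comparison curve over most of the range.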

Gamma correction in computers is used, for example, to display a gamma = 1.8 Apple picture correctly on a gamma = 2.2 PC monitor by changing the image gamma. Another usage is equalizing of the individual color-channel gammas to correct for monitor discrepancies.
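For the Apple-to-PC case, a value v meant for a display with gamma 1.8 yields intensity v^1.8; to get the same intensity from a display with gamma 2.2 the value must become v' = v^(1.8/2.2). A minimal sketch, assuming both displays follow pure power laws (ignoring sRGB's linear segment) and 8-bit values; the function name is hypothetical.

```python
def regamma_8bit(value: int, source_gamma: float = 1.8, target_gamma: float = 2.2) -> int:
    """Re-encode an 8-bit value meant for a source_gamma display so that a
    target_gamma display shows the same intensity: v' = v ** (source / target)."""
    normalized = value / 255
    return round(255 * normalized ** (source_gamma / target_gamma))


if __name__ == "__main__":
    for v in (0, 64, 128, 192, 255):
        print(v, "->", regamma_8bit(v))
```

Black and white are unchanged while midtones are lifted (128 becomes 145), which is why an uncorrected 1.8-gamma image looks too dark on a 2.2-gamma monitor.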

Some picture formats allow an image's intended gamma (of transformations between encoded image samples and light output) to be stored as metadata, facilitating automatic gamma correction as long as the display system's exponent is known. The PNG specification includes the gAMA chunk for this purpose, and for formats such as JPEG and TIFF the Exif Gamma tag can be used.
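The gAMA chunk is simple enough to read by hand. This sketch assumes the chunk layout from the PNG specification: each chunk is a 4-byte big-endian length, a 4-byte type, the data, and a CRC-32 over type plus data, and gAMA data is the image gamma times 100000 as a 4-byte unsigned integer (so 1/2.2 is stored as 45455). The helper names are illustrative, and the bytes built in the demo form a chunk sequence only, not a decodable image.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Build one PNG chunk: length, type, data, CRC-32 over type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def read_gama(png: bytes):
    """Return the gamma stored in a gAMA chunk, or None if there is none."""
    if not png.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(png):
        length, chunk_type = struct.unpack(">I4s", png[pos:pos + 8])
        data = png[pos + 8:pos + 8 + length]
        if chunk_type == b"gAMA":
            if len(data) != 4:
                raise ValueError("malformed gAMA chunk")
            return struct.unpack(">I", data)[0] / 100000
        if chunk_type in (b"IDAT", b"IEND"):
            break  # gAMA must come before the image data
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC
    return None


if __name__ == "__main__":
    ihdr = make_chunk(b"IHDR", struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0))
    gama = make_chunk(b"gAMA", struct.pack(">I", 45455))
    demo = PNG_SIGNATURE + ihdr + gama + make_chunk(b"IEND", b"")
    print("file gamma:", read_gama(demo))
```

The stored number is the encoding exponent (about 0.45), the reciprocal of the familiar 2.2 decoding value.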

These features have historically caused problems, especially on the web. There is no numerical value of gamma that matches the "show the 8-bit numbers unchanged" method used for JPG, GIF, HTML, and CSS colors, so the PNG would not match. Google Chrome (and all other Chromium-based browsers) and Mozilla Firefox either ignore the gamma setting entirely, or ignore it when set to known wrong values.

A gamma characteristic is a power-law relationship that approximates the relationship between the encoded luma in a television system and the actual desired image luminance.

With this nonlinear relationship, equal steps in encoded luminance correspond roughly to subjectively equal steps in brightness. Ebner and Fairchild used an exponent of 0.43 to convert linear intensity into lightness (luma) for neutrals; the reciprocal, approximately 2.33 (quite close to the 2.2 figure cited for a typical display subsystem), was found to provide approximately optimal perceptual encoding of grays.

The following illustration shows the difference between a scale with linearly-increasing encoded luminance signal (linear gamma-compressed luma input) and a scale with linearly-increasing intensity scale (linear luminance output).

On most displays (those with gamma of about 2.2), one can observe that the linear-intensity scale has a large jump in perceived brightness between the intensity values 0.0 and 0.1, while the steps at the higher end of the scale are hardly perceptible. The gamma-encoded scale, which has a nonlinearly-increasing intensity, will show much more even steps in perceived brightness.
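Using the observation above that equal steps in encoded value look roughly equal in brightness, a rough sketch can quantify how uneven the linear-intensity scale looks. It assumes a pure 2.2 power law and treats the gamma-encoded value as a stand-in for perceived brightness, which is only an approximation.

```python
def encode(intensity: float, gamma: float = 2.2) -> float:
    """Gamma-compress a linear intensity in [0, 1] with exponent 1/gamma."""
    return intensity ** (1 / gamma)


# An 11-step scale with linearly increasing intensity: 0.0, 0.1, ..., 1.0.
linear_scale = [i / 10 for i in range(11)]
# Encoded values serve as a rough proxy for perceived brightness.
encoded = [encode(x) for x in linear_scale]
steps = [b - a for a, b in zip(encoded, encoded[1:])]

if __name__ == "__main__":
    for x, step in zip(linear_scale[1:], steps):
        print(f"step ending at intensity {x:.1f}: encoded change {step:.3f}")
```

The first step (0.0 to 0.1) moves the encoded value by about 0.35, while the last step (0.9 to 1.0) moves it by under 0.05, matching the large jump at the dark end and the barely visible steps at the bright end.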

A cathode ray tube (CRT), for example, converts a video signal to light in a nonlinear way, because the electron gun's intensity (brightness) as a function of applied video voltage is nonlinear. The light intensity I is related to the source voltage Vs according to

$$I \propto V_s^{\gamma}$$

where γ is the Greek letter gamma. For a CRT, the gamma that relates brightness to voltage is usually in the range 2.35 to 2.55; video look-up tables in computers usually adjust the system gamma to the range 1.8 to 2.2.

For simplicity, consider the example of a monochrome CRT. In this case, when a video signal of 0.5 (representing a mid-gray) is fed to the display, the intensity or brightness is about 0.22 (resulting in a mid-gray, about 22% the intensity of white). Pure black (0.0) and pure white (1.0) are the only shades that are unaffected by gamma.

To compensate for this effect, the inverse transfer function (gamma correction) is sometimes applied to the video signal so that the end-to-end response is linear. In other words, the transmitted signal is deliberately distorted so that, after it has been distorted again by the display device, the viewer sees the correct brightness. The inverse of the function above is

$$V_c = V_s^{1/\gamma}$$

where Vc is the corrected voltage, and Vs is the source voltage, for example, from an image sensor that converts photocharge linearly to a voltage. In our CRT example 1/γ is 1/2.2 ≈ 0.45.
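The monochrome CRT example can be checked numerically. A minimal sketch, assuming normalized voltages in [0, 1], a proportionality constant of 1, and the γ = 2.2 of the example; the function names are illustrative.

```python
GAMMA = 2.2  # the CRT exponent used in the worked example


def crt_intensity(voltage: float, gamma: float = GAMMA) -> float:
    """Normalized CRT light output: I = Vs ** gamma."""
    return voltage ** gamma


def gamma_correct(source_voltage: float, gamma: float = GAMMA) -> float:
    """Pre-distort the signal with the inverse transfer function: Vc = Vs ** (1/gamma)."""
    return source_voltage ** (1 / gamma)


if __name__ == "__main__":
    vs = 0.5  # mid-gray from a linear image sensor
    print(f"uncorrected: Vs={vs} -> I={crt_intensity(vs):.3f}")
    vc = gamma_correct(vs)
    print(f"corrected:   Vc={vc:.3f} -> I={crt_intensity(vc):.3f}")
```

The uncorrected mid-gray comes out at about 0.22 of white, as stated above; after correction the signal becomes about 0.73 and the displayed intensity returns to 0.5, so the end-to-end response is linear.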

A color CRT receives three video signals (red, green, and blue) and in general each color has its own value of gamma, denoted γR, γG or γB. However, in simple display systems, a single value of γ is used for all three colors.

Other display devices have different values of gamma: for example, a Game Boy Advance display has a gamma between 3 and 4 depending on lighting conditions. In LCDs such as those on laptop computers, the relation between the signal voltage Vs and the intensity I is very nonlinear and cannot be described with a single gamma value. However, such displays apply a correction to the signal voltage in order to approximate a standard γ = 2.5 behavior. In NTSC television recording, γ = 2.2.

The power-law function, or its inverse, has a slope of infinity at zero. This leads to problems in converting from and to a gamma color space. For this reason, most formally defined color spaces such as sRGB define a straight-line segment near zero and raise x + K (where K is a constant) to a power so the curve has continuous slope. This straight line does not represent what the CRT does, but does make the rest of the curve more closely match the effect of ambient light on the CRT. In such expressions the exponent is not the gamma; for instance, the sRGB function uses a power of 2.4, but more closely resembles a power-law function with an exponent of 2.2, without a linear portion.
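Both points can be written out. For a pure power-law encoding Vc = Vs^(1/γ) with γ > 1, the slope diverges at the origin:

$$\frac{dV_c}{dV_s} = \frac{1}{\gamma}\,V_s^{1/\gamma - 1} \to \infty \quad \text{as } V_s \to 0^{+}$$

The sRGB standard (IEC 61966-2-1) avoids this with a straight segment of slope 12.92 near zero and the offset K = 0.055 on the power segment. Writing L for linear intensity and V for the encoded value:

$$V = \begin{cases} 12.92\,L, & L \le 0.0031308 \\ 1.055\,L^{1/2.4} - 0.055, & L > 0.0031308 \end{cases} \qquad L = \begin{cases} V/12.92, & V \le 0.04045 \\ \left(\dfrac{V + 0.055}{1.055}\right)^{2.4}, & V > 0.04045 \end{cases}$$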

Up to four elements can be manipulated in order to achieve gamma encoding to correct the image to be shown on a typical 2.2- or 1.8-gamma computer display:

The pixel's intensity values in a given image file; that is, the binary pixel values are stored in the file in such a way that they represent the light intensity via gamma-compressed values instead of a linear encoding. This is done systematically with digital video files (such as those in a DVD movie), in order to minimize the gamma-decoding step while playing and maximize image quality for the given storage. Similarly, pixel values in standard image file formats are usually gamma-compensated, either for sRGB gamma (or equivalent, an approximation of typical legacy monitor gammas), or according to some gamma specified by metadata such as an ICC profile. If the encoding gamma does not match the reproduction system's gamma, further correction may be done, either on display or to create a modified image file with a different profile.

The rendering software writes gamma-encoded pixel binary values directly to the video memory (when highcolor/truecolor modes are used) or to the CLUT hardware registers (when indexed color modes are used) of the display adapter. These values drive Digital-to-Analog Converters (DACs), which output proportional voltages to the display. For example, when using 24-bit RGB color (8 bits per channel), writing a value of 128 (the rounded midpoint of the 0–255 byte range) to video memory outputs a proportional voltage of ≈ 0.5 to the display, which appears darker than 50% intensity because of the monitor's gamma. Alternatively, to achieve ≈ 50% intensity, the rendering software can apply a gamma-encoding look-up table and write a value near 187 instead of 128.
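Such a look-up table can be built in one line per entry. A sketch, assuming a display that follows a pure 2.2 power law (no sRGB linear segment); the function name is hypothetical. Entry i holds the code that the display turns into linear level i/255.

```python
def build_gamma_lut(gamma: float = 2.2, size: int = 256) -> list[int]:
    """Map each desired linear level (0..size-1) to the code a display with
    the given gamma turns into that level: code = top * (level / top) ** (1 / gamma)."""
    top = size - 1
    return [round(top * (i / top) ** (1 / gamma)) for i in range(size)]


if __name__ == "__main__":
    lut = build_gamma_lut()
    for level in (0, 32, 64, 128, 192, 255):
        print(f"desired linear level {level:3d} -> write code {lut[level]:3d}")
```

For a pure 2.2 power law the entry for the ≈50% level is 186, close to the value cited above; the exact figure depends on which transfer curve the display actually follows.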

Modern display adapters have dedicated calibrating CLUTs, which can be loaded once with the appropriate gamma-correction look-up table in order to modify the encoded signals digitally before the DACs that output voltages to the monitor. Setting up these tables correctly is called hardware calibration.

Some modern monitors allow the user to manipulate their gamma behavior (as if it were merely another brightness/contrast-like setting), encoding the input signals by themselves before they are displayed on screen. This is also a calibration by hardware technique but it is performed on the analog electric signals instead of remapping the digital values, as in the previous cases.

In a typical system, for example from camera through JPEG file to display, the role of gamma correction will involve several cooperating parts. The camera encodes its rendered image into the JPEG file using one of the standard gamma values such as 2.2, for storage and transmission. The display computer may use a color management engine to convert to a different color space (such as older Macintosh's γ = 1.8 color space) before putting pixel values into its video memory. The monitor may do its own gamma correction to match the CRT gamma to that used by the video system. Coordinating the components via standard interfaces with default standard gamma values makes it possible to get such a system properly configured.

This procedure is useful for making a monitor display images approximately correctly on systems in which profiles are not used (for example, the Firefox browser prior to version 3.0, and many others) or on systems that assume untagged source images are in the sRGB color space.

In the test pattern, the intensity of each solid color bar is intended to be the average of the intensities in the surrounding striped dither; therefore, ideally, the solid areas and the dithers should appear equally bright in a system properly adjusted to the indicated gamma.

Normally a graphics card has contrast and brightness control and a transmissive LCD monitor has contrast, brightness, and backlight control. Graphics card and monitor contrast and brightness have an influence on effective gamma, and should not be changed after gamma correction is completed.

Given a desired display-system gamma, if the observer sees the same brightness in the checkered part and in the homogeneous part of every colored area, then the gamma correction is approximately correct.
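The same principle can be turned around to estimate a display's gamma: if a solid gray of code v looks as bright as a dither that is half full-white and half black pixels, then (v/255)^γ = 0.5. A sketch, assuming a perfect black level, full-white and black dither pixels only, and no scaling of the test image; the function name is hypothetical.

```python
import math


def gamma_from_match(matched_code: int, dither_fraction: float = 0.5,
                     max_code: int = 255) -> float:
    """Estimate display gamma from the solid gray code that matches a dither.

    A dither whose pixels are full white with probability dither_fraction
    (and black otherwise) averages to dither_fraction of full intensity, so
    (matched_code / max_code) ** gamma == dither_fraction.
    """
    if not 0 < matched_code < max_code:
        raise ValueError("matched code must lie strictly between black and white")
    return math.log(dither_fraction) / math.log(matched_code / max_code)


if __name__ == "__main__":
    for code in (128, 170, 186, 200):
        print(f"solid {code} matches 50% dither -> gamma = {gamma_from_match(code):.2f}")
```

A match near code 186 indicates a display gamma of about 2.2, whereas a match at 128 would mean an essentially linear response.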

Before gamma correction, the desired gamma and color temperature should be set using the monitor controls. Using the controls for gamma, contrast and brightness, the gamma correction on an LCD can only be done for one specific vertical viewing angle, which implies one specific horizontal line on the monitor, at one specific brightness and contrast level. An ICC profile allows one to adjust the monitor for several brightness levels. The quality (and price) of the monitor determines how much deviation from this operating point still gives a satisfactory gamma correction. Twisted nematic (TN) displays with 6-bit color depth per primary color have the lowest quality. In-plane switching (IPS) displays with typically 8-bit color depth are better. Good monitors have 10-bit color depth, have hardware color management, and allow hardware calibration with a tristimulus colorimeter. Often a 6-bit plus FRC (frame rate control) panel is sold as 8-bit, and an 8-bit plus FRC panel is sold as 10-bit. FRC is no true replacement for more bits. The 24-bit and 32-bit color depth formats have 8 bits per primary color.

With Microsoft Windows 7 and above, the user can set the gamma correction through the display color calibration tool dccw.exe or other programs. These programs create an ICC profile file and load it as default. This makes color management easy. Some graphics card drivers do not load the color Look Up Table correctly after waking up from standby or hibernate mode and show the wrong gamma. In this case, update the graphics card driver.

On some operating systems running the X Window System, one can set the gamma correction factor (applied to the existing gamma value) by issuing the command xgamma -gamma 0.9 for setting gamma correction factor to 0.9, and xgamma for querying current value of that factor (the default is 1.0). In macOS systems, the gamma and other related screen calibrations are made through the System Preferences.

The test image is only valid when displayed "raw", i.e. without scaling (1:1 pixel to screen) and color adjustment, on the screen. It does, however, also serve to point out another widespread problem in software: many programs perform scaling in a color space with gamma, instead of a physically-correct linear space. In an sRGB color space with an approximate gamma of 2.2, the image should show a "2.2" result at 50% size, if the zooming is done linearly. Jonas Berlin has created a "your scaling software sucks/rules" image based on the same principle.
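A one-dimensional sketch of the scaling problem: it halves a row of alternating black and white 8-bit sRGB pixels, the kind of pattern used in the test image, once by averaging the encoded numbers and once by averaging in linear light. It assumes the standard sRGB transfer function and plain pair averaging as the scaling filter; the function names are illustrative.

```python
def srgb_to_linear(v: float) -> float:
    """Decode an sRGB value in [0, 1] to linear light."""
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(lin: float) -> float:
    """Encode linear light in [0, 1] as an sRGB value."""
    return 12.92 * lin if lin <= 0.0031308 else 1.055 * lin ** (1 / 2.4) - 0.055


def halve_row(row: list[int], linear: bool = True) -> list[int]:
    """Downscale a row of 8-bit sRGB pixels by 2 by averaging neighbor pairs.

    linear=True averages in linear light (physically correct);
    linear=False averages the gamma-encoded numbers directly.
    """
    out = []
    for a, b in zip(row[0::2], row[1::2]):
        if linear:
            mean = (srgb_to_linear(a / 255) + srgb_to_linear(b / 255)) / 2
            out.append(round(255 * linear_to_srgb(mean)))
        else:
            out.append(round((a + b) / 2))
    return out


if __name__ == "__main__":
    stripes = [0, 255] * 4
    print("gamma-space average:", halve_row(stripes, linear=False))
    print("linear-light average:", halve_row(stripes, linear=True))
```

Averaging in gamma space gives code 128, which an sRGB display shows at only about 22% of white, while the linear-light average gives 188, which displays at about 50%, matching the apparent brightness of the fine stripes.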

In addition to scaling, the problem also applies to other forms of downsampling (scaling down), such as chroma subsampling in JPEG's gamma-enabled Y′CbCr. WebP solves this problem by calculating the chroma averages in linear space and then converting back to a gamma-enabled space; an iterative solution is used for larger images. The same "sharp YUV" (formerly "smart YUV") code is used in sjpeg. Kornelski provides a simpler approximation by luma-based weighted average. Alpha compositing, color gradients, and 3D rendering are also affected by this issue.

Paradoxically, when upsampling (scaling up) an image, the result processed in the "wrong" gamma-enabled space tends to be more aesthetically pleasing. This is because upscaling filters are tuned to minimize the ringing artifacts in a linear space, but human perception is non-linear and better approximated by gamma. An alternative way to trim the artifacts is using a sigmoidal light transfer function, a technique pioneered by GIMP's LoHalo filter and later adopted by madVR.

The term intensity refers strictly to the amount of light that is emitted per unit of time and per unit of surface, in units of lux. Note, however, that in many fields of science this quantity is called luminous exitance, as opposed to luminous intensity, which is a different quantity. These distinctions, however, are largely irrelevant to gamma compression, which is applicable to any sort of normalized linear intensity-like scale.

One contrasts relative luminance in the sense of color (no gamma compression) with luma in the sense of video (with gamma compression), and denotes relative luminance by Y and luma by Y′, the prime symbol (′) denoting gamma compression.
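To see why Y and Y′ must be kept apart, the sketch below computes both for a neutral gray and a saturated green. It assumes sRGB-encoded channels and the Rec. 709 weighting coefficients (0.2126, 0.7152, 0.0722), neither of which is specified in the text above; the function names are illustrative.

```python
# Rec. 709 coefficients, which also apply to the sRGB primaries.
WEIGHTS = (0.2126, 0.7152, 0.0722)


def srgb_to_linear(v: float) -> float:
    """Decode an sRGB value in [0, 1] to linear light."""
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(lin: float) -> float:
    """Encode linear light in [0, 1] as an sRGB value."""
    return 12.92 * lin if lin <= 0.0031308 else 1.055 * lin ** (1 / 2.4) - 0.055


def relative_luminance(rgb: tuple[float, float, float]) -> float:
    """Y: decode each gamma-compressed channel to linear light, then weight."""
    return sum(w * srgb_to_linear(c) for w, c in zip(WEIGHTS, rgb))


def luma(rgb: tuple[float, float, float]) -> float:
    """Y': weight the gamma-compressed channel values directly."""
    return sum(w * c for w, c in zip(WEIGHTS, rgb))


if __name__ == "__main__":
    for name, rgb in (("gray", (0.5, 0.5, 0.5)), ("green", (0.0, 1.0, 0.0))):
        y = relative_luminance(rgb)
        print(f"{name}: Y={y:.4f}, gamma-compressed Y={linear_to_srgb(y):.4f}, "
              f"luma Y'={luma(rgb):.4f}")
```

For gray, luma equals the gamma-compressed luminance; for pure green, luma (0.715) is well below the gamma-compressed luminance (about 0.862), because weighting compressed values is not the same as compressing the weighted sum.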

Gamma correction is a type of power law function whose exponent is the Greek letter gamma (γ). It should not be confused with the mathematical Gamma function. The lower case gamma, γ, is a parameter of the former; the upper case letter, Γ, is the name of (and symbol used for) the latter (as in Γ(x)). To use the word "function" in conjunction with gamma correction, one may avoid confusion by saying "generalized power law function".

Without context, a value labeled gamma might be either the encoding or the decoding value. Caution must be taken to correctly interpret the value as that to be applied-to-compensate or to be compensated-by-applying its inverse. In common parlance, on many occasions the decoding value (such as 2.2) is employed as if it were the encoding value, instead of its inverse (1/2.2 in this case), which is the real value that must be applied to encode gamma.

McKesson, Jason L. "Chapter 12. Dynamic Range – Linearity and Gamma". Learning Modern 3D Graphics Programming. Archived from the original on 18 July 2013. Retrieved 11 July 2013.

"11A: Characteristics of systems for monochrome and color television". Reports of the CCIR, 1990: Also Decisions : XVIIth Plenary Assembly, Dusseldorf (PDF). International Radio Consultative Committee. 1990.

Fritz Ebner and Mark D. Fairchild, "Development and testing of a color space (IPT) with improved hue uniformity," Proceedings of IS&T/SID's Sixth Color Imaging Conference, pp. 8–13 (1998).

Koren, Norman. "Monitor calibration and gamma". Retrieved 2018-12-10. The chart below enables you to set the black level (brightness) and estimate display gamma over a range of 1 to 3 with precision better than 0.1.

Nienhuys, Han-Kwang (2008). "Gamma calibration". Retrieved 2018-11-30. The reason for using 48% rather than 50% as a luminance is that many LCD screens have saturation issues in the last 5 percent of their brightness range that would distort the gamma measurement.

Andrews, Peter. "The Monitor calibration and Gamma assessment page". Retrieved 2018-11-30. the problem is caused by the risetime of most monitor hardware not being sufficiently fast to turn from full black to full white in the space of a single pixel, or even two, in some cases.

Werle, Eberhard. "Quickgamma". Retrieved 2018-12-10. QuickGamma is a small utility program to calibrate a monitor on the fly without having to buy expensive hardware tools.

This is the second installment of a 2-part guest post by Jim Perkins, a professor at the Rochester Institute of Technology's medical illustration program. His first post detailed why it's a good idea to calibrate your computer monitor regularly. This next post walks us through the process and explains the mysterious settings known as gamma and white point.

If you have never calibrated your monitor, it’s almost certainly out of whack. Maybe a lot. Maybe a little. There’s really no way to know unless you generate an expensive prepress proof (e.g., a Kodak Approval, Fuji FinalProof, Creo Veris) and compare it to the on-screen image. Even a high quality monitor may not display colors accurately, especially as it ages. All monitors change over time, so calibration must be done on a regular basis. Most experts recommend doing it every few weeks to every few months.

In practice, however, calibration is a little bit trickier. First of all, you need to control some aspects of the monitor’s environment to ensure proper calibration. Second, you must make some critical decisions about how you want the monitor to display color. As I’ll discuss below, these decisions depend on whether you are creating art primarily for print, on-screen display (web, gaming), or broadcast (TV/film).

Calibration should be done under the same conditions that you normally use the monitor. You don’t want to calibrate under one set of conditions and use the monitor under different conditions. It won’t look the same. For example, a monitor’s display can change as it warms up. So be sure to turn the monitor on at least 30 minutes before calibrating so it warms up to normal operating temperature. This was more of a concern with old CRT monitors, but applies to flat panel LCDs as well.

Next, make sure you are using your monitor under moderate ambient lighting conditions. It’s not necessary to work in the dark, but the monitor should be the strongest light source in your work area. Don’t have strong lights shining directly on the screen, as this will affect the apparent brightness of the display and can introduce a color cast. Some calibration systems have ambient light sensors to compensate for this, but they’re not perfect.

Some photo studios and prepress services go so far as to paint their walls and furniture a neutral 50% gray and use only daylight-balanced D50 fluorescent lights. The International Organization for Standardization (ISO – www.iso.org) publishes a set of guidelines called “Graphic Technology and Photography -- Viewing Conditions” (ISO 3664:2009) for photographers, artists, and web developers; and a stricter set of guidelines for photo imaging labs and prepress service bureaus called “Graphic Technology - Displays for Colour Proofing - Characteristics and Viewing Conditions” (ISO 12646:2008). This is probably overkill for most artists.

When you connect the colorimeter and run the calibration software, it will ask you to select some important settings. The two most important settings are gamma and color temperature, both of which are fairly difficult concepts to understand.

Gamma is the relationship between the numerical value of a pixel in an image file and the brightness of that pixel when viewed on screen. The computer translates the numerical values in the image file into a voltage that is sent to the monitor. This relationship is non-linear, meaning that a change in voltage does not translate into a proportional change in brightness. For almost all TVs and computer monitors, brightness is proportional to the voltage raised to the 2.5 power. The gamma for these devices, therefore, is said to be 2.5.

Gamma correction is a way of compensating for this non-linear relationship between voltage and brightness. A combination of hardware and/or software can reduce the gamma to something closer to 1.0, i.e. a perfect linear relationship. This helps ensure that a change in pixel value in the digital file translates into a proportional change in brightness on screen.

Prior to calibrating a monitor, it is critical to tell the calibration software which gamma setting you wish to use. Historically, there has been a big difference in hardware gamma correction between Macs and PCs. For many years, this dictated the choice of gamma on these two platforms. However, as we’ll see below, the choice now depends more on the type of work you do and not on the operating system.

Since its introduction in 1984, the Macintosh computer had built-in correction that brought the gamma of the system down to 1.8. Therefore, we say that the “system gamma” of Macs is 1.8. Apple chose this number for a very good reason. It turns out that printing devices have a type of gamma also. A 10% gray area of pixels in a digital file is printed as a series of tiny dots that cover 10% of the surface of the paper. In theory, this should produce the appearance of a 10% gray on paper, matching the value in the digital file. In practice, however, the ink or toner bleeds into the paper and spreads (called “dot gain”), creating a pattern of dots that covers more than 10% of the paper. This makes the printed image appear darker than it should, especially in the midtones. The Mac system gamma of 1.8 compensates for this phenomenon, making the image slightly lighter so it matches the digital file.

The original Mac was designed from the outset to be a graphic arts system. Its release coincided with the introduction of the Apple LaserWriter, the Linotype Linotronic imagesetter, and Aldus PageMaker, the first page layout program. All of these components were tied together by the PostScript page description language, also released in 1984 by a fledgling company called Adobe. This launched the desktop publishing revolution of the mid-1980s and beyond. It was no coincidence that Apple chose a system gamma that was geared towards print output.

Windows PCs, on the other hand, have never had built-in gamma correction, although this is an option on some graphics cards. This reflects the fact that PCs were always targeted towards business and the mass consumer market rather than to graphics professionals. With no hardware correction, the Windows system gamma is about 2.2.

With the release of Mac OS X 10.6 (Snow Leopard) in 2009, Apple finally changed their default system gamma from 1.8 to 2.2. They did this, of course, to ensure that video games and web images looked the same on Mac and PC systems. In doing so, however, they abandoned their traditional base of support among graphics professionals.

The choice of gamma settings, therefore, is no longer dictated by the computer platform or operating system. Instead, when calibrating your monitor, you can choose a gamma setting that is best suited to the type of work you normally do. This will override the built-in settings of the system.

If you create mostly images that will be viewed on screen – for the web, PowerPoint, video games, etc. – set your gamma to 2.2. This will help ensure that your images look consistent across the widest range of computers used in business and the mass consumer market.

On the other hand, if you still create most of your work for print (as I do), stick with 1.8. Not only is this setting more compatible with high-end printing systems, it also produces noticeably lighter images on screen. This helps you see detail in shadows, something that is critical when creating and editing digital images.

Physicists express the temperature of the ideal black body in kelvins (K), often written informally as degrees Kelvin (°K). This is just a different scale for measuring temperature, like Celsius and Fahrenheit. The Kelvin scale is noteworthy because zero on the Kelvin scale is known as Absolute Zero – the temperature at which all molecular motion stops (equal to -459.67° on the Fahrenheit scale).

So what does this have to do with monitor calibration? There is no such thing as pure white. Every light source has a slight hue or color cast to it. For any given light source, we can match it up to a temperature on the Kelvin scale that emits the same color of light. Below is a list of lighting conditions and their corresponding color temperatures:

Any white objects that appear on your computer screen will have one of these color casts. You probably don’t notice it because you are accustomed to thinking of a blank page on screen as being “pure” white. However, if you change the color temperature of your monitor, you will see a dramatic difference and the color cast will become obvious. On the Mac, go to the Monitor controls under System Preferences. Select the Color tab and click Calibrate. Here you have the option of changing both the gamma setting and color temperature to see how they affect your screen. However, I recommend you DO NOT save any of these changes. You’ll have a chance to choose gamma and color temperature later when you run the calibration software that came with your colorimeter.

So which color temperature setting is best? As with the gamma setting, it depends on what kind of work you do. For many years, the standard color temperature setting for graphic arts work was 5000°K (also known as D50). This is closest to neutral white and simulates common lighting conditions for reading printed materials. Therefore, I feel this is the ideal color temperature to select if you do mostly work for print.

If you create mostly web graphics or other images viewed on screen, choose 6500°K (also known as D65). This is the default color temperature of the sRGB color space and is used by mass market computer monitors, most of which are uncalibrated. It also displays images with a bluish color cast that is familiar to consumers who watch lots of TV (e.g., most Americans).

Some experts argue that all computers should switch over to 6500°K (D65), even if they are used mostly for print work. This has been a recent trend in the graphic arts. They feel that a monitor calibrated to 5000°K (D50) is too dull and yellow. Most users prefer to work at D65, which appears brighter and bluer, like a TV.

I disagree with this logic. It’s true that the screen image will appear noticeably dull and yellow if you switch from D65 to D50. However, after working on the computer for a few minutes, you won’t even notice it. If you work at D50 for a while and then switch back to D65, you’ll be shocked at how blue and gaudy the screen appears. More importantly, the D50 standard does a better job of simulating what images look like when printed on paper under normal viewing conditions. This is why the D50 standard was adopted by the graphic arts industry in the first place. Switching all monitors to D65, even for print work, seems like a one-size-fits-all approach, pandering to the masses who work on cheap, uncalibrated systems. As someone who is 6’2” and 300 lbs., I chuckle at the notion of anything that claims to be “one-size-fits-all.”

5. When prompted, select the values for gamma and color temperature. If you do mostly print work, I recommend gamma 1.8 and 5000°K (D50). If you create mostly web graphics, game assets, or

Ms.Josey
