Origin of lag in LCD displays

Display lag is a phenomenon associated with most types of liquid crystal displays (LCDs), such as those in smartphones and computers, and nearly all types of high-definition televisions (HDTVs). It refers to latency, or lag, between when the signal is sent to the display and when the display starts to show that signal. This lag time has been measured as high as 68 ms, or the equivalent of 3-4 frames on a 60 Hz display. Display lag is not to be confused with pixel response time, which is the amount of time it takes for a pixel to change from one brightness value to another. Currently the majority of manufacturers quote the pixel response time, but neglect to report display lag.
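As a rough illustration of how a lag figure in milliseconds relates to frames, the sketch below divides the lag by the refresh period. The function name `lag_in_frames` is made up for this example and is not taken from any measurement tool.

```python
def lag_in_frames(lag_ms: float, refresh_hz: float) -> float:
    """Express a lag in milliseconds as a number of refresh periods."""
    frame_period_ms = 1000.0 / refresh_hz  # about 16.7 ms at 60 Hz
    return lag_ms / frame_period_ms


if __name__ == "__main__":
    # The highest lag quoted in the text, on a 60 Hz display.
    print(f"{lag_in_frames(68, 60):.2f} frames")
```

At 60 Hz one frame lasts roughly 16.7 ms, so 68 ms works out to about four frames.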

For older analog cathode ray tube (CRT) technology, display lag is nearly zero, due to the nature of the technology, which does not have the ability to store image data before display. The picture signal is minimally processed internally, simply for demodulation from a radio-frequency (RF) carrier wave (for televisions), and then splitting into separate signals for the red, green, and blue electron guns, and for the timing of the vertical and horizontal sync. Image adjustments typically involve reshaping the signal waveform but without storage, so the image is written to the screen as fast as it is received, with only nanoseconds of delay for the signal to traverse the wiring inside the device from input to the screen.

For modern digital signals, significant computer processing power and memory storage are needed to prepare an input signal for display. For either over-the-air or cable TV, the same analog demodulation techniques are used, but the signal is then converted to digital data, which must be decompressed using the MPEG codec and rendered into an image bitmap stored in a frame buffer.

For progressive scan display modes, the signal processing stops here, and the frame buffer is immediately written to the display device. In its simplest form, this processing may take several microseconds to occur.

For interlaced video, additional processing is frequently applied to deinterlace the image and make it seem to be clearer or more detailed than it actually is. This is done by storing several interlaced frames and then applying algorithms to determine areas of motion and stillness, and to either merge interlaced frames for smoothing or extrapolate where pixels are in motion. The resulting calculated frame buffer is then written to the display device.

De-interlacing imposes a delay that can be no shorter than the number of frames being stored for reference, plus an additional variable period for calculating the resulting extrapolated frame buffer; delays of 16-32ms are common.
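The lower bound described above can be sketched as a small calculation. This is only an illustration under stated assumptions: each stored frame is treated as one full period at the given rate, and `compute_ms` is a hypothetical placeholder for the variable processing time, not a measured figure.

```python
def min_deinterlace_delay_ms(stored_frames: int,
                             frame_rate_hz: float = 60.0,
                             compute_ms: float = 0.0) -> float:
    """Lower bound on de-interlacing delay: buffered frames plus compute time."""
    if stored_frames < 0 or compute_ms < 0:
        raise ValueError("stored_frames and compute_ms must be non-negative")
    frame_period_ms = 1000.0 / frame_rate_hz
    return stored_frames * frame_period_ms + compute_ms


if __name__ == "__main__":
    for frames in (1, 2):
        print(frames, "frame(s):", f"{min_deinterlace_delay_ms(frames):.1f} ms")
```

With one or two stored frames at 60 Hz, the bound falls in roughly the same range as the common delays mentioned above.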

While the pixel response time of the display is usually listed in the monitor's specifications, no manufacturers advertise the display lag of their displays, likely because the trend has been to increase display lag as manufacturers find more ways to process input at the display level before it is shown. Possible culprits are the processing overhead of HDCP, Digital Rights Management (DRM), and also DSP techniques employed to reduce the effects of ghosting – and the cause may vary depending on the model of display. Investigations have been performed by several technology-related websites, some of which are listed at the bottom of this article.

LCD, plasma, and DLP displays, unlike CRTs, have a native resolution. That is, they have a fixed grid of pixels on the screen that show the image sharpest when running at the native resolution (so nothing has to be scaled to full size, which blurs the image). In order to display non-native resolutions, such displays must use video scalers, which are built into most modern monitors. As an example, a display that has a native resolution of 1600x1200 being provided a signal of 640x480 must scale width and height by 2.5x to display the image provided by the computer on the native pixels. In order to do this while producing as few artifacts as possible, advanced signal processing is required, which can be a source of introduced latency. Interlaced video signals such as 480i and 1080i require a deinterlacing step that adds lag. Anecdotally, display lag is significantly less when displays operate in native resolutions for a given LCD screen and in a progressive scanning mode. External devices have also been shown to reduce overall latency by providing faster image-space resizing algorithms than those present in the LCD screen.
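The scaling example can be checked with a short sketch. The helper names `scale_factors` and `needs_interpolation` are illustrative assumptions; real scalers are implemented in display hardware and are far more involved.

```python
from fractions import Fraction


def scale_factors(native: tuple[int, int], source: tuple[int, int]) -> tuple[Fraction, Fraction]:
    """Return exact horizontal and vertical scale factors from source to native."""
    native_w, native_h = native
    source_w, source_h = source
    return Fraction(native_w, source_w), Fraction(native_h, source_h)


def needs_interpolation(native: tuple[int, int], source: tuple[int, int]) -> bool:
    """A non-integer factor means source pixels cannot map onto whole native pixels."""
    return any(f.denominator != 1 for f in scale_factors(native, source))


if __name__ == "__main__":
    sx, sy = scale_factors((1600, 1200), (640, 480))
    print(float(sx), float(sy), needs_interpolation((1600, 1200), (640, 480)))
```

A factor of 2.5 is not a whole number, which is why the scaler has to interpolate rather than simply repeat pixels.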

Many LCDs also use a technology called "overdrive" which buffers several frames ahead and processes the image to reduce blurring and streaks left by ghosting. The effect is that everything is displayed on the screen several frames after it was transmitted by the video source.

Display lag can be measured using a test device such as the Video Signal Input Lag Tester. Despite its name, the device cannot independently measure input lag. It can only measure input lag and response time together.

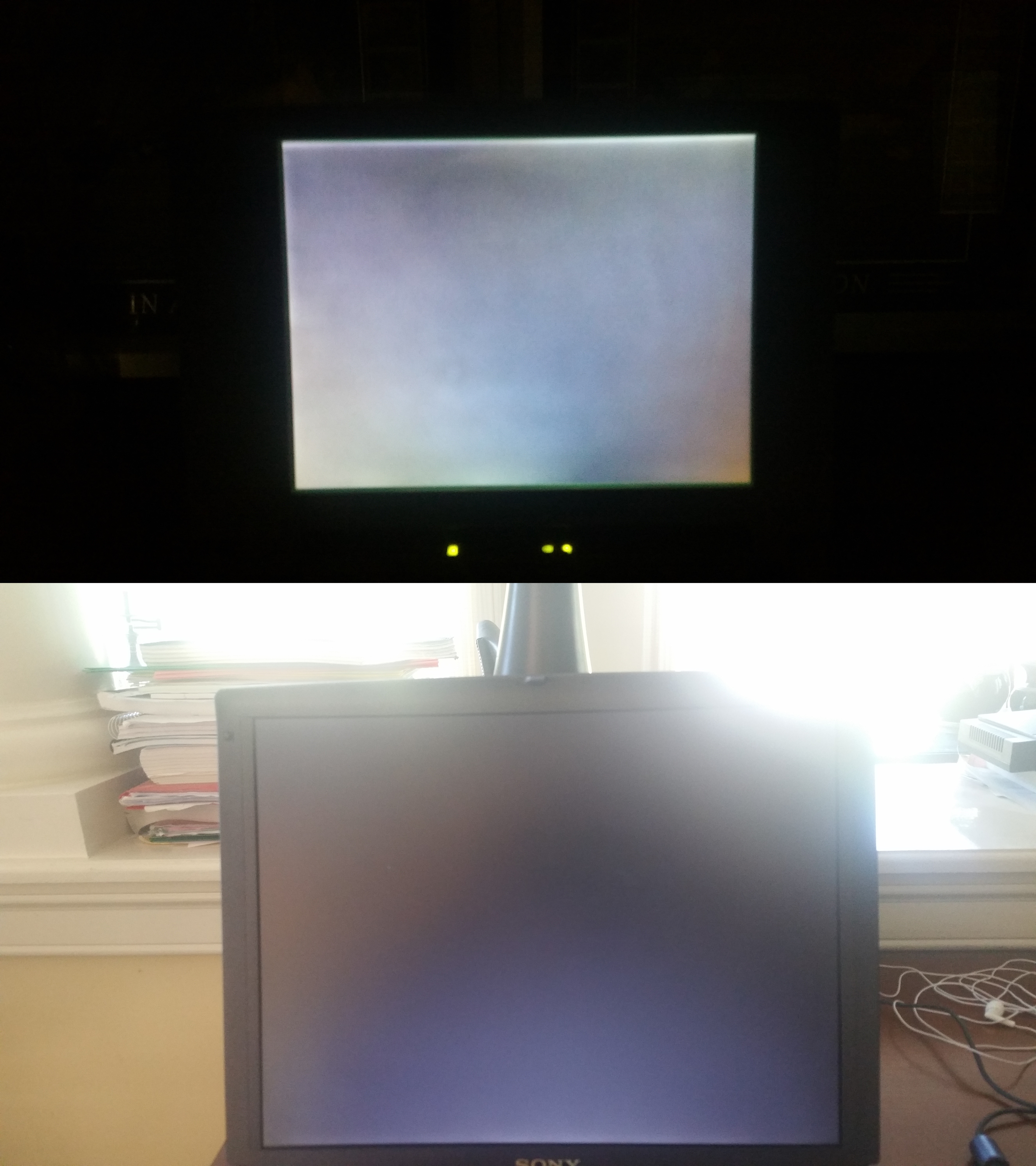

Lacking a measurement device, measurement can be performed using a test display (the display being measured), a control display (usually a CRT) that would ideally have negligible display lag, a computer capable of mirroring an output to the two displays, stopwatch software, and a high-speed camera pointed at the two displays running the stopwatch program. The lag time is measured by taking a photograph of the displays running the stopwatch software, then subtracting the two times on the displays in the photograph. This method only measures the difference in display lag between two displays and cannot determine the absolute display lag of a single display. CRTs are preferable to use as a control display because their display lag is typically negligible. However, video mirroring does not guarantee that the same image will be sent to each display at the same point in time.

In the past it was seen as common knowledge that the results of this test were exact, as they seemed to be easily reproducible, even when the displays were plugged into different ports and different cards, which suggested that the effect is attributable to the display and not the computer system. An in-depth analysis released on the German website Prad.de revealed that these assumptions were wrong. Averaging measurements as described above leads to comparable results because they include the same amount of systematic error. As seen in different monitor reviews, the values determined this way for the display lag of the very same monitor model differ by margins of up to 16 ms or even more.

To minimize the effects of asynchronous display outputs (the point in time at which an image is transferred to each monitor differs, or the actual frequency used for each monitor differs), a highly specialized software application called SMTT or a very complex and expensive test environment has to be used.

Several approaches to measuring display lag have been revived in slightly changed forms but have reintroduced old problems that had already been solved by the aforementioned SMTT. One such method involves connecting a laptop to an HDTV through a composite connection, running a timecode that shows on the laptop's screen and the HDTV simultaneously, and recording both screens with a separate video recorder. When the video of both screens is paused, the difference in time shown on the two displays has been interpreted as an estimate of the display lag. Because the two outputs are not synchronized, such a measurement can be off by 16 ms or even more.

Display lag contributes to the overall latency in the interface chain of the user's inputs (mouse, keyboard, etc.) to the graphics card to the monitor. Depending on the monitor, display lag times between 10 and 68 ms have been measured. However, the effects of the delay on the user depend on each user's own sensitivity to it.

Display lag is most noticeable in games (especially older video-game consoles), with different games affecting the perception of delay. For instance, in World of Warcraft's PvE, a slight input delay is not as critical compared to PvP, or to other games favoring quick reflexes like Counter-Strike.

If the game's controller produces additional feedback (rumble, the Wii Remote's speaker, etc.), then the display lag will cause this feedback to not accurately match up with the visuals on-screen, possibly causing extra disorientation (e.g. feeling the controller rumble a split second before a crash into a wall).

TV viewers can be affected as well. If a home theater receiver with external speakers is used, then the display lag causes the audio to be heard earlier than the picture is seen. "Early" audio is more jarring than "late" audio. Many home-theater receivers have a manual audio-delay adjustment which can be set to compensate for display latency.

Many televisions, scalers and other consumer-display devices now offer what is often called a "game mode" in which the extensive preprocessing responsible for additional lag is specifically sacrificed to decrease, but not eliminate, latency. While typically intended for videogame consoles, this feature is also useful for other interactive applications. Similar options have long been available on home audio hardware and modems for the same reason. Connection through a VGA or component cable should eliminate perceivable input lag on many TVs, even if they already have a game mode. Advanced post-processing is nonexistent on analog connections, and the signal passes through without added delay.

A television may have a picture mode that reduces display lag for computers. Some Samsung and LG televisions automatically reduce lag for a specific input port if the user renames the port to "PC".

LCD screens with a high response-time value often do not give satisfactory experience when viewing fast-moving images (they often leave streaks or blur; called ghosting). But an LCD screen with both high response time and significant display lag is unsuitable for playing fast-paced computer games or performing fast high-accuracy operations on the screen, due to the mouse cursor lagging behind.

One of the areas where the A-MVA panel does extremely well is in the areas of display lag and pixel response time. Just to recap, you may have heard complaints about "input lag" on various LCDs, so that's one area we look at in our LCD reviews. We put input lag in quotation marks because while many people call it "input lag", the reality is that this lag occurs somewhere within the LCD panel circuitry, or perhaps even at the level of the liquid crystals. Where this lag occurs isn't the concern; instead, we just want to measure the duration of the lag. That's why we prefer to call it "processing lag" or "display lag".

To test for display lag, we run the Wings of Fury benchmark in 3DMark03, with the output set to the native LCD resolution - in this case 1920x1200. Our test system is a quad-core Q6600 running a Radeon HD 3870 on a Gigabyte GA-X38-DQ6 motherboard - we had to disable CrossFire support in order to output the content to both displays. We connect the test LCD and a reference LCD to two outputs from the Radeon 3870 and set the monitors to run in clone mode.

The reference monitor is an HP LP3065, which we have found to be one of the best LCDs we currently possess in terms of not having display lag. (The lack of a built-in scaler probably has something to do with this.) While we know some of you would like us to compare performance to a CRT, that's not something we have around our offices anymore. Instead, we are looking at relative performance, and it's possible that the HP LP3065 has 20ms of lag compared to a good CRT - or maybe not. Either way, the relative lag is constant, so even if a CRT is faster at updating, we can at least see if an LCD is equal to or better than our reference display.

While the benchmark is looping, we snap a bunch of pictures of the two LCDs sitting side-by-side (using a relatively fast shutter speed). 3DMark03 shows the runtime with a resolution of 10ms at the bottom of the display, and we can use this to estimate whether a particular LCD has more or less processing lag than our reference LCD. We sort through the images and discard any where the times shown on the LCDs are not clearly legible, until we are left with 10 images for each test LCD. We record the difference in time relative to the HP LP3065 and average the 10 results to come up with an estimated processing lag value, with lower numbers being better. Negative numbers indicate a display is faster than the HP LP3065, while positive numbers mean the HP is faster and has less lag.
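The averaging step can be sketched as follows. The readings are made-up placeholder values for illustration only, not results from any review, and `estimated_lag_ms` is a hypothetical helper name. The sign convention follows the text: a positive result means the test display shows an older timer value than the reference, so the reference is faster.

```python
from statistics import mean


def estimated_lag_ms(reference_readings_ms: list[float],
                     test_readings_ms: list[float]) -> float:
    """Average per-photo difference between the reference and test timer readouts."""
    if len(reference_readings_ms) != len(test_readings_ms):
        raise ValueError("each photo must supply one reading per display")
    if not reference_readings_ms:
        raise ValueError("at least one photo is required")
    # A display with more lag shows an older (smaller) timer value.
    diffs = [ref - test for ref, test in zip(reference_readings_ms, test_readings_ms)]
    return mean(diffs)


if __name__ == "__main__":
    # Hypothetical readouts from three photos, in 10 ms steps.
    reference = [1230, 2450, 3870]
    test = [1210, 2420, 3850]
    print(f"estimated processing lag: {estimated_lag_ms(reference, test):.1f} ms")
```

Because the timer only resolves 10 ms and the photos land at arbitrary points in the refresh cycle, averaging several photos smooths the result but does not remove systematic error.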

It's important to note that this is merely an estimate - whatever the reference monitor happens to be, there are some inherent limitations. For one, LCDs only refresh their display 60 times per second, so we cannot specifically measure anything less than approximately 17ms with 100% accuracy. Second, the two LCDs can have mismatched vertical synchronizations, so it's entirely possible to end up with a one frame difference on the time readout because of this. That's why we average the results of 10 images, and we are confident that our test procedure can at least show when there is a consistent lag/internal processing delay. Here is a summary of our results for the displays we have tested so far.

As you can see, all of the S-PVA panels we have tested to date show a significant amount of input lag, ranging from 20ms up to 40ms. In contrast, the TN and S-IPS panels show little to no processing lag (relative to the HP LP3065). The BenQ FP241VW performs similarly to the TN and IPS panels, with an average display lag of 2ms - not something you would actually notice compared to other LCDs. Obviously, if you're concerned with display lag at all, you'll want to avoid S-PVA panels for the time being. That's unfortunate, considering S-PVA panels perform very well in other areas.

Despite what the manufacturers might advertise as their average pixel response time, we found most of the LCDs are basically equal in this area - they all show roughly a one frame "lag", which equates to a response time of around 16ms. In our experience, processing lag is far more of a concern than pixel response times. Taking a closer look at just the FP241VW, we can see the typical one frame lag in terms of pixel response time. However, the panel does appear to be a little faster in response time than some of the other panels we've tested (notice how the "ghost image" isn't as visible as on the HP LP3065), and we didn't see parts of three frames in any of the test images.

After the initial article went live, one of our readers who works in the display industry sent me an email. He provides some interesting information about the causes of image lag. Below is an (edited) excerpt from his email. (He wished to remain anonymous.)

PVA and MVA have inherent drawbacks with respect to LCD response time, especially gray-to-gray. To address this shortcoming, companies have invested in ASICs that perform a trick generically referred to as "overshoot." The liquid crystal (LC) material in *VA responds sluggishly to small voltage changes (a change from one gray level to another). To fix this, the ASIC does some image processing and basically applies an overvoltage to the electrodes of the affected pixel to spur the LC material into rapid movement. Eventually the correct settling voltage is applied to hold the pixel at the required level matching the input drive level.

It's very complicated math taking place in the ASIC in real time. It works well but with an important caveat: it requires a frame buffer. What this means is that as video comes into the panel, there is a memory device that can capture one whole video frame and hold it. After comparing it to the next incoming frame, the required overshoot calculations are made. Only then is the first captured frame released to the panel's timing controller, which is when the frame is rendered to the screen. As you may have already guessed, that causes at least one frame time worth of lag (17ms).

Some companies discovered some unintended artifacts in their overshoot calculations and the only way they saw to correct these was to allow for their algorithm to look ahead by two frames instead of one. So they had to up the memory of the frame buffer and now they started capturing and holding not one but two frames upon which they make their complex overshoot predictions to apply the corrected pixel drive levels and reduce gray-to-gray response time (at the expense of lag time). Again, it works very well for improving response time, but at the expense of causing lag, which gamers hate. That in a nutshell is the basis of around 33ms of the lag measured with S-PVA.
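The relationship between look-ahead depth and lag described in the email can be sketched with simple arithmetic. This assumes a 60 Hz refresh rate and ignores any additional computation time inside the ASIC. `lookahead_lag_ms` is a name invented for this example.

```python
def lookahead_lag_ms(buffered_frames: int, refresh_hz: float = 60.0) -> float:
    """Minimum lag added by holding `buffered_frames` frames before display."""
    if buffered_frames < 0:
        raise ValueError("buffered_frames must be non-negative")
    return buffered_frames * 1000.0 / refresh_hz


if __name__ == "__main__":
    for frames in (1, 2):
        print(f"{frames} buffered frame(s): about {lookahead_lag_ms(frames):.0f} ms")
```

One buffered frame gives about 17 ms and two give about 33 ms, matching the figures quoted in the email.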

Not every display uses this approach, but this could account for the increase in display lag between earlier S-PVA and later S-PVA panels. It's also important to note that I tested the Dell 2408WFP revision A00, and apparently revision A01 does not have as much lag. I have not been able to confirm this personally, however. The above also suggests that displays designed to provide a higher image quality through various signal processing techniques could end up with more display lag caused by the microchip and microcode, which makes sense. Now all we need are better algorithms and technologies in order to reduce the need for all of this extra image processing, or, as we have seen with some displays (particularly HDTVs), the ability to disable the image processing.


Display lag is a phenomenon associated with some types of LCD displays, and nearly all types of HDTVs, that refers to latency, or lag measured by the difference between the time a signal is input into a display and the time it is shown by the display. This lag time has been measured as high as 68ms, or the equivalent of 3-4 frames on a 60 Hz display. Display lag is not to be confused with pixel response time.

For older analog cathode ray tube technology, display lag is extremely low due to the nature of the technology which does not have the ability to store image data before display. The picture signal is minimally processed internally, simply for demodulation from a radio frequency carrier wave (for televisions), and then splitting into separate signals for the red, green, and blue electron guns, and for timing of the vertical and horizontal sync. Image adjustments typically involved reshaping the signal waveform but without storage, so the image is written to the screen as fast as it is received, with only nanoseconds of delay for the signal to traverse the wiring inside the device from input to the screen.

For modern digital signals, significant computer processing power and memory storage is needed to prepare an input signal for display. For over-the-air or cable-TV, the same analog demodulation techniques are used, but after that the signal is converted to digital data which must be decompressed using the MPEG codec, and rendered into an image bitmap stored in a frame buffer. This frame buffer is then procedurally written to the display device. In its simplest form this processing may take several microseconds to occur.

While the pixel response time of the display is usually listed in the monitor's specifications, no manufacturers advertise the display lag of their displays, likely because the trend has been to increase display lag as manufacturers find more ways to process input at the display level before it is shown. Possible culprits are the processing overhead of HDCP, DRM, and also DSP techniques employed to reduce the effects of ghosting - and the cause may vary depending on the model of display. Investigations have been performed by several technology-related websites, some of which are listed at the bottom of this article.

LCD, plasma, and DLP displays, unlike CRTs, have a native resolution. That is, they have a fixed grid of pixels on the screen that show the image sharpest when running at the native resolution (so nothing has to be scaled full-size which blurs the image). In order to display non-native resolutions, such displays must use video scalers, which are built into most modern monitors. As an example, a display that has a native resolution of 1600x1200 being provided a signal of 640x480 must scale width and height by 2.5x to display the image provided by the computer on the native pixels. In order to do this while producing as few artifacts as possible, advanced signal processing is required, which can be a source of introduced latency. Interlaced video signals such as 480i and 1080i require a deinterlacing step that adds lag. Anecdotally, display lag is significantly less when displays operate in native resolutions for a given LCD screen and in a progressive scanning mode. External devices have also been shown to reduce overall latency by providing faster image-space resizing algorithms than those present in the LCD screen.

Many LCDs also use a technology called "overdrive" which buffers several frames ahead and processes the image to reduce blurring and streaks left by ghosting. The effect is that everything is displayed on the screen several frames after it was transmitted by the video source.

Showing the existence of input lag requires a test display (the display being measured), a control display (usually a CRT) that would ideally have no display lag, a computer capable of mirroring output to two displays, stopwatch software, and a high-speed camera pointed at the two displays running the stopwatch program. The lag time is measured by taking a photograph of the displays running the stopwatch software, then subtracting the two times on the displays in the photograph. This method only measures the difference in display lag between two displays and cannot determine the absolute display lag of a single display. CRTs are preferable to use as a control display because their display lag is typically negligible. Also, video mirroring does not guarantee that the same image will be sent to each display at the same point in time.


To minimize the effects of asynchronous display outputs (the point in time at which an image is transferred to each monitor differs, or the actual frequency used for each monitor differs), a highly specialized software application called SMTT or a very complex and expensive test environment has to be used.

Several approaches to measuring display lag have been revived in slightly changed forms but have reintroduced old problems that had already been solved by the aforementioned SMTT. One such method involves connecting a laptop to an HDTV through a composite connection, running a timecode that shows on the laptop's screen and the HDTV simultaneously, and recording both screens with a separate video recorder. When the video of both screens is paused, the difference in time shown on the two displays has been interpreted as an estimate of the display lag. Nevertheless, this is almost identical to the use of casual stopwatches on two monitors in a "clone view" setup, as it does not account for the missing synchronisation between the composite video signal and the laptop's screen, the display lag of that screen, or the fact that the vertical refreshes of the two monitors are still asynchronous and not linked to each other. Even if v-sync is activated in the graphics card driver, the video signals of the analog and the digital output will not be synchronized. Therefore it is impossible to use a single stopwatch for display lag measurements, regardless of whether it is created by a timecode or a simple stopwatch application, as it will always cause an error of up to 16 ms or even more.
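The effect of unsynchronized refresh cycles can be illustrated with a small simulation. It is a simplified model under stated assumptions: both displays have zero real lag, both refresh at exactly 60 Hz, and each shows the stopwatch value from its own most recent refresh. The names `shown_time` and `apparent_lag` are hypothetical and unrelated to SMTT.

```python
import math
import random

FRAME_MS = 1000.0 / 60.0  # assumed 60 Hz refresh on both outputs


def shown_time(capture_ms: float, phase_ms: float) -> float:
    """Stopwatch value visible on a display that refreshes at phase_ms + k * FRAME_MS."""
    k = math.floor((capture_ms - phase_ms) / FRAME_MS)
    return phase_ms + k * FRAME_MS


def apparent_lag(capture_ms: float, phase_a_ms: float, phase_b_ms: float) -> float:
    """Difference a photo would show even though neither display has any real lag."""
    return shown_time(capture_ms, phase_a_ms) - shown_time(capture_ms, phase_b_ms)


def worst_case(samples: int = 10_000, seed: int = 0) -> float:
    """Largest apparent lag over random capture times and refresh phases."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(samples):
        capture = rng.uniform(1000.0, 2000.0)
        phase_a = rng.uniform(0.0, FRAME_MS)
        phase_b = rng.uniform(0.0, FRAME_MS)
        worst = max(worst, abs(apparent_lag(capture, phase_a, phase_b)))
    return worst


if __name__ == "__main__":
    print(f"worst apparent lag: {worst_case():.1f} ms (frame period {FRAME_MS:.1f} ms)")
```

In this model the phantom difference never reaches a full frame period, yet it can come close, which is consistent with the error of up to about 16 ms described above.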

Display lag contributes to the overall latency in the interface chain of the user's inputs (mouse, keyboard, etc.) to the graphics card to the monitor. Depending on the monitor, display lag times between 10ms and 68ms have been measured. However, the effects of the delay on the user depend on the user's own sensitivity to it.

Display lag is most noticeable in games (especially older video game consoles), with different games affecting the perception of delay. For instance, in World of Warcraft's PvE, a slight input delay isn't as critical compared to PvP, or to games favoring quick reflexes like Counter-Strike. Rhythm-based games such as Guitar Hero also require exact timing; display lag will create a noticeable offset between the music and the on-screen prompts. Notably, many games of this type include an option that attempts to calibrate for display lag. Arguably, fighting games such as Street Fighter and Tekken are the most affected, since they may require move inputs within extremely tight windows that sometimes only last 1-3 frames on screen.


TV viewers can be affected as well. If a home theater receiver with external speakers is used then the display lag causes the audio to be heard earlier than the picture is seen. "Early" audio is more jarring than "late" audio. Many home theater receivers have a manual audio delay adjustment which can be set to compensate for display latency.

Many televisions, scalers and other consumer display devices now offer what is often called a "game mode," in which the extensive preprocessing responsible for additional lag is specifically sacrificed to decrease, but not eliminate, latency. While typically intended for videogame consoles, this feature is also useful for other interactive applications. Similar options have long been available on home audio hardware and modems for the same reason.

LCD screens with a high response time value often do not give satisfactory experience when viewing fast moving images (They often leave streaks or blur; called ghosting). But an LCD screen with both high response time and significant display lag is unsuitable for playing fast paced computer games or performing fast high accuracy operations on the screen due to the mouse cursor lagging behind. Manufacturers only state the response time of their displays and do not inform customers of the display lag value.

The process that occurs from when the user presses a button to when the screen reacts is outlined below (steps which have negligible response time contributions have been omitted). Each step in the process adds response time (commonly known as "input lag"), which varies from minor to noticeable.

1: Controller sends signal to console. For wired controllers, this lag is negligible. For wireless controllers, opinions vary as to the effect of this lag. It is likely that opinions vary due to each user's sensitivity to lag, the model of wireless controller and the other equipment in the signal chain (i.e. the rest of their gaming setup).

2: Network lag (online gaming only). Since the console must know the current location of other players, there is sometimes a delay as this information travels over the network. This occurs in games where the input signals are "held" for several frames (to allow time for the data to arrive at every player's console) before being used to render the next frame. At 25 FPS, each frame lasts 40ms, so holding 4 frames adds 160ms to the overall input lag.

3: Console processes information and sends frame output to television. A console will send out a new frame once it has finished processing it. This is measured with the frame rate. Using Gran Turismo 5 as an example, the maximum theoretical framerate is 60 FPS (frames per second), which means the minimum theoretical input lag for the overall system is 17ms (note: the maximum real world FPS in 3D mode is 40-50 FPS). In situations where processor load is high (e.g. many cars are on-screen on a wet track), this can drop to 30 FPS (16 FPS for 3D mode), which is equivalent to about 33ms.

4: Television processes frame (image correction, upscaling, etc.) and pixel changes colour. This is the "input lag" of the television. Image processing (such as upscaling, 100 Hz, motion smoothing, edge smoothing) takes time and therefore adds some degree of input lag. It is generally considered that input lag of a television below 30ms is not noticeable, and discussions on gaming forums tend to agree with this value. Once the frame has been processed, the final step is the pixel response time for the pixel to display the correct colour for the new frame.
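The four steps above add up, which a minimal sketch can make concrete. The stage values passed in are illustrative assumptions rather than measurements, and the function names are invented for this example.

```python
def frame_time_ms(fps: float) -> float:
    """Duration of one frame at the given frame rate."""
    return 1000.0 / fps


def total_input_lag_ms(controller_ms: float,
                       held_frames: int,
                       network_fps: float,
                       console_fps: float,
                       tv_ms: float) -> float:
    """Sum the lag contributed by each stage of the chain."""
    # Step 2: frames held for network synchronisation (zero when offline).
    network_ms = held_frames * frame_time_ms(network_fps) if held_frames else 0.0
    # Step 3: the console needs at least one frame time to produce output.
    console_ms = frame_time_ms(console_fps)
    return controller_ms + network_ms + console_ms + tv_ms


if __name__ == "__main__":
    # Offline play, wired controller, 60 FPS game, television adding 30 ms.
    offline = total_input_lag_ms(0.0, 0, 25.0, 60.0, 30.0)
    # Online play with 4 frames held at 25 FPS.
    online = total_input_lag_ms(0.0, 4, 25.0, 60.0, 30.0)
    print(f"offline: {offline:.1f} ms, online: {online:.1f} ms")
```

Even with a television near the commonly cited 30ms threshold, holding frames for network play dominates the total in this example.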

Typical overall response times. Overall response times (from controller input to display response) have been measured in these tests: http://www.eurogamer.net/articles/digitalfoundry-lag-factor-article?page=2 It appears that overall input lag times of approximately 200ms are distracting to the gamer. It also appears that (excluding television input lag) 133ms is an average response time and the most sensitive games (first person shooters and Guitar Hero) achieve response times of 67ms (again, excluding television input lag).


Inverse ghosting

While input lag appears exclusive to PVA and MVA panels, there’s another more widespread overdrive-related issue that extends to TN screens, too.

In simple terms, inverse ghosting involves a trail or shadow that appears in the wake of a moving object in approximately the opposite colour to that object – hence the term inverse. It is not, therefore, the blurry, smeared trail seen on monitors with poor pixel response. In practice, you might see a dark, well defined shadow trailing behind a bright object and vice versa.

The precise manner in which it manifests varies widely and depends upon the colour of both the object and the background it is passing over as well as the panel type and strength of overdrive.

A good example of inverse ghosting is provided by Viewsonic’s new VX2260WM, a 1080p 22-inch monitor with a TN panel. Handily, Viewsonic has given the VX2260WM a response time toggle in the OSD menu with three options – Standard, Advanced and Ultra Fast. The Ultra Fast option uses a particularly aggressive form of pixel overdrive and the results can be seen below.

The first image shows an Explorer window being jostled back and forth on the desktop with the Viewsonic in Standard mode. Note in particular the colours of the folders. Then look at the second image, captured with the VX2260WM in Ultra Fast mode. Suddenly, the moving folders are shadowed by extremely odd-looking pink artefacts.

The same window, but this time it’s static.

It’s hard to say exactly how widespread this problem is. It may affect all overdriven panels to some degree. Often it’s extremely hard to detect and only appears with objects of a very particular colour.

As with input lag, thanks to the unwillingness of monitor makers to discuss overdrive related problems, we can’t say exactly what is going on. It’s safe to say that it is related to the exaggerated voltages inherent to overdrive implementations and an educated guess would say it boils down to pixels overshooting their target colour states.

Ultimately, only the very worst examples of inverse ghosting noticeably degrade image quality. However, at the very least, its existence should make you wary of buying a monitor based mainly on exotically low pixel response performance. Odds are, aggressive overdrive is responsible.

To an extent, the same applies to input lag. Only the very laggiest panels will be unacceptable to most users. However, we still feel it pays to be wary of PVA panels with very low pixel response performance. Don’t let the monitor makers seduce you with showbiz performance claims. Tireless technological refinement has ironed out most of the really serious flaws suffered by early LCD monitors, but there’s still no such thing as a perfect panel.


Don’t fall for this trap from display manufacturers when buying a display. Display manufacturers DO NOT advertise a display’s input lag when you’re buying a display, either on the box, on the manual, or anywhere relevant to their interests. I’m sure there are valid reasons for this, but due to the nature of the phrase “response time”, a lot of people get confused into thinking it reflects a display’s input lag. It does not.

The response time of a display is how quickly it can change its pixels from black-to-white or gray-to-gray. A display while turned on usually shows a black screen when nothing is being shown on it, and the time it takes to display a gray or white pixel from this state is recorded as its response time rating. So those pretty logos you see on the boxes of displays that state “2ms”, “6ms”, and so on reflect this measurement. It simply dictates how fast a display can go from a black-to-white or grey-to-grey pixel.

Simple answer: money. Display manufacturers know that going from black-to-white takes longer than it does for it to go from gray-to-gray. It makes more sense for them to advertise the fastest number, because it makes the display look more attractive in the consumer’s eyes. Unless you are comparing displays fairly (using either GTG or BTW for both), you shouldn’t read too deeply into this measurement. Manufacturers will often spice up this statistic with a fancy logo.

The response time of a display typically governs how much ghosting a display has. Ghosting is the blur that you see on your display trailing objects when there are fast scenes being displayed, such as a car chase or an action video game.

In a way, yes. As a pixel is inactive when it’s black, and active when it’s white, it means that a picture needs to be visible before you can react to what’s on the screen. The faster the response time of a display is, the quicker it can display an image. The quicker it does that, the quicker you can react to it. This is the reason why the lag tester is such a valuable tool; it records the combined display response time and input lag of the HDTV or monitor, and displays it as a single number. It’s a new way to measure this phenomenon that makes more sense. With a combined measurement for both response time and input lag, gamers can rest assured that a display is fast enough to reduce image ghosting, and responsive enough to register their inputs as soon as possible.

In summation, don’t read too deeply into the response time rating advertised by display manufacturers. I’m glad manufacturers advertise it, as long as it’s not confused with input lag, which is NOT advertised. Want to know the combined response time and input lag of a specific display? Head over to the

Gaming monitors are designed to make the output of your graphics card and CPU look as good as possible while gaming. They’re responsible for displaying the final result of all of your computer’s image rendering and processing, yet they can vary widely in their representation of color, motion, and image sharpness. When considering what to look for in a gaming monitor, it’s worth taking the time to understand everything a gaming monitor can do, so you can translate gaming monitor specs and marketing into real-world performance.

Display technology changes over time, but the basic goals of monitor manufacturers remain consistent. We’ll break down each group of monitor features below to isolate their benefits.

Resolution is a key feature of any monitor. It measures the width and height of the screen in terms of pixels, or “picture elements”, the tiny points of illumination that compose an image. A 2,560 × 1,440 screen, for example, has a total of 3,686,400 pixels.

Common resolutions include 1,920 × 1,080 (sometimes called “Full HD” or FHD), 2,560 × 1,440 (“Quad HD”, QHD, or “Widescreen Quad HD”, WQHD), or 3840 × 2160 (UHD, or “4K Ultra HD”). Ultrawide monitors are also available with resolutions such as 2560 x 1080 (UW-FHD) and 3440 x 1440 (UW-QHD), 3840x1080 (DFHD), and 5120x1440 (DQHD).
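To make the arithmetic behind these resolution names concrete, here is a minimal Python sketch. The helper name `pixel_count` and the dictionary are illustrative only; the resolutions are the ones listed above.

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels for a width x height resolution."""
    return width * height


# Resolutions named in the text (width, height)
resolutions = {
    "FHD": (1920, 1080),
    "QHD": (2560, 1440),
    "UHD": (3840, 2160),
    "UW-QHD": (3440, 1440),
}

totals = {name: pixel_count(w, h) for name, (w, h) in resolutions.items()}

for name, total in totals.items():
    print(f"{name}: {total:,} pixels")

# UHD has exactly four times as many pixels as FHD
uhd_to_fhd = totals["UHD"] / totals["FHD"]
print(uhd_to_fhd)  # 4.0
```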

Sometimes manufacturers only reference one measurement for standard resolutions: 1080p and 1440p refer to height, while 4K refers to width. Any resolution of 1,280 × 720 or higher is considered high definition (HD).

The pixels being counted in these measurements are usually rendered the same way: As squares on a two-dimensional grid. To see this, you can either move closer to (or magnify) the screen until you perceive individual blocks of color, or zoom in on an image until it becomes “pixelated”, and you see a staircase of small squares instead of clean diagonal lines.

As you increase your display resolution, it gets harder to pick out individual pixels with the naked eye, and the clarity of the picture increases in turn.

Beyond increasing the detail onscreen in games or movies, there’s another benefit to higher resolutions. They give you more desktop real estate to work with. That means you get a larger workspace on which to arrange windows and applications.

You might already know that a screen with 4K display resolution doesn’t magically make everything it displays look 4K. If you play a 1080p video stream on it, that content usually won’t look as good as a 4K Blu-ray. However, it may still look closer to 4K than it used to, thanks to a process called upscaling.

Upscaling is a way to scale lower-resolution content to a higher resolution. When you play a 1080p video on a 4K monitor, the monitor needs to “fill in” all of the missing pixels that it expects to display (as a 4K monitor has four times as many pixels as 1080p). A built-in scaler interpolates new pixels by examining the values of surrounding pixels. HDTVs often feature more complex upscaling than PC monitors (with line-sharpening and other improvements), as the latter often simply turn one pixel into a larger block of the same pixels. The scaler is likely to cause some blurring and ghosting (double images), especially if you look closely.
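The two approaches described above can be sketched in a few lines of Python. This is a simplified illustration, not how any particular scaler chip works: `upscale_nearest` copies each pixel into a larger block (the simple approach attributed to many PC monitors), while `upscale_row_linear` interpolates new values from neighbouring pixels along a single row. Both function names are hypothetical.

```python
def upscale_nearest(image, factor):
    """Replicate every source pixel into a factor x factor block."""
    out = []
    for row in image:
        wide = [px for px in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def upscale_row_linear(row, factor):
    """Create new samples by blending the two nearest source pixels."""
    n = len(row)
    out = []
    for i in range(n * factor):
        pos = i / factor              # position in source coordinates
        left = min(int(pos), n - 1)
        right = min(left + 1, n - 1)  # clamp at the right edge
        t = pos - left                # blend weight toward the right pixel
        out.append(row[left] * (1 - t) + row[right] * t)
    return out


blocks = upscale_nearest([[1, 2], [3, 4]], 2)
smooth = upscale_row_linear([0, 100], 2)
print(blocks)  # [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
print(smooth)  # [0.0, 50.0, 100.0, 100.0]
```

The interpolated value of 50 between 0 and 100 is a pixel that never existed in the source, which is why interpolation can soften hard edges.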

Monitors can also change resolution. Modern screens have a fixed number of pixels, which defines their "native resolution", but they can also be set to approximate lower resolutions. As you scale down, onscreen objects will look larger and fuzzier, screen real estate will shrink, and visible jaggedness may result from interpolation. (Note that it wasn’t always this way: older analog CRT monitors can actually switch between resolutions without interpolation, as they do not have a set number of pixels.)

Screens with 4K resolution and higher introduce another scaling concern: at ultra-high definition, text and interface elements like buttons can start to look small. This is especially true on smaller 4K screens when using programs that don’t automatically resize their text and UI.

Windows’ screen scaling settings can increase the size of text and layout elements, but at the cost of reducing screen real estate. There’s still a benefit of increased resolution, even when this scaling is used — onscreen content, like an image in an editing program, will appear at 4K resolution even if the menus around it have been rescaled.

Manufacturers measure screen size diagonally, from corner to corner. A larger screen size, in tandem with a higher resolution, means more usable screen space and more immersive gaming experiences.

Players sit or stand close to their monitors, often within 20”-24”. This means that the screen itself fills much more of your vision than an HDTV (when seated at the couch) or a smartphone/tablet. (Monitors boast the best ratio of diagonal screen size to viewing distance among common displays, with the exception of virtual reality headsets). The benefits of 1440p or 4K resolution are more immediately perceptible in this close-range situation.

Basically, you want to find a screen where you never perceive an individual pixel. You can do this using online tools that measure pixel density (in pixels per inch), which tells you the relative “sharpness” of the screen by determining how closely pixels are packed together, or the alternative pixels per degree formula, which automatically compares its measurements against the limits of human vision.

It’s also worth considering your own eyesight and desktop setup. If you have 20/20 vision and your eyes are around 20” from your screen, a 27” 4K panel will provide an immediate visual upgrade. However, if you know your eyesight is worse than 20/20, or you prefer to sit more than 24” away, a 1440p panel may look just as good to you.
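As a rough illustration of the pixel density and pixels-per-degree measurements mentioned above, the following Python sketch computes both for the 27” examples. The function names are hypothetical. The figure of roughly 60 pixels per degree as an approximation of 20/20 acuity is a commonly cited rule of thumb, treated here as an assumption rather than something taken from this guide.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density: diagonal length in pixels divided by diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


def pixels_per_degree(ppi, distance_in):
    """Pixels covered by one degree of visual angle at a viewing distance."""
    inches_per_degree = 2 * distance_in * math.tan(math.radians(0.5))
    return ppi * inches_per_degree


ppi_4k = pixels_per_inch(3840, 2160, 27)
ppi_qhd = pixels_per_inch(2560, 1440, 27)

# Compare both panels at a 20-inch viewing distance
ppd_4k = pixels_per_degree(ppi_4k, 20)
ppd_qhd = pixels_per_degree(ppi_qhd, 20)

print(f"27-inch 4K:    {ppi_4k:.0f} ppi, {ppd_4k:.0f} ppd at 20 in")
print(f"27-inch 1440p: {ppi_qhd:.0f} ppi, {ppd_qhd:.0f} ppd at 20 in")
```

Moving further from the screen raises the pixels-per-degree figure for the same panel, which is consistent with the point that a 1440p panel may look just as good from a greater distance.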

A monitor’s aspect ratio is the proportion of width to height. A 1:1 screen would be completely square; the boxy monitors of the 1990s were typically 4:3, or “standard”. They have largely been replaced by widescreen (16:9) and some ultrawide (21:9, 32:9, 32:10) aspect ratios.

Most online content, such as YouTube videos, also defaults to a widescreen aspect ratio. However, you’ll still see horizontal black bars onscreen when watching movies or TV shows shot in theatrical widescreen (2.39:1, wider than 16:9), and vertical black bars when watching smartphone videos shot in thinner “portrait” mode. These black bars preserve the original proportions of the video without stretching or cropping it.
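The size of those black bars follows directly from the two aspect ratios. Here is a minimal Python sketch, assuming the video is scaled to fill as much of the screen as possible without cropping; `black_bars` is an illustrative helper, not part of any player software.

```python
def black_bars(screen_w, screen_h, content_aspect):
    """Return (bar orientation, thickness in pixels of each bar)."""
    screen_aspect = screen_w / screen_h
    if content_aspect > screen_aspect:
        # Content is wider than the screen: bars above and below
        content_h = screen_w / content_aspect
        return ("horizontal", (screen_h - content_h) / 2)
    if content_aspect < screen_aspect:
        # Content is narrower than the screen: bars left and right
        content_w = screen_h * content_aspect
        return ("vertical", (screen_w - content_w) / 2)
    return ("none", 0.0)


film = black_bars(1920, 1080, 2.39)       # theatrical widescreen on 16:9
portrait = black_bars(1920, 1080, 9 / 16)  # portrait phone video on 16:9

print(film[0], round(film[1]))  # horizontal 138
print(portrait)                 # ('vertical', 656.25)
```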

Ultrawides

Why opt for an ultrawide screen over regular widescreen? They offer a few advantages: They fill more of your vision, they can provide a movie-watching experience closer to the theater (as 21:9 screens eliminate “letterboxing” black bars for widescreen films), and they let you expand field of view (FOV) in games without creating a “fisheye” effect. Some players of first-person games prefer a wider FOV to help them spot enemies or immerse themselves in the game environment. (But note that some popular FPS games do not support high FOV settings, as they can give players an advantage).

Curved screens are another common feature on ultrawide monitors. These can correct one typical issue with larger ultrawides: Images at the distant edges of the screen look less distinct than those in the middle. A curved screen helps compensate for this and provides a clearer view of the extreme edges of the screen. However, its benefits are most noticeable on larger screens over 27”.

When viewing two monitors side-by-side, it’s sometimes easy to see which has more brilliant hues, deeper blacks, or a more lifelike color palette. It can be harder to put the picture together in your head when reading specifications, however, because color in monitors is evaluated in many different ways. There’s no one spec to focus on: Contrast ratio, brightness, black level, color gamut, and more all come into play. Before moving on to larger color features, let’s define these terms one-by-one.

Contrast Ratio

Contrast ratio, one of the most basic measures of a monitor’s performance, measures the ratio between the extremes of black and white that the screen can display. A baseline contrast ratio like 1,000:1 means that the white parts of the image are 1,000 times brighter than the dark parts.

When it comes to contrast ratios, higher numbers are better. A high contrast ratio, like 4,000:1, means bright highlights, inky blacks, and dark areas where details are still perceptible. A contrast ratio of 200:1, on the other hand, means that the blacks look more like grays, and the colors look faded and indistinct from each other.

Use caution when LCDs advertise very high “dynamic contrast ratios”, which are achieved by changing the behavior of the backlight. For gaming or everyday use, the standard “static” contrast ratio discussed above is a better marker of the monitor’s quality.

Luminance

Brightness is often measured in “luminance”, a precise measure of how much light is emitted by the screen. It’s given in candelas per square meter (cd/m²), a unit which is also called a “nit”. For HDR displays, the VESA (Video Electronics Standards Association) has standardized a suite of tests for luminance using specific test patches. When comparing luminance specs, check to make sure they use this consistent test platform, rather than a proprietary metric.

Black Level

In all LCD screens, light from the backlight inevitably leaks through the liquid crystal. This provides the basis for the contrast ratio: For example, if the screen leaks 0.1% of the illumination from the backlight in an area that’s supposed to be black, this establishes a contrast ratio of 1,000:1. An LCD screen with zero light leakage would have an infinite contrast ratio. However, this isn’t possible with current LCD technology.
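The relationship between light leakage, black level, and contrast ratio can be written out as a short Python sketch. The function names and the 300-nit white level are illustrative assumptions; the 0.1% leakage figure is the one used in the text.

```python
def contrast_from_leakage(leak_fraction):
    """Static contrast ratio implied by the fraction of backlight that leaks."""
    return 1.0 / leak_fraction


def black_level_nits(white_nits, leak_fraction):
    """Luminance of a 'black' pixel given the white level and the leakage."""
    return white_nits * leak_fraction


def static_contrast_ratio(white_nits, black_nits):
    """Contrast ratio measured as white luminance over black luminance."""
    return white_nits / black_nits


ratio = contrast_from_leakage(0.001)      # 0.1% leakage
black = black_level_nits(300, 0.001)      # assumed 300-nit white level
measured = static_contrast_ratio(300, black)

print(f"{ratio:.0f}:1, black level {black:.2f} nits")  # 1000:1, black level 0.30 nits
```

As the leakage fraction approaches zero the ratio grows without bound, which is the sense in which a zero-leakage screen would have an infinite contrast ratio.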

“Glow” is a particular issue in dark viewing environments, which means that achieving low black levels is a major selling point for LCD monitors. However, an LCD screen can’t reach a black level of 0 nits unless it’s completely turned off.

OLEDs have incredible black levels because they don’t use backlights. When an OLED pixel isn’t activated by electricity, it creates no light at all. OLED screens may advertise black levels “below 0.0005 nits”, as taking more precise measurements is usually prohibitively expensive. However, the black level is usually much closer to 0 than 0.0005.

Color Depth

Monitors need to display many subtle shades of color. If they can’t smoothly transition between slightly different hues, we see onscreen color “banding”: a stark shift between two different colors, creating visibly lighter and darker bands where we should see a seamless gradient. This is sometimes referred to as “crushing” the colors.

A monitor’s ability to display many slightly different colors, and thus avoid banding and inaccuracy, is measured by color depth. Color depth specifies the amount of data (measured in bits) the screen can use to build the color of one pixel.

Each pixel onscreen has three color channels (red, green, and blue) illuminated at varying intensities to create (typically) millions of shades. 8-bit color means that each color channel uses eight bits. The total number of shades possible in a screen with 8-bit color depth is 2^8 x 2^8 x 2^8 = 16,777,216.
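The same calculation generalizes to other bit depths. A minimal Python sketch, with `total_shades` as an illustrative helper name:

```python
def total_shades(bits_per_channel, channels=3):
    """Number of distinct colors with the given bits per color channel."""
    levels_per_channel = 2 ** bits_per_channel
    return levels_per_channel ** channels


for bits in (6, 8, 10):
    print(f"{bits}-bit: {total_shades(bits):,} shades")
# 6-bit: 262,144 shades
# 8-bit: 16,777,216 shades
# 10-bit: 1,073,741,824 shades
```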

True 10-bit monitors are rare — many monitors use forms of internal color processing, such as FRC (frame rate control), to approximate a greater color depth. A “10-bit” monitor could be an 8-bit monitor with an additional FRC stage, often written as “8+2FRC”.

Some inexpensive LCD panels use 6-bit color along with “dithering” to approximate 8-bit color. In this context, dithering means the insertion of similar, alternating colors next to one another to fool the eye into seeing a different in-between color that the monitor cannot accurately display.

Frame Rate Control, or FRC, alternates different colors with each new frame to achieve this. While this can be implemented more cheaply than 8-bit True Color, color accuracy suffers, especially in low-light environments. Some screens also feature 8-bit color depth with an additional FRC stage (commonly listed as “8-bit + FRC”) to approximate 10-bit color.
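The idea behind FRC can be illustrated with a deliberately simplified Python sketch: a 6-bit panel approximates an 8-bit level by alternating between the two nearest 6-bit levels over a repeating cycle of four frames, so that the average over time lands on the in-between value. The function name and the four-frame cycle are assumptions for illustration; real panels use more elaborate spatial and temporal patterns.

```python
def frc_sequence(target_8bit):
    """Four-frame sequence of 6-bit levels approximating an 8-bit level."""
    # There are four 8-bit steps for every 6-bit step
    low, remainder = divmod(target_8bit, 4)
    high = min(low + 1, 63)  # clamp to the top 6-bit level
    # Show the higher level in `remainder` out of every four frames
    return [high if frame < remainder else low for frame in range(4)]


sequence = frc_sequence(130)
average_8bit = sum(sequence) / len(sequence) * 4

print(sequence)      # [33, 33, 32, 32]
print(average_8bit)  # 130.0
```

Because the displayed level really does change from frame to frame, the technique can be visible as subtle flicker or noise, which fits the point above that color accuracy suffers compared with true 8-bit panels.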

Monitors sometimes feature a Look-Up Table (LUT) corresponding to a higher color depth, such as 10-bit color. This helps speed up color correction calculations that take place within the monitor as it converts color input to a color output appropriate for your screen. This intermediate step can help create smoother color transitions and more accurate output. These are usually reserved for more professional grade monitors than general consumer and gaming displays.

Color Space

You’ll often hear about a monitor’s color "space" or "gamut", which is different from its bit depth. The color space specifies the spectrum of colors that can appear, rather than just calculating the number of them.

Your eye can see a much wider spectrum of color than current displays can reproduce. To visualize all visible colors, a standard called CIE 1976 maps them to a grid, creating a horseshoe-shaped graph. The color gamuts available for monitors appear as subsets of this graph:

Common, mathematically defined color gamuts include sRGB, Adobe RGB, and DCI-P3. The first is a common standard for monitors (and the officially designated color space for the web). The second, wider standard is mostly used by photo and video editing professionals. The third, DCI-P3, is even wider, and is commonly used for HDR content.

Monitors advertising "99% sRGB" are claiming the screen covers 99% of the sRGB color gamut, which is often considered indistinguishable from 100% when viewed with the naked eye.

In LCD screens, the backlight and color filters determine the color space. All of the light created by the backlight passes through a color filter with red, green, and blue spots. Narrowing the “band-pass” of this filter restricts the wavelengths of light that can pass through, increasing the purity of the final colors produced. Although this lessens the screen’s efficiency (as the filter now blocks more of the backlight’s output), it creates a wider color gamut.

White LED (W-LED) backlight: A blue LED coated in yellow phosphors emits white light, which is filtered through red, green, and blue color channels to become the pixel’s final color. W-LED backlights produce a standard sRGB gamut color space. Sometimes an additional coating of special nanoparticles can be applied to a W-LED backlight to produce wider color gamut, often resulting in wider DCI-P3 color space coverage.

Quantum Dot Coating (QD): A blue LED backlight shines on green and red nanoparticles, which are manufactured to a tight tolerance. These emit a narrow frequency of green and red light. The nanoparticles don’t actually filter out light, which makes the process highly efficient. Instead, they convert and re-emit light on a narrow frequency range, which yields a wide color gamut.

HDR monitors display brighter images with better contrast and preserve more detail in both light and dark areas of the screen. Using an HDR monitor, you might be better able to spot something moving down a dark corridor in a horror game, or see more dramatic shafts of sunlight in an open-world title.

Though they work best with HDR content (which only some games and movies support), these monitors typically support 10-bit color depth and backlights that support a wide color gamut, which will also improve standard content (SDR). (Note that HDR monitors are often not true 10-bit color, but rather 8+2FRC displays that accept a 10-bit input signal).

For LCD displays, a high-end backlight feature called local dimming is critical to HDR quality. Dimming zones for the backlight behind the screen control the brightness of groups of LEDs; more dimming zones means more precise control, less “blooming” (where light areas of the image brighten dark ones), and generally improved contrast.

Edge-lit local dimming relies on groups of LEDs clustered around the edges of the screen to brighten or dim the image in what is typically a fairly limited number of dimming zones.

Full Array Local Dimming (FALD), a more high-end option, uses far more dimming zones (typically hundreds) directly behind the panel rather than just at the edges of the screen. As a result, it can give finer control over HDR content and the dimming of the screen.
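To see why more dimming zones reduce blooming, consider a simplified one-dimensional Python model. Each zone's backlight is set to the brightest pixel it has to show, and dark pixels in that zone then glow at the backlight level multiplied by the panel's leakage. The function names, the 0.1% leakage value, and the one-row layout are assumptions for illustration; real dimming algorithms are considerably more sophisticated.

```python
def zone_backlights(row, zone_size):
    """Backlight level per zone: the brightest target pixel in that zone."""
    return [max(row[i:i + zone_size]) for i in range(0, len(row), zone_size)]


def displayed_row(row, zone_size, leakage=0.001):
    """Luminance actually shown, including leakage from the zone backlight."""
    levels = zone_backlights(row, zone_size)
    shown = []
    for index, target in enumerate(row):
        backlight = levels[index // zone_size]
        # A pixel cannot get darker than the light leaking through it
        shown.append(max(target, backlight * leakage))
    return shown


# Target luminance in nits: one bright highlight on a black background
row = [0, 0, 600, 0, 0, 0, 0, 0]

coarse = displayed_row(row, zone_size=4)  # two large zones
fine = displayed_row(row, zone_size=1)    # one zone per pixel

print(coarse)
print(fine)
```

With two large zones, the black pixels sharing a zone with the highlight are lifted to roughly 0.6 nits, while the other zone stays fully dark. With one zone per pixel, only the highlight itself is lit.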

It can be tough to evaluate the quality of an HDR monitor on your own. You should rely on HDR standards like VESA’s DisplayHDR, which measures the relative quality of an HDR monitor by listing out specs like their dimming capability.

The DisplayHDR standard is more reliable than specs that are advertised as "Typical", as that wording allows manufacturers to list results that are true averages. Look for monitors that meet the minimum specification for different levels of DisplayHDR.

On the low end, a DisplayHDR 400 screen can have a peak brightness of 400 nits (compared to a 300-nit standard monitor), but only needs a standard 95% sRGB color gamut and 8-bit color depth. DisplayHDR 400 doesn’t require backlight local dimming.

On the higher end, a DisplayHDR 600 screen needs a brightness of 600 nits, 90% of the DCI-P3 color gamut (providing a wider color space), 10-bit color depth, and some form of local dimming.

OLED standards add additional requirements to showcase the technology’s deeper black levels. DisplayHDR True Black 400 and 500 require a black level below 0.0005 nits in addition to similar peak brightness standards.

Refresh rate is the frequency at which your entire screen refreshes the image. Higher refresh rates make onscreen motion look smoother, because the screen updates the position of each object more rapidly. This can make it easier for competitive players to track moving enemies in a first-person shooter, or just make a screen feel more responsive as you scroll down a webpage or open an app on your phone.

Refresh rates are measured in hertz: A refresh rate of 120Hz, for example, means that the monitor refreshes every pixel 120 times per second. While 60Hz was once the standard for both PC monitors and smartphones, manufacturers are increasingly adopting higher refresh rates.

The benefits of jumping from 60Hz to 120Hz or 144Hz are clear to most players, especially in fast-paced first-person games. (However, you’ll only see benefits if you also have a GPU powerful enough to render frames faster than 60fps at the resolution and quality settings you’ve chosen).

A higher refresh rate makes it easier to track moving objects with your eye, makes sharp camera movements feel smoother, and reduces perceived motion blur. Online communities are divided about the improvement provided by monitors over 120Hz. If interested, it’s worth checking one out in person to see how much of a difference it might make for you.

Frame rate, measured in frames per second (FPS), tracks the number of images your graphics hardware draws. This online motion test demonstrates the improvements players will see when tracking moving objects at higher frame rates and refresh rates.

However, you’ll only actually see those extra frames onscreen if you have a refresh rate that matches or exceeds them; similarly, you only benefit from a high refresh rate screen if you have a CPU and graphics card capable of high frame rates. Plan your build accordingly to get the full benefit from your hardware.
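The interplay between frame rate and refresh rate comes down to simple arithmetic, sketched below in Python. The helper names are illustrative, and the model ignores tearing and sync behavior: it only captures the idea that the number of distinct frames you see per second is limited by whichever of the two rates is lower.

```python
def refresh_interval_ms(refresh_hz):
    """Time between two screen refreshes, in milliseconds."""
    return 1000.0 / refresh_hz


def frames_seen_per_second(gpu_fps, refresh_hz):
    """Distinct frames shown per second: capped by the slower component."""
    return min(gpu_fps, refresh_hz)


for hz in (60, 120, 144):
    print(f"{hz}Hz refreshes every {refresh_interval_ms(hz):.2f} ms")

print(frames_seen_per_second(200, 60))  # 60: the monitor is the limit
print(frames_seen_per_second(45, 144))  # 45: the GPU is the limit
```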

Response time measures the time it takes a single pixel to change color in milliseconds. Lower response times mean fewer visual artifacts, such as motion blur or "trails" behind moving images.

Manufacturers often list "gray-to-gray" response time: the time it takes for a pixel to change from one shade of gray to another. The quoted number often indicates the manufacturer’s best-case result from a battery of different tests, rather than a reliable average.

An image-sharpening process called overdrive also influences test results. Overdrive applies increased voltage to pixels to boost the speed of color changes. If adjusted carefully, overdrive can lessen visible trails and ghosting (a faint double image) during motion. If not, it may "overshoot" the intended values and cause other visual artifacts.

Turning overdrive up can yield better results on gray-to-gray tests, but can also create visual artifacts that aren’t disclosed when quoting the best number from those gray-to-gray tests. Due to all the factors affecting reported response times, it’s best to refer to independent reviewers, who can measure response time across different manufacturers.
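The trade-off between faster transitions and overshoot can be shown with a toy Python model. It assumes a pixel that moves a fixed fraction of the way toward its drive value each frame, with overdrive exaggerating the drive value on the first frame only. The function name, the 0.5 response fraction, and the gain values are all assumptions for illustration, not measurements of any real panel.

```python
def simulate_transition(start, target, response=0.5, overdrive_gain=1.0, frames=4):
    """Pixel level after each frame for a first-order response model."""
    level = start
    history = []
    for frame in range(frames):
        # Overdrive exaggerates the requested change on the first frame only
        gain = overdrive_gain if frame == 0 else 1.0
        drive = level + gain * (target - level)
        # The pixel covers a fixed fraction of the distance to the drive value
        level += response * (drive - level)
        history.append(level)
    return history


no_overdrive = simulate_transition(0, 100)
tuned = simulate_transition(0, 100, overdrive_gain=2.0)
aggressive = simulate_transition(0, 100, overdrive_gain=3.0)

print(no_overdrive)  # [50.0, 75.0, 87.5, 93.75]
print(tuned)         # [100.0, 100.0, 100.0, 100.0]
print(aggressive)    # [150.0, 125.0, 112.5, 106.25]
```

In this model, well-tuned overdrive reaches the target within one frame, while an overly aggressive setting shoots past it and takes several frames to settle. That temporary excess is the kind of overshoot that shows up as a visual artifact.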

Players sometimes confuse response time with input lag, a measurement of the delay before your actions appear onscreen, similarly measured in milliseconds. Input lag is felt rather than seen, and is often a priority for players of fighting games and first-person shooters.

Input lag is a side effect of the processing done by the monitor scaler and the screen’s internal electronics. Selecting “Game Mode” on your monitor’s adjustment menu often switches off image processing features and lessens input lag. Disabling VSync (which prevents some visual artifacts) in in-game option menus can also help reduce input lag.

Adaptive Sync

Screen tears will be instantly familiar to most players: A graphical glitch that appears as a horizontal line on your screen, with slightly mismatched images above and below it.

The glitch involves both your graphics card and monitor. The GPU draws a varying number of frames per second, but the monitor refreshes its screen at a fixed rate. If the GPU is midway through overwriting the previous frame in the frame buffer when the monitor reads the frame buffer to refresh the screen, the monitor will display the mismatched image as-is. The top of the image might be a new frame, but the bottom section will still show the previous frame, creating the “tear”.

VSync (vertical sync) provides one solution to this issue. This in-game feature reduces the speed at which frames are drawn in order to match your monitor’s refresh rate. However, VSync can cause stuttering when the frame rate drops below that cap. (For example, the GPU may suddenly drop to 30fps when it can’t deliver 60fps). The increased load on the GPU can also result in input lag.
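The sudden drop from 60fps to 30fps can be reproduced with a small Python sketch. It assumes simple double-buffered VSync, where a finished frame must wait for the next refresh before it can be shown, so each frame occupies a whole number of refresh intervals. The function name is hypothetical, and triple buffering or adaptive sync would behave differently.

```python
import math


def vsync_frame_rate(render_time_ms, refresh_hz):
    """Effective frame rate under double-buffered VSync."""
    refresh_interval_ms = 1000.0 / refresh_hz
    # A frame that misses a refresh has to wait for the following one
    intervals = max(1, math.ceil(render_time_ms / refresh_interval_ms))
    return refresh_hz / intervals


print(vsync_frame_rate(15, 60))  # 60.0: the frame is ready before each refresh
print(vsync_frame_rate(18, 60))  # 30.0: just misses, so every frame takes two refreshes
print(vsync_frame_rate(40, 60))  # 20.0: three refreshes per frame
```

A render time only slightly longer than one refresh interval (about 16.7 ms at 60Hz) halves the displayed frame rate, which is the stutter described above.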

G-Sync monitors use NVIDIA’s proprietary G-Sync scaler chip to match monitor refresh rates to GPU output, as well as predict GPU output based on recent performance. It also helps prevent stutter and input lag, which can result from duplicate frames being drawn as the first one waits to be displayed.

AMD Radeon FreeSync monitors operate along similar lines, matching the display to GPU output to avoid screen tearing and stutters. Rather than using a proprietary chip, they’re built on open Adaptive Sync protocols, which have been built into DisplayPort 1.2a and all later DisplayPort revisions. Though FreeSync monitors are often cheaper, the trade-off is that they aren’t subject to standard testing before release, and vary widely in quality.

Variable Refresh Rate (VRR) is a general term for technologies that sync up your monitor and GPU. Adaptive Sync is an open protocol included in DisplayPort 1.2a and later revisions. Recent Intel, AMD, and NVIDIA graphics technologies can all work with Adaptive Sync monitors.

Both LCDs and OLEDs "sample and hold", displaying moving objects as a series of static images that are rapidly refreshed. Each sample remains onscreen until it’s replaced with the next refresh. This "persistence" causes motion blur, as the human eye expects to track objects smoothly rather than see them jump to a new position. Even at high refresh rates, which update the image more often, the underlying sample-and-hold technology causes motion blur.

Motion blur reduction features use backlight strobing to shorten the time that frame samples are displayed onscreen. The screen turns black after every sample before displaying the next, reducing the time that a static image is held onscreen.

This mimics the operation of older CRT monitors, which worked differently than current LCD technology. CRT screens were illuminated by phosphors that rapidly decayed, providing brief impulses of illumination. This meant that the screen was actually dark for most of the refresh cycle. These quick impulses actually created a smoother impression of motion than sample-and-hold, and motion blur reduction features work to replicate this effect.

Because the backlight is being rapidly turned off and on, these features also lessen the brightness of the display. If you’re planning to use motion blur reduction backlight strobing, ensure that the screen you’re buying has high peak brightness.
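The trade-off between blur and brightness can be estimated with a rough Python sketch. It assumes perceived blur is approximately the distance an object moves while a single frame stays lit, and that average brightness scales with the fraction of time the backlight is on. The function names, the 25% duty cycle, and the 400-nit and 960 pixels-per-second figures are illustrative assumptions.

```python
def persistence_ms(refresh_hz, duty_cycle=1.0):
    """How long each frame stays lit; duty_cycle 1.0 means sample-and-hold."""
    return 1000.0 / refresh_hz * duty_cycle


def blur_width_px(speed_px_per_s, lit_time_ms):
    """Approximate blur: distance an object travels while the frame is lit."""
    return speed_px_per_s * lit_time_ms / 1000.0


def average_brightness(peak_nits, duty_cycle):
    """Average luminance when the backlight is on for part of each refresh."""
    return peak_nits * duty_cycle


# An object moving 960 pixels per second on a 120Hz display
hold_blur = blur_width_px(960, persistence_ms(120))
strobe_blur = blur_width_px(960, persistence_ms(120, duty_cycle=0.25))
strobe_nits = average_brightness(400, 0.25)

print(f"sample-and-hold blur: {hold_blur:.1f} px")
print(f"strobed blur:         {strobe_blur:.1f} px at {strobe_nits:.0f} nits average")
```

In this model, cutting the lit time to a quarter of the refresh interval cuts the blur to a quarter, but also cuts average brightness to a quarter unless the backlight is driven harder during each pulse.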

These features should only be enabled for gaming and fast-moving content, as they will deliberately cause the backlight to flicker, which may be annoying during day-to-day tasks. They also can typically only be used at a fixed refresh rate (like 120Hz), and won’t work at the same time as VRR.

Cathode Ray Tube (CRT)

These boxy computer monitors were common from the 1970s until the early 2000s, and are still prized by some players today for their low input lag and response times.

CRTs used three bulky electron guns to send a beam to excite red, green, and blue phosphors on the screen. These phosphors decayed within a few milliseconds, meaning the screen was illuminated by brief impulses on each refresh. This created a smooth illusion of motion, but also visible flickering.

Liquid Crystal Display (LCD)

In TFT LCDs (thin-film-transistor liquid crystal displays), a backlight shines light through a layer of liquid crystals that can twist, turn, or block it. The liquid crystals do not emit light themselves, which is a key difference between LCDs and OLEDs.

After passing through the crystals, the light then passes through RGB filters (subpixels). Voltage is applied to illuminate each subpixel at a different intensity, leading to the mixed color that appears as one illuminated pixel.

Older LCDs used Cold-Cathode Fluorescent Lamps (CCFLs) as backlights. These large, energy-inefficient tubes were incapable of controlling the brightness of smaller zones of the screen, and were eventually phased out in favor of smaller, energy-efficient light-emitting diodes (LEDs).

LCD panels are available in a range of technologies and can vary widely in color reproduction, response time, and input lag, especially among high-end options. However, the following generalizations about panels usually hold true:

TN (twisted nematic) panels: the oldest and most affordable LCD panel type. High refresh rates and fast response times for high-speed gaming such as first-person shooters or fighting games.

Restrictive viewing angles caused by the method of twisting light. Typically lack true 8-bit color depth. Typically low contrast ratios of 800:1 or 1,000:1.

Vertically aligned liquid crystals line up with two polarizers, rather than twisting, as in a TN panel. When in a resting state, the crystals can more effectively block illumination than TN panels.

Often slow response times, particularly on black-to-gray color transitions, often resulting in “black smearing” in motion. Wider viewing angles than TN panels, but often less than IPS panels. Some VA panels suffer significant color shift when viewed off-axis.

Several related technologies that rotate liquid crystals parall

Ms.Josey
