ibm t220 t221 lcd monitors quotation

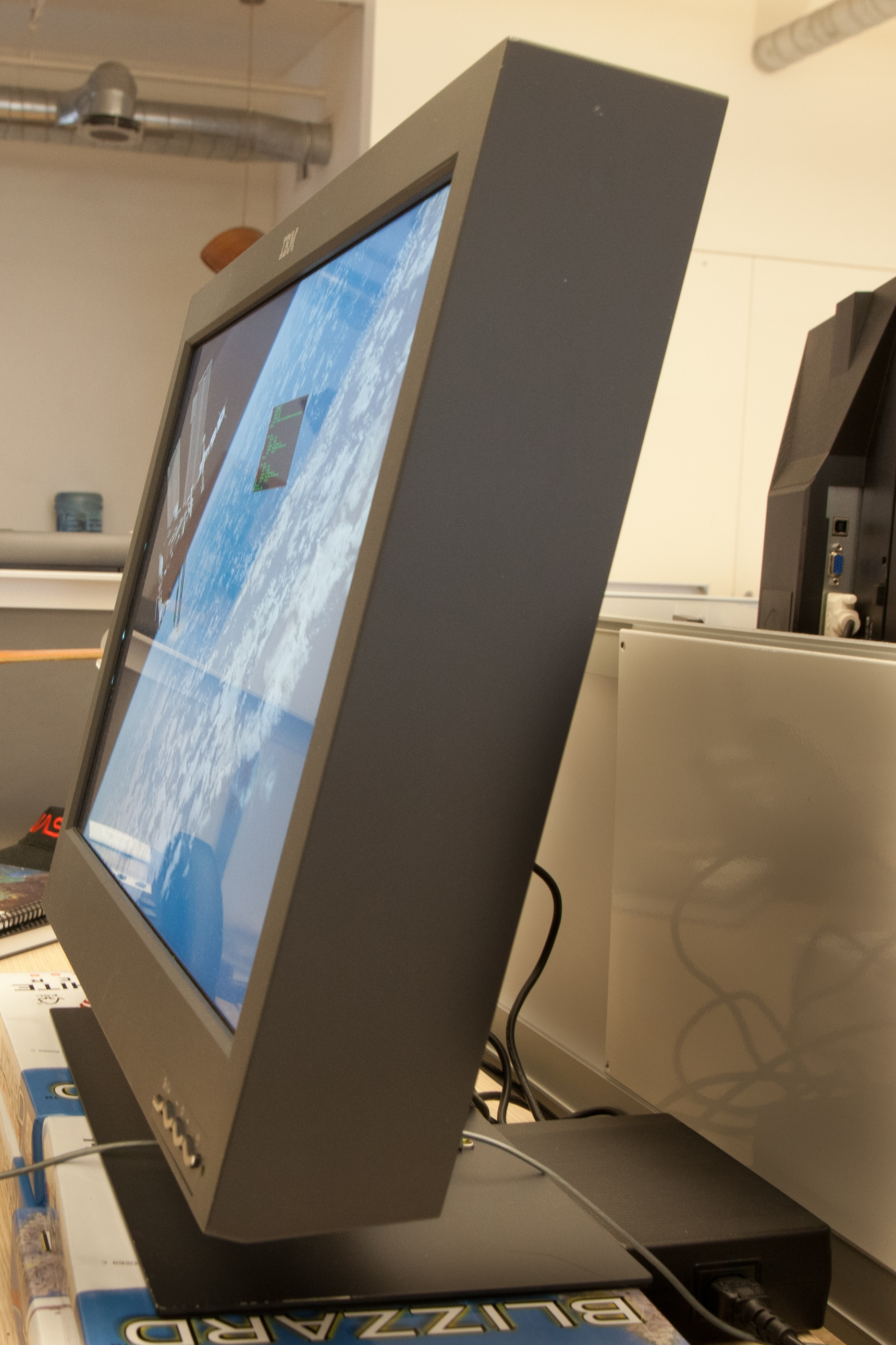

The IBM T220 and T221 are LCD monitors that were sold between 2001 and 2005, with a native resolution of 3840×2400 pixels (WQUXGA) on a screen with a diagonal of 22.2 inches (564 mm). This works out to 9,216,000 pixels, with a pixel density of 204 pixels per inch (80 dpcm, 0.1245 mm pixel pitch), much higher than contemporary computer monitors (about 100 pixels per inch) and approaching the resolution of print media. The display family was nicknamed "Big Bertha" in some trade journals. Costing around $8,400 in 2003, the displays saw few buyers. Such high-resolution displays would remain niche products for nearly a decade until modern high-dpi displays such as Apple's Retina display line saw more widespread adoption.
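The pixel count, density and pitch quoted above follow directly from the resolution and the diagonal. As a minimal sketch (the function name `pixel_density` is made up for illustration), the arithmetic can be checked like this:

```python
import math

def pixel_density(width_px, height_px, diagonal_in):
    """Return (total_pixels, pixels_per_inch, pixel_pitch_mm) for a panel."""
    total = width_px * height_px
    # Length of the diagonal measured in pixels (Pythagoras).
    diagonal_px = math.hypot(width_px, height_px)
    ppi = diagonal_px / diagonal_in
    # One inch is 25.4 mm, so the pitch is the width of one pixel in mm.
    pitch_mm = 25.4 / ppi
    return total, ppi, pitch_mm

total, ppi, pitch = pixel_density(3840, 2400, 22.2)
print(total, round(ppi), round(pitch, 4))
```

This assumes square pixels and that the 22.2-inch figure is the exact viewable diagonal.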

The IBM T220 was introduced in June 2001 and was the first monitor to natively support a resolution of 3840×2400. The monitor is fitted with two LFH-60 connectors. A pair of cables supplied with the monitor attaches to the connectors and splits into two single-link DVI connectors each, for a total of four DVI channels. One, two or four of the connectors may be used at once.

The IBM T220 comes with a Matrox G200 MMS video card and two power supplies. To achieve native resolution the screen is sectioned into four columns of 960×2400 pixels or four tiles of 1920×1200 pixels. The monitor's native refresh rate is 41 Hz.
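The two layouts mentioned above can be written down as rectangles. The following is only an illustrative sketch of the geometry, not of how the monitor or its driver actually addresses the tiles; `tile_rects` is a hypothetical helper.

```python
def tile_rects(width, height, cols, rows):
    """Split a width x height screen into cols x rows equal tiles.

    Returns a list of (x, y, tile_width, tile_height) tuples, row by row.
    """
    if width % cols or height % rows:
        raise ValueError("screen does not divide evenly into tiles")
    tw, th = width // cols, height // rows
    return [(c * tw, r * th, tw, th) for r in range(rows) for c in range(cols)]

columns = tile_rects(3840, 2400, cols=4, rows=1)    # four 960x2400 columns
quadrants = tile_rects(3840, 2400, cols=2, rows=2)  # four 1920x1200 tiles
for rect in columns + quadrants:
    print(rect)
```

Either layout covers all 9,216,000 pixels with four equally sized regions, one per DVI channel.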

The IBM T221 is a revised model of the original T220. Notable improvements include using only one power adapter instead of two and support for more screen modes. However, power consumption increased from 111 to 135 watts (111 to 150 watts at maximum). It was initially available as the 9503-DG1 and 9503-DG3 models. The 9503-DG1 model came with a Matrox G200 MMS graphics card and two LFH-60 connector cables. The 9503-DG3 model came with one cable connecting from one or two DVI ports on the graphics card to the T221's LFH-60 sockets.

The IBM T221 started out as an experimental technology from the flat panel display group at the IBM Thomas J. Watson Research Center. In 2000, a prototype 22.2-inch TFT LCD, code-named "Bertha", was made in a joint effort between IBM Research and IBM Japan. This display had a pixel format of 3840×2400 (QUXGA-W) with 204 ppi. On 10 November 2000, IBM announced the shipment of the prototype monitors to the U.S. Department of Energy's Lawrence Livermore National Laboratory in California. On 27 June 2001, IBM announced the production version of the monitor, known as the T220. In November 2001, IBM announced its replacement, the IBM T221. On 19 March 2002, IBM announced that it was lowering the price of the IBM T221 from US$17,999 to US$8,399. On 2 September 2003, IBM announced the availability of the 9503-DG5 model.

IBM and Chi Mei Group of Taiwan formed a joint venture called IDTech. ViewSonic and Iiyama OEMed the T221 and sold it under their brand names. The production line of IDTech at Yasu Technologies was sold to Sony in 2005.



Didn't Apple find a way around this with their 30" LCD running through ADC ports?

Apple's 30" (and just about every other 30") is only 2560x1600, substantially lower in resolution than the 3840x2400 of the T221.

The monitor was originally created for engineers to use. I believe Los Alamos was one of IBM's first customers for systems using the T220/T221. The low refresh rate and contrast aren't a big deal for this monitor's typical uses.

You have a bear. We're still using XP to drive these monitors, and as soon as we can free one up to test it with 7 we will, but the thinking is that we are SOL. The new ATI videocards are promising, but whether or not they will drive these correctly is up in the air. I haven't done any googling, though. It's almost like the "HD revolution" is causing everything to take a step back. At this point in time, it's just WAY easier to stick with 2k monitors. But, good luck.

I've used my T220 (VP2290b to be specific) on XP for several years without issue. The monitor supports four DVI plugs and will function on a single DVI connection just fine, but only at 13 Hz. While this sounds horrid, it's usable for everything but video (and even most YouTube clips are watchable). So if you find yourself wanting 9.2 MP for Photoshop, programming, CAD, etc., it's entirely usable. There is a SLIGHT "skip" effect with the mouse, but it's not very noticeable, and there is no flicker. I even plugged the screen into a friend's MacBook Pro, and it ran fine without issue at full resolution. To get above 13 Hz, you need to use more plugs, and there are various strategies for doing this. DVI has no "maximum resolution", just finite bandwidth, and you must trade resolution for refresh rate. Running the monitor at 1920x1200 will enable full refresh rate with one connector, if the need arises (watching a DVD). The "converter box" is supported by newer versions of this screen; it separates each link in a dual-link DVI and routes it to each one of the connectors (this monitor was made before dual-link DVI). It's not officially compatible with the older screens and I've never tried. Without the converter box, you use multiple connectors to drive different parts of the screen, which is well documented.
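To put rough numbers on the resolution versus refresh rate trade-off described above, here is a back-of-the-envelope sketch. It assumes the 165 MHz single-link DVI pixel clock limit and, by default, ignores blanking intervals entirely, so it only gives an upper bound. Real modes (such as the 13 Hz single-link mode mentioned in the post) come out lower. The `blanking_overhead` parameter is a made-up knob for illustration.

```python
SINGLE_LINK_DVI_PIXEL_CLOCK_HZ = 165_000_000  # single-link DVI pixel clock limit

def max_refresh_hz(width, height, links=1, blanking_overhead=0.0):
    """Upper bound on refresh rate for a given resolution and link count.

    blanking_overhead is the extra fraction of pixel clocks spent on
    blanking (0.0 means none, which is optimistic).
    """
    clocks_per_frame = width * height * (1 + blanking_overhead)
    return links * SINGLE_LINK_DVI_PIXEL_CLOCK_HZ / clocks_per_frame

full_one_link = max_refresh_hz(3840, 2400, links=1)
full_four_links = max_refresh_hz(3840, 2400, links=4)
quarter_one_link = max_refresh_hz(1920, 1200, links=1)
print(round(full_one_link, 1), round(full_four_links, 1), round(quarter_one_link, 1))
```

Even with no blanking at all, one link cannot reach 18 Hz at 3840x2400, which is why more connectors are needed for anything faster.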

I'd say Windows 7 is probably the least T220-friendly OS (vs XP and Mac), but it definitely works with adequate hardware and some "tweaking", and there's no need to spend big money on a professional level videocard or anything "rated" for 3840x2400. YMMV, but every videocard I've tried (about 10) supports this resolution in some capacity in XP.

This monitor is not for the faint of heart. I have 20x18 vision and can read it easily from 12-18" away at normal DPI, but several of my friends with even slightly impaired vision find it very difficult to read. I can't imagine getting work done without this monitor: you can view EIGHT documents in "page view" in Word at the same time at 100% zoom. However, it's beyond useless for gaming, which drives a large amount of modern PC purchases. Also, the technology to make this monitor has probably gone down in price, but it was $22000 USD in 2001, and $8500 USD in 2004. Even if it fell to $1500, you can buy a lot of "consumer" monitors for that money. I'm sure that has a lot to do with demand.

On the contrast ratio / general image quality: the measurement system for contrast ratios is anything but standard, and there is a large amount of embellishment with these figures, especially some of these "10000:1" numbers that float around. Whatever the number is, 300 or 400 to 1, it's very conservative. I've seen monitors that were visually superior to this, but only slightly. I own a Sharp Aquos and a Dell UltraSharp (IPS), and the T220 is very competitive. When you factor in its 9.2 MP resolution, it's a no-brainer for photo editing.

In closing, for those who haven't seen a 220DPI monitor, the pixels are just small enough for the eye to resolve the individual pixels, but NOT the subpixels. It's absolutely amazing to get 4" away from the monitor and just see more detail (like with paper), and not a bunch of RGB elements. This means there are almost no aliasing effects.

Holy sweet mother of Christ. That thing has twice the resolution of my two 24" LCDs combined, and all shoehorned into a little 22" panel. That'd be a bit small for my tastes, but I'd love to see that on a 30" LCD myself. I'd definitely make room on my desk for something like that.


Anyway, I didn't mean to brag. Hopefully the OP / anyone else with T220 questions will benefit from this, and I'm glad so many people are impressed. Hopefully companies will make monitors with higher resolutions (I love how, to be labeled "1080p", many desktop monitors have robbed us of 120 vertical pixels, going from 1920x1200 to 1920x1080).

Judging by that calculation, a standard 24" 1920x1200 LCD (like the pair I have) is ~93DPI. Presumably the T221 is 221DPI. I wouldn't mind a panel somewhere in the middle of that range, say ~150DPI or so (about the DPI of cell phone screens, it seems). For a 24" 16:10 panel, that's around about 3075x1912. That in itself would be quite the upgrade. Incompatible as hell with any sort of gaming, but interesting nonetheless.
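For anyone who wants to redo the DPI estimates in the post above, here is a quick sketch. It assumes square pixels and an exact nominal diagonal, and the helper names are made up. It lands close to, but not exactly on, the rough figures quoted: about 94 DPI for the 24" panel and roughly 3053x1908 for 150 DPI.

```python
import math

def dpi(width_px, height_px, diagonal_in):
    """Pixels per inch along the diagonal (same as along either axis
    when pixels are square)."""
    return math.hypot(width_px, height_px) / diagonal_in

def resolution_for_dpi(target_dpi, diagonal_in, aspect_w, aspect_h):
    """Resolution a panel of the given diagonal and aspect ratio would
    need in order to reach target_dpi, rounded to whole pixels."""
    diagonal_px = target_dpi * diagonal_in
    unit = diagonal_px / math.hypot(aspect_w, aspect_h)
    return round(aspect_w * unit), round(aspect_h * unit)

print(round(dpi(1920, 1200, 24), 1))       # the 24" 1920x1200 panel
print(round(dpi(3840, 2400, 22.2), 1))     # the T220/T221 panel
print(resolution_for_dpi(150, 24, 16, 10)) # a hypothetical 150 DPI 24" panel
```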

The same pattern seems to apply to some aspects of the computer industry, when cost pressures take precedence over quality, features and innovation. In 2001, we saw the introduction of the IBM T220 monitor, with a resolution of 3840×2400 on a 22.2″ panel. It was later superseded by the T221 with very similar specifications, but it was ultimately discontinued in 2005. Nothing matching it has been available since. Today, screen resolution seems to be undergoing an erosion. On small panels the “standards” (sub-standards?) have settled at the completely unusable 1024×600, and with a handful of exceptions from three manufacturers, Dell (3007WFP, 3008WFP), Samsung (305T) and Apple (Cinema HD), the commonly available screens are limited to 1920×1080 resolution. Even 1920×1200 screens are getting more and more rare, especially on laptops, because screens are marketed by diagonal size and, for any given diagonal length, 16:9 ratio screens have a smaller surface area than 16:10 ratio screens.

IBM T221 monitors, especially of the latest DG5 variety, are very hard to come by and still expensive if you can ever find one. Typically they sell for double what you can get a Dell 3007WFP for. But you do get more than twice the pixel count and more than twice the pixel density. I have recently acquired a T221 and if your eyes can handle it (and mine can), the experience is quite amazing – once you get it working properly. Getting it working properly, however, can be quite a painful experience if you want to get the most out of it.

My T221 came with a single LFH-60 -> 2x SL-DVI (single link DVI) cable. There are two LFH-60 connectors on the T221, which allows the screen to be run using 4x SL-DVI inputs. This provides a maximum refresh of 48Hz. There is also a way to run this monitor using 2x DL-DVI inputs at 48Hz, but this requires special adapters. That is a subject for another article, since I haven’t got any of those yet.

The 13Hz mode is completely straightforward to get working on both RHEL6 and XP x64, but 13Hz is just not fast enough. You can actually see the mouse pointer skipping as you move it, and playing back a video also results in visible frame skipping. So I have spent the effort to get a higher-refresh mode working on my ATI HD4870X2. The end results are worth it, but the process isn’t entirely straightforward. The important thing to consider is that when running in anything other than the 3840×2400 13Hz mode, the monitor appears to the computer as two completely separate 1920×2400 monitors.


I don't think it was impossible to drive this. I remember having 1600x1200 CRT monitors back in 2000, and this is just 4x the pixels. In 2001, there was for example the Matrox Parhelia 256MB, which came with a freaking 256MB of memory (I had a 1.2GB HDD back then) and which had:


The 4:3 aspect ratio was common in older television cathode ray tube (CRT) displays, which were not easily adaptable to a wider aspect ratio. When good quality alternate technologies (i.e., liquid crystal displays (LCDs) and plasma displays) became more available and less costly, around the year 2000, the common computer displays and entertainment products moved to a wider aspect ratio, first to the 16:10 ratio. The 16:10 ratio allowed some compromise between showing older 4:3 aspect ratio broadcast TV shows and allowing better viewing of widescreen movies. However, around the year 2005, home entertainment displays (i.e., TV sets) gradually moved from 16:10 to the 16:9 aspect ratio, for further improvement of viewing widescreen movies. By about 2007, virtually all mass market entertainment displays were 16:9. In 2011, 1920 × 1080 (Full HD, the native resolution of Blu-ray) was the favored resolution in the most heavily marketed entertainment market displays. The next standard, 3840 × 2160 (4K UHD), was first sold in 2013.

This resolution (2560 × 1080) is equivalent to a Full HD (1920 × 1080) extended in width by 33%, with an aspect ratio of 64:27. It is sometimes referred to as "1080p ultrawide" or "UW-FHD" (ultrawide FHD). Monitors at this resolution usually contain built-in firmware to divide the screen into two 1280 × 1080 screens.

This resolution (3840 × 1080) is equivalent to two Full HD (1920 × 1080) displays side-by-side, or one vertical half of a 4K UHD (3840 × 2160) display. It has an aspect ratio of 32:9 (3.55:1), close to the 3.6:1 ratio of IMAX UltraWideScreen 3.6. Samsung monitors at this resolution contain built-in firmware to divide the screen into two 1920 × 1080 screens, or one 2560 × 1080 and one 1280 × 1080 screen.

The first commercial displays capable of this resolution include an 82-inch LCD TV revealed by Samsung in early 2008 and a 4K IPS monitor for medical purposes launched by Innolux in November 2010. Toshiba also announced the REGZA 55x3.

When support for 4K at 60Hz was added in DisplayPort 1.2, no DisplayPort timing controllers (TCONs) existed which were capable of processing the necessary amount of data from a single video stream. As a result, the first 4K monitors from 2013 and early 2014, such as the Sharp PN-K321, Asus PQ321Q, and Dell UP2414Q and UP3214Q, were addressed internally as two 1920 × 2160 monitors side-by-side instead of a single display and made use of DisplayPort's Multi-Stream Transport (MST) feature to multiplex a separate signal for each half over the connection, splitting the data between two timing controllers. Later monitors such as the Asus PB287Q no longer rely on the MST tiling technique to achieve 4K at 60Hz.

The Quarter Video Graphics Array (also known as Quarter VGA, QVGA, or qVGA) is a popular term for a computer display with 320 × 240 display resolution that debuted with the CGA Color Graphics Adapter for the original IBM PC. QVGA displays were most often used in mobile phones, personal digital assistants (PDA), and some handheld game consoles. Often the displays are in a "portrait" orientation (i.e., taller than they are wide, as opposed to "landscape") and are referred to as 240 × 320.

The name comes from having a quarter of the 640 × 480 maximum resolution of the original IBM VGA display technology, which became a de facto industry standard in the late 1980s. QVGA is not a standard mode offered by the VGA BIOS, even though VGA and compatible chipsets support a QVGA-sized Mode X. The term refers only to the display's resolution and thus the abbreviated term QVGA or Quarter VGA is more appropriate to use.

Video Graphics Array (VGA) refers specifically to the display hardware first introduced with the IBM PS/2 line of computers in 1987. The term is also used for the analog computer display standard, the 15-pin D-subminiature VGA connector, or the 640 × 480 resolution itself. While the VGA resolution was superseded in the personal computer market in the 1990s, it became a popular resolution on mobile devices in the 2000s. The 640 × 480 resolution is called Standard Definition (SD), in comparison for instance to HD (1280 × 720) or Full HD (1920 × 1080).

It is a common resolution among LCD projectors and later portable and hand-held internet-enabled devices (such as MID and Netbooks) as it is capable of rendering web sites designed for an 800 wide window in full page-width. Examples of hand-held internet devices, without phone capability, with this resolution include: Spice stellar nhance mi-435, ASUS Eee PC 700 series, Dell XCD35, Nokia 770, N800, and N810.

Originally, it was an extension to the VGA standard first released by IBM in 1987. Unlike VGA – a purely IBM-defined standard – Super VGA was defined by the Video Electronics Standards Association (VESA), an open consortium set up to promote interoperability and define standards. When used as a resolution specification, in contrast to VGA or XGA for example, the term SVGA normally refers to a resolution of 800 × 600 pixels.

The Extended Graphics Array (XGA) is an IBM display standard introduced in 1990. Later it became the most common appellation of the 1024 × 768 pixels display resolution, but the official definition is broader than that. It was not a new and improved replacement for Super VGA, but rather became one particular subset of the broad range of capabilities covered under the "Super VGA" umbrella.

The initial version of XGA (and its predecessor, the IBM 8514/A) expanded upon IBM's older VGA by adding support for four new screen modes (three, for the 8514/A), including one new resolution:

Like the 8514, XGA offered fixed function hardware acceleration to offload processing of 2D drawing tasks. Both adapters allowed offloading of line-draw, bitmap-copy (bitblt), and color-fill operations from the host CPU. XGA's acceleration was faster than the 8514's, and more comprehensive, supporting more drawing primitives, the VGA-res hi-color mode, versatile "brush" and "mask" modes, system memory addressing functions, and a single simple hardware sprite typically used to provide a low CPU load mouse pointer. It was also capable of wholly independent function, as it incorporated support for all existing VGA functions and modes – the 8514 itself was a simpler add-on adapter that required a separate VGA to be present. As they were designed for use with IBM's own range of fixed-frequency monitors, neither adapter offered support for 800 × 600 SVGA modes.

IBM licensed the XGA technology and architecture to certain third party hardware developers, and its characteristic modes (although not necessarily the accelerator functions, nor the MCA data-bus interface) were aped by many others. These accelerators typically did not suffer from the same limitations on available resolutions and refresh rate, and featured other now-standard modes like 800 × 600 (and 1280 × 1024) at various color depths (up to 24 bpp Truecolor) and interlaced, non-interlaced and flicker-free refresh rates even before the release of the XGA-2.

XGA should not be confused with EVGA (Extended Video Graphics Array), a contemporaneous VESA standard that also has 1024 × 768 pixels. It should also not be confused with the Expanded Graphics Adapter, a peripheral for the IBM 3270 PC which can also be referred to as XGA.

Wide Extended Graphics Array (Wide XGA or WXGA) is a set of non standard resolutions derived from the XGA display standard by widening it to a wide screen aspect ratio. WXGA is commonly used for low-end LCD TVs and LCD computer monitors for widescreen presentation. The exact resolution offered by a device described as "WXGA" can be somewhat variable owing to a proliferation of several closely related timings optimised for different uses and derived from different bases.

When referring to televisions and other monitors intended for consumer entertainment use, WXGA is generally understood to refer to a resolution of 1366 × 768, which is based on the XGA standard (1024 × 768 pixels, 4:3 aspect) extended to give square pixels on the increasingly popular 16:9 widescreen display ratio without having to effect major signalling changes other than a faster pixel clock, or manufacturing changes other than extending panel width by one third. As 768 does not divide exactly into 9, the aspect ratio is not quite 16:9 – this would require a horizontal width of 1365⅓ pixels. However, at only 0.05%, the resulting error is insignificant.
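The 1365⅓ figure and the 0.05% error can be checked with exact fractions. This is a small sketch with made-up helper names, assuming square pixels:

```python
from fractions import Fraction

def ideal_width(height, aspect_w=16, aspect_h=9):
    """Exact width needed for the given height at aspect_w:aspect_h."""
    return Fraction(height * aspect_w, aspect_h)

def width_error_percent(actual_width, height):
    """How far actual_width is from the exact 16:9 width, in percent."""
    ideal = ideal_width(height)
    return float(abs(actual_width - ideal) / ideal * 100)

print(ideal_width(768))                          # exact 16:9 width for 768 lines
print(round(width_error_percent(1366, 768), 2))  # error of the 1366-wide panel
```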

A common variant on this resolution is 1360 × 768, which confers several technical benefits, most significantly a reduction in memory requirements from just over to just under 1MB per 8-bit channel (1366 × 768 needs 1024.5KB per channel; 1360 × 768 needs 1020KB; 1MB is equal to 1024KB), which simplifies architecture and can significantly reduce the amount and speed of VRAM required with only a very minor change in available resolution, as memory chips are usually only available in fixed megabyte capacities. For example, at 32-bit color, a 1360 × 768 framebuffer would require only 4MB, whilst a 1366 × 768 one may need 5, 6 or even 8MB depending on the exact display circuitry architecture and available chip capacities. The 6-pixel reduction also means each line's width is divisible by 8 pixels, simplifying numerous routines used in both computer and broadcast/theatrical video processing, which operate on 8-pixel blocks. Historically, many video cards also mandated screen widths divisible by 8 for their lower-color, planar modes to accelerate memory accesses and simplify pixel position calculations (e.g. fetching 4-bit pixels from 32-bit memory is much faster when performed 8 pixels at a time, and calculating exactly where a particular pixel is within a memory block is much easier when lines do not end partway through a memory word), and this convention still persisted in low-end hardware even into the early days of widescreen, LCD HDTVs; thus, most 1366-width displays also quietly support display of 1360-width material, with a thin border of unused pixel columns at each side. This narrower mode is of course even further removed from the 16:9 ideal, but the error is still less than 0.5% (technically, the mode is either 15.94:9.00 or 16.00:9.04) and should be imperceptible.
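The memory figures in this paragraph can be reproduced with a few lines of arithmetic. The sketch below assumes a tightly packed framebuffer with no padding between lines, which real hardware does not always use; the helper names are illustrative.

```python
KIB = 1024
MIB = 1024 * KIB

def kib_per_channel(width, height):
    """Memory for one 8-bit channel (one byte per pixel), in KB of 1024 bytes."""
    return width * height / KIB

def framebuffer_bytes(width, height, bits_per_pixel=32):
    """Size of a tightly packed framebuffer in bytes."""
    return width * height * bits_per_pixel // 8

for width in (1366, 1360):
    fits = framebuffer_bytes(width, 768) <= 4 * MIB
    print(width, kib_per_channel(width, 768), "fits in 4MB" if fits else "exceeds 4MB")
```

The 1360-wide mode fits a 32-bit framebuffer into 4MB with room to spare, while the 1366-wide mode overshoots by 2KB.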

When referring to laptop displays or independent displays and projectors intended primarily for use with computers, WXGA is also used to describe a resolution of 1280 × 800 pixels, with an aspect ratio of 16:10. It offers improved resolution in both dimensions vs. the old standard (especially useful in portrait mode, or for displaying two standard pages of text side-by-side), a perceptibly "wider" appearance and the ability to display 720p HD video "native" with only very thin letterbox borders (usable for on-screen playback controls) and no stretching. Additionally, like 1360 × 768, it required only 1000KB (just under 1MB) of memory per 8-bit channel; thus, a typical double-buffered 32-bit colour screen could fit within 8MB, limiting everyday demands on the complexity (and cost, energy use) of integrated graphics chipsets and their shared use of typically sparse system memory (generally allocated to the video system in relatively large blocks), at least when only the internal display was in use (external monitors generally being supported in "extended desktop" mode to at least 1600 × 1200 resolution). 16:10 (or 8:5) is itself a rather "classic" computer aspect ratio, harking back all the way to early 320 × 200 modes (and their derivatives) as seen in the Commodore 64, IBM CGA card and others. However, as of mid 2013, this standard is becoming increasingly rare, crowded out by the more standardised and thus more economical-to-produce 1366 × 768 panels, as its previously beneficial features become less important with improvements to hardware, gradual loss of general backwards software compatibility, and changes in interface layout. As of August 2013, the market availability of panels with 1280 × 800 native resolution had been generally relegated to data projectors or niche products such as convertible tablet PCs and LCD-based eBook readers.

Widespread availability of 1280 × 800 and 1366 × 768 pixel resolution LCDs for laptop monitors can be considered an OS-driven evolution from the formerly popular 1024 × 768 screen size, which has itself since seen UI design feedback in response to what could be considered disadvantages of the widescreen format when used with programs designed for "traditional" screens. In the Microsoft Windows operating system specifically, the larger task bar of Windows Vista and 7 occupies an additional 16 pixel lines by default, which may compromise the usability of programs that already demanded a full 1024 × 768 (instead of, e.g. 800 × 600) unless it is specifically set to use small icons; an "oddball" 784-line resolution would compensate for this, but 1280 × 800 has a simpler aspect and also gives the slight bonus of 16 more usable lines. Also, the Windows Sidebar in Windows Vista and 7 can use the additional 256 or 336 horizontal pixels to display informational "widgets" without compromising the display width of other programs, and Windows 8 is specifically designed around a "two pane" concept where the full 16:9 or 16:10 screen is not required. Typically, this consists of a 4:3 main program area (typically 1024 × 768, 1000 × 800 or 1440 × 1080) plus a narrow sidebar running a second program, showing a toolbox for the main program or a pop-out OS shortcut panel taking up the remainder.

XGA+ stands for Extended Graphics Array Plus and is a computer display standard, usually understood to refer to the 1152 × 864 resolution with an aspect ratio of 4:3. Until the advent of widescreen LCDs, XGA+ was often used on 17-inch desktop CRT monitors. It is the highest 4:3 resolution not greater than 2^20 pixels (≈1.05 megapixels), with its horizontal dimension a multiple of 32 pixels. This enables it to fit closely into a video memory or framebuffer of 1MB (1 × 2^20 bytes), assuming the use of one byte per pixel. The common multiple of 32 pixels constraint is related to alignment.
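The claim that 1152 × 864 is the largest such mode can be verified with a short search. This is a sketch under the constraints stated above (4:3 aspect, width a multiple of 32, at most 2^20 pixels); the function name is made up for illustration.

```python
def largest_4_3_mode(pixel_limit=2**20, width_step=32):
    """Largest 4:3 resolution whose width is a multiple of width_step
    and whose pixel count does not exceed pixel_limit."""
    best = None
    width = width_step
    while True:
        # Any multiple of 32 is divisible by 4, so the height is an integer.
        height = width * 3 // 4
        if width * height > pixel_limit:
            return best
        best = (width, height)
        width += width_step

mode = largest_4_3_mode()
print(mode, mode[0] * mode[1])
```

The next candidate, 1184 × 888, needs 1,051,392 pixels and no longer fits in a 1MB framebuffer at one byte per pixel.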

Historically, the resolution also relates to the earlier standard of 1152 × 900 pixels, which was adopted by Sun Microsystems for the Sun-2 workstation in the early 1980s. A decade later, Apple Computer selected the resolution of 1152 × 870 for their 21-inch CRT monitors, intended for use as two-page displays on the Macintosh II computer. These resolutions are even closer to the limit of a 1MB framebuffer, but their aspect ratios differ slightly from the common 4:3.

WXGA+ (1440 × 900) resolution is common in 19-inch widescreen desktop monitors (a very small number of such monitors use WSXGA+), and is also optional, although less common, in laptop LCDs, in sizes ranging from 12.1 to 17 inches.

Super Extended Graphics Array (SXGA) is a standard monitor resolution of 1280 × 1024 pixels. This display resolution is the "next step" above the XGA resolution that IBM developed in 1990.

SXGA is the most common native resolution of 17 inch and 19 inch LCD monitors. An LCD monitor with SXGA native resolution will typically have a physical 5:4 aspect ratio, preserving a 1:1 pixel aspect ratio.

SXGA+ stands for Super Extended Graphics Array Plus and is a computer display standard. An SXGA+ display is commonly used on 14-inch or 15-inch laptop LCD screens with a resolution of 1400 × 1050 pixels. An SXGA+ display is used on a few 12-inch laptop screens such as the ThinkPad X60 and X61 (both only as tablet) as well as the Toshiba Portégé M200 and M400, but those are far less common. At 14.1 inches, Dell offered SXGA+ on many of the Dell Latitude "C" series laptops, such as the C640, and IBM has offered it since the ThinkPad T21. Sony also used SXGA+ in their Z1 series, but no longer produce them as widescreen has become more predominant.

In desktop LCDs, SXGA+ is used on some low-end 20-inch monitors, whereas most of the 20-inch LCDs use UXGA (standard screen ratio), or WSXGA+ (widescreen ratio).

WSXGA+ stands for Widescreen Super Extended Graphics Array Plus. WSXGA+ displays were commonly used on widescreen 20-, 21-, and 22-inch LCD monitors from numerous manufacturers (and a very small number of 19-inch widescreen monitors), as well as widescreen 15.4-inch and 17-inch laptop LCD screens like the ThinkPad T61p, the late 17" Apple PowerBook G4 and the unibody Apple 15" MacBook Pro. The resolution is 1680 × 1050 pixels (1,764,000 pixels) with a 16:10 aspect ratio.

UXGA has been the native resolution of many fullscreen monitors of 15 inches or more, including laptop LCDs such as the ones in ThinkPad A21p, A30p, A31p, T42p, T43p, T60p, Dell Inspiron 8000/8100/8200 and Latitude/Precision equivalents; Panasonic Toughbook CF-51; and the original Alienware Area 51m. However, in more recent times, UXGA is not used in laptops at all but rather in desktop UXGA monitors that have been made in sizes of 20 inches and 21.3 inches. Some 14-inch laptop LCDs with UXGA have also existed, but these were very rare.

WUXGA resolution has a total of 2,304,000 pixels. An uncompressed 8-bit RGB WUXGA image has a size of 6.75MB. As of 2014, this resolution is available in a few high-end LCD televisions and computer monitors (e.g. Dell Ultrasharp U2413, Lenovo L220x, Samsung T220P, ViewSonic SD-Z225, Asus PA248Q), although in the past it was used in a wider variety of displays, including 17-inch laptops. WUXGA use predates the introduction of LCDs of that resolution. Most QXGA displays support 1920 × 1200, and widescreen CRTs such as the Sony GDM-FW900 and Hewlett Packard A7217A do as well. WUXGA is also available in some of the more high-end mobile phablet devices such as the Huawei Honor X2 Gem.

The QXGA, or Quad Extended Graphics Array, display standard is a resolution standard in display technology. Some examples of LCD monitors that have pixel counts at these levels are the Dell 3008WFP, the Apple Cinema Display, the Apple iMac (27-inch 2009–present), the iPad (3rd generation), and the MacBook Pro (3rd generation). Many standard 21–22-inch CRT monitors and some of the highest-end 19-inch CRTs also support this resolution.

QWXGA (Quad Wide Extended Graphics Array) is a display resolution of 2048 × 1152 pixels with a 16:9 aspect ratio. A few QWXGA LCD monitors were available in 2009 with 23- and 27-inch displays, such as the Acer B233HU (23-inch) and B273HU (27-inch), the Dell SP2309W, and the Samsung 2343BWX. As of 2011, most 2048 × 1152 monitors have been discontinued, and as of 2013 no major manufacturer produces monitors with this resolution.

QXGA (Quad Extended Graphics Array) is a display resolution of 2048 × 1536 pixels with a 4:3 aspect ratio. The name comes from it having four times as many pixels as an XGA display. Examples of LCDs with this resolution are the IBM T210 and the Eizo G33 and R31 screens, but in CRT monitors this resolution is much more common; some examples include the Sony F520, ViewSonic G225fB, NEC FP2141SB or Mitsubishi DP2070SB, Iiyama Vision Master Pro 514, and Dell and HP P1230. Of these monitors, none are still in production. A related display size is WQXGA, which is a wide screen version. CRTs offer a way to achieve QXGA cheaply. Models like the Mitsubishi Diamond Pro 2045U and IBM ThinkVision C220P retailed for around US$200, and even higher performance ones like the ViewSonic PerfectFlat P220fB remained under $500. At one time, many off-lease P1230s could be found on eBay for under $150. The LCDs with WQXGA or QXGA resolution typically cost four to five times more for the same resolution. IDTech manufactured a 15-inch QXGA IPS panel, used in the IBM ThinkPad R50p. NEC sold laptops with QXGA screens in 2002–05 for the Japanese market. The iPad (starting from 3rd generation) also has a QXGA display.

To obtain a vertical refresh rate higher than 40 Hz with DVI, WQXGA (2560 × 1600) requires dual-link DVI cables and devices. To avoid cable problems, monitors are sometimes shipped with an appropriate dual-link cable already plugged in. Many video cards support this resolution. One feature that is currently unique to the 30-inch WQXGA monitors is the ability to function as the centerpiece and main display of a three-monitor array of complementary aspect ratios, with two UXGA (1600 × 1200) 20-inch monitors turned vertically on either side. The vertical resolutions are equal, and the physical length of the 1600-pixel edges (if the manufacturer is honest) is within a tenth of an inch (16-inch vs. 15.89999"), presenting a "picture window view" without the extreme lateral dimensions, small central panel, asymmetry, resolution differences, or dimensional difference of other three-monitor combinations. The resulting 4960 × 1600 composite image has a 3.1:1 aspect ratio. This also means one UXGA 20-inch monitor in portrait orientation can be flanked by two 30-inch WQXGA monitors for a 6320 × 1600 composite image with an 11.85:3 (79:20, 3.95:1) aspect ratio. Some WQXGA medical displays (such as the Barco Coronis 4MP) can also be configured as two virtual 1200 × 1600 or 1280 × 1600 seamless displays by using both DVI ports at the same time.
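The composite sizes quoted for the three-monitor arrays can be checked with a little arithmetic. The following is a minimal Python sketch, not taken from any vendor tool; the `composite` helper and the constant names are hypothetical and exist only for this illustration.

```python
from fractions import Fraction


def composite(panels):
    """Return (width, height, aspect) for panels placed side by side.

    Each panel is a (width, height) tuple in pixels, already rotated to
    its mounted orientation. All panels must share the same height.
    """
    heights = {h for _, h in panels}
    if len(heights) != 1:
        raise ValueError("panels must have equal height to tile cleanly")
    width = sum(w for w, _ in panels)
    height = heights.pop()
    return width, height, Fraction(width, height)


WQXGA = (2560, 1600)
UXGA_PORTRAIT = (1200, 1600)  # a 1600 x 1200 panel turned vertically

# WQXGA centre panel flanked by two portrait UXGA panels
wide = composite([UXGA_PORTRAIT, WQXGA, UXGA_PORTRAIT])
# Portrait UXGA centre panel flanked by two WQXGA panels
wider = composite([WQXGA, UXGA_PORTRAIT, WQXGA])

print(wide)
print(wider)
```

Using `Fraction` keeps the aspect ratios exact, so 4960:1600 reduces to 31:10 (3.1:1) and 6320:1600 reduces to 79:20 (3.95:1).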

An early consumer WQXGA monitor was the 30-inch Apple Cinema Display, unveiled by Apple in June 2004. At the time, dual-link DVI was uncommon on consumer hardware, so Apple partnered with Nvidia to develop a special graphics card that had two dual-link DVI ports, allowing simultaneous use of two 30-inch Apple Cinema Displays. The nature of this graphics card, being an add-in AGP card, meant that the monitors could only be used in a desktop computer, like the Power Mac G5, that could have the add-in card installed, and could not be immediately used with laptop computers that lacked this expansion capability.

In 2010, WQXGA made its debut in a handful of home theater projectors targeted at the Constant Height Screen application market. Both Digital Projection Inc and projectiondesign released models based on a Texas Instruments DLP chip with a native WQXGA resolution, alleviating the need for an anamorphic lens to achieve 1:2.35 image projection. Many manufacturers have 27–30-inch models that are capable of WQXGA, albeit at a much higher price than lower resolution monitors of the same size. Several mainstream WQXGA monitors are or were available with 30-inch displays, such as the Dell 3007WFP-HC, 3008WFP, U3011, U3014, UP3017, the Hewlett-Packard LP3065, the Gateway XHD3000, LG W3000H, and the Samsung 305T. Specialist manufacturers like NEC, Eizo, Planar Systems, Barco (LC-3001), and possibly others offer similar models. As of 2016, LG Display make a 10-bit 30-inch AH-IPS panel, with wide color gamut, used in monitors from Dell, NEC, HP, Lenovo and Iiyama.

QSXGA (Quad Super Extended Graphics Array) is a display resolution of 2560 × 2048 pixels with a 5:4 aspect ratio. Grayscale monitors with a 2560 × 2048 resolution, primarily for medical use, are available from Planar Systems (Dome E5), Eizo (Radiforce G51), Barco (Nio 5, MP), WIDE (IF2105MP), IDTech (IAQS80F), and possibly others.

Most display cards with a DVI connector are capable of supporting the 3840 × 2400 resolution. However, the maximum refresh rate will be limited by the number of DVI links which are connected to the monitor. One, two, or four DVI connectors are used to drive the monitor using various tile configurations. Only the IBM T221-DG5 and IDTech MD22292B5 support the use of dual-link DVI ports through an external converter box. Many systems using these monitors use at least two DVI connectors to send video to the monitor. These DVI connectors can be from the same graphics card, different graphics cards, or even different computers. Motion across the tile boundaries can show tearing if the DVI links are not synchronized. The display panel can be updated at a speed between 0 Hz and 41 Hz (48 Hz for the IBM T221-DG5, -DGP, and IDTech MD22292B5). The refresh rate of the video signal can be higher than 41 Hz (or 48 Hz), but the monitor will not update the display any faster even if the graphics cards do so.
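A rough sense of why the link count limits the refresh rate can be had from a back-of-the-envelope bound. The sketch below is an illustration under stated assumptions, not the monitor's actual timing logic: it assumes the 165 MHz maximum pixel clock of a single DVI link (a figure that does not appear in the text above), assumes the panel is split evenly across the links, and ignores blanking intervals, so real achievable rates are lower. The function and constant names are hypothetical.

```python
# Assumption: maximum pixel clock of one single-link DVI channel.
SINGLE_LINK_PIXEL_CLOCK_HZ = 165_000_000
PANEL_PIXELS = 3840 * 2400


def max_refresh_hz(links, panel_cap_hz=41.0):
    """Optimistic refresh ceiling when `links` single-link DVI channels
    each carry an equal share of the panel.

    Blanking is ignored, so this is an upper bound, not a real timing.
    """
    pixels_per_link = PANEL_PIXELS / links
    link_limit = SINGLE_LINK_PIXEL_CLOCK_HZ / pixels_per_link
    # The panel itself will not update faster than its own cap.
    return min(link_limit, panel_cap_hz)


for links in (1, 2, 4):
    print(links, "link(s):", round(max_refresh_hz(links), 1), "Hz at most")
```

Under these assumptions, only the four-link configuration has enough headroom to reach the panel's own 41 Hz (or 48 Hz) cap; with one or two links the interface, not the panel, is the bottleneck.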

In June 2001, WQUXGA was introduced in the IBM T220 LCD monitor using an LCD panel built by IDTech. LCD displays that support WQUXGA resolution include: IBM T220, IBM T221, Iiyama AQU5611DTBK, ViewSonic VP2290, and IDTech MD22292 (models B0, B1, B2, B5, C0, C2). IDTech was the original equipment manufacturer which sold these monitors to ADTX, IBM, Iiyama, and ViewSonic. Their low refresh rates, 41 Hz and 48 Hz, made them less attractive for many applications.


A common size for LCDs manufactured for small consumer electronics, basic mobile phones and feature phones, typically in a 1.7" to 1.9" diagonal size. This LCD is often used in portrait (128×160) orientation. The unusual 5:4 aspect ratio makes the display slightly different from QQVGA dimensions.

Half the resolution in each dimension as standard VGA. First appeared as a VESA mode (134h=256 color, 135h=Hi-Color) that primarily allowed 80x30 character text with graphics, and should not be confused with CGA (320x200); QVGA is normally used when describing screens on portable devices (PDAs, pocket media players, feature phones, smartphones, etc.). No set colour depth or refresh rate is associated with this standard or those that follow, as it is dependent both on the manufacturing quality of the screen and the capabilities of the attached display driver hardware, and almost always incorporates an LCD panel with no visible line-scanning. However, it would typically be in the 8-to-12 bpp (256–4096 colours) through 18 bpp (262,144 colours) range.

Atari ST line. High resolution monochrome mode using a custom non-interlaced monitor with the slightly lower vertical resolution (in order to be an integer multiple of low and medium resolution and thus utilize the same amount of RAM for the framebuffer) allowing a "flicker free" 71.25 Hz refresh rate, higher even than the highest refresh rate provided by VGA. All machines in the ST series could also use colour or monochrome VGA monitors with a proper cable or physical adapter, and all but the TT could display 640x400 at 71.25 Hz on VGA monitors.

Commodore Amiga line and others (e.g. Acorn Archimedes, Atari Falcon). They used NTSC or PAL-compliant televisions and monochrome, composite video or RGB-component monitors. The interlaced (i or I) mode produced visible flickering of finer details, eventually fixable by use of scan doubler devices and VGA monitors.

The second-generation Macintosh, launched in 1987, came with colour (and greyscale) capability as standard, at two levels, depending on monitor size—512×384 (1/4 of the later XGA standard) on a 12" (4:3) colour or greyscale (monochrome) monitor; 640×480 with a larger (13" or 14") high-resolution monitor (superficially similar to VGA, but at a higher 67 Hz refresh rate)—with 8-bit colour/256 grey shades at the lower resolution, and either 4-bit or 8-bit colour (16/256 grey) in high resolution depending on installed memory (256 or 512 kB), all out of a full 24-bit master palette. The result was equivalent to VGA or even PGC—but with a wide palette—at a point simultaneous with the IBM launch of VGA.

Later, larger monitors (15" and 16") allowed use of an SVGA-like binary-half-megapixel 832×624 resolution (at 75 Hz) that was eventually used as the default setting for the original, late-1990s iMac. Even larger 17" and 19" monitors could attain higher resolutions still, when connected to a suitably capable computer, but apart from the 1152×870 "XGA+" mode discussed further below, Mac resolutions beyond 832×624 tended to fall into line with PC standards, using what were essentially rebadged PC monitors with a different cable connection. Mac models after the II (Power Mac, Quadra, etc.) also allowed at first 16-bit High Colour (65,536, or "Thousands of" colours), and then 24-bit True Colour (16.7M, or "Millions of" colours), but much like PC standards beyond XGA, the increase in colour depth past 8 bpp was not strictly tied to changing resolution standards.

The first PowerBook, released in 1991, replaced the original Mac Portable (basically an original Mac with an LCD, keyboard and trackball in a lunchbox-style shell), and introduced a new 640×400 greyscale screen. This was joined in 1993 with the PowerBook 165c, which kept the same resolution but added colour capability similar to that of Mac II (256 colours from a palette of 16.7 million).

Introduced in 1984 by IBM. A resolution of 640×350 pixels of 16 different colours in 4 bits per pixel (bpp), selectable from a 64-colour palette in 2 bits for each of the red, green, and blue (RGB) components. […] DIP switch options; plus full EGA resolution (and CGA hi-res) in monochrome, if installed memory was insufficient for full colour at above 320×200.

Introduced by IBM on ISA-based PS/2 models in 1987, with reduced cost compared to VGA. MCGA had a 320×200 256-colour (from a 262,144 colour palette) mode, and a 640×480 mode only in monochrome due to 64k video memory, compared to the 256k memory of VGA.

The high-resolution mode introduced by 8514/A became a de facto general standard in a succession of computing and digital-media fields for more than two decades, arguably more so than SVGA, with successive IBM and clone videocards and CRT monitors (a multisync monitor's grade being broadly determinable by whether it could display 1024×768 at all, or show it interlaced, non-interlaced, or "flicker-free"), LCD panels (the standard resolution for 14" and 15" 4:3 desktop monitors, and a whole generation of 11–15" laptops), early plasma and HD ready LCD televisions (albeit at a stretched 16:9 aspect ratio, showing down-scaled material), professional video projectors, and most recently, tablet computers.

An extension to VGA defined by VESA for IBM PC-compatible computers in 1989 meant to take advantage of video cards that exceeded the minimum 256 kB defined in the VGA standard. For instance, one of the early supported modes was 800×600 in 16 colours at a slightly lower 56 Hz refresh rate, leading to 800×600 sometimes being referred to as "SVGA resolution" today.

An IBM display standard introduced in 1990. XGA built on 8514/A's existing 1024×768 mode and added support for "high colour" (65,536 colours, 16 bpp) at 640×480. The second revision ("XGA-2") was a more thorough upgrade, offering higher refresh rates (75 Hz and up, non-interlaced, up to at least 1024×768), improved performance, and a fully programmable display engine capable of almost any resolution within its physical limits. For example, 1280×1024 (5:4) or 1360×1024 (4:3) in 16 colours at 60 Hz, 1056×400 [14h] Text Mode (132×50 characters); 800×600 in 256 or 64k colours; and even as high as 1600×1200 (at a reduced 50 Hz scan rate) with a high-quality multisync monitor (or an otherwise non-standard 960×720 at 60 Hz on a lower-end one capable of high refresh rates at 800×600, but only interlaced mode at 1024×768). Some modes (including 640×480×16 NI and high-res text) were commonly used outside Windows and other hardware-abstracting graphical environments.

A widely used aspect ratio of 5:4 (1.25:1) instead of the more common 4:3 (1.33:1), meaning that even 4:3 pictures and video will appear letterboxed on the narrower 5:4 screens. This is generally the native resolution—with, therefore, square pixels—of standard 17" and 19" LCD monitors. It was often a recommended resolution for 17" and 19" CRTs also, though as they were usually produced in a 4:3 aspect ratio, it either gave non-square pixels or required adjustment to show small vertical borders at each side of the image. Allows 24-bit colour in 4 MB of graphics memory, or 4-bit colour in 640 kB.
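The memory figures quoted above follow directly from width × height × bits per pixel. Here is a small Python sketch of that arithmetic; it assumes the resolution being described is 1280×1024 (the usual 5:4 native resolution of 17" and 19" LCDs, which the paragraph does not state explicitly), and the function name is hypothetical.

```python
def framebuffer_bytes(width, height, bits_per_pixel):
    """Bytes needed to hold one uncompressed frame."""
    return width * height * bits_per_pixel // 8


KIB = 1024
MIB = 1024 * 1024

# Assumption: the 5:4 resolution in question is 1280 x 1024.
true_colour = framebuffer_bytes(1280, 1024, 24)
sixteen_colour = framebuffer_bytes(1280, 1024, 4)

print(true_colour / MIB, "MiB for 24-bit colour")
print(sixteen_colour / KIB, "KiB for 4-bit colour")
```

The 24-bit frame comes to 3.75 MiB, which fits in 4 MB of graphics memory, and the 4-bit frame comes to exactly 640 KiB. The same function gives exactly 9 MiB for a 24-bit 2048×1536 frame.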

An enhanced version of the WXGA format. This display aspect ratio was common in widescreen notebook computers, and many 19" widescreen LCD monitors until ca. 2010.

A wide version of the SXGA+ format, the native resolution for many 22" widescreen LCD monitors, also used in larger, wide-screen notebook computers until ca. 2010.

This display aspect ratio is the native resolution for many 24" widescreen LCD monitors, and is expected to also become a standard resolution for smaller-to-medium-sized wide-aspect tablet computers in the near future (as of 2012).

A wide version of the UXGA format. This display aspect ratio was popular on high-end 15" and 17" widescreen notebook computers, as well as on many 23–27" widescreen LCD monitors, until ca. 2010. It is also a popular resolution for home cinema projectors, besides 1080p, in order to show non-widescreen material slightly taller than widescreen (and therefore also slightly wider than it might otherwise be), and is the highest resolution supported by single-link DVI at standard colour depth and scan rate (i.e., no less than 24 bpp and 60 Hz non-interlaced).

This is the highest resolution that generally can be displayed on analog computer monitors (most CRTs), and the highest resolution that most analogue video cards and other display transmission hardware (cables, switch boxes, signal boosters) are rated for (at 60 Hz refresh). 24-bit colour requires 9 MB of video memory (and transmission bandwidth) for a single frame. It is also the native resolution of medium-to-large latest-generation (2012) standard-aspect tablet computers.

A version of the XGA format, the native resolution for many 30" widescreen LCD monitors. Also, the highest resolution supported by dual-link DVI at a standard colour depth and non-interlaced refresh rate (i.e. at least 24 bpp and 60 Hz). Used on MacBook Pro with Retina display (13.3"). Requires 12 MB of memory/bandwidth for a single frame.

A digital format in testing by NHK in Japan (with a partnership extending to BBC for test coverage of the 2012 London Olympic Games), intended to provide effectively "pixel-less" imagery even on extra-large LCD or projection screens.

The pixel pitch on 30" 2560x1600 monitors is lower than on a 24" monitor. Even though the 30" is bigger, it has about the same ppi as a 21.5" 1920x1080.
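The pixel-density comparison above can be reproduced from the diagonal size and the pixel dimensions. This is a minimal sketch; the `pixels_per_inch` helper is hypothetical, and the 24" monitor is assumed to be a 1920×1200 panel, which the comment above does not specify.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density from pixel dimensions and diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


monitors = [
    ("30-inch 2560x1600", 2560, 1600, 30.0),
    ("24-inch 1920x1200 (assumed resolution)", 1920, 1200, 24.0),
    ("21.5-inch 1920x1080", 1920, 1080, 21.5),
    ("22.2-inch 3840x2400 (IBM T220/T221)", 3840, 2400, 22.2),
]

for name, w, h, d in monitors:
    print(f"{name}: {pixels_per_inch(w, h, d):.1f} ppi")
```

Under these assumptions the 30" panel is denser than the 24" one and within a couple of ppi of the 21.5" 1080p panel, while the 22.2" WQUXGA panel is roughly twice as dense as any of them.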

Retina Display is a brand name used by Apple for its series of IPS LCD and OLED displays that have a higher pixel density than traditional Apple displays. Apple has registered the term "Retina" as a trademark with regard to computers and mobile devices with the United States Patent and Trademark Office and Canadian Intellectual Property Office.

The Retina display has since expanded to most Apple product lines, such as Apple Watch, iPhone, iPod Touch, iPad, iPad Mini, iPad Air, iPad Pro, MacBook, MacBook Air, MacBook Pro, iMac, and Pro Display XDR, some of which have never had a comparable non-Retina display. Apple uses various marketing terms to differentiate between its LCD and OLED displays having various resolutions, contrast levels, color reproduction, or refresh rates. It is known as Liquid Retina display for the iPhone XR, iPad Air 4th Generation, iPad Mini 6th Generation, iPad Pro 3rd Generation and later versions, and Retina 4.5K display for the iMac.

The displays are manufactured worldwide by different suppliers. Currently, the iPad's display comes from Samsung; other suppliers include LG Display and Japan Display Inc. The displays shifted from twisted nematic (TN) liquid-crystal displays (LCDs) to in-plane switching (IPS) LCDs starting with the iPhone 4 models in June 2010.

Reviews of Apple devices with Retina displays have generally been positive on technical grounds, with comments describing it as a considerable improvement on earlier screens and praising Apple for driving third-party application support for high-resolution displays more effectively than on Windows. Although high-resolution displays such as IBM's T220 and T221 had been sold in the past, they had seen little take-up due to their cost of around $8400.


The new iMac’s 5K display has 2880 vertical pixels. That means it contains 14,745,600 pixels in total, the most ever available in a monitor. Amazingly, the previous record holder, IBM’s T220/T221, offered 3,840 x 2,400 resolution back in 2001 (though it arguably was not a consumer-oriented display).

We first heard about panels with 5,120 x 2,880 resolution when Dell revealed its upcoming UltraSharp 27 5K monitor in early September. This announcement was a surprise, because no panel manufacturer had announced the availability of a 5K panel. Enthusiasts were forced to wonder if the design used a single panel, or two panels bound together by firmware. The latter approach was used by early 4K monitors that didn’t work particularly well.

Ms.Josey
