not supported with gsync lcd panel factory

Information on this error message is REALLY sketchy online. Some say that the G-Sync LCD panel is hardwired to the dGPU and that the iGPU is connected to nothing. Some say that the dGPU is connected to the G-Sync LCD through the iGPU. Some say that they got the MUX switch working after a deliberate install order on a clean install: BIOS update first, then iGPU drivers, then dGPU drivers.

I'm suspecting that if I connect an external 60Hz IPS monitor to one of the display ports on the laptop and make it the only display, the Fn+F7 key will actually switch the graphics because the display is not a G-Sync LCD panel. Am I right on this?

If I'm right on this, does that mean that if I purchase this laptop, order a 15-inch Alienware 60Hz IPS screen and swap it with the FHD 120+Hz screen currently inside, I will also continue to have MUX switch support and no G-Sync? The price for these screens is not outrageous.

Please note: Some Adaptive Sync monitors ship with the variable refresh rate setting disabled. Consult the user manual for your monitor to confirm that the Adaptive Sync setting is enabled. Also, some monitors may have the DisplayPort mode set to DisplayPort 1.1 for backwards compatibility. The monitor must be configured for DisplayPort 1.2 or higher to support Adaptive Sync.

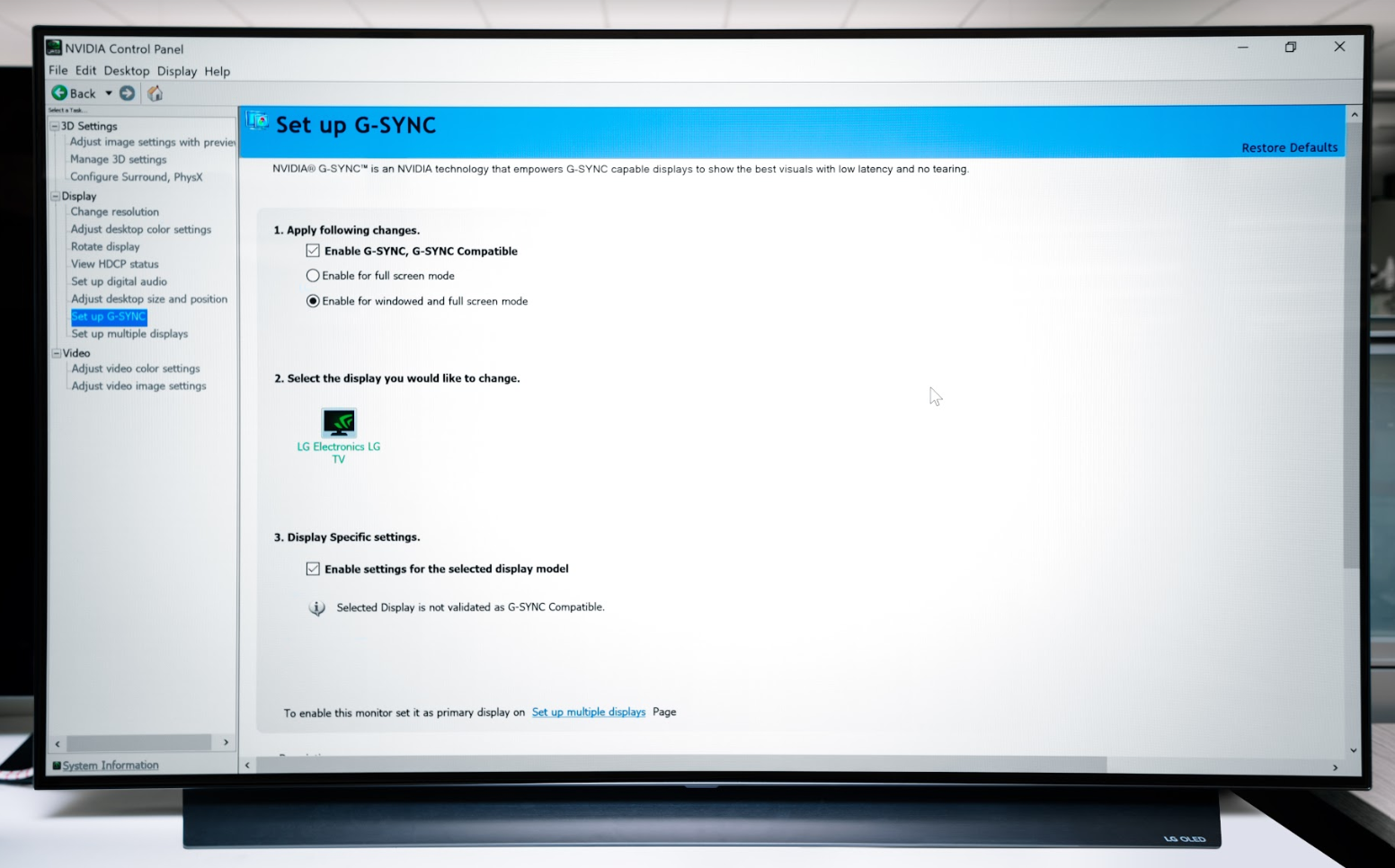

If your Adaptive Sync monitor isn’t listed as a G-SYNC Compatible monitor, you can enable the tech manually from the NVIDIA Control Panel. It may work, it may work partly, or it may not work at all. To give it a try:

3. From within Windows, open the NVIDIA Control Panel -> select "Set up G-SYNC" from the left column -> check the "Enable settings for the selected display model" box, and finally click the Apply button on the bottom right to confirm your settings.

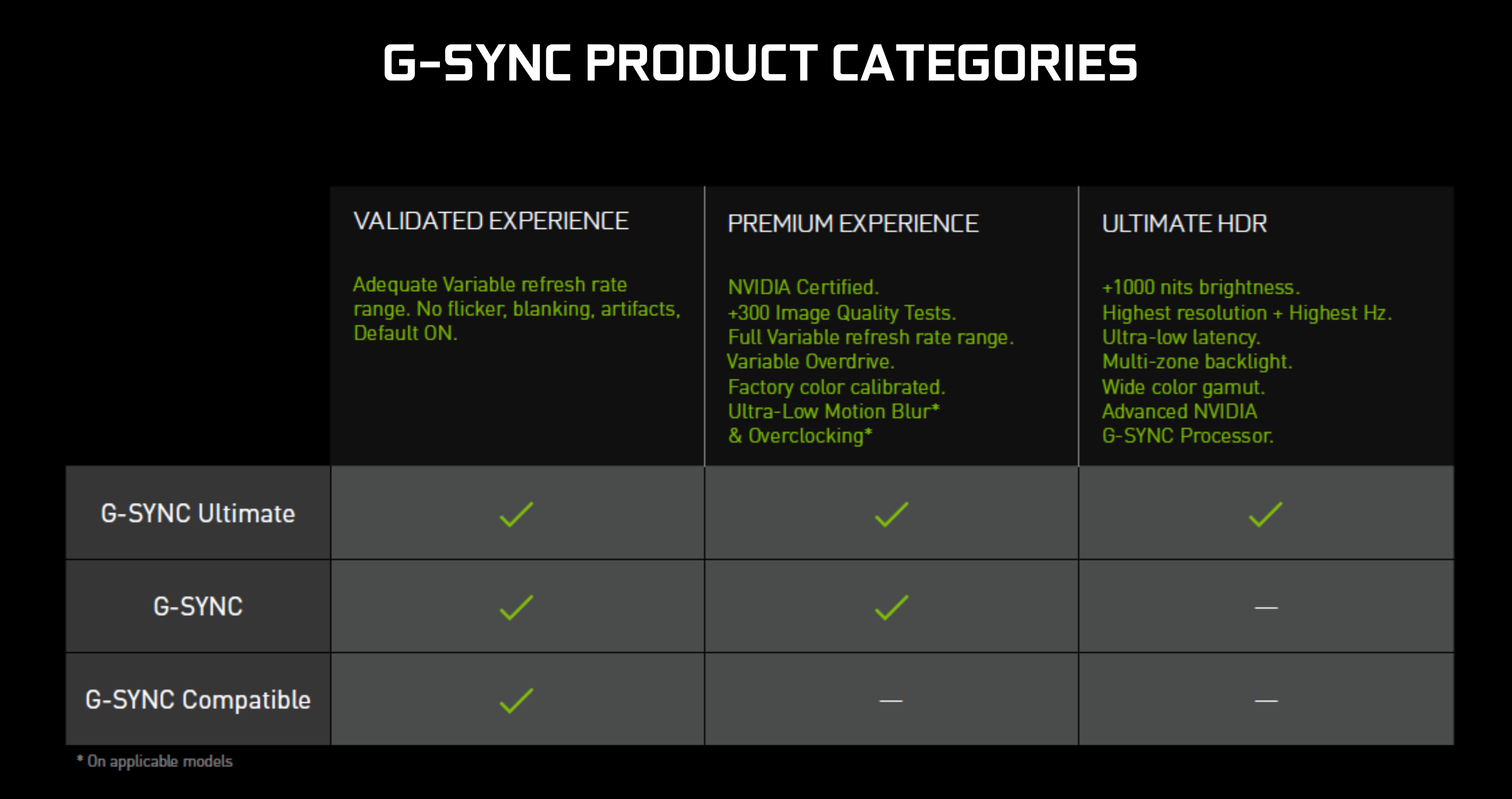

For the best gaming experience we recommend NVIDIA G-SYNC and G-SYNC Ultimate monitors: those with G-SYNC processors that have passed over 300 compatibility and quality tests, and feature a full refresh rate range from 1Hz to the display panel’s max refresh rate, plus other advantages like variable overdrive, refresh rate overclocking, ultra low motion blur display modes, and industry-leading HDR with 1000 nits, full matrix backlight and DCI-P3 color.

If you want smooth gameplay without screen tearing and you want to experience the high frame rates that your Nvidia graphics card is capable of, Nvidia’s G-Sync adaptive sync tech, which unleashes your card’s best performance, is a feature that you’ll want in your next monitor.

To get this feature, you can spend a lot on a monitor with G-Sync built in, like the high-end $1,999 Acer Predator X27, or you can spend less on a FreeSync monitor that has G-Sync compatibility by way of a software update. (As of this writing, there are 15 monitors that support the upgrade.)

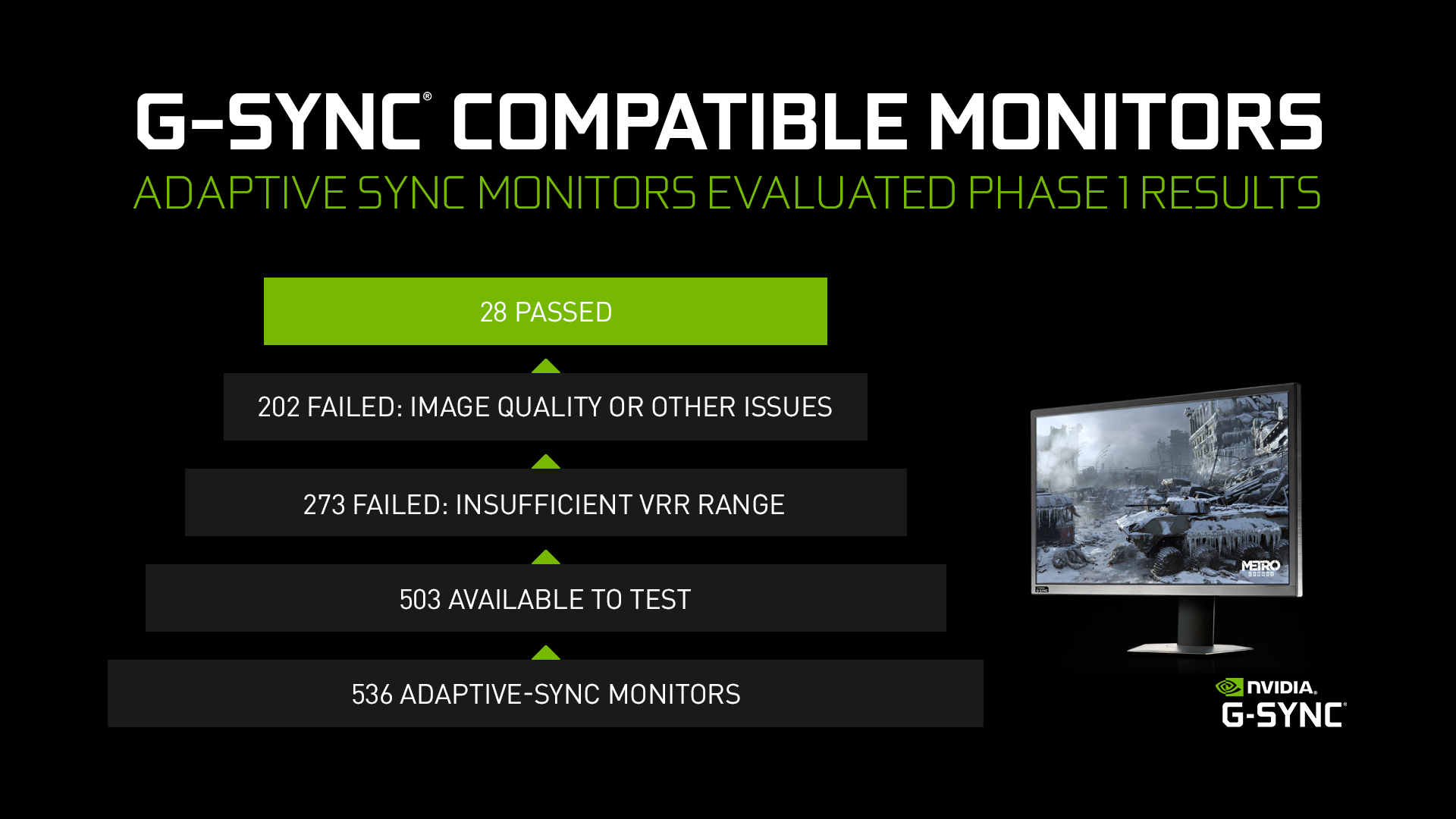

However, there are still hundreds of FreeSync models that will likely never get the feature. According to Nvidia, “not all monitors go through a formal certification process, display panel quality varies, and there may be other issues that prevent gamers from receiving a noticeably improved experience.”

But even if you have an unsupported monitor, it may be possible to turn on G-Sync. You may even have a good experience — at first. I tested G-Sync with two unsupported models, and, unfortunately, the results just weren’t consistent enough to recommend over a supported monitor.

The 32-inch AOC CQ32G1 curved gaming monitor, for example, which is priced at $399, presented no issues when I played Apex Legends and Metro: Exodus, at least at first. Then some flickering started appearing during gameplay, though I hadn’t made any changes to the visual settings. I also tested it with Yakuza 0, which, surprisingly, served up the worst performance, even though it’s the least demanding title that I tested. Whether it was in full-screen or windowed mode, the frame rate was choppy.

Another unsupported monitor, the $550 Asus MG279Q, handled both Metro: Exodus and Forza Horizon 4 without any noticeable issues. (It’s easy to confuse the MG279Q with the Asus MG278Q, which is on Nvidia’s list of supported FreeSync models.) In Nvidia’s G-Sync benchmark, there was significant tearing early on, but, oddly, I couldn’t re-create it.

Before you begin, note that in order to achieve the highest frame rates with or without G-Sync turned on, you’ll need to use a DisplayPort cable. If you’re using a FreeSync monitor, chances are good that it came with one. But if not, they aren’t too expensive.

First, download and install the latest driver for your GPU, either from Nvidia’s website or through the GeForce Experience, Nvidia’s Windows 10 app that can tweak graphics settings on a per-game basis. All of Nvidia’s drivers since mid-January 2019 have included G-Sync support for select FreeSync monitors. Even if you don’t own a supported monitor, you’ll probably be able to toggle G-Sync on once you install the latest driver. Whether it will work well after you do turn the feature on is another question.

Once the driver is installed, open the Nvidia Control Panel. On the side column, you’ll see a new entry: Set up G-Sync. (If you don’t see this setting, switch on FreeSync using your monitor’s on-screen display. If you still don’t see it, you may be out of luck.)

Check the box that says “Enable G-Sync Compatible,” then click “Apply” to activate the settings. (The settings page will inform you that your monitor is not validated by Nvidia for G-Sync. Since you already know that is the case, don’t worry about it.)

Nvidia offers a downloadable G-Sync benchmark, which should quickly let you know if things are working as intended. If G-Sync is active, the animation shouldn’t exhibit any tearing or stuttering. But since you’re using an unsupported monitor, don’t be surprised if you see some iffy results. Next, try out some of your favorite games. If something is wrong, you’ll realize it pretty quickly.

There’s a good resource to check out on Reddit, where its PC community has created a huge list of unsupported FreeSync monitors, documenting each monitor’s pros and cons with G-Sync switched on. These real-world findings are insightful, but what you experience will vary depending on your PC configuration and the games that you play.

At first I thought that maybe I was sent a laptop with a G-Sync display, but when I checked in Device Manager the display is listed as "generic pnp display" with no mention of G-Sync. Yet I can't seem to turn off the GPU, and whenever I press Fn+F7 I get the following message: "not supported with g-sync ips display", even though the display is not a G-Sync display.

There are four different possibilities of results for this test. Each result tells us something different about the monitor, and while native FreeSync monitors can still work with NVIDIA graphics cards, there are a few extra advantages you get with a native G-SYNC monitor too.

No: Some displays simply aren't compatible with NVIDIA's G-SYNC technology, as there's screen tearing. This is becoming increasingly rare, as most monitors at least work with G-SYNC.

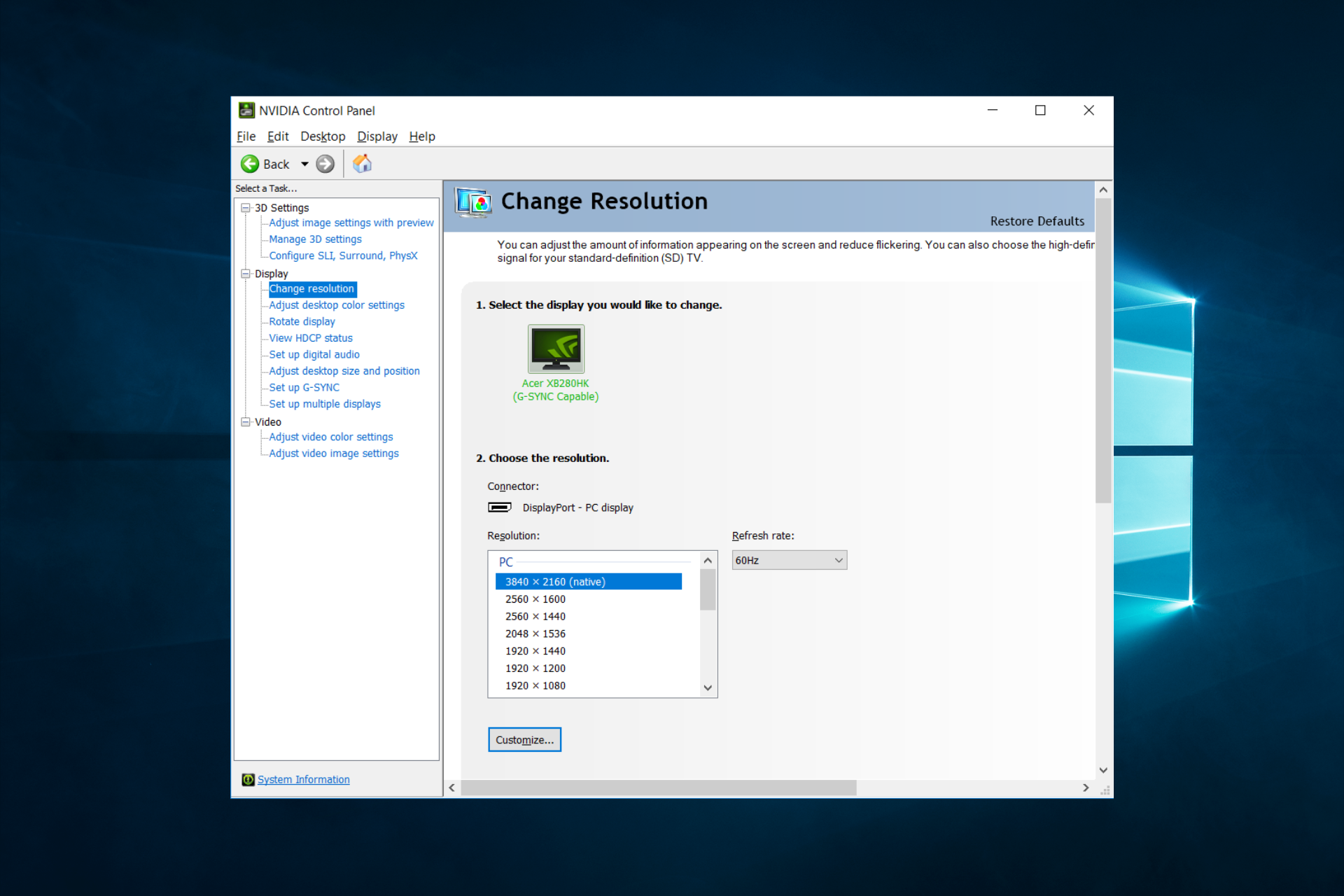

Compatible (NVIDIA Certified): NVIDIA officially certifies some monitors to work with their G-SYNC compatible program, and you can see the full list of certified monitors here. On certified displays, G-SYNC is automatically enabled when connected to at least a 10-series NVIDIA card over DisplayPort. NVIDIA tests them for compatibility issues and only certifies displays that work perfectly out of the box, but they lack the G-SYNC hardware module found on native G-SYNC monitors.

The simplest way to validate that a display is officially G-SYNC compatible is to check the "Set up G-SYNC" menu from the NVIDIA Control Panel. G-SYNC will automatically be enabled for a certified compatible display, and it'll say "G-SYNC Compatible" under the monitor name. Most of the time, this works only over DisplayPort. With newer GPUs, it's also possible to enable G-SYNC over HDMI with a few monitors and TVs, but these are relatively rare.

Compatible (Tested): Monitors that aren't officially certified but still have the same "Enable G-SYNC, G-SYNC Compatible" setting in the NVIDIA Control Panel get "Compatible (Tested)" instead of "NVIDIA Certified". However, you'll see under the monitor name that there isn't a certification here. There isn't a difference in performance between the two sets of monitors, and there could be different reasons why a monitor isn't certified by NVIDIA, including NVIDIA simply not testing it. As long as the VRR support works over its entire refresh rate range, the monitor works with an NVIDIA graphics card.

Yes (Native): Displays that natively support G-SYNC have a few extra features when paired with an NVIDIA graphics card. They can dynamically adjust their overdrive to match the content, ensuring a consistent gaming experience. Some high refresh rate monitors also support the NVIDIA Reflex Latency Analyzer to measure the latency of your entire setup.

Like with certified G-SYNC compatible monitors, G-SYNC is automatically enabled on Native devices. Instead of listing them as G-SYNC Compatible in the "Set up G-SYNC" page, Native monitors are identified as simply "G-SYNC Capable" below the monitor name. We don't specify if it has a standard G-SYNC certification or G-SYNC Ultimate, as both are considered the same for this testing.

For this test, we ensure G-SYNC is enabled from the NVIDIA Control Panel and use the NVIDIA Pendulum Demo to ensure G-SYNC is working correctly. If we have any doubts, we'll check with a few games to ensure it's working with real content.
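The four outcomes described above can be read as a small decision procedure. The sketch below is only an illustration of that reading: the function name `classify_gsync_result` and its two inputs (the label shown under the monitor name, and whether VRR ran without tearing in the demo) are assumptions made for this example, not part of any NVIDIA tool.

```python
def classify_gsync_result(label_under_name, vrr_works):
    """Map what the tester observes to one of the four result categories.

    label_under_name: text shown below the monitor name in "Set up G-SYNC",
                      or None when no such label appears (hypothetical input).
    vrr_works:        True if the Pendulum Demo ran without tearing.
    """
    if not vrr_works:
        # Tearing with G-SYNC switched on means the display is not compatible.
        return "No"
    if label_under_name == "G-SYNC Capable":
        # Native displays are listed as "G-SYNC Capable".
        return "Yes (Native)"
    if label_under_name == "G-SYNC Compatible":
        # Certified displays are listed as "G-SYNC Compatible".
        return "Compatible (NVIDIA Certified)"
    # VRR works, but there is no certification label under the name.
    return "Compatible (Tested)"


print(classify_gsync_result("G-SYNC Compatible", True))
print(classify_gsync_result(None, True))
```

The tearing check comes first because, in the description above, a display that tears is rated "No" regardless of what the Control Panel shows.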

Nvidia built a special collision avoidance feature to avoid the eventuality of a new frame being ready while a duplicate is being drawn on screen (something that could generate lag and/or stutter), in which case the module anticipates the refresh and waits for the next frame to be completed. Overdriving pixels also becomes tricky in a non-fixed refresh scenario, and solutions predicting when the next refresh is going to happen and accordingly adjusting the overdrive value must be implemented and tuned for each panel in order to avoid ghosting.

The module carries all the functional parts. It is based around an Altera Arria V GX family FPGA featuring 156K logic elements, 396 DSP blocks and 67 LVDS channels. It is produced on the TSMC 28LP process and paired with three DDR3L DRAM chips to attain a certain bandwidth, for an aggregate 768MB capacity. The employed FPGA also features an LVDS interface to drive the monitor panel. It is meant to replace common scalers and be easily integrated by monitor manufacturers, who only have to take care of the power delivery circuit board and input connections.

G-Sync faces some criticism because it is proprietary while free alternatives exist, such as the VESA standard Adaptive-Sync, which is an optional feature of DisplayPort version 1.2a. While AMD's FreeSync relies on the above-mentioned optional component of DisplayPort 1.2a, G-Sync requires an Nvidia-made module in place of the usual scaler in the display in order for it to function properly with select Nvidia GeForce graphics cards, such as the ones from the GeForce 10 series (Pascal).

Nvidia announced that G-Sync will be available to notebook manufacturers and that in this case, it would not require a special module since the GPU is directly connected to the display without a scaler in between.

According to Nvidia, fine tuning is still possible given the fact that all notebooks of the same model will have the same LCD panel, variable overdrive will be calculated by shaders running on the GPU, and a form of frame collision avoidance will also be implemented.

At CES 2018 Nvidia announced a line of large gaming monitors built by HP, Asus and Acer with 65-inch panels, 4K, HDR, as well as G-Sync support. The inclusion of G-Sync modules makes the monitors among the first TV-sized displays to feature variable refresh rates.

At CES 2019, Nvidia announced that they will support variable refresh rate monitors with FreeSync technology under a new standard named G-Sync Compatible. All monitors under this new standard have been tested by Nvidia to meet their baseline requirements for variable refresh rate and will enable G-Sync automatically when used with an Nvidia GPU.

It started at CES, nearly 12 months ago. NVIDIA announced GeForce Experience, a software solution to the problem of choosing optimal graphics settings for your PC in the games you play. With console games, the developer has already selected what it believes is the right balance of visual quality and frame rate. On the PC, these decisions are left up to the end user. We’ve seen some games try and solve the problem by limiting the number of available graphical options, but other than that it’s a problem that didn’t see much widespread attention. After all, PC gamers are used to fiddling around with settings - it’s just an expected part of the experience. In an attempt to broaden the PC gaming user base (likely somewhat motivated by a lack of next-gen console wins), NVIDIA came up with GeForce Experience. NVIDIA already tests a huge number of games across a broad range of NVIDIA hardware, so it has a good idea of what the best settings may be for each game/PC combination.

Also at CES 2013 NVIDIA announced Project Shield, later renamed to just Shield. The somewhat odd but surprisingly decent portable Android gaming system served another function: it could be used to play PC games on your TV, streaming directly from your PC.

All of this makes sense after all. With ATI and AMD fully integrated, and Intel finally taking graphics (somewhat) seriously, NVIDIA needs to do a lot more to remain relevant (and dominant) in the industry going forward. Simply putting out good GPUs will only take the company so far.

NVIDIA’s latest attempt is G-Sync, a hardware solution for displays that enables a semi-variable refresh rate driven by a supported NVIDIA graphics card. The premise is pretty simple to understand. Displays and GPUs update content asynchronously by nature. A display panel updates itself at a fixed interval (its refresh rate), usually 60 times per second (60Hz) for the majority of panels. Gaming specific displays might support even higher refresh rates of 120Hz or 144Hz. GPUs on the other hand render frames as quickly as possible, presenting them to the display whenever they’re done.

When you have a frame that arrives in the middle of a refresh, the display ends up drawing parts of multiple frames on the screen at the same time. Drawing parts of multiple frames at the same time can result in visual artifacts, or tears, separating the individual frames. You’ll notice tearing as horizontal lines/artifacts that seem to scroll across the screen. It can be incredibly distracting.
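To make the mechanism concrete, here is a minimal sketch of where a tear would land on screen. It relies on a simplified model that is not from the article: the panel scans out top to bottom at a constant rate across the whole refresh interval (VBLANK is ignored), and a new frame replaces the old one the instant it arrives. The function name `tear_positions` is hypothetical.

```python
def tear_positions(frame_ready_ms, refresh_hz=60.0):
    """Return, for each frame arrival time, how far down the screen the tear sits.

    0.0 is the top edge and values close to 1.0 are near the bottom edge.
    Assumes constant-rate scanout with no blanking period (a simplification).
    """
    interval = 1000.0 / refresh_hz
    positions = []
    for t in frame_ready_ms:
        # Fraction of the current scanout already drawn when the frame arrives.
        fraction = (t % interval) / interval
        positions.append(round(fraction, 3))
    return positions


# Hypothetical GPU delivering 43 frames per second to a fixed 60Hz panel.
arrivals = [i * 1000.0 / 43 for i in range(1, 6)]
print(tear_positions(arrivals))
```

Because 43 does not divide evenly into 60, the arrival point drifts from one refresh to the next, which is why the tear line appears to scroll.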

You can avoid tearing by keeping the GPU and display in sync. Enabling vsync does just this. The GPU will only ship frames off to the display in sync with the panel’s refresh rate. Tearing goes away, but you get a new artifact: stuttering.

Because the content of each frame of a game can vary wildly, the GPU’s frame rate can be similarly variable. Once again we find ourselves in a situation where the GPU wants to present a frame out of sync with the display. With vsync enabled, the GPU will wait to deliver the frame until the next refresh period, resulting in a repeated frame in the interim. This repeated frame manifests itself as stuttering. As long as you have a frame rate that isn’t perfectly aligned with your refresh rate, you’ve got the potential for visible stuttering.
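A small simulation shows how a repeated frame arises. This is a sketch under stated assumptions, not a model of any real driver: frames become visible at the first refresh boundary at or after they are ready, and the function names are made up for this example.

```python
import math


def vsync_present_slots(frame_ready_ms, refresh_hz=60.0):
    """Return the refresh index at which each frame first becomes visible."""
    interval = 1000.0 / refresh_hz
    # The small epsilon keeps frames that land exactly on a boundary in that slot.
    return [math.ceil(t / interval - 1e-9) for t in frame_ready_ms]


def repeated_refreshes(slots):
    """Count refreshes that re-showed an old frame because no new one was ready."""
    repeats = 0
    for previous, current in zip(slots, slots[1:]):
        repeats += max(0, current - previous - 1)
    return repeats


# Hypothetical GPU rendering at a steady 50 fps (one frame every 20 ms)
# on a 60Hz panel (one refresh every 16.7 ms).
ready = [20.0 * i for i in range(1, 11)]
slots = vsync_present_slots(ready)
print(slots)
print(repeated_refreshes(slots))
```

In this run most frames occupy consecutive refreshes, but one refresh has no new frame to show and repeats the previous one. That single long frame among short ones is the stutter.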

G-Sync purports to offer the best of both worlds. Simply put, G-Sync attempts to make the display wait to refresh itself until the GPU is ready with a new frame. No tearing, no stuttering - just buttery smoothness. And of course, only available on NVIDIA GPUs with a G-Sync display. As always, the devil is in the details.

G-Sync is a hardware solution, and in this case the hardware resides inside a G-Sync enabled display. NVIDIA swaps out the display’s scaler for a G-Sync board, leaving the panel and timing controller (TCON) untouched. Despite its physical location in the display chain, the current G-Sync board doesn’t actually feature a hardware scaler. For its intended purpose, the lack of any scaling hardware isn’t a big deal since you’ll have a more than capable GPU driving the panel and handling all scaling duties.

G-Sync works by manipulating the display’s VBLANK (vertical blanking interval). VBLANK is the period of time between the display rasterizing the last line of the current frame and drawing the first line of the next frame. It’s called an interval because during this period of time no screen updates happen, the display remains static displaying the current frame before drawing the next one. VBLANK is a remnant of the CRT days where it was necessary to give the CRTs time to begin scanning at the top of the display once again. The interval remains today in LCD flat panels, although it’s technically unnecessary. The G-Sync module inside the display modifies VBLANK to cause the display to hold the present frame until the GPU is ready to deliver a new one.

With a G-Sync enabled display, when the monitor is done drawing the current frame it waits until the GPU has another one ready for display before starting the next draw process. The delay is controlled purely by playing with the VBLANK interval.

You can only do so much with VBLANK manipulation though. In present implementations the longest NVIDIA can hold a single frame is 33.3ms (30Hz). If the next frame isn’t ready by then, the G-Sync module will tell the display to redraw the last frame. The upper bound is limited by the panel/TCON at this point, with the only G-Sync monitor available today going as high as 6.94ms (144Hz). NVIDIA made it a point to mention that the 144Hz limitation isn’t a G-Sync limit, but a panel limit.
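The two bounds are just frame times, 1000 / Hz, and the redraw rule can be sketched in a few lines. The model below is an assumption made for illustration: it treats each redraw as consuming one full maximum-hold period and ignores the time the redraw itself takes. The function names are hypothetical.

```python
def frame_time_ms(refresh_hz):
    """Duration of one refresh at the given rate, in milliseconds."""
    return 1000.0 / refresh_hz


def gsync_refresh_events(frame_gaps_ms, min_hz=30.0, max_hz=144.0):
    """List what the panel does for each gap between consecutive GPU frames.

    'repeat' means the module redrew the last frame because the hold limit
    expired; 'new' means the next GPU frame was drawn.
    """
    max_hold = frame_time_ms(min_hz)  # longest time one frame can be held
    min_gap = frame_time_ms(max_hz)   # the panel cannot refresh faster than this
    events = []
    for gap in frame_gaps_ms:
        remaining = max(gap, min_gap)
        while remaining > max_hold:
            events.append("repeat")
            remaining -= max_hold
        events.append("new")
    return events


print(round(frame_time_ms(30), 1), round(frame_time_ms(144), 2))
print(gsync_refresh_events([10.0, 25.0, 40.0, 70.0]))
```

Gaps of 10 ms and 25 ms fall inside the window and are shown as they arrive. The 40 ms gap triggers one redraw and the 70 ms gap triggers two.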

The first G-Sync module only supports output over DisplayPort 1.2, though there is nothing technically stopping NVIDIA from adding support for HDMI/DVI in future versions. Similarly, the current G-Sync board doesn’t support audio but NVIDIA claims it could be added in future versions (NVIDIA’s thinking here is that most gamers will want something other than speakers integrated into their displays). The final limitation of the first G-Sync implementation is that it can only connect to displays over LVDS. NVIDIA plans on enabling V-by-One support in the next version of the G-Sync module, although there’s nothing stopping it from enabling eDP support as well.

Enabling G-Sync does have a small but measurable performance impact on frame rate. After the GPU renders a frame with G-Sync enabled, it will start polling the display to see if it’s in a VBLANK period or not to ensure that the GPU won’t scan in the middle of a scan out. The polling takes about 1ms, which translates to a 3 - 5% performance impact compared to v-sync on. NVIDIA is working on eliminating the polling entirely, but for now that’s how it’s done.
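As a rough sanity check on those figures, the sketch below assumes the 1ms poll is simply added to every frame's render time. That serial-cost assumption is mine, not NVIDIA's, and the function name is hypothetical.

```python
def polling_overhead_pct(fps_without_polling, poll_ms=1.0):
    """Percentage of frame rate lost if poll_ms is added to every frame."""
    frame_ms = 1000.0 / fps_without_polling
    fps_with_polling = 1000.0 / (frame_ms + poll_ms)
    return 100.0 * (1.0 - fps_with_polling / fps_without_polling)


for fps in (30, 40, 50):
    print(fps, round(polling_overhead_pct(fps), 2))
```

Under this assumption, a 3 - 5% impact corresponds to frame rates of roughly 30 to 50 fps. The relative cost grows as frames get shorter, since the fixed 1ms becomes a larger share of each frame.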

NVIDIA retrofitted an ASUS VG248QE display with its first generation G-Sync board to demo the technology. The VG248QE is a 144Hz 24” 1080p TN display, a good fit for gamers but not exactly the best looking display in the world. Given its current price point ($250 - $280) and focus on a very high refresh rate, there are bound to be tradeoffs (the lack of an IPS panel being the big one here). Despite NVIDIA’s first choice being a TN display, G-Sync will work just fine with an IPS panel and I’m expecting to see new G-Sync displays announced in the not too distant future. There’s also nothing stopping a display manufacturer from building a 4K G-Sync display. DisplayPort 1.2 is fully supported, so 4K/60Hz is the max you’ll see at this point. That being said, I think it’s far more likely that we’ll see a 2560 x 1440 IPS display with G-Sync rather than a 4K model in the near term.

Naturally I disassembled the VG248QE to get a look at the extent of the modifications to get G-Sync working on the display. Thankfully taking apart the display is rather simple. After unscrewing the VESA mount, I just had to pry the bezel away from the back of the display. With the monitor on its back, I used a flathead screwdriver to begin separating the plastic using the two cutouts at the bottom edge of the display. I then went along the edge of the panel, separating the bezel from the back of the monitor until I unhooked all of the latches. It was really pretty easy to take apart.
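The 4K/60Hz ceiling follows from link bandwidth. The back-of-the-envelope check below assumes DisplayPort 1.2's HBR2 mode (4 lanes at 5.4 Gbit/s each, 8b/10b encoding, so about 17.28 Gbit/s of usable data) and 24 bits per pixel. It ignores blanking overhead, so real requirements are somewhat higher than the numbers it prints. The function names are hypothetical.

```python
DP12_LANES = 4
DP12_LANE_GBPS = 5.4          # HBR2 raw rate per lane
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b line coding


def dp12_usable_gbps():
    """Usable video bandwidth of a 4-lane HBR2 link, in Gbit/s."""
    return DP12_LANES * DP12_LANE_GBPS * ENCODING_EFFICIENCY


def required_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Bandwidth for the active pixels only (blanking intervals not counted)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def fits_dp12(width, height, refresh_hz, bits_per_pixel=24):
    return required_gbps(width, height, refresh_hz, bits_per_pixel) <= dp12_usable_gbps()


for mode in [(3840, 2160, 60), (3840, 2160, 120), (2560, 1440, 144)]:
    print(mode, round(required_gbps(*mode), 2), fits_dp12(*mode))
```

On these assumptions 4K at 60Hz and 2560 x 1440 at 144Hz both fit within the link, while 4K at 120Hz does not.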

Once inside, it’s just a matter of removing some cables and unscrewing a few screws. I’m not sure what the VG248QE looks like normally, but inside the G-Sync modified version the metal cage that’s home to the main PCB is simply taped to the back of the display panel. You can also see that NVIDIA left the speakers intact, there’s just no place for them to connect to.

It’s difficult to buy a computer monitor, graphics card, or laptop without seeing AMD FreeSync and Nvidia G-Sync branding. Both promise smoother, better gaming, and in some cases both appear on the same display. But what do G-Sync and FreeSync do, exactly – and which is better?

Most AMD FreeSync displays can sync with Nvidia graphics hardware, and most G-Sync Compatible displays can sync with AMD graphics hardware. This is unofficial, however.

The first problem is screen tearing. A display without adaptive sync will refresh at its set refresh rate (usually 60Hz, or 60 refreshes per second) no matter what. If the refresh happens to land between two frames, well, tough luck – you’ll see a bit of both. This is screen tearing.

Screen tearing is ugly and easy to notice, especially in 3D games. To fix it, games started to use a technique called V-Sync that locks the framerate of a game to the refresh rate of a display. This fixes screen tearing but also caps the performance of a game. It can also cause uneven frame pacing in some situations.

Adaptive sync is a better solution. A display with adaptive sync can change its refresh rate in response to how fast your graphics card is pumping out frames. If your GPU sends over 43 frames per second, your monitor displays those 43 frames, rather than forcing 60 refreshes per second. Adaptive sync stops screen tearing by preventing the display from refreshing with partial information from multiple frames but, unlike with V-Sync, each frame is shown immediately.

VESA Adaptive Sync is an open standard that any company can use to enable adaptive sync between a device and display. It’s used not only by AMD FreeSync and Nvidia G-Sync Compatible monitors but also other displays, such as HDTVs, that support Adaptive Sync.

AMD FreeSync and Nvidia G-Sync Compatible are so similar, in fact, they’re often cross compatible. A large majority of displays I test with support for either AMD FreeSync or Nvidia G-Sync Compatible will work with graphics hardware from the opposite brand.

This is how all G-Sync displays worked when Nvidia brought the technology to market in 2013. Unlike Nvidia G-Sync Compatible monitors, which often (unofficially) work with AMD Radeon GPUs, G-Sync is unique and proprietary. It only supports adaptive sync with Nvidia graphics hardware.

It’s usually possible to switch sides if you own an AMD FreeSync or Nvidia G-Sync Compatible display. If you buy a G-Sync or G-Sync Ultimate display, however, you’ll have to stick with Nvidia GeForce GPUs. (Here’s our guide to the best graphics cards for PC gaming.)

G-Sync and G-Sync Ultimate support the entire refresh range of a panel – even as low as 1Hz. This is important if you play games that may hit lower frame rates, since Adaptive Sync matches the display refresh rate with the output frame rate.

For example, if you’re playing Cyberpunk 2077 at an average of 30 FPS on a 4K display, that implies a refresh rate of 30Hz – which falls outside the range VESA Adaptive Sync supports. AMD FreeSync and Nvidia G-Sync Compatible may struggle with that, but Nvidia G-Sync and G-Sync Ultimate won’t have a problem.

AMD FreeSync Premium and FreeSync Premium Pro have their own technique of dealing with this situation called Low Framerate Compensation. It repeats frames to double the output such that it falls within a display’s supported refresh rate.
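The frame-repeating idea can be sketched as follows. This is an illustration only: the 48 to 144Hz range is a hypothetical panel, the function name is made up, and the sketch generalises "doubling" to the smallest whole-number multiple that reaches the range, which is an assumption rather than AMD's documented behaviour.

```python
def lfc_refresh(fps, vrr_min_hz, vrr_max_hz):
    """Return (multiplier, refresh_hz) that brings fps inside the VRR range.

    Returns None when no whole-number multiple of fps fits, or when fps is
    already above the range (not a case for frame repetition).
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps > vrr_max_hz:
        return None
    multiplier = 1
    # Show each frame more times until the refresh rate reaches the minimum.
    while fps * multiplier < vrr_min_hz:
        multiplier += 1
    refresh_hz = fps * multiplier
    if refresh_hz > vrr_max_hz:
        return None  # range too narrow for frame repetition to help
    return multiplier, refresh_hz


print(lfc_refresh(30, 48, 144))  # each frame shown twice
print(lfc_refresh(40, 48, 60))   # narrow range: doubling overshoots
```

The second call shows why a wide range matters: on a hypothetical 48 to 60Hz panel, 40 fps cannot be doubled without exceeding the maximum refresh rate.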

Other differences boil down to certification and testing. AMD and Nvidia have their own certification programs that displays must pass to claim official compatibility. This is why not all VESA Adaptive Sync displays claim support for AMD FreeSync and Nvidia G-Sync Compatible.

This is a bunch of nonsense. Neither has anything to do with HDR, though it can be helpful to understand that some level of HDR support is included in those panels. The most common HDR standard, HDR10, is an open standard from the Consumer Technology Association. AMD and Nvidia have no control over it. You don’t need FreeSync or G-Sync to view HDR, either, even on each company’s graphics hardware.

Both standards are plug-and-play with officially compatible displays. Your desktop’s video card will detect that the display is certified and turn on AMD FreeSync or Nvidia G-Sync automatically. You may need to activate the respective adaptive sync technology in your monitor settings, however, though that step is a rarity in modern displays.

Displays that support VESA Adaptive Sync, but are not officially supported by your video card, require you dig into AMD or Nvidia’s driver software and turn on the feature manually. This is a painless process, however – just check the box and save your settings.

AMD FreeSync and Nvidia G-Sync are also available for use with laptop displays. Unsurprisingly, laptops that have a compatible display will be configured to use AMD FreeSync or Nvidia G-Sync from the factory.

A note of caution, however: not all laptops with AMD or Nvidia graphics hardware have a display with Adaptive Sync support. Even some gaming laptops lack this feature. Pay close attention to the specifications.

VESA’s Adaptive Sync is on its way to being the common adaptive sync standard used by the entire display industry. Though not perfect, it’s good enough for most situations, and display companies don’t have to fool around with AMD or Nvidia to support it.

That leaves AMD FreeSync and Nvidia G-Sync searching for a purpose. AMD FreeSync and Nvidia G-Sync Compatible are essentially certification programs that monitor companies can use to slap another badge on a product, though they also ensure out-of-the-box compatibility with supported graphics cards. Nvidia’s G-Sync and G-Sync Ultimate are technically superior, but require proprietary Nvidia hardware that adds to a display’s price. This is why G-Sync and G-Sync Ultimate monitors are becoming less common.

Note: If the Stream HDR video switch was off when you upgraded from version 1809 to version 1903 or later, the Stream HDR video switch won't enable, preventing you from streaming high-dynamic-range (HDR) videos. To work around this issue, see KB4512062, "Stream HDR video" can't be enabled when switched off before upgrading to Windows 10, version 1903 or later.

When shopping for a gaming monitor, you’ll undoubtedly come across a few displays advertising Nvidia’s G-Sync technology. In addition to a hefty price hike, these monitors usually come with gaming-focused features like a fast response time and high refresh rate. To help you know where your money is going, we put together a guide to answer the question: What is G-Sync?

In short, G-Sync is a hardware-based adaptive refresh technology that helps prevent screen tearing and stuttering. With a G-Sync monitor, you’ll notice smoother motion while gaming, even at high refresh rates.

G-Sync is Nvidia’s hardware-based monitor syncing technology. G-Sync mainly solves screen tearing by synchronizing your monitor’s refresh rate with the frames your GPU is pushing out each second.

V-Sync emerged as a solution. This software-based feature essentially forces your GPU to hold frames in its buffer until your monitor is ready to refresh. That solves the screen tearing problem, but it introduces another: Input lag. V-Sync forces your GPU to hold frames it has already rendered, which causes a slight delay between what’s happening in the game and what you see on screen.

Nvidia introduced a hardware-based solution in 2013 called G-Sync. Like VESA’s Adaptive-Sync technology, it enables variable refresh rates on the display side. Instead of forcing your GPU to hold frames, G-Sync forces your monitor to adapt its refresh rate depending on the frames your GPU is rendering. That deals with input lag and screen tearing.

However, Nvidia uses a proprietary board that replaces the typical scaler board, which controls everything within the display like decoding image input, controlling the backlight, and so on. A G-Sync board contains 768MB of DDR3 memory to store the previous frame so that it can be compared to the next incoming frame. It does this to decrease input lag.

With G-Sync active, the monitor becomes a slave to your PC. As the GPU rotates the rendered frame into the primary buffer, the display clears the old image and gets ready to receive the next frame. As the frame rate speeds up and slows down, the display renders each frame accordingly as instructed by your PC. Since the G-Sync board supports variable refresh rates, images are often redrawn at widely varying intervals.

For years, there’s always been one big caveat with G-Sync monitors: You need an Nvidia graphics card. Although you still need an Nvidia GPU to fully take advantage of G-Sync — like the recent RTX 3080 — more recent G-Sync displays support HDMI variable refresh rate under the “G-Sync Compatible” banner (more on that in the next section). That means you can use variable refresh rate with an AMD card, though not Nvidia’s full G-Sync module. Outside of a display with a G-Sync banner, here’s what you need:

For G-Sync Ultimate displays, you’ll need a hefty GeForce GPU to handle HDR visuals at 4K. They’re certainly not cheap, but they provide the best experience.

As for G-Sync Compatible, it’s a newer category. These displays do not include Nvidia’s proprietary G-Sync board, but they do support variable refresh rates. These panels typically fall under AMD’s FreeSync umbrella, which is a competing technology for Radeon-branded GPUs that doesn’t rely on a proprietary scaler board. Nvidia tests these displays to guarantee “no artifacts” when connected to GeForce-branded GPUs. Consider these displays as affordable alternatives to G-Sync and G-Sync Ultimate displays.

Since G-Sync launched in 2013, it has always been specifically for monitors. However, Nvidia is expanding. Last year, Nvidia partnered with LG to certify recent LG OLED TVs as G-Sync Compatible. You’ll need some drivers and firmware to get started, which Nvidia outlines on its site. Here are the currently available TVs that support G-Sync:

FreeSync has more freedom in supported monitor options, and you don’t need extra hardware. So, FreeSync is a budget-friendly alternative to G-Sync compatible hardware. Asus’ MG279Q is around $100 less than the aforementioned ROG Swift monitor.

In addition, users point to a lack of compatibility with Nvidia’s Optimus technology. Optimus, implemented in many laptops, adjusts graphics performance on the fly to provide the necessary power to graphics-intensive programs and optimize battery life. Because the technology relies on an integrated graphics system, frames move to the screen at a set interval, not as they are created as seen with G-Sync. One can purchase an Optimus-capable device or a G-Sync-capable device, but no laptop exists that can do both.

Continue reading to learn about how Adaptive Sync prevents screen tearing and game stuttering for the smoothest gameplay possible.

However, no matter how advanced the specifications are, the monitor’s refresh rate and the graphics card’s frame rate need to be synced. Without that synchronization, gameplay is marred by tears and judder. NVIDIA, AMD, and the standards body VESA have each developed display technologies that sync frame rates and refresh rates to eliminate screen tearing and minimize game stuttering. One such technology is Adaptive Sync.

Traditional monitors tend to refresh their images at a fixed rate. However, when a game requires higher frame rates outside of the set range, especially during fast-motion scenes, the monitor might not be able to keep up with the dramatic increase. The monitor will then show a part of one frame and the next frame at the same time.

As an example, imagine that your game is running at 90 FPS (frames per second) but your monitor’s refresh rate is 60Hz. Your graphics card is then producing 90 updates per second while the display shows only 60. This mismatch leads to split images, almost like a tear across the screen. These lines spoil the viewing experience and hamper gameplay.
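To put rough numbers on the 90 FPS versus 60Hz example, here is a minimal Python sketch. It is an idealized model, not a measurement: it assumes V-Sync is off, frames arrive at perfectly even intervals, the first frame lines up with the first refresh, and a refresh counts as "torn" whenever a new frame arrives partway through it. The function name `torn_refreshes` is made up for this illustration.

```python
from fractions import Fraction


def torn_refreshes(fps: int, refresh_hz: int, seconds: int = 1) -> int:
    """Count refresh cycles in which a new frame arrives mid-scanout.

    Idealized assumption: a frame landing strictly inside a refresh
    interval splits the picture, while a frame landing exactly on a
    refresh boundary does not.
    """
    # Exact arrival time of every frame, in seconds.
    frame_times = [Fraction(k, fps) for k in range(fps * seconds)]
    torn = 0
    for j in range(refresh_hz * seconds):
        start = Fraction(j, refresh_hz)
        end = Fraction(j + 1, refresh_hz)
        if any(start < t < end for t in frame_times):
            torn += 1
    return torn


if __name__ == "__main__":
    print(torn_refreshes(90, 60))  # game faster than the display
    print(torn_refreshes(60, 60))  # game and display in step
```

Under these assumptions, every one of the 60 refreshes in a second is interrupted by a new frame at 90 FPS, while a perfectly aligned 60 FPS source never interrupts a refresh. Real games have uneven frame times, so actual tearing is less regular than this.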

In any game, different scenes produce different framerates. The more effects and details a scene has (such as explosions and smoke), the longer each frame takes to render, so the framerate varies from scene to scene. Since the GPU cannot render every scene at the same framerate, whether graphics-intensive or not, it makes more sense to sync the refresh rate to the framerate instead.

Developed by VESA, Adaptive Sync adjusts the display’s refresh rate on the fly to match the frames the GPU outputs. Every frame is displayed as soon as it is ready, which limits input lag, and no frame is repeated, which avoids game stuttering and screen tearing.

Outside of gaming, Adaptive Sync can also be used to enable seamless video playback at various framerates, anywhere from 23.98 to 60 fps. It changes the monitor’s refresh rate to match the framerate of the video content, thus banishing video stutters and even reducing power consumption.

Unlike V-Sync, which caps your GPU’s frame rate to match your display’s refresh rate, Adaptive Sync dynamically changes the monitor’s refresh rate to follow the framerate the game is actually rendering. This means it not only eliminates screen tearing but also addresses the juddering effect that V-Sync causes when the FPS falls.
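The judder that V-Sync causes when the FPS falls can be shown with a small calculation. The Python sketch below is a simplified model under stated assumptions: V-Sync is treated as plain double buffering, so a finished frame waits for the next refresh boundary, and Adaptive Sync is treated as showing the frame the moment it is ready, clamped to the panel's supported range. The 40 to 144Hz range and both function names are hypothetical, chosen only for illustration.

```python
from fractions import Fraction
from math import ceil


def vsync_interval_ms(render_ms: int, refresh_hz: int) -> Fraction:
    """Time each frame stays on screen with V-Sync on a fixed-rate display.

    The frame can only be shown on a refresh boundary, so its render
    time is rounded up to a whole number of refresh periods.
    """
    period = Fraction(1000, refresh_hz)  # one refresh period in ms
    return ceil(Fraction(render_ms) / period) * period


def adaptive_interval_ms(render_ms: int, min_hz: int, max_hz: int) -> Fraction:
    """Time each frame stays on screen when the display follows the GPU.

    Simplification: outside the supported range the interval is clamped.
    Real displays handle the low end differently.
    """
    shortest = Fraction(1000, max_hz)
    longest = Fraction(1000, min_hz)
    return min(max(Fraction(render_ms), shortest), longest)


if __name__ == "__main__":
    # A frame that takes 20 ms to render (50 FPS worth of work).
    print(float(vsync_interval_ms(20, 60)))         # fixed 60Hz panel with V-Sync
    print(float(adaptive_interval_ms(20, 40, 144)))  # hypothetical 40-144Hz panel
```

In this model a 20 ms frame on a 60Hz V-Sync display is held for two refresh periods (about 33.3 ms, or an effective 30 FPS), whereas the variable refresh display simply refreshes every 20 ms.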

In VESA’s diagram of Adaptive Sync, Display A waits until Render B is completed and ready before updating to Display B. This ensures that each frame is displayed as soon as possible, thus reducing input lag. Frames are not repeated to fill a fixed refresh interval, which avoids game stuttering, and the refresh rate adapts to the rendering framerate, which avoids screen tearing.

NVIDIA G-Sync uses the same principle as Adaptive Sync. But it relies on proprietary hardware that must be built into the display. With the additional hardware and strict regulations enforced by NVIDIA, monitors supporting G-Sync have tighter quality control and are more premium in price.

Both solutions are also hardware bound. If you own a monitor equipped with G-Sync, you will need an NVIDIA graphics card. Likewise, a FreeSync display will require an AMD graphics card. However, AMD has also released the technology for open use as part of the DisplayPort interface. This allows anyone to enjoy FreeSync on competing devices. There are also G-Sync Compatible monitors available on the market to pair with an NVIDIA GPU.

Back at CES 2019, when NVIDIA announced the new RTX 2060 graphics card, NVIDIA CEO Jensen Huang also announced support for “G-Sync Compatible” monitors. This means that you can now enable the G-Sync feature even if you are using a FreeSync monitor, provided that you have a Pascal-based or newer graphics card. That’s right, this support only works for graphics cards from the GTX 10 series up to the latest RTX 30 series, and you will need GeForce driver 417.71 or later. Check out the list of FreeSync monitors that are compatible with G-Sync below.

According to NVIDIA, it has tested hundreds of different monitors and only a few of them have passed without any issues. Below are the FreeSync monitors that are certified to be compatible with NVIDIA’s G-Sync.

Note: We try to be as accurate as possible when collecting the data above. But please double-check since there may be changes or revisions on the monitor. Also, I noticed that the specs listed by retail stores may be slightly different from the manufacturer’s page.

I was a little bit disappointed when I saw the initial list since most of the monitors are based on TN panels. True, they are fast and have very low response times, but they don’t have good viewing angles and their colors are not that great compared to IPS or VA panels. However, fast forward to 2021, I can see that there are now more IPS panels on the list. And some of these (gaming) monitors are excellent.

If you are currently using one of the monitors listed above, the G-Sync feature should be enabled automatically once driver version 417.71 has been installed. Those who are using FreeSync monitors that are not listed above (non-certified) may also enable G-Sync manually via the NVIDIA Control Panel.

Do note that non-certified monitors may encounter varying issues, like flickering, blurring and the like. However, several users have confirmed that their non-certified FreeSync monitor works well with G-Sync enabled.
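The rules described above (driver 417.71 or later, automatic for certified monitors, manual for the rest) can be summarized in a short Python sketch. This only encodes what the text says. It does not query any real driver or NVIDIA API, it does not check the GPU generation, and the function names and return strings are invented for this illustration.

```python
MIN_DRIVER = (417, 71)  # first driver version named in the text


def parse_driver_version(version: str) -> tuple:
    """Turn a version string such as "417.71" into (417, 71).

    Comparing tuples of integers avoids the pitfalls of comparing
    version strings or floats directly.
    """
    major, minor = version.split(".")
    return int(major), int(minor)


def gsync_enablement(driver_version: str, monitor_certified: bool) -> str:
    """Describe what to expect on a FreeSync monitor, per the text above."""
    if parse_driver_version(driver_version) < MIN_DRIVER:
        return "update driver"
    # Certified monitors are switched on automatically; others need
    # the manual toggle in the NVIDIA Control Panel and may misbehave.
    return "automatic" if monitor_certified else "manual"


if __name__ == "__main__":
    print(gsync_enablement("417.71", monitor_certified=True))
    print(gsync_enablement("461.40", monitor_certified=False))
    print(gsync_enablement("417.35", monitor_certified=True))
```

A "manual" result here only means the option can be tried. As noted, non-certified monitors may still flicker or blur once it is enabled.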

The list of certified FreeSync monitors compatible with G-Sync seems pretty underwhelming, given that there are far more FreeSync monitors than G-Sync monitors available on the market today.

UPDATE: MSI has listed some of their FreeSync monitors that are compatible with G-Sync as well. However, note that NVIDIA has not officially included MSI’s monitors on its list of certified G-Sync Compatible monitors. I have the MSI Optix MPG27CQ. It’s not certified, but when I enable G-Sync, it does seem to work. I have only tried it with Battlefield V and haven’t tested it thoroughly.

Users from the NVIDIA subreddit have created a Google sheet where they share their experiences with their current FreeSync monitors. Some non-certified FreeSync monitors on that list are reported to work just fine with G-Sync. Others have issues, and some needed to be “tweaked” a little bit to get G-Sync working. You can check out the sheet here.

Ms.Josey
