diy programmed oculus rift dk1 screen 7 lcd panel made in china


I have wanted virtual reality ever since I watched "The Lawnmower Man" as a kid. My first attempt at virtual reality was the VictorMaxx Stuntmaster in the 1990s, LOL, yeah, it was bad. Next was Elsa wired shutter glasses, an Nvidia card, and a CRT monitor in the early 2000s. That worked well but gave me headaches and did not play well with my prescription glasses. After that I gave up: everything that worked well was too costly, and everything that was cheap was not worth using. But then, not too long ago, I kept hearing about the Oculus Rift, and I wanted one. Even at $350.00 it's a little too costly for me to buy unless I save up for a few months, so I decided to try DIY and keep the cost low, around $100.

I put in an order for an Air mouse today; it should be here later this week. I found out that when using Vireio Perception, the Oculus Rift settings seem to work better than plain side by side, because the Oculus Rift settings let you see more of the game. One problem I have is that some of the hot glue holding the LCD to the Plexiglass has come loose, but it should be an easy fix. The Plexiglass may not have been clean enough, or it might be too slick. I will clean the Plexiglass with alcohol and then rough it up a bit with sandpaper before hot gluing it again.

I have been looking for demos or anything to play with and have been pretty disappointed. Most seem to not want to work or go into VR mode because they fail to detect the Oculus Rift.

I then covered the screen with the piece of sheet protector, at first it was hard to notice a change, but when playing a real video like one of the Oculus Rift GoPro movies on YouTube the image is improved, it is a very small improvement, but I like it, reminds me of an old 1980s CRT TV, LOL.

I used the file "FoculusEDID1.dat", and my screen went dead. I was able to re-flash with the one I made up, but it kinda scared me, LOL. ALWAYS MAKE A BACKUP. Later this week I am going to make up another EDID that works just for my LCD and see what it does, instead of just using a copy of the Oculus Rift EDID. I am thinking all you need is to change a few values to fool the Oculus Rift software, and leave everything else alone in your original EDID.
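Here is a rough Python sketch of the "change a few values and leave the rest alone" idea. It relies only on the standard 128-byte EDID base block layout: a fixed 8-byte header, the manufacturer ID in bytes 8-9, the product code in bytes 10-11, and a checksum in byte 127 that makes all 128 bytes sum to 0 modulo 256. The function names are made up for illustration, and the idea that the Rift software keys on the "OVR" manufacturer ID and product code 1 is an assumption, not something confirmed here.

```python
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])


def checksum_ok(block: bytes) -> bool:
    """True if this looks like a valid 128-byte EDID base block."""
    return (
        len(block) == 128
        and block[:8] == EDID_HEADER
        and sum(block) % 256 == 0
    )


def decode_manufacturer(block: bytes) -> str:
    """Decode the 3-letter manufacturer ID packed into bytes 8-9."""
    word = (block[8] << 8) | block[9]
    return "".join(
        chr(((word >> shift) & 0x1F) + ord("A") - 1) for shift in (10, 5, 0)
    )


def patch_identity(block: bytes, manufacturer: str, product_code: int) -> bytes:
    """Return a copy with a new manufacturer ID and product code.

    Everything else (timings, color data) is left untouched; only the
    checksum byte is recomputed so the block stays valid.
    """
    if len(block) != 128 or block[:8] != EDID_HEADER:
        raise ValueError("not a 128-byte EDID base block")
    letters = manufacturer.upper()
    if len(letters) != 3 or not all("A" <= c <= "Z" for c in letters):
        raise ValueError("manufacturer ID must be three letters A-Z")
    word = 0
    for ch in letters:
        word = (word << 5) | (ord(ch) - ord("A") + 1)
    out = bytearray(block)
    out[8], out[9] = word >> 8, word & 0xFF                 # big-endian ID
    out[10], out[11] = product_code & 0xFF, (product_code >> 8) & 0xFF
    out[127] = (-sum(out[:127])) % 256                      # fix checksum
    return bytes(out)
```

Typical use would be to read the backup dump of your own LCD's EDID, call `patch_identity(original, "OVR", 1)` (hypothetical values), and confirm `checksum_ok()` on the result before flashing anything.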

But then I ran into a problem: my LCD would not stay programmed with my custom EDID. Every time I cut power to the LCD it would revert back to the factory EDID, which BTW was not even correct for the LCD.

I like my DIY Rift a lot, but it's got a few shortcomings: it's heavy, and it's low resolution. Playing older games like Unreal and Quake is amazing, but trying to watch video is just awful, and some games like GTA San Andreas don't fare so well with the low resolution. It's a mixed bag.

I think there is a place for a cheap low resolution version of the Rift, and I know it can be made at the $100-$150 price range because I made one, the question is how low a resolution can you go and it still be usable, I think the 1024x600 LCD I use is the bottom limit, and I think 1280x720 or 1280x800 is the lowest "acceptable" resolution for something factory made.

I would have loved to get one, but my credit card will not work for overseas orders. I can buy stuff through Amazon that's from China, though I'm not too happy about the month-long or longer shipping. I got that $50 screen with only two days of shipping, and that's why I got it: it was quick and cheap. Later I might upgrade to a better screen, but I kinda want to wait for the consumer version of the Oculus Rift. I hope it comes out at the end of the year, or sooner, but I think I remember something about 2015.

It's got a lot more to it than the DIY EDID spoofer I made, LOL. I am using it right now. Unfortunately it does not seem to like being programmed from the computer, and it would not copy the EDID from the DIY EDID spoofer; maybe it needs something I don't know about to work with the EDID spoofer. It will copy the EDID from a monitor with no problem, so I was able to program my LCD with the modified EDID and then use the ConnectPRO to copy that EDID.

The ConnectPRO does help me because it's better made than my EDID spoofer, and unlike my LCD, which loses the custom EDID after it loses power, the ConnectPRO will store the EDID until you press its DDC button. I just wish it was easier to program with a custom EDID. I may keep an old junk LCD around just to flash with my custom EDID so the ConnectPRO has something to copy it from, LOL. Another option "might" be that the EDID chip on the ConnectPRO could be programmed directly with something like PonyProg; I did see a 24LC02B on the bottom of the ConnectPRO.

The only thing I dislike is that it came unprogrammed: it does not have any EDID stored and will not work out of the box on a computer. You will have to program it using something like this:

I printed a second copy of the 3D printed parts because I had modified the original parts too much and also used some more aluminum carpet trim, BTW I got an 8 foot long piece of aluminum carpet trim for $7, I looked at flat aluminum stock, it was double the price.

After using it a little, it's much better, but the screen door problem is back. It's something I can deal with, but I do wonder if I should have applied some laminating pouch to the LCD before I put it together; I worry that removing the screws from the 3D printed parts too many times will strip the holes out. It's a large improvement over the 1024x600 LCD, and videos are just good enough to watch now with the 1280x800 LCD. I wish I had started with the better LCD.

Well, it's not 100% better. Side by side, I went from 512x600 for each eye to 640x800 for each eye, which is about 67% more pixels, and it feels about 50% to 60% better. It seems strange to me that just a few more pixels make that huge of a difference. BUT there is a difference in the LCDs: the new LCD seems to have more LCD ghosting, and it also has "some" rainbow effect on different objects and text, so it's not perfect.
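To put numbers on the upgrade, here is a small Python sketch of the per-eye arithmetic. It assumes plain side-by-side rendering, where the panel is split down the middle so each eye gets half the width and the full height; the function names are just for illustration.

```python
def per_eye(width: int, height: int) -> tuple[int, int]:
    """Per-eye resolution for a side-by-side split of one panel."""
    return width // 2, height


def pixel_gain_percent(old: tuple[int, int], new: tuple[int, int]) -> float:
    """Percentage increase in pixel count going from `old` to `new`."""
    old_pixels = old[0] * old[1]
    new_pixels = new[0] * new[1]
    return (new_pixels - old_pixels) / old_pixels * 100.0


old_eye = per_eye(1024, 600)   # first LCD
new_eye = per_eye(1280, 800)   # replacement LCD
print(old_eye, new_eye, round(pixel_gain_percent(old_eye, new_eye), 1))
```

The gain comes out the same whether you compare whole panels or single eyes, since both panels are split the same way.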

I have tried many more games, and the LCD ghosting only seems to be in a few games, not in all of them. The rainbow effect on bright white text, though, I have seen in most games. Not sure what's going on; it might just be software. Also, some games work well with head tracking, while others seem to have a drift or always seem to have a lean, maybe another software problem.

Could you explain why the EDID needs to be edited? Do you have to do the above steps, or can you just flash the bin file straight from the DK1 to your EEPROM? Is this just to ensure maximum compatibility?

The 1024x600 LCD I first used needed different settings in the EDID than the Oculus Rift EDID has. If I had used the unedited Oculus Rift EDID on the 1024x600 LCD, it would have had the wrong scaling and refresh rate, colors would have been off, stuff like that. The 1024x600 LCD will not work properly with the unedited Oculus Rift EDID.
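If you want to see what resolution and refresh rate an EDID actually advertises before flashing it, the first detailed timing descriptor (18 bytes starting at offset 54 of the base block) can be decoded by hand. This is a minimal Python sketch based on the standard EDID layout; the function name and the returned dictionary keys are my own choices, and it only reads the fields needed for resolution and refresh rate.

```python
def decode_detailed_timing(dtd: bytes) -> dict:
    """Decode resolution and refresh rate from an 18-byte detailed timing descriptor."""
    if len(dtd) != 18:
        raise ValueError("a detailed timing descriptor is 18 bytes")
    # Pixel clock is stored little-endian in units of 10 kHz.
    pixel_clock_hz = int.from_bytes(dtd[0:2], "little") * 10_000
    if pixel_clock_hz == 0:
        raise ValueError("descriptor is not a timing descriptor")
    # Each 12-bit value has its low 8 bits in one byte and its
    # high 4 bits in a nibble of a shared byte.
    h_active = dtd[2] | ((dtd[4] & 0xF0) << 4)
    h_blank = dtd[3] | ((dtd[4] & 0x0F) << 8)
    v_active = dtd[5] | ((dtd[7] & 0xF0) << 4)
    v_blank = dtd[6] | ((dtd[7] & 0x0F) << 8)
    refresh_hz = pixel_clock_hz / ((h_active + h_blank) * (v_active + v_blank))
    return {
        "width": h_active,
        "height": v_active,
        "pixel_clock_hz": pixel_clock_hz,
        "refresh_hz": refresh_hz,
    }
```

For a full dump you would call it as `decode_detailed_timing(edid[54:72])`. If the width and height do not match your panel's native resolution, that is a hint the EDID needs editing for that LCD.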

So the N070ICG-LD1 LCD may not be an exact match, but it's close. I still may have to edit the EDID later, like the color settings or timings, but for now the N070ICG-LD1 seems to work well with the Oculus Rift EDID.

Something I want to add: the RX-S702 / TKH702S automotive monitor I got the 1024x600 aa0700023001 LCD and controller from had the wrong EDID from the factory. It was using an EDID from a regular desktop monitor, but it worked; scaling was pretty good and colors were nice. So you don't always need the correct or perfect EDID, just one that works.

It's interesting that that LCD is so much cheaper than the de facto one used for the DIY Rifts. Even without the controller board the N070ICG-LD1 LCD comes out to around 50. I wonder if anyone has done a build with it?

When looking at it I understand that the HDMI pin 17 goes straight to A0, A1, A2, and VSS, pin 16 goes to SDA and VCC with the 5 V coming from the USB to it with a 47K resistor connecting it to the cable coming from SCL and then also has another resistor between SDA and VCC. Pin 15 goes to SCL and then is also connected to the cable coming from pin 16 with the resistor. Right? Also why does the box mention pin 18 for 5 V if it's not connected to the spoofer circuit?

Here is the image I found back in the mid 2000s, using a 24LC21 chip for a Sony PSone LCD, it might help you understand a little better, back then people were trying to use the Sony PSone LCD in cars, for computers and video projectors, but it was not designed for that, so people hacked it

But then I ran into problems. First, 75Hz was giving me discoloration, but 70Hz worked just fine. The second problem was that my main display would only do 60Hz at 1280×800, and for most stuff I clone the displays; when you clone displays it uses the lowest refresh rate, so I was back to 60Hz. Then I tried Steam. The Steam games like Half-Life 2 use an extended display setup for VR, showing the game on the extended display, not the main desktop, but Steam kept switching displays in VR mode, showing the desktop in my DIY HMD, or a blank screen. When I tried running Half-Life 2 and selecting VR mode in game, it said the VR hardware was not detected, but the Oculus Rift configuration utility shows everything plugged in. Not sure what the problem is.

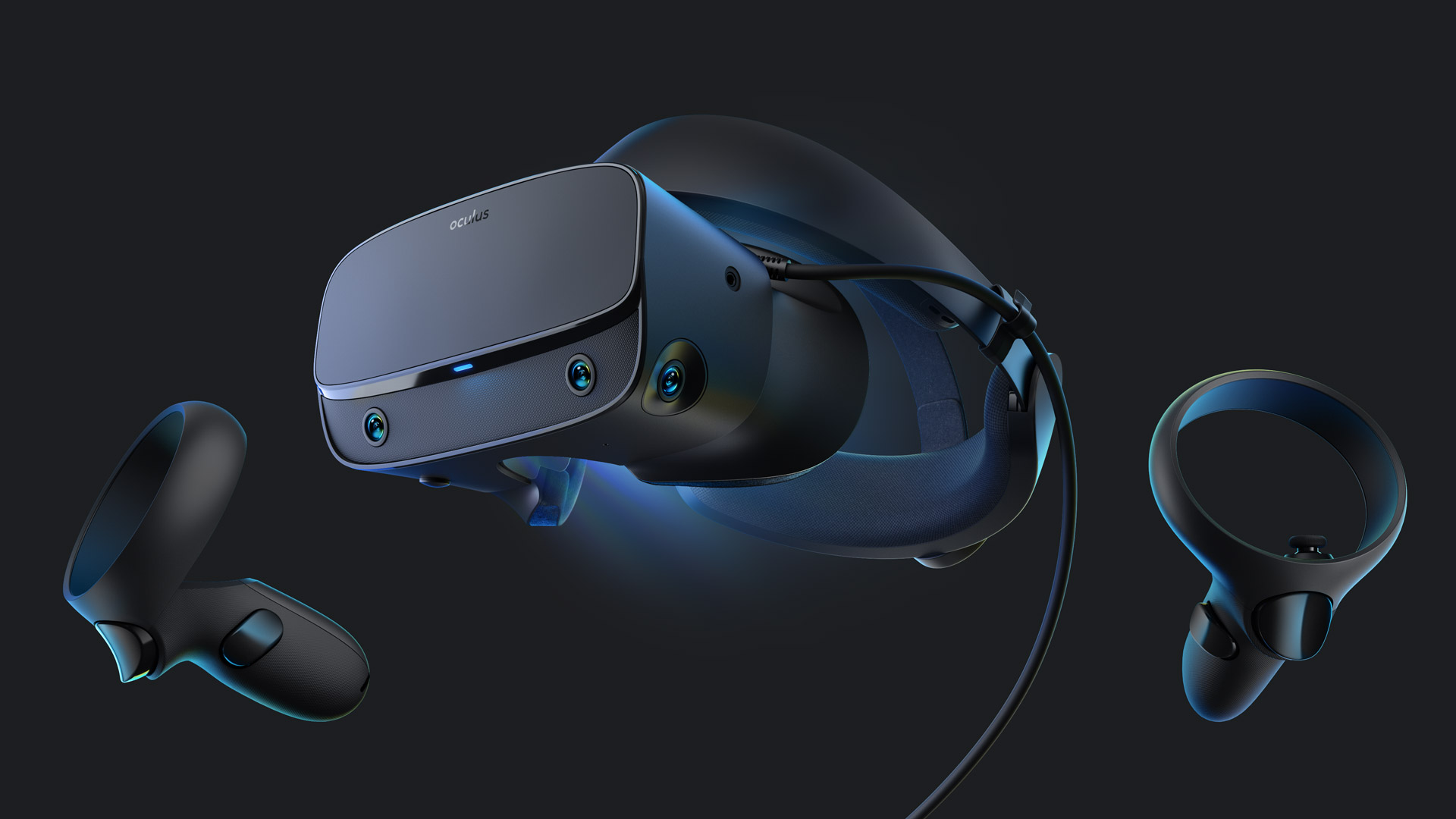

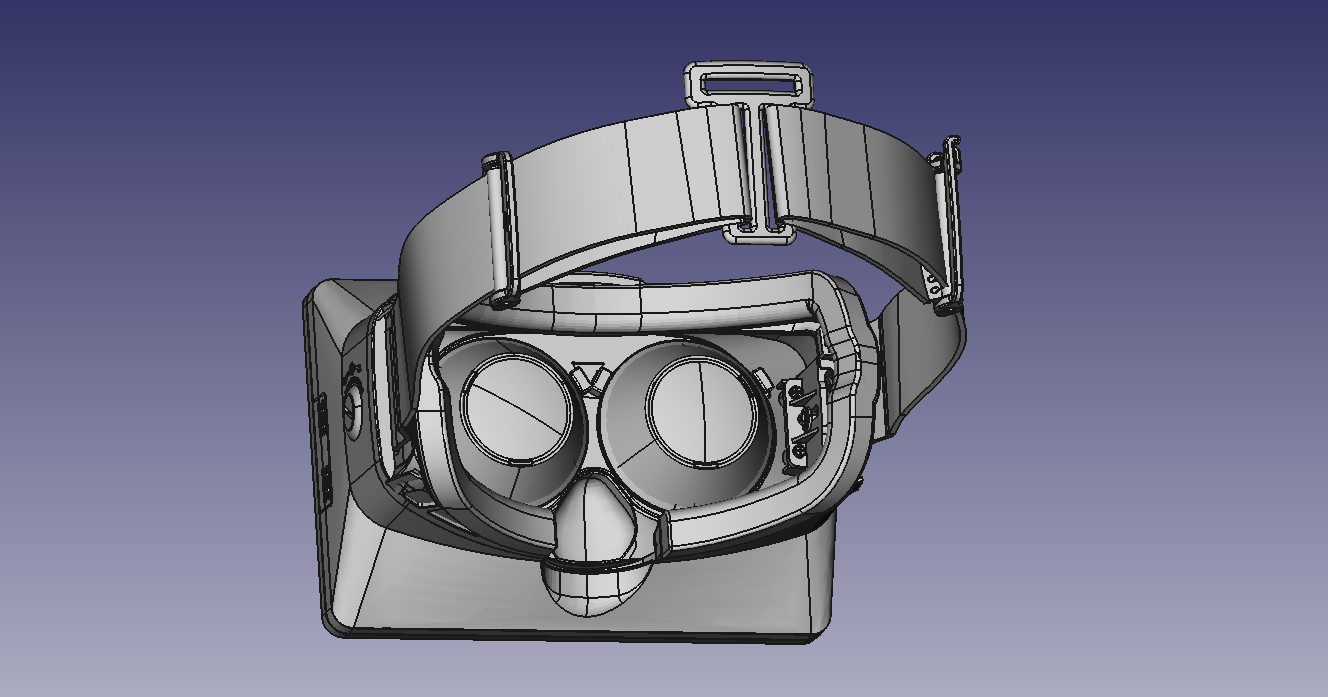

During a session at Oculus Connect, the company released the totality of the Oculus Rift DK1 plans to the public. Under an open-source license, anyone can now freely download the blueprints of the company’s first VR headset and even manufacture their own.

At Oculus Connect, during a session called Building the First Rift Development Kit, Oculus engineer Nirav Patel spoke about the challenges associated with the company’s first VR headset, the DK1.

At the end of the session, Patel, on stage in front of the audience, logged into the Oculus GitHub account to hit the publish button on the repository containing the essential elements of the DK1, including firmware, circuit board and mechanical designs, and the accompanying documentation.

“I can’t wait to see all of the Chinese knockoffs… God help us all,” Oculus CEO Brendan Iribe teased during his keynote presentation the following day. “But we do believe that this is important. It’s important for the hackers and the makers out there to be able to pick this up, take that tracker, glue it on to different things… take some source code, change it, modify it, ship it, make something awesome,” he continued.

While backers of the Oculus Rift Kickstarter ended up with what looked to be a polished DK1 development kit, the at-the-time 10 person company had little experience in manufacturing and shipping a hardware product. Patel, a former Apple software engineer, elaborated on the manufacturing process and its challenges during his session.

Speed was a major concern. The company aimed to have the DK1 shipped some five months after their 2012 Kickstarter. “Our initial ship date was December, but this turned out to be incredibly unrealistic. Even our later date was really aggressive,” said Patel.

With a design that lacked important specifications, like the type of foam that should pad the unit from the user’s face, Oculus made several trips to China to work directly with their manufacturer to make changes and evolve the design into something that could be mass produced.

Oculus had to find creative solutions to challenging problems. They found that they couldn’t send the expensive rate tables, a spinning apparatus used to calibrate trackers, to China—perhaps because the trackers often calibrated with such tables find their way into military equipment. Instead, Oculus rigged up some turntables to be computer-controlled and used them in the calibration process.

The young company also had to deal with a major change while working on the manufacturing process. The 5-inch screen to be used was discontinued and they had to scramble to find an alternative. They ended up with a 7-inch display which meant making adjustments to the design of the DK1.

“We had this mad rush to switch to a 7-inch panel… it meant that the panel didn’t match the rest of the design, including the optics. But it was a tradeoff we made for the sake of getting units out the door and content made as quickly as possible,” said Patel.

For now, leave the factory protective plastic cover on. On the backside you will find the only connector you need to be aware of, a mini-LVDS female that is used both for input and power supply (highlighted in red). Be very careful as you manipulate the screen because it is rather fragile and you don’t want to end up with a cracked panel like I did.

Next, let’s inspect the controller board. The board pictured here is an NT68674.5X. Using this exact model isn’t a requirement as long as you have a datasheet for the board you bought (ask the seller).

Connecting the board to the display will either be the easiest step in building your HMD or the hardest. To make it easy you should buy the LCD screen and the controller board from the same seller and ask them for the proper LVDS cable. I am going to assume that is what you did, if not I recommend you reach out to someone with an electronics background and plenty of soldering experience to help you build this cable.

Be very careful when connecting the LVDS cable to the screen. The male connector is very small and it is hard to tell which side is up. Try it both ways carefully – it should not take much force to slide it in the proper way.

If everything is in order, Windows should immediately recognize your assembled components as an external monitor. Next you need to run a few tests to make sure your LVDS cable isn’t noisy. A well-made LVDS cable has a few specific pairs twisted together to reduce noise. However, most cables you will find on eBay are not properly twisted, which can lead to visual artifacts. To make sure your cable is good, load several different images on your LCD screen. The more images you try, the better, because sometimes the artifacts only show when a specific color is displayed.
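Since some artifacts only appear with specific colors, one simple approach is to cycle through full-screen solid colors. The following Python sketch generates such test images as binary PPM files using only the standard library. The color list, the file names, and the default 1280x800 size are assumptions for illustration; use your panel's native resolution and any image viewer that can show PPM files full screen.

```python
from pathlib import Path

# Hypothetical test set: primaries plus black, white, and mid gray.
TEST_COLORS = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "red": (255, 0, 0),
    "green": (0, 255, 0),
    "blue": (0, 0, 255),
    "gray": (128, 128, 128),
}


def solid_ppm(width: int, height: int, rgb: tuple[int, int, int]) -> bytes:
    """Build a binary PPM (P6) image filled with a single color."""
    header = f"P6\n{width} {height}\n255\n".encode("ascii")
    return header + bytes(rgb) * (width * height)


def write_test_images(directory: str, width: int = 1280, height: int = 800) -> list[Path]:
    """Write one solid-color PPM per entry in TEST_COLORS and return the paths."""
    out_dir = Path(directory)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for name, rgb in TEST_COLORS.items():
        path = out_dir / f"lvds_test_{name}.ppm"
        path.write_bytes(solid_ppm(width, height, rgb))
        paths.append(path)
    return paths
```

Calling `write_test_images("lvds_test")` writes six files into a `lvds_test` folder. Sparkling pixels or colored speckles on one color but not the others would point to a noisy pair in the cable.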

There are lots of reasons that someone might want a head-mounted display. Camera operators and radio-controlled vehicle enthusiasts typically like these because they keep the sun off of their screen while working outdoors. Aside from those practical purposes, strapping a high-definition display to your head is just cool. Add some motion sensors to that and you’ve got a homemade Oculus Rift Virtual Reality display!

The electronics involved are fairly simple, consisting of a screen, a 9-DOF IMU board, and an Arduino. You can find the schematics and code on their site.

This article first appeared on USgamer, a partner publication of VG247. Some content, such as this article, has been migrated to VG247 for posterity after USgamer's closure - but it has not been edited or further vetted by the VG247 team.

The former Vice President of Engineering at Oculus, McCauley was instrumental in the creation of the first two Oculus Rift development kits. The Rift is out now, but McCauley is moving forward. He's currently working on a new VR tracking system, which he hopes will solve many of the platform's issues surrounding motion sickness.

I recently had a chance to talk with McCauley about his time with Oculus, the circumstances around his departure, VR's gaming potential, and what VR will look like in the future. Here's what he had to say.

JM: Right, but I lack the ability to distinguish between buzz and hype and reality, and so I've been burned so many times, now I just wait, until I can get it in my hands. I wasn't a VR guy, I was a videogame guy, and virtual reality and stereopsis tried to do that for years, and it just wasn't there because the cell phone screens that we can use now were not high enough pixel density. It was not a good experience.

I thought that it was profound enough, and the technology that was being used, which came from mobile phones. These little sensors that are in mobile phones - the gyroscopes, the 9-DOF, MEMS components were finally in mass production and being shown in applications in similar devices, which made sort of rudimentary rotations and head position possible - just very fundamental stuff. It didn't eliminate the motion sickness stuff, which many people experienced with DK1, it was terrible. But I could see where it was going, I could see the cell phone screens. The 4K stuff was being talked about. I said, "It's just a matter of time before there's a cell phone screen with 1080p, and a little bit beyond that, there's going to be a cell phone screen with 4K resolution. So now we have a really good display, and we have these sensors." And I said, "Those are the parts that are naturally going to fall into place, and will be there." I knew from looking at DK1 and the foamcore prototype from [Oculus founder Palmer Luckey] that it was just a matter of time before we were able to crest that wave and get that newer stuff in there.

JM: Palmer, I met... so, here's the story with that. Brendan goes, "There's this guy named Palmer Luckey. He's a VR enthusiast. He's hooked up with [John Carmack]." I met [John Carmack] at Oculus, but I knew who he was. He works in a different area than I do. I'm a console guy, I work with the consoles, game consoles. I'm not a PC gamer guy. I was, years ago, but I migrated into consoles because that's where I could find work. So, anyways, to make a long story short, Brendan told me [Carmack] was working with Palmer. Palmer had given him a prototype. Carmack had modified it and added a few small things to it, but basically, took Palmer's foamcore prototype and wrote a test application around it.

They didn't have a building or anything, and we were meeting people down here in Livermore, basically. I think they were using Brendan's apartment a little, but we filmed the Kickstarter video here. We redecorated the place for the Kickstarter video, we rented furniture, we set the place up to basically look like it was a functional place. And it was, for all intents and purposes. I had already engaged the factory about potentially making DK1s. We launched the Kickstarter video, we sold 10,000 pieces. And I thought at the time, if it's 10,000 pieces, and we sell that many, that's not enough, but it would be OK. So I took the thing to China and I produced it in China. All of the things that you see there, the design work, everything on DK1, I did completely in China. We only had ten or fifteen people at the company.

I brought prototypes back to Irvine. People tried them out, they hated them. Too heavy, uncomfortable. So we'd change a few things, and I ironed out some things. But to be honest, it's a pretty simple thing. It's got an [inertial measurement unit] in it, which is the motion sensing system, and a screen and a commercial display controller that we programmed in Taiwan. We tuned it to Nvidia, tuned the panels and the motion – when you make a display, it has to be tuned. It takes specialized equipment. You don't realize when you see your phone, but the display is tuned on a piece of equipment, and you set some registers and parameters, so the display is nice and crisp and the pixels open up quickly and close quickly. We tuned it to Nvidia and launched it, was successful, had very few returns, and people loved it.

Pretty soon we were selling and selling, and we sold 70,000 of them before we stopped making them. So, we made 70,000 of those, and in the meantime we started on a DK2. And DK2 we were trying to solve positional tracking - the vestibular issues, I think, and tried to have a walk-around experience. Brendan was also working with Valve. I wasn't involved with Valve and what he was doing up there, but Valve had their own VR initiative, and how that started and who started first kind of opened a debate. My view is Oculus started first, in any measurable or meaningful way. But, Brendan had some prototype from Valve that Valve had been working on which was pretty good. It could show us the direction we were going to go in. It used two Samsung panels side by side. If you look on my Twitter page you can see it there, my kid's wearing it there in my profile shot.

JM: Yeah, so, my story, and my position on this thing, and this changes... I call Brendan and I said, "You're not referring to me as a founder anymore." And the story is, "There's only one founder, and it's Palmer." Well, that's not true. Palmer being very young at the beginning of that company and a VR enthusiast, enthusiastic nice guy, he's a really nice person. Palmer doesn't have any job skills, he's 19. What do you know when you're 19? You can kind of do some stuff. He hired big guns to produce the thing, and that big gun was me. And I know how to make stuff, that's what I do. I consider myself crucial to the success of that company. If they didn't have me there and my connections in China... no one wanted that thing. [Ed. Note: We reached out to Oculus for comment on this.] Even after we had all the hype on DK1, we interviewed with Foxconn, Flex, no one wanted to touch Oculus, because it's too risky, and they didn't need us. They're making iPhones. They're perfectly happy turning out iPhones 24/7. We went to my friend's factory to produce it, it's a personal friend of mine, I said, "Can you help me?" I said, "I'll throw this project in and this project in, we'll put it all into one project for you, we'll make some money on it." And she said, "No, I don't want to get involved in it. And who are those guys, how well do you know them? Do you know anything about their character?" I said, "Trust me, I've got good intuition on this." I always say that to her.

And so, we had to pay them a lot of money up front, we had to pay them three quarters of a million dollars cash to get started. So it was challenging to find a production partner. Now, 70,000 DK1s say we made money on them, we made pretty good margin in them, and the company was actually in the black, from the startup hardware company, which is amazing. That never happens. But we didn't have enough capital to grow. We could pay our bills and stay afloat for a while if we kept selling DK1s, but we'd be like GoPro. We had to come out with another product, so we did DK2. To try to answer your question, I consider myself a founder. I think the founders are Nate, Mike, myself, Palmer, and Brendan. Those are the five key early employees. So, I get irritated by Brendan now referring to me as early employee, which wasn't true. Brendan's version of reality often changes. He's a sales guy, that's what they do, right? He's a good sales guy, he's the best.

This is the third or fourth thing I've done that's been a smash hit, and I'm pretty happy with the outcome of it. I want them to succeed, and I want Oculus to beat those guys, our competitors. Can they do it? No one knows. If I were them I would be extremely worried about Sony. Sony does not do things without really thinking their way through it. They're very smart, the company's very well run. Facebook's well run, but Sony's very well run. They don't take big risks. They take risks, but not giant ones, and VR's very risky. For them to do this means that Sony sees something in it. There are people at Sony who are hardcore executive gamers, have been doing it for 25 years, know this product and know this business so well, and they're on board. If I were Oculus or Oculus and Vive, I would be worried about them. I told Brendan very early on, "Brendan, Sony's going to get on this thing, they're going to do this, and they're going to kick your ass, they're going to kick our asses." I said, "You've got to be worried about them. Every time they've gotten into something like this they've done really well, with the exception of some other things."

USG: I was going to ask, what was the culture like early on in Oculus. You had Brendan, who you described as the pitch man, and then you had Palmer, who was very young. What was it like?

At a corporation or publisher, I'm not going to name any, or a large company with a blue F, it's not really about that. It is about that, but it's not really about that. It's about gaining your own position in the company, and naturally people want to get recognized for what they're doing. So, to answer your question, I'll go to a studio and sit at Burnaby, at EA Burnaby for two or three years, no problem, or I'll go to a studio, Red Octane or Neversoft, I have no problem, I'll sit there for days and days and days and love it. But going to work for a corporation, it's just not my thing, it's just not for me. So, to answer your question, when everything's going well and we're all bailing – for instance, Nate, Palmer and myself all went to China to work on DK1. Palmer and Nate and I were at the factory working on it together. Neither of these guys knew how to work on anything, but we're all trying to get it done, and I just loved it. It's fun. The enthusiasm and the energy and the commitment by the people. Like I said, we were working, I was working, I'd go to work at 4 or 5 in the morning, I'd get off at 9 or 10 at night every night. Then at the factory I stayed awake for three days working, didn't sleep once. But it was all voluntary. No one forced me to it, I just dug it.

To answer your question, Oculus was coming up, I saw the magazines, people were asking me about it. I didn't really occur to me what it was. I am going to reserve my opinion on where VR is going to go until it actually happens. It could absolutely go upside down. These things do sometimes. No one knows why. Tesla could absolutely go tits up, pardon the expression. It could. I've seen that before. Apple did go belly up, almost. Apple Computer was almost out of business. People were hedging with what was going to happen to them. When they came out with the Macintosh, Steve Jobs predicted 10 million units, they sold 30,000. Because it was a $12,000 machine. So, pricing is very important. So, you never know what's going to happen. Apple, the stodgy old people that run companies, very conservative companies like Palmolive and Coca-Cola would have continued to sell the Apple IIe, because it was selling well, instead of thinking, "We better stop selling this thing and come up with something new really fast." Well, if it was a company run by a bunch of old people, they would say, "No, don't do that. We've got security, we've got revenue, we can pay our bills." Back then, Oculus was... they were risk takers. I'm a risk taker. I do risky stuff, like putting revenue into GoPro, and other things like that. I do, and I lose. I've had so many companies fail. I've done like ten of these things, or twelve, and the number of hits I've had is pretty low.

Seventy-six (76) naïve adults (37 females and 39 males) between the ages of 18 and 27 (19.54 ± 2.27) participated in this study. All observers were screened for their visual acuity using a standard Bailey-Lovie LogMAR Chart calibrated for 3 m, their stereo-acuity using a Random Dot Stereo Acuity Chart with Lea Symbols (Vision Assessment Corporation, 2007), observers’ pupillary distance and ocular health by an experienced clinician. All participants had visual acuities of LogMAR 0.3 (Snellen 6/12) or better in the worse eye with or without correction (legal requirement for private unconditional driving in Australia). All observers had no prior vestibular dysfunction and were not prone to motion sickness. Participants were excluded if they had a history of amblyopia or strabismus.

Sixty-one (61) participants could perceive global stereopsis. Of these, sixteen (16) participants observed the display under anisometropic suppression. Anisometropic suppression was confirmed with a follow-up stereo-acuity assessment. The remaining fifteen (15) could not perceive global stereopsis using the random dot stereogram. Participant characteristics are shown below in Table 1. Procedures were approved by the Human Research Ethics Advisory (HREA) panel at University of New South Wales (UNSW Sydney). Informed written consent was received by all participants and procedures were conducted in accordance with the HREA panel at UNSW Sydney guidelines and regulations and approved protocol.

Experiment 1 was conducted with a total of 6 trials presented in randomised order. The faster two speeds (speed “2” and “3”) were used. Displays either generated pure radial optic flow without head movements (passive viewing), pure radial optic flow despite head movements (active uncompensated viewing) or were compensated for head movements consistent with a constant spatial direction of self-motion (active compensated viewing). The displays were rendered to incorporate observer head movement information in real time during compensated viewing conditions. An audible tone was delivered to the Rift’s earpiece at a rate of 1 Hz for four of the trials. The audible tone signalled for the observer to sway (active viewing) at the rate of the metronomic sound. Yaw, pitch, and roll changes in head orientation were recorded for all trials using the Rift’s inherent accelerometers and gyros and were computed as Euler angles in degrees. Experiment 2 consisted of a total of 12 trials (with all speed settings) presented in randomised order.
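The Experiment 1 design can be illustrated with a short Python sketch. It assumes the 6 trials are the full crossing of the three viewing conditions with the two speeds, and that the 1 Hz tone accompanies the active conditions, which is consistent with the four tone trials reported. The field names and the seeding are illustrative assumptions, not the authors' software.

```python
import random
from itertools import product

VIEWING_CONDITIONS = ("passive", "active uncompensated", "active compensated")
EXPERIMENT1_SPEEDS = (2, 3)  # the faster two speed settings


def experiment1_trials(seed=None):
    """Return the 6 Experiment 1 trials in a randomised order."""
    trials = [
        {
            "viewing": viewing,
            "speed": speed,
            # The metronome tone cues head sway, so it is on for active trials only.
            "tone_1hz": viewing.startswith("active"),
        }
        for viewing, speed in product(VIEWING_CONDITIONS, EXPERIMENT1_SPEEDS)
    ]
    random.Random(seed).shuffle(trials)
    return trials


for trial in experiment1_trials(seed=1):
    print(trial)
```

Passing a per-participant seed makes each randomised order reproducible for later analysis.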

The Oculus Rift (CV1) pupillary distance was adjusted based on the measurement obtained during screening. Each participant was seated and the HMD was placed on the observer until a comfortable fit was achieved. The observer was asked to adjust the head-mounted device vertically until the Oculus Home page was most clear. The device was then tightened to a comfortable point using the attached Velcro.

The experimenter instructed the observer on the steps involved in the example and experiment. The experimenter presented the example modulus trial to the observer prior to the experimental trials to ensure they understood the task and to provide a baseline comparison. In the first experiment, the HMD was then removed and the participants were asked to wear the first pair of anisometropic-suppressing spectacles. During each of the displays, the observer was asked to orient themselves using the green central target. They were then asked to fixate on the white fixation target during the presentation and concentrate on the experience (if any) of illusory self-motion in depth. The observer was asked to sway when an audible metronomic tone was heard in the earpiece of the Oculus Rift and remain stationary when there were no audible tones. The observer was asked to grade the level of vection strength, spatial presence and whether they felt sick in order to get used to the method of answering using the directional keys and spacebar on the provided keyboard. After the first set, the observer was asked to remove both the HMD and the spectacles and the trials were repeated. This was then repeated again with the second pair of spectacles. In Experiment 2, participants only wore their optical correction (if any) and no anisometropic-suppressing spectacles were used.

Having some issues with Oculus Runtime though. Without the driver everything works fine. I can use the Rift as an extended display and do whatever, but this way I can start games in stereoscopic 3D only by using third-party software like Perception, and most games simply crash. Still good for watching movies in 3D-SBS after some aspect ratio tweaking in MPC-HC.

0.7.0 does not work, because it no longer supports extended display mode. DK1 is recognized only when debugging for DK1 is on, but still no image... At least I can start an SDK demo, which shows on my main screen only

In the past few years, there have been some significant advances in consumer virtual reality (VR) devices. Devices such as the Oculus Rift, HTC Vive, Leap Motion™ Controller, and Microsoft Kinect® are bringing immersive VR experiences into the homes of consumers with much lower cost and space requirements than previous generations of VR hardware. These new devices are also lowering the barrier to entry for VR engineering applications. Past research has suggested that there are significant opportunities for using VR during design tasks to improve results and reduce development time. This work reviews the latest generation of VR hardware and reviews research studying VR in the design process. Additionally, this work extracts the major themes from the reviews and discusses how the latest technology and research may affect the engineering design process. We conclude that these new devices have the potential to significantly improve portions of the design process.

As discussed by Steuer, the term virtual reality traditionally referred to a hardware setup consisting of items such as a stereoscopic display, computers, headphones, speakers, and 3D input devices [7]. More recently, the term has been broadly used to describe any program that includes a 3D component, regardless of the hardware they utilize [8]. Given this wide variation, it is pertinent to clarify and scope the term virtual reality.

Steuer also proposes that the definition of VR should not be a black-and-white distinction, since such a binary definition does not allow for comparisons between VR systems [7]. Based on this idea, we consider a VR system in the light of the VR experience it provides. A very basic definition of a VR experience is the replacing of one or more physical senses with virtual senses. A simple example of this is people listening to music on noise-canceling headphones; they have replaced the sounds of the physical world with sounds from the virtual world. This VR experience can be rated on two orthogonal scales of immersivity and fidelity, see Fig. 1. Immersivity refers to how much of the physical world is replaced with the virtual world, while fidelity refers to how realistic the inputs are. Returning to the previous example, this scale would rate the headphones as low–medium immersivity since only the hearing sense is affected, but a high fidelity since the audio matches what we might expect to hear in the physical world.

Various types of hardware are used to provide an immersive, high-fidelity VR experience for users. Given the relative importance the sense of sight has in our interaction with the world, we consider a display system that presents images in such a way that the user perceives them to be 3D (as opposed to seeing a 2D projection of a 3D scene on a common TV or computer screen) in combination with a head tracking system to be the minimum set of requirements for a highly immersive VR experience [1]. This type of hardware was found in almost all VR applications we reviewed, for example, Refs. [1], [3], [6], and [13–22]. This requirement is noted in Fig. 2 as the core capabilities for a VR experience. Usually, some additional features are also included to enhance the experience [7]. These additional features may include motion-capture input, 3D controller input, haptic feedback, voice control input, olfactory displays, gustatory displays, facial tracking, 3D-audio output, and/or audio recording. Figure 2 lists these features as the peripheral capabilities. To understand how core and peripheral capabilities can be used together to create a more compelling experience, consider a VR experience intended to test the ease of a product’s assembly. A VR experience with only the core VR capabilities might involve watching an assembly simulation from various angles. However, if haptic feedback and 3D input devices are added to the experience, the experience could now be interactive and the user could attempt to assemble the product themselves in VR while feeling collisions and interferences. On the other hand, adding an olfactory display to produce virtual smells would likely do little to enhance this particular experience. Hence, these peripheral capabilities are optional to a highly immersive VR experience and may be included based on the goals and needs of the experience. Figure 2 lists these core and peripheral capabilities, respectively, in the inner and outer circles.
Devices for providing these various core and peripheral capabilities will be discussed in Secs. 3.1–3.3.

CAVE systems typically consist of two or more large projector screens forming a pseudoroom. The participant also wears a special set of glasses that work with the system to track the participant’s head position and also to present separate images to each eye. On the other hand, HMDs are devices that are worn on the user’s head and typically use half a screen to present an image to each eye. Due to the close proximity of the screen to the eye, these HMDs also typically include some specialized optics to allow the user’s eye to better focus on the screen [10,25]. Sections 3.1.1 and 3.1.2 will discuss each of these displays in more detail.

CAVE technology appears to have been first researched in the Electronic Visualization Lab at the University of Illinois [26]. In its full implementation, the CAVE consists of a room where all four walls, the ceiling, and the floor are projector screens; a special set of glasses that sync with the projectors to provide stereoscopic images; a system to sense and report the location and gaze of the viewer; and a specialized computer to calculate and render the scenes and drive the projectors [4]. When first revealed, CAVE technology was positioned as superior in most aspects to other available stereoscopic displays [27]. Included in these claims were larger field-of-view (FOV), higher visual acuity, and better support for collaboration [27]. While many of these claims were true at the time, HMDs are approaching and rivaling the capabilities of CAVE technology.

The claim about collaboration deserves special consideration. In their paper first introducing CAVE technology, Cruz-Neira et al. state, “One of the most important aspects of visualization is communication. For virtual reality to become an effective and complete visualization tool, it must permit more than one user in the same environment” [27]. CAVE technology is presented as meeting this requirement; however, there are certain caveats that make it less than ideal for many scenarios. The first is occlusion. As people move about the CAVE, they can block each other’s view of the screen. In general, this type of occlusion is not a serious issue when parts of the scene are beyond the other participant in virtual space, although it is perhaps inconvenient. However, when the object being occluded is supposed to be between the viewer and someone else (in virtual space), the stereoscopic view collapses along with the usefulness of the simulation [4]. A second issue with collaboration in a CAVE is the issue of distortion. Since only a single viewer is tracked in the classic setup, all other viewers in the CAVE see the stereo image as if they were at that location. However, since two people cannot occupy the same physical space and hence cannot all stand at the same location, all viewers aside from the tracked viewer experience some distortion. The amount of distortion experienced is related to the viewer’s distance from the tracked viewer [22]. The proposed solution to this issue is to track all the viewers and calculate stereoscopic images for each person. While this has been shown to work in the two-viewer use case [22], commercial hardware with fast enough refresh rates to handle more than two or three viewers does not yet exist.

As discussed previously, HMDs are a type of VR display that is worn by the user on his or her head. Example HMDs are shown in Fig. 3. These devices typically consist of one or two small flat panel screens placed a few inches from the eyes. The left screen (or left half of the screen) presents an image to the left eye, and the right screen (or right half of the screen) presents an image to the right eye. Because the human eye has difficulty focusing on objects so close, there are typically some optics placed between the screen and eye that allow the eye to focus better. These optics typically introduce some distortion around the edges that is corrected in software by inversely distorting the images so that they appear undistorted through the optics. These same optics also magnify the screen, making the pixels and the space between pixels larger and more apparent to the user. This effect is referred to as the “screen-door” effect [31–33].
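
The software correction mentioned above can be illustrated with a small Python sketch. It assumes a simple radial polynomial lens model, r' = r(1 + k1 r² + k2 r⁴), and inverts it numerically by bisection so that a point drawn at the pre-distorted radius lands at the intended radius after passing through the lens. The model, the coefficients `K1` and `K2`, and the function names are all assumptions chosen for illustration; they are not taken from any particular headset or SDK.

```python
import math

K1, K2 = 0.22, 0.24  # hypothetical lens coefficients, not from any real headset


def lens_distort(r):
    """Radial distance after passing through the (assumed) lens model."""
    return r * (1.0 + K1 * r**2 + K2 * r**4)


def pre_distort(r_target, iterations=60):
    """Find the radius to draw at so the lens maps it to r_target (bisection)."""
    lo, hi = 0.0, r_target  # lens_distort(r) >= r whenever K1, K2 >= 0
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if lens_distort(mid) < r_target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def pre_distort_point(x, y):
    """Apply the inverse radial distortion to a point in lens-centred coordinates."""
    r = math.hypot(x, y)
    if r == 0.0:
        return 0.0, 0.0
    scale = pre_distort(r) / r
    return x * scale, y * scale
```

With positive coefficients the lens pushes points outward, so the pre-distorted image is pulled inward by a matching amount. A real renderer would normally do this per pixel on the GPU rather than per point in Python.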

Shortcomings of this type of display can include: incompatibility with corrective eye-wear (although some devices provide adjustments to help mitigate this problem) [34], blurry images due to slow screen-refresh rates and image persistence [35], latency between user movements and screen redraws [36], the fact that the user must generally be tethered to a computer which can reduce the immersivity of a simulation [37], and the hindrance to collocated communication they can cause [20]. The major advantages of this type of display are: its significantly cheaper cost compared to CAVE technology, its ability to be driven by a standard computer, its much smaller space requirements, its ease of setup and take-down (allowing for temporary installations and uses), and its compatibility with many readily available software tools and development environments. Table 1 compares the specifications of several discrete consumer HMDs discussed more fully below.

As discussed in Sec. 3.1.1, the ability to communicate effectively is an important consideration of VR technology. Current iterations of VR HMDs obscure the user’s face and especially the eyes. This can create a communication barrier for users who are in close proximity which does not exist in a CAVE as discussed by Smith [20]. It should be noted here that this difference applies only to situations in which the collaborators are in the same room. If the collaborators are in different locations, HMDs and CAVE systems are on equal footing as far as communication is concerned. One method for attempting to solve this issue with HMDs is to instead use AR HMDs which allow you to see your collaborators. Billinghurst et al. have published some research in this area [60,61]. A second method for attempting to solve this issue is to take the entire interaction into VR. Movie producers have used facial recognition and motion capture technology to animate computer-generated imagery characters with the actor’s same facial expressions and movements. This same technology could be, and has been, applied to VR to animate a virtual avatar. Li et al. have presented research supported by Oculus that demonstrates using facial capture to animate virtual avatars [62], and HMDs with varying levels of facial tracking have already been announced and demonstrated [50,63].

Oculus Rift CV1: The Oculus Rift Development Kit (DK) 1 was the first of the current generation of HMD devices and promised a renewed hope for a low-cost, high-fidelity VR experience and sparked a new interest in VR research, applications, and consumer experiences. The DK1 was first released in 2012 with the second generation (DK2) being released in 2014, and the first consumer version of the Oculus Rift (CV1) released in early 2016. To track head orientation, the Rift uses a six-degree-of-freedom (DOF) IMU along with an external camera. The camera is mounted facing the user to help improve tracking accuracy. Since these devices are using flat screens with optics to expand the field-of-view (FOV), they do show the screen-door effect, but it becomes less noticeable as resolution increases.

Steam VR/HTC Vive: The Steam Vive HMD is the result of a collaboration between HTC and Valve (the company behind Steam) to develop a VR system directly intended for gaming. The actual HMD is similar in design to the Oculus Rift. The difference, though, is that the HMD is only part of the full system. The system also includes a controller for each hand and two sensor stations that are used to track the absolute position of the HMD and the controllers in a roughly 4.5 m × 4.5 m (15 ft × 15 ft) space. These additional features can make the Steam VR system a good choice when the application requires the user to move around a physical room to explore the virtual world.

Avegant Glyph: The Avegant Glyph is primarily designed to allow the user to experience media such as movies in a personal theater. As such, it includes a set of built-in headphones and an audio only mode where it is worn like a traditional set of headphones. However, built into the headband is a stereoscopic display that can be positioned over the eyes that allows the user to view media on a simulated theater screen. Despite this primary purpose, the Avegant Glyph also supports true VR experiences. The really unique feature is that instead of using a screen like the previously discussed HMDs, the Glyph uses a set of mirrors and miniature projectors to project the image onto a user’s retina. This does away with pixels in the traditional sense and allows the Glyph to avoid the screen-door problem that plagues other HMDs. The downside to the Glyph, however, is that it has lower resolution and a much smaller FOV. The Glyph also includes a 9DOF IMU to track head position.

Google Cardboard: Google Cardboard is a different approach to VR than any of the previously discussed devices. Google Cardboard was designed to be as low cost as possible while still allowing people to experience VR. Google Cardboard is folded and fastened together from a cardboard template by the user. Once the cardboard template has been assembled, the user’s smart-phone is then inserted into the headset and acts as the screen via apps that are specifically designed for Google Cardboard. Since the device is using a smart-phone as the display, it can also use any IMU or other sensors built into the phone. The biggest advantage of Google Cardboard is its affordability, since it is only a fraction of the cost of the previously mentioned devices. However, to achieve this low cost, design choices have been made that make this a temporary, prototype-level device not well suited to everyday use. The other interesting feature of this HMD is that, since all processing is done on the phone, no cords are needed to connect the HMD to a computer, allowing for extra mobility.

Samsung Gear VR: Like Google Cardboard, the Samsung Gear VR device is designed to turn a Samsung smartphone (compatible only with certain models) into a VR HMD. The major difference between these two is the cost and quality. The Gear VR is designed by Oculus, and once the phone is attached it is similar to an Oculus Rift. Different from many other HMDs, the Gear VR includes a control pad and buttons built into the side of the HMD that can be used as an interaction/navigation method for the VR application. Also like the Google Cardboard, the Gear VR has no cable to attach to a computer, allowing more freedom of movement.

OSVR Hacker DK2: The Open-Source VR project (OSVR) is an attempt by Razer® to develop a modular HMD that users can modify or upgrade as well as software libraries to accompany the device. The shipping configuration of the OSVR Hacker DK2 is very similar to the Oculus Rift CV1. The notable differences are that OSVR uses a 9DOF IMU, and the optics use a dual lens design and diffusion film to reduce distortion and the screen-door effect.

Others: Along with the HMDs mentioned above, there are several other consumer-grade HMDs suitable for VR that are available now or in the near future. These include the Sony PlayStation® VR, which is similar to the Oculus Rift but driven from a PlayStation gaming console [64]; the Dlodlo Glass H1, which is similar to the Samsung Gear VR but is compatible with more than just Samsung phones and includes a built-in low-latency 9-axis IMU [65]; the Dlodlo V1, which is somewhat like the Oculus Rift but designed to look like a pair of glasses for the more fashion-conscious VR users and is also significantly lighter [48]; and the FOVE HMD, which again is similar to the Oculus Rift but offers eye tracking to provide more engaging VR experiences [50]. The StarVR HMD is similar to the Oculus Rift with the notable difference of a significantly expanded FOV and consequently a larger device [52]. The Vrvana Totem is like the Oculus Rift, but includes built-in pass-through cameras to provide the possibility of AR as well as VR [54]. The Sulon HMD, like the Vrvana Totem, includes cameras for AR, but can also use the cameras for 3D mapping of the user’s physical environment [66]. The ImmersiON VRelia Go is similar to the Samsung Gear VR but is compatible with more than just Samsung phones [56]. The visusVR is an interesting cross of the Samsung Gear VR and the Oculus Rift. It uses a smartphone for the screen, but a computer for the actual processing and rendering to provide a fully wireless HMD [57]. The GameFace Labs HMD is another cross between the Samsung Gear VR and the Oculus Rift. However, this HMD has all the processing and power built into the HMD and runs Android OS [58].

Light-field HMDs: In the physical world, humans use a variety of depth cues to gauge object size and location as discussed by Cruz-Neira et al. [4]. Of the eight cues discussed, only the accommodation cue is not reproducible by current commercial technologies. Accommodation is the term used to describe how our eyes change their shape to be able to focus on an object of interest. Since, with current technologies, users view a flat screen that remains approximately the same distance away, the user’s eyes do not change focus regardless of the distance to the virtual object [4]. Research by Akeley et al. prototyped special displays to produce a directional light field [67]. These light-field displays are designed to support the accommodation depth cue by allowing the eye to focus as if the objects were a realistic distance from the user instead of pictures on a screen inches from the eyes. More recent research by Huang et al. has developed light-field displays that are suitable for use in HMDs [2] (Fig. 3).

Images of various HMDs discussed in Sec. 3.1.2. Top left to right: Oculus Rift, Steam VR/HTC Vive, and Avegant Glyph. Bottom left to right: Google Cardboard, Samsung Gear VR by Oculus, and OSVR HDK. (Images courtesy of Oculus, HTC, Avegant, and OSVR).

Television-based CAVEs: Currently, CAVEs use rear-projection technology. This means that for a standard size 3 m × 3 m × 3 m CAVE, a room approximately 10 m × 10 m × 10 m is needed to house the CAVE and projector equipment [24]. Rooms this size must be custom built for the purpose of housing a CAVE, limiting the available locations for housing it and adding to the cost of installation. To reduce the amount of space needed to house a CAVE, some researchers have been exploring CAVEs built with a matrix of television panels instead [24]. These panel-based CAVEs have the advantage of being able to be deployed in more typically sized spaces.

Cybersickness: Aside from the more obvious historical barriers of cost and space to VR adoption, another challenge is cybersickness [68]. The symptoms of cybersickness are similar to motion sickness, but the root causes of cybersickness are not yet well understood [6]. Symptoms of cybersickness range from headache and sweating to disorientation, vertigo, nausea, and vomiting [69]. Researchers are still identifying the root causes, but it seems to be a combination of technological and physiological causes [70]. In some cases, symptoms can become so acute that participants must discontinue the experience to avoid becoming physically ill. It also appears that the severity of the symptoms can be correlated to characteristics of the VR experience, but no definite system for identifying or measuring these factors has been developed to date [6].

The method of user input must be carefully considered in an interactive VR system. Standard devices such as a keyboard and mouse are difficult to use in a highly immersive VR experience [37]. Given the need for alternative methods of interaction, many different methods and devices have been developed and tested. Past methods include wands [71,72], sensor-gloves [16,73,74], force-balls [75] and joysticks [16,37], voice command [37,76], and marked/markerless IR camera systems [77–80]. More recently, the markerless IR camera systems have been shrunk into consumer products such as the Leap Motion™ Controller and Microsoft Kinect®. Sections 3.2.1 and 3.2.2 will discuss the various devices used to provide input in a virtual environment. We divide the input devices into two categories: those that are primarily intended to digitize human motion, and those that provide other styles of input.

Leap Motion™ Controller: The Leap Motion™ Controller is an IR camera device approximately 2 in × 1 in × 0.5 in that is intended for capturing hand, finger, and wrist motion data. The device is small enough that it can either be set on a desk or table in front of the user or mounted to the front of an HMD. Since the device is camera based, it can only track what it can see. This constraint affects the device’s capabilities in two important ways: First, the view area of the camera is limited to approximately an 8 ft³ (0.23 m³) volume roughly in the shape of a compressed octahedron depicted in Fig. 4. For some applications, this volume is limiting. The second constraint on the device’s capabilities is its loss of tracking capability when its view of the tracked object becomes blocked. This commonly occurs when the fingers are pointed away from the device and the back of the hand blocks the camera’s view. Weichert et al. [86] and Guna et al. [87] have performed analyses of the accuracy of the Leap Motion™ Controller. Taken together, these analyses show the Leap Motion™ Controller is reliable and accurate for tracking static points, and adequate for gesture-based human–computer interaction [87]. However, Guna et al. also note that there were issues with the stability of the tracking from the device [87] which can cause frustration or errors from the users. Thompson notes, however, that the manufacturer frequently updates the software with performance improvements [31], and since these analyses have been performed, major updates have been released.

Microsoft Kinect®: The Microsoft Kinect® is also an IR camera device; however, in contrast to the Leap Motion™ Controller, this device is made for tracking the entire skeleton. In addition to the IR depth camera, the Kinect® has some additional features. It includes a standard color camera which can be used with the IR camera to produce full-color, depth-mapped images. It also includes a three-axis accelerometer that allows the device to sense which direction is down, and hence its current orientation. Finally, it includes a tilt motor for occasional adjustments to the camera tilt from the software. This can be used to optimize the view area. The limitations of the Kinect® are similar to those of the Leap Motion™ Controller: it can only track what it has a clear view of, and it has a limited tracking volume. The tracking volume is approximately a truncated elliptical cone with a horizontal angle of 57 deg and vertical angle of 47 deg [88]. The truncated cone starts at approximately 4 ft from the camera and extends to approximately 11.5 ft from the camera. For skeletal tracking, the Kinect® has further limitations: it can track only two full skeletons at a time, the users must be facing the device, and its supplied libraries cannot track finer details such as fingers. Khoshelham and Elberink [89] and Dutta [90] evaluated the accuracy of the first version of the Kinect® and found it promising but limited. In 2013, Microsoft released an updated Kinect sensor which Wang et al. noted had improved skeletal tracking which would be further improved by use of statistical models [91].
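
The tracking volume quoted above can be turned into a simple containment test. The Python sketch below treats the volume as an elliptical cone with full angles of 57 deg (horizontal) and 47 deg (vertical), truncated between 4 ft and 11.5 ft. The coordinate frame (camera at the origin, z pointing forward), the choice to truncate along z rather than along radial distance, and the function name `in_tracking_volume` are assumptions made for this illustration and are not part of any Kinect library.

```python
import math

H_FOV_DEG, V_FOV_DEG = 57.0, 47.0  # full angles quoted in the text
NEAR_FT, FAR_FT = 4.0, 11.5        # approximate depth limits quoted in the text


def in_tracking_volume(x_ft, y_ft, z_ft):
    """True if a point (camera at origin, z pointing forward) lies in the volume."""
    if not (NEAR_FT <= z_ft <= FAR_FT):
        return False
    # Semi-axes of the elliptical cross-section at depth z.
    half_w = z_ft * math.tan(math.radians(H_FOV_DEG / 2))
    half_h = z_ft * math.tan(math.radians(V_FOV_DEG / 2))
    return (x_ft / half_w) ** 2 + (y_ft / half_h) ** 2 <= 1.0
```

For example, at a depth of 10 ft the cross-section is roughly 5.4 ft wide to either side of the optical axis, so a point 5 ft to the side is inside the volume while a point 6 ft to the side is not.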

In contrast to the motion capture devices discussed above, controllers are not primarily intended to capture a user’s body movements, but instead they generally allow the user to interact with the virtual world through some 3D embodiment (like a 3D mouse pointer). Many times, the controller supports a variety of actions much like a standard computer mouse provides either a left click or right click. A complete treatment of these controllers is outside the scope of this paper, and the reader is referred to Jayaram et al. [37] and Hayward et al. [93] for more discussion on various input devices. Chapter two of Virtual Reality Technology [94] also covers the underlying technologies used in many controllers.

Recently, the companies behind Oculus Rift and Vive have announced variants of the wand style controller that blur the line between controller and motion capture [43,95]. These controllers both track hand position and provide buttons for input. The Vive controllers are especially interesting as they work within Vive’s room-scale tracking system allowing users to move through an approximately 4.5 m × 4.5 m (15 ft × 15 ft) physical space.

Haptic display technology, sometimes referred to as force-feedback, allows a user to “feel” the virtual environment. There are a wide variety of ways this has been achieved. Many times haptic feedback motors are added to the input device used, and thus as the user tries to move the controller through the virtual space, the user will experience resistance when they encounter objects [96]. Other methods include using vibration to provide feedback on surface texture [97] or to indicate collisions [98], physical interaction [98], or to notify the user of certain events such as with modern cell phones and console controllers. Other haptics research has explored tension strings [99], exoskeleton suits [100,101], ultrasonics [101], and even electrical muscle stimulation [102].

In addition to localizing objects by sight and touch, humans also have the ability to localize objects through sound [107]. Some of our ability to localize audio sources can be explained by differences in the time of arrival and level of the signal at our ears [108]. However, when sound sources are directly in front of or behind us, these effects are essentially nonexistent. Even so, we are still generally able to pinpoint these sound sources due to the sound scattering effects of our bodies and particularly our ears. These scattering effects leave a “fingerprint” on the sounds that varies by sound source position and frequency giving our brains additional clues about the location of the source. This fingerprint can be mathematically defined and is termed the head-related transfer function (HRTF) [109].
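
The time-of-arrival cue, and the reason it vanishes for sources directly in front of or behind the listener, can be illustrated with a short Python sketch. It uses Woodworth's spherical-head approximation for the interaural time difference (ITD), ITD = (a/c)(θ + sin θ), which is a standard textbook model and not something taken from this paper. The head radius, the speed of sound, and the function name are likewise assumed values chosen for illustration.

```python
import math

HEAD_RADIUS_M = 0.0875  # assumed average head radius
SPEED_OF_SOUND = 343.0  # m/s in air at roughly room temperature


def itd_seconds(azimuth_deg):
    """Approximate ITD for a distant source; 0 deg is straight ahead, 90 deg is to the right."""
    az = math.radians(azimuth_deg)
    # Fold front/back: a source behind the head has the same lateral angle
    # as its mirror image in front, so both give the same ITD.
    lateral = math.asin(math.sin(az))
    return (HEAD_RADIUS_M / SPEED_OF_SOUND) * (lateral + math.sin(lateral))
```

Under this model a source at 0 deg or 180 deg gives an ITD of essentially zero, and sources at 30 deg and 150 deg give the same ITD. That front/back ambiguity is why the spectral "fingerprint" described by the HRTF is needed to tell such positions apart.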

One option for recreating virtual sounds is to use a surround-sound speaker system. This style of sound system uses multiple speakers distributed around the physical space. With this type of system, the virtual sounds would be played from the speaker(s) that best approximate the location of the virtual source. Since the sound is being produced external to the user, all cues for sound localization would be produced naturally. However, when this system was implemented in early CAVE environments, it was found that sound localization was compromised by reflections (echoes) off the projector screens (walls) [4].
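
As a rough illustration of playing a virtual sound from the speaker that best approximates its location, the Python sketch below picks the speaker whose azimuth is closest to that of the virtual source. The five-speaker layout, its angles, and the function names are hypothetical, and selecting a single nearest speaker is only the simplest possible strategy; practical systems often pan a sound between neighbouring speakers instead.

```python
# Hypothetical 5-speaker layout: azimuth in degrees, 0 = front, positive = right.
SPEAKERS = {
    "center": 0,
    "front_left": -30,
    "front_right": 30,
    "surround_left": -110,
    "surround_right": 110,
}


def angular_distance(a_deg, b_deg):
    """Smallest absolute angle between two azimuths, in degrees (0 to 180)."""
    d = abs(a_deg - b_deg) % 360
    return min(d, 360 - d)


def nearest_speaker(source_azimuth_deg):
    """Name of the speaker whose direction is closest to the virtual source."""
    return min(
        SPEAKERS,
        key=lambda name: angular_distance(SPEAKERS[name], source_azimuth_deg),
    )
```

A source at 10 deg would be routed to the center speaker, and one at 170 deg to the right surround speaker.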

The senses of taste and smell have not received the same amount of research attention as sight, touch, and audio. Even so, a patent granted to Morton Heilig in 1962 describes a mechanical device for creating a VR experience that engaged the senses of sight, sound, touch, and smell [116]. In more recent years, prototype olfactory displays have been developed by Matsukura et al. [117] and Ariyakul and Nakamoto [118]. In experiments with olfactory displays, Bordegoni and Carulli showed that an olfactory display could be used to improve the level of presence a user in a VR experience perceives [119]. Additionally, Miyaura et al. suggest that olfactory displays could be used to improve concentration levels [120]. The olfactory displays discussed here generally work by storing a liquid smell and aerosolizing the liquid on command. Some additionally contain a fan or similar device to help direct the smell to the user’s nose. Taste has had even less research than smell; however, research by Narumi et al. showed that by combining a visual AR display with an olfactory display they were able to change the perceived taste of a cookie between chocolate, almond, strawberry, maple, and lemon [121].

Although different perspectives, domains, and industries may use different terminology, the engineering design process will typically include steps or stages called opportunity development, ideation, and conceptual design, followed by preliminary and detailed design phases [122]. Often the overall design process will include analysis after, during, or mixed in with, the various design stages followed by manufacturing, implementation, operations, and even retirement or disposal [123]. Furthermore, the particular application of a design process takes on various frameworks, such as the classical “waterfall” approach [124], “spiral” model [125], or “Vee” model [126], among others [127]. Each model has its own role in clarifying the design stages and guiding the engineers, designers, and other individuals within the process to realize the end product. As designs become more integrated and complex, the individuals traditionally assigned to the different stages or roles in the design process are required to collaborate in new ways and often concurrently. This, in turn, increases the need for design and communication tools that can meet the requirements of the ever-advancing design process.

By leveraging the power of virtual reality, designers could almost literally step into the shoes of those they are designing for and experience the world through their eyes. A simple application that employs only VR displays and VR videos filmed from the perspective of end users could be sufficient to allow designers to better understand the perspectiv

Ms.Josey