diy programmed oculus rift dk1 screen 7 lcd panel pricelist

The second of my two HMDs was built as an attempt to improve on the first. I used an iPad Mini screen (not the Retina display) with a simple Chinese 9DOF board (a combo of gyro, accelerometer, and magnetometer).

There are indeed issues with resolution, as well as screen technology. Higher resolution reduces the “pixel grid” effect. Screens with tighter spacing between pixels reduce the pixel grid effect as well, even at identical resolutions and similar display sizes. In my opinion, resolution has very little impact on the feeling of “realness”; what matters is the motion sensing input.

Lag is what the Oculus Rift team have been putting a lot of effort into eliminating. If you’ve seen their latest prototypes they’re using motion tracking systems that use cameras and IR reflector dots in addition to sensors.
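Readings from a gyro and accelerometer, like those on the 9DOF board mentioned above, are usually fused in software, because a gyro alone drifts over time and an accelerometer alone is noisy. One common, generic technique is a complementary filter. The sketch below is only an illustration of that idea for a single axis (pitch); it is not the algorithm used by Oculus, and the function names, axis convention, and the `alpha` value are assumptions made for the example.

```python
import math


def accel_pitch(ax, ay, az):
    """Pitch in radians implied by the gravity vector the accelerometer measures."""
    return math.atan2(-ax, math.hypot(ay, az))


def complementary_filter(pitch, gyro_rate, accel, dt, alpha=0.98):
    """Blend the integrated gyro rate (responsive, drifts) with the
    accelerometer pitch (noisy, but stable over time).

    alpha is an assumed tuning value: closer to 1 trusts the gyro more.
    """
    gyro_estimate = pitch + gyro_rate * dt
    return alpha * gyro_estimate + (1 - alpha) * accel_pitch(*accel)


# Example: a level, stationary sensor whose gyro has a small constant bias.
pitch = 0.0
for _ in range(1000):
    pitch = complementary_filter(pitch, gyro_rate=0.01, accel=(0.0, 0.0, 1.0), dt=0.01)
# Pure integration would have drifted to 0.1 rad; the filtered value stays small.
```

A magnetometer can be blended in the same way to correct yaw, which gravity alone cannot observe.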

Instructable Update 2.0: Thanks to the recent open-sourcing of the Oculus Rift DK1's internals and schematics, cloned parts are now easy to buy from overseas via eBay. I threw out the old parts list and added a cloned DK1 monitor and head tracking unit to the new one to avoid confusion.

After finishing the initial design, I decided that I could probably do a much better job. After researching some different designs, I decided to take apart the Nova entirely and start from scratch. The new design allows a set of flat-visor welding goggles to be attached and is much lighter than the previous one. The welding goggles completely block out outside light and conform to the face much better than the ski goggles, and they have air vents to prevent the lenses from fogging up. They also have a flat visor which can be switched out with my custom-made visor, which holds the two 5x loupe lenses. This means that the display can be detached from the lenses for any reason, such as removing dust from the screen.

The lenses are now much closer to each other in the visor, which meant I had to set up a divider inside the case to reduce cross-talk between the two images. Cross-talk is where you see part of the image meant for your right eye in the image meant for your left eye, and vice versa. The divider was made by cutting out a piece of cardboard, painting it black, and then taping it to the edge of the visor where the lenses slide in, to hold it in place.

The IR tracking setup from before worked well, but had some glaring flaws that I felt could be fixed with a new head tracking system. So I decided to do something different this time and ordered a cheap air mouse (I'd recommend getting a better and more accurate model than the one I used). I hot glued the PCB of the air mouse to the top of the head-mounted display, so all you have to do is hold your head at a regular position, turn on the air mouse, and you can move the mouse (and with it the game's view) with your head. It works well, but has some drift that can be corrected manually with the mouse.


For now, leave the factory protective plastic cover on. On the backside you will find the only connector you need to be aware of, a mini-LVDS female that is used both for input and power supply (highlighted in red). Be very careful as you manipulate the screen because it is rather fragile and you don’t want to end up with a cracked panel like I did.

Next, let’s inspect the controller board. The board pictured here is an NT68674.5X. Using this exact model isn’t a requirement as long as you have a datasheet for the board you bought (ask the seller).

Connecting the board to the display will either be the easiest step in building your HMD or the hardest. To make it easy, you should buy the LCD screen and the controller board from the same seller and ask them for the proper LVDS cable. I am going to assume that is what you did; if not, I recommend you reach out to someone with an electronics background and plenty of soldering experience to help you build this cable.

Be very careful when connecting the LVDS cable to the screen. The male connector is very small and it is hard to tell which side is up. Try it both ways carefully – it should not take much force to slide it in the proper way.

If everything is in order, Windows should immediately recognize your assembled components as an external monitor. Next you need to run a few tests to make sure your LVDS cable isn’t noisy. A well-made LVDS cable has a few specific pairs twisted together to reduce noise. However, most cables you will find on eBay are not properly twisted, which can lead to visual artifacts. To make sure your cable is good, you should load several different images on your LCD screen. The more images you try, the better, because sometimes the artifacts only show when a specific color is displayed.
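Since artifacts may only show up with particular colors, it can help to step through a set of full-screen solid-color images. The sketch below is one way to generate them, using only the Python standard library. The color list, the 1280x800 default size, and the function names are assumptions for illustration; use your panel's native resolution. PPM is chosen only because it needs no third-party library, and viewers such as GIMP can open it.

```python
from pathlib import Path

# Solid colors chosen for illustration; add more if you suspect artifacts.
TEST_COLORS = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "red": (255, 0, 0),
    "green": (0, 255, 0),
    "blue": (0, 0, 255),
    "gray": (128, 128, 128),
}


def solid_ppm(width, height, rgb):
    """Return the bytes of a binary PPM (P6) image filled with one color."""
    header = f"P6\n{width} {height}\n255\n".encode("ascii")
    return header + bytes(rgb) * (width * height)


def write_test_images(out_dir, width=1280, height=800):
    """Write one full-screen image per test color and return the file paths."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for name, rgb in TEST_COLORS.items():
        path = out / f"{name}.ppm"
        path.write_bytes(solid_ppm(width, height, rgb))
        paths.append(path)
    return paths
```

Calling `write_test_images("lvds_test")` writes six files; show each one full screen on the panel and look for flicker, sparkles, or color banding.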

Oculus Rift is one of the most popular VR devices for PCs. The headset is designed to completely immerse its user in virtual reality, and it tracks both the movement of the controllers and the position of the headset in space. Rift has an impressive range of games and applications. In 2018, Oculus took second place among VR headset manufacturers with a 19.4% share of the world market, thanks to a good balance of price, quality and usability.

The Oculus Rift release was preceded by a four-year development and fundraising stage on Kickstarter. Its success led Facebook to buy the Oculus VR company for 2 billion dollars in March 2014.

DK1 (August 2012): it was distinguished by its low resolution (640x800 for each eye), a narrow 90-degree field of view, and headset tracking with the help of an accelerometer, a magnetometer and a gyroscope. The device was presented by Palmer Luckey at the E3 exhibition.

The first versions of the device were sold with a standard Xbox One controller, which was not integrated into virtual reality. There were two reasons: too few games for Rift that supported tracking (many games required standard controls) and an underdeveloped tracking system.

May 2017: a second front camera is included as standard, which makes fully immersive play possible and makes Rift a full competitor to HTC Vive.

HTC Vive Pro is a more advanced and more comfortable, but also more expensive, model. The resolution of the display is 70% higher, and the gaming space is almost three times larger than that of Oculus Rift.

The device is fixed on the head with a flexible plastic frame and rubber ties overhead. The size is easily adjustable with a pullout handle and elastic straps which fit the head tightly from above and from behind. The weight of the device is 470 g, which is less than that of the PS VR or Vive competitors.

A slider on the lower edge of the headset lets you change the position of the lenses to match the user's interpupillary distance (IPD). There is no exact digital scale for the lens spacing, so you need to set the distance using an ordinary ruler. It is possible to use Oculus Rift while wearing glasses, although an hour of use can become uncomfortable. The problem is not only the limited space, but also that the side mounts of the device press unpleasantly on the temples of the glasses. What’s more, there is a danger of damaging the lenses, and it can get very hot inside the headset even in an air-conditioned room.

Oculus Rift is equipped with an OLED display with a resolution of 1080x1200 for each eye. This matches the figure of its main competitors, but it lags behind flagship models like HTC Vive Pro or Windows Mixed Reality devices. The viewing angle is a standard 110 degrees, but if you play wearing your own glasses it can become a bit narrower.
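A rough way to compare headsets is angular pixel density: horizontal pixels per eye divided by the horizontal field of view. The sketch below assumes 1080 horizontal pixels per eye for the Rift and uses the DK1 figures quoted earlier (640 pixels, 90 degrees). It also assumes pixels are spread evenly across the field of view, which real lenses do not do, so treat the results as ballpark numbers only. The function name is hypothetical.

```python
def pixels_per_degree(horizontal_pixels, fov_degrees):
    """Average pixels per degree, assuming an even spread over the FOV."""
    if fov_degrees <= 0:
        raise ValueError("fov_degrees must be positive")
    return horizontal_pixels / fov_degrees


rift = pixels_per_degree(1080, 110)
dk1 = pixels_per_degree(640, 90)
print(f"Rift: {rift:.1f} px/deg, DK1: {dk1:.1f} px/deg")
# prints "Rift: 9.8 px/deg, DK1: 7.1 px/deg"
```

The lower the figure, the more visible the individual pixels and the gaps between them become.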

You can improve the graphics with the Oculus Debug Tool. First, open Oculus Home, then run the Debug Tool (it's in the Support folder of the Oculus root folder) and change the Pixels Per Display Pixel Override parameter to a value from 1.1 to 2.0. This raises the render resolution along each axis by 10% to 100%, so the total number of rendered pixels grows by roughly 21% to 300%. You do not need to close the Debug Tool during the game, and the parameter can be changed to suit the game and the PC's capabilities.
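To get a feel for what a given override value costs the GPU, the arithmetic can be sketched as follows. This assumes the factor scales the render target along each axis, so the pixel count grows with its square. The 1080x1200 baseline is the per-eye panel resolution, used here only for illustration; the render target the runtime actually uses by default may differ. Both function names are hypothetical.

```python
def supersampled_size(width, height, factor):
    """Scale a render target by the override factor along each axis."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    return round(width * factor), round(height * factor)


def pixel_increase_percent(factor):
    """Percentage growth in total pixels for a per-axis scale factor."""
    return (factor ** 2 - 1) * 100


for factor in (1.1, 1.5, 2.0):
    w, h = supersampled_size(1080, 1200, factor)
    print(f"{factor}: {w}x{h} per eye, +{pixel_increase_percent(factor):.0f}% pixels")
```

A value of 2.0 means four times as many pixels to render per frame, which is why the setting should be matched to the PC's capabilities.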

Oculus Touch controllers are easy to get used to thanks to a familiar button layout that matches game console controllers. Everything gamers are used to is here: a thumbstick, triggers and the usual buttons. Plastic rings protect the hands from accidental knocks, and wrist straps are provided for additional security.

Oculus sensors detect the location of the headset and controllers by means of infrared identification of devices in space. Each of the devices sends signals that are picked up by the sensors. That is why it is important that there are no mirrors or very bright windows in the room. In this way, the user's head and hands are brought into virtual space. This system is called the Constellation Tracking System.

In addition to the tracking of the user’s movements, there is an accelerometer, a magnetometer and a gyroscope in the headset. To bring the experience closer to reality, the position of the user's eyes is also tracked: in real life our eyes focus on objects that are farther or nearer, but they cannot see everything clearly and distinctly at the same time. So that the brain is not strained by constant clarity, Rift uses pupil-monitoring technology.

Interestingly, the sensors can work as ordinary webcams. This fact was discovered in mid-2017 and caused a wave of indignation in the VR community. The instructions say nothing about this capability, and Windows connects the sensors as ordinary third-party USB devices. In effect you connect two or three additional webcams, although it's no secret that many users cover the front cameras on their laptops because they feel uncomfortable.

It is believed that this was done in order to keep secret how the sensors work, and Facebook gives assurances that the camera image is not transmitted or processed. However, there is a risk of intruders hacking the Rift sensors in order to obtain images.

Oculus Rift creates a gaming space with at least two sensors (in fact, they are usual cameras) which are located on both sides of the monitor creating a cone-shaped gaming space. The device has a system for monitoring the gaming space boundaries which is called Guardian. To create a space you need to draw its arbitrary boundaries with the help of controllers, and a cube will be formed automatically inside. It will be highlighted by thin lines during the game so that a gamer does not lose orientation in space.

The size of the gaming space is small: 1.5 x 1.5 m if you play with two cameras located on both sides of the monitor. When you purchase an additional third camera, you can expand your gaming area to 2.5 x 2.5 m. Unlike HTC devices, in Rift you cannot integrate real-world objects into VR.

Oculus Home is a standard shell for installing and running games and applications which is configured automatically when the device is connected to the PC. The application is very user-friendly and is similar to Steam.

Over the past year, Steam has been actively working to become the main platform for VR games across different types of devices. You can launch games from there on Oculus if you enable the Public Test Channel and Include Unknown Sources parameters in the Oculus Home settings.

Oculus Rift is sold in a big black box, and each component is fixed inside with Velcro and is located on a soft lining. There are not many base components, so the box can easily be used to transport the device.

Oculus Rift Headset is a virtual reality headset with built-in headphones and a cord for connecting to a computer. It combines two wires: HDMI and USB 3.0.

For a new gaming computer you will have to pay 800 USD. If only a video card is missing, be ready to spend 220 USD, and upgrading from Windows 7 to Windows 10 will cost about 140 USD. Oculus has warned users that support for Windows 7 and 8.1 might end soon.

What’s more, all the most important replacement parts for the standard Oculus Rift components are available on the official website in case they are damaged or wear out. This is welcome: PlayStation VR, for example, does not have as many replaceable parts.

Installing the equipment for Oculus Rift is more difficult than for PS VR, but it's easier than for HTC Vive. First of all, you will need to download the Oculus App from the website, create an account and follow the instructions on the screen. The process is relatively simple, and video reviews show the installation in detail.

Two camera sensors are placed near the computer. The third one must be placed in the back top corner of the proposed gaming space. To do this, you need to use a ceiling mount or a high stand. The cameras create a cone-shaped virtual zone in front of you, so when they are all placed in front of the player, a blind zone remains behind the user. Some users even use four cameras for more accurate tracking. There is no need to buy more sensors than that, since doing so can lead to malfunctions in the Oculus software regardless of how powerful the PC is.

Users recommend buying an additional USB 3.0 controller and trying to connect the headset to a port on the motherboard. What’s more, you need to open the Windows Device Manager and, in the power settings of the USB controller, uncheck the item "Allow the computer to turn off this device to save power". If nothing helps, the manufacturer recommends replacing the motherboard. This is one more reason to seriously evaluate the capabilities of your PC before buying Oculus Rift.

Problems with SteamVR. Problems with the software are most often related to connecting via SteamVR: some games need the beta update, while others need the stable version of the program. It is hoped that compatibility problems will decrease as updates are released. Some control problems were also noticed in certain games intended for HTC Vive, which come down to the lack of buttons on the Oculus Touch controllers.

Finally, a common concern with VR devices is the health problems users can experience during games. Although Oculus users complain much less than those who have played on PS VR, for example, some action games can cause nausea and dizziness.

Users mention that there is almost no difference in graphics between Oculus Rift and HTC Vive, but Oculus tracking is slightly less perceptible than in its competitor. The convenient fit of the headset and the built-in headphones are also mentioned among the advantages. When you play for a long time the headset can get hot because of the warm padding, so it is better to keep a headscarf or napkins nearby and take regular breaks.

Buying Oculus Rift can make sense if there is a powerful gaming PC, but there is no desire to spend too much money on HTC devices. In this case, a user gets access to a wide database of games and enjoys unlimited tracking of hands and head in VR.

In the past few years, there have been some significant advances in consumer virtual reality (VR) devices. Devices such as the Oculus Rift, HTC Vive, Leap Motion™ Controller, and Microsoft Kinect® are bringing immersive VR experiences into the homes of consumers with much lower cost and space requirements than previous generations of VR hardware. These new devices are also lowering the barrier to entry for VR engineering applications. Past research has suggested that there are significant opportunities for using VR during design tasks to improve results and reduce development time. This work reviews the latest generation of VR hardware and reviews research studying VR in the design process. Additionally, this work extracts the major themes from the reviews and discusses how the latest technology and research may affect the engineering design process. We conclude that these new devices have the potential to significantly improve portions of the design process.

As discussed by Steuer, the term virtual reality traditionally referred to a hardware setup consisting of items such as a stereoscopic display, computers, headphones, speakers, and 3D input devices [7]. More recently, the term has been broadly used to describe any program that includes a 3D component, regardless of the hardware they utilize [8]. Given this wide variation, it is pertinent to clarify and scope the term virtual reality.

Steuer also proposes that the definition of VR should not be a black-and-white distinction, since such a binary definition does not allow for comparisons between VR systems [7]. Based on this idea, we consider a VR system in the light of the VR experience it provides. A very basic definition of a VR experience is the replacing of one or more physical senses with virtual senses. A simple example of this is people listening to music on noise-canceling headphones; they have replaced the sounds of the physical world with sounds from the virtual world. This VR experience can be rated on two orthogonal scales of immersivity and fidelity, see Fig. 1. Immersivity refers to how much of the physical world is replaced with the virtual world, while fidelity refers to how realistic the inputs are. Returning to the previous example, this scale would rate the headphones as low–medium immersivity since only the hearing sense is affected, but a high fidelity since the audio matches what we might expect to hear in the physical world.

Various types of hardware are used to provide an immersive, high-fidelity VR experience for users. Given the relative importance the sense of sight has in our interaction with the world, we consider a display system that presents images in such a way that the user perceives them to be 3D (as opposed to seeing a 2D projection of a 3D scene on a common TV or computer screen) in combination with a head tracking system to be the minimum set of requirements for a highly immersive VR experience [1]. This type of hardware was found in almost all VR applications we reviewed, for example, Refs. [1], [3], [6], and [13–22]. This requirement is noted in Fig. 2 as the core capabilities for a VR experience. Usually, some additional features are also included to enhance the experience [7]. These additional features may include motion-capture input, 3D controller input, haptic feedback, voice control input, olfactory displays, gustatory displays, facial tracking, 3D-audio output, and/or audio recording. Figure 2 lists these features as the peripheral capabilities. To understand how core and peripheral capabilities can be used together to create a more compelling experience, consider a VR experience intended to test the ease of a product's assembly. A VR experience with only the core VR capabilities might involve watching an assembly simulation from various angles. However, if haptic feedback and 3D input devices are added to the experience, the experience could now be interactive and the user could attempt to assemble the product themselves in VR while feeling collisions and interferences. On the other hand, adding an olfactory display to produce virtual smells would likely do little to enhance this particular experience. Hence, these peripheral capabilities are optional to a highly immersive VR experience and may be included based on the goals and needs of the experience. Figure 2 lists these core and peripheral capabilities, respectively, in the inner and outer circles.
Devices for providing these various core and peripheral capabilities will be discussed in Secs. 3.1–3.3.

CAVE systems typically consist of two or more large projector screens forming a pseudoroom. The participant also wears a special set of glasses that work with the system to track the participant's head position and also to present separate images to each eye. On the other hand, HMDs are devices that are worn on the user's head and typically use half a screen to present an image to each eye. Due to the close proximity of the screen to the eye, these HMDs also typically include some specialized optics to allow the user's eye to better focus on the screen [10,25]. Sections 3.1.1 and 3.1.2 will discuss each of these displays in more detail.

CAVE technology appears to have been first researched in the Electronic Visualization Lab at the University of Illinois [26]. In its full implementation, the CAVE consists of a room where all four walls, the ceiling, and the floor are projector screens; a special set of glasses that sync with the projectors to provide stereoscopic images; a system to sense and report the location and gaze of the viewer; and a specialized computer to calculate and render the scenes and drive the projectors [4]. When first revealed, CAVE technology was positioned as superior in most aspects to other available stereoscopic displays [27]. Included in these claims were larger field-of-view (FOV), higher visual acuity, and better support for collaboration [27]. While many of these claims were true at the time, HMDs are approaching and rivaling the capabilities of CAVE technology.

The claim about collaboration deserves special consideration. In their paper first introducing CAVE technology, Cruz-Neira et al. state, “One of the most important aspects of visualization is communication. For virtual reality to become an effective and complete visualization tool, it must permit more than one user in the same environment” [27]. CAVE technology is presented as meeting this requirement; however, there are certain caveats that make it less than ideal for many scenarios. The first is occlusion. As people move about the CAVE, they can block each other's view of the screen. In general, this type of occlusion is not a serious issue when parts of the scene are beyond the other participant in virtual space, although it is perhaps inconvenient. However, when the object being occluded is supposed to be between the viewer and someone else (in virtual space), the stereoscopic view collapses along with the usefulness of the simulation [4]. A second issue with collaboration in a CAVE is the issue of distortion. Since only a single viewer is tracked in the classic setup, all other viewers in the CAVE see the stereo image as if they were at that location. However, since two people cannot occupy the same physical space and hence cannot all stand at the same location, all viewers aside from the tracked viewer experience some distortion. The amount of distortion experienced is related to the viewer's distance from the tracked viewer [22]. The proposed solution to this issue is to track all the viewers and calculate stereoscopic images for each person. While this has been shown to work in the two-viewer use case [22], commercial hardware with fast enough refresh rates to handle more than two or three viewers does not yet exist.

As discussed previously, HMDs are a type of VR display that is worn by the user on his or her head. Example HMDs are shown in Fig. 3. These devices typically consist of one or two small flat panel screens placed a few inches from the eyes. The left screen (or left half of the screen) presents an image to the left eye, and the right screen (or right half of the screen) presents an image to the right eye. Because of the difficulty the human eye has with focusing on objects so close, there are typically some optics placed between the screen and eye that allow the eye to focus better. These optics typically introduce some distortion around the edges that is corrected in software by inversely distorting the images so that they appear undistorted through the optics. These same optics also magnify the screen, making the pixels and the space between pixels larger and more apparent to the user. This effect is referred to as the “screen-door” effect [31–33].
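The software correction described above is often implemented as a radial warp: for each output pixel, the renderer samples the undistorted image at a position pushed outward from the lens center by a polynomial in the squared radius. The sketch below illustrates that general idea only. The polynomial form, the coefficient values `k1` and `k2`, and the function name are assumptions for the example, not values taken from any particular headset.

```python
def predistort(x, y, k1=0.22, k2=0.24):
    """Map an output point to the source position to sample.

    Coordinates are normalized, with the lens center at (0, 0).
    k1 and k2 are illustrative radial coefficients, not measured values.
    Sampling farther out toward the edges compresses the image there
    (barrel distortion), which counteracts the pincushion distortion
    introduced by the lens.
    """
    r2 = x * x + y * y
    scale = 1 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


center = predistort(0.0, 0.0)  # the lens center is left unchanged
edge = predistort(1.0, 0.0)    # points near the edge are pushed outward
```

In a real renderer this mapping runs per pixel in a shader or is baked into a distortion mesh, and the coefficients come from calibrating the actual lenses.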

Shortcomings of this type of display can include: incompatibility with corrective eye-wear (although some devices provide adjustments to help mitigate this problem) [34], blurry images due to slow screen-refresh rates and image persistence [35], latency between user movements and screen redraws [36], the fact that the user must generally be tethered to a computer which can reduce the immersivity of a simulation [37], and the hindrance to collocated communication they can cause [20]. The major advantages of this type of display are: its significantly cheaper cost compared to CAVE technology, its ability to be driven by a standard computer, its much smaller space requirements, its ease of setup and take-down (allowing for temporary installations and uses), and its compatibility with many readily available software tools and development environments. Table 1 compares the specifications of several discrete consumer HMDs discussed more fully below.

As discussed in Sec. 3.1.1, the ability to communicate effectively is an important consideration of VR technology. Current iterations of VR HMDs obscure the user's face and especially the eyes. This can create a communication barrier for users who are in close proximity which does not exist in a CAVE as discussed by Smith [20]. It should be noted here that this difference applies only to situations in which the collaborators are in the same room. If the collaborators are in different locations, HMDs and CAVE systems are on equal footing as far as communication is concerned. One method for attempting to solve this issue with HMDs is to instead use AR HMDs which allow you to see your collaborators. Billinghurst et al. have published some research in this area [60,61]. A second method for attempting to solve this issue is to take the entire interaction into VR. Movie producers have used facial recognition and motion capture technology to animate computer-generated imagery characters with the actor's same facial expressions and movements. This same technology could be, and has been, applied to VR to animate a virtual avatar. Li et al. have presented research supported by Oculus that demonstrates using facial capture to animate virtual avatars [62], and HMDs with varying levels of facial tracking have already been announced and demonstrated [50,63].

Oculus Rift CV1: The Oculus Rift Development Kit (DK) 1 was the first of the current generation of HMD devices and promised a renewed hope for a low-cost, high-fidelity VR experience and sparked a new interest in VR research, applications, and consumer experiences. The DK1 was first released in 2012 with the second generation (DK2) being released in 2014, and the first consumer version of the Oculus Rift (CV1) released in early 2016. To track head orientation, the Rift uses a six-degree-of-freedom (DOF) IMU along with an external camera. The camera is mounted facing the user to help improve tracking accuracy. Since these devices are using flat screens with optics to expand the field-of-view (FOV), they do show the screen-door effect, but it becomes less noticeable as resolution increases.

Steam VR/HTC Vive: The Steam Vive HMD is the result of a collaboration between HTC and Steam to develop a VR system directly intended for gaming. The actual HMD is similar in design to Oculus Rift. The difference though is that the HMD is only part of the full system. The system also includes a controller for each hand and two sensor stations that are used to track the absolute position of the HMD and the controllers in a roughly 4.5 m × 4.5 m (15 ft × 15 ft) space. These additional features can make the Steam VR system a good choice when the application requires the user to move around a physical room to explore the virtual world.

Avegant Glyph: The Avegant Glyph is primarily designed to allow the user to experience media such as movies in a personal theater. As such, it includes a set of built-in headphones and an audio only mode where it is worn like a traditional set of headphones. However, built into the headband is a stereoscopic display that can be positioned over the eyes that allows the user to view media on a simulated theater screen. Despite this primary purpose, the Avegant Glyph also supports true VR experiences. The really unique feature is that instead of using a screen like the previously discussed HMDs, the Glyph uses a set of mirrors and miniature projectors to project the image onto a user's retina. This does away with pixels in the traditional sense and allows the Glyph to avoid the screen-door problem that plagues other HMDs. The downside to the Glyph, however, is that it has lower resolution and a much smaller FOV. The Glyph also includes a 9DOF IMU to track head position.

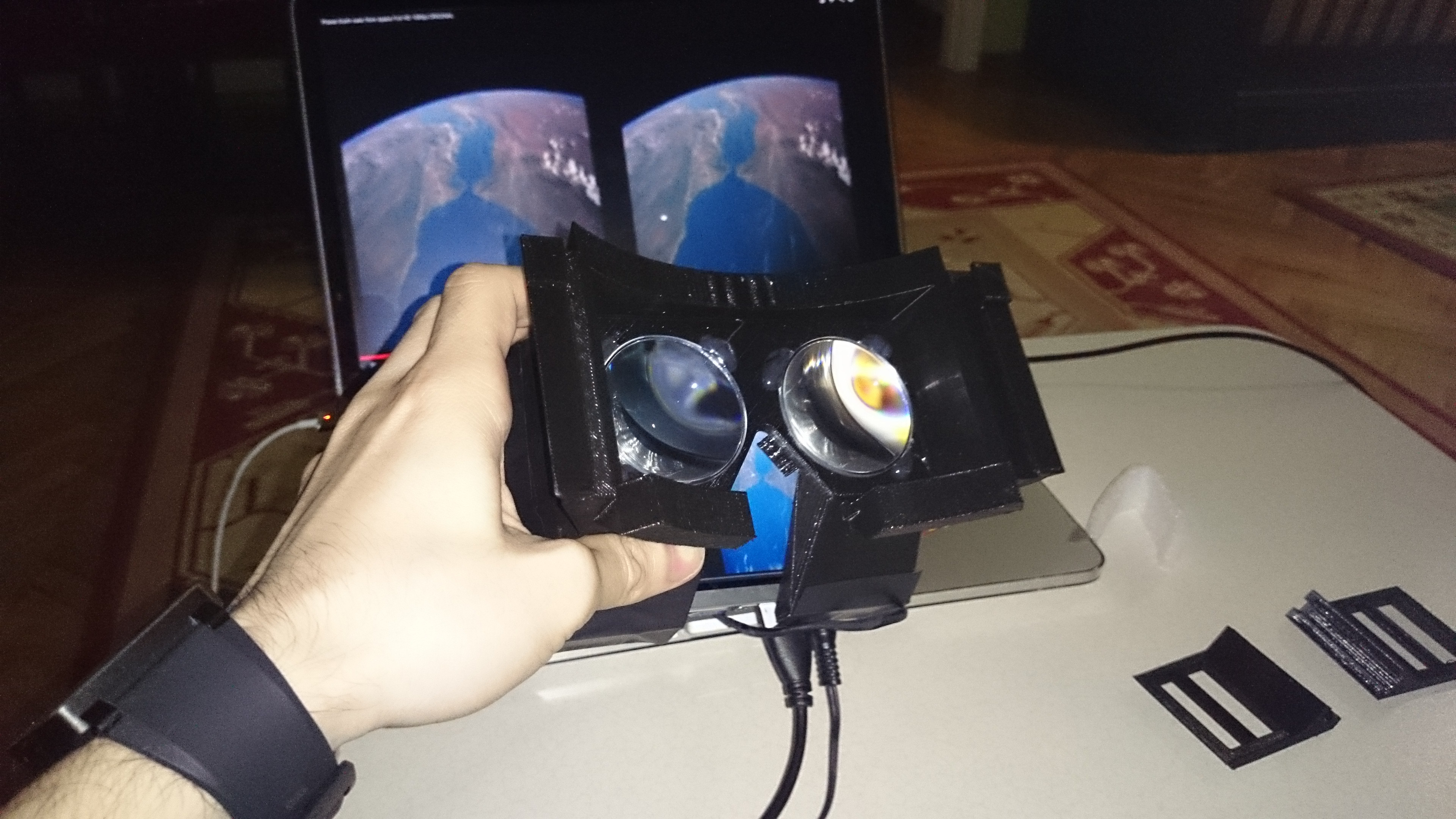

Google Cardboard: Google Cardboard is a different approach to VR than any of the previously discussed devices. Google Cardboard was designed to be as low cost as possible while still allowing people to experience VR. Google Cardboard is folded and fastened together from a cardboard template by the user. Once the cardboard template has been assembled, the user's smartphone is then inserted into the headset and acts as the screen via apps that are specifically designed for Google Cardboard. Since the device is using a smartphone as the display, it can also use any IMU or other sensors built into the phone. The biggest advantage of Google Cardboard is its affordability, since it is only a fraction of the cost of the previously mentioned devices. However, to achieve this low cost, design choices have been made that make this a temporary, prototype-level device not well suited to everyday use. The other interesting feature of this HMD is that, since all processing is done on the phone, no cords are needed to connect the HMD to a computer, allowing for extra mobility.

Samsung Gear VR: Like Google Cardboard, the Samsung Gear VR device is designed to turn a Samsung smartphone (compatible only with certain models) into a VR HMD. The major difference between these two is the cost and quality. The Gear VR is designed by Oculus, and once the phone is attached it is similar to an Oculus Rift. Different from many other HMDs, the Gear VR includes a control pad and buttons built into the side of the HMD that can be used as an interaction/navigation method for the VR application. Also like the Google Cardboard, the Gear VR has no cable to attach to a computer, allowing more freedom of movement.

OSVR Hacker DK2: The Open-Source VR project (OSVR) is an attempt by Razer® to develop a modular HMD that users can modify or upgrade as well as software libraries to accompany the device. The shipping configuration of the OSVR Hacker DK2 is very similar to the Oculus Rift CV1. The notable differences are that OSVR uses a 9DOF IMU, and the optics use a dual lens design and diffusion film to reduce distortion and the screen-door effect.

Others: Along with the HMDs mentioned above, there are several other consumer-grade HMDs suitable for VR that are available now or in the near future. The Sony PlayStation® VR is similar to the Oculus Rift, but driven from a PlayStation gaming console [64]. The Dlodlo Glass H1 is similar to the Samsung Gear VR but is compatible with more than just Samsung phones and includes a built-in low-latency 9-axis IMU [65]. The Dlodlo V1 is somewhat like the Oculus Rift, but designed to look like a pair of glasses for the more fashion-conscious VR users and is also significantly lighter [48]. The FOVE HMD is again similar to the Oculus Rift, but offers eye tracking to provide more engaging VR experiences [50]. The StarVR HMD is similar to the Oculus Rift with the notable difference of a significantly expanded FOV and consequently a larger device [52]. The Vrvana Totem is like the Oculus Rift, but includes built-in pass-through cameras to provide the possibility of AR as well as VR [54]. The Sulon HMD, like the Vrvana Totem, includes cameras for AR, but can also use the cameras for 3D mapping of the user's physical environment [66]. The ImmersiON VRelia Go is similar to the Samsung Gear VR but is compatible with more than just Samsung phones [56]. The visusVR is an interesting cross of the Samsung Gear VR and the Oculus Rift. It uses a smartphone for the screen, but a computer for the actual processing and rendering to provide a fully wireless HMD [57]. The GameFace Labs HMD is another cross between the Samsung Gear VR and the Oculus Rift. However, this HMD has all the processing and power built into the HMD and runs Android OS [58].

Light-field HMDs: In the physical world, humans use a variety of depth cues to gauge object size and location, as discussed by Cruz-Neira et al. [4]. Of the eight cues discussed, only the accommodation cue is not reproducible by current commercial technologies. Accommodation is the term used to describe how our eyes change their shape to be able to focus on an object of interest. Since, with current technologies, users view a flat screen that remains approximately the same distance away, the user’s eyes do not change focus regardless of the distance to the virtual object [4]. Akeley et al. prototyped special displays to produce a directional light field [67]. These light-field displays are designed to support the accommodation depth cue by allowing the eye to focus as if the objects were a realistic distance from the user instead of pictures on a screen inches from the eyes. More recent research by Huang et al. has developed light-field displays that are suitable for use in HMDs [2] (Fig. 3).

Images of various HMDs discussed in Sec. 3.1.2. Top left to right: Oculus Rift, Steam VR/HTC Vive, and Avegant Glyph. Bottom left to right: Google Cardboard, Samsung Gear VR by Oculus, and OSVR HDK. (Images courtesy of Oculus, HTC, Avegant, and OSVR).

Television-based CAVEs: Currently, CAVEs use rear-projection technology. This means that for a standard size 3 m × 3 m × 3 m CAVE, a room approximately 10 m × 10 m × 10 m is needed to house the CAVE and projector equipment [24]. Rooms this size must be custom built for the purpose of housing a CAVE, limiting the available locations for housing it and adding to the cost of installation. To reduce the amount of space needed to house a CAVE, some researchers have been exploring CAVEs built with a matrix of television panels instead [24]. These panel-based CAVEs have the advantage of being able to be deployed in more typically sized spaces.

Cybersickness: Aside from the more obvious historical barriers of cost and space to VR adoption, another challenge is cybersickness [68]. The symptoms of cybersickness are similar to motion sickness, but the root causes of cybersickness are not yet well understood [6]. Symptoms of cybersickness range from headache and sweating to disorientation, vertigo, nausea, and vomiting [69]. Researchers are still identifying the root causes, but it seems to be a combination of technological and physiological causes [70]. In some cases, symptoms can become so acute that participants must discontinue the experience to avoid becoming physically ill. It also appears that the severity of the symptoms can be correlated to characteristics of the VR experience, but no definite system for identifying or measuring these factors has been developed to date [6].

The method of user input must be carefully considered in an interactive VR system. Standard devices such as a keyboard and mouse are difficult to use in a highly immersive VR experience [37]. Given the need for alternative methods of interaction, many different methods and devices have been developed and tested. Past methods include wands [71,72], sensor-gloves [16,73,74], force-balls [75] and joysticks [16,37], voice command [37,76], and marked/markerless IR camera systems [77–80]. More recently, the markerless IR camera systems have been shrunk into consumer products such as the Leap Motion™ Controller and Microsoft Kinect®. Sections 3.2.1 and 3.2.2 will discuss the various devices used to provide input in a virtual environment. We divide the input devices into two categories: those that are primarily intended to digitize human motion, and those that provide other styles of input.

Leap Motion™ Controller: The Leap Motion™ Controller is an IR camera device approximately 2 in × 1 in × 0.5 in that is intended for capturing hand, finger, and wrist motion data. The device is small enough that it can either be set on a desk or table in front of the user or mounted to the front of an HMD. Since the device is camera based, it can only track what it can see. This constraint affects the device’s capabilities in two important ways: First, the view area of the camera is limited to approximately an 8 ft³ (0.23 m³) volume roughly in the shape of a compressed octahedron depicted in Fig. 4. For some applications, this volume is limiting. The second constraint on the device’s capabilities is its loss of tracking capability when its view of the tracked object becomes blocked. This commonly occurs when the fingers are pointed away from the device and the back of the hand blocks the camera’s view. Weichert et al. [86] and Guna et al. [87] have performed analyses of the accuracy of the Leap Motion™ Controller. Taken together, these analyses show the Leap Motion™ Controller is reliable and accurate for tracking static points, and adequate for gesture-based human–computer interaction [87]. However, Guna et al. also note that there were issues with the stability of the tracking from the device [87] which can cause frustration or errors from the users. Thompson notes, however, that the manufacturer frequently updates the software with performance improvements [31], and since these analyses have been performed, major updates have been released.

Microsoft Kinect®: The Microsoft Kinect® is also an IR camera device; however, in contrast to the Leap Motion™ Controller, this device is made for tracking the entire skeleton. In addition to the IR depth camera, the Kinect® has some additional features. It includes a standard color camera which can be used with the IR camera to produce full-color, depth-mapped images. It also includes a three-axis accelerometer that allows the device to sense which direction is down, and hence its current orientation. Finally, it includes a tilt motor for occasional adjustments to the camera tilt from the software. This can be used to optimize the view area. The limitations of the Kinect® are similar to those of the Leap Motion™ Controller: it can only track what it has a clear view of, and it has a limited tracking volume. The tracking volume is approximately a truncated elliptical cone with a horizontal angle of 57 deg and vertical angle of 47 deg [88]. The truncated cone starts at approximately 4 ft from the camera and extends to approximately 11.5 ft from the camera. For skeletal tracking, the Kinect® has further limitations: it can track only two full skeletons at a time, the users must be facing the device, and its supplied libraries cannot track finer details such as fingers. Khoshelham and Elberink [89] and Dutta [90] evaluated the accuracy of the first version of the Kinect® and found it promising but limited. In 2013, Microsoft released an updated Kinect sensor which Wang et al. noted had improved skeletal tracking which would be further improved by use of statistical models [91].
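To make the tracking-volume description concrete, the following minimal sketch tests whether a point lies inside a truncated elliptical cone built from the figures quoted above. It assumes that the stated angles are full opening angles, that the cone apex sits at the camera, and that coordinates are measured in feet with z along the optical axis; the function name and coordinate convention are illustrative and are not part of any Kinect® library.

```python
import math

# Figures quoted in the text for the first-generation Kinect.
H_ANGLE_DEG = 57.0   # full horizontal opening angle (assumed to be the full angle)
V_ANGLE_DEG = 47.0   # full vertical opening angle (assumed to be the full angle)
NEAR_FT = 4.0        # start of the truncated cone
FAR_FT = 11.5        # end of the truncated cone


def in_tracking_volume(x, y, z,
                       h_angle_deg=H_ANGLE_DEG, v_angle_deg=V_ANGLE_DEG,
                       near=NEAR_FT, far=FAR_FT):
    """Return True if the point (x, y, z), in feet, is inside the volume.

    Assumed convention: camera at the origin, z along the optical axis,
    x to the right, y up.
    """
    if not (near <= z <= far):
        return False
    # Semi-axes of the elliptical cross-section at depth z.
    a = z * math.tan(math.radians(h_angle_deg / 2.0))
    b = z * math.tan(math.radians(v_angle_deg / 2.0))
    return (x / a) ** 2 + (y / b) ** 2 <= 1.0


if __name__ == "__main__":
    print(in_tracking_volume(0.0, 0.0, 6.0))   # on the axis, inside the depth range
    print(in_tracking_volume(0.0, 0.0, 3.0))   # closer than the near limit
    print(in_tracking_volume(3.5, 0.0, 6.0))   # too far to the side at this depth
```

A check of this kind could be used, for example, to warn a user who is about to step outside the region in which skeletal tracking is expected to work.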

In contrast to the motion capture devices discussed above, controllers are not primarily intended to capture a user’s body movements; instead, they generally allow the user to interact with the virtual world through some 3D embodiment (like a 3D mouse pointer). Often, the controller supports a variety of actions, much as a standard computer mouse provides both a left click and a right click. A complete treatment of these controllers is outside the scope of this paper, and the reader is referred to Jayaram et al. [37] and Hayward et al. [93] for more discussion on various input devices. Chapter two of Virtual Reality Technology [94] also covers the underlying technologies used in many controllers.

Recently, the companies behind Oculus Rift and Vive have announced variants of the wand style controller that blur the line between controller and motion capture [43,95]. These controllers both track hand position and provide buttons for input. The Vive controllers are especially interesting as they work within Vive’s room-scale tracking system allowing users to move through an approximately 4.5 m × 4.5 m (15 ft × 15 ft) physical space.

Haptic display technology, sometimes referred to as force-feedback, allows a user to “feel” the virtual environment. There are a wide variety of ways this has been achieved. Many times haptic feedback motors are added to the input device used, and thus as the user tries to move the controller through the virtual space, the user will experience resistance when they encounter objects [96]. Other methods include using vibration to provide feedback on surface texture [97] or to indicate collisions [98], physical interaction [98], or to notify the user of certain events such as with modern cell phones and console controllers. Other haptics research has explored tension strings [99], exoskeleton suits [100,101], ultrasonics [101], and even electrical muscle stimulation [102].

In addition to localizing objects by sight and touch, humans also have the ability to localize objects through sound [107]. Some of our ability to localize audio sources can be explained by differences in the time of arrival and level of the signal at our ears [108]. However, when sound sources are directly in front of or behind us, these effects are essentially nonexistent. Even so, we are still generally able to pinpoint these sound sources due to the sound scattering effects of our bodies and particularly our ears. These scattering effects leave a “fingerprint” on the sounds that varies by sound source position and frequency giving our brains additional clues about the location of the source. This fingerprint can be mathematically defined and is termed the head-related transfer function (HRTF) [109].
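The time-of-arrival cue described above can be illustrated with a small numerical sketch. The code below uses Woodworth's spherical-head approximation, ITD = (r/c)(θ + sin θ), which is a textbook model and not a formula taken from the cited references; the head radius and speed of sound are assumed typical values. Because a spherical head cannot distinguish a source at azimuth θ from one at 180° − θ, the sketch also shows why front and back sources produce essentially the same (near-zero) difference, leaving the HRTF to resolve them.

```python
import math

HEAD_RADIUS_M = 0.0875     # assumed typical adult head radius
SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound in air at room temperature


def interaural_time_difference(azimuth_deg,
                               head_radius=HEAD_RADIUS_M,
                               speed_of_sound=SPEED_OF_SOUND_M_S):
    """Approximate ITD in seconds for a far-field source (Woodworth model).

    azimuth_deg is measured clockwise from straight ahead. Rear sources are
    folded onto the equivalent front angle, since a spherical head gives the
    same ITD for azimuths theta and 180 - theta.
    """
    # Fold any azimuth to a lateral angle in [-90, 90] degrees.
    theta = math.asin(math.sin(math.radians(azimuth_deg)))
    return head_radius / speed_of_sound * (theta + math.sin(theta))


if __name__ == "__main__":
    for az in (0, 30, 90, 150, 180):
        itd_us = interaural_time_difference(az) * 1e6
        print(f"azimuth {az:3d} deg: ITD = {itd_us:7.1f} microseconds")
```

Under these assumptions, a source directly to one side yields a difference of roughly 0.66 ms, while sources straight ahead and straight behind both yield approximately zero.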

One option for recreating virtual sounds is to use a surround-sound speaker system. This style of sound system uses multiple speakers distributed around the physical space. With this type of system, the virtual sounds would be played from the speaker(s) that best approximate the location of the virtual source. Since the sound is being produced external to the user, all cues for sound localization would be produced naturally. However, when this system was implemented in early CAVE environments, it was found that sound localization was compromised by reflections (echoes) off the projector screens (walls) [4].
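As a rough illustration of choosing the speaker that best approximates a virtual source, the sketch below picks the speaker whose direction is angularly closest to the source as seen from the listener. The five-speaker layout, the coordinate convention, and the function names are hypothetical; practical systems typically blend several speakers rather than selecting only one.

```python
import math

# Hypothetical horizontal layout: azimuth in degrees, clockwise from straight ahead.
SPEAKERS = {
    "center": 0.0,
    "front_left": -30.0,
    "front_right": 30.0,
    "rear_left": -110.0,
    "rear_right": 110.0,
}


def angular_distance(a_deg, b_deg):
    """Smallest absolute angle between two azimuths, in degrees."""
    d = (a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)


def nearest_speaker(source_xy, speakers=SPEAKERS):
    """Return the name of the speaker closest in direction to the source.

    Assumed convention: listener at the origin, +y straight ahead, +x to the right.
    """
    x, y = source_xy
    azimuth = math.degrees(math.atan2(x, y))
    return min(speakers, key=lambda name: angular_distance(azimuth, speakers[name]))


if __name__ == "__main__":
    print(nearest_speaker((0.0, 1.0)))    # source straight ahead
    print(nearest_speaker((1.0, 0.0)))    # source directly to the right
    print(nearest_speaker((-1.0, -1.0)))  # source behind and to the left
```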

While the senses of taste and smell have not received the same amount of research attention as have sight, touch, and audio, a patent granted to Morton Heilig in 1962 describes a mechanical device for creating a VR experience that engaged the senses of sight, sound, touch, and smell [116]. In more recent years, prototype olfactory displays have been developed by Matsukura et al. [117] and Ariyakul and Nakamoto [118]. In experiments with olfactory displays, Bordegoni and Carulli showed that an olfactory display could be used to improve the level of presence that a user perceives in a VR experience [119]. Additionally, Miyaura et al. suggest that olfactory displays could be used to improve concentration levels [120]. The olfactory displays discussed here generally work by storing a liquid smell and aerosolizing the liquid on command. Some additionally contain a fan or similar device to help direct the smell to the user’s nose. Taste has had even less research than smell; however, research by Narumi et al. showed that by combining a visual AR display with an olfactory display they were able to change the perceived taste of a cookie between chocolate, almond, strawberry, maple, and lemon [121].

Although different perspectives, domains, and industries may use different terminology, the engineering design process will typically include steps or stages called opportunity development, ideation, and conceptual design, followed by preliminary and detailed design phases [122]. Often the overall design process will include analysis after, during, or mixed in with, the various design stages followed by manufacturing, implementation, operations, and even retirement or disposal [123]. Furthermore, the particular application of a design process takes on various frameworks, such as the classical “waterfall” approach [124], “spiral” model [125], or “Vee” model [126], among others [127]. Each model has its own role in clarifying the design stages and guiding the engineers, designers, and other individuals within the process to realize the end product. As designs become more integrated and complex, the individuals traditionally assigned to the different stages or roles in the design process are required to collaborate in new ways and often concurrently. This, in turn, increases the need for design and communication tools that can meet the requirements of the ever-advancing design process.

By leveraging the power of virtual reality, designers could almost literally step into the shoes of those they are designing for and experience the world through their eyes. A simple application that employs only VR displays and VR videos filmed from the perspective of end users could be sufficient to allow designers to better understand the perspective of those for whom they are designing. Employing haptics and/or advanced controllers also has great potential to enhance the experience. The addition of advanced controllers that allow the designer to control a first person avatar in a more natural way improves immersion and the illusion of ownership over a virtual body [134]. Beyond this, the use of advanced controllers would allow the designer to have basic interactions with a virtual environment using an avatar that represents a specific population such as young children or elderly persons. The anatomy and abilities of the virtual avatar and environment can be manipulated to simulate these conditions while maintaining a strong illusion of ownership [135–137], thereby giving designers a powerful tool to develop empathy. As in most applications involving human–computer interaction, employing haptics would allow for more powerful interactions with the virtual environment and could also likely be used to better simulate many conditions and scenarios. This technology would have the potential to simulate a wide range of user conditions including physical disabilities, human variability, and cultural differences. Beyond this, designers could conceivably simulate situations or environments such as zero gravity that would be impossible or impractical for them to experience otherwise.

Performing geometric computer-aided drafting (CAD) design in a virtual environment has the potential to make 3D modeling both more effective and more intuitive for new and experienced users alike. Understanding 3D objects represented on a 2D interface requires enhanced spatial reasoning [146]. Conversely, visualizing 3D models in virtual reality makes them considerably easier to understand and is less demanding in terms of spatial reasoning skills [147], and would significantly reduce the learning curve required by 3D modeling applications. By the same reasoning, using virtual reality for model demonstrations to non-CAD users such as management and clients could dramatically increase the effectiveness of such meetings. It should also be noted that there are many user-interface related challenges to creating an effective VR CAD system that may be alleviated by the use of advanced controllers in addition to a VR display.

A considerable quantity of research has been and continues to be conducted in the realm of virtual reality CAD. A 1997 paper by Volkswagen describes various methods that were implemented for CAD data visualization and manipulation, including the integration of the CAD geometry kernel ACIS with VR, allowing for basic operations directly on the native CAD data [148]. A similar kernel-based VR modeler was implemented by Fiorentino et al. in 2002 [149] called SpaceDesign, intended for freeform curve and surface modeling for industrial design applications. Krause et al. developed a system for conceptual design in virtual reality that uses advanced controllers to simulate clay modeling in virtual reality [150]. In 2012 and 2013, De Araujo et al. developed and proved a system which provides users with a stereoscopically rendered CAD environment that supports input both on and above a surface. Preliminary user testing of this environment shows favorable results for this interaction scheme [151,152]. Other researchers have further expanded this field by leveraging haptics in order to allow designers to physically interact with and feel aspects of their design. In 2010, Bordegoni implemented a system based on a haptic strip that conforms to a curve, thereby allowing the designer to feel and analyze critical aspects of a design by physically touching them [153]. Kim et al. also showed that haptics can be used to improve upon traditional modeling workflows by using haptically guided selection intent or freeform modeling based on material properties that the user can feel [154].

Much of the past research in this area was limited in application due to the high costs of the VR systems of the 1990s and 2000s. The recent advent of high-quality, low-cost VR technology opens the door for VR CAD to be used in everyday settings by engineers and designers. A recent study that uses an Oculus Rift and the Unity game development engine to visualize engineering models demonstrates the feasibility of such applications [155]; however, research in VR CAD needs to expand into this area in order to make the use of low-cost VR technology a reality for day-to-day design tasks.

Significant progress has been made in this field in the last 25 years, and researchers have explored a range of applications, from simple 3D viewers to haptically enabled environments that provide new ways of exploring the data. A few early studies proved that VR could be used to simulate a wind tunnel while viewing computational fluid dynamics (CFD) results [157,158]. Bruno et al. also showed that similar techniques can be used to overlay and view analysis results on physical objects using augmented reality [159]. In 2009, Fiorentino et al. expanded on this by creating an augmented reality system that allowed users to deform a physical cantilever beam and see the stress/strain distribution overlaid on the deformed beam in real-time [160,161]. A 2007 study details the methodology and implementation of a VR analysis tool for finite element models that allows users to view and interact with finite element analysis (FEA) results [162]. Another study uses neural nets for surrogate modeling to explore deformation changes in an FEA model in real-time [163]. Similar research from Iowa State University uses NURBS-based freeform deformation, sensitivity analysis, and collision detection to create an interactive environment to view and modify geometry and evaluate stresses in a tractor arm. Ryken and Vance applied the system developed to an industry problem and found that the system allowed the designer to discover a unique solution to the problem [164].

Significant research has also been performed in applying haptic devices and techniques to enhance interaction with results from various types of engineering analyses. Several studies have shown that simple haptic systems can be used to interact with CFD data and provide feedback to the user based on the force gradients [165,166]. Ferrise et al. developed a haptic finite element analysis environment to enhance the learning of mechanical behavior of materials that allows users to feel how different structures behave in real-time. They also showed that learners using their system were able to understand the principles significantly faster and with fewer errors [167,168]. In 2006, Kim et al. developed a similar system that allows users to explore a limited structural model using high degree-of-freedom haptics [154].

The notion of using virtual reality as a platform for raw data visualization has been a topic of interest since the early days of VR. Research has shown that virtual reality significantly enhances spatial understanding of 3D data [169]. Furthermore, just as it is possible to visualize 3D data in 2D, virtual reality can make interfacing with higher-dimensional data more meaningful. A 1999 study out of Iowa State shows that VR provides significant advantages over 2D displays for viewing higher dimensionality data [170]. A more recent study found that virtual reality provides a platform for viewing higher-dimensional data and gives “better perception of datascape geometry, more intuitive data understanding, and a better retention of the perceived relationships in the data” [171]. Similar to how analysis results can be explored in virtual reality, haptics and advanced controllers can be used to explore the data in novel ways [147,172]. Brooks et al. also proved this in 1990 by creating a system that allows users to explore molecular geometry and their associated force fields that allowed chemists to better understand receptor sites for new drugs [173].

Design reviews are a highly valued step in the design process. Many of the vital decisions that decide the final outcome of a product are made in a design review setting. For this reason, they have been and continue to be an attractive application for virtual reality in the design process and are one of the most common applications of VR to engineering design [174]. Two particularly compelling ways in which virtual reality can enhance design reviews are by introducing the possibility for improved communication paradigms for distributed teams and enhanced engineering data visualization. In this way, most VR design review applications are extensions of collaborative virtual environments (CVEs). CVEs are distributed virtual systems that offer a “graphically realised, potentially infinite, digital landscape” within which “individuals can share information through interaction with each other and through individual and collaborative interaction with data representations” [175].

A number of different architectures have been suggested for improving collaboration through virtual design reviews [174,176,177]. Beyond this, various parties have researched many of the issues surrounding virtual design reviews. A system developed in the late 1990s called MASSIVE allows distributed users to interact with digital representations (avatars) of each other in a virtual environment [178,179]. A joint project between the National Center for Supercomputing Applications, the German National Research Center for Information Technology, and Caterpillar produced a VR design review system that allows distributed team members to meet and view virtual prototypes [180]. A later project in 2001 also allows users to view engineering models while also representing distributed team members with avatars [181].

It should be noted that considerable effort has also been expended in exploring the potential for leveraging virtual and augmented reality technology to enhance design reviews for collocated teams. In 1998, Szalavári et al. developed an augmented reality system for collocated design meetings that allows users to view a shared model and individually control the view of the model as well as different data layers [182]. A more recent study in 2013 compares the immersivity and effectiveness of two different CAVE systems for virtual architectural design reviews [183]. Other research has examined the use of CAVE systems for collocated virtual design reviews [183]. In 2007, Santos et al. further opened this space by proposing and validating an application for design reviews that can be carried out across multiple VR and AR devices [174]. Yet other research has shown that multiple design tools, such as interactive structural analysis, can be integrated directly into the design review environment [161].

Aesthetic evaluation: Because virtual reality enables both stereoscopic viewing of 3D models and an immersive environment in which to view them, using VRPs can provide a much more realistic and effective platform for aesthetic evaluation of a design. Not only does VR allow models to be rendered in 3D but they can also be viewed in a virtual environment that is similar to one in which the product would be used, thereby giving better context to the model. Furthermore, VR can enable users to view the model at whatever scale is most beneficial, whether it be viewing small models at a large scale to inspect details, or viewing large models at a one-to-one scale for increased realism. Research at General Motors has found that viewing 3D models of car bodies and interiors at full scale provides a more accurate understanding of the car’s true shape than looking at small-scale physical prototypes [20]. Another study at Volvo showed that using VR to view car bodies at full scale was a more effective method for evaluating the aesthetic impact of body panel tolerances than using traditional viewing methods [187].

Research in this field has ranged from early systems that used positional constraints to verify assembly plans [197] and VR-based training for manufacturing equipment [198] to the exploration of integrated design, manufacturing and assembly in a virtual environment [199], and haptically enabled virtual assembly environments [200].

As systems grow larger, more complex, and more expensive, maintainability becomes a serious concern, and design for maintainability becomes more and more difficult [205]. One of the issues that exacerbates this difficulty is that serious analysis of the maintainability of a design cannot be performed until high-fidelity prototypes have been created [206]. One way in which designers have attempted to address this issue is through simulated maintenance verification using CAD tools [207]. This approach, however, is limited by the considerable time required to perform the analysis, and the lack of fidelity when using simulated human models.

For years, CAVE systems have been considered the gold standard for VR applications. However, because of the capital investment required to build and maintain a CAVE installation, companies rarely have more than one CAVE, if any. This significantly limits access to these systems, and their use must be prioritized for only select activities. This situation could be considered analogous to the mainframe computers of the 1960s and 1970s. While these mainframes improved the engineering design process and enabled new and improved designs, it was not until the advent of the personal computer (PC) that computing was able to impact day-to-day engineering activities and make previously unimagined applications commonplace. In a similar fashion, we suggest that this new generation of low-cost, high-quality VR technology has the potential to bring the power of VR to day-to-day engineering activities. As with PCs, we can expect initial implementations and applications to be somewhat crude and unwieldy while the technology continues to grow and better practices emerge, but the ultimate impact is likely limited only by the imagination of engineers and developers.

The authors were supported in part by the National Science Foundation, Award No. 1067940 “IUCRC BYU v-CAx Research Site for the Center for e-Design I/UCRC”.

“How to Wear an Oculus Rift and HTC Vive With Glasses,” CNET, San Francisco, CA, accessed July 15, 2016, http://www.cnet.com/how-to/how-to-wear-an-oculus-rift-and-htc-vive-with-glasses/

“Palmer Luckey and Nate Mitchell Interview: Low Persistence ‘Fundamentally Changes the Oculus Rift Experience’,” Road to VR, accessed July 15, 2016, http://www.roadtovr.com/ces-2014-oculus-rift-crystal-cove-prototype-palmer-luckey-nate-mitchell-low-persistence-positional-tracking-interview-video/

“John Carmack’s Delivers Some Home Truths on Latency,” Oculus VR, Menlo Park, CA, accessed July 15, 2016, http://oculusrift-blog.com/john-carmacks-message-of-latency/682/

“Spec Comparison: The Rift Is Less Expensive Than the Vive, But Is It a Better Value?,” Digital Trends, Portland, OR, accessed June 22, 2016, https://www.f3nws.com/news/spec-comparison-does-the-rift-s-touch-update-make-it-a-true-vive-competitor-GW2mJJ

“The Ars VR Headset Showdown–Oculus Rift vs. HTC Vive,” Ars Technica, New York, accessed June 22, 2016, http://arstechnica.com/gaming/2016/04/the-ars-vr-headset-showdown-oculus-rift-vs-htc-vive/

“StarVR Specs Make the Oculus Rift Look Like a Kid’s Toy,” Digital Trends, Portland, OR, accessed July 15, 2016, http://www.digitaltrends.com/computing/starvr-vr-headset-5120-1440-resolution/

“Hands On: Sulon Cortex Review,” Future US, Inc., San Francisco, CA, accessed July 15, 2016, http://www.techradar.com/reviews/gaming/sulon-cortex-1288470/review

“Hands-On With GameFace Labs’ VR Headset,” IGN Entertainment, San Francisco, CA, accessed July 15, 2016, http://www.ign.com/articles/2015/07/01/hands-on-with-gameface-labs-vr-headset

“Veeso Wants to Share Your Smiles and Eye-Rolls in VR,” TechCrunch, San Francisco, CA, accessed July 15, 2016, https://techcrunch.com/2016/07/25/veeso-wants-to-share-your-smiles-and-eye-rolls-in-vr/

“Kinect for Windows SDK 1.8: Skeletal Tracking,” Microsoft, Redmond, WA, accessed July 15, 2016, https://msdn.microsoft.com/en-us/library/hh973074.aspx

“Storytelling and Repetitive Narratives for Design Empathy: Case Suomenlinna,” Nordes Nordic Design Research Conference, Stockholm, Sweden, May 27–30, Vol.

There are lots of reasons that someone might want a head-mounted display. Camera operators and radio-controlled vehicle enthusiasts typically like these because they keep the sun off of their screen while working outdoors. Aside from those practical purposes, strapping a high-definition display to your head is just cool. Add some motion sensors to that and you’ve got a homemade Oculus Rift Virtual Reality display!

The electronics involved are fairly simple, consisting of a screen, a 9-DOF IMU board, and an Arduino. You can find the schematics and code on their site.

Oculus Rift is a discontinued line of virtual reality headsets developed and manufactured by Oculus VR, a division of Meta Platforms, released on March 28, 2016.

In 2012, Oculus initiated a Kickstarter campaign to fund the Rift’s development, after being founded as an independent company two months prior. The project proved successful, raising almost US$2.5 million from around 10,000 contributors. The company was later acquired by Facebook for $2 billion.

The Rift went through various pre-production models since the Kickstarter campaign, around fi
