set custom lcd panel text on poweredge r720 manufacturer

I haven't found a complete reference of Dell's proprietary IPMI commands, but according to the documentation I found here, the first invocation of ipmitool puts the supplied string into one of the display's registers, and the second one flips the display buffer to actually show it.
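As a rough sketch of that two-step sequence, the helper below builds (but does not run) the two ipmitool invocations. The raw bytes (`0x6 0x58` with selectors 193 and 194) are the values commonly circulated for older PowerEdge LCD panels and are an assumption here, not something confirmed by the text above. The function name `lcd_text_commands` and the `max_len` limit are hypothetical as well.

```python
def lcd_text_commands(text, max_len=14):
    """Return (load, show) argument lists for two ipmitool calls.

    Assumption: selector 193 loads the string into an LCD register and
    selector 194 switches the panel to show it. Check this against your
    own hardware documentation before running anything.
    max_len is a placeholder limit, not a documented value.
    """
    data = text.encode("ascii")  # raises UnicodeEncodeError on non-ASCII text
    if not 0 < len(data) <= max_len:
        raise ValueError(f"text must be 1..{max_len} ASCII characters")

    hex_bytes = [f"0x{b:02x}" for b in data]
    # Step 1: put the supplied string into the display register.
    load = ["ipmitool", "raw", "0x6", "0x58", "193",
            "0x0", "0x0", f"0x{len(data):x}", *hex_bytes]
    # Step 2: flip the display buffer so the string is actually shown.
    show = ["ipmitool", "raw", "0x6", "0x58", "194", "0x0"]
    return load, show


if __name__ == "__main__":
    for cmd in lcd_text_commands("HELLO"):
        print(" ".join(cmd))
```

Each list could then be passed to `subprocess.run` on a host where ipmitool can reach the BMC; printing the commands first makes it easy to review them before touching the hardware.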

I recently bought a pair of these servers to take over VMware duties from a pair of HP ProLiant DL380 G5 servers. Having had a few bad Dell experiences years ago I had stopped buying PowerEdge machines as I considered their design to be inferior (think PE1850) but I’m pleasantly surprised by these R710 machines.

In the server’s own BIOS options there is a Custom LCD field but entering text here and restarting doesn’t change the panel – it still just shows the Service Tag. Strangely, the iDRAC BIOS doesn’t offer you any control here at all, it just lists what the custom string currently is.

To make matters worse, I had accidentally got the desired result on one of the servers, but couldn’t get the second one configured. The answer lies with the buttons next to the LCD. Though you can view IP settings, temperature, power usage, etc., there is also a Setup option. With 48GB of RAM, each POST of the machine takes about 5 minutes, so I had been too cautious to mess about with these options in case I undid some of my initial iDRAC config. I assumed that they would only provide a subset of the BIOS options. Wrong! You need to use the panel – even the iDRAC WebUI doesn’t seem to configure the LCD screen.

You can change it in BIOS Setup (F2) by going to Embedded Server Management and setting Front-Panel LCD Options to User-Defined String, then going to User-Defined LCD String to set the string.

You can also change it from the OS using OMSA (OpenManage Server Administrator) by going to System, Main System Chassis, Front Panel (sub tab), and setting LCD Line to Custom.

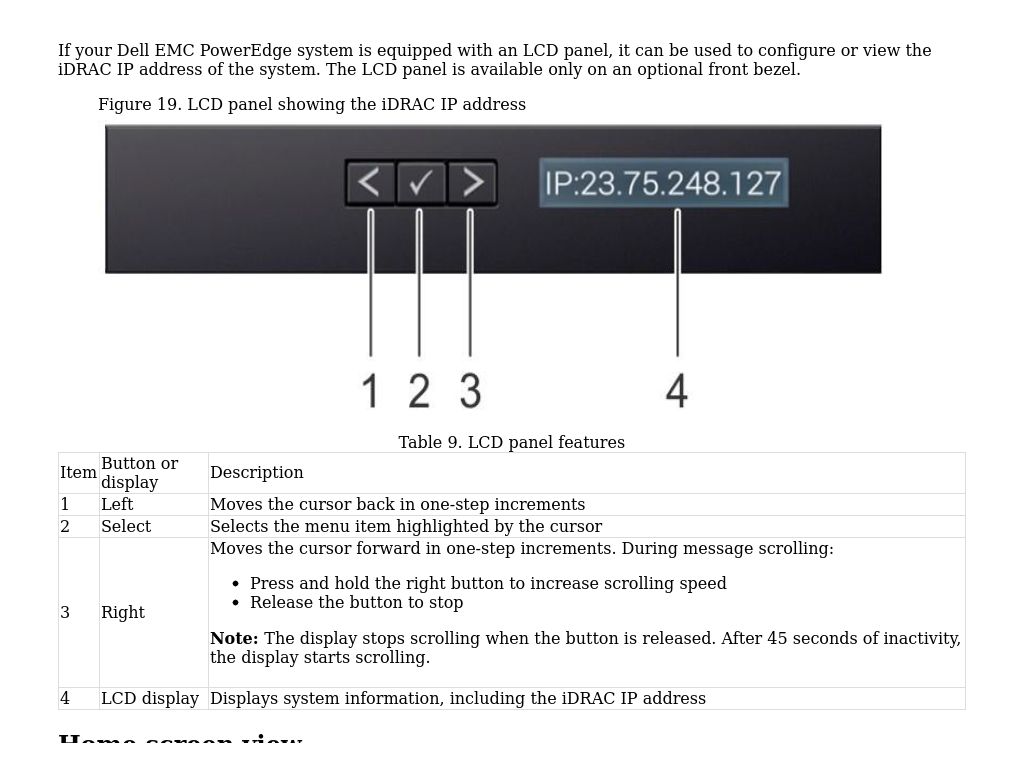

If your Dell EMC PowerEdge system is equipped with an LCD panel, it can be used to configure or view the iDRAC IP address of the system. The LCD panel is available only on an optional front bezel.

From the Setup menu, use the iDRAC option to select DHCP or Static IP to configure the network mode. If Static IP is selected, the following fields are available:

The Dell PowerEdge R720 12th Generation is a 2-socket, 2U server that features the Intel Xeon E5-2600 processor family and supports up to 768GB of DDR3 memory. Dell offers the R720 in various backplane configurations with up to 16 2.5-inch internal hard drives or 8 3.5-inch drives. A new optional feature, designed to take the performance compute server market by storm, is a set of four hot-plug, front-access 2.5-inch Express Flash PCIe SSDs geared for high throughput and very low latency. The PowerEdge R720’s Express Flash connectivity makes it unique among servers of its class, and is one of the reasons we have added two R720 units to the lab.

It’s not just storage technology itself that is a moving target – other critical components of enterprise storage infrastructure like interconnects and compute platforms are also continually evolving. Compute servers are not our primary focus, but performance and scalability differences between similarly-spec’d servers from different manufacturers or different generations from the same manufacturer can have important consequences for storage performance. It is also important to understand how factors like chassis construction and layout will affect long-term routine maintenance of a server.

StorageReview’s two new PowerEdge R720 servers feature Xeon E5-2640 2.50GHz processors with 15M cache and 7 PCIe slots to power real-world enterprise testing environments as well as see how storage devices perform when used in conjunction with compute servers from various manufacturers. One of our R720 servers is configured with 8 2.5-inch SFF internal drive bays and 4 front-accessible Express Flash bays. The other R720 features 16 2.5-inch SFF internal drive bays. Our review will focus on the PowerEdge R720 with Express Flash, noting key differences between the two when appropriate. The PowerEdge R720 is also available with a chassis configured for 8 3.5-inch LFF drives.

Availability: High-efficiency, hot-plug, redundant power supplies; hot-plug drive bays; TPM; dual internal SD support; hot-plug redundant fan; optional bezel; luggage-tag; ECC memory, interactive LCD screen; extended thermal support; ENERGY STAR® compliant, extended power range; switch agnostic partitioning (SWAP)

The most notable option offered by the PowerEdge R720 is a chassis that supports up to four front-access 2.5-inch PCIe Express Flash drives. The PowerEdge R720 uses a x16 PCIe breakout board for Express Flash connectivity, each drive requiring four lanes. Express Flash storage can be configured as cache or as a primary storage, offering lower latency and much greater performance than an SSD connected via SAS or SATA. Express Flash drives supplied in our R720 are 2.5-inch Micron P320h models, which use 34nm SLC NAND and are engineered for write-heavy applications. Dell warrants the lifetime of Express Flash drives in terms of bytes written; current 175GB and 350GB models offer 12.5 and 25 petabytes of drive writes, respectively. Dell software management applications can be configured to notify the server administrator when these wear limits are nearing. Our Express Flash R720 shipped with four 350GB drives.
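The lane budget and endurance figures above can be checked with a few lines of arithmetic. This is only a sketch: the function names are made up for illustration, and decimal units (1 PB = 10^15 bytes, 1 GB = 10^9 bytes) are assumed.

```python
def lanes_needed(drives, lanes_per_drive=4):
    """PCIe lanes consumed by a set of Express Flash drives."""
    return drives * lanes_per_drive


def full_drive_writes(endurance_pb, capacity_gb):
    """How many times the whole drive can be rewritten within the warranty.

    Assumes decimal units: 1 PB = 1,000,000 GB.
    """
    return endurance_pb * 1_000_000 / capacity_gb


if __name__ == "__main__":
    # Four x4 drives fill the x16 breakout board exactly.
    print(lanes_needed(4))
    # Both capacities work out to the same number of full-drive writes.
    print(round(full_drive_writes(12.5, 175)))
    print(round(full_drive_writes(25, 350)))
```

Under those assumptions, both the 175GB and 350GB models are rated for the same number of complete drive rewrites, which suggests the warranty scales linearly with capacity.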

Express Flash PCIe SSDs support orderly insertion, when a drive is added to a running system in a bay where an Express Flash drive has not been previously inserted since booting. Express Flash also supports orderly removal, where the system is notified prior to drive removal, and orderly swap, when a drive can be replaced with prior system notification. PowerEdge R720 servers also support Dell’s CacheCade technology, which provides automated storage tiering on SSDs when using PERC H810 and H710P controllers. The R720 can employ redundant failsafe hypervisors, and can be used as part of Dell’s Virtual Integrated System (VIS) solution.

The PowerEdge R720 supports Dell’s Select Network Adapters daughter cards to house the server’s LOM subsystem without requiring a PCIe slot. Network connectivity options for the R720 include 1000 Base-T, 10Gb Base-T, and 10Gb SFP+ interfaces from Intel and Broadcom. It can be configured to operate with a single 495W, 750W, or 1100W AC power supply module, or can be equipped with a redundant power supply. As a top-tier option, Dell offers an 1100W DC power supply for the PowerEdge R720. In the default redundant configuration, power is supplied equally from both supplies, but may be reconfigured via iDRAC to enable a hot spare feature which switches one power supply to sleep unless needed.

The R720 can support up to four passively-cooled graphics processing units (GPUs) to accelerate virtual desktop infrastructure and high performance computing applications. Breaking it down by power capabilities, the R720 can support two 300W, full-length, double-wide internal GPUs or up to four 150W, full-length, single-wide GPUs. Each GPU can support up to 6GB of dedicated GDDR5 memory. Actively-cooled GPU cards are not supported as they interfere with and are not designed for forced-air cooling inside a server. The R720 can also connect to PowerEdge C410x external GPUs through a host interface card (HIC) with an iPass cable. Both the NVIDIA and Dell x16 HICs require the R720’s single x16 PCIe slot to support up to four external GPUs.

As with StorageReview’s Gen8 HP ProLiant DL380p, the R720 supports up to 768GB of memory across 24 DIMMs when equipped with dual-processors. Each processor has 4 memory channels, each channel supporting up to 3 DIMMs. In addition to supporting unbuffered DIMMs (UDIMMs) and registered DIMMs (RDIMMs), the R720 supports load reduced DIMMs (LRDIMMs). In our configurations Dell supplied 24 8GB RDIMMs to populate all memory channels inside the R720 providing 192GB of system memory.
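The memory totals follow directly from the slot topology. A minimal sketch is below; the constant and function names are illustrative, and the 32GB DIMM size is inferred from 768GB / 24 slots rather than stated in the text.

```python
PROCESSORS = 2
CHANNELS_PER_PROCESSOR = 4
DIMMS_PER_CHANNEL = 3


def dimm_slots(processors=PROCESSORS):
    """Number of DIMM slots available for a given processor count."""
    return processors * CHANNELS_PER_PROCESSOR * DIMMS_PER_CHANNEL


def total_memory_gb(dimm_gb, processors=PROCESSORS):
    """Total memory when every slot holds a DIMM of the same size."""
    return dimm_slots(processors) * dimm_gb


if __name__ == "__main__":
    print(dimm_slots())          # slots with both processors installed
    print(total_memory_gb(8))    # the review configuration
    print(total_memory_gb(32))   # the quoted platform maximum
```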

12th generation PowerEdge servers are part of Dell’s OpenManage platform, built around the Integrated Dell Remote Access Controller 7 (iDRAC7) with Lifecycle Controller, which provides agent-based and agent-free management. To integrate with agent-based solutions, Dell provides OpenManage Server Administrator (OMSA), which offers one-to-one systems management through a CLI or Web-based GUI. iDRAC7 can also provide remote access to the system whether or not there is an operating system installed. Through the iDRAC7 interface, users can quickly learn vital system information in many categories including storage, thermals, power and others. On first login, iDRAC7 presents the user with health stats in all categories, as well as a preview of the iKVM.

Drilling down into the storage category, users can view information on the current disk configuration, as well as individual drive stats. Shown below is the RAID10 disk array we configured utilizing eight 300GB Seagate Cheetah 15K.3 enterprise hard drives connected through the Dell PERC H710p on-board RAID controller. Through this screen users would be able to quickly find out information about a drive failure remotely as well as narrow it down to which slot has the defective drive.

For remote system access where Remote Desktop or SSH might not be feasible, iDRAC7 includes a virtual console that gives users access to the system through a standard web browser with Java. This window also has useful features for remotely triggering power controls to restart a frozen system or even turn on a system without local access. Users can also mount local media to be accessible by the remote system, or map ISOs to quickly provision systems over the network.

The PowerEdge R720 debuts a new PowerEdge chassis design intended to support greater scalability compared to 11th generation PowerEdge servers, with an increased number of DIMMs, PCIe slots, and hard drives. Also unique to the 12th generation R720 are the four Express Flash slots, which currently support 175GB and 350GB Micron RealSSD P320h PCIe SSDs. When we reviewed the HHHL Micron P320h last year, we found it to offer class-leading performance.

The front of the server features a power button and indicator, a recessed non-maskable interrupt (NMI) button to troubleshoot software and device driver errors, two USB connectors, Dell’s vFlash SD media reader (activated with iDRAC7), a video connector, and a simple LCD control panel interface for local management. The vFlash media SD card reader is used for configuration, scripts, imaging, and other local management tasks. Dell’s 12th generation PowerEdge servers feature a model-specific QR code that links to video overviews of system internals and externals, task-oriented videos and installation wizards, reference materials, LCD diagnostics, and an electrical overview. This code appears in several places on the chassis.

The rear of the unit offers access to up to two hot-plug power supplies and associated indicators, network connectivity via Dell’s Select Network Adapter family, two USB ports, iDRAC7 Enterprise port, video connector, and serial connector. Also visible are the available PCIe slots, rear carrying handle, as well as the two redundant 1,100 watt power supplies.

The R720 supports ReadyRails II sliding rails for tool-less mounting in 4-post racks with square or unthreaded round holes, or tooled mounting in 4-post threaded hole racks. The R720 is also compatible with ReadyRails static rails for tool-less mounting in 4-post racks with square or unthreaded round holes or tooled mounting in 4-post threaded as well as 2-post Telco racks.

For improved cable management, Dell offers a toolless cable management arm compatible with the PowerEdge R720. In our lab evaluation, the sliding ReadyRails II were quick to clip into position in our Eaton S-Series Rack, and offered a secure fit with minimal slack.

To install the R720 in the rails, users hold the server by the front and the rear carrying handle and carefully lower it into the extended rails while aligning the mounting pins with their appropriate slots. We found this process to be very intuitive and easy to nail on the first try, which quickly sped up the time required to get the server into production status.

The PowerEdge R720 supports hot-swappable cooling fans in an N+1 configuration, allowing a technician to replace any one fan at a time. Supporting newer environmental conditions, the R720 also incorporates Dell’s Fresh Air cooling design, allowing the server to operate above 35°C/95°F to reduce power consumption and related cooling expenses. This is also beneficial should a user want to deploy the R720 outside of traditional datacenter environments, where temperatures may be more variable.

When it comes to servicing components, the R720 can be operated temporarily without one of its cooling fans, allowing hot-swap replacement. The design of the cooling fan assembly makes it straightforward to remove and replace individual fans or the entire assembly. When replacing an individual fan, you grip the fan’s release button and lift the fan out of position, which also releases the power connection in one easy step. For more expansive repairs requiring the removal of the entire cooling assembly, users can lift latches on both sides of the server and lift out the entire unit in one piece.

When it comes to managing airflow, Dell allows the user to select the appropriate cooling mode for that specific environment. These user-selectable modes are invaluable to servers with additional equipment, such as PCIe Application Accelerators, which can overheat under certain automatic cooling modes. This happens because the server throttles fan speeds based on chassis temperatures while local temperatures at the accelerator are still high. In these cases, being able to set the fans to run faster than normal can allow better performance and increase reliability.

Utilizing our high-I/O FIO synthetic benchmarks, we stressed the four Express Flash PCIe SSDs with a chassis inlet temperature of 27°C. We found PCIe SSD temperatures dropped from 62°C to 49°C by switching the cooling profile from Auto to Maximum Performance and enabling the High Fan Speed Offset. To put it another way, without that adjustment the PCIe SSDs would have been operating 26.5% hotter, which might affect long-term reliability. The downside is that this changes the R720’s acoustic profile (increased fan noise), but in the production datacenters where high-performance servers run, the added noise has little impact. In the tier-one server market right now, HP allows users to customize the fan speeds through the BIOS in the ProLiant DL380p Gen8, although Lenovo with their ThinkServer RD630 does not.
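The 26.5% figure is the relative difference between the two readings, measured against the cooler one. A minimal check, with a made-up function name:

```python
def percent_hotter(hot_c, cool_c):
    """Relative temperature increase of hot_c over cool_c, in percent.

    Uses the Celsius readings directly, as the comparison in the text does.
    """
    return (hot_c - cool_c) / cool_c * 100


if __name__ == "__main__":
    # Auto profile (62 C) versus Maximum Performance with fan offset (49 C).
    print(round(percent_hotter(62, 49), 1))
```

Note that a percentage taken on the Celsius scale depends on where the scale's zero sits, so it is best read as a convenient shorthand for the 13-degree drop rather than a physical ratio.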

Dell goes to great lengths to optimize the new 12th generation PowerEdge R720 for power efficiency. For the R720, Dell offers four AC 100-240V PSUs, ranging from 495W up to 1,100W. By matching the known load to a given power supply, users can achieve up to 96% efficiency with some models, which helps to lower overall power and thermal demands inside a datacenter. In this same category, HP offers power supplies ranging from 460 to 1,200 watts with their most efficient models rated at 94% for the DL380p Gen8, while Lenovo offers just one 800W 80Plus Gold option for their RD630.

Another way the Dell PowerEdge R720 can reduce power consumption inside a datacenter is by capping the system at a user-defined limit. When this limit is reached, the processors are throttled to lower system power usage until the target is reached. This can be useful when introducing new servers into an environment that is designed around strict power or thermal limits.

After the R720 has been customized for a specific environment by choosing the best PSU to fit the requirements and adjusting the power cap policy to fit the datacenter needs, Dell offers excellent monitoring tools for tracking power usage through iDRAC7.

When it comes to describing the performance advantage of front-mounted hot-swappable PCIe storage, the paradigm shift of transitioning from rotating media to 2.5″ SATA or SAS SSDs comes to mind. Random I/O and sequential bandwidth are on a much higher level: it would take many of the industry’s fastest SAS SSDs in RAID to match the performance of one Express Flash PCIe SSD, let alone four of them.

We’ve included a quick performance comparison of four Express Flash PCIe SSDs up against eight 15K SAS HDDs and one SMART Optimus Enterprise SAS SSD in our 8k 70/30 synthetic benchmark. We chose the SMART Optimus for this comparison since, at the time of this review, it offered the highest 8k 70/30 performance in the SAS/SATA category. Note that this is a small tease of what’s to come; a detailed storage performance breakdown will take place in a second review highlighting the Express Flash technology.

Breaking it down by the numbers, at peak performance with a load of 16T/16Q per drive/array, the Dell Express Flash solution offered 467,644 IOPS, the SMART Optimus measured 41,586 IOPS, and the eight-drive 15K SAS RAID10 array came in with 4,617 IOPS. To match the performance of four Express Flash SSDs, you’d need 12 of the industry’s fastest SAS SSDs, or more than 800 15K SAS HDDs in RAID. In either of those situations, even if you were able to match the performance by scaling out, you’d lose the benefits of reliability, power consumption, and footprint, since you’d be dramatically increasing the components inside (or outside) the system.
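The scale-out estimates can be reproduced from the three measured IOPS figures. The sketch below assumes performance scales linearly with device count, which real arrays rarely achieve, so the results are lower bounds; the names are illustrative.

```python
import math

EXPRESS_FLASH_IOPS = 467_644   # four Express Flash SSDs
SAS_SSD_IOPS = 41_586          # one SMART Optimus SAS SSD
HDD_ARRAY_IOPS = 4_617         # eight-drive 15K SAS RAID10 array
HDDS_PER_ARRAY = 8


def ssds_to_match(target=EXPRESS_FLASH_IOPS, per_ssd=SAS_SSD_IOPS):
    """Whole SAS SSDs needed to reach the target, assuming linear scaling."""
    return math.ceil(target / per_ssd)


def hdds_to_match(target=EXPRESS_FLASH_IOPS, per_array=HDD_ARRAY_IOPS):
    """Equivalent 15K HDD count, assuming linear scaling per 8-drive array."""
    return target / per_array * HDDS_PER_ARRAY


if __name__ == "__main__":
    print(ssds_to_match())          # 12 SAS SSDs
    print(round(hdds_to_match()))   # roughly 810 drives, i.e. "more than 800"
```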

With Dell’s Express Flash layout on the PowerEdge R720, you can still have your cake and eat it too. You don’t have to trade capacity for performance, since you still keep eight SFF bays on the front of the chassis to populate with your favorite SAS or SATA drives. You also gain an edge over other compute server platforms, since four x4 PCIe devices only consume one x16 slot. To exceed the performance of four Express Flash SSDs, you’d need to install three x8 HHHL Micron P320h cards, taking up three out of the seven available slots. That performance-density gives Dell a distinct advantage over the HP ProLiant DL380p Gen8 (6 PCIe 3.0 slots) or Lenovo ThinkServer RD630 (5 PCIe 3.0 slots), which would have to scale out Application Accelerators to match a four-drive Express Flash configuration, taking up valuable PCI-Express slots where Dell needs just one x16 slot.

The Dell PowerEdge R720 12th Generation marks more than just a progressive step in mainstream 2U server technology. Dell has been the first to embrace front-mounted, hot-swappable PCIe storage technology in the new R720. For enterprise users who want maximum performance with all the serviceability benefits of traditional SFF drives, the new Express Flash design is a savior for so many reasons. As we’ve seen in our cursory performance look, the Micron Express Flash drives simply dominate the best in class 15K and SAS SSD options in the market today, while still providing a total capacity of up to 1.4TB in the four bays. Should additional storage be needed, users can deploy SFF hard drives in capacities up to 1.2TB now that provide a great backstop to the flash drives in caching use cases and anywhere else where a platter tier makes sense. And because the Express Flash drives in aggregate only take up a single PCIe slot, there’s still plenty of expandability in the 6 available risers for additional PCIe storage if needed. As noted, we’ll dive more into storage performance within the R720 specifically in subsequent content.

While we certainly appreciate the storage aspects of the R720, there are plenty of other reasons to be excited about the platform as well, including management, hardware design and thermal controls. The R720 provides an intuitive package dubbed iDRAC7 for remotely monitoring and managing the server, with a landing page that makes every health stat readily available. Turning to hardware design, the R720 packs plenty of mounting and serviceability options, and almost all frequently accessed components are easy to swap out if servicing is required. For cooling and power needs, Dell offers a wide range of PSU options to tailor the system for the best efficiency. Dell then takes things a step further by allowing users to adjust the cooling profiles for high-end devices like PCIe storage that need more airflow than the automatic mode can provide. Overall, buyers can effectively use the Dell PowerEdge R720 as a blank slate, customizing it exactly for their needs, versus trying to shoehorn in an option-fixed model that might not be best in all situations.

As we compare the Dell PowerEdge R720 to other 2U servers on the market that we have reviewed previously from HP and Lenovo, one point is very clear: the R720 currently offers the fastest storage platform on the market in a 2U form factor. While you could try to match it by scaling out with multiple Application Accelerators in other server platforms, you’d lose potentially valuable PCIe real estate. Dell’s thoughtful design is evident throughout, and the Express Flash components are even upgradable as newer iterations of that technology come out, like NVM Express.

The Dell PowerEdge R720 12th Generation server is not only well-designed with loads of great management features, it’s also the best performing server on the market in this class. Sure, it’s great as a garden variety standard compute server, but with Express Flash technology the R720 really shines, easily lapping all others. Dell has put the definitive stake in the ground by adopting new technology, giving their users a best of breed solution.

Operating With A Setup Password Enabled; Entering The UEFI Boot Manager; Using The Boot Manager Navigation Keys; Boot Manager Screen; UEFI Boot Menu; Embedded System Management; iDRAC Settings Utility; Entering The iDRAC Settings Utility; 3 Installing System Components; Recommended Tools...

Removing The Cooling-Fan Assembly (Optional); Installing The Cooling-Fan Assembly (Optional); Internal USB Memory Key (Optional); Replacing The Internal USB Key; PCIe Card Holder; Removing The PCIe Card Holder; Installing The PCIe Card Holder; Expansion Cards...

System Left Side Cover; Removing The System Left Side Cover; Installing The System Left Side Cover; Control Panel; Removing The Control Panel—Tower Mode; Installing The Control Panel—Tower Mode; Removing The Control Panel Module—Rack Mode; Installing The Control Panel—Rack Mode...

Troubleshooting Processors; 5 Using System Diagnostics; Dell Online Diagnostics; Dell Embedded System Diagnostics; When To Use The Embedded System Diagnostics; Running The Embedded System Diagnostics; System Diagnostic Controls; 6 Jumpers And Connectors; System Board Jumper Settings...

Figure 2. Front-Panel Features and Indicators—3.5 Inch Hard-Drive Chassis
Indicator, Button, or Connector: Description
Optical drive (optional): One optional SATA DVD-ROM drive or DVD+/-RW drive.
vFlash media card slot: Allows you to insert a vFlash media card.
Power-on indicator, power button: The power-on indicator lights when the system power is on.

USB 2.0-compliant.
Hard drives, 3.5 inch hard-drive systems: Up to twelve 3.5 inch hot-swappable hard drives. Up to four Dell PowerEdge Express Flash devices (PCIe SSDs).
Hard drives, 2.5 inch hard-drive systems: Up to thirty two 2.5 inch hot-swappable hard drives...

Item Indicator, Button, or Icon Description Connector NOTE: In systems supporting S110 Software RAID configuration, hard- drive slots 4 through 7 do not support any hard drives and are installed with hard-drive blanks. LCD panel Displays system ID, status information, and system error messages.

Item Indicator, Button, or Icon Description Connector System identification button The identification buttons on the front and back panels can be used to locate a particular system within a rack. When one of these buttons is pressed, the LCD panel on the front and the system status indicator on the back flash until one of the buttons is pressed again.

• For the full name of an abbreviation or acronym used in this document, see the Glossary at support.dell.com/ manuals. NOTE: Always check for updates on support.dell.com/manuals and read the updates first because they often...

Figure 14. Memory Socket Locations
Memory channels are organized as follows:
Processor 1
channel 0: slots A1, A5, and A9
channel 1: slots A2, A6, and A10
channel 2: slots A3, A7, and A11
channel 3: slots A4, A8, and A12
Processor 2
channel 0: slots B1, B5, and B9
channel 1: slots B2, B6, and B10...
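The channel layout listed above follows a regular pattern: slot numbers within a channel step by four. A small sketch of that pattern is below; the function name is hypothetical, and the entries for Processor 2 channels 2 and 3 are an extrapolation, since the listing is cut off after channel 1.

```python
def channel_slots(processor, channel, channels=4, dimms_per_channel=3):
    """Slot labels for one memory channel, following the listed pattern.

    processor: 1 or 2 (maps to slot letter A or B)
    channel:   0..3
    """
    if processor not in (1, 2) or not 0 <= channel < channels:
        raise ValueError("processor must be 1 or 2 and channel 0..3")
    letter = "AB"[processor - 1]
    # Channel c holds slots c+1, c+5 and c+9.
    return [f"{letter}{channel + 1 + i * channels}"
            for i in range(dimms_per_channel)]


if __name__ == "__main__":
    for cpu in (1, 2):
        for ch in range(4):
            print(f"Processor {cpu} channel {ch}: {channel_slots(cpu, ch)}")
```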

NOTE: 1R, 2R, and 4R in the following tables indicate single-, dual-, and quad-rank DIMMs respectively. Table 1. Memory Configurations—Single Processor System Capacity DIMM Size (in Number of DIMM Rank, Organization, DIMM Slot Population (in GB) DIMMs and Frequency 1R, x8, 1333 MT/s, 1R, x8, 1600 MT/s 1R, x8, 1333 MT/s, A1, A3...

System DIMM Size (in Number of DIMM Rank, DIMM Slot Population Capacity (in DIMMs Organization, and Frequency 2R, x8, 1333 MT/s A1, A2, A3, A4, A5, A6, A7, A8 B1, B2, B3, B4, B5, B6, B7, B8 2R, x4, 1333 MT/s, A1, A2, A3, A4 2R, x4, 1600 MT/s B1, B2, B3, B4...

Figure 29. Removing and Installing a Processor 1. heat sink 2. captive screws (4) 3. processor 4. slots (4) CAUTION: The processor is held in its socket under strong pressure. Be aware that the release lever can spring up suddenly if not firmly grasped. Position your thumb firmly over the processor socket-release lever near the unlock icon and release the lever from the locked position by pushing down and out from under the tab.

Figure 30. Processor Shield Opening and Closing Lever Sequence 1. socket release lever 2. close first icon 3. processor 4. socket release lever 5. open first icon 11. Hold the tab on the processor shield and rotate it upward and out of the way. 12.

DC power and to safety grounds. Do not attempt connecting to DC power or installing grounds yourself. All electrical wiring must comply with applicable local or national codes and practices. Damage due to servicing that is not authorized by Dell is not covered by your warranty. Read and follow all safety instructions that came with the product.

Figure 38. Removing and Installing a 3.5 Inch (x8) SAS/SATA Backplane 1. backplane power connector 2. backplane power cable 3. release pin 4. signal cable 5. backplane 6. SAS A cable...

Figure 39. Cabling—3.5 Inch (x8) SAS/SATA Backplane 1. power connector on backplane 2. SAS A connector on backplane 3. SAS A connector on system board 4. power connector on PIB 5. signal connector on system board 6. signal connector on backplane...

Figure 40. Removing and Installing a 3.5 Inch (x8) SAS/SATA Backplane With a Single PERC Card 1. power connector 2. power cable 3. release pin 4. SAS B cable 5. signal cable 6. backplane 7. SAS A cable...

Figure 41. Cabling—3.5 Inch (x8) SAS/SATA Backplane With a Single PERC Card 1. power connector on backplane 6. signal cable on system board 2. SAS A connector on backplane 7. signal cable on backplane 3. SAS B connector on PERC card 8.

Figure 42. Removing and Installing a 3.5 Inch (x8) Plus 2.5 Inch (x4) SAS/SATA Backplane 1. PCIe C cable 8. release pin 2. power connector 9. SAS B cable 3. PCIe D cable 10. signal cable 4. PCIe B cable 11.

Figure 43. Cabling—3.5 Inch (x8) Plus 2.5 Inch (x4) SAS/SATA Backplane 1. PCIe B cable on PCIe SSD backplane 2. PCIe A cable on PCIe SSD backplane 3. SAS B cable from 3.5 inch backplane on PERC card 4. SAS A cable from 3.5 inch backplane on PERC card 5.

12. SAS B cable on 3.5 inch backplane 13. SAS A cable on 3.5 inch backplane 14. backplane power cable 15. backplane power connector 16. PCIe SSD backplane signal cable 17. PCIe D cable on PCIe SSD backplane 18. PCIe C cable on PCIe SSD backplane Figure 44.

Figure 45. Cabling—3.5 Inch (x12) SAS/SATA Backplane 1. power connector on backplane 2. SAS B connector on backplane 3. SAS B connector on PERC 4. SAS A connector on PERC 5. power connector on PIB 6. signal connector on system board 7.

Figure 46. Removing and Installing 2.5 Inch (x16) SAS/SATA Backplane 1. backplane 2. signal cable 3. release pin 4. power cable 5. power connector 6. SAS A cable 7. SAS B cable...

Figure 47. Cabling—2.5 Inch (x16) SAS/SATA Backplane 1. SAS B connector on PERC 6. SAS B connector on backplane 2. SAS A connector on PERC 7. power connector on backplane 3. power connector on PIB 8. signal connector on backplane 4.

Figure 49. Cabling—2.5 Inch (x16) Plus 2.5 Inch (x4) SAS/SATA Backplane 1. PCIe B cable on PCIe SSD backplane 11. SAS A connector on backplane 2. PCIe A cable on PCIe SSD backplane 12. SAS B connector on backplane 3. SAS B connector on PERC 13.

Figure 51. Cabling—2.5 Inch (x32) SAS/SATA Backplane With Two PERC Cards 1. SAS A connector on 2nd PERC 10. SAS B connector on backplane 2 2. SAS B connector on 2nd PERC 11. power connector on backplane 2 3. SAS B connector on 1st PERC 12.

Figure 52. Removing and Installing 2.5 Inch (x32) SAS/SATA Backplane With a Single PERC Card 1. signal cable 8. signal cable 2. release pin 9. power cable 3. power connector 10. SAS A1 cable 4. SAS A cable 11. SAS B1 cable 5.

Figure 53. Cabling—2.5 Inch (x32) SAS/SATA Backplane With a Single PERC Card 1. signal connector on backplane 1 10. backplane 2 SAS A connector 2. SAS B connector on backplane 1 11. backplane 2 SAS B connector 3. SAS A connector on backplane 1 12.

Figure 56. Removing and Installing the Control Panel Module—Rack Mode 1. control panel module 2. screw Figure 57. Removing and Installing the Control Panel From the Control Panel Module—Rack Mode 1. control panel 2. control panel cable...

BP_SIG2 Backplane signal connector 2 FAN5 Fan5 connector PWR_CONN_1 Power connector BP_SIG0, BP_SIG1 Dell PowerEdge Express Flash (PCIe SSD) signal connector, backplane signal connector 1 FAN4 Fan4 connector LCD panel connector A3, A7, A11, A4, A8, A12 Memory module sockets...

0 through 11 Eight plus four-hard drive systems Up to eight 3.5 inch, internal, hot-swappable SAS, SATA, SATA SSD, or Nearline SAS hard drives, and four Dell PowerEdge Express Flash devices (PCIe SSDs) Sixteen–hard-drive systems Up to sixteen 2.5 inch, internal, hot-swappable SAS,...

16 MB shared Environmental NOTE: For additional information about environmental measurements for specific system configurations, see dell.com/environmental_datasheets. Standard Operating Temperature Continuous operation: 10 °C to 35 °C at 10% to 80% relative humidity (RH), with 26 °C max dew point. De-rate maximum allowable dry bulb temperature at 1 °C per 300...

150 W processors are not supported. • Non-redundant power supplies are not supported. • Non Dell qualified peripheral cards and/or peripheral cards greater than 25 W are not supported. Storage Temperature –40 °C to 65 °C (–40 °F to 149 °F) with a maximum...

Error Code: AMP0302
Message: The system board < name > current is greater than the upper warning threshold.
Details: System board < name > current is outside of the optimum range.
Action: 1. Review system power policy. 2. Check system logs for power related failures. 3.

Error Code Message Information BAT0002 Message The system board battery has failed. LCD Message The system board battery has failed. Check battery. Details The system board battery is either missing or bad. Action Getting Help. BAT0017 name > battery has failed. Message The <...

Error Code Message Information CPU0204 Message CPU < number > < name > voltage is outside of range. number > < name > voltage is outside of range. Re-seat CPU. LCD Message CPU < Details Voltages outside the allowable range may damage electrical components or may cause the system to shutdown.

Error Code Message Information 4. Reapply input power and turn on the system. 5. If the issue persists, see Getting Help. CPU0703 Message CPU bus initialization error detected. LCD Message CPU bus initialization error detected. Power cycle system. Details System event log and operating system logs may indicate that the exception is external to the processor.

Error Code Message Information Details Fan has failed. Action Remove and reinstall failed fans or install additional fans. HWC1001 name > is absent. Message The < name > is absent. Check hardware. LCD Message The < Details The absent device may be necessary for proper operation. System functionality may be degraded.

Action: Check the memory configuration. Re-seat the memory modules. If the issue persists, see Getting Help. Error code MEM0701. Message: Correctable memory error rate exceeded for < location >. Details: The memory may not be operational. This is an early indicator of a possible future uncorrectable error.

Action: Cycle input power, update component drivers, and, if the device is removable, reinstall the device. Error code PCI1308. Message: A PCI parity error was detected on a component at bus < bus > device < device > function < func >. LCD Message: PCI parity error on bus < bus >...

Action: Remove and re-seat the failed disk. If the issue persists, see Getting Help. Error code PDR1016. Message: Drive < number > is removed from disk drive bay < bay >. LCD Message: Drive < number > removed from disk drive bay < bay >. Check drive...

LCD Message: Power supply < number > is incorrectly configured. Check PSU. Details: Power supplies should be of the same input type and power rating. Action: Install matched power supplies and review proper configuration in this manual. Error code PSU0016. ... < number >...

Error code PSU0035. Message: An over voltage fault detected on power supply < number >. LCD Message: Over voltage fault on PSU < number >. Check PSU. Action: Check input power or reinstall the power supply. If the issue persists, see Getting Help.

Action: Check the event log for power supply failures. Review system configuration and power consumption and upgrade or install power supplies accordingly. Error code PWR1005. Message: The system performance degraded because the user-defined power capacity has changed. Details: The user-defined power settings have affected system operation.

Action: Reinstall the SD module. Error code RFM2004. Message: Failure detected on Internal Dual SD Module < name >. LCD Message: Internal Dual SD Module < name > failed. Check SD Card. Details: The SD card module is installed but improperly configured or failed to initialize. Action: Reinstall the SD module and remove and reinstall SD cards.

Error code SEL1204. Message: An unknown system hardware failure detected. LCD Message: Unknown system hardware failure. Details: If the system event log failed to initialize, platform status and failure events are not captured. Some management software does not report platform exceptions. Action: Re-configure system to the minimum supported configuration.

Operating With A Setup Password Enabled; Entering The UEFI Boot Manager; Using The Boot Manager Navigation Keys; Boot Manager Screen; UEFI Boot Menu; Embedded System Management; iDRAC Settings Utility; Entering The iDRAC Settings Utility; 3 Installing System Components; Recommended Tools...

Removing The Cooling-Fan Assembly (Optional); Installing The Cooling-Fan Assembly (Optional); Internal USB Memory Key (Optional); Replacing The Internal USB Key; PCIe Card Holder; Removing The PCIe Card Holder; Installing The PCIe Card Holder; Expansion Cards...

System Left Side Cover; Removing The System Left Side Cover; Installing The System Left Side Cover; Control Panel; Removing The Control Panel—Tower Mode; Installing The Control Panel—Tower Mode; Removing The Control Panel Module—Rack Mode; Installing The Control Panel—Rack Mode...

Troubleshooting Processors; 5 Using System Diagnostics; Dell Online Diagnostics; Dell Embedded System Diagnostics; When To Use The Embedded System Diagnostics; Running The Embedded System Diagnostics; System Diagnostic Controls; 6 Jumpers And Connectors; System Board Jumper Settings...

Figure 2. Front-Panel Features and Indicators—3.5 Inch Hard-Drive Chassis. Columns: Item; Indicator, Button, or Connector; Icon; Description. Optical drive (optional): One optional SATA DVD-ROM drive or DVD+/-RW drive. vFlash media card slot: Allows you to insert a vFlash media card. Power-on indicator, power button: The power-on indicator lights when the system power is on.

USB 2.0-compliant. Hard drives: 3.5 inch hard-drive systems: Up to twelve 3.5 inch hot-swappable hard drives. Up to four Dell PowerEdge Express Flash devices (PCIe SSDs). 2.5 inch hard-drive systems: Up to thirty two 2.5 inch hot-swappable hard drives...

NOTE: In systems supporting S110 Software RAID configuration, hard-drive slots 4 through 7 do not support any hard drives and are installed with hard-drive blanks. LCD panel: Displays system ID, status information, and system error messages.

System identification button: The identification buttons on the front and back panels can be used to locate a particular system within a rack. When one of these buttons is pressed, the LCD panel on the front and the system status indicator on the back flash until one of the buttons is pressed again.

• For the full name of an abbreviation or acronym used in this document, see the Glossary at support.dell.com/manuals. NOTE: Always check for updates on support.dell.com/manuals and read the updates first because they often...

Figure 14. Memory Socket Locations Memory channels are organized as follows: Processor 1 channel 0: slots A1, A5, and A9 channel 1: slots A2, A6, and A10 channel 2: slots A3, A7, and A11 channel 3: slots A4, A8, and A12 Processor 2 channel 0: slots B1, B5, and B9 channel 1: slots B2, B6, and B10...

NOTE: 1R, 2R, and 4R in the following tables indicate single-, dual-, and quad-rank DIMMs respectively. Table 1. Memory Configurations—Single Processor System Capacity DIMM Size (in Number of DIMM Rank, Organization, DIMM Slot Population (in GB) DIMMs and Frequency 1R, x8, 1333 MT/s, 1R, x8, 1600 MT/s 1R, x8, 1333 MT/s, A1, A3...

System DIMM Size (in Number of DIMM Rank, DIMM Slot Population Capacity (in DIMMs Organization, and Frequency 2R, x8, 1333 MT/s A1, A2, A3, A4, A5, A6, A7, A8 B1, B2, B3, B4, B5, B6, B7, B8 2R, x4, 1333 MT/s, A1, A2, A3, A4 2R, x4, 1600 MT/s B1, B2, B3, B4...

Figure 29. Removing and Installing a Processor 1. heat sink 2. captive screws (4) 3. processor 4. slots (4) CAUTION: The processor is held in its socket under strong pressure. Be aware that the release lever can spring up suddenly if not firmly grasped. Position your thumb firmly over the processor socket-release lever near the unlock icon and release the lever from the locked position by pushing down and out from under the tab.

Figure 30. Processor Shield Opening and Closing Lever Sequence 1. socket release lever 2. close first icon 3. processor 4. socket release lever 5. open first icon 11. Hold the tab on the processor shield and rotate it upward and out of the way. 12.

DC power and to safety grounds. Do not attempt connecting to DC power or installing grounds yourself. All electrical wiring must comply with applicable local or national codes and practices. Damage due to servicing that is not authorized by Dell is not covered by your warranty. Read and follow all safety instructions that came with the product.

Figure 38. Removing and Installing a 3.5 Inch (x8) SAS/SATA Backplane 1. backplane power connector 5. backplane 2. backplane power cable 6. SAS A cable 3. release pin 4. signal cable...

Figure 39. Cabling—3.5 Inch (x8) SAS/SATA Backplane 1. power connector on backplane 5. signal connector on system board 2. SAS A connector on backplane 6. signal connector on backplane 3. SAS A connector on system board 4. power connector on PIB...

Figure 40. Removing and Installing a 3.5 Inch (x8) SAS/SATA Backplane With a Single PERC Card 1. power connector 5. signal cable 2. power cable 6. backplane 3. release pin 7. SAS A cable 4. SAS B cable...

Figure 41. Cabling—3.5 Inch (x8) SAS/SATA Backplane With a Single PERC Card 1. power connector on backplane 6. signal cable on system board 2. SAS A connector on backplane 7. signal cable on backplane 3. SAS B connector on PERC card 8.

Figure 42. Removing and Installing a 3.5 Inch (x8) Plus 2.5 Inch (x4) SAS/SATA Backplane 1. PCIe C cable 8. release pin 2. power connector 9. SAS B cable 3. PCIe D cable 10. signal cable 4. PCIe B cable 11.

Figure 43. Cabling—3.5 Inch (x8) Plus 2.5 Inch (x4) SAS/SATA Backplane 1. PCIe B cable on PCIe SSD backplane 2. PCIe A cable on PCIe SSD backplane 3. SAS B cable from 3.5 inch backplane on PERC card 4. SAS A cable from 3.5 inch backplane on PERC card 5.

12. SAS B cable on 3.5 inch backplane 13. SAS A cable on 3.5 inch backplane 14. backplane power cable 15. backplane power connector 16. PCIe SSD backplane signal cable 17. PCIe D cable on PCIe SSD backplane 18. PCIe C cable on PCIe SSD backplane Figure 44.

Figure 45. Cabling—3.5 Inch (x12) SAS/SATA Backplane 1. power connector on backplane 2. SAS B connector on backplane 3. SAS B connector on PERC 4. SAS A connector on PERC 5. power connector on PIB 6. signal connector on system board 7.

Figure 46. Removing and Installing 2.5 Inch (x16) SAS/SATA Backplane 1. backplane 5. power connector 2. signal cable 6. SAS A cable 3. release pin 7. SAS B cable 4. power cable...

Figure 47. Cabling—2.5 Inch (x16) SAS/SATA Backplane 1. SAS B connector on PERC 6. SAS B connector on backplane 2. SAS A connector on PERC 7. power connector on backplane 3. power connector on PIB 8. signal connector on backplane 4.

Figure 49. Cabling—2.5 Inch (x16) Plus 2.5 Inch (x4) SAS/SATA Backplane 1. PCIe B cable on PCIe SSD backplane 11. SAS A connector on backplane 2. PCIe A cable on PCIe SSD backplane 12. SAS B connector on backplane 3. SAS B connector on PERC 13.

Figure 51. Cabling—2.5 Inch (x32) SAS/SATA Backplane With Two PERC Cards 1. SAS A connector on 2nd PERC 10. SAS B connector on backplane 2 2. SAS B connector on 2nd PERC 11. power connector on backplane 2 3. SAS B connector on 1st PERC 12.

I have a farm of Dell R720s, each with 4 SAS SSDs, and two Nvidia K20Xs. The system's main video output is (apparently) a Matrox SVGA affair. These are currently running Windows Server 2012 (oh, the horror, I know) and I'm trying to migrate these to Ubuntu. Which version? Any version. 14.04 Desktop LTS, 14.04 Server LTS, 16.04 Desktop LTS, 16.04 Server LTS.

In all cases, the install proceeds successfully, nice pretty GUI, purple/red screen SVGA. Then when it's done and reboots, I'm dumped to terminal. The xstart apparently dumps out with a seg fault.

I've tried removing lightdm, installing, reinstalling, repairing gdm, starting from scratch with unity-desktop etc... all same result. When booting, the purple screen shows up and the display starts flashing a few times (seg faults!), then I'm dumped to terminal. After fighting with this for quite a while my suspicion is the dm (whichever I try and use) is getting really confused about the archaic, ancient Matrox (primary) display adapter and the two (headless, 21st century) Tesla K20Xs...

So, two part question: (1) what logs would you folks find helpful to help me resolve this issue? The X server init is seeing all three adapters then bombing out with a segfault... and (2) has anyone here dealt with an Ubuntu server system with two headless GPU cards and a 20th century primary video display system?

We’ve got a client that does big batch jobs every day, loading hundreds of gigabytes of data or more in short bursts. They were frustrated with slow performance on the batch jobs, and after we performed our SQL Critical Care® with ’em, it was really clear that their hardware was the bottleneck. They were using a virtual server backed by an iSCSI SAN, and they were getting bottlenecked on reads and writes. We could put some more memory in it to cache more data, preventing the read problem, but we would still get bottlenecked trying to write lots of data quickly to the shared storage.

We recommended two things: first, switch to a standalone bare metal SQL Server (instead of a virtual machine), and second, switch to cheap commodity-grade local solid state storage. Both of those suggestions were a little controversial at the client, but the results were amazing.

Theoretically, virtualization makes for easier high availability and disaster recovery. In practice, there are some situations – like this one – where it doesn’t make sense.

In the event of a failure, 15-30 minutes of downtime were acceptable. The server was important, but not mission-critical. In the event of an outage, they didn’t mind manually failing over to a secondary server. This meant we could avoid the complexity of a failover cluster and shared storage.

Slow performance was not acceptable during normal production. They wanted to put the pedal to the metal and make an order-of-magnitude improvement in their processing speeds with as few code changes as possible.

The Dell R720 is a 2-processor, 2-rack-unit server with room for 16 2.5″ drives across the front of the server, and two RAID controllers. It’s got room for up to 768GB of memory. It’s my favorite 2-processor SQL Server box at the moment.

I’m not against shared storage – I love it – but when I’m dealing with large batch jobs, a limited budget, and no clustering requirement, it’s tough to beat local SSDs. The R720 lets us use a big stack of 2.5″ solid state drives with two RAID controllers for processing data. Quantity is important here since affordable SSDs tend to be relatively small – 1TB or less. Some larger drives exist, like the Samsung 860 EVO 4TB, but bang-for-the-buck doesn’t quite match the 1TB-class yet.

The Dell R720XD is a similar server, but it’s absolutely slathered with drive bays, handling up to 26 2.5″ drives. While that sounds better – especially with today’s fastest SSD drives still being a little size-constrained – the R720XD only has one RAID controller instead of the R720’s two.

For our Plan B – where we’d fail over if the primary server died – we actually stuck with a virtual server. We built a small 2-vCPU, 8GB RAM guest with SQL Server. We keep it current using the database backups from the primary server. Remember, this application is batch-oriented, so we just need to run backups once a day after the batch completes, and then restore them on the secondary server. When disaster strikes, they can shut down the VMware guest, add more CPU and memory power to it, and it’s off and running as the new primary while they troubleshoot the physical box. It’s not as speedy as the primary physical box, but that’s a business decision – if they want full speed, they can easily add a second physical box later.

A 400GB MLC drive is $1,200 rack, so filling all 16 bays would cost $19,200. To put things in perspective, the server itself is about $10k with 2 blazing fast quad-core CPUs, 384GB of memory, and spinners on the fans, so buying Dell’s SSDs triples the cost of the server.
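The arithmetic behind that "triples the cost" claim can be checked with a quick sketch. The figures are the ones quoted above; the variable names are only illustrative.

```python
# Figures quoted in the text; the names are illustrative only.
DRIVE_PRICE_USD = 1_200      # one 400GB MLC drive at Dell's quoted price
DRIVE_BAYS = 16              # 2.5" bays across the front of the R720
SERVER_PRICE_USD = 10_000    # approximate price of the server without SSDs

ssd_total = DRIVE_PRICE_USD * DRIVE_BAYS
grand_total = SERVER_PRICE_USD + ssd_total
cost_multiple = grand_total / SERVER_PRICE_USD

print(f"SSDs: ${ssd_total:,}")
print(f"Server plus SSDs: ${grand_total:,}")
print(f"Multiple of bare server price: {cost_multiple:.2f}x")
```

The multiple comes out a little under 3, which is where "triples" comes from.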

And it’s usually half the cost of the Dell drive, meaning we could fill the R720 with 8TB of smokin’ fast storage for a few thousand bucks, plus leave a couple of hot spares on the shelf.

There are risks with this approach – Dell won’t guarantee that their controller and their software will work correctly with this combination. For example, during our load testing, the DSM SA Data Manager service repeatedly stopped, and we couldn’t always use the Dell OpenManage GUI to build RAID arrays.

3. Ignore the drive bays, and use PCI Express cards. Drives from Intel and Plextor bypass the RAID controller altogether and can deliver even faster performance – but at the cost of higher prices, smaller space, and tougher management. You can’t take four of these drives and RAID 10 them together for more space, for example. (Although that’s starting to change with Windows 2012’s Storage Spaces, and I’m starting to see that deployed in the wild.)

The R720 has two separate RAID controllers, each of which can see 8 of the Samsung drives. The drawback of this server design is that you can’t make one big 16-drive RAID 10 array. That’s totally okay, though, because even just a couple of these race car drives can actually saturate one RAID controller.

How few drives can we get away with? For future client projects, if we didn’t need to fill up the drive bays in order to get capacity, could we saturate the controllers with just, say, 4 drives instead of 8? Can we leave enough space to have hot spare drives? I run the performance tests with 2, 4, 6, and 8 SSD drives.

How much of a performance penalty do we pay for RAID 5? RAID 10 splits your drive capacity in half by storing two copies of everything. RAID 5 lets you store more data – especially important on limited-capacity solid state drives – but is notoriously slow on writes. (Thus, the Battle Against Any Raid Five.) But what if the drives are so fast that the controller is the bottleneck anyway?
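The capacity side of that trade-off can be put in numbers. The following is a minimal sketch, assuming 8 drives behind one controller (as on the R720) and a hypothetical 1TB per drive; it ignores hot spares and formatting overhead, and `usable_capacity_tb` is an illustrative helper, not part of any Dell or Microsoft tool.

```python
def usable_capacity_tb(level: str, drives: int, drive_tb: float) -> float:
    """Return usable capacity in TB for a simple RAID 5 or RAID 10 array."""
    if level == "RAID10":
        if drives < 4 or drives % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        return drives / 2 * drive_tb      # every block is stored twice
    if level == "RAID5":
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (drives - 1) * drive_tb    # one drive's worth of space holds parity
    raise ValueError(f"unsupported RAID level: {level}")


# Assumed example: 8 drives of 1TB each on a single controller.
for level in ("RAID10", "RAID5"):
    print(level, usable_capacity_tb(level, drives=8, drive_tb=1.0), "TB usable")
```

Under those assumptions RAID 10 yields 4TB and RAID 5 yields 7TB from the same eight drives, which is why RAID 5 is tempting on small SSDs.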

Should we turn the controller caching on or off? RAID controllers have a very limited amount of memory (in our case, 1GB) that can be used to cache reads, writes, or both. In the past, I’ve seen SSD-equipped servers actually perform slower with the caching enabled because the caching logic wasn’t fast enough to keep up with the SSDs. Dell’s recent PowerEdge controllers are supposed to be able to keep up with today’s SSDs, but what’s the real story?

Does NTFS allocation unit size still matter? In my SQL Server setup checklist, I note that for most storage subsystems, drives should be formatted with 64K NTFS allocation units for maximum performance. Unfortunately, often we get called into client engagements where the drives are already formatted and the database server is live in production – but the NTFS allocation unit is just 4K, the default. To fix that, you have to reformat the drives – but how much of a difference will it make, and is it worth the downtime?

The answers to these questions change fast, and I need to check again about once a quarter. When I need to double-check again, and I’m working with a client on a new server build with all SSDs, I offer them a big discount if I can get remote access to the server for a couple of days.

Turning off read caching didn’t affect performance. The controller’s small cache (1GB) just isn’t enough to help SQL Servers, which tend to cache most of their data in memory anyway. When we need to hit disk, especially for long sustained sequential reads, the controller’s minimal cache didn’t help – even with just 4 SSDs involved.

The controller’s write caching, however, did help. Write throughput almost tripled as opposed to having caching disabled. Interestingly, as long as write caching at the controller was enabled, it didn’t matter whether read caching was enabled or not – we saw the same benefit. I would expect higher write throughput if all of the 1GB of cache was available to cache writes, but that doesn’t appear to be the case with the R720’s controllers at least.

NTFS allocation unit size made no difference. This combination of RAID controller and drives is the honey badger of storage – it just don’t care. You can leave the default caching settings AND the default NTFS allocation unit size, and it’s still crazy fast.

In RAID 10, adding drives didn’t improve random performance. We got roughly the same random performance with 4 drives and 8 drives. Sequential read throughput improved about 35% – good, but maybe not worth the financial cost of doubling the number of drives. Sequential writes saw a big boost of about 60%, but keep in mind that sustained sequential writes is a fairly rare case for a database server. It’s unusual that we’re not doing *any* reads, and we’re writing our brains out in only one file.

SSD speeds still can’t beat a RAMdrive. With SQL Server Standard Edition being confined to just 64GB of memory, some folks are choosing to install RAMdrive software to leverage that extra cheap memory left in the server. If your queries are spilling to TempDB because they need memory for sorts & joins, this approach might sound tempting. Microsoft’s even got an old knowledge base article about it. The dark side is that you’re installing another software driver on your system, and I always hate doing that on production systems. Just for giggles, I installed DataRam’s RAMdisk for comparison. The SSDs are on the left, RAMdisk on the right, and pay particular attention to the bottom row of results:

The bottom row, 4K operations with a queue depth of 32, is vaguely similar to heavy activity on multiple TempDB data files. This particular RAMdrive software manages about 4x more write throughput (and IOPs as well) than the RAID 10 array of 8 drives. (For the record, a RAID 0 array of 8 drives doesn’t beat the RAMdrive on random writes either.)
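At a fixed block size, throughput and IOPS are two views of the same measurement, which is why the RAMdrive's lead shows up in both. A minimal sketch of the conversion follows; the throughput figures are placeholders chosen only to illustrate a 4x ratio, not the measured results from these tests.

```python
def iops_from_throughput(mb_per_sec: float, block_kb: int = 4) -> float:
    """Convert throughput in MB/s to I/O operations per second for a given block size."""
    return mb_per_sec * 1024 / block_kb


# Placeholder numbers (assumptions, not benchmark results).
array_mb_per_sec = 100.0
ramdrive_mb_per_sec = 400.0

array_iops = iops_from_throughput(array_mb_per_sec)
ramdrive_iops = iops_from_throughput(ramdrive_mb_per_sec)

print(f"Array:    {array_iops:,.0f} IOPS at 4K")
print(f"RAMdrive: {ramdrive_iops:,.0f} IOPS at 4K")
print(f"Ratio:    {ramdrive_iops / array_iops:.1f}x")
```

Because the block size is the same on both sides, the IOPS ratio always equals the throughput ratio.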

And finally, here’s the performance penalty for RAID 5. RAID 10 is on the left, RAID 5 on the right. Same number of drives, same cache settings, same allocation unit settings:

It’s not a surprise that RAID 5 is faster for reads, but in this round of testing, it was even faster for sequential and large writes. The only place where RAID 5 takes a hit: the bottom right, 4K writes with queue depth 32.

We’re not just testing the drives here – we’re also testing the RAID controller. To get a true picture, we have to run another test. On the left is a RAID 10 array with 8 drives. On the right, just one drive by itself:

The jawdropper hits in the bottom half of the results – when dealing with small random operations, more drives may not be faster. In fact, the more drives you add, the slower writes get, because the controller has to manage a whole lot of writes across a whole bunch of drives.

See, we’re not just testing the drives – we’re testing the RAID controller too. It’s a little computer with its own processor, and it has to be able to keep up with the data we’re throwing at it. In the wrong conditions, when it’s sitting between a fast server and a fast set of solid state drives, this component becomes the b
